Businesses and users alike depend on websites and applications being available around the clock. Load balancers and high availability (HA) design work together to make that possible: a load balancer acts as a traffic director, spreading requests across multiple servers so that a service stays reachable even when individual components fail.

This guide explains how load balancing contributes to high availability. It covers the fundamental concepts, practical applications, and techniques used to build fault-tolerant systems, from the core functions of load balancers to advanced features such as session persistence and geographic load balancing.

Introduction to Load Balancing

Load balancing distributes network traffic across multiple servers so that no single server is overwhelmed and applications remain responsive and available. It is a fundamental component of modern high-availability architectures, designed to improve performance, reliability, and scalability. A load balancer is essentially a traffic director: its primary function is to distribute incoming client requests across a group of servers (also known as a server pool or cluster).

This distribution is based on various algorithms, aiming to ensure that no single server is overloaded while also optimizing resource utilization.

Common Applications of Load Balancing

Load balancing finds widespread application across various online services and applications. Its implementation is crucial for ensuring seamless user experiences and maintaining service uptime.

- Web Servers: Load balancing is extensively used to manage traffic to web servers. When a website experiences high traffic, a load balancer directs incoming requests to different web servers, preventing any single server from becoming a bottleneck. This keeps the website responsive even during peak hours. For instance, large e-commerce sites such as Amazon and eBay rely on load balancing to absorb traffic surges during sales events.

- Databases: Load balancing can also be applied to database servers. Distributing queries across multiple database instances (typically read queries across replicas) improves database performance and availability. This is particularly important for applications that rely heavily on database operations, such as banking systems or financial platforms. Large financial institutions, for example, use it to keep transaction processing available.

- Application Servers: Load balancing is frequently employed to manage traffic to application servers. This ensures that application requests are distributed evenly across available servers, improving the application’s overall performance and resilience. For example, social media platforms such as Facebook and Twitter use load balancing to handle a vast number of user interactions and maintain consistent service delivery.

- API Gateways: Load balancers are also used in API gateways to manage and distribute API requests. This helps to improve the performance and availability of APIs, ensuring that they can handle a large volume of requests without experiencing slowdowns. Companies that offer public APIs, such as Google and Salesforce, use load balancing to ensure API stability and responsiveness for their users.

High Availability

High availability (HA) focuses on minimizing downtime and maximizing system uptime. It is a critical aspect of modern infrastructure, particularly for applications and services that users rely on constantly. This section covers what high availability means, the benefits it brings, and how it mitigates the consequences of system failures.

Defining High Availability

High availability (HA) refers to a system’s ability to remain operational for a very high proportion of the time, typically measured as an uptime percentage. A highly available system is designed to withstand component failures and continue providing service without significant interruption. The goal is to eliminate or minimize downtime, ensuring users can access the system’s resources whenever needed.

Benefits of High Availability

High availability offers several key benefits, primarily revolving around uptime and resilience. Implementing HA strategies can lead to substantial improvements in service delivery and user experience.

- Enhanced Uptime: High availability systems are designed to reduce downtime. Availability is often expressed as a percentage, with higher percentages indicating less permitted downtime. For example, a system with 99.999% availability (also known as “five nines”) can be down for only about 5.26 minutes per year. This contrasts sharply with systems without HA, which may experience hours or even days of downtime due to failures.

- Increased Resilience: HA systems are resilient to failures. This means that when a component fails (e.g., a server, network device, or database), the system can automatically switch to a redundant component or continue operating with reduced capacity. This automatic failover minimizes the impact of failures on users.

- Improved User Experience: By minimizing downtime and ensuring continuous service, HA systems contribute to a better user experience. Users can access applications and services without interruption, which leads to increased satisfaction and loyalty.

- Reduced Costs: Downtime can be costly. HA can reduce downtime and associated costs, such as lost revenue, productivity losses, and the cost of fixing the problem.

- Business Continuity: HA supports business continuity by ensuring that critical systems remain operational even during failures. This allows businesses to continue their operations and meet their obligations to customers and stakeholders.
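The relationship between an availability percentage and the downtime it permits is simple arithmetic. The sketch below assumes an average year of 365.25 days; the function name is illustrative only.

```python
# Downtime budget implied by an availability percentage.
MINUTES_PER_YEAR = 365.25 * 24 * 60  # average year, including leap years


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Return the permitted downtime in minutes per year."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


for label, pct in [
    ("two nines", 99.0),
    ("three nines", 99.9),
    ("four nines", 99.99),
    ("five nines", 99.999),
]:
    minutes = downtime_minutes_per_year(pct)
    print(f"{label:>11} ({pct}%): {minutes:8.1f} min/year")
```

Each additional nine cuts the downtime budget by a factor of ten, which is why moving from 99.9% to 99.999% usually requires redundancy and automated failover rather than faster manual repair.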

Consequences of System Downtime and HA Mitigation

System downtime can have severe consequences, impacting businesses in various ways. High availability strategies are designed to mitigate these risks.

- Financial Loss: Downtime can result in direct financial losses, such as lost revenue from sales, missed transactions, and penalties for failing to meet service level agreements (SLAs). For example, an e-commerce website experiencing downtime during a peak shopping period could lose significant sales.

- Reputational Damage: System outages can damage a company’s reputation. Customers may lose trust in the service and may switch to competitors. Negative publicity from a major outage can be difficult to recover from.

- Productivity Loss: Downtime can lead to reduced employee productivity. Employees may be unable to access critical systems, leading to delays in completing tasks and projects.

- Data Loss: In some cases, downtime can result in data loss. This can be especially damaging if critical data is lost or corrupted.

- Legal and Regulatory Consequences: Depending on the industry and the nature of the service, downtime may lead to legal or regulatory consequences. For example, a financial institution that experiences a prolonged outage may be subject to fines or other penalties.

High availability mitigates these consequences by implementing redundancy and failover mechanisms. For example, a load balancer can automatically redirect traffic to a healthy server if a server fails, preventing downtime and ensuring continuous service. Database replication ensures data is available even if the primary database server fails.

The Role of a Load Balancer in HA

Load balancers are critical components in achieving high availability (HA) for applications and services. By distributing incoming network traffic across multiple servers, they ensure that no single server becomes a bottleneck or a single point of failure. This architecture enhances both performance and resilience, leading to a more reliable user experience.

How Load Balancers Contribute to Achieving High Availability

Load balancers are essential for high availability because they provide several key functionalities that directly address the challenges of server outages and performance bottlenecks.

- Traffic Distribution: Load balancers intelligently distribute incoming client requests across a pool of available servers. This prevents any single server from being overwhelmed, leading to improved performance and responsiveness. The distribution algorithms can be based on various factors, such as server load, response time, or round-robin, ensuring an even distribution of traffic.

- Redundancy: Load balancers enable redundancy by utilizing multiple servers to handle requests. If one server fails, the load balancer automatically redirects traffic to the remaining healthy servers, minimizing downtime and ensuring service continuity. This failover mechanism is a cornerstone of HA.

- Scalability: Load balancers facilitate scalability. As demand increases, new servers can be easily added to the server pool, and the load balancer will automatically start distributing traffic to them. This allows applications to handle growing traffic volumes without performance degradation.

- Health Checks: Load balancers regularly monitor the health of the backend servers. This proactive monitoring allows the load balancer to identify and remove unhealthy servers from the traffic pool before they can impact users.

How Load Balancers Detect Server Failures and Reroute Traffic

Load balancers use various methods to detect server failures and reroute traffic, ensuring continuous service availability. The core mechanism involves regular health checks.

- Health Checks: Load balancers perform health checks to determine the availability and responsiveness of each server in the pool. These checks can take different forms:

  - Ping Checks: Simple ICMP ping requests to verify server reachability.

  - TCP Checks: Establish TCP connections to specific ports to check service availability.

  - HTTP/HTTPS Checks: Send HTTP/HTTPS requests to a specific URL and analyze the response code and content. This provides a more in-depth check of the application’s health.

  If a health check fails, the load balancer marks the server as unhealthy.

- Automated Failover: Upon detecting a server failure, the load balancer immediately stops sending traffic to the affected server. It then reroutes all traffic intended for the failed server to the remaining healthy servers in the pool. This failover process is typically automated and happens within seconds, minimizing the impact on users.

- Monitoring and Alerts: Load balancers provide monitoring capabilities and generate alerts when server failures or performance issues are detected. This allows administrators to quickly diagnose and resolve the underlying problem. These alerts can be sent via email, SMS, or integrated into monitoring systems.
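As a rough illustration of the TCP check and the rerouting step described above, the following sketch probes each server and keeps only those that accept a connection. The function names and the `(host, port)` representation are assumptions made for this example, not the interface of any particular load balancer.

```python
import socket
from typing import Callable, Iterable

Server = tuple[str, int]  # (host, port)


def tcp_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, name lookup failure
        return False


def healthy_servers(
    servers: Iterable[Server],
    probe: Callable[[str, int], bool] = tcp_check,
) -> list[Server]:
    """Keep only the servers whose probe currently succeeds."""
    return [(host, port) for host, port in servers if probe(host, port)]
```

Passing the probe as a parameter keeps the filtering logic independent of the check type, so an HTTP check could be swapped in without changing `healthy_servers`.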

Scenario: Impact of a Load Balancer During a Server Outage

Consider an e-commerce website that uses a load balancer to distribute traffic across three web servers. Without a load balancer, nothing would redirect requests away from a failed server: clients sent to it would see errors or timeouts until the server was repaired or traffic was manually repointed. With a load balancer, the impact of a server outage is significantly reduced.

Scenario Details:

- Initial State: Three web servers (Server A, Server B, and Server C) are actively serving traffic. The load balancer is configured with a round-robin distribution algorithm.

- Server Failure: Server B experiences a hardware failure and becomes unresponsive.

- Load Balancer Action: The load balancer detects the failure of Server B through health checks. It immediately removes Server B from the active server pool.

- Traffic Rerouting: All traffic that was previously directed to Server B is automatically rerouted to Server A and Server C.

- User Experience: Users accessing the website experience minimal interruption. Existing connections to Server B may be momentarily affected, but new requests are handled by the remaining servers. Overall performance remains stable as long as Server A and Server C have enough spare capacity to absorb Server B’s share of the traffic.

- Administrator Action: The administrator receives an alert about the server failure and begins troubleshooting the issue. Server B is eventually repaired or replaced. Once it is back online and passing health checks, the load balancer automatically adds Server B back into the pool.

Impact:

In this scenario, the load balancer prevents a complete service outage. The impact on users is minimized, allowing the e-commerce website to continue operating with reduced capacity until the failed server is restored. The load balancer plays a crucial role in maintaining high availability and ensuring a positive user experience, even in the face of server failures.
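The scenario can be simulated in a few lines. This is a minimal sketch under simplifying assumptions: the class and method names are invented for the example, and the health state is toggled by hand rather than by real health checks.

```python
from collections import Counter


class RoundRobinPool:
    """Round-robin over the servers currently marked healthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        self.healthy.add(server)

    def pick(self):
        # Walk the ring at most once, skipping unhealthy servers.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


pool = RoundRobinPool(["A", "B", "C"])
before = Counter(pool.pick() for _ in range(6))  # all three servers share traffic
pool.mark_down("B")                              # health checks detect the failure
during = Counter(pool.pick() for _ in range(6))  # only A and C receive requests
pool.mark_up("B")                                # B is repaired and rejoins the pool
after = Counter(pool.pick() for _ in range(6))
print(before, during, after)
```

While Server B is down, each remaining server handles three of the six requests instead of two, which is the "reduced capacity" the scenario refers to.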

Load Balancing Algorithms

Load balancing algorithms are the core of a load balancer’s functionality. They determine how incoming client requests are distributed across the available servers. The choice of algorithm significantly impacts performance, efficiency, and overall system resilience. Understanding these algorithms and their characteristics is crucial for designing a robust and scalable high-availability architecture.

Round Robin

The Round Robin algorithm is one of the simplest and most commonly used load balancing techniques. It distributes incoming requests sequentially to each server in a cyclical manner.

- How it Works: Each new request is sent to the next server in the list. Once the last server is reached, the cycle starts again from the beginning.

- Strengths:

  - Simplicity: Easy to understand and implement.

  - Fairness: Distributes requests evenly across servers.

  - No Load Tracking: The load balancer does not need to track per-server load or connection counts, which suits stateless applications.

- Weaknesses:

  - Doesn’t consider server load: Treats all servers equally, regardless of their current workload or processing capacity. A slow server can negatively impact overall performance.

  - Not ideal for varying request sizes: May not be optimal if requests consume different amounts of resources.

- Effective Situations: Round Robin is most effective when:

  - All servers have similar hardware and processing capabilities.

  - Requests are relatively uniform in size and resource requirements.

  - The application is stateless.
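A minimal sketch of the rotation itself, using hypothetical backend names. It ignores health and capacity entirely, which is exactly the weakness noted above.

```python
from itertools import cycle

servers = ["app-1", "app-2", "app-3"]  # hypothetical backend names
rotation = cycle(servers)              # endless iterator over the list


def next_server() -> str:
    """Return the next server in the rotation."""
    return next(rotation)


assignments = [next_server() for _ in range(7)]
print(assignments)
```

The seventh request wraps around to `app-1`, showing the cycle restarting after the last server.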

Least Connections

The Least Connections algorithm directs new requests to the server with the fewest active connections at the time of the request. This algorithm dynamically adjusts to the current load on each server.

- How it Works: The load balancer tracks the number of active connections to each server. The incoming request is sent to the server with the fewest connections.

- Strengths:

  - Dynamic Load Balancing: Adapts to real-time server loads, improving overall performance.

  - Prioritizes Underutilized Servers: Ensures that servers are used efficiently, preventing overload.

- Weaknesses:

  - Requires Monitoring: Needs the load balancer to continuously monitor the number of connections on each server, adding some overhead.

  - May not be optimal for short-lived connections: If connections are very brief, the algorithm might not accurately reflect the actual server load.

- Effective Situations: Least Connections is most effective when:

  - Server resources (CPU, memory, etc.) are the primary bottleneck.

  - Requests have varying resource requirements.

  - A mix of short-lived and long-lived connections exists.
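The bookkeeping behind Least Connections can be sketched as a counter per server. The class below is illustrative only; a real load balancer would update these counts as connections open and close, and ties here simply go to the server listed first.

```python
class LeastConnectionsBalancer:
    """Track active connections and pick the least-loaded server."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first server with the lowest count,
        # so ties are broken by the order the servers were listed in.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Called when a connection to `server` closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
first = [lb.acquire() for _ in range(3)]  # one connection lands on each server
lb.release("app-2")                       # the connection on app-2 finishes
fourth = lb.acquire()                     # app-2 now has the fewest connections
print(first, fourth)
```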

IP Hash

The IP Hash algorithm uses the client’s IP address to generate a hash key. This hash key determines which server receives the client’s requests. This ensures that a client consistently connects to the same server.

- How it Works: The client’s IP address is used to calculate a hash value. This hash value is then mapped to a specific server. All requests from the same IP address will consistently be directed to the same server, as long as the server is available.

- Strengths:

  - Session Persistence: Provides session affinity, which is crucial for applications that require sticky sessions (e.g., shopping carts, user logins).

  - Simple Implementation: Relatively easy to implement.

- Weaknesses:

  - Uneven Distribution: If clients from certain IP ranges generate significantly more traffic, or many clients share one address behind a NAT or proxy, some servers may become overloaded.

  - Requires Server Availability: If a server fails, all clients previously connected to that server will be redirected to a new server, potentially disrupting their sessions.

  - IPv4 and IPv6 Considerations: A dual-stack client may arrive over IPv4 on one request and IPv6 on another. The two addresses hash to different values, so the client can land on different servers unless the configuration accounts for this.

- Effective Situations: IP Hash is most effective when:

  - Session persistence is required.

  - Applications need to maintain user sessions across multiple requests.

  - The number of clients is relatively large, and IP addresses are distributed evenly.
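One way to sketch the hash-and-map step is shown below. The choice of SHA-256 and a simple modulo mapping are assumptions for illustration; actual load balancers use their own hash functions. Python’s built-in `hash()` is avoided because it is randomized per process for bytes and strings.

```python
import hashlib
import ipaddress


def pick_server(client_ip: str, servers: list[str]) -> str:
    """Map a client IP address to one server in a stable way."""
    # Parsing first means equivalent spellings of an address hash identically;
    # .packed is 4 bytes for IPv4 and 16 bytes for IPv6.
    packed = ipaddress.ip_address(client_ip).packed
    digest = hashlib.sha256(packed).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]  # hypothetical backend names
print(pick_server("203.0.113.7", servers))
print(pick_server("2001:db8::1", servers))
```

With a plain modulo, adding or removing a server changes the mapping for most clients, not only those on the affected server. Consistent hashing is a commonly used technique for limiting that reshuffling.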

Types of Load Balancers

Load balancers come in various forms, each with its own set of characteristics, advantages, and disadvantages. The choice of load balancer depends heavily on the specific needs of an application, including factors such as traffic volume, budget, performance requirements, and existing infrastructure. Understanding the different types allows for an informed decision when designing a highly available system.

Hardware Load Balancers

Hardware load balancers are physical appliances dedicated to the task of distributing network traffic. They are typically high-performance devices designed to handle significant traffic loads and offer advanced features. Hardware load balancers are often deployed in data centers and are known for their reliability and performance.

| Feature | Description | Advantages | Disadvantages |
|---|---|---|---|
| Performance | Utilize specialized hardware for high throughput and low latency. | Exceptional performance, high scalability, advanced features (SSL offloading, DDoS protection). | High initial cost, complex configuration, vendor lock-in, physical infrastructure requirements. |
| Scalability | Can handle large traffic volumes and scale to accommodate increasing demands. | Excellent for high-traffic websites and applications, able to handle peak loads. | Scaling can require purchasing additional hardware, which can be expensive. |
| Management | Managed through dedicated interfaces, often with advanced monitoring and reporting capabilities. | Centralized management, detailed insights into traffic patterns and performance. | Requires specialized expertise to configure and maintain. |
| Cost | Significant upfront investment. | Can be cost-effective in the long run for high-traffic environments due to their longevity and performance. | High initial investment can be a barrier to entry for smaller organizations or projects. |

Hardware load balancers, such as those from F5 Networks (BIG-IP) or Citrix (NetScaler), are often used by large enterprises and organizations with demanding performance requirements. They offer features like SSL offloading, which decrypts SSL/TLS traffic, freeing up server resources and improving performance. They also provide advanced security features, including DDoS protection and Web Application Firewall (WAF) capabilities.

Software Load Balancers

Software load balancers are applications that run on commodity hardware or virtual machines. They offer a cost-effective alternative to hardware load balancers, especially for smaller deployments or environments where hardware investment is not feasible. These load balancers are flexible and can be deployed in various environments, including on-premises servers and cloud instances.

| Feature | Description | Advantages | Disadvantages |
|---|---|---|---|
| Cost | Typically less expensive than hardware load balancers, often open-source or subscription-based. | Lower initial cost, flexible deployment options, can be easily scaled. | Performance can be limited by the underlying hardware, requires more management overhead. |
| Flexibility | Easily deployed on various platforms, including virtual machines and cloud environments. | Highly adaptable to different infrastructure setups, can be customized. | Performance depends on the underlying infrastructure; may require more resources. |
| Management | Managed through software interfaces or command-line tools. | Easier to configure and manage compared to hardware load balancers, can be automated. | Requires expertise in software configuration and management. |
| Scalability | Scalable by adding more instances or utilizing auto-scaling features. | Scalable to meet changing demands; allows dynamic adjustments to resource allocation. | Scaling can require careful planning and resource allocation to avoid bottlenecks. |

Examples of software load balancers include HAProxy and Nginx. HAProxy is a popular open-source load balancer known for its high performance and flexibility. Nginx, primarily a web server, also functions as a load balancer and is commonly used for HTTP and HTTPS traffic. Both offer a cost-effective solution for many organizations, with throughput bounded by the hardware they run on, and both are well-suited to cloud-native applications.

Cloud-Based Load Balancers

Cloud-based load balancers are services provided by cloud providers, such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). They offer a managed solution, allowing users to offload the responsibility of infrastructure management to the cloud provider. These load balancers provide scalability, reliability, and ease of use.

| Feature | Description | Advantages | Disadvantages |
|---|---|---|---|
| Cost | Pay-as-you-go pricing model, often based on traffic volume or usage. | No upfront investment, scalable pricing, reduced operational overhead. | Can become expensive for high-traffic applications; vendor lock-in. |
| Scalability | Automatically scales based on traffic demands. | Highly scalable, can handle sudden traffic spikes without manual intervention. | Dependence on the cloud provider’s infrastructure. |
| Management | Managed by the cloud provider, with a user-friendly interface. | Simplified management, automated updates, and monitoring. | Limited customization options compared to self-managed solutions. |
| Integration | Seamlessly integrates with other cloud services. | Easy integration with other cloud services, such as auto-scaling and content delivery networks. | Dependence on the cloud provider’s ecosystem. |

Examples of cloud-based load balancers include AWS Elastic Load Balancing (ELB), Azure Load Balancer, and Google Cloud Load Balancing. They are designed to integrate seamlessly with other cloud services, such as auto-scaling and content delivery networks. Cloud-based load balancers are an excellent choice for organizations that want to focus on their applications rather than infrastructure management. The pricing models can be attractive for variable workloads.

For instance, a company experiencing seasonal traffic spikes might find the automatic scaling capabilities of a cloud load balancer particularly beneficial.

Health Checks and Monitoring

Load balancers are crucial components in ensuring high availability, but their effectiveness relies heavily on their ability to monitor the health of the backend servers. Regularly assessing the operational status of these servers is essential for directing traffic only to those that are functioning correctly. This proactive approach prevents users from being directed to unavailable or poorly performing servers, thereby maintaining a seamless user experience.

Health Checks on Backend Servers

Health checks are automated processes that load balancers use to determine the availability and responsiveness of backend servers. They range from simple reachability probes to requests that mimic real user traffic and assess the server’s ability to handle it.

- Types of Health Checks: Load balancers employ various types of health checks to assess server health. These include:

  - Ping Checks: The most basic check, which sends an ICMP (Internet Control Message Protocol) echo request to the server. It determines if the server is reachable.

  - TCP Checks: These checks establish a TCP connection to a specific port on the server, verifying that the server is listening and responding.

  - HTTP/HTTPS Checks: The load balancer sends an HTTP or HTTPS request (e.g., GET, HEAD) to a specific URL on the server. It examines the response code (e.g., 200 OK) and potentially the content of the response to determine the server’s health.

  - Custom Checks: Many load balancers allow for custom health checks, enabling administrators to tailor checks to specific application requirements. This could involve executing a script, querying a database, or checking the status of a service.

- Health Check Intervals and Thresholds: The frequency of health checks (the interval) and the number of consecutive failures required to mark a server as unhealthy (the threshold) are configurable parameters. Balancing these parameters is important:

  - Short Intervals: Enable faster detection of server failures but can increase network traffic and server load.

  - Long Intervals: Reduce network traffic and server load but may delay the detection of server failures.

  - Thresholds: Prevent false positives by requiring multiple failures before a server is taken out of rotation.

- Health Check Responses: Based on the health check results, the load balancer makes decisions:

  - Healthy Server: The server is considered healthy and receives traffic.

  - Unhealthy Server: The server is removed from the pool of available servers and does not receive traffic until it passes a health check.

  - Degraded Server: Some load balancers can identify degraded servers (e.g., high latency) and adjust traffic distribution accordingly.
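The effect of a failure threshold can be sketched as a small state machine. The `fall` and `rise` names and their default values are assumptions for this example. The `rise` threshold, which requires several consecutive successes before a server is readmitted, goes slightly beyond what the text above describes; setting it to 1 matches the "passes a health check" behavior.

```python
from dataclasses import dataclass


@dataclass
class HealthTracker:
    fall: int = 3         # consecutive failures before marking unhealthy (assumed)
    rise: int = 2         # consecutive successes before marking healthy (assumed)
    healthy: bool = True
    _streak: int = 0      # run length of results that contradict `healthy`

    def record(self, check_passed: bool) -> bool:
        """Record one health check result and return the current state."""
        if check_passed == self.healthy:
            self._streak = 0  # result agrees with the current state
        else:
            self._streak += 1
            needed = self.rise if check_passed else self.fall
            if self._streak >= needed:
                self.healthy = check_passed
                self._streak = 0
        return self.healthy


tracker = HealthTracker(fall=3, rise=2)
checks = [False, False, True, False, False, False, True, True]
states = [tracker.record(result) for result in checks]
print(states)
```

The two isolated failures at the start do not remove the server, because the passing check in between resets the streak. Only three failures in a row take it out of rotation.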

Importance of Monitoring and Alerting

Monitoring and alerting are essential components of a load-balanced environment. They provide real-time insights into the performance and health of the system, allowing administrators to proactively address issues before they impact users.

- Real-time Visibility: Monitoring tools provide dashboards and reports that visualize key metrics such as:

  - Server availability.

  - Request rates.

  - Response times.

  - Error rates.

  - Resource utilization (CPU, memory, disk I/O).

- Proactive Issue Detection: Monitoring allows administrators to identify potential problems before they escalate into outages. For example, if response times are increasing, it could indicate a server overload or a performance bottleneck.

- Alerting and Notifications: Alerting systems automatically notify administrators when predefined thresholds are exceeded or when specific events occur (e.g., a server failure). This enables prompt intervention and reduces downtime. Notifications can be delivered via email, SMS, or integration with other tools.

- Performance Optimization: By analyzing monitoring data, administrators can identify areas for performance optimization, such as:

  - Scaling the infrastructure.

  - Optimizing application code.

  - Adjusting load balancing configurations.

Configuring Health Checks and Monitoring

The configuration process for health checks and monitoring varies depending on the load balancer and monitoring tools used. However, the general steps are consistent.

- Choose Health Check Type: Select the appropriate health check type based on the application and its requirements. For example, use HTTP/HTTPS checks for web applications to verify the server’s ability to serve web pages.

- Configure Health Check Parameters: Set the health check interval, timeout, and the number of retries. Consider factors such as network latency and server responsiveness.

- Define Monitoring Metrics: Determine which metrics to monitor, such as request rates, response times, and error rates. Choose a monitoring tool that supports these metrics and integrates with the load balancer.

- Set Alerting Thresholds: Define thresholds for each metric that trigger alerts. For example, set an alert if the average response time exceeds a certain value or if the error rate increases above a certain percentage.

- Configure Alerting Channels: Configure the alerting system to send notifications via the desired channels (e.g., email, SMS).

- Test and Validate: Test the health checks and alerting configuration to ensure they function as expected. Simulate server failures and performance issues to verify that alerts are triggered correctly.
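The threshold step can be illustrated with a small evaluator over a window of request samples. The metric names, threshold values, and sample format are all hypothetical; a real deployment would express the same rules in its monitoring tool’s own configuration.

```python
from statistics import mean

# Hypothetical alert thresholds; suitable values depend on the application.
THRESHOLDS = {"avg_response_ms": 500.0, "error_rate": 0.05}


def evaluate(samples):
    """Return alert messages for a window of request samples.

    Each sample is a dict with 'response_ms' (number) and 'status' (HTTP code).
    """
    if not samples:
        return []
    avg_ms = mean(s["response_ms"] for s in samples)
    error_rate = sum(s["status"] >= 500 for s in samples) / len(samples)
    alerts = []
    if avg_ms > THRESHOLDS["avg_response_ms"]:
        limit = THRESHOLDS["avg_response_ms"]
        alerts.append(f"avg_response_ms {avg_ms:.0f} exceeds {limit:.0f}")
    if error_rate > THRESHOLDS["error_rate"]:
        limit = THRESHOLDS["error_rate"]
        alerts.append(f"error_rate {error_rate:.1%} exceeds {limit:.1%}")
    return alerts


window = [
    {"response_ms": 120, "status": 200},
    {"response_ms": 180, "status": 200},
    {"response_ms": 150, "status": 503},
    {"response_ms": 110, "status": 200},
]
for alert in evaluate(window):
    print(alert)
```

Feeding the evaluator a window that deliberately breaches a threshold, as done here with one 503 response in four, is a simple way to carry out the "Test and Validate" step.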

Session Persistence and Sticky Sessions

Session persistence, often referred to as sticky sessions, is a crucial aspect of load balancing, especially when dealing with applications that require maintaining user sessions across multiple requests. It ensures that a user’s requests are consistently routed to the same backend server for the duration of their session. This is particularly important for applications where session data is stored locally on the server.

Session Persistence Explained

With session persistence enabled, the load balancer directs all of a user’s requests to the same backend server for the duration of their session, so the session data stored on that server stays available. This matters for applications that depend on session affinity, such as e-commerce platforms or online banking services that keep session state on individual servers.

The load balancer identifies a user’s session, typically through a cookie or by inspecting the source IP address, and then uses this information to route subsequent requests to the same server. This guarantees a consistent user experience by maintaining session state.
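A cookie-based variant of this routing might look like the sketch below. The cookie name, server names, and the `route` function are hypothetical, and cookies are modeled as a plain dictionary rather than real HTTP headers.

```python
import random

COOKIE = "lb_server"  # hypothetical cookie name


def route(cookies: dict[str, str], healthy: set[str]) -> tuple[str, dict[str, str]]:
    """Return (chosen server, cookies to set on the response).

    Assumes `healthy` is non-empty.
    """
    pinned = cookies.get(COOKIE)
    if pinned in healthy:
        return pinned, {}  # honor the existing pin
    # No cookie yet, or the pinned server is gone: choose a new server.
    server = random.choice(sorted(healthy))
    return server, {COOKIE: server}


healthy = {"app-1", "app-2", "app-3"}
server, to_set = route({}, healthy)        # first request: no cookie
again, _ = route(to_set, healthy)          # follow-up request carries the cookie
print(server, again)
```

If the pinned server drops out of the healthy set, the user is silently reassigned, and any session data held only on the failed server is no longer reachable.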

Use Cases for Session Persistence

Session persistence is essential in various scenarios where maintaining session affinity is critical for application functionality and user experience. Here are some key use cases:

- E-commerce Platforms: In e-commerce applications, session persistence is crucial for maintaining a user’s shopping cart. When a user adds items to their cart, this information is often stored in a session on a specific server. Session persistence ensures that subsequent requests, such as viewing the cart or proceeding to checkout, are routed to the same server, allowing the user to continue their shopping experience seamlessly.

- Online Banking: For online banking applications, session persistence is used to maintain a user’s login state across requests. Because each request is routed to the server that holds the session, the user does not have to re-authenticate partway through a transaction.

- Web Applications with Complex Session Data: Web applications that store complex session data, such as user preferences, application state, or other personalized information, benefit greatly from session persistence. By routing all requests from a specific user to the same server, the application can easily retrieve and manage the user’s session data, ensuring a consistent user experience.

- Applications with Legacy Architectures: In legacy architectures where applications might not be designed to share session data across servers, session persistence can be a temporary solution to ensure application compatibility while transitioning to a more modern architecture.

Drawbacks of Session Persistence and Alternative Solutions

While session persistence provides benefits in certain scenarios, it also presents potential drawbacks. Understanding these drawbacks is essential for making informed decisions about load balancing strategies.

- Server Overload: One of the primary drawbacks is the potential for uneven load distribution. If one backend server receives a disproportionate number of sticky sessions, it can become overloaded, while other servers remain underutilized. This can lead to performance bottlenecks and reduced overall application efficiency.

- Failure Impact: If a server with sticky sessions fails, all sessions associated with that server are lost, impacting the users connected to it. This can lead to a negative user experience, especially if the session contains critical information.

- Scalability Challenges: Session persistence can limit the scalability of an application. As the number of users increases, it becomes more challenging to maintain an even distribution of sessions across servers. This limitation can make it difficult to scale the application to handle increased traffic.

Alternative solutions to mitigate the drawbacks of session persistence include:

- Session Replication: Session replication involves replicating session data across multiple servers. This ensures that if one server fails, the session data is still available on another server. While this approach improves fault tolerance, it can increase the overhead of managing and synchronizing session data.

- Session Clustering: Session clustering involves storing session data in a shared, centralized location, such as a database or a distributed cache like Redis or Memcached. This allows any server in the cluster to access a user’s session data, eliminating the need for sticky sessions. This approach offers better scalability and fault tolerance.

- Stateless Applications: The best approach, where possible, is to design the application to be stateless. In a stateless application, every request carries all the information needed to process it. This eliminates the need for session persistence altogether, so any server can handle any request and resources are used evenly. It is usually achieved by keeping user state on the client side, for example in signed tokens.
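As a rough illustration of the stateless approach, the sketch below signs a small payload with HMAC so that any backend holding the same key can validate a request without a server-side session. The names `SECRET_KEY`, `issue_token`, and `verify_token` are hypothetical, and a production system would more likely use an established token format and library.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical shared secret; every backend server would need the same value.
SECRET_KEY = b"demo-secret-change-me"


def issue_token(user_id: str, ttl_seconds: int = 900,
                now: Optional[float] = None) -> str:
    """Return a signed token that carries the user's identity and expiry."""
    issued = time.time() if now is None else now
    payload = json.dumps({"sub": user_id, "exp": issued + ttl_seconds}).encode()
    body = base64.urlsafe_b64encode(payload)
    signature = hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()
    return f"{body.decode()}.{signature}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[dict]:
    """Return the payload if the signature is valid and the token is unexpired."""
    try:
        body, signature = token.rsplit(".", 1)
    except ValueError:
        return None  # no separator, so this is not one of our tokens
    expected = hmac.new(SECRET_KEY, body.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    payload = json.loads(base64.urlsafe_b64decode(body))
    current = time.time() if now is None else now
    return payload if payload["exp"] > current else None
```

Because the token is verified from its own contents, the load balancer is free to send each request to any server in the pool.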

SSL/TLS Termination and Offloading

Load balancers play a crucial role in securing network traffic by handling SSL/TLS encryption and decryption. This process, known as SSL/TLS termination, enhances both security and performance for web applications. The load balancer acts as a central point for managing SSL/TLS certificates, freeing up backend servers from the computationally intensive task of encryption and decryption.

SSL/TLS Termination and Offloading Explained

SSL/TLS termination is the process where a load balancer decrypts incoming HTTPS traffic (encrypted using SSL/TLS) and forwards the unencrypted HTTP traffic to the backend servers. Conversely, when a response is received from a backend server, the load balancer encrypts the HTTP traffic before sending it back to the client as HTTPS. This offloading of the SSL/TLS processing to the load balancer has significant performance benefits.

Benefits of SSL/TLS Offloading for Performance

Offloading SSL/TLS processing to a load balancer offers several performance advantages. Backend servers are relieved from the CPU-intensive operations of encryption and decryption, allowing them to focus on application logic and serving content. This can lead to improved response times and increased server capacity.

- Reduced Server Load: Backend servers experience less CPU load as they are not burdened with encryption/decryption tasks. This frees up resources for application processing, leading to better performance and the ability to handle more concurrent requests.

- Improved Response Times: By offloading SSL/TLS processing, the overall time to serve a request is reduced. Clients receive responses faster, leading to a better user experience.

- Centralized Certificate Management: Load balancers simplify certificate management. Certificates are stored and managed centrally, making updates and renewals easier and more secure than managing them on individual backend servers.

- Enhanced Scalability: With SSL/TLS offloading, backend servers can scale more effectively. Because they are not bogged down by encryption/decryption, more servers can be added to handle increased traffic without performance degradation.

SSL/TLS Termination Process Step by Step

The following steps trace an HTTPS request from a client to a backend server through a load balancer that terminates SSL/TLS.

1. Client initiates HTTPS connection

A client (e.g., a web browser) sends an HTTPS request to the load balancer. The request is encrypted using SSL/TLS.

2. Load Balancer receives encrypted request

The load balancer receives the encrypted HTTPS request.

3. Load Balancer decrypts the request

The load balancer, using its SSL/TLS certificate and private key, decrypts the HTTPS request. This is where the SSL/TLS termination happens. The request is now in plain HTTP format.

4. Load Balancer forwards the request

The load balancer forwards the unencrypted HTTP request to one of the backend servers, selected based on the configured load balancing algorithm.

5. Backend server processes the request

The backend server receives the HTTP request, processes it, and generates an HTTP response.

6. Backend server sends the response

The backend server sends the HTTP response back to the load balancer.

7. Load Balancer encrypts the response

The load balancer receives the HTTP response from the backend server. It then encrypts the response using SSL/TLS.

8. Load Balancer sends encrypted response

The load balancer sends the encrypted HTTPS response back to the client.

9. Client receives and decrypts the response

The client receives the encrypted HTTPS response and decrypts it, allowing the user to view the content.

This process ensures that all communication between the client and the load balancer is encrypted. Traffic between the load balancer and the backend servers can remain unencrypted to save processing on the backends, which is acceptable only when that segment runs over a trusted private network. For example, a large e-commerce website experiencing high traffic would benefit greatly from SSL/TLS offloading, as it would free up server resources to handle more transactions and improve the overall shopping experience.
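The termination steps can be sketched with Python's standard `ssl` module. This is a minimal illustration under stated assumptions, not a complete proxy: `make_termination_context` and `forwarded_headers` are hypothetical helper names, the certificate paths are supplied by the caller, and the `X-Forwarded-*` headers are a widely used convention rather than something the steps above require.

```python
import ssl
from typing import Dict, Optional


def make_termination_context(certfile: Optional[str] = None,
                             keyfile: Optional[str] = None) -> ssl.SSLContext:
    """Build the server-side TLS context a terminating load balancer listens with."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    if certfile is not None:
        # The certificate and private key live on the load balancer,
        # not on the individual backend servers.
        context.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return context


def forwarded_headers(client_ip: str) -> Dict[str, str]:
    """Headers commonly added so backends know the original request used HTTPS."""
    return {"X-Forwarded-For": client_ip, "X-Forwarded-Proto": "https"}
```

A listening socket wrapped with this context performs steps 2, 3, 7, and 8; the plain HTTP request forwarded in step 4 would carry the extra headers so the backend can still tell that the client connected securely.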

Geographic Load Balancing

Geographic load balancing, also known as Global Server Load Balancing (GSLB), extends the principles of load balancing across geographically dispersed data centers. This approach ensures high availability and optimal performance for users worldwide by directing traffic to the closest or most suitable server based on the user’s location or other criteria. It’s a crucial component for applications with a global user base, as it addresses the challenges of latency, regional outages, and regulatory compliance.

Concept of Geographic Load Balancing

Geographic load balancing operates by intelligently routing user requests to the most appropriate server, considering factors such as the user’s geographical location, server health, and network conditions. This is typically achieved through DNS-based load balancing, where the DNS server responds to user requests with the IP address of the geographically closest or best-performing server.
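The routing decision can be approximated in a few lines. The sketch below assumes the resolver already knows the user's approximate coordinates and each region's health, and it uses great-circle distance as a stand-in for "closest"; real GSLB products typically also weigh measured latency and load. `Region` and `pick_region` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Region:
    name: str
    lat: float
    lon: float
    healthy: bool = True


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points using the haversine formula."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def pick_region(user_lat: float, user_lon: float, regions: List[Region]) -> Region:
    """Return the nearest healthy region, skipping any that failed health checks."""
    healthy = [r for r in regions if r.healthy]
    if not healthy:
        raise RuntimeError("no healthy region available")
    return min(healthy, key=lambda r: distance_km(user_lat, user_lon, r.lat, r.lon))
```

A DNS-based implementation would return the chosen region's IP address in its answer, so an unhealthy region simply stops appearing in responses.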

Benefits of Geographic Load Balancing for Global Applications

Using geographic load balancing offers significant advantages for global applications, improving user experience and business resilience.

- Reduced Latency: By directing users to servers closer to their location, geographic load balancing minimizes latency, resulting in faster page load times and improved application responsiveness. This is particularly critical for applications where real-time interaction is essential.

- Improved Availability: If one data center experiences an outage, geographic load balancing automatically redirects traffic to a healthy data center in another region, ensuring continuous service availability. This resilience protects against regional disruptions.

- Disaster Recovery: GSLB plays a key role in disaster recovery strategies. In the event of a major outage at a primary data center, traffic can be quickly rerouted to a backup data center in a different geographic location, minimizing downtime.

- Compliance and Localization: Geographic load balancing can help organizations meet data residency requirements by directing traffic to servers within specific geographic regions. This is vital for adhering to regulations like GDPR and CCPA.

- Enhanced User Experience: By optimizing performance and ensuring availability, geographic load balancing contributes to a better user experience, which can lead to increased user engagement and satisfaction.

Examples of Geographic Load Balancing in Action

Geographic load balancing is widely used by various organizations to optimize performance and ensure high availability for their global users.

- Content Delivery Networks (CDNs): CDNs, such as Cloudflare and Akamai, use GSLB to distribute content across a network of servers located around the world. When a user requests content, the CDN directs the request to the server closest to the user, minimizing latency and improving download speeds. For example, a user in London accessing a website hosted on a CDN would be served content from a server in London or a nearby location, rather than a server in the United States.

- E-commerce Platforms: E-commerce businesses with a global presence utilize GSLB to ensure that users can access their websites quickly and reliably, regardless of their location. If a user in Japan is browsing an e-commerce site, GSLB will direct them to a server in the Asia-Pacific region, ensuring a fast and localized shopping experience.

- Streaming Services: Streaming services, such as Netflix and Spotify, use GSLB to deliver content to users around the world. The services automatically route users to the closest server that can provide the requested content, minimizing buffering and ensuring a smooth streaming experience. For instance, a user in Australia streaming a movie would connect to a server in Australia or a nearby location to optimize the video streaming quality.

- Financial Institutions: Financial institutions leverage GSLB to maintain high availability and ensure uninterrupted access to their services for customers globally. During peak traffic periods or in the event of a regional outage, GSLB redirects traffic to available servers, preventing service disruptions. A bank with users in Europe, Asia, and North America would use GSLB to route each customer to a data center in their own region, reducing transaction delays.

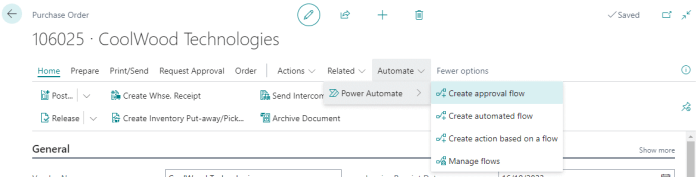

Load Balancer Configuration and Deployment

Configuring and deploying a load balancer is a critical step in ensuring high availability and optimal performance for any application. This process involves several key steps, from selecting the appropriate load balancer to configuring its settings and deploying it into the production environment. Careful planning and execution are essential to avoid downtime and maintain a seamless user experience.

Steps Involved in Configuring a Load Balancer

The configuration of a load balancer is a multi-step process that requires careful attention to detail. The following steps outline the key activities involved in setting up a load balancer to manage traffic efficiently:

- Choose a Load Balancer: Selecting the right load balancer depends on factors such as the application’s needs, budget, and infrastructure. Consider hardware load balancers, software-based load balancers, or cloud-based load balancing services.

- Define the Application: Determine the application’s specifics, including the protocols it uses (HTTP, HTTPS, TCP, UDP), the ports it listens on, and the backend servers that will handle the traffic. This information is crucial for configuring the load balancer’s rules and settings.

- Configure Health Checks: Implement health checks to monitor the status of the backend servers. These checks determine if a server is available and responsive. Configure the frequency and type of health checks (e.g., HTTP GET requests, TCP connections) based on the application’s requirements.

- Configure Load Balancing Algorithms: Select and configure the load balancing algorithm that will distribute traffic among the backend servers. Common algorithms include round robin, least connections, and IP hash. The choice depends on the application’s needs and the desired traffic distribution strategy.

- Configure Session Persistence (if needed): If the application requires session persistence, configure the load balancer to maintain user sessions on the same backend server. This can be achieved through techniques such as cookie-based persistence or IP address affinity.

- Configure SSL/TLS Termination (if needed): If the load balancer will handle SSL/TLS termination, configure the necessary certificates and keys. This involves uploading the certificates and configuring the load balancer to decrypt and encrypt traffic.

- Set up Monitoring and Logging: Configure monitoring and logging to track the load balancer’s performance and health. This includes setting up metrics for traffic volume, response times, and server status. Logging provides valuable insights for troubleshooting and performance analysis.

- Test the Configuration: Thoroughly test the load balancer configuration to ensure it functions correctly. This involves simulating traffic and verifying that traffic is distributed as expected, health checks are working, and session persistence is functioning if configured.

- Deploy the Configuration: Once the configuration is tested and validated, deploy it to the production environment. This may involve updating DNS records to point to the load balancer’s IP address or configuring the load balancer within a cloud environment.

- Monitor and Maintain: Continuously monitor the load balancer’s performance and health after deployment. Regularly review the configuration and make adjustments as needed to optimize performance and adapt to changing application needs.
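To make the algorithm step more concrete, the following sketch implements round robin, least connections, and IP hash as small Python classes. The class names and the `pick`/`release` interface are assumptions made for illustration; real load balancers expose these choices as configuration options rather than code.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(list(servers))

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Prefer the server that currently has the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1  # the new connection now counts against it
        return server

    def release(self, server):
        self.active[server] -= 1  # call when the connection closes


class IPHash:
    """Map each client IP to the same server for as long as the pool is unchanged."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.servers)
        return self.servers[index]
```

Note that this simple modulo-based hash remaps many clients whenever a server is added or removed, which is one reason IP hash offers only a basic form of persistence.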

Checklist for Deploying a Load Balancer in a Production Environment

Deploying a load balancer in a production environment requires a systematic approach to minimize risks and ensure a smooth transition. This checklist provides a structured approach to guide the deployment process:

- Planning and Preparation:

  - Define the application’s requirements and performance goals.

  - Select the appropriate load balancer solution (hardware, software, or cloud-based).

  - Document the configuration settings and deployment plan.

  - Establish a rollback plan in case of issues.

- Configuration:

  - Configure health checks to monitor backend server availability.

  - Set up load balancing algorithms to distribute traffic effectively.

  - Configure session persistence (if required) for stateful applications.

  - Configure SSL/TLS termination (if necessary) for secure communication.

  - Set up monitoring and logging to track performance and troubleshoot issues.

- Testing:

  - Test the configuration in a staging or pre-production environment.

  - Verify that traffic is distributed correctly across backend servers.

  - Confirm that health checks are functioning as expected.

  - Test session persistence (if enabled).

  - Test SSL/TLS termination (if enabled).

- Deployment:

  - Update DNS records to point to the load balancer’s IP address (or integrate with cloud provider services).

  - Gradually introduce traffic to the load balancer (e.g., using a percentage-based approach).

  - Monitor the load balancer’s performance and health during the initial deployment phase.

- Post-Deployment:

  - Continuously monitor the load balancer’s performance and health.

  - Review logs for errors and performance issues.

  - Make adjustments to the configuration as needed to optimize performance.

  - Establish a process for regular maintenance and updates.

Examples of Common Configuration Settings

Understanding common configuration settings is crucial for effectively deploying and managing a load balancer. These settings control how the load balancer directs traffic and interacts with backend servers.

- Load Balancing Algorithm: This setting determines how traffic is distributed among the backend servers.

  - Round Robin: Distributes traffic sequentially to each server in a rotating fashion. This is simple to configure and suitable for basic load balancing.

  - Least Connections: Directs traffic to the server with the fewest active connections. This algorithm dynamically balances traffic based on server load.

  - IP Hash: Directs traffic from a specific client IP address to the same backend server. This provides session persistence.

  - Example: In a round-robin configuration, each incoming request would be sent to a different server in the backend pool in a sequential manner.

- Health Checks: These settings monitor the health of the backend servers.

  - HTTP Health Check: The load balancer sends an HTTP GET request to a specific URL on each backend server to verify its availability.

  - TCP Health Check: The load balancer establishes a TCP connection with the backend server to check its responsiveness.

  - Frequency and Timeout: Define the frequency of health checks and the timeout period.

  - Example: Configure an HTTP health check to send a GET request to `/health` every 5 seconds, with a timeout of 3 seconds. If a server doesn’t respond within 3 seconds, it’s marked as unhealthy.

- Session Persistence: This setting ensures that users are directed to the same backend server for subsequent requests.

  - Cookie-based Persistence: The load balancer inserts a cookie into the user’s browser to track their session and direct them to the same server.

  - IP Address Affinity: The load balancer uses the client’s IP address to direct traffic to the same server.

  - Example: Configure cookie-based persistence to use a cookie named `JSESSIONID` to maintain user sessions.

- SSL/TLS Termination: This setting handles the decryption and encryption of SSL/TLS traffic.

  - Certificate Upload: Upload the SSL/TLS certificate and private key to the load balancer.

  - Port Configuration: Configure the load balancer to listen on port 443 for HTTPS traffic.

  - Example: Upload an SSL certificate and private key to the load balancer and configure it to terminate SSL/TLS traffic, offloading the encryption/decryption process from the backend servers.

- Monitoring and Logging: This setting configures how the load balancer’s performance is monitored and logged.

  - Metrics: Configure the load balancer to collect metrics such as traffic volume, response times, and server status.

  - Logging: Configure the load balancer to log events, errors, and access information.

  - Example: Configure the load balancer to send metrics to a monitoring system and log access events to a log aggregation service.
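The health check example above (a GET to `/health` every 5 seconds with a 3-second timeout) might look roughly like this in Python. The function names and the injectable `probe` and `sleep` parameters are assumptions chosen so the logic can be exercised without a network; treating only 2xx responses as healthy is also an assumption.

```python
import time
import urllib.request

CHECK_INTERVAL_SECONDS = 5  # how often each backend is probed
CHECK_TIMEOUT_SECONDS = 3   # a slower response counts as a failure


def http_probe(base_url, timeout=CHECK_TIMEOUT_SECONDS):
    """Return True if GET <base_url>/health answers with a 2xx status in time."""
    try:
        with urllib.request.urlopen(f"{base_url}/health", timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:  # covers URLError, timeouts, and refused connections
        return False


def run_health_checks(servers, probe=http_probe):
    """Probe every server once and return a {server: is_healthy} mapping."""
    return {server: probe(server) for server in servers}


def monitor(servers, rounds, probe=http_probe, sleep=time.sleep):
    """Run `rounds` check cycles, yielding the healthy pool after each one."""
    for _ in range(rounds):
        status = run_health_checks(servers, probe)
        yield [server for server, ok in status.items() if ok]
        sleep(CHECK_INTERVAL_SECONDS)
```

The load balancer would route only to the servers in the most recently yielded pool. Many products also require several consecutive failures before marking a server unhealthy, which this sketch omits.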

Future Trends in Load Balancing

The landscape of load balancing is continuously evolving, driven by advancements in cloud computing, containerization, and the increasing complexity of modern applications. These trends are not merely incremental improvements; they represent fundamental shifts in how we design and manage highly available systems. They are reshaping the future of high availability by enhancing resilience, improving performance, and offering greater flexibility in resource allocation.

Service Meshes and Load Balancing

Service meshes are emerging as a crucial component in modern application architectures, particularly for microservices. They provide a dedicated infrastructure layer for handling service-to-service communication. Load balancing is a core function within a service mesh, offering sophisticated traffic management capabilities.

- Advanced Traffic Management: Service meshes provide granular control over traffic, enabling features such as traffic shaping, A/B testing, and canary deployments. For example, a service mesh might route 10% of traffic to a new version of a service for testing purposes, automatically rolling back if errors are detected.

- Observability and Monitoring: Service meshes offer built-in observability, providing detailed metrics and logs for all service interactions. This allows for better monitoring of load balancer performance and the health of individual services.

- Decentralized Load Balancing: Instead of relying on a central load balancer, service meshes often employ a distributed approach. Each service instance has a sidecar proxy that handles load balancing, eliminating a single point of failure.
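The canary example of sending 10% of traffic to a new version comes down to weighted selection plus a rollback rule. The sketch below is a simplified model of what a sidecar proxy might do; the function names, the version labels, and the 5% error threshold are all hypothetical.

```python
import random


def choose_version(weights, rng=random):
    """Pick a service version with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


def roll_back_if_unhealthy(weights, stable, canary, error_rate, max_error_rate=0.05):
    """Send all traffic back to the stable version when the canary errors too often."""
    if error_rate > max_error_rate:
        return {stable: 100, canary: 0}
    return dict(weights)


# Hypothetical split: 90% of requests to the stable version, 10% to the canary.
canary_weights = {"v1": 90, "v2": 10}
```

In a service mesh these weights usually live in declarative routing configuration, and the mesh's control plane pushes them to every proxy.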

Application-Aware Load Balancing

Application-aware load balancing goes beyond simple layer 4 routing, which sees only addresses and ports, by inspecting and acting upon the layer 7 content of the application traffic. This allows for more intelligent and efficient load distribution.

- Content-Based Routing: This enables routing decisions based on the content of the request, such as the URL path, HTTP headers, or even the data within the request body. For instance, requests for static assets can be routed to a content delivery network (CDN), while requests for dynamic content are directed to the application servers.

- Rate Limiting and Throttling: Application-aware load balancers can implement rate limiting and throttling to protect against abuse and ensure fair access to resources. This prevents a single user or application from overwhelming the system.

- User Session Management: By understanding application-level session information, load balancers can ensure that users are consistently routed to the same server throughout their session, improving user experience.
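Content-based routing can be pictured as a small decision function over the request. In this sketch the path prefixes and pool names (`cdn`, `api-servers`, `app-servers`) are hypothetical, and header names are assumed to arrive in the exact case shown.

```python
# Hypothetical path prefixes that identify static assets.
STATIC_PREFIXES = ("/static/", "/images/", "/css/", "/js/")


def route(path, headers=None):
    """Choose a backend pool from the URL path and, failing that, a header."""
    headers = headers or {}
    if path.startswith(STATIC_PREFIXES):
        return "cdn"  # static assets are served from the CDN
    if path.startswith("/api/") or headers.get("Accept") == "application/json":
        return "api-servers"  # machine-readable requests go to the API pool
    return "app-servers"  # everything else is dynamic page content
```

For example, `route("/static/logo.png")` selects the CDN while `route("/checkout")` falls through to the application servers.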

Automated Load Balancing and Infrastructure as Code

The rise of DevOps and infrastructure as code (IaC) has significantly impacted load balancing practices. Automation is becoming increasingly crucial for managing load balancers efficiently and scaling them dynamically.

- Dynamic Scaling: Load balancers can be automatically scaled up or down based on real-time traffic patterns, ensuring optimal performance and resource utilization. For example, during peak hours, the load balancer can automatically provision additional instances to handle increased traffic.

- Configuration as Code: IaC allows load balancer configurations to be defined as code, enabling version control, automated testing, and consistent deployments. This reduces the risk of human error and improves the speed of deployment.

- Integration with Cloud Platforms: Load balancers are increasingly integrated with cloud platforms, offering seamless integration with other services such as auto-scaling groups and container orchestration platforms.
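A dynamic scaling policy often reduces to a small calculation. The sketch below assumes a hypothetical target of requests per second that one instance can handle, and clamps the result between configured bounds; actual autoscalers use their own metrics and cooldown rules.

```python
import math


def desired_instances(current_rps, target_rps_per_instance,
                      min_instances=2, max_instances=20):
    """Return how many instances are needed to serve the current request rate."""
    needed = math.ceil(current_rps / target_rps_per_instance)
    # Never scale below the floor (for redundancy) or above the ceiling (for cost).
    return max(min_instances, min(max_instances, needed))
```

Keeping such a policy in version-controlled code, alongside the load balancer configuration, is what allows it to be reviewed and tested like any other change.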

Serverless Load Balancing

Serverless computing is changing the way applications are deployed and managed. Load balancing plays a crucial role in distributing traffic to serverless functions.

- Event-Driven Scaling: Serverless load balancers automatically scale based on the number of incoming requests, eliminating the need to provision and manage servers.

- Cost Optimization: Serverless load balancing helps optimize costs by only paying for the resources consumed. This is particularly beneficial for applications with unpredictable traffic patterns.

- Simplified Management: Serverless load balancing reduces the operational overhead associated with managing infrastructure, allowing developers to focus on building applications.

AI and Machine Learning in Load Balancing

Artificial intelligence (AI) and machine learning (ML) are beginning to play a significant role in optimizing load balancing.

- Predictive Load Balancing: ML algorithms can analyze historical traffic patterns to predict future demand and proactively scale resources.

- Anomaly Detection: AI can detect anomalies in traffic patterns and automatically mitigate potential issues, such as DDoS attacks.

- Intelligent Routing: AI can optimize routing decisions based on real-time performance metrics and application-level data, ensuring the best possible user experience.
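As a very simple stand-in for ML-based anomaly detection, the sketch below flags a traffic sample whose z-score against recent history exceeds a threshold. This is a basic statistical technique rather than machine learning, and the function name and default threshold of 3 are assumptions.

```python
import statistics


def is_anomalous(history, current, threshold=3.0):
    """Flag `current` if it lies more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        return current != mean  # perfectly flat history: any change is unusual
    return abs(current - mean) / stdev > threshold
```

A sudden jump in requests per second that trips this check could trigger rate limiting or an alert, while ordinary fluctuations pass through.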

Conclusion

In conclusion, the integration of load balancers is indispensable for achieving high availability in modern web applications and services. By intelligently distributing traffic, monitoring server health, and providing features like SSL/TLS offloading and session persistence, load balancers ensure continuous operation and optimal performance. As technology evolves, understanding the nuances of load balancing will remain crucial for building and maintaining resilient, scalable, and user-friendly online experiences.

Embracing these practices ensures that your online presence remains available and performs optimally, regardless of the challenges that may arise.

Answers to Common Questions

What is the primary function of a load balancer?

A load balancer distributes network traffic across multiple servers to prevent any single server from becoming overloaded. This improves performance, reliability, and scalability.

How does a load balancer contribute to high availability?

Load balancers monitor the health of backend servers and automatically reroute traffic away from failing servers, ensuring that users are always directed to available resources. This minimizes downtime.

What are some common load balancing algorithms?

Common algorithms include round robin (distributes traffic sequentially), least connections (sends traffic to the server with the fewest active connections), and IP hash (directs traffic from the same IP address to the same server).

What are the different types of load balancers?

Load balancers can be hardware-based, software-based, or cloud-based. Each type offers different advantages in terms of cost, performance, and scalability.

How does session persistence work?

Session persistence (also known as sticky sessions) ensures that a user’s requests are consistently directed to the same backend server throughout their session. This is useful for applications that store user data on the server.