What is Test-Driven Development (TDD)? It’s a software development approach that flips the traditional coding process on its head, placing testing at the forefront. Instead of writing code and then testing it, TDD emphasizes writing tests *before* you write the actual code. This may sound counterintuitive, but it leads to cleaner, more robust, and more maintainable software.

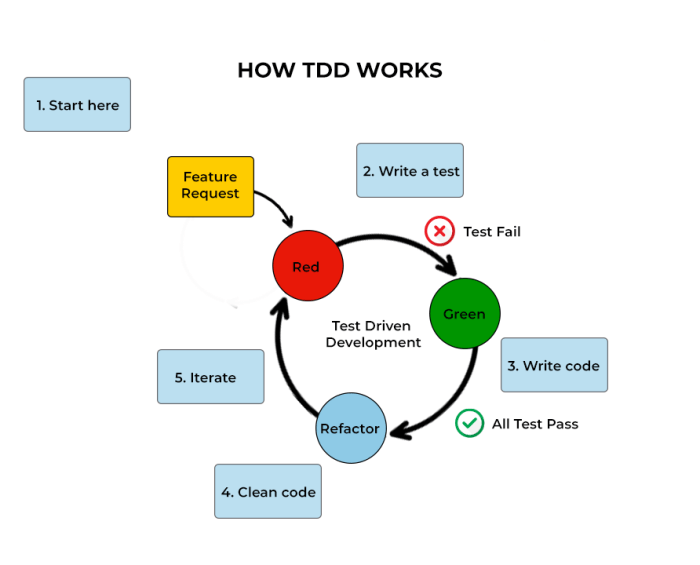

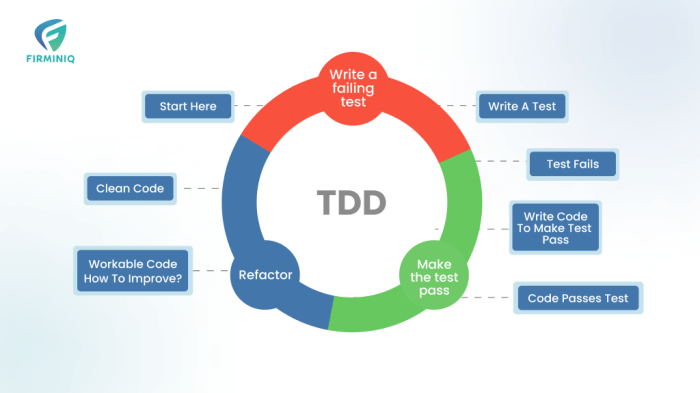

This guide will explore the core principles of TDD, its benefits, and how it can be implemented in various programming languages. We’ll journey through the ‘Red-Green-Refactor’ cycle, delve into effective testing techniques, and examine how TDD integrates with Agile methodologies. Whether you’re a seasoned developer or just starting, understanding TDD can significantly improve your software development practices.

Introduction to Test-Driven Development (TDD)

Test-Driven Development (TDD) is a software development process that relies on the repetition of a very short development cycle: first the developer writes an (initially failing) automated test case that defines a desired improvement or new function, then produces the minimum amount of code to pass that test, and finally refactors the new code to acceptable standards. This approach encourages a more robust and maintainable codebase.

Core Concept of TDD

At its heart, TDD is about writing the tests *before* you write the code that will make those tests pass. This seemingly counterintuitive approach fundamentally changes the way software is developed. It forces developers to think about the desired functionality and behavior of their code *before* they start coding. This process helps clarify requirements and design, leading to more focused and less error-prone development.

Historical Overview of TDD

TDD’s roots are in Extreme Programming (XP), a software development methodology that emphasizes frequent releases, short development cycles, and continuous feedback. The principles of TDD emerged in the late 1990s, largely through the work of Kent Beck, who is considered a key figure in popularizing the practice. It grew out of XP’s test-first programming practice and became recognized as a discipline in its own right, gaining traction as developers saw its benefits in producing more reliable and maintainable code.

Over time, TDD evolved, with various tools and frameworks emerging to support the process, solidifying its place as a standard practice in many software development teams.

Fundamental Principle: Writing Tests Before the Code

The core tenet of TDD is the “test-first” approach. This involves a specific sequence of steps:

- Write a Failing Test: Before writing any code, a developer writes a test that describes a small piece of functionality or a specific behavior the code should exhibit. This test will initially fail because the code doesn’t exist yet.

- Write the Minimum Code to Pass the Test: The developer then writes the simplest code possible to make the test pass. The focus is on getting the test to pass quickly, not on producing perfect or elegant code at this stage.

- Refactor the Code: Once the test passes, the developer refactors the code to improve its design, readability, and maintainability, ensuring that the tests continue to pass after the changes.

This cycle, often referred to as “Red-Green-Refactor,” is repeated for each small piece of functionality.

The “Red-Green-Refactor” cycle is the foundation of TDD.

This iterative process ensures that the code is always driven by tests, providing a safety net for changes and refactoring. Over time, this practice tends to produce higher-quality code, fewer bugs, and a more maintainable software product.

The TDD Cycle

The core of Test-Driven Development (TDD) is the iterative Red-Green-Refactor cycle. This cycle is a disciplined approach to software development where tests are written before the actual code. It ensures that the code is written to meet specific requirements, is well-tested, and remains maintainable.

Red-Green-Refactor Cycle

The Red-Green-Refactor cycle is the fundamental process of TDD. It involves three distinct phases that are repeated throughout the development process.

- Red: In this phase, a failing test is written. This test defines a specific requirement or functionality that the code should implement. The test initially fails because the corresponding code does not yet exist. This phase establishes the “problem” that needs to be solved.

- Green: The goal of the Green phase is to make the test pass. This is achieved by writing the minimal amount of code necessary to satisfy the test. The focus is on functionality, not elegance or efficiency. The code is written to solve the problem defined by the test.

- Refactor: Once the test passes, the Refactor phase begins. This involves improving the code without changing its external behavior (i.e., without breaking the passing tests). This includes cleaning up the code, removing duplication, improving readability, and optimizing performance. The tests serve as a safety net during refactoring, ensuring that the changes do not introduce regressions.

Step-by-Step Procedure for Implementing the Cycle

Implementing the Red-Green-Refactor cycle comes down to a fixed sequence of small steps, with a test run after every change:

- Write a Test (Red Phase): Start by writing a test that defines a small, specific piece of functionality. This test should fail initially. The test should clearly articulate the desired behavior of the code.

- Run the Test: Execute the test to confirm that it fails. This shows that the test can actually detect the missing functionality; a test that passes before any code is written is checking the wrong thing, or nothing at all.

- Write the Minimum Code to Pass the Test (Green Phase): Write the simplest possible code to make the test pass. Focus on functionality rather than perfection at this stage. This may involve “hardcoding” values or implementing a basic solution.

- Run the Test: Execute the test again to verify that it now passes.

- Refactor the Code (Refactor Phase): Review and improve the code, paying attention to readability, design, and performance. Ensure that the code remains clean and efficient.

- Run the Test Suite: Execute all tests to ensure that the refactoring has not introduced any regressions. This ensures that the code continues to function as expected after the changes.

- Repeat: Repeat the cycle, adding new tests and functionality incrementally. Continue to iterate through the Red-Green-Refactor cycle for each new feature or change.
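The steps above can be compressed into a single throwaway script, purely to make the Red and Green states visible. This is a sketch: `is_even` and `run_suite` are hypothetical names, and redefining the function mid-script only stands in for what you would normally do, which is edit the source file and rerun `python -m unittest`. The Refactor step is omitted because there is nothing to tidy yet.

```python
import io
import unittest


# Red: the function under test exists only as a placeholder with no behavior yet.
def is_even(n):
    raise NotImplementedError("is_even is not written yet")


class TestIsEven(unittest.TestCase):
    def test_two_is_even(self):
        self.assertTrue(is_even(2))

    def test_three_is_odd(self):
        self.assertFalse(is_even(3))


def run_suite():
    """Run TestIsEven quietly and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestIsEven)
    result = unittest.TextTestRunner(stream=io.StringIO()).run(suite)
    return result.wasSuccessful()


# Run the tests: they should fail (Red).
red_passed = run_suite()


# Green: the minimum code that makes the tests pass. Rebinding the module-level
# name is only a trick to show both states in one script.
def is_even(n):  # deliberate redefinition for this demo
    return n % 2 == 0


# Run the tests again: they should now pass (Green).
green_passed = run_suite()

print(f"Red run passed: {red_passed}; Green run passed: {green_passed}")
# prints: Red run passed: False; Green run passed: True
```

The test methods look up `is_even` in the module's globals each time they run, which is why the second run sees the new definition.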

Example of the Cycle with Code Snippets (Python)

The following example illustrates the Red-Green-Refactor cycle using Python and demonstrates how the process works in practice.

- Red Phase: Write a failing test

We want to create a function that adds two numbers. First, we define a test using the `unittest` framework:

```python
import unittest


class TestAdder(unittest.TestCase):
    def test_add_two_numbers(self):
        self.assertEqual(add(2, 3), 5)
```

This test asserts that the `add` function, when called with arguments 2 and 3, should return 5. Because `add` does not exist yet, running the test raises a `NameError`, which `unittest` reports as an error rather than an assertion failure; either way, the run is red.

- Green Phase: Write the minimum code to pass the test

Now, we write the simplest possible code to make the test pass. This involves creating the `add` function:

```python
def add(x, y):
    return x + y
```

This implementation directly adds the two input numbers and returns the result.

- Refactor Phase: Refactor the code

In this example, the code is already simple and efficient. However, if we had more complex logic, we could refactor it. For instance, if we initially implemented the `add` function using a less efficient method, we could refactor it to use the `+` operator for improved performance. Since the original implementation is straightforward, no significant refactoring is needed in this case.

We can add a comment to clarify the function’s purpose.

```python
def add(x, y):
    # Adds two numbers
    return x + y
```

Then, we run all tests to ensure they still pass after the (minor) refactoring.
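To illustrate the Repeat step, here is the `add` example after a hypothetical second cycle, combined into a single file. The new requirement (rejecting non-numeric arguments, which `+` would otherwise concatenate as strings) is an assumption for illustration, not part of the original example: its test was written first and failed until the type check was added. Saved as, say, `test_adder.py` (a hypothetical file name), it can be run with `python -m unittest test_adder.py`.

```python
import unittest


def add(x, y):
    # Adds two numbers.
    # Second cycle (hypothetical requirement): reject non-numeric input,
    # which would otherwise be concatenated, e.g. "2" + "3" == "23".
    for value in (x, y):
        if not isinstance(value, (int, float)):
            raise TypeError(f"add() expects numbers, got {type(value).__name__}")
    return x + y


class TestAdder(unittest.TestCase):
    # First cycle: the original test.
    def test_add_two_numbers(self):
        self.assertEqual(add(2, 3), 5)

    # Second cycle: written first and run while add() still just returned
    # x + y, so it failed (Red) until the type check above was added (Green).
    def test_add_rejects_strings(self):
        with self.assertRaises(TypeError):
            add("2", "3")
```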

Benefits of Using TDD

Test-Driven Development (TDD) offers numerous advantages that enhance the software development process and the resulting code. Implementing TDD isn’t just about writing tests; it fundamentally changes the way developers approach coding, leading to higher-quality, more maintainable, and less buggy software. These benefits contribute to reduced development costs, improved collaboration, and increased confidence in the codebase.

Improved Code Quality

TDD directly influences code quality by enforcing a disciplined approach to development. The focus on writing tests *before* the code itself encourages developers to think critically about the intended functionality and design of each component. This proactive approach leads to cleaner, more modular, and more robust code.

- Reduced Complexity: Writing tests first forces developers to break down complex problems into smaller, more manageable units. Each test focuses on a specific aspect of the code, making it easier to understand, debug, and modify. This modularity reduces the overall complexity of the system.

- Enhanced Design: The TDD cycle encourages a design that is testable. This often leads to loosely coupled components and well-defined interfaces, promoting better separation of concerns and making the code more flexible.

- Increased Code Coverage: TDD tends to produce high code coverage, because production code is written only to make a failing test pass. Each test verifies a specific piece of functionality, so a large share of the codebase is exercised and validated, which reduces the likelihood of undetected errors.

- Early Error Detection: Bugs are identified and addressed early in the development cycle, when they are easier and less expensive to fix. This proactive approach prevents errors from propagating through the system, saving time and resources.
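To make the “Enhanced Design” point concrete: when the test for a time-dependent function is written first, it quickly becomes obvious that calling `datetime.now()` inside the function makes the result impossible to pin down. A test-first design therefore tends to accept the clock as a parameter. The `greeting` function below is a hypothetical illustration of that kind of seam, not a prescribed pattern.

```python
import unittest
from datetime import datetime
from typing import Callable


def greeting(now: Callable[[], datetime] = datetime.now) -> str:
    """Hypothetical example: choose a greeting based on the time of day.

    The clock is a parameter: production code uses the real clock by default,
    while a test passes a function that returns a fixed moment.
    """
    return "Good morning" if now().hour < 12 else "Good afternoon"


class TestGreeting(unittest.TestCase):
    def test_morning(self):
        fixed_clock = lambda: datetime(2024, 1, 15, 9, 30)
        self.assertEqual(greeting(fixed_clock), "Good morning")

    def test_afternoon(self):
        fixed_clock = lambda: datetime(2024, 1, 15, 15, 0)
        self.assertEqual(greeting(fixed_clock), "Good afternoon")
```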

Bug Reduction Advantages

One of the most significant benefits of TDD is that it can substantially reduce the number of bugs that reach the final product. By writing tests before the code, developers can catch and correct errors early in the development process, leading to a more stable and reliable application.

- Proactive Bug Prevention: TDD shifts the focus from reactive debugging to proactive bug prevention. Tests act as a safety net, catching errors before they can cause significant problems.

- Regression Testing: With a comprehensive suite of tests in place, developers can change the code with confidence, because the tests automatically verify that existing functionality remains intact and flag regressions as soon as they appear.

- Reduced Debugging Time: When a test fails, it provides immediate feedback on the location and nature of the error. This simplifies the debugging process and reduces the time required to identify and fix bugs.

- Increased Code Reliability: The combination of early error detection, regression testing, and high code coverage results in a more reliable and robust application. This reduces the risk of unexpected behavior and improves the overall user experience.

Contribution to More Maintainable Codebases

TDD contributes significantly to the maintainability of a codebase. Well-tested code is easier to understand, modify, and extend. The test suite serves as living documentation, clarifying the intended behavior of each component and making it easier for developers to work on the code over time.

- Improved Code Understanding: Tests serve as a form of documentation, explaining how the code is supposed to work. This makes it easier for developers to understand the purpose and functionality of different code sections.

- Simplified Refactoring: With a comprehensive test suite in place, developers can confidently refactor the code without fear of breaking existing functionality. The tests provide a safety net that ensures that the refactoring process does not introduce new bugs.

- Easier Feature Addition: When adding new features, developers can write tests first to define the desired behavior. This ensures that the new feature integrates seamlessly with the existing code and that no regressions are introduced.

- Reduced Technical Debt: TDD encourages developers to write clean, well-designed code, reducing the accumulation of technical debt. This leads to a more maintainable and sustainable codebase over the long term.
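In Python, the “tests as documentation” idea can be taken literally with the standard-library `doctest` module, which runs the interactive examples embedded in docstrings. The conversion function below is a hypothetical example; `python -m doctest -v yourfile.py` (file name assumed) runs the same checks from the command line.

```python
import doctest


def celsius_to_fahrenheit(celsius):
    """Convert a temperature from degrees Celsius to degrees Fahrenheit.

    The examples below are both documentation and executable tests:

    >>> celsius_to_fahrenheit(0)
    32.0
    >>> celsius_to_fahrenheit(100)
    212.0
    >>> celsius_to_fahrenheit(-40)
    -40.0
    """
    return celsius * 9 / 5 + 32


if __name__ == "__main__":
    doctest.testmod()  # prints nothing when every example passes
```

If someone changes the formula, the docstring examples fail, so the documentation cannot silently drift out of date.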

Writing Effective Tests

Writing effective tests is a critical aspect of Test-Driven Development (TDD). Good tests provide confidence in the codebase, catch bugs early, and facilitate refactoring. They also serve as documentation, clarifying the intended behavior of the code. This section will delve into the characteristics of a good test and explore different types of tests, providing examples to illustrate their practical application. Finally, we’ll examine how to structure tests for optimal readability and maintainability.

Characteristics of a Good Test

A good test possesses several key characteristics. These characteristics contribute to the test’s effectiveness in validating code and ensuring its reliability.

Clear and Concise

A good test should be easy to understand and should clearly state what it is testing. It should avoid unnecessary complexity.

Independent

Tests should not depend on the order in which they are executed or on the results of other tests. This ensures that a failing test accurately reflects a problem in the code and is not caused by an issue in another test.

Repeatable

Tests should produce the same results every time they are run. This repeatability is crucial for reliable testing and debugging.

Fast

Tests should execute quickly. Slow tests can hinder the development process and discourage frequent testing.

Focused

Each test should verify a single, specific aspect of the code. This focused approach makes it easier to identify the root cause of a failure.

Automated

Tests should be automated so they can be run easily and frequently. This is essential for continuous integration and continuous delivery.

Reliable

Tests should reliably detect failures in the code. They should not produce false positives or false negatives.
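As one concrete way to keep a test repeatable, randomness can be injected rather than drawn from a global source, so a test can pass a seeded generator and get the same sequence on every run. `shuffle_deck` is a hypothetical function used only for illustration.

```python
import random
import unittest


def shuffle_deck(deck, rng=None):
    """Hypothetical function: return a shuffled copy of deck.

    Accepting an optional random.Random instance lets tests pass a seeded
    generator, while production code can rely on the default.
    """
    if rng is None:
        rng = random.Random()
    shuffled = list(deck)
    rng.shuffle(shuffled)
    return shuffled


class TestShuffleDeck(unittest.TestCase):
    def test_same_seed_gives_same_order(self):
        deck = list(range(10))
        first = shuffle_deck(deck, random.Random(42))
        second = shuffle_deck(deck, random.Random(42))
        self.assertEqual(first, second)

    def test_shuffle_keeps_every_card(self):
        deck = list(range(10))
        self.assertEqual(sorted(shuffle_deck(deck, random.Random(0))), deck)
```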

Different Types of Tests

Different types of tests serve distinct purposes in the software development lifecycle. Understanding these different types is crucial for building a robust testing strategy. Here are some common types of tests:

Unit Tests

These tests verify the behavior of individual units of code, such as functions or methods: the smallest testable parts of an application. They are typically the fastest and most fine-grained tests.

Example

A unit test for a function that calculates the area of a rectangle would assert that the function returns the correct area for various input values (e.g., different lengths and widths).
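A minimal `unittest` sketch of the rectangle-area unit test described above. `rectangle_area` and its rejection of negative dimensions are assumptions made for the example; `subTest` reports each input pair separately if one of them fails.

```python
import unittest


def rectangle_area(length, width):
    """Hypothetical function under test: area of a length x width rectangle."""
    if length < 0 or width < 0:
        raise ValueError("dimensions must be non-negative")
    return length * width


class TestRectangleArea(unittest.TestCase):
    def test_returns_correct_area_for_various_inputs(self):
        cases = [(2, 3, 6), (5, 5, 25), (0, 4, 0), (1.5, 2, 3.0)]
        for length, width, expected in cases:
            with self.subTest(length=length, width=width):
                self.assertEqual(rectangle_area(length, width), expected)

    def test_negative_dimension_raises_value_error(self):
        with self.assertRaises(ValueError):
            rectangle_area(-1, 3)
```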

Integration Tests

These tests verify the interaction between different units of code or components. They ensure that the different parts of the system work together correctly.

Example

An integration test might verify that a user registration process correctly interacts with the database to store user information and with the email service to send a confirmation email.
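A sketch of such an integration test, under stated assumptions: `register_user` and `FakeEmailService` are hypothetical, the database is a real in-memory SQLite database from the standard library (so the SQL actually runs), and the email service is replaced by a fake that records messages instead of sending them.

```python
import sqlite3
import unittest


class FakeEmailService:
    """Records messages instead of sending them (stands in for a real mail service)."""

    def __init__(self):
        self.sent = []

    def send(self, to, subject):
        self.sent.append((to, subject))


def register_user(conn, email_service, email):
    """Hypothetical registration flow: store the user, then send a confirmation."""
    conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
    conn.commit()
    email_service.send(email, "Please confirm your account")


class TestUserRegistration(unittest.TestCase):
    def setUp(self):
        # A real (in-memory) SQLite database, so the SQL is actually exercised.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE)"
        )
        self.email = FakeEmailService()

    def tearDown(self):
        self.conn.close()

    def test_registration_stores_user_and_sends_confirmation(self):
        register_user(self.conn, self.email, "ada@example.com")

        rows = self.conn.execute("SELECT email FROM users").fetchall()
        self.assertEqual(rows, [("ada@example.com",)])
        self.assertEqual(
            self.email.sent, [("ada@example.com", "Please confirm your account")]
        )
```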

Component Tests

Component tests focus on verifying the functionality of a specific component or module of the system. They often involve testing the component’s interactions with its dependencies.

Example

A component test for a user interface component might verify that it correctly displays data retrieved from a backend service and that user interactions trigger the expected actions.

System Tests (End-to-End Tests)

These tests verify the entire system, from the user interface to the database. They simulate real-world user scenarios.

Example

A system test might simulate a user logging in, placing an order, and checking the order status.

Acceptance Tests

These tests are used to validate that the system meets the requirements of the stakeholders. They are often written in a language that is understandable by non-technical users.

Example

An acceptance test might verify that a user can successfully create an account and log in with the correct credentials.

Performance Tests

These tests evaluate the performance characteristics of the system, such as response time, throughput, and resource utilization.

Example

A performance test might measure the time it takes to load a webpage under different levels of user traffic.

Structuring Tests for Readability and Maintainability

Structuring tests effectively is essential for ensuring their readability and maintainability. Well-structured tests are easier to understand, modify, and debug. Here are some key principles:

Naming Conventions

Use clear and descriptive names for test methods and classes. The names should accurately reflect the functionality being tested.

Example

Instead of `test1()`, use names like `testCalculateRectangleArea_ValidInput_ReturnsCorrectArea()`. This name immediately conveys the purpose of the test.

Test Organization

Organize tests logically, often using a directory structure that mirrors the structure of the code being tested. This makes it easier to find and manage tests.

Arrange-Act-Assert

Follow the Arrange-Act-Assert pattern. This pattern helps to structure tests in a consistent and readable way.

Arrange

Set up the necessary preconditions for the test.

Act

Execute the code being tested.

Assert

Verify the expected outcome.

Test Fixtures

Use test fixtures to set up and tear down the environment for each test, so that every test starts from a clean state. This keeps tests independent and repeatable.
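A minimal sketch of a fixture using `unittest`’s `setUp` and `tearDown` hooks. The temporary-directory scenario and the file name are hypothetical; the point is that each test receives its own fresh environment.

```python
import os
import shutil
import tempfile
import unittest


class TestWithTempDirectory(unittest.TestCase):
    def setUp(self):
        # Runs before every test: each test gets its own fresh, empty directory.
        self.tmpdir = tempfile.mkdtemp()

    def tearDown(self):
        # Runs after every test, even a failing one: remove what the test created.
        shutil.rmtree(self.tmpdir)

    def test_writes_report_file(self):
        path = os.path.join(self.tmpdir, "report.txt")
        with open(path, "w", encoding="utf-8") as f:
            f.write("ok")
        self.assertTrue(os.path.exists(path))

    def test_directory_starts_empty(self):
        # Passes regardless of test order, because setUp never reuses a directory.
        self.assertEqual(os.listdir(self.tmpdir), [])
```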

Avoid Duplication

Refactor test code to eliminate duplication. This improves maintainability and reduces the risk of errors. Create reusable helper methods or classes to avoid repeating common setup or assertion logic.
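One common way to remove duplicated setup and assertion logic is a small test-data builder plus a shared assertion helper. The order structure, `make_order`, `order_total_cents`, and `assertTotal` below are hypothetical names for illustration; prices are kept in integer cents to avoid floating-point comparisons.

```python
import unittest


def order_total_cents(order):
    """Hypothetical code under test: sum of quantity * unit price, in cents."""
    return sum(quantity * price for _, quantity, price in order["items"])


def make_order(**overrides):
    """Test-data builder: sensible defaults, overridden only where a test cares."""
    order = {"id": 1, "status": "new", "items": [("widget", 2, 999)]}
    order.update(overrides)
    return order


class TestOrderTotal(unittest.TestCase):
    def assertTotal(self, order, expected_cents):
        # Shared assertion helper with a descriptive failure message.
        self.assertEqual(
            order_total_cents(order),
            expected_cents,
            f"unexpected total for items {order['items']}",
        )

    def test_default_order(self):
        self.assertTotal(make_order(), 1998)

    def test_multiple_items(self):
        items = [("widget", 1, 999), ("gadget", 3, 250)]
        self.assertTotal(make_order(items=items), 1749)

    def test_empty_order_totals_zero(self):
        self.assertTotal(make_order(items=[]), 0)
```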

Keep Tests Focused

Ensure each test has a single responsibility. This makes it easier to understand the test’s purpose and to identify the cause of any failures.

By adhering to these principles, developers can create tests that are not only effective in detecting bugs but also contribute to the overall quality and maintainability of the codebase.

Common TDD Techniques

Test-Driven Development (TDD) employs various techniques to enhance the effectiveness and efficiency of the testing process. These techniques help developers write cleaner, more maintainable, and more robust code. This section explores some of the most commonly used TDD techniques.

Use of Mocks and Stubs in Testing

Mocks and stubs are essential tools in TDD, particularly when dealing with dependencies. They allow developers to isolate the unit under test and control its interactions with external components, making tests faster, more predictable, and easier to maintain. Mocks and stubs serve different purposes:

- Stubs: Stubs provide predefined responses to method calls. They are used to simulate the behavior of dependencies, allowing the unit under test to run without relying on the actual implementation of those dependencies. Stubs are typically used when the dependency’s behavior is simple and the test only needs it to return canned data; a stub-based test checks the unit’s output, not how the dependency was called.

- Mocks: Mocks are more sophisticated than stubs. They not only provide predefined responses but also verify that the unit under test interacts with its dependencies in the expected way. Mocks track method calls, including the number of times a method is called, the order of calls, and the arguments passed to the methods. Mocks are used to test the interactions between the unit under test and its dependencies.

Here’s an example using Python with the `unittest.mock` library to illustrate the difference. `MagicMock` can play either role; what makes it a stub or a mock is how the test uses it:

```python
import unittest
from unittest.mock import MagicMock


# Assume we have a class that interacts with an external service
class MyClass:
    def __init__(self, service):
        self.service = service

    def do_something(self, data):
        result = self.service.process_data(data)
        return result * 2


# Test with a stub: the fake service only supplies a canned return value
class MyClassStubTest(unittest.TestCase):
    def test_do_something_with_stub(self):
        stub_service = MagicMock()
        stub_service.process_data.return_value = 5  # Stub the return value

        my_instance = MyClass(stub_service)
        result = my_instance.do_something("some data")

        # Only the outcome is checked: 5 * 2 == 10
        self.assertEqual(result, 10)
```

In this stub example, we provide a predefined return value and check only the result.

```python
# Test with a mock: the focus is on how MyClass talks to the service
class MyClassMockTest(unittest.TestCase):
    def test_do_something_with_mock(self):
        mock_service = MagicMock()
        my_instance = MyClass(mock_service)

        my_instance.do_something("some data")

        # Verify the interaction: called exactly once, with the expected argument
        mock_service.process_data.assert_called_once_with("some data")
```

In this mock example, the assertion is about the interaction itself: that `process_data` was called exactly once, with the expected argument.

Applying the “Arrange-Act-Assert” Pattern

The “Arrange-Act-Assert” (AAA) pattern provides a clear structure for writing tests, making them easier to understand and maintain. This pattern helps developers organize their tests logically and ensures that each test focuses on a specific aspect of the unit under test. The AAA pattern consists of three distinct phases:

- Arrange: This phase sets up the test environment. It involves creating objects, setting up dependencies (using mocks or stubs), and preparing the data required for the test. This phase should focus on getting the system into the desired state before the action.

- Act: This phase executes the code that is being tested. It involves calling the method or function that is being tested, triggering the behavior that needs to be verified. This is the core of the test.

- Assert: This phase verifies the outcome of the action. It involves checking that the actual results match the expected results. Assertions are used to validate that the code behaved as expected.

Here’s an example in Python using the `unittest` framework:

```python
import unittest


class Calculator:
    def add(self, x, y):
        return x + y


class CalculatorTest(unittest.TestCase):
    def test_add_positive_numbers(self):
        # Arrange
        calculator = Calculator()
        x = 5
        y = 3
        expected_result = 8

        # Act
        actual_result = calculator.add(x, y)

        # Assert
        self.assertEqual(actual_result, expected_result)

    def test_add_negative_numbers(self):
        # Arrange
        calculator = Calculator()
        x = -5
        y = -3
        expected_result = -8

        # Act
        actual_result = calculator.add(x, y)

        # Assert
        self.assertEqual(actual_result, expected_result)
```

In this example:

- The “Arrange” phase sets up a `Calculator` object and defines the input values and the expected result.

- The “Act” phase calls the `add` method with the input values.

- The “Assert” phase checks if the actual result matches the expected result using `self.assertEqual()`.

This structure makes tests easy to read and understand.

Techniques for Handling Dependencies in Tests

Dependencies can complicate testing, but various techniques can be used to handle them effectively. The goal is to isolate the unit under test and control its interactions with dependencies to ensure the tests are reliable and focused. Here are several common techniques:

- Dependency Injection: This involves passing dependencies to the unit under test, typically through the constructor or method parameters. This allows you to replace the real dependencies with mocks or stubs during testing.

- Mocking and Stubbing: As discussed earlier, mocks and stubs are used to simulate the behavior of dependencies. They allow you to control the responses of dependencies and verify how the unit under test interacts with them.

- Test Doubles: “Test double” is a general term that encompasses mocks, stubs, spies, and fakes. All of them replace real dependencies with controlled versions during testing.

- Interface Segregation: Define small, focused interfaces for dependencies, so that the unit under test depends only on the methods it actually uses. This makes it easier to create mock or fake implementations of those interfaces for testing purposes.

- Using a Testing Framework: Most testing ecosystems provide support for test doubles and dependency injection. Python ships `unittest.mock` in its standard library, and pytest adds fixtures (a form of dependency injection) and `monkeypatch`; in Java, JUnit is commonly paired with a mocking library such as Mockito.

Here’s an example demonstrating dependency injection and mocking:

```python
import unittest
from unittest.mock import MagicMock


# Assume we have a class that depends on an external service
class DataProcessor:
    def __init__(self, service):
        self.service = service

    def process_data(self, data):
        processed_data = self.service.transform(data)
        return processed_data.upper()


# Test the DataProcessor class using a mock
class DataProcessorTest(unittest.TestCase):
    def test_process_data_with_mock(self):
        # Arrange
        mock_service = MagicMock()
        mock_service.transform.return_value = "transformed data"
        data_processor = DataProcessor(mock_service)
        input_data = "some data"
        expected_result = "TRANSFORMED DATA"

        # Act
        actual_result = data_processor.process_data(input_data)

        # Assert
        self.assertEqual(actual_result, expected_result)
        mock_service.transform.assert_called_once_with(input_data)
```

In this example, `DataProcessor` takes a `service` as a dependency.

The test creates a `MagicMock` object to simulate the service. The `transform` method of the mock is configured to return a specific value. This isolates the `DataProcessor` and allows the test to verify its behavior without relying on the actual service implementation.
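An alternative to `MagicMock` is to describe the dependency as an interface and hand-write a fake. The sketch below assumes Python 3.8+ for `typing.Protocol`; the `Transformer` protocol and `FakeTransformer` class are hypothetical names. Because `Protocol` uses structural typing, the fake does not need to inherit from anything; it only has to provide a matching `transform` method.

```python
import unittest
from typing import Protocol


class Transformer(Protocol):
    """The narrow interface DataProcessor relies on (hypothetical name)."""

    def transform(self, data: str) -> str: ...


class DataProcessor:
    def __init__(self, service: Transformer):
        self.service = service  # injected dependency

    def process_data(self, data: str) -> str:
        return self.service.transform(data).upper()


class FakeTransformer:
    """Hand-written fake: a tiny working implementation that also records calls."""

    def __init__(self):
        self.calls = []

    def transform(self, data: str) -> str:
        self.calls.append(data)
        return f"transformed {data}"


class DataProcessorWithFakeTest(unittest.TestCase):
    def test_process_data_uppercases_transformed_text(self):
        fake = FakeTransformer()
        processor = DataProcessor(fake)

        result = processor.process_data("some data")

        self.assertEqual(result, "TRANSFORMED SOME DATA")
        self.assertEqual(fake.calls, ["some data"])
```

A hand-written fake is more code than a `MagicMock`, but if `DataProcessor` misspells a method name, the fake raises `AttributeError` instead of silently returning a new mock attribute.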

TDD vs. Other Testing Approaches

Understanding how Test-Driven Development (TDD) compares to other testing approaches is crucial for making informed decisions about software development practices. This section will delve into the differences between TDD and Behavior-Driven Development (BDD), contrast TDD with traditional testing methodologies, and explore scenarios where TDD might not be the most suitable choice.

Comparing TDD with Behavior-Driven Development (BDD)

Both TDD and BDD are agile software development practices that emphasize testing. However, they differ in their focus and approach. BDD, built upon TDD principles, places a greater emphasis on collaboration and understanding user behavior.

- Focus: TDD primarily focuses on the technical implementation of the code. BDD, on the other hand, prioritizes the behavior of the system from the user’s perspective. It emphasizes how the system *should* behave.

- Test Language: TDD tests are typically written using technical terms specific to the programming language. BDD uses a more business-readable, domain-specific language (DSL), often in a “Given-When-Then” format, making tests accessible to non-technical stakeholders. For example:

Given the user is logged in

When the user clicks the “Submit” button

Then the system should display a confirmation message.

- Collaboration: BDD strongly encourages collaboration between developers, testers, and business stakeholders to define and validate system behavior. TDD, while it can benefit from collaboration, is often driven primarily by the developer.

- Test Scope: TDD tends to focus on unit tests, testing individual components in isolation. BDD can encompass a wider range of tests, including integration and acceptance tests, to verify the system’s overall behavior.

Differences Between TDD and Traditional Testing Methodologies

Traditional testing methodologies, such as the waterfall model’s testing phase, differ significantly from TDD. The key distinction lies in when testing occurs and how it is integrated into the development process.

- Testing Phase: Traditional testing typically occurs after the code has been written. In contrast, TDD involves writing tests *before* the code, guiding the development process.

- Test Purpose: Traditional testing aims to find bugs and verify that the code meets the requirements. TDD not only identifies bugs but also drives the design of the code, ensuring it is testable and well-structured.

- Test Coverage: Traditional testing may not always achieve comprehensive test coverage, especially if testing is rushed or budget-constrained. TDD, due to its test-first approach, encourages higher test coverage, as tests are written for every piece of functionality.

- Feedback Loop: Traditional testing provides feedback later in the development cycle, potentially leading to costly rework. TDD offers immediate feedback, allowing developers to catch errors early and iterate quickly.

- Code Design: Traditional testing may not significantly influence code design. TDD promotes a design that is inherently testable, leading to more modular and maintainable code. For example, the need to write a test for a specific functionality often leads to a cleaner separation of concerns within the code.

Scenarios Where TDD Might Not Be the Best Approach

While TDD offers numerous benefits, it’s not always the ideal solution for every project. Certain situations might make alternative testing strategies or a hybrid approach more suitable.

- Legacy Codebases: Applying TDD to large, complex, and poorly documented legacy codebases can be challenging. The lack of existing tests and the difficulty of refactoring without breaking existing functionality can hinder the process. It might be more practical to introduce tests gradually or refactor the code first before adopting TDD fully.

- Rapid Prototyping: In scenarios where the primary goal is rapid prototyping and exploring different design options, the overhead of writing tests upfront might slow down the process. The focus is often on quick iterations and experimentation rather than rigorous testing at the initial stages.

- Performance-Critical Systems: In highly performance-critical systems, the focus might be on optimizing code for speed and efficiency rather than solely on testability. While TDD can still be used, the emphasis might shift towards performance testing and profiling after the initial functionality is implemented.

- Projects with Fixed Requirements: In projects with very rigid and unchanging requirements, the benefits of TDD’s iterative design approach might be less pronounced. If the requirements are well-defined and unlikely to change, traditional testing or a combination of testing methods might suffice.

- Resource Constraints: Implementing TDD requires a certain level of skill and discipline. In projects with limited resources, such as time or experienced developers, the investment in learning and practicing TDD might not be feasible. In such cases, a more traditional testing approach might be considered.

Implementing TDD in Different Programming Languages

The principles of Test-Driven Development (TDD) are language-agnostic, meaning the core concepts remain the same regardless of the programming language used. However, the specific tools and frameworks for implementing TDD vary depending on the language ecosystem. This section explores practical implementations of TDD in Python, Java, and JavaScript, showcasing how to write tests and integrate them into the development workflow.

Implementing TDD in Python using unittest

Python offers a built-in testing framework called `unittest`, which is part of the Python standard library. This framework provides the necessary tools for writing and running tests based on the principles of TDD. To effectively utilize `unittest`, follow these steps:

- Write a Failing Test: Begin by writing a test that describes the desired behavior of a function or class. This test should initially fail.

- Write the Minimal Code: Implement the smallest amount of code necessary to make the test pass.

- Refactor: Refactor the code to improve its structure, readability, and efficiency without changing its behavior.

Here’s an example demonstrating TDD with `unittest`:

```python
# my_calculator.py
class Calculator:
    def add(self, x, y):
        return x + y
```

```python
# test_my_calculator.py
import unittest
from my_calculator import Calculator

class TestCalculator(unittest.TestCase):
    def test_add(self):
        calculator = Calculator()
        result = calculator.add(2, 3)
        self.assertEqual(result, 5)

if __name__ == '__main__':
    unittest.main()
```

In this example:

- `my_calculator.py` defines a simple `Calculator` class.

- `test_my_calculator.py` contains the test suite using `unittest`.

- `TestCalculator` inherits from `unittest.TestCase`.

- `test_add` is a test method that asserts the result of the `add` method.

- Running `test_my_calculator.py` executes the tests. In TDD order the test is written first, so while `Calculator` has no `add` method the run reports an error (an `AttributeError`); this is the red step. The minimal implementation of `add` is then written to make the test pass.
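The red and green steps can be sketched in a single script, assuming Python 3 and only the standard library. The class is patched at runtime purely to compress the cycle into one file; in a real project you would edit `my_calculator.py` between test runs. Note that `unittest` counts the missing method as an *error* rather than a *failure*.

```python
import io
import unittest


class Calculator:
    """Red phase: the class exists, but add() has not been written yet."""


class TestCalculator(unittest.TestCase):
    def test_add(self):
        self.assertEqual(Calculator().add(2, 3), 5)


def run_suite():
    # Run the test case quietly and return the unittest.TestResult.
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestCalculator)
    return unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)


red = run_suite()
# The AttributeError is recorded in result.errors, not result.failures.
print("red:", red.wasSuccessful(), len(red.errors), len(red.failures))  # red: False 1 0


def add(self, x, y):
    # Green phase: the minimal implementation that satisfies the test.
    return x + y


Calculator.add = add
green = run_suite()
print("green:", green.wasSuccessful())  # green: True
```

Once the test is green, the refactor step changes the code's structure while rerunning the same test to confirm it stays green.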

Setting up TDD in Java using JUnit

JUnit is a widely used testing framework in Java for implementing TDD. It provides annotations, assertions, and other utilities to facilitate the writing and execution of tests.

Setting up JUnit involves these steps:

- Add JUnit to Your Project: Include the JUnit dependency in your project’s build configuration (e.g., `pom.xml` for Maven or `build.gradle` for Gradle).

- Create a Test Class: Create a Java class to hold your tests. This class typically resides in a separate “test” directory.

- Write Test Methods: Annotate test methods with `@Test`. These methods contain the assertions that verify the expected behavior.

- Run Tests: Use your IDE or build tool to run the tests and view the results.

Here’s a Java example using JUnit:

```java
// Calculator.java
public class Calculator {
    public int add(int x, int y) {
        return x + y;
    }
}
```

```java
// CalculatorTest.java
import org.junit.jupiter.api.Test;
import static org.junit.jupiter.api.Assertions.assertEquals;

public class CalculatorTest {
    @Test
    public void testAdd() {
        Calculator calculator = new Calculator();
        int result = calculator.add(2, 3);
        assertEquals(5, result);
    }
}
```

In this example:

- `Calculator.java` defines a simple `Calculator` class.

- `CalculatorTest.java` is the test class.

- `@Test` annotation marks the `testAdd` method as a test case.

- `assertEquals` is an assertion method that checks if the actual result matches the expected result.

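- Because the test is written first, `CalculatorTest` does not even compile until `Calculator.add` exists; that compile failure is the first red step. The minimal implementation of `add` is then added, and the test passes once it returns the correct sum.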

Using TDD in JavaScript with Jest

Jest is a popular JavaScript testing framework developed by Facebook. It offers a simple and intuitive API, excellent performance, and a wide range of features, including snapshot testing and mocking.

To implement TDD with Jest:

- Install Jest: Install Jest as a development dependency using npm or yarn: `npm install --save-dev jest` or `yarn add --dev jest`.

- Write Tests: Create test files (typically with the `.test.js` or `.spec.js` extension) and write test cases using Jest’s `test` and `expect` functions.

- Run Tests: Run the tests using the `jest` command in your terminal.

Here’s a JavaScript example using Jest:

```javascript
// calculator.js
module.exports = {
  add: (x, y) => x + y,
};
```

```javascript
// calculator.test.js
const calculator = require('./calculator');

test('adds 2 + 3 to equal 5', () => {
  expect(calculator.add(2, 3)).toBe(5);
});
```

In this example:

- `calculator.js` exports an `add` function.

- `calculator.test.js` contains the test.

- `test` is Jest’s function for defining a test case.

- `expect` and `toBe` are Jest’s assertion functions.

- The test checks if `calculator.add(2, 3)` returns 5. If not, the test fails. After the test fails, the minimal code is added to pass the test.

Test Coverage and Code Quality

Test coverage is a critical aspect of software development, particularly when employing Test-Driven Development (TDD). It provides a measure of how much of the codebase is exercised by automated tests. A high level of test coverage often indicates a more robust and reliable software product, as a larger portion of the code has been verified. This section explores the concept of test coverage, how to measure and improve it, and its correlation with code quality.

Understanding Test Coverage

Test coverage quantifies the extent to which the source code is executed when the test suite is run. It helps identify areas of the code that are not being tested and therefore might contain undiscovered bugs. The goal is not necessarily 100% coverage, as this can be difficult and might involve testing trivial code, but rather to achieve a level of coverage that provides confidence in the software’s reliability.

There are several metrics used to assess test coverage:

- Line Coverage: Measures the percentage of lines of code that have been executed during testing. This is the simplest metric, but it can be misleading as it doesn’t account for conditional statements.

- Branch Coverage (or Decision Coverage): Measures the percentage of branches (e.g., `if` statements, `switch` statements) that have been tested. This ensures that both sides of a conditional statement are exercised.

- Condition Coverage (or Predicate Coverage): Measures the coverage of individual conditions within a boolean expression. This is particularly important when dealing with complex logical expressions.

- Statement Coverage: Measures the percentage of statements in the source code that have been executed during testing.

- Function Coverage: Measures the percentage of functions/methods that have been called during testing.

- Path Coverage: Measures the percentage of possible paths through a function or method that have been tested. This is the most comprehensive but also the most complex to achieve.
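The gap between line coverage and branch coverage is easiest to see on a small function. The sketch below uses a hypothetical `shipping_cost` rule and a toy probe built on the standard library's `sys.settrace`; it only illustrates the idea and is not how a real coverage tool reports results. A single test input executes every line of the function, yet never takes the path where the `if` condition is false.

```python
import sys


def shipping_cost(order_total: int) -> int:
    # Hypothetical business rule: orders of 50 or more ship free.
    cost = 5
    if order_total >= 50:
        cost = 0
    return cost


def lines_run(func, *args):
    """Toy line-coverage probe: record which lines of `func` execute for one call."""
    target = func.__code__
    executed = set()

    def tracer(frame, event, arg):
        # Only count "line" events from the function being measured.
        if frame.f_code is target and event == "line":
            executed.add(frame.f_lineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return executed


free = lines_run(shipping_cost, 60)  # the only input a thin test suite might use
paid = lines_run(shipping_cost, 10)

# Every line reached by the second input was already reached by the first,
# so one test gives full line coverage of this function...
print(paid < free)  # True
# ...but the False outcome of the `if` was never exercised by that test,
# which is exactly what branch coverage would flag.
```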

Measuring and Improving Test Coverage

Measuring test coverage involves using specialized tools integrated into the development environment or build process. These tools analyze the source code and the test suite to determine which parts of the code are executed during testing. Many modern IDEs and testing frameworks offer built-in coverage analysis capabilities.

Here’s how to measure and improve test coverage:

- Utilize Coverage Tools: Employ tools like JaCoCo (for Java), Istanbul (for JavaScript), Coverage.py (for Python), or gcov (for C/C++) to generate coverage reports. These reports highlight areas of the code that are not covered by tests.

- Analyze Coverage Reports: Examine the reports generated by coverage tools. Identify code sections with low coverage.

- Write More Tests: Create new tests or modify existing ones to cover the uncovered code. Focus on edge cases, boundary conditions, and potential failure scenarios.

- Refactor Tests: Review existing tests to ensure they are effective and cover the intended functionality. Refactor tests to improve their clarity and maintainability.

- Prioritize High-Risk Areas: Focus testing efforts on critical parts of the application, such as security-sensitive components or modules with a history of bugs.

- Iterative Process: Test coverage improvement is an iterative process. Regularly run coverage analysis, identify gaps, write more tests, and repeat the cycle.
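As a sketch of the "write more tests" step, assume a coverage report flags a partially covered branch in the same hypothetical `shipping_cost` rule. With Coverage.py, running `coverage run --branch -m unittest` followed by `coverage report -m` lists the lines (and, with branch measurement, the branch arcs) that were never executed. Boundary-value tests close that gap:

```python
import unittest


def shipping_cost(order_total: int) -> int:
    # Hypothetical business rule: orders of 50 or more ship free, others pay 5.
    cost = 5
    if order_total >= 50:
        cost = 0
    return cost


class TestShippingCost(unittest.TestCase):
    def test_boundary_values(self):
        # Values on both sides of the threshold, plus the threshold itself,
        # exercise both outcomes of the `if` and catch off-by-one mistakes
        # such as writing `>` instead of `>=`.
        cases = {0: 5, 49: 5, 50: 0, 51: 0}
        for total, expected in cases.items():
            with self.subTest(order_total=total):
                self.assertEqual(shipping_cost(total), expected)
```

`subTest` reports each failing input separately, so one broken boundary does not hide the others.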

An example of a simplified coverage report could show that a specific class has 80% line coverage, 60% branch coverage, and 90% statement coverage. This suggests that while most lines and statements are executed, some branches within the code are not being tested.

Correlation between High Test Coverage and Code Quality

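High test coverage is not a guarantee of bug-free code, but it is generally associated with improved code quality: well-tested code tends to be more reliable, easier to maintain, and less prone to regressions. Keep in mind that coverage only shows which code ran during the tests, not whether the tests checked the right behavior.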

Here’s how high test coverage contributes to code quality:

- Reduced Bugs: High coverage increases the likelihood of detecting and fixing bugs early in the development cycle.

- Improved Maintainability: Code with good test coverage is easier to understand and modify, as tests act as documentation and help ensure that changes don’t break existing functionality.

- Enhanced Design: Writing tests often forces developers to think about the design of their code, leading to better-structured and more modular code.

- Increased Confidence: High test coverage provides developers with greater confidence in their code, reducing the risk of unexpected failures.

- Facilitates Refactoring: When refactoring code, tests provide a safety net, allowing developers to make changes with confidence that they haven’t introduced new bugs.

Consider a hypothetical scenario in which a software company, “Acme Corp,” releases a new feature. Before release, the feature was tested to 95% line coverage and 90% branch coverage, and after deployment it received significantly fewer bug reports than comparable features released with lower coverage. This illustrates the positive impact high test coverage can have on code quality and user satisfaction.

In contrast, consider a hypothetical scenario where a project has low test coverage, such as 40% line coverage. In this case, the likelihood of encountering bugs during runtime increases. Developers may spend more time debugging and fixing issues, leading to higher development costs and potentially damaging the software’s reputation. This contrast emphasizes the importance of test coverage in ensuring software quality.

Challenges and Pitfalls of TDD

Adopting Test-Driven Development (TDD) offers numerous benefits, but it’s not without its challenges. Successfully navigating these pitfalls requires a clear understanding of the potential difficulties and a proactive approach to mitigate them. Recognizing these challenges allows teams to implement TDD more effectively and avoid common setbacks that can hinder its adoption and effectiveness.

Common Challenges Faced When Adopting TDD

Transitioning to TDD often presents several hurdles. These challenges can range from initial learning curves to more complex issues related to project scope and team dynamics. Addressing these challenges early on is crucial for a successful TDD implementation.

- Steep Learning Curve: Developers new to TDD may find the initial learning curve challenging. Understanding the TDD cycle (Red-Green-Refactor) and writing effective tests requires practice and a shift in mindset. This is especially true if developers are accustomed to writing tests after the code.

- Time Investment: TDD can appear to require more time upfront, as it involves writing tests before the actual code. However, this investment often pays off in the long run by reducing debugging time and improving code quality.

- Resistance to Change: Some developers may resist adopting TDD due to a preference for their existing workflow or a lack of understanding of its benefits. Addressing this requires effective communication, training, and demonstrating the value of TDD through early successes.

- Test Maintenance: As the codebase grows, so does the number of tests. Maintaining and updating these tests can become a significant task. Poorly written or overly complex tests can make maintenance even more challenging.

- Testing Complex Logic: Testing complex algorithms or intricate business logic can be difficult. It may require careful planning, mocking, and the use of advanced testing techniques.

- Inadequate Test Coverage: Failing to achieve sufficient test coverage can lead to undetected bugs and reduced confidence in the codebase. Determining what constitutes “sufficient” coverage can be challenging and may vary depending on the project’s requirements.

- Integration Testing Challenges: Integrating TDD with existing codebases or dealing with dependencies on external systems (databases, APIs) can be complex. It often requires careful planning and the use of mocking and stubbing techniques.
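Mocking and stubbing can be sketched with Python's standard `unittest.mock`. The `WeatherClient` class and `clothing_advice` function below are hypothetical stand-ins for code that depends on an external API; the tests replace the client with a `Mock`, so they run without any network access. The tests are plain functions that pytest would discover, and they can also simply be called.

```python
from unittest import mock


class WeatherClient:
    """Hypothetical wrapper around an external weather API (not a real library)."""

    def current_temp_c(self, city: str) -> float:
        raise NotImplementedError("a real implementation would make a network call")


def clothing_advice(client: WeatherClient, city: str) -> str:
    """Code under test: reaches the external service only through `client`."""
    temp = client.current_temp_c(city)
    return "coat" if temp < 10 else "t-shirt"


def test_cold_city_gets_coat():
    # Stub: spec= makes attributes that WeatherClient lacks raise AttributeError.
    fake = mock.Mock(spec=WeatherClient)
    fake.current_temp_c.return_value = 3.0
    assert clothing_advice(fake, "Oslo") == "coat"
    # Mock: verify how the code under test used its dependency.
    fake.current_temp_c.assert_called_once_with("Oslo")


def test_warm_city_gets_t_shirt():
    fake = mock.Mock(spec=WeatherClient)
    fake.current_temp_c.return_value = 25.0
    assert clothing_advice(fake, "Madrid") == "t-shirt"
```

Passing the client in as a parameter is the design choice that makes this easy: the code under test never constructs its own network dependency.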

Potential Drawbacks of Over-Testing

While comprehensive testing is a goal, excessive testing can lead to several drawbacks. Over-testing can negatively impact development time, maintainability, and the overall efficiency of the development process.

- Increased Development Time: Writing an excessive number of tests, particularly for trivial functionality, can significantly increase development time. This can slow down the overall development process and potentially delay project delivery.

- Reduced Maintainability: Overly detailed tests can make the codebase more difficult to maintain. Changes to the code may require updating a large number of tests, increasing the risk of introducing errors.

- Test Fragility: Tests that are overly sensitive to changes in the code can become fragile. Small modifications to the code can cause numerous tests to fail, leading to wasted time and effort in debugging and updating the tests.

- False Sense of Security: Over-testing can sometimes provide a false sense of security. Even with a large number of tests, there is no guarantee that all potential bugs have been caught. Developers may become overconfident and neglect other important aspects of code quality.

- Increased Resource Consumption: Running a large number of tests can consume significant resources, particularly in terms of CPU time and memory. This can slow down the development process and potentially impact the performance of the development environment.
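Test fragility often comes from asserting on incidental details instead of the behavior that matters. In the sketch below (a hypothetical `parse_age` function), both tests pass today, but the first will break as soon as someone rewords the error message, even though the function still behaves correctly.

```python
import unittest


def parse_age(text: str) -> int:
    """Hypothetical function under test: parse a non-negative integer age."""
    value = int(text)
    if value < 0:
        raise ValueError(f"age must be non-negative, got {value}")
    return value


class TestParseAge(unittest.TestCase):
    def test_fragile_exact_message(self):
        # Fragile: pinned to the exact wording of the message.
        with self.assertRaises(ValueError) as ctx:
            parse_age("-1")
        self.assertEqual(str(ctx.exception), "age must be non-negative, got -1")

    def test_robust_behavior(self):
        # Robust: checks only the contract (ValueError for negative input,
        # the parsed value otherwise).
        with self.assertRaises(ValueError):
            parse_age("-1")
        self.assertEqual(parse_age("42"), 42)
```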

Strategies to Avoid Common TDD Pitfalls

Mitigating the challenges and avoiding the pitfalls of TDD requires a proactive and well-planned approach. Implementing these strategies can significantly improve the effectiveness of TDD and ensure its successful adoption.

- Start Small and Iterate: Begin with a small, manageable set of tests and gradually increase the scope of testing. This allows teams to learn and adapt to TDD without being overwhelmed.

- Focus on High-Value Tests: Prioritize testing critical functionality and areas of the code that are most likely to change. This helps to maximize the return on investment in testing.

- Write Clear and Concise Tests: Tests should be easy to understand and maintain. Avoid complex test setups and use meaningful test names.

- Use Mocking and Stubbing Wisely: When testing code with dependencies on external systems, use mocking and stubbing to isolate the code under test. Avoid over-mocking, which can lead to brittle tests.

- Refactor Tests Regularly: As the codebase evolves, refactor tests to keep them clean and maintainable. Remove unnecessary tests and update tests to reflect changes in the code.

- Automate Test Execution: Integrate tests into the build process to ensure that tests are run automatically after each code change. This helps to catch bugs early and reduce the risk of regressions.

- Embrace Continuous Integration: Continuous Integration (CI) helps to integrate code changes frequently, automatically building and testing the software. This approach allows developers to identify and resolve integration issues promptly, ensuring a more stable and reliable product.

- Provide Training and Mentoring: Ensure that developers receive adequate training on TDD principles and techniques. Pair programming and code reviews can also help to share knowledge and improve testing practices.

- Establish Clear Testing Guidelines: Define clear guidelines for test coverage, test naming conventions, and testing best practices. This helps to ensure consistency and maintainability across the codebase.

- Monitor Test Coverage and Code Quality: Use code coverage tools to monitor the percentage of code that is covered by tests. Regularly review code quality metrics to identify areas for improvement.

TDD in Agile Development

![Test-Driven Development (TDD) cycle](https://wp.ahmadjn.dev/wp-content/uploads/2025/06/627938f23cc6dc07b69e22a7_test-driven-development-tdd-cycle.jpeg)

Test-Driven Development (TDD) aligns exceptionally well with Agile methodologies, creating a synergistic approach to software development. Agile’s iterative and incremental nature complements TDD’s focus on short cycles of development and testing, leading to higher quality software and faster delivery. This integration fosters a culture of collaboration and continuous improvement, critical for successful Agile projects.

Integrating TDD with Agile Methodologies

The integration of TDD with Agile methodologies enhances software development practices. TDD provides a structured approach to building software incrementally, which perfectly aligns with Agile’s iterative sprints. Agile teams typically work in short cycles, frequently delivering working software. TDD supports this by ensuring each iteration produces tested, functional code.

TDD practices reinforce Agile principles such as:

- Customer Collaboration: TDD encourages developers to understand and translate customer requirements into test cases, fostering a shared understanding of the software’s functionality. This ensures the development team is building the right features.

- Responding to Change: TDD’s iterative nature allows for easy adaptation to changing requirements. As customer needs evolve, the tests can be updated and the code refactored to accommodate the modifications.

- Working Software: TDD ensures that each increment of code is thoroughly tested, contributing to a working software product at the end of each sprint. This aligns directly with the Agile principle of delivering working software frequently.

- Individuals and Interactions: TDD promotes close collaboration among developers, testers, and business stakeholders. The process of writing tests often involves discussing requirements and clarifying understanding, fostering better communication and team dynamics.

TDD Practices in Scrum

Scrum, a popular Agile framework, benefits significantly from the implementation of TDD. Scrum teams can effectively utilize TDD throughout the sprint lifecycle.

- Sprint Planning: During sprint planning, the team defines user stories and acceptance criteria. These acceptance criteria can be directly translated into test cases using TDD. This provides a clear definition of “done” for each story.

- Sprint Execution (Daily Scrum): Daily Scrums involve the team discussing progress and any impediments. Using TDD, the team can quickly assess the status of features by checking the test results. This helps identify and address any issues promptly.

- Sprint Review: At the end of the sprint, the team demonstrates the completed features to stakeholders. The passing tests provide tangible evidence of the implemented functionality and compliance with acceptance criteria.

- Sprint Retrospective: The retrospective is a time for the team to reflect on the sprint. TDD can be evaluated to identify areas for improvement in testing practices or the development process. This feedback loop contributes to continuous improvement.

An example of TDD within a Scrum sprint:

- User Story: “As a user, I want to be able to add items to my shopping cart.”

- Acceptance Criteria:

- The cart should allow the user to add a product.

- The cart should correctly display the product name and price.

- The cart should calculate the total cost.

- TDD Implementation:

- Write a failing test to verify that a product can be added to the cart.

- Write code to make the test pass.

- Write a failing test to verify that the product name and price are displayed correctly.

- Write code to make the test pass.

- Write a failing test to verify the total cost calculation.

- Write code to make the test pass.

- Sprint Review: The team demonstrates the shopping cart functionality, with all tests passing, confirming that the user story and acceptance criteria have been met.
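The shopping cart story above can be sketched as code. The `Product` and `Cart` names are hypothetical, "display" is simplified to formatted text lines, and prices are stored as integer cents. In TDD order, each test is written and seen failing before the method it exercises; the listing shows the end state after the three red-green cycles.

```python
from __future__ import annotations

import unittest
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    name: str
    price_cents: int  # integer cents keep totals exact (no float rounding)


class Cart:
    def __init__(self) -> None:
        self._items = []  # products in the order they were added

    def add(self, product: Product) -> None:
        self._items.append(product)

    def __len__(self) -> int:
        return len(self._items)

    def display_lines(self) -> list[str]:
        # "Display" is simplified to formatted text lines for this sketch.
        return [f"{p.name}: ${p.price_cents / 100:.2f}" for p in self._items]

    def total_cents(self) -> int:
        return sum(p.price_cents for p in self._items)


class TestShoppingCart(unittest.TestCase):
    # One test per acceptance criterion.
    def test_user_can_add_a_product(self):
        cart = Cart()
        cart.add(Product("Mug", 1250))
        self.assertEqual(len(cart), 1)

    def test_cart_displays_name_and_price(self):
        cart = Cart()
        cart.add(Product("Mug", 1250))
        self.assertEqual(cart.display_lines(), ["Mug: $12.50"])

    def test_cart_calculates_total_cost(self):
        cart = Cart()
        cart.add(Product("Mug", 1250))
        cart.add(Product("Pen", 199))
        self.assertEqual(cart.total_cents(), 1449)
```

Mapping each acceptance criterion to one named test makes the passing suite a direct check of the story's definition of “done.”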

Facilitating Continuous Integration and Continuous Delivery (CI/CD) with TDD

TDD is a crucial enabler for Continuous Integration and Continuous Delivery (CI/CD) pipelines. Automated testing, a core tenet of TDD, provides the foundation for CI/CD practices. CI/CD aims to automate the software release process, allowing for frequent and reliable releases.

- Automated Testing: TDD’s emphasis on writing automated tests creates a suite of tests that can be run automatically as part of the CI/CD pipeline.

- Early Bug Detection: Automated tests detect bugs early in the development cycle, reducing the cost and effort required to fix them. This helps ensure that only high-quality code is integrated and deployed.

- Faster Feedback Loops: Automated tests provide immediate feedback on code changes. If a test fails, the developer knows immediately that something is wrong, allowing them to fix the issue promptly.

- Increased Confidence in Releases: The confidence in releasing new versions of the software increases because the automated tests provide assurance that the changes have not introduced any regressions.

The process typically involves:

- Developers write code and tests using TDD.

- Code is committed to a version control system (e.g., Git).

- The CI/CD pipeline automatically triggers a build process.

- Automated tests are executed.

- If all tests pass, the code is automatically deployed to a staging or production environment.

- If any tests fail, the build fails, and the developers are notified to address the issue.

Organizations such as Netflix and Amazon are known for deploying changes many times per day, relying on extensive automated testing in their CI/CD pipelines to release with confidence. This supports faster innovation, lets teams experiment more, and helps them recover quickly when issues arise.

Tools and Frameworks for TDD

Test-driven development (TDD) relies heavily on tools and frameworks to streamline the testing process and ensure code quality. These tools automate test execution, provide feedback, and help developers write and manage tests effectively. Understanding the available options and how to use them is crucial for successful TDD implementation.

Popular Testing Frameworks for Different Languages

Choosing the right testing framework depends on the programming language used. Several robust frameworks are available, each offering unique features and capabilities. The following table provides an overview of some popular testing frameworks, their key features, and a basic example of how to use them:

| Language | Framework | Features | Example Use |
|---|---|---|---|
| Python | pytest | Plain `assert` statements; automatic discovery of `test_*` functions | In `test_example.py`: `def add(a, b): return a + b` and `def test_add(): assert add(2, 3) == 5` |
| Java | JUnit | `@Test` annotation; assertion methods such as `assertEquals` | In `class MyClassTest` (importing `org.junit.jupiter.api.Test` and statically importing `Assertions.assertEquals`): `@Test void testAdd() { assertEquals(5, MyClass.add(2, 3)); }` |
| JavaScript | Jest | Snapshot testing; built-in mocking | `example.js` defines `function sum(a, b) { return a + b; }`; `example.test.js` contains `test('adds 1 + 2 to equal 3', () => { expect(sum(1, 2)).toBe(3); });` |
| C# | NUnit | `[TestFixture]` and `[Test]` attributes; `Assert` methods | In a `[TestFixture]` class `MyClassTests` (with `using NUnit.Framework;`): `[Test] public void Add_TwoNumbers_ReturnsSum() { Assert.AreEqual(5, MyClass.Add(2, 3)); }` |

The Role of IDEs and Other Tools in Supporting TDD

Integrated Development Environments (IDEs) and other tools play a crucial role in facilitating TDD. They provide features that streamline the testing workflow and improve developer productivity.

- IDE Integration: IDEs like IntelliJ IDEA, Visual Studio, and Eclipse offer built-in support for testing frameworks. This integration includes features such as:

- Test runners that allow developers to execute tests directly from the IDE.

- Code completion and refactoring support for test code.

- Visual indicators of test results (e.g., green checkmarks for passing tests, red crosses for failing tests).

- Test Runners: Test runners are tools that execute tests and report the results. They often provide options for running specific tests, generating reports, and integrating with continuous integration systems. Popular test runners include:

- pytest for Python.

- JUnit for Java.

- Jest for JavaScript.

- Mocking Frameworks: Mocking frameworks let developers create mock objects that simulate the behavior of dependencies, so that a unit of code can be tested in isolation. Examples include:

- Mockito for Java.

- unittest.mock for Python.

- Jest’s built-in mocking capabilities for JavaScript.

- Code Coverage Tools: Code coverage tools measure the percentage of code that is executed during testing. This helps developers identify areas of code that are not adequately tested. Popular tools include:

- Coverage.py for Python.

- JaCoCo for Java.

- Istanbul for JavaScript.

Demonstration of Using a Specific Testing Tool to Write and Run Tests

Let’s consider using pytest, a popular testing framework for Python, to demonstrate the process of writing and running tests.

- Installation: Install pytest using pip:

```
pip install pytest
```

- Create a simple function: Create a Python file (e.g., `my_module.py`) containing a function to be tested:

```python
# my_module.py
def square(x):
    return x * x
```

- Write a test: Create a test file (e.g., `test_my_module.py`) in the same directory. The test file should contain test functions whose names start with `test_`.

```python
# test_my_module.py
import my_module

def test_square():
    assert my_module.square(2) == 4
    assert my_module.square(3) == 9
```

- Run the tests: Open a terminal or command prompt, navigate to the directory containing the files, and run pytest:

```
pytest
```

- Pytest automatically discovers and runs the functions whose names start with `test_` in files named `test_*.py` or `*_test.py`.

- The output indicates the number of tests run, the number of failures (if any), and the time taken to run the tests.

The output from pytest provides a summary of the test results. For example:

```
============================= test session starts ==============================
platform darwin -- Python 3.9.7, pytest-7.1.1, py-1.11.0, pluggy-1.0.0
rootdir: /path/to/your/project
collected 1 item

test_my_module.py .                                                      [100%]

============================== 1 passed in 0.01s ===============================
```

This output shows that one test passed successfully.

If any assertion in a test function fails, pytest reports the failure along with details about its cause. This loop of writing a failing test, writing just enough code to make it pass, and then refactoring while keeping the tests green is the core of TDD.

Wrap-Up

In conclusion, Test-Driven Development (TDD) is a powerful methodology that fosters higher-quality code, reduces bugs, and enhances maintainability. By embracing the principles of TDD, developers can create more reliable and efficient software. While it requires a shift in mindset, the long-term benefits, including improved code quality and less time spent debugging, make it a worthwhile investment for most software projects.

Remember, writing tests first is not just a practice; it’s a commitment to excellence in software development.

Expert Answers

What is the core principle of TDD?

The core principle is to write a test before you write the code that makes the test pass. This forces you to think about the requirements and design of your code upfront.

What is the ‘Red-Green-Refactor’ cycle?

The ‘Red-Green-Refactor’ cycle is the fundamental workflow in TDD. First, you write a test that fails (Red). Then, you write the minimal code to make the test pass (Green). Finally, you refactor the code to improve its design and readability while ensuring the tests still pass.

What are the main benefits of using TDD?

TDD leads to higher code quality, reduces bugs, makes code more maintainable, provides better documentation through tests, and helps in designing a robust and flexible system.

What types of tests are commonly used in TDD?

Common test types include unit tests (testing individual components), integration tests (testing the interaction between components), and end-to-end tests (testing the entire system).

Is TDD suitable for all types of projects?

While TDD is highly beneficial, it might not be the best approach for every project. It tends to pay off most in code that will be maintained and changed over time, where the tests make refactoring and evolving requirements safer to handle. For rapid prototyping or short-lived exploratory work, the initial overhead of writing tests may slow development down.