The concept of serverless computing, explained for beginners, represents a paradigm shift in how applications are developed and deployed. It moves away from the traditional model of managing servers, offering a more streamlined and efficient approach to building software. Imagine ordering food online; you don’t concern yourself with the restaurant’s kitchen infrastructure, only the food arriving. Serverless mirrors this, allowing developers to focus solely on their application’s code without the burden of server management.

This guide provides a detailed exploration of serverless computing, covering its core components, benefits, and practical applications. We’ll demystify the terminology, compare it with traditional methods, and offer real-world examples to illustrate its impact across various industries. The goal is to provide a solid understanding of serverless, empowering beginners to grasp its potential and begin their journey into this transformative technology.

Introduction to Serverless Computing

Serverless computing represents a paradigm shift in cloud computing, allowing developers to build and run applications without managing servers. This approach abstracts away the underlying infrastructure, enabling a focus on writing code and delivering value to users. The core principle centers on automatically scaling resources and only charging for the actual compute time consumed.

Defining Serverless Computing

Serverless computing, despite its name, doesn’t mean there are no servers. Instead, it signifies that the management of servers is handled by a cloud provider. Developers upload their code, and the provider dynamically allocates and manages the necessary resources to execute that code. This eliminates the need for tasks such as server provisioning, patching, and scaling. The system automatically adjusts resources based on demand, ensuring optimal performance and cost efficiency.

Real-World Analogy: Ordering Food Online

Consider ordering food online. You don’t need to own a kitchen or employ chefs to get a meal. Instead, you use a platform (the cloud provider) to place your order (upload your code). The platform then handles the cooking (running your code), packaging (managing resources), and delivery (providing the results). You only pay for the meal you receive (the compute time used).

You don’t pay for the kitchen space or the chefs’ idle time (server infrastructure when your code isn’t running).

Pay-as-You-Go Model

The “pay-as-you-go” model is a cornerstone of serverless computing. Cloud providers charge users based on the actual resources consumed by their applications. This typically includes:

- Compute Time: The duration your code runs.

- Memory Usage: The amount of memory your code consumes during execution.

- Number of Requests: The number of times your code is triggered or executed.

- Data Transfer: The amount of data transferred in and out of your application.

This contrasts with traditional cloud models, where users often pay for reserved server capacity, regardless of actual usage.

The fundamental advantage of pay-as-you-go is cost optimization. You only pay for what you use, eliminating waste and potentially reducing operational expenses, especially for applications with variable workloads.

For example, a website that experiences a surge in traffic during peak hours will automatically scale up resources, and the user will only be charged for the additional compute time. Conversely, during periods of low traffic, the resources will scale down, and the user will pay less. This dynamic scaling and cost structure makes serverless particularly attractive for applications with unpredictable or spiky traffic patterns.

Core Components of Serverless Architecture

Serverless architecture, despite its name, relies on a complex interplay of underlying components to function effectively. Understanding these core building blocks is crucial for grasping how serverless applications are designed, deployed, and scaled. These components work in concert, enabling developers to focus on writing code rather than managing servers.

Functions

Functions are the fundamental units of execution in a serverless environment. They are small, self-contained pieces of code that perform a specific task. Each function is designed to be stateless, meaning it does not retain any information from previous executions. This characteristic allows for independent scaling and efficient resource utilization.Functions typically encompass a few key characteristics:

- Code Deployment: Functions are deployed as individual units, often packaged with their dependencies. This allows for isolated updates and versioning.

- Resource Allocation: Serverless platforms automatically allocate resources (CPU, memory, etc.) to functions based on demand. This dynamic allocation is a core feature of the serverless model.

- Statelessness: Functions are designed to be stateless. Any state information needed is typically stored externally, such as in a database or cache.

- Execution Time Limits: Serverless functions typically have execution time limits, often ranging from seconds to minutes, depending on the platform and configuration. This constraint encourages developers to write concise and efficient code.

Event Triggers

Event triggers are the mechanisms that initiate the execution of serverless functions. They act as the “glue” that connects various services and components within a serverless application. These triggers respond to specific events, such as HTTP requests, database updates, or scheduled timers.Event triggers initiate function execution based on specific events, and they are essential for driving the execution of functions.

- Event Sources: Event triggers can originate from various sources, including HTTP requests, database changes, file uploads, scheduled events, and messages from message queues.

- Event Data: When an event occurs, the event trigger provides data to the function. This data contains information about the event, such as the request parameters for an HTTP request or the updated data for a database change.

- Function Invocation: The event trigger invokes the function, passing the event data as input. The function then processes the data and performs its designated task.

- Asynchronous Execution: Many event triggers support asynchronous execution, allowing functions to be invoked without blocking the event source. This is especially important for handling high volumes of events.

Databases

While serverless functions themselves are stateless, serverless applications often require persistent storage for data. Databases provide this essential functionality. Serverless applications can interact with various database services.Databases provide storage for persistent data and interact with serverless functions.

- Database Options: Serverless applications can utilize both relational and NoSQL databases. Popular choices include Amazon DynamoDB, Google Cloud Firestore, and Azure Cosmos DB for NoSQL databases, and managed relational database services.

- Data Access: Functions interact with databases using APIs provided by the database services. This allows functions to read, write, and update data.

- Database Triggers: Some databases provide built-in triggers that can automatically invoke serverless functions in response to database events, such as data changes. This further streamlines the integration between databases and serverless applications.

- Scalability and Performance: Serverless databases are designed to scale automatically to handle varying workloads. They typically offer high performance and availability.

Benefits of Serverless Computing

Serverless computing offers a compelling set of advantages for both developers and businesses, fundamentally reshaping how applications are built, deployed, and managed. These benefits stem from the architectural shift away from traditional server management, leading to increased efficiency, reduced costs, and enhanced scalability. By abstracting away the underlying infrastructure, serverless empowers teams to focus on core application logic and innovation, ultimately driving faster time-to-market and improved business agility.

Cost Reduction in Serverless Computing

One of the most significant benefits of serverless computing is its potential to drastically reduce operational costs. This is primarily achieved through a “pay-per-use” model, where users are only charged for the actual compute time and resources consumed by their application.

- Elimination of Idle Resources: Unlike traditional server deployments, where resources are provisioned and paid for regardless of usage, serverless functions are only active when triggered by an event. This eliminates the cost associated with idle server capacity, leading to significant savings, especially for applications with variable or infrequent workloads. For instance, consider an image processing service that is only used during peak hours.

With serverless, you only pay for the compute time used during those peak hours, while with traditional servers, you’d be paying for the server’s uptime 24/7.

- Reduced Operational Overhead: Serverless providers handle the underlying infrastructure management, including server provisioning, patching, and scaling. This frees up developers and operations teams from these time-consuming tasks, reducing the need for specialized personnel and associated labor costs. This allows companies to reallocate resources to more strategic initiatives.

- Optimized Resource Allocation: Serverless platforms automatically scale resources based on demand. This ensures that applications have the necessary compute power when needed without over-provisioning, which leads to cost efficiency. The auto-scaling capabilities also prevent performance bottlenecks during peak traffic periods.

Simplified Application Deployment and Scaling in Serverless Computing

Serverless computing dramatically simplifies the processes of application deployment and scaling, providing developers with powerful tools to manage their applications more efficiently. The inherent architecture of serverless platforms streamlines these crucial aspects of software development.

- Simplified Deployment Pipelines: Serverless platforms often integrate with CI/CD (Continuous Integration/Continuous Deployment) tools, enabling automated deployment pipelines. Developers can deploy new code updates with minimal manual intervention, reducing the risk of errors and accelerating the release cycle. For example, a developer can automatically deploy a new version of a function whenever code is pushed to a specific branch in a Git repository.

- Automatic Scaling: Serverless platforms automatically scale applications based on incoming traffic and demand. This means that the application can handle sudden spikes in traffic without manual intervention, ensuring a seamless user experience. This eliminates the need to manually configure and manage scaling rules, reducing operational overhead. For instance, an e-commerce website built on serverless can automatically scale its functions to handle a surge in traffic during a flash sale.

- Reduced Infrastructure Management: Because the underlying infrastructure is managed by the serverless provider, developers do not need to worry about server provisioning, patching, or maintenance. This frees up developers to focus on writing code and building new features, rather than managing infrastructure. This reduces the complexity of the development process and allows teams to be more agile.

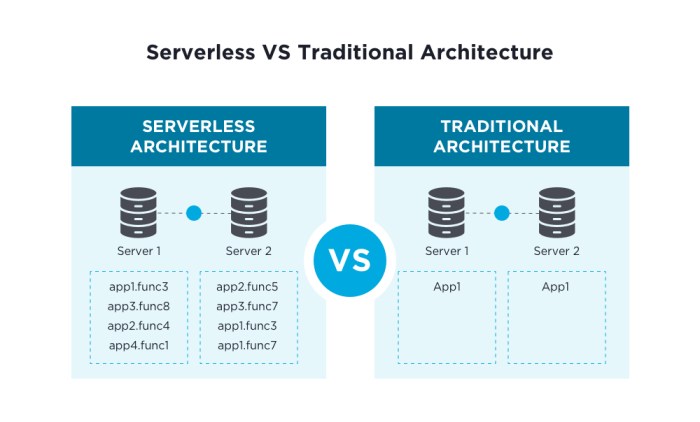

Serverless vs. Traditional Computing: A Comparison

Serverless computing and traditional computing represent fundamentally different approaches to deploying and managing applications. Understanding the distinctions between these two models is crucial for making informed decisions about application architecture and infrastructure choices. This comparison highlights the key differences, emphasizing resource management, cost structures, and operational overhead.

Resource Management Differences

The core difference lies in how resources are managed. Traditional computing necessitates manual provisioning, scaling, and maintenance of servers. Serverless computing abstracts away server management, allowing developers to focus solely on code.

- Traditional Computing: Involves direct management of servers, including operating system configuration, security patching, and capacity planning. Resources are allocated upfront, often leading to idle capacity and wasted resources during periods of low demand. Scaling typically requires manual intervention or automated scripts to adjust server instances.

- Serverless Computing: The cloud provider dynamically allocates and manages resources. Developers deploy code as functions, and the platform automatically scales resources based on demand. There is no need to provision or manage servers; the cloud provider handles all underlying infrastructure aspects. This approach facilitates automatic scaling, ensuring resources are available when needed.

Cost, Management, and Scalability Comparison

The following table provides a comparative analysis of serverless and traditional computing across key aspects.

| Aspect | Traditional Computing | Serverless Computing |

|---|---|---|

| Cost Model | Pay-as-you-go (infrastructure), upfront costs (hardware, licensing), fixed costs (maintenance) | Pay-per-use (function executions, resource consumption), no idle resource costs, typically lower operational costs |

| Management Overhead | High (server provisioning, OS management, security patching, capacity planning, monitoring) | Low (focus on code, cloud provider handles infrastructure, automatic scaling, reduced operational burden) |

| Scalability | Manual or automated scaling, potential for over-provisioning or under-provisioning, requires configuration and maintenance | Automatic scaling based on demand, high scalability with no manual intervention, scales nearly instantly |

| Development Complexity | More complex (server configuration, infrastructure setup, deployment pipelines) | Potentially simpler (focus on code, reduced infrastructure management, faster development cycles) |

| Deployment | Complex and time-consuming (requires server setup, configuration, and deployment tools) | Simplified and automated (code uploaded as functions, automatic deployment by the cloud provider) |

Serverless Use Cases: Real-World Examples

Serverless computing’s versatility has fueled its adoption across numerous industries, offering a cost-effective and scalable solution for a wide range of applications. The pay-per-use model, coupled with automatic scaling, makes it particularly attractive for workloads with fluctuating demands. This section explores practical applications of serverless computing, demonstrating its impact on various business domains.

Serverless Applications in Diverse Industries

Serverless architecture has found its niche in several industries, fundamentally changing how businesses approach application development and deployment. Its benefits extend beyond cost savings; serverless enhances agility, allowing organizations to rapidly iterate and respond to market changes. The following examples illustrate serverless implementation across different sectors.* E-commerce: E-commerce platforms leverage serverless functions for various tasks, including product catalog management, order processing, and payment gateway integrations.

Serverless allows these platforms to handle peak traffic during sales events without provisioning excess infrastructure. For example, a major online retailer could use serverless functions to process thousands of transactions per second during a flash sale, scaling resources automatically to meet demand.

Media and Entertainment

Media companies use serverless for video transcoding, image processing, and content delivery. This enables on-demand streaming and efficient content distribution. Consider a video-on-demand service; serverless functions can be triggered to automatically convert uploaded videos into different formats and resolutions, making them accessible on various devices.

Finance

Financial institutions employ serverless for fraud detection, real-time data analytics, and API management. The ability to quickly process large datasets and respond to events in real-time is critical in this industry. A credit card company could use serverless functions to analyze transaction data and identify fraudulent activities in real-time, blocking suspicious transactions instantly.

Healthcare

Healthcare providers use serverless for processing medical records, powering patient portals, and managing IoT devices. Serverless allows for secure and scalable data management, crucial for patient privacy. An example is the use of serverless functions to process data from wearable devices, providing real-time health monitoring and alerts to patients and healthcare providers.

Gaming

Game developers utilize serverless for backend services, such as player authentication, leaderboards, and matchmaking. Serverless scales automatically to handle the fluctuating player base during peak hours. Consider a multiplayer online game; serverless functions can manage player sessions, handle in-game events, and update leaderboards, scaling resources to accommodate thousands of concurrent players.

Serverless Implementation in Web Applications, Mobile Backends, and Data Processing

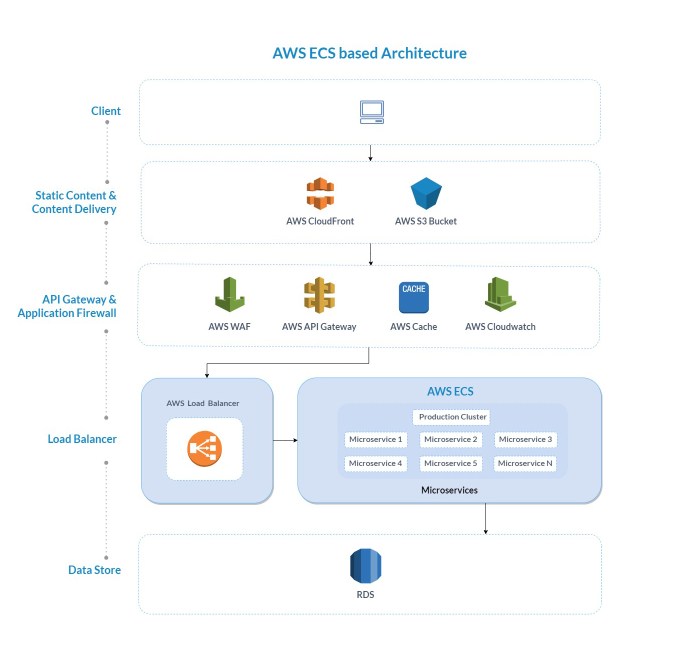

Serverless architecture is well-suited for web applications, mobile backends, and data processing tasks, providing significant advantages in terms of scalability, cost efficiency, and development speed. The following sections delve into the specifics of each area.

Web Applications

Web applications benefit from serverless by allowing developers to focus on front-end development and business logic, without managing the underlying infrastructure. Serverless functions handle tasks such as API requests, user authentication, and database interactions.* API Gateways: Serverless functions often serve as the foundation for API gateways, acting as the entry point for client requests. These gateways handle routing, authentication, authorization, and rate limiting.

For instance, a web application can use a serverless API gateway to expose its backend services to mobile clients, ensuring secure access and efficient request handling.

Static Website Hosting

Serverless platforms provide cost-effective solutions for hosting static websites. Content Delivery Networks (CDNs) automatically cache website content, ensuring fast loading times for users globally. An example is a company hosting its marketing website on a serverless platform, leveraging a CDN to deliver content quickly to visitors worldwide.

Backend-as-a-Service (BaaS) Integration

Serverless seamlessly integrates with BaaS services for features like user authentication, database storage, and push notifications. This simplifies development by providing pre-built components. Consider a web application using a BaaS platform for user authentication, storing user data in a serverless database, and sending push notifications to users through serverless functions.

Mobile Backends

Serverless simplifies mobile backend development by providing scalable and cost-effective solutions for handling mobile app functionalities. This approach reduces the operational overhead of managing servers.* User Authentication and Authorization: Serverless functions handle user authentication, managing user credentials, and providing secure access to app resources. For example, a mobile banking app can use serverless functions to authenticate users securely, verifying their identity and authorizing access to their account information.

Push Notifications

Serverless functions can be triggered to send push notifications to mobile users based on events or user actions. This enables timely communication and enhances user engagement. An example is a mobile e-commerce app using serverless functions to send push notifications to users when their order is shipped or when there are new product releases.

Real-time Data Synchronization

Serverless facilitates real-time data synchronization between the mobile app and the backend, enabling features such as live chat and collaborative editing. For instance, a messaging app can use serverless functions to manage real-time chat conversations, ensuring that messages are delivered instantly to all participants.

Data Processing

Serverless computing is well-suited for data processing tasks, providing scalable and cost-efficient solutions for handling large datasets. The pay-per-use model is particularly beneficial for tasks with variable workloads.* Data Transformation and ETL: Serverless functions can be used to transform and extract, transform, and load (ETL) data from various sources, preparing it for analysis and reporting. For example, a data analytics company can use serverless functions to extract data from different sources, transform it into a usable format, and load it into a data warehouse.

Image and Video Processing

Serverless functions automate image and video processing tasks, such as resizing, format conversion, and thumbnail generation. For instance, a social media platform can use serverless functions to automatically resize uploaded images for different display sizes.

Log Analysis and Monitoring

Serverless functions process and analyze log data from applications and infrastructure, enabling real-time monitoring and alerting. An example is a website monitoring tool that uses serverless functions to analyze website logs, identify errors, and send alerts to administrators.

Common Serverless Use Cases

Serverless computing is applied in a variety of use cases across different industries. These use cases are characterized by their ability to scale dynamically, reduce operational overhead, and minimize costs.* API Development: Building and deploying APIs with serverless functions, enabling easy integration with other services and applications.

Web Application Backends

Powering the backend of web applications with serverless functions, handling tasks such as data storage, user authentication, and business logic.

Mobile Backends

Providing backend services for mobile applications, including user authentication, push notifications, and data synchronization.

Chatbots and Conversational Interfaces

Developing chatbots and conversational interfaces with serverless functions, enabling natural language processing and intelligent responses.

Data Processing and Transformation

Processing and transforming data from various sources, including ETL pipelines, data cleansing, and data enrichment.

IoT Backend

Managing and processing data from IoT devices, including data ingestion, device management, and real-time analytics.

Event-Driven Applications

Building event-driven applications that respond to events in real-time, such as order processing, fraud detection, and system monitoring.

Batch Processing

Executing batch jobs and scheduled tasks, such as report generation, data backups, and system maintenance.

Serverless Databases

Utilizing serverless databases for storing and managing data, offering automatic scaling and pay-per-use pricing.

Machine Learning Inference

Deploying machine learning models and performing inference tasks, enabling real-time predictions and insights.

Serverless Function as a Service (FaaS) Explained

Function as a Service (FaaS) is a pivotal component of serverless computing, allowing developers to execute code without managing servers. It represents a paradigm shift, focusing on code execution rather than infrastructure provisioning. FaaS platforms handle all server-side management, including scaling, provisioning, and maintenance, thereby allowing developers to concentrate solely on writing code.

Understanding FaaS Functionality

FaaS enables developers to deploy individual functions that respond to specific events. These events can originate from various sources, such as HTTP requests, database updates, file uploads, or scheduled triggers. When an event occurs, the FaaS provider automatically invokes the corresponding function, allocating the necessary resources for execution. After the function completes, the resources are released. This event-driven, on-demand execution model is a key characteristic of FaaS, promoting efficient resource utilization and cost-effectiveness.

The underlying infrastructure is completely abstracted away from the developer, who only interacts with the code.

Example: Processing User Input with FaaS

A common use case for FaaS involves processing user input. Consider a scenario where a user submits a form. A FaaS function can be triggered by this submission, process the input data, and perform actions such as validating the data, storing it in a database, and sending a confirmation email. This entire process occurs without the developer needing to manage any server infrastructure.

The function’s scalability is automatically handled by the FaaS provider, ensuring the system can handle fluctuations in user traffic.

Code Snippet: Simple Python FaaS Function

Below is a Python function demonstrating the ease of use in FaaS. This function takes a user’s name as input and returns a greeting.

import jsondef greet(event, context): """ This function greets a user. """ try: name = event.get('name') if not name: name = "World" greeting = f"Hello, name!" return 'statusCode': 200, 'body': json.dumps('message': greeting) except Exception as e: return 'statusCode': 500, 'body': json.dumps('error': str(e))

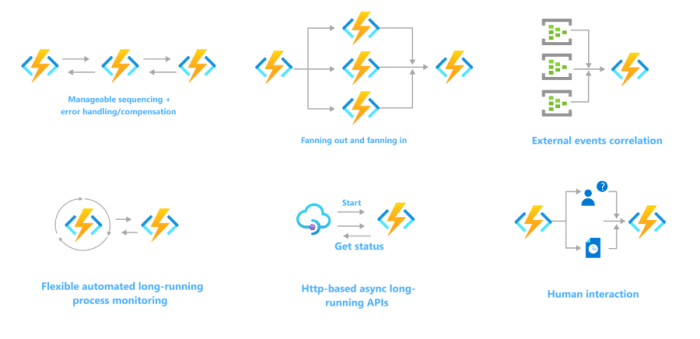

Serverless Event-Driven Architecture

Serverless event-driven architecture represents a paradigm shift in software design, enabling systems to react dynamically to events without requiring continuous server operation. This approach significantly enhances scalability, reduces operational overhead, and allows for the creation of highly responsive and efficient applications.

Concept of Event-Driven Architecture in Serverless

Event-driven architecture, within the context of serverless computing, is a design pattern where applications are built around the concept of events. Events are significant occurrences or changes in state, such as a file upload, a database update, or a user action. Serverless functions are triggered by these events, executing specific tasks in response. This contrasts with traditional systems that often rely on constant polling or scheduled tasks.

This approach leverages the inherent elasticity of serverless platforms, allowing resources to be allocated and de-allocated automatically based on the event volume.

How Events Trigger Functions and Initiate Workflows

Events act as the catalysts for serverless function execution. When an event occurs, it is detected by the serverless platform, which then invokes the appropriate function. This invocation process is managed by the platform’s event trigger mechanism. This trigger mechanism can vary depending on the specific serverless provider and the type of event.

- Event Sources: Events can originate from various sources, including:

- Cloud Storage: Uploads or deletions of files in cloud storage services (e.g., Amazon S3, Google Cloud Storage).

- Databases: Changes in database records (e.g., inserting, updating, or deleting data in a database like Amazon DynamoDB, Google Cloud Firestore).

- Message Queues: Messages published to message queues (e.g., Amazon SQS, Google Cloud Pub/Sub).

- API Gateways: HTTP requests received by API gateways (e.g., Amazon API Gateway, Google Cloud Endpoints).

- Scheduled Events: Time-based triggers, such as scheduled tasks (e.g., AWS CloudWatch Events, Google Cloud Scheduler).

- Function Invocation: When an event occurs, the serverless platform automatically invokes the associated function. The function receives the event data as input. This data provides context about the event, such as the file name uploaded to storage, the database record that changed, or the message received from the queue.

- Workflow Initiation: Functions can initiate complex workflows by triggering other functions. This allows for building sophisticated applications where multiple functions work together to process an event. For example, when a file is uploaded, one function might resize the image, another might extract metadata, and a third might store the image and metadata in a database.

Role of Event Buses and Their Importance in Serverless Systems

Event buses serve as central hubs for routing events in serverless systems, improving decoupling and facilitating more complex event-driven architectures. They act as a central point for receiving, filtering, and routing events to various functions or other services.

- Decoupling: Event buses decouple event producers (the services that generate events) from event consumers (the functions that respond to events). This decoupling enhances the system’s maintainability and scalability, as changes to one component do not necessarily affect others.

- Event Filtering and Routing: Event buses provide mechanisms for filtering and routing events based on event types, attributes, or content. This allows functions to subscribe to only the events they are interested in, reducing unnecessary function invocations and improving efficiency.

- Scalability and Reliability: Event buses are designed to handle a high volume of events, ensuring that events are reliably delivered to their consumers. They often provide features such as message persistence and retry mechanisms to handle failures.

- Event Transformation: Event buses can transform events before they are delivered to consumers. This can involve changing the format of the event data, adding or removing attributes, or enriching the event with additional information.

- Examples of Event Buses:

- Amazon EventBridge: A serverless event bus service that allows you to connect applications with data from various sources. It supports filtering, routing, and event transformation.

- Google Cloud Pub/Sub: A globally distributed messaging service that enables applications to send and receive messages reliably. It supports publish-subscribe messaging patterns.

- Azure Event Grid: A fully managed event routing service that allows you to build event-driven applications. It supports various event sources and destinations.

Serverless Database Considerations

Serverless computing fundamentally alters how applications interact with data storage. Traditional database architectures, designed for persistent server environments, often present challenges when integrated with the ephemeral and scalable nature of serverless functions. Understanding the nuances of database integration is crucial for building efficient, cost-effective, and scalable serverless applications. This section will delve into the intricacies of database integration, exploring compatible options, addressing challenges, and outlining best practices.

Database Integration with Serverless Applications

Databases interact with serverless applications primarily through API calls initiated by serverless functions. These functions, triggered by events, execute code that interacts with the database to read, write, update, or delete data. The architecture often involves an API gateway that handles incoming requests, authenticates users, and routes traffic to the appropriate serverless functions. These functions, in turn, utilize database client libraries or SDKs to communicate with the database.

This design decouples the application logic from the underlying infrastructure, enabling independent scaling of both the application and the database.

Serverless-Compatible Database Options

The choice of database significantly impacts the performance, cost, and scalability of a serverless application. Several database options are designed to seamlessly integrate with serverless architectures.

- NoSQL Databases: NoSQL databases, particularly document databases and key-value stores, are frequently favored for their scalability, flexibility, and ease of integration with serverless functions. They often provide auto-scaling capabilities, aligning with the dynamic nature of serverless workloads.

- Amazon DynamoDB: A fully managed NoSQL database service offered by AWS, DynamoDB is designed for high performance and automatic scaling. It offers both key-value and document data models.

DynamoDB’s pay-per-request pricing model makes it cost-effective for serverless applications with fluctuating traffic.

- Google Cloud Firestore: A NoSQL document database from Google Cloud, Firestore offers real-time data synchronization and offline support. It is well-suited for applications that require highly responsive data access.

- Azure Cosmos DB: Microsoft’s globally distributed, multi-model database service, Cosmos DB, supports various APIs, including SQL, MongoDB, Cassandra, Gremlin, and Table. Its global distribution capabilities and automatic scaling features make it suitable for geographically dispersed serverless applications.

- Amazon DynamoDB: A fully managed NoSQL database service offered by AWS, DynamoDB is designed for high performance and automatic scaling. It offers both key-value and document data models.

- Serverless Relational Databases: While traditional relational databases can be used with serverless, they often require more configuration and management. Serverless relational database offerings are emerging to address these limitations.

- Amazon Aurora Serverless: An on-demand, auto-scaling version of Amazon Aurora, a MySQL and PostgreSQL-compatible relational database. Aurora Serverless automatically starts up, shuts down, and scales capacity based on application needs, eliminating the need for manual capacity management.

- Google Cloud SQL: Google Cloud SQL provides fully managed relational database services for MySQL, PostgreSQL, and SQL Server. It offers auto-scaling features and is designed to simplify database administration.

- Other Options:

- Database-as-a-Service (DBaaS): Several DBaaS providers offer databases that can be integrated with serverless applications, although they may not be specifically designed for serverless. These options require careful consideration of scaling and pricing.

Challenges and Best Practices for Serverless Database Usage

Integrating databases with serverless applications presents several challenges, including connection management, cold starts, and data access patterns. Effective strategies are crucial for optimizing performance, cost, and scalability.

- Connection Management: Serverless functions are often short-lived, making connection pooling essential to avoid the overhead of establishing new database connections for each function invocation.

- Connection Pooling: Employ connection pooling libraries within serverless functions to reuse existing database connections. This reduces the latency associated with establishing new connections.

- Database Proxy: Utilize database proxies, which can manage database connections and optimize performance, especially for relational databases.

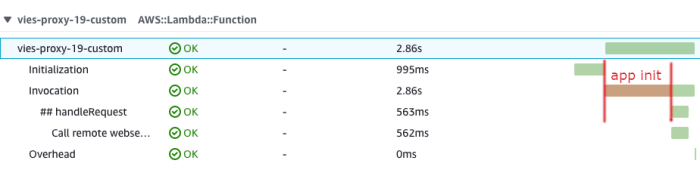

- Cold Starts: The initial execution of a serverless function, known as a cold start, can introduce latency. This latency can be exacerbated by database connection initialization.

- Warm-up Functions: Implement “warm-up” functions that proactively establish database connections to minimize cold start times.

- Optimize Code: Optimize function code and dependencies to reduce initialization time.

- Data Access Patterns: Efficient data access patterns are crucial for minimizing database costs and maximizing performance.

- Batch Operations: Utilize batch operations to reduce the number of database requests, particularly for write operations.

- Caching: Implement caching mechanisms to reduce the load on the database and improve response times.

- Data Modeling: Design data models that optimize query performance and minimize data retrieval costs.

- Security: Securing database access is paramount in serverless environments.

- Least Privilege: Grant serverless functions only the necessary permissions to access the database.

- Encryption: Employ encryption at rest and in transit to protect sensitive data.

- Network Security: Utilize virtual private clouds (VPCs) and security groups to restrict database access.

- Monitoring and Logging: Comprehensive monitoring and logging are essential for troubleshooting and optimizing database performance.

- Database Metrics: Monitor key database metrics, such as query performance, connection usage, and storage utilization.

- Function Logs: Log function invocations and database interactions to identify performance bottlenecks and errors.

- Cost Optimization: Serverless database pricing models can be complex. Understanding the pricing structure and optimizing data access patterns are crucial for cost management.

- Right-Sizing: Select the appropriate database capacity and scaling options to minimize costs.

- Query Optimization: Optimize database queries to reduce the number of read and write operations.

- Data Lifecycle Management: Implement data lifecycle management policies to archive or delete older data, reducing storage costs.

Serverless Security Best Practices

Serverless computing, while offering numerous advantages in terms of scalability and cost-efficiency, introduces a distinct set of security considerations. The distributed nature of serverless architectures, coupled with the reliance on third-party services and the ephemeral nature of functions, necessitates a proactive and multifaceted approach to security. Ignoring these considerations can expose applications to vulnerabilities, potentially leading to data breaches, service disruptions, and reputational damage.

This section Artikels key security best practices to mitigate risks and ensure the secure operation of serverless applications.

Security Considerations for Serverless Applications

Serverless applications, by design, alter the traditional security perimeter. Instead of a centralized, easily managed infrastructure, security responsibilities are distributed across various components and services. This shift requires a comprehensive understanding of the specific vulnerabilities associated with serverless architectures.

- Function Security: Securing the individual functions is paramount. This involves proper code sanitization to prevent injection attacks, secure handling of secrets and sensitive data, and rigorous input validation to prevent malicious inputs from compromising function execution.

- API Gateway Security: The API gateway acts as the entry point for all interactions with serverless functions. It is critical to secure the gateway by implementing robust authentication and authorization mechanisms, rate limiting to prevent denial-of-service attacks, and input validation to filter malicious requests.

- Data Security: Data storage and access control are crucial. Serverless applications often interact with various data stores. Implementing encryption at rest and in transit, employing least-privilege access control, and regularly auditing data access are essential to protect sensitive information.

- Dependency Management: Serverless functions often rely on third-party libraries and dependencies. Keeping these dependencies up-to-date and regularly scanning for vulnerabilities is critical to mitigate the risk of supply chain attacks.

- Monitoring and Logging: Comprehensive monitoring and logging are vital for detecting and responding to security incidents. Implementing robust logging to capture all relevant events and establishing real-time monitoring to identify suspicious activity are crucial for incident response.

- Identity and Access Management (IAM): IAM is the cornerstone of serverless security. Implementing fine-grained access control using IAM roles and policies, based on the principle of least privilege, is essential to restrict access to resources and minimize the impact of potential security breaches.

Authentication and Authorization in Serverless

Authentication and authorization are fundamental security pillars, ensuring that only authorized users and services can access and interact with serverless resources. Serverless architectures often leverage various authentication and authorization methods, requiring careful configuration and management.

- Authentication Methods: Various authentication methods can be employed, including:

- API Keys: Simple, but often less secure, suitable for basic access control.

- OAuth 2.0 and OpenID Connect (OIDC): Industry-standard protocols for delegated authentication and authorization, enabling secure integration with various identity providers.

- JSON Web Tokens (JWTs): Compact and self-contained tokens used to securely transmit information between parties, often used in conjunction with OAuth 2.0 and OIDC.

- Service Accounts: Used for authentication between serverless functions and other services, providing automated and secure access.

- Authorization Strategies: Authorization determines what a user or service can access. Key strategies include:

- Role-Based Access Control (RBAC): Assigning permissions to roles and then assigning users or services to those roles.

- Attribute-Based Access Control (ABAC): Making access decisions based on attributes of the user, the resource, and the environment.

- Least Privilege: Granting only the minimum necessary permissions to users and services.

- Integration with Identity Providers: Serverless platforms often integrate with identity providers (IdPs) such as AWS Cognito, Azure Active Directory, or Google Identity Platform. This integration allows for centralized user management and simplifies the authentication process.

Recommendations for Securing Serverless Functions and Data

Securing serverless functions and the data they process involves a combination of code-level practices, infrastructure configuration, and ongoing monitoring. Implementing these recommendations will help to minimize security risks and ensure the integrity and confidentiality of your applications.

- Code Security Best Practices:

- Input Validation: Always validate all input data to prevent injection attacks (e.g., SQL injection, cross-site scripting).

- Secure Coding Practices: Follow secure coding guidelines, such as OWASP recommendations, to avoid common vulnerabilities.

- Secret Management: Never hardcode secrets (API keys, passwords) in your code. Use secure secret management solutions (e.g., AWS Secrets Manager, Azure Key Vault) to store and manage secrets securely.

- Dependency Management: Regularly update dependencies and scan for vulnerabilities using tools like Snyk or OWASP Dependency-Check.

- Code Reviews: Conduct regular code reviews to identify and address potential security issues.

- Infrastructure Security Best Practices:

- IAM Policies: Implement fine-grained IAM policies based on the principle of least privilege to restrict access to resources.

- Network Security: Configure network security settings, such as VPCs and security groups, to control network traffic and isolate serverless functions.

- API Gateway Configuration: Secure your API gateway by implementing authentication, authorization, and rate limiting.

- Encryption: Encrypt data at rest and in transit. Use encryption keys managed by a secure key management service (KMS).

- Logging and Monitoring: Enable comprehensive logging and monitoring to detect and respond to security incidents. Implement alerting to be notified of suspicious activity.

- Data Security Best Practices:

- Encryption: Encrypt data at rest and in transit using appropriate encryption algorithms (e.g., AES-256).

- Access Control: Implement strict access control policies to limit access to sensitive data. Use RBAC or ABAC to define and enforce access permissions.

- Data Masking and Tokenization: Mask or tokenize sensitive data to reduce the risk of data breaches.

- Regular Audits: Conduct regular security audits to identify and address vulnerabilities in data storage and access control.

- Data Loss Prevention (DLP): Implement DLP solutions to prevent sensitive data from leaving the organization.

- Example: Securing an AWS Lambda Function:

Consider a scenario where a serverless function (e.g., AWS Lambda) processes user data. To secure this function:

- IAM Role: Assign the function an IAM role with the least privileges required to access necessary resources (e.g., S3 bucket for storing data).

- Input Validation: Validate all incoming data to prevent injection attacks.

- Secret Management: Store API keys or database credentials in AWS Secrets Manager and access them securely from the function.

- Encryption: Encrypt data stored in S3 using KMS-managed encryption keys.

- Logging and Monitoring: Enable CloudWatch logging to monitor function execution and identify any errors or suspicious activity.

Serverless Development Tools and Frameworks

The adoption of serverless computing necessitates the utilization of specialized tools and frameworks to facilitate efficient development, deployment, and management of serverless applications. These tools streamline the development lifecycle, enhancing developer productivity and simplifying complex tasks associated with serverless architectures. They offer a range of functionalities, from code editing and testing to infrastructure management and monitoring, enabling developers to focus on business logic rather than infrastructure concerns.

Popular Serverless Development Tools

Several tools and frameworks have emerged to support serverless development, each offering unique features and capabilities. These tools cater to various stages of the development process, providing solutions for code management, testing, deployment, and monitoring.

- Serverless Framework: A widely adopted open-source framework that simplifies the deployment and management of serverless applications across multiple cloud providers. It uses a YAML configuration file to define infrastructure and functions, streamlining the deployment process. For instance, a developer can define an AWS Lambda function and its associated API Gateway endpoint within a single `serverless.yml` file, significantly reducing the complexity of infrastructure provisioning.

- AWS SAM (Serverless Application Model): An open-source framework specifically designed for building serverless applications on AWS. It extends AWS CloudFormation and provides a simplified way to define serverless resources, such as Lambda functions, API Gateway, and DynamoDB tables. SAM also includes a CLI tool for local testing and deployment, which allows developers to test their functions locally before deploying them to the cloud.

- Azure Functions Core Tools: A set of command-line tools for developing, testing, and deploying Azure Functions locally and to the Azure cloud. It provides functionalities for creating, managing, and debugging function apps, enabling developers to streamline their development workflow. The core tools support various programming languages and trigger types, facilitating the creation of diverse serverless applications.

- Google Cloud Functions Framework: A framework for building and deploying Google Cloud Functions. It provides a local development environment and a set of libraries to help developers write, test, and deploy their functions. This framework supports various programming languages and allows developers to integrate their functions with other Google Cloud services.

- Terraform: While not exclusively a serverless tool, Terraform is a powerful infrastructure-as-code (IaC) tool that can be used to provision and manage serverless infrastructure across multiple cloud providers. Terraform enables developers to define infrastructure resources in code and automate their deployment, promoting consistency and reducing the risk of manual errors. This allows for the consistent and repeatable deployment of serverless infrastructure, such as Lambda functions, API Gateways, and databases, across different environments.

Benefits of Serverless Frameworks

Utilizing serverless frameworks offers several advantages, including increased developer productivity, reduced operational overhead, and improved application scalability. These frameworks provide abstractions and automation capabilities that simplify the complexities of serverless development.

- Simplified Deployment: Serverless frameworks automate the deployment process, reducing the manual effort required to provision and configure infrastructure. Developers can define their infrastructure as code and deploy it with a single command, streamlining the deployment workflow.

- Infrastructure-as-Code (IaC): Frameworks promote the use of IaC, allowing developers to manage infrastructure resources through code. This approach enables version control, facilitates collaboration, and ensures consistent deployments across different environments. This means that all infrastructure configurations are defined in a declarative manner, allowing for easier tracking of changes and rollbacks if needed.

- Local Development and Testing: Many frameworks provide local development environments, enabling developers to test their functions locally before deploying them to the cloud. This accelerates the development cycle and reduces the cost of testing. Local testing environments simulate the cloud environment, allowing developers to identify and fix issues early in the development process.

- Cross-Cloud Support: Several frameworks support multiple cloud providers, allowing developers to deploy their applications to different platforms without significant code changes. This promotes portability and reduces vendor lock-in.

- Simplified Configuration: Frameworks simplify the configuration of serverless resources, such as functions, triggers, and API gateways. Developers can define these resources in a declarative manner, reducing the complexity of infrastructure management.

Streamlining the Development Process with Serverless Tools

Serverless development tools streamline the development process by automating tasks and providing features that enhance developer productivity. They facilitate various stages of the development lifecycle, from code writing and testing to deployment and monitoring.

- Automated Infrastructure Provisioning: Tools automate the provisioning of serverless infrastructure, such as functions, API gateways, and databases. Developers can define their infrastructure in code and deploy it with a single command, reducing the manual effort required.

- Simplified Testing: Tools provide local testing environments and testing frameworks that simplify the testing of serverless functions. Developers can test their functions locally before deploying them to the cloud, reducing the cost of testing.

- Enhanced Debugging: Tools provide debugging capabilities that help developers identify and fix issues in their serverless functions. Developers can use debuggers to step through their code and inspect variables, making it easier to identify and resolve bugs.

- Improved Monitoring and Logging: Tools integrate with monitoring and logging services, providing developers with insights into the performance and health of their serverless applications. Developers can use these insights to identify and resolve performance issues and ensure the reliability of their applications.

- Faster Iteration Cycles: The combination of automated deployment, local testing, and debugging capabilities enables faster iteration cycles. Developers can quickly test and deploy changes, accelerating the development process.

Getting Started with Serverless Computing

Serverless computing offers a streamlined approach to application development, allowing developers to focus on writing code without managing underlying infrastructure. This section Artikels the practical steps required to deploy a basic serverless function and provides a setup process using a specific cloud provider to illustrate the implementation. The goal is to provide a clear, actionable guide for beginners entering the serverless world.

Deploying a Basic Serverless Function: Step-by-Step Guide

The deployment of a basic serverless function involves several key steps, from setting up the environment to testing the deployed function. These steps are generally consistent across different cloud providers, though the specifics of the tools and interfaces may vary. Understanding these fundamental actions is crucial for successfully deploying and managing serverless applications.

- Account Setup and Access Credentials: The first step involves creating an account with a chosen cloud provider (e.g., AWS, Azure, Google Cloud) if you don’t already have one. This account provides access to the provider’s services. You’ll then need to obtain the necessary access credentials, typically in the form of API keys or service account keys, which allow your development tools and scripts to interact with the cloud resources on your behalf.

Securely store these credentials, as they grant significant access to your cloud resources.

- Installation of Cloud Provider’s Command-Line Interface (CLI): Most cloud providers offer a CLI tool that facilitates the management of cloud resources from the command line. Installing this CLI allows you to interact with the cloud services through commands, automating tasks such as function deployment, configuration, and monitoring. For example, in AWS, you would install the AWS CLI.

- Function Code Development: Write the code for your serverless function. This code is typically written in a supported programming language, such as Python, Node.js, Java, or Go. The function’s logic should be designed to perform a specific task, such as processing data, responding to an API request, or triggering other services. Ensure your code is well-structured and handles potential errors gracefully.

- Function Package Creation: Package your function code along with any necessary dependencies. This typically involves creating a deployment package, such as a ZIP file, that includes your function code and any required libraries. The packaging process ensures that all necessary components are available when the function is executed in the cloud environment.

- Deployment Configuration: Configure the deployment settings for your function. This includes specifying the function’s name, the runtime environment (e.g., Python 3.9, Node.js 16), the memory allocation, and any necessary environment variables. You might also configure triggers, which determine how and when the function is invoked (e.g., HTTP requests, events from other services).

- Function Deployment: Deploy the packaged function code to the cloud provider’s serverless platform. This typically involves using the CLI tool to upload the package and create the function. The cloud provider then handles the infrastructure provisioning, scaling, and management of the function.

- Testing and Validation: Test the deployed function to ensure it behaves as expected. This may involve invoking the function through its API endpoint, simulating event triggers, or using the cloud provider’s testing tools. Verify that the function’s output is correct and that any integrations with other services are working properly.

- Monitoring and Logging: Set up monitoring and logging to track the function’s performance and identify any issues. Cloud providers offer built-in tools for monitoring metrics such as invocation count, execution time, and error rates. Logging allows you to capture detailed information about function executions, which is useful for debugging and troubleshooting.

Serverless Deployment with AWS: A Practical Example

Amazon Web Services (AWS) provides a comprehensive suite of services for serverless computing, including AWS Lambda, API Gateway, and DynamoDB. The following example demonstrates the process of deploying a simple “Hello, World!” function using AWS Lambda and API Gateway.

- Prerequisites: An active AWS account, the AWS CLI installed and configured, and a basic understanding of Python.

- Function Code (Python): Create a Python file (e.g., `hello.py`) containing the following code:

def lambda_handler(event, context): return 'statusCode': 200, 'body': 'Hello, World!'This code defines a Lambda function that returns a “Hello, World!” message when invoked.

- Packaging: Package the `hello.py` file into a ZIP archive. For example, on Linux/macOS:

zip hello.zip hello.pyThis creates a file named `hello.zip` containing your function code.

- Deployment using AWS CLI: Use the AWS CLI to deploy the function. This involves several steps:

- Create the Lambda function:

aws lambda create-function \ --function-name my-hello-world-function \ --runtime python3.9 \ --handler hello.lambda_handler \ --zip-file fileb://hello.zip \ --role <YOUR_LAMBDA_EXECUTION_ROLE_ARN>Replace `<YOUR_LAMBDA_EXECUTION_ROLE_ARN>` with the ARN (Amazon Resource Name) of an IAM role that grants the Lambda function permission to execute.

If you don’t have one, you’ll need to create one with the necessary permissions.

- Create an API Gateway:

aws apigateway create-rest-api --name my-hello-world-api - Create a resource and method (GET)

aws apigateway get-resources --rest-api-id <YOUR_API_ID>aws apigateway put-method \ --rest-api-id <YOUR_API_ID> \ --resource-id <YOUR_RESOURCE_ID> \ --http-method GET \ --authorization-type NONEaws apigateway put-integration \ --rest-api-id <YOUR_API_ID> \ --resource-id <YOUR_RESOURCE_ID> \ --http-method GET \ --type AWS_PROXY \ --integration-http-method POST \ --uri arn:aws:apigateway:<YOUR_REGION>:lambda:path/2015-03-31/functions/<YOUR_LAMBDA_FUNCTION_ARN>/invocations - Deploy the API:

aws apigateway create-deployment \ --rest-api-id <YOUR_API_ID> \ --stage-name prod

- Create the Lambda function:

- Testing: After deployment, the AWS CLI will provide the URL of the API Gateway endpoint. Navigate to that URL in your web browser or use a tool like `curl` to test the function. You should see the “Hello, World!” message.

Last Recap

In conclusion, serverless computing, as explained for beginners, presents a compelling alternative to traditional infrastructure, offering significant advantages in terms of cost, scalability, and development efficiency. From understanding its fundamental principles to exploring real-world applications, this exploration underscores the transformative power of serverless. As technology continues to evolve, serverless computing is poised to become an increasingly integral part of the software development landscape, offering developers a powerful and flexible platform for innovation.

Q&A

What is FaaS?

FaaS, or Function-as-a-Service, is a core component of serverless computing where developers write and deploy individual functions that are executed in response to events.

How does serverless handle scaling?

Serverless platforms automatically scale applications based on demand, allocating resources dynamically to handle increasing workloads without manual intervention.

Is serverless cheaper than traditional computing?

Serverless can often be more cost-effective due to its pay-as-you-go pricing model, but costs depend on usage patterns and application design.

What are the security considerations for serverless?

Security in serverless involves securing functions, data, and event triggers, including implementing authentication, authorization, and following security best practices for cloud platforms.