Provisioned concurrency changes how AWS Lambda functions are prepared for traffic. It is a proactive mechanism that initializes and maintains a specified number of execution environments ahead of time, thereby mitigating the cold start problem, a common performance bottleneck in serverless architectures. This contrasts with on-demand concurrency, where execution environments are created only when invocations arrive and no warm environment is available, which can add latency during periods of high demand.

This discussion will dissect the core concepts of provisioned concurrency, exploring its operational mechanics, benefits, and practical implications. We will analyze its role in enhancing application performance, particularly in scenarios demanding low-latency responses. Furthermore, we will delve into the technical aspects of configuring, monitoring, and optimizing provisioned concurrency to ensure efficient resource utilization and cost-effectiveness.

Understanding Provisioned Concurrency

Provisioned concurrency represents a proactive strategy for managing the performance of AWS Lambda functions. It addresses the cold start problem by initializing a specified number of execution environments, ready to process incoming requests. This pre-warming approach ensures that functions can respond with minimal latency, providing a consistent user experience, especially during periods of high demand.

Fundamental Concept of Provisioned Concurrency

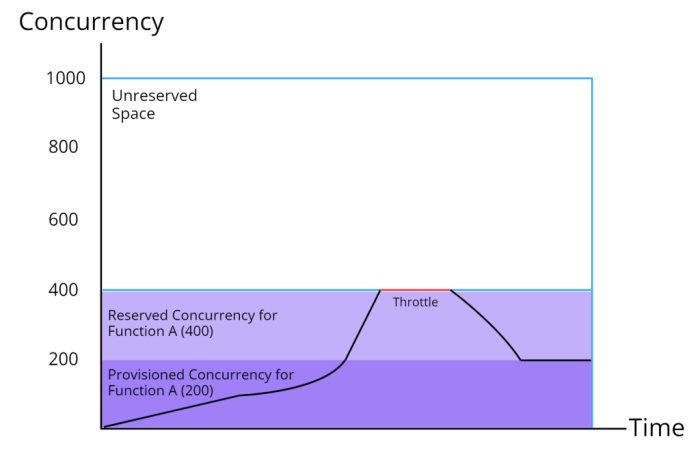

Provisioned concurrency in AWS Lambda pre-allocates execution environments for your function. This means that when a function is configured with provisioned concurrency, AWS creates and maintains a pool of initialized function instances. These instances are kept warm and ready to handle invocations, eliminating or significantly reducing the latency associated with cold starts. The number of instances provisioned is configurable, allowing you to scale resources based on anticipated traffic.

Distinction Between Provisioned and On-Demand Concurrency

The key difference lies in how function invocations are handled. On-demand concurrency is the default behavior in Lambda. When a function is invoked, and no execution environments are available, Lambda provisions new instances. This process, known as a cold start, introduces latency. Provisioned concurrency, on the other hand, mitigates this latency by pre-initializing instances.

- On-Demand Concurrency: The function instances are created when the function is invoked and the request arrives. This means the initial invocation experiences a cold start, leading to higher latency. As the number of concurrent requests increases, Lambda scales up by creating more instances. This scaling can be slower and unpredictable, especially during sudden spikes in traffic.

- Provisioned Concurrency: The function instances are pre-initialized and kept warm. When a request arrives, it’s routed to one of these ready instances. This significantly reduces latency because the execution environment is already prepared. Provisioned concurrency offers more predictable performance, as the response time remains consistent, regardless of the incoming request rate, up to the provisioned capacity.

Benefits and Use Cases of Provisioned Concurrency

Provisioned concurrency is particularly beneficial in scenarios where low latency and predictable performance are critical.

- High-Volume, Real-Time Applications: Consider a real-time data processing pipeline that handles incoming sensor data. Provisioned concurrency ensures consistent low-latency processing of each data point, crucial for applications where data freshness is paramount. For instance, in financial trading applications, processing stock market data requires extremely low latency to enable timely trading decisions.

- Interactive Web Applications: Interactive web applications, such as e-commerce platforms or social media feeds, benefit from a responsive user experience. Provisioned concurrency reduces the delay in responding to user requests, leading to a smoother and more engaging user experience. Imagine an e-commerce site during a flash sale; provisioned concurrency can help handle the surge in traffic without causing slow loading times.

- API Gateways: API gateways often serve as the entry point for various services. Using provisioned concurrency with Lambda functions behind an API gateway ensures fast response times for API calls. For example, an API providing weather updates could use provisioned concurrency to deliver the latest information quickly to users, improving the user experience.

- Batch Processing with Tight SLAs: Even in batch processing scenarios, where a deadline exists, provisioned concurrency can be useful. If you have a batch job that must complete within a specific timeframe, provisioned concurrency can guarantee that the Lambda function is ready to process data without delay.

Provisioned concurrency allows you to optimize for consistent performance by allocating resources ahead of time. It involves a trade-off: you pay for the provisioned capacity, regardless of whether it is actively processing requests. Therefore, careful planning and monitoring are essential to ensure that provisioned concurrency is used effectively and cost-efficiently.

The Purpose of Provisioned Concurrency

Provisioned Concurrency in AWS Lambda serves to optimize the performance characteristics of serverless functions, particularly in scenarios where predictable and consistent response times are critical. It addresses the inherent latency associated with cold starts, a common challenge in serverless computing, by pre-initializing function execution environments. This pre-warming approach ensures that function invocations are served by ready-to-use instances, thus minimizing the time it takes for a function to begin processing a request.

Goals of Provisioned Concurrency

Provisioned Concurrency is designed to meet specific performance objectives. The primary goal is to reduce the latency experienced by users when interacting with Lambda functions. This is achieved by eliminating or significantly minimizing cold start times, leading to a more responsive application. Another key objective is to provide predictable performance under varying loads, ensuring that the function’s execution time remains consistent regardless of the number of concurrent requests.

Key Performance Metrics Improved

Provisioned Concurrency directly impacts several critical performance metrics. The most significant improvement is in the reduction of cold start times. By pre-warming function instances, the time required to initialize the execution environment is eliminated. This translates to lower latency, as requests are processed immediately without the delay of provisioning a new container. Additionally, provisioned concurrency improves the consistency of response times.

- Latency: Provisioned Concurrency drastically reduces the time it takes for a function to respond to an invocation. This reduction is most noticeable for the first invocation after a period of inactivity, where cold starts are typically encountered. The result is a more responsive user experience. For example, a function that typically takes 500ms to initialize (cold start) and 100ms to execute a request could see its latency reduced to just 100ms with provisioned concurrency enabled, as the initialization time is eliminated.

- Cold Start Times: This is the primary metric that provisioned concurrency targets. It removes the need for Lambda to create a new execution environment whenever an invocation arrives and no warm environment is available, which significantly reduces the delay caused by cold starts. Consider a scenario where a function experiences frequent cold starts due to infrequent use. With provisioned concurrency, the function instances are kept warm, allowing for immediate execution.

- Consistency of Response Times: By pre-warming instances, provisioned concurrency ensures that function invocations consistently meet performance expectations. The execution environment is ready to process requests immediately, providing more predictable performance, which is particularly important for real-time applications.
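The latency example above (500 ms to initialize, 100 ms to execute) can be sketched as a toy model. This is only an illustration under simplifying assumptions: all requests arrive at once, each environment serves one request, and no warm on-demand environments exist. The function name and parameters are hypothetical.

```python
def invocation_latencies_ms(concurrent_requests, provisioned, init_ms=500, exec_ms=100):
    """Return the modeled latency of each request in a simultaneous burst.

    Requests up to `provisioned` land on pre-initialized environments and pay
    only the execution time. The remaining requests are modeled as cold starts
    that also pay the initialization time.
    """
    latencies = []
    for index in range(concurrent_requests):
        if index < provisioned:
            latencies.append(exec_ms)            # served by a provisioned environment
        else:
            latencies.append(init_ms + exec_ms)  # spills over to a cold on-demand environment
    return latencies


burst = invocation_latencies_ms(concurrent_requests=5, provisioned=3)
print(burst)  # [100, 100, 100, 600, 600]
```

In this model, latency stays flat only up to the provisioned capacity; requests beyond it pay the full initialization time again.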

Scenario: Application Success with Provisioned Concurrency

Consider an e-commerce platform that hosts flash sale events. These events are characterized by a sudden surge in traffic, often leading to performance bottlenecks if not handled correctly. During a flash sale, thousands of users might attempt to access product listings, add items to their carts, and complete purchases simultaneously.

Without provisioned concurrency, the Lambda functions responsible for handling these requests could experience significant cold starts. This would result in a poor user experience, with slow page load times and potential transaction failures, leading to customer frustration and lost revenue.

However, with provisioned concurrency, the e-commerce platform can pre-warm the Lambda functions that handle these critical operations. By provisioning enough concurrency to handle the expected peak load, the platform can ensure that all incoming requests are processed immediately. The function instances are ready to respond, minimizing latency and providing a seamless user experience. The consistent performance would lead to a higher conversion rate and greater customer satisfaction.

In this scenario, provisioned concurrency is crucial for the success of the flash sale event. It allows the platform to handle the sudden increase in traffic without sacrificing performance, ultimately leading to a successful event and increased revenue. This is a case where the investment in provisioned concurrency directly translates into a better user experience and improved business outcomes.

Configuring Provisioned Concurrency

Configuring provisioned concurrency is crucial for ensuring that your Lambda functions are ready to handle invocations with minimal latency. This proactive approach involves specifying the number of concurrent executions you want to pre-initialize, thereby reducing the cold start time experienced by users. This section outlines the steps for configuration using both the AWS Management Console and the AWS Command Line Interface (CLI), along with monitoring strategies to assess the effectiveness of your configuration.

Configuring Provisioned Concurrency with the AWS Management Console

The AWS Management Console provides a user-friendly interface for configuring provisioned concurrency. This method is suitable for users who prefer a graphical interface and require a straightforward approach to setting up provisioned concurrency.

To configure provisioned concurrency via the AWS Management Console, follow these steps:

- Navigate to the Lambda Function: Open the AWS Management Console and navigate to the Lambda service. Select the function for which you want to configure provisioned concurrency.

- Access the Configuration Tab: In the function’s details page, click on the “Configuration” tab.

- Select “Concurrency”: Within the “Configuration” tab, choose the “Concurrency” option.

- Edit Provisioned Concurrency: Click the “Edit” button. In the “Provisioned concurrency” section, you will be presented with options to configure the provisioned concurrency.

- Add Provisioned Concurrency: Select “Add provisioned concurrency”, then choose the alias or published version to which it applies. Provisioned concurrency cannot be configured on ‘$LATEST’, so publish a version or create an alias first.

- Set Provisioned Concurrency: Input the desired number of provisioned concurrency instances. Consider factors like expected traffic, latency requirements, and cost implications.

- Save the Configuration: Click “Save” to apply the changes. The console will begin provisioning the specified concurrency.

- Monitor Provisioning Status: Observe the provisioning status within the console. The status indicates whether the allocation is in progress, ready, or failed (the API reports these states as `IN_PROGRESS`, `READY`, and `FAILED`).

The console provides real-time feedback on the provisioning process, allowing for easy monitoring and adjustment of the concurrency settings. This visual feedback simplifies the management of Lambda function performance.

Configuring Provisioned Concurrency with the AWS CLI

The AWS CLI offers a programmatic approach to configuring provisioned concurrency, which is ideal for automation and infrastructure-as-code practices. Using the CLI allows for repeatable and scriptable configurations.

The following are the steps and code examples for configuring provisioned concurrency using the AWS CLI:

- Install and Configure AWS CLI: Ensure the AWS CLI is installed and configured with the necessary credentials and region.

- Use the `put-provisioned-concurrency-config` Command: The `aws lambda put-provisioned-concurrency-config` command sets the provisioned concurrency for a Lambda function.

- Specify Function Name and Alias: Pass the function name with `--function-name` and the alias or published version number with `--qualifier`. The qualifier determines which version of the function receives the provisioned concurrency; `$LATEST` is not accepted.

- Specify Provisioned Concurrency: Pass the number of provisioned concurrency instances to allocate with `--provisioned-concurrent-executions`.

The same operation is available through the AWS SDKs. Here are examples using both Python (the Boto3 library) and JavaScript (the AWS SDK for JavaScript) to configure provisioned concurrency:

Python (Boto3):

```python
import boto3

lambda_client = boto3.client('lambda')

function_name = 'my-lambda-function'
alias_name = 'PROD'  # An alias or published version number; $LATEST is not supported
provisioned_concurrency = 5

try:
    response = lambda_client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias_name,
        ProvisionedConcurrentExecutions=provisioned_concurrency
    )
    print(f"Provisioned concurrency configured successfully: {response}")
except Exception as e:
    print(f"Error configuring provisioned concurrency: {e}")
```

JavaScript (AWS SDK v3):

```javascript
import { LambdaClient, PutProvisionedConcurrencyConfigCommand } from "@aws-sdk/client-lambda";

const client = new LambdaClient({ region: "us-east-1" }); // Replace with your region

const params = {
  FunctionName: "my-lambda-function",
  Qualifier: "PROD", // An alias or published version number; $LATEST is not supported
  ProvisionedConcurrentExecutions: 5,
};

const command = new PutProvisionedConcurrencyConfigCommand(params);

try {
  const data = await client.send(command);
  console.log("Success", data);
} catch (error) {
  console.error("Error", error);
}
```

These code examples demonstrate how to programmatically set provisioned concurrency. They call the same `PutProvisionedConcurrencyConfig` API operation that the `put-provisioned-concurrency-config` CLI command wraps, enabling automation and integration into infrastructure pipelines. Remember to replace placeholders like `my-lambda-function`, `PROD`, and the region with your specific values.
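Allocation is not instantaneous, so a script that configures provisioned concurrency may want to wait until it is ready before shifting traffic. The following is a minimal sketch of such a wait loop built on the Lambda `get_provisioned_concurrency_config` call. The helper name, polling interval, and attempt limit are assumptions chosen for illustration.

```python
import time


def wait_until_ready(client, function_name, qualifier, poll_seconds=5, max_attempts=60):
    """Poll a Lambda client until provisioned concurrency reports READY.

    `client` is expected to behave like boto3.client("lambda"). The polling
    interval and attempt limit are illustrative defaults, not recommendations.
    """
    for _ in range(max_attempts):
        config = client.get_provisioned_concurrency_config(
            FunctionName=function_name, Qualifier=qualifier
        )
        status = config["Status"]  # IN_PROGRESS, READY, or FAILED
        if status == "READY":
            return config
        if status == "FAILED":
            raise RuntimeError(config.get("StatusReason", "allocation failed"))
        time.sleep(poll_seconds)
    raise TimeoutError("provisioned concurrency was not ready in time")


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   ready = wait_until_ready(boto3.client("lambda"), "my-lambda-function", "PROD")
#   print(ready["AvailableProvisionedConcurrentExecutions"])
```

Passing the client in as an argument keeps the helper easy to exercise with a stub in tests.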

Monitoring Provisioned Concurrency Usage and Performance

Monitoring is critical for understanding the impact of provisioned concurrency on function performance and cost. CloudWatch provides comprehensive metrics for this purpose. By analyzing these metrics, you can optimize your provisioned concurrency configuration to meet your performance goals while managing costs effectively.

Key CloudWatch metrics to monitor include:

- ProvisionedConcurrentExecutions: The number of function instances that are processing events on provisioned concurrency. This metric indicates how heavily the provisioned concurrency is used.

- UnreservedConcurrentExecutions: For a Region, the number of events being processed by functions that do not have reserved concurrency. It describes the shared account-level pool rather than a single function's provisioned concurrency.

- ProvisionedConcurrencySpilloverInvocations: The number of invocations that ran on standard (on-demand) concurrency because all provisioned concurrency was in use. A non-zero value indicates that the provisioned concurrency is not sufficient for the current load.

- ConcurrentExecutions: The total number of concurrent executions.

- Invocations: The number of times your function code is executed.

- Duration: The amount of time your function code spends processing an event.

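- Cold starts: Lambda does not publish a dedicated cold start count in the `AWS/Lambda` namespace. Cold starts can be inferred from the `Init Duration` field in the function's `REPORT` log lines or tracked with a custom metric.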

Monitoring these metrics helps to answer the following questions:

- Is the provisioned concurrency sufficient to handle the load?

- Are cold starts being effectively minimized?

- Are there any unexpected performance bottlenecks?

You can set up CloudWatch alarms to receive notifications when specific thresholds are exceeded. For instance, you can set an alarm when `ProvisionedConcurrencySpilloverInvocations` exceeds a certain value, indicating that the provisioned concurrency needs to be increased. Similarly, you can set an alarm when `Duration` consistently increases, indicating a performance issue. CloudWatch dashboards let you visualize these metrics over time, so you can analyze trends and identify areas for optimization.
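A spillover alarm of this kind could be created along the following lines. This is a hedged sketch: CloudWatch publishes the spillover metric as `ProvisionedConcurrencySpilloverInvocations`, while the alarm name, threshold, and period below are hypothetical values chosen for illustration. The parameters are built in a separate function so they can be reviewed or tested without AWS access.

```python
def spillover_alarm_params(function_name, alias, threshold=1, period_seconds=60):
    """Build keyword arguments for CloudWatch `put_metric_alarm`.

    The alarm fires when at least `threshold` invocations spill over to
    on-demand concurrency within one period. Names and thresholds are
    illustrative assumptions.
    """
    return {
        "AlarmName": f"{function_name}-{alias}-pc-spillover",  # hypothetical naming scheme
        "Namespace": "AWS/Lambda",
        "MetricName": "ProvisionedConcurrencySpilloverInvocations",
        "Dimensions": [
            {"Name": "FunctionName", "Value": function_name},
            # Alias-level metrics use a Resource dimension of "<function>:<alias>".
            {"Name": "Resource", "Value": f"{function_name}:{alias}"},
        ],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # no data means no spillover
    }


def create_spillover_alarm(function_name, alias):
    """Create the alarm (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    boto3.client("cloudwatch").put_metric_alarm(
        **spillover_alarm_params(function_name, alias)
    )
```

Adding an `AlarmActions` entry (for example an SNS topic ARN) would route the notification; it is omitted here because the target is environment-specific.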

Pricing and Cost Considerations

Provisioned concurrency in AWS Lambda introduces a new dimension to cost optimization for serverless applications. Understanding the pricing model and its implications is crucial for effectively managing Lambda function expenses. This section will dissect the cost structures of provisioned concurrency, compare them with on-demand concurrency, and provide practical examples to illustrate the financial trade-offs.

Pricing Model for Provisioned Concurrency

The pricing for provisioned concurrency in AWS Lambda comprises two primary components: the provisioned concurrency cost and the execution cost. The provisioned concurrency cost is incurred for the duration that the provisioned concurrency is active, irrespective of function invocations. The execution cost, on the other hand, is based on the number of invocations and the duration of each invocation, similar to on-demand concurrency.

- Provisioned Concurrency Cost: This is charged per GB-second for the memory allocated to the function, multiplied by the number of provisioned concurrent instances. This cost applies for the entire duration that the provisioned concurrency is enabled. For example, if a function is configured with 1 GB of memory and 100 provisioned concurrency instances, the cost is calculated based on the number of GB-seconds for which the 100 instances are provisioned. The pricing varies by region; consult the AWS Lambda pricing page for specific rates.

- Execution Cost: This is incurred for each function invocation that utilizes the provisioned concurrency. It consists of a per-request charge plus a duration charge, measured from the time the function starts running until it finishes and rounded up to the nearest millisecond. Requests are priced as they are for on-demand concurrency, while the duration rate for provisioned concurrency is published separately and is lower than the on-demand duration rate.

Cost Comparison: Provisioned Concurrency vs. On-Demand Concurrency

Comparing the costs of provisioned concurrency with on-demand concurrency requires a careful assessment of the workload characteristics. Provisioned concurrency is most cost-effective when the function is invoked frequently and consistently, and the execution duration is predictable. On-demand concurrency is more suitable for sporadic workloads with unpredictable traffic patterns.

- On-Demand Concurrency: The pricing model is based on the number of requests and the duration of the function execution. You pay only for the time your code is running and the resources it consumes. This model is ideal for infrequent or bursty workloads where the demand is not constant.

- Provisioned Concurrency: Offers predictable performance and lower latency. It is suitable for use cases that demand high performance and low latency, and where there is a consistent demand. You pay the provisioned concurrency charge whether or not the function is invoked, but invocations served by provisioned environments are billed at a lower duration rate, so the total can be lower when those environments are kept busy.

Consider the following scenario: a Lambda function with 512 MB of memory. If the function runs for 1 second and is invoked 100 times, the execution cost is modest under either model, because it covers only 100 × 1 s × 0.5 GB = 50 GB-seconds. However, if you keep 100 instances provisioned for 1 hour, you are billed for 100 × 0.5 GB × 3,600 s = 180,000 GB-seconds of provisioned concurrency, even if the function is not actively invoked.
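The trade-off can be explored with a small break-even calculation. The sketch below compares one environment's hourly cost under each model as a function of how busy it is. The three per-GB-second rates are illustrative assumptions, not quoted prices, and per-request charges are left out because they apply equally to both models; check the AWS Lambda pricing page for current regional rates.

```python
# Illustrative per-GB-second rates (assumptions; verify against the pricing page).
ON_DEMAND_DURATION_RATE = 0.0000166667   # on-demand duration
PC_ALLOCATION_RATE = 0.0000041667        # keeping an environment provisioned
PC_DURATION_RATE = 0.0000097222          # duration on a provisioned environment


def on_demand_hourly(memory_gb, busy_fraction, rate=ON_DEMAND_DURATION_RATE):
    """Hourly duration cost of one environment that is busy `busy_fraction` of the time."""
    return 3600 * memory_gb * busy_fraction * rate


def provisioned_hourly(memory_gb, busy_fraction,
                       alloc_rate=PC_ALLOCATION_RATE, duration_rate=PC_DURATION_RATE):
    """Hourly cost of one provisioned environment: allocation plus duration."""
    return 3600 * memory_gb * (alloc_rate + busy_fraction * duration_rate)


def break_even_busy_fraction(on_demand_rate=ON_DEMAND_DURATION_RATE,
                             alloc_rate=PC_ALLOCATION_RATE,
                             duration_rate=PC_DURATION_RATE):
    """Busy fraction above which provisioned concurrency is the cheaper option."""
    return alloc_rate / (on_demand_rate - duration_rate)


for busy in (0.1, 0.6, 0.9):
    print(busy,
          round(on_demand_hourly(0.5, busy), 5),
          round(provisioned_hourly(0.5, busy), 5))
print("break-even:", round(break_even_busy_fraction(), 2))
```

With these assumed rates the break-even point is an environment that is busy about 60% of the time; below that, on-demand is cheaper, and above it, provisioned concurrency is.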

Cost Implications of Provisioned Concurrency

The cost implications of provisioned concurrency vary significantly based on the execution duration and concurrency levels. The table below illustrates them using a hypothetical price of $0.00001667 per GB-second for provisioned concurrency and $0.00000001667 per millisecond of execution time at 1 GB of memory (these are illustrative and the actual prices may vary depending on the region). It assumes a function with 1 GB of memory in which every provisioned instance serves one invocation per second, and it ignores per-request charges. The table examines two scenarios: a function with a short execution time (100 milliseconds) and a function with a longer execution time (1 second), both under different concurrency levels.

| Scenario | Function Execution Duration | Provisioned Concurrency Instances | Provisioned Concurrency Cost (per hour) | Execution Cost (per hour) | Total Cost (per hour) |
|---|---|---|---|---|---|
| Scenario 1: Short Execution | 100 milliseconds (0.1 seconds) | 10 | $0.60 | $0.06 | $0.66 |
| Scenario 1: Short Execution | 100 milliseconds (0.1 seconds) | 100 | $6.00 | $0.60 | $6.60 |
| Scenario 2: Long Execution | 1 second | 10 | $0.60 | $0.60 | $1.20 |
| Scenario 2: Long Execution | 1 second | 100 | $6.00 | $6.00 | $12.00 |

The table illustrates that as the concurrency level and function execution time increase, the overall cost also increases. The provisioned concurrency cost becomes a significant portion of the total cost, highlighting the importance of careful planning and optimization of function execution duration and concurrency settings.
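The arithmetic behind such hourly figures can be reproduced with a few lines of code. This sketch applies the hypothetical rate of $0.00001667 per GB-second to both cost components and assumes 1 GB of memory and one invocation per second on every provisioned instance. Those assumptions are illustrative, and per-request charges are ignored.

```python
RATE_PER_GB_SECOND = 0.00001667  # hypothetical rate taken from the text above


def hourly_costs(instances, duration_s, memory_gb=1.0,
                 invocations_per_instance_per_s=1.0, rate=RATE_PER_GB_SECOND):
    """Return (provisioned, execution, total) cost in dollars for one hour."""
    # Provisioned cost: every instance is billed for the full 3600 seconds.
    provisioned = instances * memory_gb * 3600 * rate
    # Execution cost: billed only while invocations are actually running.
    invocations = instances * invocations_per_instance_per_s * 3600
    execution = invocations * duration_s * memory_gb * rate
    return provisioned, execution, provisioned + execution


for instances, duration_s in [(10, 0.1), (100, 0.1), (10, 1.0), (100, 1.0)]:
    provisioned, execution, total = hourly_costs(instances, duration_s)
    print(f"{instances:>3} instances, {duration_s} s: "
          f"provisioned ${provisioned:.2f}, execution ${execution:.2f}, total ${total:.2f}")
```

Changing `invocations_per_instance_per_s` shows how quickly the provisioned share of the bill dominates when instances sit idle.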

Monitoring and Troubleshooting

Provisioned concurrency introduces a new dimension to Lambda function management, necessitating robust monitoring and proactive troubleshooting. Effectively monitoring the health and performance of provisioned concurrency is critical to ensuring that functions meet performance expectations and remain cost-effective. This section delves into the techniques for monitoring, identifying common issues, and providing a structured approach to troubleshooting problems related to provisioned concurrency.

Monitoring Lambda Function Health and Performance

Comprehensive monitoring is crucial for maintaining optimal performance and cost-efficiency when using provisioned concurrency. Several metrics and tools are available to gain insights into function behavior.

- CloudWatch Metrics: Amazon CloudWatch provides the primary mechanism for monitoring Lambda functions. Key metrics to observe include:

  - `ProvisionedConcurrencyInvocations`: The number of invocations served by provisioned concurrency. This metric indicates the workload handled by provisioned instances.
  - `ProvisionedConcurrencyUtilization`: The fraction of allocated provisioned concurrency that is actively serving requests, reported as a value between 0 and 1. A high utilization rate indicates that the provisioned concurrency is effectively handling the workload.
  - `ProvisionedConcurrencySpilloverInvocations`: The number of invocations that were not served by provisioned concurrency and were instead served by on-demand concurrency. A high number of spillover invocations indicates that the provisioned concurrency is insufficient for the workload.
  - `ConcurrentExecutions`: The number of function instances running concurrently.
  - `Throttles`: The number of invocation requests that were throttled.
  - `Errors`: The number of errors that occurred during function execution.
  - `Duration`: The time taken for the function to execute.

- CloudWatch Logs: Examining logs is essential for identifying the root causes of issues. CloudWatch Logs captures detailed information about each function invocation, including timestamps, execution times, error messages, and any custom logging statements. By analyzing these logs, developers can pinpoint performance bottlenecks, identify errors, and gain insights into function behavior.

- AWS X-Ray: For complex applications, AWS X-Ray provides a powerful distributed tracing service. X-Ray allows you to trace requests as they travel through your application, providing detailed insights into the performance of each component, including Lambda functions. X-Ray helps identify latency issues and bottlenecks by visualizing the path of requests and providing timing information for each segment of the request.

- Performance Testing: Regularly conducting performance tests is essential for validating the effectiveness of provisioned concurrency. These tests should simulate realistic workloads and measure key performance indicators (KPIs), such as invocation latency, error rates, and throughput. Performance tests can identify potential issues before they impact production workloads. Tools like Apache JMeter or custom scripts can be used to simulate load and measure performance.
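As a small illustration of log analysis, the sketch below scans Lambda `REPORT` log lines and treats any line that carries an `Init Duration` field as a cold start. That heuristic, the helper name, and the sample lines are assumptions for illustration; real log lines would come from CloudWatch Logs.

```python
import re

# "Duration" must not match the "Billed Duration" or "Init Duration" fields.
DURATION_RE = re.compile(r"(?<!Billed )(?<!Init )Duration: ([\d.]+) ms")
INIT_RE = re.compile(r"Init Duration: ([\d.]+) ms")


def summarize_reports(lines):
    """Summarize invocation count, cold starts, and timings from REPORT lines."""
    durations, inits = [], []
    for line in lines:
        if not line.startswith("REPORT"):
            continue  # skip START, END, and application log lines
        duration = DURATION_RE.search(line)
        if duration:
            durations.append(float(duration.group(1)))
        init = INIT_RE.search(line)
        if init:
            inits.append(float(init.group(1)))  # heuristic: init time implies a cold start
    return {
        "invocations": len(durations),
        "cold_starts": len(inits),
        "max_init_ms": max(inits, default=0.0),
        "avg_duration_ms": sum(durations) / len(durations) if durations else 0.0,
    }


sample = [
    "START RequestId: a1 Version: 3",
    "REPORT RequestId: a1\tDuration: 102.50 ms\tBilled Duration: 103 ms\t"
    "Memory Size: 512 MB\tMax Memory Used: 80 MB\tInit Duration: 480.20 ms",
    "REPORT RequestId: a2\tDuration: 97.50 ms\tBilled Duration: 98 ms\t"
    "Memory Size: 512 MB\tMax Memory Used: 80 MB",
]
print(summarize_reports(sample))
```

Comparing the cold start count before and after enabling provisioned concurrency gives a quick check that spillover is not undermining the configuration.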

Common Issues and Troubleshooting

Several issues can arise when using provisioned concurrency. Understanding these issues and their potential causes is crucial for effective troubleshooting.

- Spillover Invocations: Occurs when the number of concurrent requests exceeds the capacity of the provisioned concurrency. This results in invocations being served by on-demand concurrency, increasing latency and costs.
  - Cause: Insufficient provisioned concurrency capacity, sudden spikes in traffic, or inefficient function code.
  - Troubleshooting: Increase the provisioned concurrency capacity, optimize function code for faster execution, or implement autoscaling to dynamically adjust provisioned concurrency based on traffic patterns.

- Cold Starts: Even with provisioned concurrency, cold starts can occur when concurrent requests exceed the provisioned capacity and spill over to on-demand environments, or while provisioned environments are still being allocated after a deployment or configuration change.
  - Cause: Insufficient provisioned concurrency, traffic sent to a version or alias that has no provisioned concurrency configured, or allocation that has not yet completed.
  - Troubleshooting: Increase the provisioned concurrency capacity, confirm that callers invoke the alias or version that carries the configuration, and wait for the configuration to report ready before shifting traffic. Moving expensive setup into initialization code (outside the handler) lets provisioned environments absorb that work ahead of time.

- High Latency: Functions may experience high latency if the provisioned concurrency capacity is insufficient or if the function code is inefficient.
  - Cause: Insufficient provisioned concurrency, inefficient function code, or dependencies that introduce latency.
  - Troubleshooting: Increase the provisioned concurrency capacity, optimize function code, and profile function execution to identify performance bottlenecks.

- Errors: Errors can occur due to various reasons, including function code errors, configuration issues, or resource limitations.
  - Cause: Function code errors, configuration issues, or resource limitations (e.g., memory, timeout).
  - Troubleshooting: Examine CloudWatch Logs for error messages, verify function configuration, and ensure sufficient resources are allocated.

Troubleshooting Checklist

A structured approach to troubleshooting provisioned concurrency issues can streamline the resolution process. The following checklist provides a step-by-step guide:

- Identify the Problem:
  - Define the specific issue (e.g., spillover invocations, high latency, errors).
  - Determine the scope and impact of the problem.

- Gather Information:
  - Review CloudWatch metrics (e.g., `ProvisionedConcurrencyUtilization`, `ProvisionedConcurrencySpilloverInvocations`, `ConcurrentExecutions`, `Duration`, `Errors`).
  - Examine CloudWatch Logs for error messages, performance bottlenecks, and other relevant information.
  - Check AWS X-Ray traces for detailed insights into function execution.

- Analyze the Data:
  - Identify patterns and trends in the metrics and logs.
  - Correlate metrics with events and time periods.
  - Determine the root cause of the problem.

- Implement a Solution:
  - Based on the root cause analysis, implement a solution (e.g., increase provisioned concurrency, optimize function code, fix configuration issues).
  - Test the solution to ensure it resolves the problem.

- Monitor and Validate:
  - Continuously monitor CloudWatch metrics to ensure the problem is resolved.
  - Conduct performance tests to validate the solution.
  - Document the troubleshooting steps and the solution.

Use Cases and Best Practices

Provisioned concurrency is a powerful tool, but its effectiveness hinges on its strategic application. Understanding ideal use cases and adhering to best practices is crucial for maximizing its benefits and avoiding unnecessary costs. This section delves into these aspects, providing a practical guide to leveraging provisioned concurrency for optimal performance and resource utilization.

Ideal Use Cases for Provisioned Concurrency

Provisioned concurrency excels in scenarios where predictable, consistent performance is paramount, and cold starts significantly impact user experience. Several use cases stand out as prime candidates for its implementation.

- Web Applications and APIs: Provisioned concurrency is particularly well-suited for web applications and APIs that experience consistent traffic patterns. By pre-warming function instances, it eliminates cold starts for requests within the provisioned capacity, ensuring low-latency responses. This is crucial for maintaining a seamless user experience, especially during peak hours or periods of high demand. Consider an e-commerce platform. During flash sales or holiday seasons, maintaining responsiveness is vital. Provisioned concurrency allows the platform to handle a surge in traffic without performance degradation.

- Mobile Backend Services: Mobile applications frequently rely on backend services to handle data processing, authentication, and other core functionalities. Provisioned concurrency can significantly improve the responsiveness of these services, ensuring that mobile users receive timely responses. Imagine a social media application. Users expect rapid loading of content and instant updates. Provisioned concurrency helps the backend service keep up with user demand.

- Batch Processing and Scheduled Tasks: While provisioned concurrency is primarily designed for interactive applications, it can also benefit batch processing and scheduled tasks. If these tasks have strict latency requirements or need to complete within a specific timeframe, pre-warming function instances can help ensure timely execution. For example, a daily data aggregation job could leverage provisioned concurrency to guarantee it finishes before the start of business hours.

Best Practices for Implementing Provisioned Concurrency

Effective implementation of provisioned concurrency requires careful planning and execution. Several best practices should be followed to optimize performance, minimize costs, and ensure efficient resource utilization.

Configuration and Capacity Planning: Accurately estimating the required provisioned concurrency is crucial. Under-provisioning can lead to performance bottlenecks, while over-provisioning results in unnecessary costs. Consider the following factors:

- Traffic Patterns: Analyze historical traffic data to identify peak load periods and predict future demand. Use monitoring tools to track request rates, error rates, and latency.

- Function Execution Time: Measure the average execution time of your functions. Longer execution times may require more provisioned concurrency to handle concurrent requests.

- Memory Allocation: Ensure that the function’s memory allocation is sufficient to handle the expected load. Insufficient memory can lead to increased execution times and performance degradation.
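A common starting point for the estimate combines the first two factors: steady-state concurrency is roughly the request rate multiplied by the average execution time. The sketch below applies that rule with a headroom margin. The function name and the 10% default headroom are assumptions for illustration, and real traffic with bursts may need more.

```python
import math


def required_provisioned_concurrency(requests_per_second, avg_duration_seconds, headroom=0.1):
    """Estimate provisioned concurrency from request rate and execution time.

    Steady-state concurrency is rate x duration; `headroom` adds a safety
    margin on top (0.1 means 10%). The result is rounded up to a whole
    number of environments.
    """
    steady_state = requests_per_second * avg_duration_seconds
    # round() guards against floating-point noise such as 55.00000000000001
    return math.ceil(round(steady_state * (1 + headroom), 9))


# 200 requests per second at 250 ms each keeps about 50 environments busy.
print(required_provisioned_concurrency(200, 0.25))  # 55
```

Halving the execution time halves the estimate, which is why code optimization and capacity planning go together.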

Monitoring and Optimization: Continuously monitor the performance of your functions and adjust provisioned concurrency based on observed metrics. Use the following metrics for monitoring:

- Provisioned Concurrency Utilization: Track the percentage of provisioned concurrency that is being utilized. If the utilization is consistently low, you may be over-provisioning. If the utilization is consistently high, you may need to increase provisioned concurrency.

- Cold Start Count: Monitor the number of cold starts. If the number of cold starts is significant, it indicates that provisioned concurrency is insufficient.

- Latency: Track the end-to-end latency of your function invocations. High latency may indicate insufficient provisioned concurrency or other performance bottlenecks.
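
The utilization guidance above can be turned into a simple rule. This is a sketch only: it assumes you have already collected utilization samples as fractions between 0 and 1, and the 0.4 and 0.8 thresholds and the function name are hypothetical starting points rather than documented values.

```javascript
// Classify a window of provisioned concurrency utilization samples (fractions from 0 to 1).
// The 0.4 and 0.8 thresholds are hypothetical; tune them per workload.
function recommendAdjustment(utilizationSamples, low = 0.4, high = 0.8) {
  if (utilizationSamples.length === 0) {
    return 'no-data';
  }
  // "Consistently" is interpreted here as "every sample in the window".
  if (utilizationSamples.every((u) => u > high)) return 'increase';
  if (utilizationSamples.every((u) => u < low)) return 'decrease';
  return 'hold';
}

console.log(recommendAdjustment([0.85, 0.9, 0.95])); // increase
console.log(recommendAdjustment([0.1, 0.2, 0.15])); // decrease
```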

Automated Scaling:

Consider implementing automated scaling strategies to dynamically adjust provisioned concurrency based on real-time demand. This can help optimize costs and ensure that the application has sufficient capacity to handle fluctuations in traffic. Tools like AWS Application Auto Scaling can be used to automatically scale provisioned concurrency.

Cost Optimization:

Provisioned concurrency incurs costs, so it’s essential to optimize its usage to minimize expenses. Consider the following:

- Schedule Provisioning: Schedule provisioned concurrency only during peak hours or periods of high demand.

- Right-Sizing: Accurately estimate the required provisioned concurrency to avoid over-provisioning.

- Use On-Demand Concurrency: Utilize on-demand concurrency during off-peak hours or for less critical workloads.
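
To see why scheduling matters, the sketch below compares keeping capacity enabled all day with enabling it only for an eight-hour peak window. It assumes the provisioned-capacity charge scales with the configured concurrency, the function's memory size, and the time enabled, and it ignores request and duration charges. `PRICE_PER_GB_SECOND` is a placeholder, not a real AWS price.

```javascript
// Placeholder rate, NOT a real AWS price; look up the current rate for your Region.
const PRICE_PER_GB_SECOND = 0.000004;

// Cost of keeping `concurrency` environments provisioned for `hoursEnabled` hours.
function provisionedCost(concurrency, memoryMb, hoursEnabled, pricePerGbSecond = PRICE_PER_GB_SECOND) {
  const gbSeconds = concurrency * (memoryMb / 1024) * hoursEnabled * 3600;
  return gbSeconds * pricePerGbSecond;
}

const alwaysOn = provisionedCost(50, 1024, 24); // enabled all day
const peakOnly = provisionedCost(50, 1024, 8); // enabled for an 8-hour peak window
console.log(`Peak-only scheduling costs ${((peakOnly / alwaysOn) * 100).toFixed(0)}% of always-on`);
```

Because the charge is linear in time enabled, the ratio depends only on the schedule, whatever the actual price is.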

Procedure for Testing and Validating Provisioned Concurrency Configurations

Thorough testing and validation are essential to ensure that provisioned concurrency is configured correctly and meets performance requirements. This procedure outlines a systematic approach to testing and validating provisioned concurrency configurations.

Phase 1: Baseline Performance Testing

Establish a baseline performance measurement before enabling provisioned concurrency. This provides a benchmark against which to compare performance improvements.

Steps:

- Function Deployment: Deploy the Lambda function that will be using provisioned concurrency.

- Traffic Simulation: Simulate a range of traffic loads, including typical and peak loads, using a load testing tool.

- Performance Metrics Collection: Collect performance metrics, including latency, error rates, and cold start counts.

- Baseline Recording: Record the baseline performance metrics for comparison.

Phase 2: Provisioned Concurrency Configuration

Configure provisioned concurrency for the Lambda function.

Steps:

- Provisioning: Configure the desired amount of provisioned concurrency based on traffic analysis and performance requirements.

- Testing Environment: If possible, test the provisioned concurrency in a non-production environment.

Phase 3: Load Testing with Provisioned Concurrency

Execute load tests with provisioned concurrency enabled to evaluate its impact on performance.

Steps:

- Traffic Simulation: Simulate the same range of traffic loads used in the baseline testing.

- Performance Metrics Collection: Collect the same performance metrics as in the baseline testing.

- Comparison: Compare the performance metrics with and without provisioned concurrency.

- Analysis: Analyze the results to determine the effectiveness of the provisioned concurrency configuration.

Phase 4: Validation and Optimization

Validate the configuration and make adjustments as needed.

Steps:

- Iteration: Iterate on the provisioned concurrency configuration based on the test results.

- Monitoring: Continuously monitor the performance of the function in production.

- Alerting: Set up alerts to notify when performance degrades or provisioned concurrency utilization changes.
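
The comparison step in Phase 3 is easier to act on when it looks at tail latency rather than averages, because cold starts show up mainly in the upper percentiles. The sketch below is one way to do that, assuming latency samples in milliseconds have been exported from a load testing tool. The sample arrays and function names are hypothetical.

```javascript
// Nearest-rank percentile over latency samples in milliseconds.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error('no samples');
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1];
}

// Compare p50, p95, and p99 between the baseline run and the provisioned run.
function compareRuns(baselineMs, provisionedMs) {
  const summary = {};
  for (const p of [50, 95, 99]) {
    const before = percentile(baselineMs, p);
    const after = percentile(provisionedMs, p);
    summary[`p${p}`] = { before, after, improvementMs: before - after };
  }
  return summary;
}

// Hypothetical samples: the baseline run includes two cold starts.
const baseline = [480, 510, 495, 3000, 505, 490, 2900, 500, 515, 485];
const withProvisioned = [480, 510, 495, 500, 505, 490, 498, 500, 515, 485];
console.log(compareRuns(baseline, withProvisioned));
```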

Provisioned Concurrency and Cold Starts

Provisioned concurrency is fundamentally designed to address the performance bottleneck of cold starts in AWS Lambda functions. Cold starts, the initial delay experienced when a Lambda function is invoked and its execution environment must be initialized, can significantly impact application responsiveness and user experience. Provisioned concurrency mitigates this issue by pre-initializing a specified number of execution environments, making them immediately available to serve incoming requests.

Mitigating Cold Starts with Provisioned Concurrency

Provisioned concurrency directly tackles the cold start problem by maintaining a pool of “warm” function instances. When a Lambda function is invoked, the invocation is routed to one of these pre-initialized instances if available. This bypasses the need for the function’s execution environment to be created from scratch, thus dramatically reducing the latency associated with cold starts.

Comparing Cold Start Behavior

The impact of provisioned concurrency is most evident when comparing the cold start behavior of functions with and without it.

- Without Provisioned Concurrency: When a Lambda function is invoked for the first time (or after a period of inactivity), a cold start occurs. This involves several steps, including:

  - Creating a new execution environment (e.g., a container).

  - Downloading the function code.

  - Initializing the runtime environment.

  - Loading and initializing the function’s dependencies.

  This process can take several seconds, resulting in noticeable latency.

- With Provisioned Concurrency: When provisioned concurrency is enabled, a pool of pre-initialized execution environments is maintained. When a function is invoked, the invocation is routed to an available warm instance. This eliminates or significantly reduces the steps involved in a cold start. The function code is already loaded, and the runtime environment is ready. This results in much faster invocation times.

Impact on Cold Start Latency: Execution Time Comparisons

The reduction in cold start latency achieved with provisioned concurrency can be quantified through execution time comparisons. Consider a Lambda function configured to process image resizing requests. The average execution time without provisioned concurrency, during a cold start, might be 3 seconds.

Scenario 1: Without Provisioned Concurrency

- First invocation: 3 seconds (cold start).

- Subsequent invocations: 500 milliseconds (warm start).

Scenario 2: With Provisioned Concurrency

- All invocations: 500 milliseconds (warm start).

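The impact is a dramatic improvement in responsiveness. For instance, assuming 10 requests arrive one after another and reuse the same execution environment, Scenario 1 takes 3 + 9 × 0.5 = 7.5 seconds in total, while Scenario 2 takes 10 × 0.5 = 5 seconds. More importantly for user experience, no individual request in Scenario 2 waits the full 3 seconds.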

The effectiveness of provisioned concurrency is dependent on several factors:

- The function’s initialization time.

- The number of concurrent requests.

- The configuration of provisioned concurrency (the number of provisioned instances).

Provisioning enough concurrency to handle the expected traffic is crucial to minimize cold starts. If the number of concurrent requests exceeds the provisioned concurrency, some requests will still experience cold starts.
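
The relationship between concurrent requests and provisioned capacity can be sketched as a simple split. This is an illustration only, with a hypothetical function name: requests beyond the provisioned amount are served on demand, where they may or may not hit a cold start depending on whether a warm on-demand environment happens to be available.

```javascript
// Split concurrent requests between provisioned environments and on-demand spillover.
// Spillover requests are served on demand and may cold start.
function splitByCapacity(concurrentRequests, provisionedConcurrency) {
  const servedWarm = Math.min(concurrentRequests, provisionedConcurrency);
  const spillover = Math.max(0, concurrentRequests - provisionedConcurrency);
  return { servedWarm, spillover };
}

console.log(splitByCapacity(120, 100)); // { servedWarm: 100, spillover: 20 }
```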

Integrating Provisioned Concurrency with Other AWS Services

Provisioned concurrency, while powerful on its own, truly shines when integrated with other AWS services. This integration allows for the creation of robust, scalable, and responsive applications that can handle fluctuating workloads and provide consistent performance. Understanding these integrations and their architectural implications is crucial for optimizing the cost and efficiency of serverless applications.

API Gateway Integration

API Gateway serves as the entry point for most serverless applications, and its integration with Lambda functions using provisioned concurrency is a common and beneficial pattern.

API Gateway can be configured to invoke Lambda functions that have provisioned concurrency enabled. When a request arrives at API Gateway, it routes the request to the Lambda function. If provisioned concurrency is available, the request is handled immediately by a pre-warmed function instance.

If provisioned concurrency is fully utilized, API Gateway will still route the request, but the request is served by an on-demand instance and may experience a cold start delay, depending on the available concurrency and the function’s configuration.

The benefits of this integration include:

- Reduced Latency: Provisioned concurrency minimizes latency by ensuring function instances are ready to handle incoming requests immediately.

- Predictable Performance: The consistent availability of pre-warmed instances leads to more predictable response times, crucial for user-facing applications.

- Scalability: API Gateway automatically scales to handle the volume of requests, and provisioned concurrency ensures that Lambda functions can handle the load.

Consider an example scenario: an e-commerce website using API Gateway to handle product catalog requests. Provisioned concurrency can be configured for the Lambda function that retrieves product details from a database. By pre-warming function instances, the website can ensure fast response times for product searches, even during peak shopping hours.

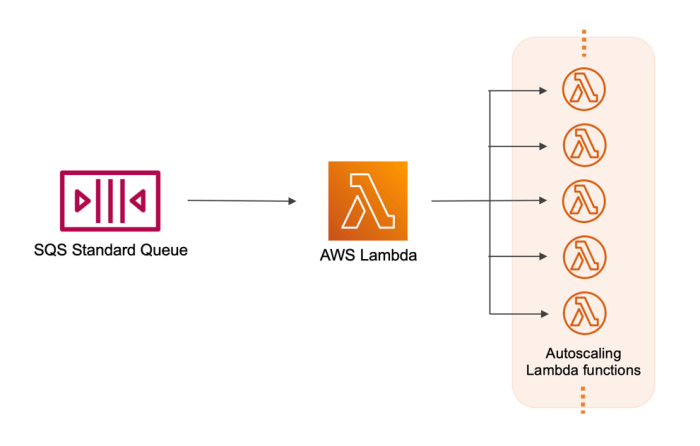
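
A minimal sketch of the product catalog handler described above, assuming an API Gateway Lambda proxy integration with an `id` path parameter. The in-memory `catalog` is a hypothetical stand-in for a database client and query. The point of the sketch is the placement of setup work: code at module scope runs once per execution environment, so with provisioned concurrency it has already run before the first request arrives.

```javascript
// Initialization code: runs once per execution environment, before any request is handled.
// The in-memory catalog is a hypothetical stand-in for a database client and query.
const catalog = new Map([
  ['sku-1', { id: 'sku-1', name: 'Example product', priceCents: 1999 }],
]);

// Handler for an API Gateway Lambda proxy integration.
const handler = async (event) => {
  const productId = event.pathParameters && event.pathParameters.id;
  const product = catalog.get(productId);
  if (!product) {
    return { statusCode: 404, body: JSON.stringify({ message: 'Product not found' }) };
  }
  return { statusCode: 200, body: JSON.stringify(product) };
};

module.exports = { handler };
```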

SQS Integration

Simple Queue Service (SQS) is a fully managed message queuing service that enables decoupling of application components. Integrating provisioned concurrency with SQS allows for asynchronous processing of messages with improved performance.

When messages arrive in an SQS queue, Lambda’s event source mapping polls the queue and invokes the function with batches of messages. The Lambda function then processes each batch. By using provisioned concurrency, the Lambda function can start processing without initialization delay, which matters most when dealing with a high volume of messages.

The advantages of this integration include:

- Enhanced Throughput: Provisioned concurrency allows Lambda functions to process a larger number of messages per unit of time.

- Improved Responsiveness: Messages are processed more quickly, reducing the overall processing time for asynchronous tasks.

- Fault Tolerance: SQS provides message persistence and retry mechanisms, ensuring that messages are processed even if Lambda function instances encounter errors.

For example, consider an application that processes image uploads. The application can use SQS to queue image processing tasks and trigger a Lambda function to perform operations like resizing and watermarking. Provisioned concurrency can be applied to the Lambda function, ensuring that image processing tasks are completed promptly, even during periods of high upload activity.
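
A sketch of what the image processing handler could look like, assuming the queue message body is JSON with an `imageKey` field (a hypothetical message shape) and that `ReportBatchItemFailures` is enabled on the event source mapping. `processImageTask` is a placeholder for the real resizing and watermarking work.

```javascript
// Hypothetical placeholder for the real work (resizing, watermarking).
async function processImageTask(task) {
  if (!task.imageKey) throw new Error('missing imageKey');
  return `processed:${task.imageKey}`;
}

// Handler for an SQS event source mapping. Returning batchItemFailures asks Lambda to
// retry only the failed messages instead of the whole batch.
const handler = async (event) => {
  const batchItemFailures = [];
  for (const record of event.Records) {
    try {
      await processImageTask(JSON.parse(record.body));
    } catch (err) {
      batchItemFailures.push({ itemIdentifier: record.messageId });
    }
  }
  return { batchItemFailures };
};

module.exports = { handler };
```

Reporting failures per message keeps one bad upload from forcing the rest of the batch to be reprocessed.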

DynamoDB Integration

DynamoDB is a fully managed NoSQL database service. When integrating Lambda functions with DynamoDB, provisioned concurrency can be employed to optimize database access and improve overall application performance.

Lambda functions can be triggered by DynamoDB streams to process data changes (inserts, updates, and deletes) in a DynamoDB table. Provisioned concurrency ensures that Lambda functions are readily available to process these events without significant latency.

The benefits of this integration include:

- Faster Data Processing: Provisioned concurrency ensures that Lambda functions can process data changes from DynamoDB streams quickly.

- Improved Scalability: The combination of DynamoDB’s scalability and Lambda’s ability to handle concurrent requests results in a highly scalable data processing solution.

- Real-time Data Updates: Provisioned concurrency enables real-time processing of data changes, which is important for applications that require up-to-date information.

An example is a social media platform that uses DynamoDB to store user posts. When a new post is created, a DynamoDB stream triggers a Lambda function to update a user’s feed or perform other related actions. Provisioned concurrency ensures that these updates happen in near real-time.
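
The feed update could be sketched as follows. This assumes the stream is configured to include new item images and that each post item has string attributes named `userId` and `postId`, both of which are hypothetical. The in-memory `feeds` map stands in for whatever store actually holds user feeds.

```javascript
// Hypothetical in-memory feed store; a real system would write to a database or cache.
const feeds = new Map();

// Handler for a DynamoDB Streams event source mapping.
// Assumes the stream includes new images and items carry S-typed userId and postId attributes.
const handler = async (event) => {
  let processed = 0;
  for (const record of event.Records) {
    if (record.eventName !== 'INSERT') continue; // this sketch ignores MODIFY and REMOVE
    const image = record.dynamodb.NewImage;
    const userId = image.userId.S;
    const posts = feeds.get(userId) || [];
    posts.push(image.postId.S);
    feeds.set(userId, posts);
    processed += 1;
  }
  return { processed };
};

module.exports = { handler };
```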

RDS Integration

Relational Database Service (RDS) is a managed database service that supports various database engines. Lambda functions can work with RDS to perform database operations, but the integration requires careful architectural consideration.

Lambda functions can be configured to connect to an RDS database to perform tasks such as querying data, inserting new records, or updating existing entries. Provisioned concurrency can be used to ensure that Lambda functions are ready to handle database requests with minimal latency.

The architectural considerations are:

- Connection Pooling: Implement connection pooling within the Lambda function to reuse database connections and reduce connection overhead.

- Database Performance: Optimize database queries and ensure that the database is appropriately sized to handle the load from the Lambda functions.

- Network Configuration: Configure the Lambda function to access the RDS database within the same VPC (Virtual Private Cloud) to minimize network latency.

An example is a web application that uses an RDS database to store user data. When a user logs in, a Lambda function can be triggered to authenticate the user and retrieve their profile information from the database. Provisioned concurrency can ensure that this authentication process is quick and efficient.
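
Connection reuse is the consideration that interacts most directly with provisioned concurrency, since each pre-warmed environment can hold an open connection. The sketch below shows the general pattern of caching a connection at module scope. `openConnection` is a hypothetical stand-in for a real database driver's connect call and only counts how often it is used, and the SQL text is illustrative.

```javascript
// Hypothetical stand-in for a database driver's connect call; it counts how often it runs.
let connectionsOpened = 0;
async function openConnection() {
  connectionsOpened += 1;
  return { query: async (sql, params) => ({ sql, params, rows: [] }) };
}

// Cache the connection promise at module scope so that later invocations in the same
// execution environment reuse it instead of reconnecting.
let connectionPromise = null;
function getConnection() {
  if (!connectionPromise) {
    connectionPromise = openConnection();
  }
  return connectionPromise;
}

const handler = async (event) => {
  const db = await getConnection();
  return db.query('SELECT id, name FROM users WHERE id = $1', [event.userId]);
};

module.exports = { handler };
```

Keep in mind that every provisioned environment may hold its own connection, so the provisioned concurrency value also bounds how many connections the database should expect from this function's warm pool.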

Limitations and Considerations

Provisioned concurrency, while offering significant advantages in performance and cost predictability, is not a universally applicable solution. Understanding its limitations and potential drawbacks is crucial for making informed decisions about its implementation. Careful consideration of these factors ensures that provisioned concurrency is used effectively and does not lead to unintended consequences.

Function Scaling and Resource Allocation Limitations

Provisioned concurrency introduces specific constraints on how Lambda functions can scale and the resources they can utilize. These limitations necessitate careful planning and monitoring to avoid bottlenecks or inefficiencies.

- Limited Scalability Beyond Provisioned Capacity: Provisioned concurrency keeps only the configured number of execution environments warm. If concurrent invocations exceed the provisioned capacity, the excess requests spill over to on-demand instances and may experience cold starts, leading to latency spikes. This can be a significant issue during traffic surges or unexpected load increases.

- Resource Allocation Constraints: Each provisioned instance has a fixed amount of memory, with CPU allocated in proportion to it, as defined in the function’s configuration. You can raise the memory setting, but only up to Lambda’s per-function maximum. If a single invocation needs more resources than the configuration allows, performance will be constrained, and adding provisioned instances does not help: provisioned concurrency scales the number of instances, not the size of each one.

- Concurrency Limits: AWS account-level concurrency limits also apply to provisioned concurrency. While provisioned concurrency reserves a certain number of instances, it does not circumvent these limits. Exceeding account-level concurrency limits can lead to throttling and request failures, even with provisioned capacity.

- Cold Start Mitigation is Not Absolute: While provisioned concurrency significantly reduces cold starts, it doesn’t eliminate them entirely. When scaling up provisioned concurrency or when the incoming traffic briefly exceeds the provisioned amount, some cold starts may still occur.

Potential Drawbacks of Using Provisioned Concurrency

Implementing provisioned concurrency without careful planning can lead to increased costs and other operational challenges. Recognizing these drawbacks is vital for optimizing its usage and minimizing its negative impacts.

- Increased Costs if Underutilized: Provisioned concurrency incurs costs regardless of function usage. You pay for the provisioned capacity, even if the function isn’t actively processing requests. If the function’s traffic patterns are highly variable or unpredictable, you may end up paying for unused capacity, which increases costs unnecessarily. This can be particularly problematic for functions with infrequent invocations.

- Complexity in Configuration and Management: Managing provisioned concurrency adds complexity to your infrastructure. You need to monitor function metrics (e.g., concurrent executions, invocation duration, error rates) and dynamically adjust the provisioned capacity to match the anticipated load. This requires automation and careful tuning to avoid over-provisioning or under-provisioning.

- Operational Overhead: The introduction of provisioned concurrency increases the operational overhead. You must implement monitoring, alerting, and automated scaling mechanisms to ensure that the provisioned capacity aligns with the actual traffic patterns. This requires expertise and ongoing maintenance.

- Potential for Over-Provisioning and Resource Waste: Over-provisioning can lead to wasted resources and increased costs. It is essential to analyze the function’s traffic patterns and performance metrics to determine the optimal amount of provisioned concurrency. Using too much provisioned concurrency can result in idle instances and unnecessary expenses.

Decision-Making Process for Provisioned Concurrency Suitability

Determining whether provisioned concurrency is suitable for a specific Lambda function involves a systematic evaluation of several factors. The following flowchart outlines a decision-making process.

Flowchart Description:

1. Start: The process begins with the evaluation of a Lambda function.

2. Is Function Performance Critical? This is the first decision point. If the function’s performance (e.g., latency) is critical, the process proceeds to the next step. If not, provisioned concurrency may not be necessary, and the process ends.

3. Does the Function Experience Cold Starts? If the function frequently experiences cold starts, this suggests that provisioned concurrency could be beneficial. If not, the process proceeds to the next step.

4. Is Traffic Predictable? This is a crucial consideration. Predictable traffic patterns allow for more accurate capacity planning. If traffic is predictable, the process moves forward. If not, the process goes to step 6.

5. Analyze Function Metrics: The user should analyze the function’s metrics (e.g., invocation count, execution duration, concurrent executions). This analysis provides insights into the function’s behavior and resource needs. Based on the analysis, determine the optimal provisioned capacity.

6. Is the Cost Acceptable? Provisioned concurrency increases costs. The user must assess whether the cost of provisioned concurrency is acceptable given the expected performance improvements and the function’s usage. If the cost is acceptable, provisioned concurrency is considered suitable. If not, the process ends, and provisioned concurrency is deemed unsuitable.

7. Implement Provisioned Concurrency: If the cost is acceptable, provisioned concurrency is implemented with appropriate monitoring and scaling strategies.

8. Monitor and Optimize: After implementation, the function’s performance is continually monitored, and provisioned concurrency is adjusted based on traffic patterns and cost considerations.
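
The steps above can be sketched as a small function. This is one reading of the flow, not a definitive encoding of it: the input field names are hypothetical, and because step 3 continues to step 4 whichever way it is answered, the sketch records cold starts as a supporting note rather than a gate.

```javascript
// Inputs are yes/no answers to the questions in the steps above; field names are hypothetical.
function evaluateSuitability({ performanceCritical, frequentColdStarts, trafficPredictable, costAcceptable }) {
  const notes = [];
  if (!performanceCritical) {
    return { suitable: false, notes: ['Performance is not critical (step 2).'] };
  }
  if (frequentColdStarts) {
    notes.push('Frequent cold starts suggest a benefit (step 3).');
  }
  if (trafficPredictable) {
    notes.push('Analyze metrics to size provisioned capacity (step 5).');
  }
  if (!costAcceptable) {
    notes.push('Cost is not acceptable (step 6).');
    return { suitable: false, notes };
  }
  notes.push('Implement, then monitor and optimize (steps 7 and 8).');
  return { suitable: true, notes };
}

console.log(evaluateSuitability({
  performanceCritical: true,
  frequentColdStarts: true,
  trafficPredictable: false,
  costAcceptable: true,
}));
```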

Advanced Configuration and Optimization

Provisioned concurrency, while offering significant performance benefits, necessitates careful configuration and optimization to realize its full potential and minimize costs. This section delves into advanced configuration options, including autoscaling, and explores methods for tailoring provisioned concurrency settings to function workload patterns. We will also examine how AWS Lambda Extensions can be leveraged to further enhance performance.

Autoscaling Provisioned Concurrency

Autoscaling dynamically adjusts the amount of provisioned concurrency based on observed demand. This automated approach reduces the manual effort required to maintain optimal performance and can minimize costs by scaling down provisioned concurrency during periods of low traffic.

To enable autoscaling, configure an Application Auto Scaling policy. This policy defines the scaling behavior, specifying the minimum and maximum provisioned concurrency values, as well as the scaling metric.

A common metric is the `ProvisionedConcurrencyUtilization`, which represents the percentage of provisioned concurrency that is currently in use. When utilization exceeds a predefined threshold, the Auto Scaling service automatically increases the provisioned concurrency; conversely, it decreases provisioned concurrency when utilization falls below a threshold.

- Scaling Policy Configuration: The scaling policy uses a target value for the scaling metric. For example, a target value of 70% for `ProvisionedConcurrencyUtilization` would trigger scaling actions when the utilization exceeds or falls below this threshold.

- Step Scaling Actions: Step scaling actions allow for more granular control over scaling. Instead of a single increase or decrease, you can define multiple steps, each with a different adjustment based on the metric’s deviation from the target value. This can help to prevent over-provisioning or under-provisioning.

- Cooldown Periods: Cooldown periods prevent the scaling policy from reacting too quickly to short-term fluctuations in demand. They introduce a delay after a scaling action before another scaling action can be triggered. This allows the function’s provisioned concurrency to stabilize before further adjustments.

- Example: Consider a function with a baseline provisioned concurrency of 100. An autoscaling policy is configured with a target `ProvisionedConcurrencyUtilization` of 70%. The minimum provisioned concurrency is set to 50, and the maximum is set to 200. If the function’s utilization consistently exceeds 70%, the Auto Scaling service will automatically increase the provisioned concurrency, up to the maximum of 200. If utilization drops below 70%, the service will scale down the provisioned concurrency, but not below the minimum of 50.
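
The example configuration can be expressed as the request parameters that Application Auto Scaling expects for its `RegisterScalableTarget` and `PutScalingPolicy` operations. The sketch below only builds those objects and makes no AWS calls. The function name `my-function`, the alias `live`, and the policy name are placeholders. Note that the predefined utilization metric is a fraction, so 70% is written as 0.7.

```javascript
// Builds request parameters for Application Auto Scaling; it does not call AWS.
// The function name, alias, and policy name passed in or derived here are placeholders.
function buildAutoScalingRequests(functionName, alias, minCapacity, maxCapacity, targetUtilization) {
  const target = {
    ServiceNamespace: 'lambda',
    ResourceId: `function:${functionName}:${alias}`,
    ScalableDimension: 'lambda:function:ProvisionedConcurrency',
  };
  return {
    registerScalableTarget: { ...target, MinCapacity: minCapacity, MaxCapacity: maxCapacity },
    putScalingPolicy: {
      ...target,
      PolicyName: `${functionName}-pc-target-tracking`,
      PolicyType: 'TargetTrackingScaling',
      TargetTrackingScalingPolicyConfiguration: {
        // The predefined metric is a fraction, so 70% is expressed as 0.7.
        TargetValue: targetUtilization,
        PredefinedMetricSpecification: {
          PredefinedMetricType: 'LambdaProvisionedConcurrencyUtilization',
        },
      },
    },
  };
}

console.log(JSON.stringify(buildAutoScalingRequests('my-function', 'live', 50, 200, 0.7), null, 2));
```

The resulting objects can be passed to an AWS SDK client or saved as JSON input for the AWS CLI. Scaling targets an alias or published version, which is why the resource ID includes one.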

Optimizing Provisioned Concurrency Settings

Optimizing provisioned concurrency involves aligning the provisioned capacity with the function’s workload patterns to ensure optimal performance and cost-effectiveness. This requires understanding the function’s traffic patterns, latency requirements, and cost constraints.

Analyzing historical data provides valuable insights into workload patterns. This data includes the number of invocations, average duration, and peak concurrency. Tools like Amazon CloudWatch can be used to monitor these metrics and identify periods of high and low demand.

The goal is to provision sufficient concurrency to handle peak loads while minimizing the amount of unused provisioned concurrency during off-peak hours.

- Workload Pattern Analysis: Examine historical invocation data to identify peak load times, average request duration, and the frequency of requests. This analysis reveals the function’s capacity requirements over time.

- Peak Load Forecasting: Predict future demand based on historical trends, seasonal variations, and planned events. Consider factors like marketing campaigns or sales promotions that could significantly increase traffic.

- Latency Requirements: Define acceptable latency thresholds. Provisioned concurrency is particularly beneficial for reducing cold start latency. Determine the acceptable level of latency and adjust provisioned concurrency accordingly.

- Cost Considerations: Balance the cost of provisioned concurrency with the performance benefits. Provisioning more concurrency than necessary increases costs. The cost of provisioned concurrency depends on the amount configured, the function’s memory size, and how long it stays enabled.

- Dynamic Adjustment Strategies: Implement dynamic adjustment strategies. This might involve using autoscaling to automatically adjust the provisioned concurrency based on demand or manually adjusting provisioned concurrency at specific times based on forecasted traffic.

Enhancing Performance with AWS Lambda Extensions

AWS Lambda Extensions offer a mechanism to integrate tools and processes directly into the Lambda execution environment. This includes the ability to add custom logic, instrumentation, and monitoring capabilities. Extensions can support the performance of functions that use provisioned concurrency by handling tasks like logging, monitoring, and security scanning outside the function code.

One use case involves integrating a performance monitoring extension to collect detailed metrics about function invocations. This can help identify performance bottlenecks and areas for optimization.

Consider a hypothetical performance monitoring extension that captures metrics related to function initialization, execution, and network requests. This extension runs alongside the function code within the same execution environment. The code below is a simplified in-process illustration of the idea: real external extensions run as separate processes and register with the Lambda Extensions API, which is not shown here.

```javascript
// Hypothetical extension - initialization.js (runs during Lambda initialization)
exports.handler = async () => {
  const startTime = Date.now();
  console.log(`[Extension] Initialization started at: ${startTime}`);

  // Simulate 100 ms of initialization work
  await new Promise((resolve) => setTimeout(resolve, 100));

  const initializationTime = Date.now() - startTime;
  console.log(`[Extension] Initialization completed in: ${initializationTime}ms`);

  // In a real extension, this would send the metric to an API or a monitoring
  // system (e.g., CloudWatch) instead of only logging.
  console.log('[Extension] Sending initialization metrics to monitoring service');
};
```

```javascript
// Hypothetical extension - invocation.js (runs before and after function execution)
// wrap() takes the function's own handler and returns an instrumented handler.
exports.wrap = (functionHandler) => async (event, context) => {
  const startTime = Date.now();
  console.log(`[Extension] Invocation started at: ${startTime}`);

  // Simulate 50 ms of pre-invocation overhead
  await new Promise((resolve) => setTimeout(resolve, 50));

  const result = await functionHandler(event, context); // Call the actual function

  const invocationTime = Date.now() - startTime;
  console.log(`[Extension] Invocation completed in: ${invocationTime}ms`);

  // Send invocation metrics to a monitoring service (only logged in this sketch)
  console.log('[Extension] Sending invocation metrics to monitoring service');
  return result;
};
```

In this example:

- The `initialization.js` extension measures the time taken for the extension’s own initialization.

- The `invocation.js` extension measures the total time spent in each invocation, including the time spent in the Lambda function itself and the overhead introduced by the extension.

- This data is sent to a monitoring service (e.g., CloudWatch) for analysis.

By analyzing these metrics, developers can identify performance bottlenecks, such as slow initialization processes or inefficient network calls, and optimize their code accordingly. This level of detailed performance data is particularly valuable for provisioned concurrency functions, as it helps ensure that the provisioned capacity is used efficiently and that the function is delivering the desired performance.

Wrap-Up

In summary, provisioned concurrency offers a powerful tool for optimizing Lambda function performance, particularly in latency-sensitive applications. By understanding its operational nuances, including configuration, monitoring, and cost considerations, developers can leverage this feature to achieve significant improvements in response times and overall application responsiveness. While it introduces additional complexities and cost implications, the benefits, especially in use cases demanding predictable performance, often outweigh the drawbacks.

The judicious application of provisioned concurrency, coupled with best practices in function design and monitoring, is key to unlocking its full potential in a serverless environment.

Q&A

What is the primary advantage of provisioned concurrency?

The primary advantage is the reduction of cold start times, resulting in significantly lower latency for function invocations, especially during periods of high traffic or unpredictable demand.

How does provisioned concurrency impact cost?

Provisioned concurrency incurs additional costs because you are paying for the provisioned instances even when they are idle. However, it can reduce costs in situations where frequent cold starts lead to longer execution times and increased resource consumption.

Can provisioned concurrency be used with all Lambda function types?

Yes, provisioned concurrency can be configured for any Lambda function, as long as it is applied to a published version or an alias rather than `$LATEST`. Its benefits are most pronounced for functions that handle frequent requests and require low-latency responses.

How do I monitor the performance of a Lambda function using provisioned concurrency?

You can monitor performance using CloudWatch metrics such as `ProvisionedConcurrencyInvocations`, `ProvisionedConcurrencySpilloverInvocations`, `ProvisionedConcurrencyUtilization`, and `ConcurrentExecutions` to assess the effectiveness of your provisioned concurrency settings.