Data validation and testing plans are fundamental to ensuring the integrity and reliability of data across applications. The process systematically verifies data quality and accuracy, which sound decision-making, operational efficiency, and regulatory compliance all depend on. Without rigorous validation and testing, data is prone to errors and inconsistencies that lead to misleading insights. This guide covers the core components, methodologies, and best practices for creating and implementing effective data validation and testing plans.

This guide provides a detailed examination of the key elements involved in crafting and executing these plans. It explores the essential techniques, tools, and strategies used to identify, correct, and prevent data quality issues. By understanding these principles, organizations can significantly improve the trustworthiness of their data assets and gain a competitive edge through data-driven insights.

Defining Data Validation and Testing Plans

Data validation and testing plans are fundamental components of robust data management strategies. They are essential for ensuring the integrity, reliability, and usability of data throughout its lifecycle. The implementation of these plans minimizes errors, enhances data quality, and supports informed decision-making.

Core Purpose of a Data Validation and Testing Plan in Data Management

The primary objective of a data validation and testing plan is to establish a systematic approach to verify the accuracy and completeness of data. This encompasses a comprehensive set of procedures and techniques designed to identify and rectify data quality issues. The plan serves as a roadmap for data quality assurance, providing guidelines for data cleansing, error detection, and the overall maintenance of data integrity.

It ultimately supports the creation of trustworthy datasets.

Definition of Data Validation

Data validation is the process of confirming that data meets predefined rules, constraints, and specifications. Its core function is to ensure data accuracy and consistency, thereby safeguarding data quality. Data validation involves various checks and processes, including:

- Format Validation: This involves checking if data adheres to a specified format, such as date formats (YYYY-MM-DD), email addresses, or numerical precision.

- Range Validation: This assesses whether data falls within acceptable minimum and maximum values. For example, a test might validate that a customer’s age is between 0 and 120 years.

- Type Validation: This ensures that data is of the correct data type (e.g., integer, string, date). For instance, a field designated for numerical data should not contain text.

- Consistency Validation: This checks for data consistency across multiple fields or datasets. For example, validating that a customer’s state in their address matches the state code provided.

- Completeness Validation: This ensures that all required fields are populated. It identifies missing values in crucial data points.

Data validation techniques, such as the application of regular expressions or the use of database constraints, are often employed to automate and streamline these checks.

Data validation ensures that data is “fit for purpose” by identifying and preventing errors.
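The checks listed above can be sketched for a single record as follows. This is a minimal illustration, not a reference implementation: the field names (`name`, `email`, `age`, `signup_date`) and the function `validate_customer` are hypothetical, and consistency validation is left out because it depends on reference data.

```python
import re
from datetime import datetime

# Hypothetical schema used only for this illustration.
REQUIRED_FIELDS = ("name", "email", "age", "signup_date")
DATE_SHAPE = re.compile(r"\d{4}-\d{2}-\d{2}")


def validate_customer(record):
    """Return a list of problems found in one record (empty list = valid)."""
    errors = []

    # Completeness validation: every required field must be populated.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            errors.append(f"{field}: missing")

    # Type validation, then range validation (0 to 120 years).
    age = record.get("age")
    if age not in (None, ""):
        # bool is rejected explicitly because it is a subclass of int.
        if isinstance(age, bool) or not isinstance(age, int):
            errors.append("age: not an integer")
        elif not 0 <= age <= 120:
            errors.append("age: out of range 0-120")

    # Format validation: exactly YYYY-MM-DD and a real calendar date.
    signup = record.get("signup_date")
    if signup not in (None, ""):
        try:
            if not DATE_SHAPE.fullmatch(signup):
                raise ValueError("wrong shape")
            datetime.strptime(signup, "%Y-%m-%d")
        except (TypeError, ValueError):
            errors.append("signup_date: not YYYY-MM-DD")

    return errors
```

Returning a list of messages rather than raising on the first failure lets a caller report every problem in a record at once, which suits the error logging and reporting a validation plan usually requires.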

Definition of Data Testing

Data testing is the process of systematically examining data to assess its accuracy, completeness, and reliability within a specific context. It involves the execution of test cases designed to expose data quality issues and verify that data transformations, integrations, and calculations are functioning correctly. Data testing is crucial for ensuring the trustworthiness of data-driven insights and decisions.

Data testing encompasses a variety of methodologies:

- Unit Testing: Testing individual data components or modules in isolation. This involves verifying the functionality of specific data processing units.

- Integration Testing: Testing the interaction between different data components or systems. This checks the flow of data between integrated parts.

- System Testing: Testing the complete data system to verify its functionality and performance. This evaluates the system’s overall data processing capabilities.

- Acceptance Testing: Testing conducted to determine whether the system meets the specified acceptance criteria. This verifies the system’s suitability for its intended purpose.

- Performance Testing: Assessing the system’s performance under various load conditions. This evaluates the system’s ability to handle large volumes of data and user requests.

The selection of specific testing methods depends on the scope and complexity of the data management project. Thorough data testing helps to minimize data-related risks and ensures that the data supports accurate decision-making. For example, in a financial system, data testing is critical to ensure the accuracy of transactions, account balances, and financial reports.

Key Components of a Data Validation Plan

A robust data validation plan is fundamental to ensuring data quality, integrity, and reliability within any data-driven system. It outlines the procedures, methods, and criteria used to verify the accuracy and consistency of data throughout its lifecycle. A well-defined plan minimizes errors, supports informed decision-making, and protects against data-related risks.

Essential Elements of a Data Validation Plan

The essential elements of a data validation plan encompass a range of activities and considerations, all working together to ensure data quality. These elements must be carefully considered and documented to create a comprehensive and effective plan.

- Data Sources and Scope Definition: Identifying all data sources that will be subject to validation is critical. This includes understanding the origin of the data, the format in which it is received, and the expected data types. Defining the scope involves specifying which data elements will be validated and the extent of the validation effort. For example, a plan might specify validation for all customer demographic data but only a subset of transaction data based on business criticality.

- Validation Rules and Criteria: Defining specific rules and criteria is the core of the validation process. These rules specify what constitutes valid data and how it should be checked. This can include range checks, format checks, data type validation, and cross-field validation. For example, a rule might state that a customer’s age must be between 0 and 120 years, or that a date field must conform to the YYYY-MM-DD format.

- Validation Methods and Techniques: The methods used to apply the validation rules must be defined. This includes the tools and technologies employed, such as database constraints, data quality software, or custom scripts. The choice of method depends on factors like the data volume, the complexity of the rules, and the existing infrastructure.

- Error Handling and Reporting: The plan must specify how errors will be handled when invalid data is detected. This includes defining error codes, the process for logging errors, and the procedures for notifying stakeholders. It also includes defining the reporting requirements, such as regular reports on the number and types of errors detected.

- Data Cleansing and Correction Procedures: Procedures for correcting invalid data are a critical part of the plan. This includes identifying the responsible parties for correcting data, the tools and processes they will use, and the workflow for ensuring that corrected data is re-validated. For example, a procedure might involve contacting a customer to verify their address if the address field fails validation.

- Testing and Quality Assurance: A testing phase is essential to ensure that the validation plan functions as expected. This includes unit testing of individual validation rules, integration testing of the validation process, and user acceptance testing to ensure that the system meets the needs of the users.

- Documentation and Version Control: Thorough documentation is essential for maintaining and updating the data validation plan. This includes documenting the validation rules, the methods used, the error handling procedures, and the testing results. Version control ensures that changes to the plan are tracked and managed effectively.

Comparison of Data Validation Techniques

Various data validation techniques can be employed to ensure data quality. The selection of the most appropriate techniques depends on the specific data and the business requirements.

- Range Checks: Range checks verify that a data value falls within a specified minimum and maximum value. This is commonly used for numeric data, such as age, income, or quantities. For instance, a range check might ensure that a product price is within a reasonable range (e.g., $0.01 to $10,000).

Example:

IF (age < 0 OR age > 120) THEN

Flag as invalid;

- Format Checks: Format checks ensure that data conforms to a specific pattern or format. This is frequently used for data such as dates, email addresses, phone numbers, and postal codes. For example, a format check might ensure that a date is in the YYYY-MM-DD format, or that an email address contains the “@” symbol and a valid domain.

Example:

IF (email_address NOT LIKE '%@%.%') THEN

Flag as invalid;

- Data Type Validation: Data type validation verifies that a data value conforms to the expected data type. This includes checking for numeric, text, date, and boolean values. For instance, a data type validation might ensure that a field intended to hold a number does not contain text characters.

Example:

IF (IS_NUMERIC(salary) = FALSE) THEN

Flag as invalid;

- Lookup Validation: Lookup validation verifies that a data value exists in a predefined list or table. This is used to ensure data consistency and accuracy, such as validating a country code against a list of valid country codes. This prevents typos and ensures consistency across datasets.

- Cross-Field Validation: Cross-field validation checks the relationship between two or more data fields. This is often used to ensure data consistency and logical integrity. For instance, it can be used to verify that the total amount of an invoice matches the sum of the individual line items.
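The last two techniques have no pseudocode above, so here is a minimal sketch of both. The country code set is an illustrative subset, and the invoice layout (`total`, `line_items`, `amount`) is an assumption made for the example. Amounts are held as decimal strings so that the sum is compared exactly rather than through binary floating point.

```python
from decimal import Decimal

# Illustrative subset only; a real lookup table would hold the full code list.
VALID_COUNTRY_CODES = {"US", "CA", "GB"}


def country_code_is_valid(code):
    """Lookup validation: the value must exist in the reference set."""
    return code in VALID_COUNTRY_CODES


def invoice_total_is_consistent(invoice):
    """Cross-field validation: the total must equal the sum of line items."""
    line_sum = sum(Decimal(item["amount"]) for item in invoice["line_items"])
    return line_sum == Decimal(invoice["total"])


# Hypothetical invoice used to exercise the cross-field check.
invoice = {
    "total": "30.30",
    "line_items": [{"amount": "10.10"}, {"amount": "20.20"}],
}
```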

Determining the Scope of Data Validation

The scope of data validation should be carefully determined based on data sensitivity and business requirements. This involves assessing the potential impact of data errors and prioritizing validation efforts accordingly.

- Data Sensitivity: The sensitivity of the data is a key factor in determining the scope of validation. Data that is considered highly sensitive, such as financial data or personal health information, requires a more comprehensive validation plan. This is due to the potential for significant financial or reputational damage resulting from data errors. For example, a healthcare provider would implement strict validation rules for patient medical records, including data type checks, range checks, and format checks.

- Business Requirements: Business requirements also influence the scope of validation. The criticality of the data to business operations and decision-making determines the level of validation needed. Data used for critical business processes, such as financial reporting or order fulfillment, requires more stringent validation than data used for less critical purposes.

- Risk Assessment: A risk assessment should be conducted to identify potential data quality risks and their impact. This assessment helps prioritize validation efforts and allocate resources effectively. The assessment considers the likelihood of data errors, the potential impact of those errors, and the cost of implementing validation controls. For example, if a data error could lead to a significant financial loss, a more comprehensive validation plan is warranted.

- Regulatory Compliance: Compliance with industry regulations and legal requirements can also influence the scope of validation. Regulations often specify the data elements that must be validated and the validation methods that must be used. For instance, companies handling personal data must comply with data privacy regulations, which often include specific data validation requirements.

- Cost-Benefit Analysis: A cost-benefit analysis is often performed to determine the optimal level of validation. This involves weighing the cost of implementing and maintaining validation controls against the benefits of improved data quality, such as reduced errors, improved decision-making, and increased customer satisfaction.

Key Components of a Data Testing Plan

A robust data testing plan is crucial for ensuring data quality and reliability. It systematically verifies data integrity, accuracy, and consistency, ultimately supporting informed decision-making and preventing costly errors. This plan outlines the strategies, methodologies, and resources necessary to thoroughly test data across its lifecycle.

Test Cases and Test Data

Test cases and test data form the core of any effective data testing plan. They are designed to validate data against predefined criteria and expected outcomes.

Test cases are specific sets of conditions or variables under which a tester determines whether a system under test satisfies requirements or works correctly. They provide a structured approach to data validation. Each test case should include the following elements:

- Test Case ID: A unique identifier for the test case.

- Test Objective: A clear statement of what the test case aims to verify.

- Test Data: The specific data inputs used for the test.

- Test Steps: The sequence of actions performed to execute the test.

- Expected Result: The anticipated outcome of the test.

- Actual Result: The observed outcome of the test after execution.

- Pass/Fail Status: An indication of whether the test case passed or failed.

Test data, on the other hand, is the specific input used to execute test cases. The quality and representativeness of test data directly impact the effectiveness of the testing process. The data should encompass a variety of scenarios, including valid, invalid, and edge-case inputs.

Importance of Test Data Selection

The selection of appropriate test data is paramount for thorough data verification. It ensures that all aspects of data processing, storage, and retrieval are rigorously evaluated. A well-selected dataset minimizes the risk of undetected errors and maximizes confidence in data quality. Effective test data should exhibit the following characteristics:

- Representativeness: It should reflect the characteristics of the real-world data.

- Completeness: It should cover all data types, formats, and ranges.

- Accuracy: It should be free from errors and inconsistencies.

- Validity: It should adhere to predefined rules and constraints.

- Edge-case coverage: It should include extreme values and boundary conditions to identify potential vulnerabilities.

Selecting a diverse range of test data is essential. This includes positive tests (valid data), negative tests (invalid data), and boundary tests (data at the limits of acceptable ranges). For example, if validating a field for age, positive tests might include ages 25 and 60, negative tests could include -5 and 150, and boundary tests might use 0 and 120.
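The age example can be written down as a small table of test cases that records the ID, test data, expected result, actual result, and pass/fail status for each. This is a sketch under stated assumptions: `age_is_valid` and the `TC-` identifiers are hypothetical, and the values -1 and 121 are added here to probe just outside the boundaries.

```python
def age_is_valid(age):
    """Rule under test: age must be an integer from 0 to 120 inclusive."""
    return isinstance(age, int) and not isinstance(age, bool) and 0 <= age <= 120


# (test case ID, test data, expected result)
TEST_CASES = [
    ("TC-001", 25, True),    # positive test
    ("TC-002", 60, True),    # positive test
    ("TC-003", -5, False),   # negative test
    ("TC-004", 150, False),  # negative test
    ("TC-005", 0, True),     # boundary test: lower limit
    ("TC-006", 120, True),   # boundary test: upper limit
    ("TC-007", -1, False),   # just below the lower limit (added for illustration)
    ("TC-008", 121, False),  # just above the upper limit (added for illustration)
]


def run_test_cases(cases):
    """Execute each case and record expected, actual, and pass/fail status."""
    results = []
    for case_id, data, expected in cases:
        actual = age_is_valid(data)
        status = "PASS" if actual == expected else "FAIL"
        results.append((case_id, expected, actual, status))
    return results


if __name__ == "__main__":
    for row in run_test_cases(TEST_CASES):
        print(row)
```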

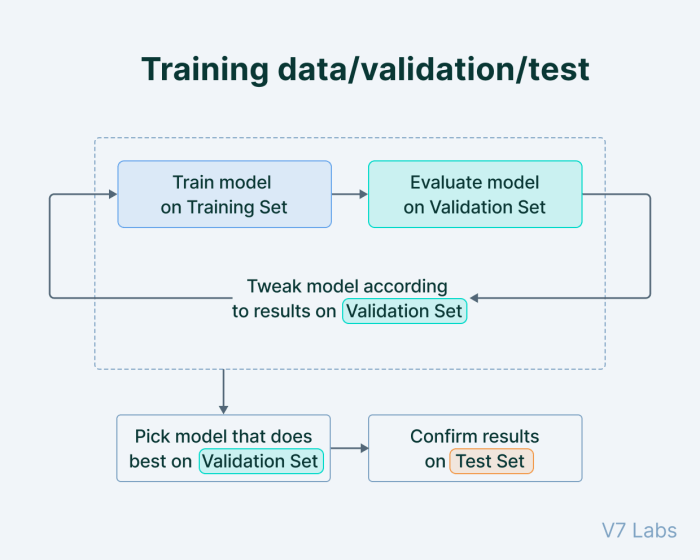

Testing Methodologies

Different testing methodologies are employed at various stages of the data lifecycle. Each methodology focuses on specific aspects of data validation and contributes to the overall quality assurance process.

| Testing Methodology | Goal | Description | Example |
|---|---|---|---|
| Unit Testing | Verify the functionality of individual components or modules. | Focuses on testing the smallest testable parts of an application, isolating them and verifying their behavior. This often involves testing functions, procedures, or classes in isolation. | Testing a single function that calculates the average of a list of numbers. Test cases would include various lists of numbers (positive, negative, zero, and mixed) to ensure the function correctly calculates the average in all scenarios. |
| Integration Testing | Validate the interaction between different components or modules. | Tests the interfaces between integrated modules to detect defects in the interactions between them. This ensures that the modules work together correctly. | Testing the interaction between a data input module and a data processing module. Test cases would verify that the data is correctly passed between the modules and processed as expected, including checking data transformations and calculations. |
| System Testing | Assess the end-to-end functionality of the entire system. | Evaluates the complete system against the specified requirements. This includes testing all integrated components to ensure the system functions as a whole. | Testing a complete data warehousing system. Test cases would verify the data loading, transformation, storage, and reporting functionalities. It ensures that data flows correctly from source systems to the data warehouse and that reports generate accurate information. |
| Acceptance Testing | Confirm that the system meets the user’s requirements and is ready for deployment. | Performed by the end-users or stakeholders to determine if the system meets the business requirements and is acceptable for use. This can include user acceptance testing (UAT) or operational acceptance testing (OAT). | End-users testing a new sales reporting system. They would use the system to generate sales reports and verify that the reports contain the correct data, are easy to understand, and meet their business needs. |
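
The unit testing example from the table, a function that averages a list of numbers, might look like this with Python's built-in `unittest` module. The function `average` and its behavior on an empty list (raising `ValueError`) are assumptions made for this sketch.

```python
import unittest


def average(values):
    """Unit under test: arithmetic mean of a non-empty list of numbers."""
    if not values:
        raise ValueError("average() requires at least one value")
    return sum(values) / len(values)


class AverageTests(unittest.TestCase):
    def test_positive_numbers(self):
        self.assertEqual(average([2, 4, 6]), 4)

    def test_negative_numbers(self):
        self.assertEqual(average([-2, -4]), -3)

    def test_zeros(self):
        self.assertEqual(average([0, 0, 0]), 0)

    def test_mixed_numbers(self):
        self.assertEqual(average([-3, 3]), 0)

    def test_empty_list_is_rejected(self):
        with self.assertRaises(ValueError):
            average([])


if __name__ == "__main__":
    unittest.main()
```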

Data Sources and Validation Requirements

Identifying and validating data sources is crucial for the integrity and reliability of any data validation and testing plan. The quality of the data directly impacts the effectiveness of analysis, decision-making, and operational processes. Rigorous analysis of data sources and the establishment of clear validation requirements are fundamental steps in ensuring data accuracy and consistency.

Identifying and Analyzing Data Sources

A comprehensive approach to identifying and analyzing data sources involves understanding the origin, structure, and characteristics of each source. This process ensures the data validation requirements are tailored to the specific attributes of each source, leading to a more robust and effective validation plan.

- Source Identification: The initial step involves identifying all data sources that contribute to the data ecosystem. This includes databases, APIs, flat files, and any other systems or processes that generate or transmit data. A data inventory should be created, documenting each source’s name, description, and purpose.

- Data Source Profiling: This involves examining the data structure, data types, and data formats within each source. Understanding the schema, including the fields, data types, and relationships between data elements, is critical. Tools such as data profiling software can automate this process, providing insights into data quality metrics like completeness, accuracy, and consistency.

- Data Lineage Analysis: Data lineage traces the data’s journey from its origin to its final destination, identifying all transformations and processing steps along the way. This analysis is essential for understanding how data is modified and how potential errors can propagate. It helps pinpoint the source of data quality issues and allows for targeted validation rules.

- Metadata Collection: Metadata, or data about data, provides valuable context for validation. This includes information about the data source, data definitions, data ownership, and data access policies. Metadata helps in understanding the meaning and usage of data, enabling the development of appropriate validation rules.

- Risk Assessment: Each data source should be assessed for potential risks related to data quality. This involves identifying factors that could impact data accuracy, such as data entry errors, system failures, or data transformation issues. The risk assessment informs the prioritization of validation efforts and the selection of appropriate validation techniques.

Data Validation Rules for Various Data Sources

Data validation rules must be customized based on the specific characteristics of each data source. These rules are designed to detect and prevent data quality issues, ensuring the data conforms to defined standards and business requirements. The examples below demonstrate the application of validation rules across different data sources.

- Databases: Databases typically store structured data, making them suitable for implementing a wide range of validation rules.

    - Data Type Validation: Ensures that data conforms to the defined data types (e.g., numeric, text, date). For instance, a “Salary” field should only contain numeric values, and a “Date of Birth” field should follow a specific date format.

    - Range Validation: Checks if data values fall within a predefined range. For example, a “Quantity” field should have a value greater than zero and less than or equal to a maximum allowable quantity.

    - Constraint Validation: Enforces database constraints such as primary keys, foreign keys, and unique constraints. This ensures data integrity and consistency across related tables.

    - Referential Integrity Checks: Validates that foreign keys reference existing primary keys in related tables. This prevents orphaned records and maintains relationships between data entities.

    - Pattern Matching: Uses regular expressions to validate data formats, such as email addresses, phone numbers, and postal codes.

- APIs: APIs (Application Programming Interfaces) often serve as data sources, transmitting data between systems. Validation in this context is essential for data integrity during data exchange.

    - Schema Validation: Verifies that the data received through an API conforms to a predefined schema (e.g., JSON schema, XML schema). This ensures that the data structure is correct and that all required fields are present.

    - Data Type Validation: Similar to database validation, this checks that the data types in the API response match the expected types.

    - Content Validation: Validates the content of the data, such as checking for specific values or ranges. For example, ensuring that a status code from an API call is one of the expected values.

    - Rate Limiting Checks: Validates that the number of API calls does not exceed the allowed rate limits.

- Flat Files: Flat files, such as CSV or TXT files, require specific validation rules due to their less structured nature.

    - Format Validation: Checks that the file format and data structure are correct (e.g., comma-separated values in a CSV file).

    - Data Type Validation: Verifies that the data types within each field are consistent with the expected types.

    - Delimiter Validation: Ensures that delimiters (e.g., commas, tabs) are correctly used to separate data fields.

    - Record Count Validation: Verifies that the number of records in the file matches the expected number, providing a check for data completeness.
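
As one illustration of the flat-file rules, the sketch below checks a CSV payload for the expected header, a consistent field count per record (a proxy for delimiter problems), and the expected number of records. The function name `validate_csv`, the column names, and the idea that the expected record count arrives from a control total are all assumptions for this example.

```python
import csv
import io


def validate_csv(text, expected_header, expected_records):
    """Return a list of structural problems found in CSV text."""
    errors = []
    rows = list(csv.reader(io.StringIO(text)))

    # Format validation: the first row must be the expected header.
    if not rows or rows[0] != expected_header:
        errors.append("header does not match expected columns")
        return errors

    # Delimiter validation: every record must split into the same
    # number of fields as the header.
    data_rows = rows[1:]
    for number, row in enumerate(data_rows, start=1):
        if len(row) != len(expected_header):
            errors.append(
                f"record {number}: expected {len(expected_header)} fields, "
                f"found {len(row)}"
            )

    # Record count validation: compare against the expected total.
    if len(data_rows) != expected_records:
        errors.append(
            f"expected {expected_records} records, found {len(data_rows)}"
        )
    return errors
```

The same shape of function extends naturally to per-field data type checks once the structural checks pass.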

Documenting Data Validation Rules and Requirements

Effective documentation is critical for managing and maintaining data validation rules and requirements. This documentation serves as a reference for data quality initiatives, enabling consistency and traceability. A well-documented plan also facilitates collaboration among stakeholders.

- Requirements Specification: Define the data validation requirements based on business rules, regulatory compliance, and data quality goals. This includes specifying what data needs to be validated, the validation criteria, and the expected outcomes.

- Validation Rule Definition: Document each validation rule in detail, including its purpose, the data source it applies to, the validation logic, and the error handling procedures. This includes specifying the data fields to be validated, the validation criteria, and the expected outcomes.

- Metadata Integration: Link the validation rules to relevant metadata, such as data definitions, data ownership, and data lineage information. This provides context and helps in understanding the purpose of each rule.

- Documentation Tools: Utilize documentation tools like data dictionaries, data catalogs, or validation rule management systems to store and manage validation rules. These tools often provide features for version control, access control, and reporting.

- Testing Procedures: Include testing procedures in the documentation to ensure the validation rules are working as expected. This includes test cases, test data, and expected results.

- Change Management: Implement a change management process for updating validation rules and requirements. This ensures that any changes are documented, approved, and communicated to all stakeholders.

Example of a validation rule documentation table:

| Rule ID | Data Source | Field | Validation Rule | Error Message | Severity | Action |
|---|---|---|---|---|---|---|
| VR001 | Customers Database | Email Address | Must be a valid email format (using regular expression) | Invalid email format | Critical | Reject record |
| VR002 | Orders Flat File | Order Date | Must be in YYYY-MM-DD format | Invalid date format | Major | Flag for review |

This table format provides a clear, organized structure for documenting validation rules, facilitating easy reference and maintenance. Each row represents a validation rule, and the columns detail crucial information such as the rule’s identifier, the data source, the specific field being validated, the validation criteria, error messages, the severity level, and the recommended action to be taken upon rule violation.

This detailed approach ensures that all validation rules are well-defined, trackable, and consistently applied.
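
Documented rules such as VR001 and VR002 can also be kept in a machine-readable form so that the documentation and the executed checks stay aligned. The following is a rough sketch: the `ValidationRule` class, the record keys `email` and `order_date`, and the simplified patterns are assumptions for illustration rather than a prescribed design.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Simplified patterns for illustration. DATE_RE checks only the shape of the
# value, not whether it is a real calendar date.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
DATE_RE = re.compile(r"\d{4}-\d{2}-\d{2}")


@dataclass(frozen=True)
class ValidationRule:
    rule_id: str
    data_source: str
    field: str
    check: Callable[[str], bool]
    error_message: str
    severity: str
    action: str


RULES = [
    ValidationRule("VR001", "Customers Database", "email",
                   lambda v: EMAIL_RE.fullmatch(v) is not None,
                   "Invalid email format", "Critical", "Reject record"),
    ValidationRule("VR002", "Orders Flat File", "order_date",
                   lambda v: DATE_RE.fullmatch(v) is not None,
                   "Invalid date format", "Major", "Flag for review"),
]


def apply_rules(record, rules):
    """Return (rule ID, message, severity, action) for each violated rule."""
    violations = []
    for rule in rules:
        # Only rules whose field is present in the record are evaluated.
        if rule.field in record and not rule.check(record[rule.field]):
            violations.append(
                (rule.rule_id, rule.error_message, rule.severity, rule.action)
            )
    return violations
```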

Validation Methods and Techniques

Data validation and testing are crucial for ensuring data quality. This section explores various validation methods and techniques, providing insights into their application and effectiveness in different scenarios. The selection of appropriate methods is contingent upon the specific characteristics of the data and the validation requirements.

Data Profiling

Data profiling is a fundamental data validation technique that involves the examination of data to understand its structure, content, and quality. This process provides insights into the characteristics of the data, including data types, value ranges, completeness, and consistency. It is an essential first step in any data validation plan.

- Data Type Analysis: Examining the data types of each field to ensure they align with the expected format. For example, a field designated for numerical data should not contain text.

- Value Range Analysis: Identifying the minimum and maximum values, as well as the distribution of values, within a field. This helps detect outliers or values that fall outside the acceptable range. For instance, a field representing age should have a reasonable range, such as 0 to 120 years.

- Completeness Analysis: Assessing the presence of missing values or null values in each field. High rates of missing data can indicate data entry errors or incomplete data collection.

- Pattern Analysis: Discovering patterns within the data, such as repeating values, frequent combinations, or inconsistencies. This can reveal potential data quality issues or opportunities for data improvement. For example, identifying a large number of duplicate records.

- Frequency Distribution Analysis: Determining the frequency of each unique value in a field. This can help identify common values, outliers, and potential data entry errors. For example, in a ‘gender’ field, a frequency analysis can highlight unusual values.
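
A single-column profile covering several of these analyses can be sketched with the standard library alone. The function name `profile_column` and the treatment of `None` and empty strings as missing are assumptions made for this example.

```python
from collections import Counter


def profile_column(values):
    """Summarize completeness, types, numeric range, and value frequencies."""
    non_null = [v for v in values if v is not None and v != ""]
    numeric = [
        v for v in non_null
        if isinstance(v, (int, float)) and not isinstance(v, bool)
    ]
    return {
        "count": len(values),
        # Completeness analysis
        "missing": len(values) - len(non_null),
        "completeness": len(non_null) / len(values) if values else 0.0,
        # Data type analysis: how many values of each Python type
        "types": Counter(type(v).__name__ for v in non_null),
        # Value range analysis (numeric values only)
        "min": min(numeric) if numeric else None,
        "max": max(numeric) if numeric else None,
        # Frequency distribution analysis
        "frequencies": Counter(non_null),
    }


# Hypothetical 'age' column with a missing value, an outlier, and stray text.
ages = [34, 51, None, 250, "n/a", 34]
profile = profile_column(ages)
```

In this sample, the profile surfaces a maximum of 250 and one string value in a numeric column, both of which would prompt a range rule and a type rule in the validation plan.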

Data Cleansing

Data cleansing, also known as data scrubbing, is the process of identifying and correcting errors, inconsistencies, and inaccuracies in data. This process improves data quality and ensures that the data is fit for its intended use. Data cleansing often follows data profiling, which helps identify the areas that need correction.

- Handling Missing Values: Addressing missing data through various techniques, such as imputation (replacing missing values with estimated values) or deletion (removing records with missing values). The choice of technique depends on the extent of missing data and its impact on the analysis.

- Correcting Invalid Data: Identifying and correcting values that are outside the acceptable range or that violate predefined rules. This can involve correcting spelling errors, standardizing formats, or removing invalid characters.

- Removing Duplicate Records: Identifying and removing duplicate records to ensure data integrity. This can involve comparing records based on key fields and identifying records that have identical or nearly identical values.

- Standardizing Data Formats: Ensuring consistency in data formats, such as date formats, currency formats, and address formats. This facilitates data integration and analysis. For example, converting all dates to a consistent format (YYYY-MM-DD).

- Data Transformation: Converting data from one format or structure to another. This includes tasks like unit conversions (e.g., converting Celsius to Fahrenheit) and data aggregation (e.g., summing sales data by month).
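
Three of the cleansing steps above (removing duplicates, standardizing date formats, and imputing missing values) are sketched below. The record layout, the list of accepted input date formats, and the choice of median imputation are assumptions for illustration; in particular, treating slash-separated dates as day/month/year is a guess that must be confirmed against the real source.

```python
from datetime import datetime
from statistics import median

# Assumed input formats, tried in order. Day-first for slash dates is an
# assumption, not something the data itself can confirm.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def standardize_date(text):
    """Convert a date string to YYYY-MM-DD, or None if no format matches."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    return None


def cleanse(records):
    """Drop duplicate customer IDs, standardize dates, impute missing ages."""
    seen = set()
    cleaned = []
    for rec in records:
        if rec["customer_id"] in seen:
            continue  # keep only the first record for each key
        seen.add(rec["customer_id"])
        cleaned.append({**rec, "signup_date": standardize_date(rec["signup_date"])})

    # Imputation: replace missing ages with the median of the known ages.
    known_ages = [r["age"] for r in cleaned if r["age"] is not None]
    fill = median(known_ages) if known_ages else None
    for r in cleaned:
        if r["age"] is None:
            r["age"] = fill
    return cleaned
```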

Data Transformation

Data transformation is the process of converting data from one format or structure to another. This is often necessary to make data compatible with different systems or to prepare it for analysis. This process may involve various operations, including data type conversions, data aggregation, and data normalization.

- Data Type Conversion: Changing the data type of a field to match the expected format. For example, converting a text field containing numerical values to a numeric data type.

- Data Aggregation: Summarizing data by grouping it based on certain criteria and applying aggregate functions. This can include calculating sums, averages, counts, and other statistical measures. For example, calculating the total sales for each product category.

- Data Normalization: Scaling numerical data to a specific range, such as 0 to 1, to eliminate the effects of different scales. This is often used in machine learning and data analysis. Common techniques include min-max scaling and z-score normalization.

- Data Encoding: Converting categorical data into a numerical format for use in analysis. Common techniques include one-hot encoding and label encoding. For example, converting a “color” field with values like “red,” “green,” and “blue” into numerical representations.

- Data Filtering: Selecting specific subsets of data based on certain criteria. This can be used to remove unwanted data or to focus on specific areas of interest. For example, filtering sales data to only include sales from a specific region.
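
The normalization and encoding techniques named above reduce to short formulas: min-max scaling computes (x - min) / (max - min), and z-score normalization computes (x - mean) / standard deviation. The sketch below uses the population standard deviation and orders one-hot columns alphabetically; both are choices made for this example, and the function names are hypothetical.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Scale values to the range 0 to 1; constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Center on the mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def one_hot(values):
    """Encode each category as a 0/1 vector, one column per category."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


scaled = min_max_scale([10, 20, 30])
categories, encoded = one_hot(["red", "green", "blue", "red"])
```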

Regular Expressions

Regular expressions (regex) are powerful tools for pattern matching in text data. They provide a flexible way to validate data formats and identify specific patterns. Regex can be used to validate email addresses, phone numbers, and other data fields that have specific formats.

Regular expressions are sequences of characters that define a search pattern. They use special characters and operators to specify the pattern to be matched.

Example: A regular expression to validate an email address could be: ^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$. This regex checks the overall shape of an email address: a local part, an “@” symbol, a domain, and a top-level domain of at least two letters.
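
Applied in code, the email pattern might be used as in the sketch below, which assumes the intended quantifier on the final character class is `{2,}` (two or more letters). The helper name `is_valid_email` is hypothetical. A pattern like this checks shape only; it cannot confirm that the mailbox exists.

```python
import re

# fullmatch() anchors the pattern at both ends, so ^ and $ are not needed.
EMAIL_PATTERN = re.compile(r"[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}")


def is_valid_email(value):
    """Return True if value is a string with a plausible email shape."""
    return isinstance(value, str) and EMAIL_PATTERN.fullmatch(value) is not None
```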

Lookup Tables

Lookup tables, also known as reference tables, are used to validate data against a predefined set of valid values. They are particularly useful for validating categorical data, such as codes, categories, or classifications.

Example: A lookup table for validating country codes could contain a list of valid ISO country codes (e.g., US, CA, GB). Any data entry with a country code not present in the lookup table would be flagged as invalid.

Checksums

Checksums are used to verify the integrity of data by calculating a numerical value based on the data’s content. This value is then compared to a previously calculated checksum to detect any changes or errors in the data.

A checksum is a value derived from a block of data that can be used to detect errors introduced during storage or transmission.

Example: In data transmission, a checksum is often calculated at the sender’s end and transmitted along with the data. The receiver recalculates the checksum and compares it to the received checksum. If the checksums do not match, it indicates that the data has been corrupted during transmission.
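The sender and receiver steps can be sketched with Python's `hashlib`. SHA-256 is used here as one possible choice of checksum function; the payload bytes are made up for illustration.

```python
import hashlib


def sha256_checksum(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected_checksum: str) -> bool:
    """Recompute the checksum and compare it with the one that was sent."""
    return sha256_checksum(data) == expected_checksum


# Sender side: compute the checksum and transmit it with the payload.
payload = b"order_id,amount\n1001,25.00\n"
sent_checksum = sha256_checksum(payload)

# Receiver side: one intact copy and one copy with a single changed digit.
intact_copy = b"order_id,amount\n1001,25.00\n"
corrupted_copy = b"order_id,amount\n1001,26.00\n"

intact_ok = verify(intact_copy, sent_checksum)
corrupted_ok = verify(corrupted_copy, sent_checksum)
```

A mismatch shows that the data changed but not where, so a failed check is normally handled by requesting the data again or restoring it from a known good copy.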

Choosing the Appropriate Validation Methods

The choice of the most appropriate validation methods depends on the characteristics of the data and the specific validation requirements. A combination of methods is often used to achieve comprehensive data validation.

- Data Format and Structure: If the data has a specific format, such as an email address or a phone number, regular expressions can be used to validate the format.

- Categorical Data: For data with a limited set of valid values, lookup tables are effective.

- Data Integrity: Checksums are used to ensure data integrity, particularly when data is stored or transmitted.

- Data Volume and Complexity: For large and complex datasets, a combination of methods, including data profiling and data cleansing, may be required.

- Data Type: Data type analysis, a part of data profiling, should be used to check that each field holds the expected data type.

Testing Strategies and Procedures

Data testing is a crucial component of any data validation plan. Employing well-defined testing strategies and procedures ensures data accuracy, reliability, and integrity. These strategies provide a systematic approach to identify and rectify data quality issues before they impact downstream processes or decision-making. This section outlines different testing strategies, the process of creating test cases, and best practices for execution and documentation.

Different Data Testing Strategies

Several data testing strategies can be employed, each with its strengths and weaknesses. The choice of strategy depends on the specific data characteristics, validation requirements, and project goals.

- Black-Box Testing: This testing approach treats the data system as a “black box,” focusing on inputs and outputs without knowledge of the internal workings. Testers create test cases based on specifications and requirements, validating that the system produces the correct outputs for given inputs. Black-box testing is beneficial for verifying functional requirements and user acceptance.

- Example: Testing an address validation service. The tester provides an address as input and checks if the output is a valid, standardized address, without knowing how the validation process occurs internally.

- White-Box Testing: In contrast to black-box testing, white-box testing involves knowledge of the internal structure and code of the data system. Testers use this knowledge to design test cases that cover specific code paths, data transformations, and internal logic. White-box testing is valuable for identifying code-level errors, performance bottlenecks, and ensuring code coverage.

- Example: Testing a data transformation script. The tester examines the script’s code to create test cases that exercise all branches of the logic, ensuring that all data transformations are performed correctly.

- Gray-Box Testing: This strategy combines elements of both black-box and white-box testing. Testers have partial knowledge of the internal structure of the data system, such as database schemas or data flow diagrams, which they use to design test cases. Gray-box testing is often used when the system is complex, and a complete understanding of the internal workings is not feasible.

- Example: Testing a data warehouse. The tester has access to the data warehouse schema and knows the data flow from source systems to the warehouse. They use this knowledge to design test cases that verify data integration, transformation, and loading processes.

Step-by-Step Procedure for Creating Test Cases and Test Data

Creating effective test cases and test data is fundamental to a successful data testing process. This procedure provides a structured approach for designing and implementing tests.

- Define Test Objectives: Clearly articulate the goals of the test. Determine what aspects of the data or system need to be validated. For example, data accuracy, completeness, consistency, and timeliness.

- Analyze Requirements: Review data requirements, specifications, and business rules. Understand the expected data characteristics, validation rules, and acceptable data ranges.

- Identify Test Scenarios: Based on the test objectives and requirements, identify various scenarios to be tested. Each scenario should represent a specific use case or data validation rule.

- Design Test Cases: For each test scenario, design test cases that specify the input data, expected output, and the steps to execute the test. Ensure each test case has a unique identifier, description, and priority.

- Create Test Data: Develop or obtain the necessary test data to execute the test cases. This data should cover a range of valid and invalid inputs, including boundary values and edge cases.

- Document Test Cases: Create detailed documentation for each test case, including the test case identifier, description, input data, expected output, actual output, pass/fail status, and any relevant comments.

- Review and Approve Test Cases: Have the test cases and test data reviewed and approved by relevant stakeholders, such as data analysts, business users, or subject matter experts.
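As a rough illustration of the design and test data steps, the sketch below defines test cases for a hypothetical rule that an age must be an integer from 0 to 120. The rule, the `ValidationCase` structure, and the identifiers are assumptions chosen for the example; the test data deliberately covers boundary values, a wrong type, and a missing value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidationCase:
    case_id: str        # unique identifier
    description: str    # what the case is meant to prove
    input_value: object
    expected: bool      # expected result of the rule


def is_valid_age(value) -> bool:
    """Hypothetical rule under test: an integer from 0 to 120 inclusive."""
    if isinstance(value, bool) or not isinstance(value, int):
        return False
    return 0 <= value <= 120


CASES = [
    ValidationCase("TC-001", "lower boundary", 0, True),
    ValidationCase("TC-002", "upper boundary", 120, True),
    ValidationCase("TC-003", "just below lower boundary", -1, False),
    ValidationCase("TC-004", "just above upper boundary", 121, False),
    ValidationCase("TC-005", "typical valid value", 35, True),
    ValidationCase("TC-006", "wrong type (text)", "35", False),
    ValidationCase("TC-007", "missing value", None, False),
]

# Map each case identifier to whether the actual result matched the expectation.
results = {
    case.case_id: is_valid_age(case.input_value) == case.expected
    for case in CASES
}
```

Keeping the cases as data rather than as separate functions makes them easy to review with stakeholders and to extend when a business rule changes.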

Best Practices for Executing Data Tests and Documenting Test Results

Effective test execution and comprehensive documentation are essential for ensuring the reliability and maintainability of the data testing process.

- Establish a Test Environment: Set up a dedicated test environment that mirrors the production environment as closely as possible. This includes data sources, systems, and configurations.

- Execute Test Cases: Execute the test cases according to the defined procedures. Record the actual output and compare it with the expected output.

- Log Test Results: Document the results of each test case, including the test case identifier, execution date, tester, input data, actual output, pass/fail status, and any relevant comments or observations.

- Identify and Report Defects: If a test case fails, identify the root cause of the failure and report it as a defect. Provide detailed information about the defect, including the test case identifier, steps to reproduce the defect, and any relevant error messages.

- Track Defect Resolution: Monitor the progress of defect resolution, ensuring that defects are addressed promptly and effectively.

- Regression Testing: After fixing defects or making changes to the data system, perform regression testing to ensure that the changes have not introduced any new issues or broken existing functionality.

- Document Test Results and Metrics: Maintain a central repository for test results, including test execution reports, defect reports, and test metrics. Regularly analyze the test results to identify trends and areas for improvement.

- Automate Testing Where Possible: Automate repetitive test cases to improve efficiency and reduce the risk of human error. Use testing tools to automate data validation and testing processes.

- Use Version Control: Utilize version control systems for test cases, test data, and test scripts to manage changes, track revisions, and facilitate collaboration.

- Regularly Review and Update Tests: Review and update test cases and test data periodically to reflect changes in data requirements, business rules, and system functionality.
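Executing test cases and logging the results can be sketched as a small runner. The function under test (`normalize_phone`), the case identifiers, and the tester name are hypothetical; the log is written as CSV to an in-memory buffer so the example has no side effects.

```python
import csv
import io
from datetime import date


def normalize_phone(raw: str) -> str:
    """Hypothetical function under test: keep only the digits."""
    return "".join(ch for ch in raw if ch.isdigit())


# (case identifier, input data, expected output)
cases = [
    ("TC-101", "(555) 010-1234", "5550101234"),
    ("TC-102", "555.010.1234", "5550101234"),
    ("TC-103", "", ""),
]


def run_cases(cases, tester="qa-analyst"):
    """Execute each case and record the fields a test log typically needs."""
    results = []
    for case_id, input_data, expected in cases:
        actual = normalize_phone(input_data)
        results.append({
            "case_id": case_id,
            "executed_on": date.today().isoformat(),
            "tester": tester,
            "input": input_data,
            "expected": expected,
            "actual": actual,
            "status": "PASS" if actual == expected else "FAIL",
        })
    return results


def to_csv(results) -> str:
    """Serialize the result records as CSV text for a central repository."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(results[0].keys()))
    writer.writeheader()
    writer.writerows(results)
    return buffer.getvalue()


results = run_cases(cases)
report = to_csv(results)
```

Because the runner and the cases live in plain files, both can be kept under version control and rerun unchanged for regression testing.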

Tools and Technologies for Validation and Testing

The selection of appropriate tools and technologies is crucial for the effective implementation of data validation and testing plans. These tools automate processes, improve accuracy, and enhance the efficiency of data quality assurance. The choice of tool depends on factors such as the data source, the complexity of the validation rules, the testing requirements, and the available budget.

Popular Data Validation and Testing Tools

A variety of tools cater to different data validation and testing needs, ranging from open-source solutions to commercial software packages. Understanding the capabilities of these tools is essential for selecting the right one for a given project.

- Data Quality Tools: These tools are designed specifically for data profiling, cleansing, and validation. They often offer features for rule definition, data monitoring, and reporting. Examples include Informatica Data Quality, IBM InfoSphere QualityStage, and Trifacta Wrangler.

- Testing Frameworks: These frameworks provide a structured approach to testing, including test case management, execution, and reporting. They support various testing methodologies, such as unit testing, integration testing, and system testing. Examples include JUnit, Selenium, and TestNG.

- Database Management Systems (DBMS): Many DBMSs offer built-in features for data validation, such as constraints and triggers. These features enforce data integrity at the database level. Examples include Oracle, MySQL, and PostgreSQL.

- Scripting Languages: Languages like Python and R are used extensively for data validation and testing. They provide flexibility in defining custom validation rules and automating testing processes. Libraries such as Pandas and NumPy in Python are particularly useful for data manipulation and analysis.

- Data Profiling Tools: These tools analyze data to identify patterns, anomalies, and data quality issues. They provide insights into data characteristics, such as data types, distributions, and missing values. Examples include Ataccama ONE and Datawatch Monarch.

Examples of Tool Usage for Validation and Testing

Specific tools are utilized to implement validation rules and execute tests. These examples illustrate how these tools can be applied in practice.

- Using SQL Constraints in PostgreSQL: PostgreSQL, a robust open-source relational database, employs SQL constraints to enforce data integrity. For instance, a `NOT NULL` constraint ensures that a column cannot contain null values. A `CHECK` constraint validates data based on a specified condition. For example, the following SQL statement creates a table named `employees` and enforces a `CHECK` constraint on the `salary` column to ensure it is greater than zero:

    CREATE TABLE employees (
        employee_id SERIAL PRIMARY KEY,
        first_name VARCHAR(50),
        last_name VARCHAR(50),
        salary DECIMAL(10, 2) CHECK (salary > 0)
    );

This constraint prevents the insertion of invalid salary values.
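The effect of a `CHECK` constraint can be tried without a database server. The sketch below swaps in SQLite, through Python's built-in `sqlite3` module, in place of PostgreSQL, so the column types differ slightly from the statement above; the inserted names and salaries are made up.

```python
import sqlite3

# In-memory SQLite database used as a stand-in for PostgreSQL.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE employees (
        employee_id INTEGER PRIMARY KEY,
        first_name TEXT,
        last_name TEXT,
        salary NUMERIC CHECK (salary > 0)
    )
    """
)

insert_sql = "INSERT INTO employees (first_name, last_name, salary) VALUES (?, ?, ?)"

# A positive salary satisfies the constraint.
conn.execute(insert_sql, ("Ada", "Lovelace", 5000))

# A negative salary violates the constraint and is rejected by the database.
try:
    conn.execute(insert_sql, ("Bob", "Smith", -10))
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

row_count = conn.execute("SELECT COUNT(*) FROM employees").fetchone()[0]
conn.close()
```

Note that a `NULL` salary would still pass the `CHECK`, because the condition evaluates to unknown rather than false; adding `NOT NULL` to the column closes that gap.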

- Implementing Data Validation with Python and Pandas: Python, combined with the Pandas library, provides powerful capabilities for data validation. A data validation script might read data from a CSV file, check for missing values, and validate data types. For example:

    import pandas as pd

    # Read data from CSV
    df = pd.read_csv('data.csv')

    # Check for missing values in every column
    missing_values = df.isnull().sum()
    print("Missing Values:\n", missing_values)

    # Show the data type inferred for each column
    print("\nData Types:\n", df.dtypes)

    # Validate a specific column (e.g., 'age'): convert to numeric and
    # replace values that cannot be parsed with NaN
    df['age'] = pd.to_numeric(df['age'], errors='coerce')

    # Count NaN ages (this includes values that were already missing)
    invalid_age_count = df['age'].isnull().sum()
    print(f"\nInvalid age count: {invalid_age_count}")

This script reads data, checks for missing values, and verifies data types, providing a basic example of data validation in Python.

- Automated Testing with Selenium: Selenium is a popular tool for automating web browser testing. It is used to validate the functionality of web applications. A typical Selenium test involves writing scripts that simulate user interactions with a web page. For example, a test might involve navigating to a website, filling out a form, and submitting it. Selenium then verifies the results of the submission.

Comparison of Data Validation Tools

The following table compares several popular data validation tools based on key features. The tools are evaluated based on their capabilities to address various data quality needs.

| Tool | Key Features | Strengths | Limitations | Use Cases |
|---|---|---|---|---|
| Informatica Data Quality | Data profiling, data cleansing, data validation, data masking, real-time data quality monitoring. | Comprehensive data quality capabilities, strong integration with other Informatica products, robust rule definition and execution. | Can be expensive, may require significant setup and configuration. | Large enterprises needing a comprehensive data quality solution. |
| Trifacta Wrangler | Data wrangling, data profiling, data cleansing, data transformation, interactive data exploration. | User-friendly interface, good for data preparation, supports a wide range of data sources. | May not be suitable for very complex validation rules, less emphasis on real-time monitoring. | Data scientists and analysts preparing data for analysis. |
| IBM InfoSphere QualityStage | Data profiling, data cleansing, data validation, data matching, data enrichment. | Scalable, integrates well with other IBM products, supports complex data quality rules. | Can be complex to configure and maintain, licensing costs. | Organizations with complex data integration needs. |
| Pandas (Python Library) | Data manipulation, data cleaning, data transformation, data validation (through custom scripts). | Flexible, open-source, supports a wide range of data formats, good for ad-hoc analysis. | Requires coding skills, may not be suitable for large datasets without optimization. | Data scientists and analysts working with structured data. |
| SQL (Database Constraints) | Data validation through constraints (e.g., NOT NULL, CHECK, UNIQUE, FOREIGN KEY). | Enforces data integrity at the database level, efficient for data storage and retrieval. | Limited to database-specific data validation, may not handle complex validation rules. | Database administrators and developers. |

Data Quality Metrics and Reporting

Data quality metrics are crucial for evaluating the success of data validation and testing plans. They provide quantifiable measures to assess the degree to which data meets predefined quality standards, enabling informed decision-making and continuous improvement of data management processes. Without these metrics, it is challenging to gauge the effectiveness of validation efforts and to identify areas that require attention.

Importance of Data Quality Metrics

Data quality metrics are essential for ensuring the reliability and trustworthiness of data. They provide a structured way to measure and monitor various aspects of data quality, allowing organizations to proactively address data issues.

- Monitoring Data Quality: Metrics enable continuous monitoring of data quality over time. This helps in identifying trends, anomalies, and potential problems before they significantly impact business operations.

- Quantifying Data Quality: Metrics provide quantifiable measures, allowing for objective assessments of data quality. This contrasts with subjective evaluations and facilitates more accurate comparisons.

- Facilitating Decision-Making: High-quality data supports better decision-making. Metrics provide insights into the quality of data used for analysis and reporting, informing strategic decisions.

- Driving Continuous Improvement: By tracking metrics, organizations can identify areas for improvement in data validation and testing processes. This iterative approach leads to enhanced data quality.

- Ensuring Compliance: In regulated industries, data quality metrics are vital for demonstrating compliance with data governance policies and regulatory requirements.

Data Quality Metrics

Several data quality metrics can be employed to assess the quality of data. These metrics provide a comprehensive view of different data aspects.

- Accuracy: Accuracy measures the degree to which data correctly reflects the real-world values.

- Completeness: Completeness assesses the extent to which all required data elements are present.

- Consistency: Consistency evaluates the degree to which data is consistent across different data sources or within a single dataset.

- Timeliness: Timeliness refers to the availability of data within a required timeframe.

- Validity: Validity ensures that data conforms to defined business rules and constraints.

- Uniqueness: Uniqueness guarantees that data elements are not duplicated within a dataset.

Accuracy = (Number of Correct Data Values / Total Number of Data Values) × 100%

For example, in a customer database, accuracy would be reflected in the correctness of customer names, addresses, and contact information. In a medical context, this could be the correctness of patient diagnoses or lab results. A high accuracy rate ensures that the data accurately represents the facts.

Completeness = (Number of Non-Missing Values / Total Number of Expected Values) × 100%

For instance, in a sales database, completeness refers to whether all required sales figures, customer details, and transaction dates are present. A low completeness rate could indicate missing data, which can skew analyses. Consider an e-commerce platform where the absence of product descriptions (incomplete data) could lead to fewer sales.

Consistency is assessed through comparison of related data elements, often involving cross-validation and referential integrity checks.

An example is ensuring that customer addresses in a billing system match those in a shipping system. In financial reporting, consistency involves adhering to accounting principles across financial statements. Inconsistencies can lead to errors and unreliable results.

Timeliness is measured by the data’s age and the frequency of updates, often expressed as the delay between data creation and availability.

For example, in a stock market application, the timeliness of data is critical; real-time stock prices are essential. In supply chain management, timely inventory updates ensure efficient operations. Delays can lead to missed opportunities or incorrect decisions.

Validity is often assessed using data validation rules, such as data type checks, range checks, and format checks.

For example, ensuring that a date field contains a valid date format or that an age field contains a numeric value within a reasonable range. In a manufacturing setting, validity could involve verifying that product codes are in the correct format.

Uniqueness is measured by checking for duplicate records, often using unique key constraints.

For instance, ensuring that each customer has a unique identifier in a customer database. In an employee database, each employee should have a unique employee ID. Duplicate records can lead to skewed analyses and incorrect reporting.
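Several of these metrics can be computed directly from a dataset. The sketch below covers completeness, validity, and uniqueness as percentages for a small, made-up set of customer records; the field names and the age rule are assumptions. Accuracy is left out because it needs a trusted reference to compare against.

```python
# Hypothetical customer records with deliberate quality problems.
records = [
    {"customer_id": 1, "email": "a@example.com", "age": 34},
    {"customer_id": 2, "email": None, "age": 51},
    {"customer_id": 2, "email": "c@example.com", "age": 150},
    {"customer_id": 4, "email": "", "age": 28},
    {"customer_id": 5, "email": "e@example.com", "age": 40},
]


def completeness(rows, field):
    """Percentage of rows where the field is present and not empty."""
    present = sum(1 for row in rows if row.get(field) not in (None, ""))
    return 100 * present / len(rows)


def validity(rows, field, rule):
    """Percentage of non-missing values that satisfy the rule."""
    values = [row[field] for row in rows if row.get(field) is not None]
    return 100 * sum(1 for value in values if rule(value)) / len(values)


def uniqueness(rows, field):
    """Percentage of values that are distinct within the field."""
    values = [row[field] for row in rows]
    return 100 * len(set(values)) / len(values)


metrics = {
    "email_completeness": completeness(records, "email"),
    "age_validity": validity(records, "age", lambda age: 0 <= age <= 120),
    "customer_id_uniqueness": uniqueness(records, "customer_id"),
}
```

With these records, two of five emails are missing, one age falls outside the range, and one identifier is repeated, so the three metrics come out at 60%, 80%, and 80%.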

Data Quality Report Format

Data quality reports should provide a clear and concise overview of the data quality metrics. These reports should include visualizations to facilitate understanding and analysis.

The report should be structured to provide actionable insights.

Report Components:

- Executive Summary: A brief overview of the data quality performance, highlighting key findings and any significant issues.

- Metrics Overview: A table summarizing the values for each data quality metric.

- Visualizations:

- Bar Charts: Displaying the percentage of data that meets each quality metric. For instance, a bar chart could show the completeness percentage for different data fields.

- Line Charts: Tracking the performance of metrics over time, such as the accuracy rate over several months.

- Pie Charts: Representing the distribution of data quality issues, such as the proportion of records with missing values.

- Detailed Analysis: An in-depth examination of each metric, including specific examples of data quality issues and their potential impact.

- Recommendations: Suggested actions to improve data quality, such as enhancements to validation rules or changes to data entry procedures.

- Appendix: Supporting documentation, such as data dictionaries and validation rule definitions.

Example Table: Data Quality Metrics Summary

| Metric | Description | Target | Current Value | Trend | Comments |
|---|---|---|---|---|---|
| Accuracy | Percentage of correct data values | 98% | 95% | Decreasing | Investigate recent data entry errors. |
| Completeness | Percentage of complete data | 99% | 97% | Stable | Monitor for missing values in key fields. |
| Consistency | Percentage of consistent data | 98% | 96% | Decreasing | Address discrepancies between systems. |
| Timeliness | Data availability within timeframe | 99% | 98% | Stable | Ensure timely data updates. |

Illustration: Bar Chart Example

A bar chart comparing the percentage of accuracy, completeness, and consistency in a data set. The x-axis would display the data quality metrics (Accuracy, Completeness, Consistency), and the y-axis would display the percentage values (e.g., 95%, 97%, 96%). Each metric would have its corresponding bar, with the height of the bar representing the percentage achieved for that metric.

Data Validation and Testing in the Data Lifecycle

Data validation and testing are not isolated activities; rather, they are integral components of the entire data lifecycle, spanning from data ingestion to archival. Integrating these practices at each stage ensures data quality, reliability, and trustworthiness, mitigating potential errors and inconsistencies that could propagate through the system. A comprehensive approach to validation and testing across the data lifecycle is critical for maintaining data integrity and supporting sound decision-making.

Data Validation and Testing During Data Ingestion

Data ingestion is the process of importing data from various sources into a data system. Effective data validation and testing at this stage are crucial for preventing corrupted or incorrect data from entering the system.

- Source Data Assessment: Before ingestion, the characteristics of the source data must be understood. This includes identifying the data format (e.g., CSV, JSON, relational databases), data types, expected ranges, and potential inconsistencies. This initial assessment informs the design of validation rules.

- Data Profiling: Performing data profiling on the source data provides a statistical overview of the data, including data types, value distributions, and missing values. This analysis helps identify potential data quality issues early on.

- Data Validation Rules: Implement specific validation rules to check for data integrity. Examples include:

- Format Validation: Ensuring that data conforms to the expected format (e.g., date formats, email addresses, phone numbers).

- Type Validation: Verifying that data is of the correct data type (e.g., numeric, text, boolean).

- Range Validation: Checking that data values fall within acceptable ranges (e.g., age between 0 and 120).

- Completeness Validation: Ensuring that required fields are not missing.

- Uniqueness Validation: Checking for duplicate values in fields where uniqueness is required (e.g., primary keys).

- Error Handling and Logging: Establish a robust error-handling mechanism to manage data validation failures. This includes logging errors for auditing and troubleshooting, as well as defining strategies for handling invalid data (e.g., rejecting, correcting, or flagging).

- Automated Testing: Develop automated tests to verify the data ingestion process itself. These tests should check the data loading process, including data transformation, and the application of validation rules.
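The validation rules and error handling described above might be combined at ingestion roughly as follows. The record layout (`id`, `email`, `age`, `signup_date`), the error labels, and the choice to reject invalid records rather than correct them are all assumptions made for this sketch.

```python
import re
from datetime import datetime

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[a-zA-Z]{2,}$")
REQUIRED_FIELDS = ("id", "email", "age", "signup_date")


def validate_record(record, seen_ids):
    """Return a list of rule violations; an empty list means the record is valid."""
    # Completeness: stop early if any required field is missing.
    errors = [f"missing:{f}" for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    if errors:
        return errors
    # Type, then range, for the age field.
    if not isinstance(record["age"], int):
        errors.append("type:age")
    elif not 0 <= record["age"] <= 120:
        errors.append("range:age")
    # Format checks for email and date.
    if not EMAIL_RE.match(record["email"]):
        errors.append("format:email")
    try:
        datetime.strptime(record["signup_date"], "%Y-%m-%d")
    except (ValueError, TypeError):
        errors.append("format:signup_date")
    # Uniqueness against identifiers already accepted in this batch.
    if record["id"] in seen_ids:
        errors.append("duplicate:id")
    return errors


def ingest(records):
    """Split a batch into accepted records and rejected records with reasons."""
    accepted, rejected, seen_ids = [], [], set()
    for record in records:
        errors = validate_record(record, seen_ids)
        if errors:
            rejected.append({"record": record, "errors": errors})
        else:
            seen_ids.add(record["id"])
            accepted.append(record)
    return accepted, rejected


batch = [
    {"id": 1, "email": "a@example.com", "age": 30, "signup_date": "2024-01-15"},
    {"id": 1, "email": "b@example.com", "age": 41, "signup_date": "2024-02-01"},
    {"id": 3, "email": "not-an-email", "age": 130, "signup_date": "2024-13-01"},
    {"id": 4, "email": "", "age": 22, "signup_date": "2024-03-09"},
]
accepted, rejected = ingest(batch)
```

Keeping the reasons alongside each rejected record gives the audit trail that the error handling step calls for and makes it possible to correct and resubmit records later.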

Data Validation and Testing During Data Processing

Data processing involves transforming and manipulating data to make it suitable for analysis and reporting. Validation and testing at this stage focus on ensuring that the transformations are accurate and that the resulting data maintains its integrity.

- Transformation Validation: Verify the correctness of data transformations. This involves testing the logic of data transformations, such as data cleaning, data enrichment, and data aggregation.

- Data Consistency Checks: Ensure that data remains consistent after processing. This involves checking for data inconsistencies across different datasets or tables.

- Data Quality Monitoring: Implement continuous monitoring of data quality metrics to detect any degradation in data quality during processing.

- Unit Testing: Perform unit tests on individual data processing components (e.g., data transformation functions, data aggregation scripts). These tests isolate and validate specific parts of the processing pipeline.

- Integration Testing: Conduct integration tests to verify the interactions between different data processing components. This ensures that data flows correctly through the entire processing pipeline.

- Example: Consider a data pipeline that transforms customer addresses. Validation at this stage would involve verifying that the transformed addresses are correctly formatted and that they match the original addresses after the transformation. If a postal code transformation is performed, the validation should check that the new postal code is accurate based on the original address information.
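A transformation check for the address example could look like the sketch below. The `standardize_address` function is a hypothetical transformation (trim, collapse whitespace, uppercase), and the three checks are generic properties rather than a complete verification of address accuracy.

```python
import re


def standardize_address(raw: str) -> str:
    """Hypothetical transformation: trim, collapse whitespace, and uppercase."""
    return re.sub(r"\s+", " ", raw).strip().upper()


def check_transformation(source, transformed):
    """Compare the output with the source using simple, generic properties."""
    return {
        # Every input row must produce exactly one output row.
        "row_count_preserved": len(source) == len(transformed),
        # A non-blank input must never become an empty output.
        "no_values_lost": all(
            bool(out) for raw, out in zip(source, transformed) if raw.strip()
        ),
        # Applying the transformation again must not change the result.
        "idempotent": all(standardize_address(out) == out for out in transformed),
    }


source = ["  12 main   st ", "45 Oak Avenue", "7\tElm Rd"]
transformed = [standardize_address(address) for address in source]
checks = check_transformation(source, transformed)
```

Property checks like these complement unit tests with fixed expected values: they run against real batches where the exact expected output is not known in advance.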

Data Validation and Testing During Data Storage

Data storage involves organizing and storing data in a data warehouse, data lake, or other storage systems. Validation and testing at this stage ensure that the data is stored correctly and that it can be retrieved accurately.

- Data Integrity Checks: Implement checks to ensure data integrity within the storage system. This can include checksums to detect data corruption and referential integrity constraints to maintain relationships between data tables.

- Data Retrieval Testing: Test data retrieval processes to ensure that data can be accessed accurately and efficiently. This involves testing queries, reports, and data extracts.

- Data Backup and Recovery Testing: Regularly test data backup and recovery processes to ensure that data can be restored in case of a failure.

- Data Security Validation: Validate the security measures in place to protect data from unauthorized access. This includes testing access controls, encryption, and other security mechanisms.

- Example: Imagine a data warehouse storing financial transactions. Validation during storage would involve verifying that the data is stored with proper referential integrity, ensuring that foreign keys correctly reference primary keys. Additionally, the storage system should perform checksums on stored data to detect any data corruption during the storage process. Retrieval tests would confirm that financial reports generate correct results from the stored data.
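Where the storage layer does not enforce foreign keys itself, as is common in data lakes, a referential integrity check can be run as a test. The sketch below looks for transactions whose account does not exist; the table contents and column names are hypothetical.

```python
# Hypothetical parent and child tables represented as lists of rows.
accounts = [
    {"account_id": 1, "owner": "Ada"},
    {"account_id": 2, "owner": "Bob"},
]
transactions = [
    {"txn_id": 10, "account_id": 1, "amount": 25.0},
    {"txn_id": 11, "account_id": 3, "amount": 40.0},  # no matching account
    {"txn_id": 12, "account_id": 2, "amount": 15.5},
]


def find_orphans(child_rows, parent_rows, key):
    """Return child rows whose foreign key has no matching parent row."""
    parent_keys = {row[key] for row in parent_rows}
    return [row for row in child_rows if row[key] not in parent_keys]


orphans = find_orphans(transactions, accounts, "account_id")
```

Any row returned is a referential integrity violation, so a storage test would typically assert that the list is empty and report the offending keys when it is not.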

Establishing Automated Data Validation and Testing Pipelines

Automation is key to ensuring that data validation and testing are performed consistently and efficiently throughout the data lifecycle. Automated pipelines streamline the process and reduce the risk of human error.

- Pipeline Design: Design a data validation and testing pipeline that integrates seamlessly with the data processing pipeline. This includes defining the triggers for validation and testing, such as after data ingestion, after data transformation, or before data storage.

- Automated Validation Rules: Implement automated validation rules using data quality tools or scripting languages. These rules should be executed automatically at the appropriate stages of the data lifecycle.

- Automated Testing Frameworks: Use automated testing frameworks to create and execute test cases. This includes unit tests, integration tests, and system tests.

- Continuous Integration/Continuous Deployment (CI/CD): Integrate data validation and testing into the CI/CD pipeline. This ensures that data quality checks are performed automatically whenever new data is ingested or data processing logic is updated.

- Monitoring and Alerting: Implement monitoring and alerting to track the performance of the data validation and testing pipeline. This includes monitoring data quality metrics, test results, and system performance.

- Example: A CI/CD pipeline could automatically trigger data validation and testing after each data ingestion from a source. Upon successful validation and testing, the data is moved to the next stage of the pipeline. If validation fails, the pipeline alerts the data engineering team and prevents the invalid data from propagating through the system.
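The gate behavior in this example can be sketched as a function that either passes a batch to the next stage or raises and alerts. The check names, the `ValidationFailed` exception, and the `load` and `alert` callables are hypothetical stand-ins for whatever the orchestration tool and alerting system actually provide.

```python
class ValidationFailed(Exception):
    """Raised when one or more checks reject a batch."""

    def __init__(self, failures):
        super().__init__("; ".join(failures))
        self.failures = failures


def has_rows(batch):
    return len(batch) > 0


def ids_present(batch):
    return all(row.get("id") is not None for row in batch)


CHECKS = [("has_rows", has_rows), ("ids_present", ids_present)]


def validation_gate(batch, checks=CHECKS):
    """Run every check; raise if any fails so that the batch cannot proceed."""
    failures = [name for name, check in checks if not check(batch)]
    if failures:
        raise ValidationFailed(failures)
    return batch


def run_stage(batch, load, alert):
    """Load the batch only if it passes the gate; otherwise send an alert."""
    try:
        validated = validation_gate(batch)
    except ValidationFailed as exc:
        alert(f"validation failed: {', '.join(exc.failures)}")
        return False
    load(validated)
    return True


# Lists stand in for the target store and the alerting channel.
warehouse, alerts = [], []
good_result = run_stage([{"id": 1}, {"id": 2}], warehouse.extend, alerts.append)
bad_result = run_stage([{"id": None}], warehouse.extend, alerts.append)
```

Raising an exception rather than returning a flag means a caller that forgets to check the result cannot accidentally load an invalid batch.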

Challenges and Best Practices

Data validation and testing projects, while crucial for data quality and reliability, are often fraught with challenges. These challenges can arise from various sources, including data complexity, resource constraints, and evolving business requirements. Addressing these hurdles effectively is critical to ensuring the success of any data-driven initiative.

Common Challenges in Data Validation and Testing

Several common obstacles can impede the effectiveness of data validation and testing efforts. These challenges necessitate proactive planning and mitigation strategies to avoid costly errors and delays.

- Data Complexity and Volume: The sheer volume and complexity of modern datasets present a significant challenge. Large datasets require substantial computational resources and sophisticated testing methodologies. Furthermore, complex data structures, including nested objects and relationships, demand advanced validation techniques to ensure data integrity.

- Evolving Data Requirements: Business requirements are dynamic, and data structures and validation rules often need to adapt. Changes in regulations, business processes, or data sources can necessitate frequent updates to validation and testing plans, adding to the project’s complexity.

- Lack of Clear Requirements: Ambiguous or poorly defined data requirements are a frequent source of problems. Without clear specifications, it’s difficult to design effective validation rules and testing scenarios. This can lead to inaccurate results and wasted effort.

- Resource Constraints: Limited budgets, insufficient skilled personnel, and inadequate testing tools can hinder the validation and testing process. The lack of specialized skills, particularly in areas like data profiling and automated testing, can be a significant bottleneck.

- Data Quality Issues: Existing data quality issues, such as missing values, inconsistencies, and errors, can complicate validation and testing. Addressing these issues often requires extensive data cleansing and transformation efforts before testing can commence.

- Integration Challenges: Integrating data validation and testing processes into existing data pipelines and systems can be challenging. Ensuring compatibility with various data sources, formats, and tools requires careful planning and execution.

- Communication and Collaboration: Poor communication between stakeholders, including data engineers, business analysts, and testers, can lead to misunderstandings and errors. Effective collaboration is essential for ensuring that validation and testing efforts align with business objectives.

Best Practices for Overcoming Challenges

Implementing best practices can mitigate the challenges associated with data validation and testing. These practices promote efficiency, accuracy, and overall project success.

- Establish Clear Data Requirements: Define data requirements thoroughly and document them clearly. This includes specifying data formats, data types, acceptable ranges, and business rules. Involve business users and stakeholders in the requirements gathering process to ensure alignment.

- Implement Data Profiling: Perform data profiling to understand the characteristics of the data, identify data quality issues, and define appropriate validation rules. Data profiling tools can automatically analyze data and generate statistics to facilitate this process.

- Automate Testing: Automate testing processes wherever possible to reduce manual effort, improve efficiency, and ensure consistency. Utilize automated testing tools and frameworks to create and execute test cases.

- Prioritize Testing Efforts: Focus testing efforts on critical data elements and business processes. Prioritize test cases based on risk and impact to ensure that the most important aspects of the data are validated thoroughly.

- Use Version Control: Implement version control for data validation rules, test cases, and testing scripts. This helps manage changes, track revisions, and ensure that testing processes are repeatable and auditable.

- Employ Data Quality Tools: Utilize data quality tools for data cleansing, data transformation, and data validation. These tools can automate many of the tasks involved in data quality management.

- Foster Collaboration: Promote effective communication and collaboration between stakeholders. Establish clear communication channels and regularly review testing results with business users and data engineers.

- Adopt an Iterative Approach: Break the validation and testing process into smaller cycles and incorporate feedback throughout the project. This allows for continuous improvement and adaptation to changing requirements.

- Continuous Monitoring: Implement continuous monitoring of data quality metrics to identify and address issues proactively. Establish dashboards and alerts to track data quality performance.
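
Several of the practices above (data profiling, automated checks, and continuous monitoring) can be combined in a small script. The following is a minimal sketch in Python using only the standard library, not a definitive implementation. The field names (`customer_id`, `age`), the sample records, the two rules, and the 10% alert threshold are all hypothetical and chosen only for illustration.

```python
from collections import Counter

# Hypothetical sample records; in practice these would come from a pipeline.
RECORDS = [
    {"customer_id": "C001", "age": 34},
    {"customer_id": "C002", "age": None},
    {"customer_id": "C003", "age": 212},
    {"customer_id": "C001", "age": 41},
]


def profile(records, field):
    """Return basic profiling statistics for one field."""
    values = [r.get(field) for r in records]
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
    }


# Illustrative validation rules: each maps a rule name to a predicate
# that returns True when the record passes.
RULES = {
    "age_present": lambda r: r.get("age") is not None,
    "age_in_range": lambda r: r.get("age") is None or 0 <= r["age"] <= 120,
}


def validate(records, rules):
    """Count how many records fail each rule."""
    failures = Counter()
    for record in records:
        for name, predicate in rules.items():
            if not predicate(record):
                failures[name] += 1
    return failures


def alerts(failures, total, threshold=0.10):
    """Return the rules whose failure rate exceeds the assumed threshold."""
    return sorted(name for name, n in failures.items() if n / total > threshold)


if __name__ == "__main__":
    print(profile(RECORDS, "age"))
    failures = validate(RECORDS, RULES)
    print(dict(failures))
    print(alerts(failures, len(RECORDS)))
```

Keeping the rules in a plain dictionary means they can be stored in version control and reviewed alongside the test cases, and the `alerts` function is the kind of check a scheduled monitoring job might run against each new batch.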

Recommendations for Ensuring Data Validation and Testing Effectiveness

- Define clear roles and responsibilities for data validation and testing activities.

- Establish a data governance framework to manage data quality and data standards.

- Provide training and education to data validation and testing personnel.

- Regularly review and update data validation and testing plans to reflect changing requirements.

- Conduct post-implementation reviews to assess the effectiveness of validation and testing efforts.

Summary

A robust data validation and testing plan is not merely a technical procedure; it is a strategic imperative for any organization that relies on data. By implementing the methods and strategies outlined in this guide, businesses can significantly enhance data quality, reduce the risk of errors, and foster a culture of data integrity. Reviewing these plans as requirements change, and pairing them with the best practices above, keeps data a valuable and reliable asset that supports informed decision-making.

Frequently Asked Questions

What is the primary difference between data validation and data testing?

Data validation focuses on ensuring data conforms to predefined rules and standards at the point of data entry or ingestion. Data testing, on the other hand, involves verifying that the data behaves as expected throughout its lifecycle, from storage to reporting, and ensuring the accuracy of data transformations and processes.
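
The distinction can be illustrated with a short Python sketch. It is an assumption-laden example rather than a prescribed method: the `order_id`, `amount`, and `customer` fields and all three function names are hypothetical. The first function validates a single incoming record against predefined rules; the last one tests that a transformation produces the expected result.

```python
def validate_order(order):
    """Validation: check one incoming record against predefined rules."""
    errors = []
    order_id = order.get("order_id")
    if not isinstance(order_id, str) or not order_id:
        errors.append("order_id must be a non-empty string")
    amount = order.get("amount")
    # bool is a subclass of int in Python, so it is rejected explicitly.
    if (
        not isinstance(amount, (int, float))
        or isinstance(amount, bool)
        or amount < 0
    ):
        errors.append("amount must be a non-negative number")
    return errors


def total_by_customer(orders):
    """A transformation whose behavior a data test would verify."""
    totals = {}
    for order in orders:
        customer = order["customer"]
        totals[customer] = totals.get(customer, 0) + order["amount"]
    return totals


def test_total_by_customer():
    """Testing: confirm the transformation produces the expected output."""
    orders = [
        {"customer": "a", "amount": 10},
        {"customer": "b", "amount": 5},
        {"customer": "a", "amount": 7},
    ]
    assert total_by_customer(orders) == {"a": 17, "b": 5}
```

In this sketch, `validate_order` would run at ingestion and reject or flag bad records, while `test_total_by_customer` would run in a test suite whenever the transformation logic changes.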

What are the common challenges faced when implementing a data validation and testing plan?

Common challenges include defining comprehensive validation rules, dealing with large volumes of data, integrating validation and testing into existing systems, and maintaining the plans as data sources and business requirements evolve. Resource constraints and the complexity of legacy systems can also pose significant hurdles.

How often should data validation and testing plans be reviewed and updated?

Data validation and testing plans should be reviewed on a regular schedule and also whenever data sources, business rules, regulatory requirements, or systems change. How often the scheduled reviews occur should depend on the criticality of the data and the rate of change within the organization.