A cost-aware architecture treats cost as a first-class design constraint alongside functionality, performance, and reliability. Rather than trimming spend after the fact, it builds financial prudence into every aspect of system design and operation, so that applications perform efficiently and remain economically sustainable.

This comprehensive exploration will delve into the core principles, essential components, and practical strategies that define a cost-aware architecture. We’ll examine how to optimize resource allocation, leverage various cloud services effectively, and implement robust monitoring systems. Furthermore, we’ll explore the role of DevOps in fostering cost efficiency and highlight emerging trends shaping the future of cost-aware design. Prepare to discover how to build scalable, resilient, and budget-conscious applications.

Defining Cost-Aware Architecture

Cost-aware architecture is a crucial design philosophy in modern software development, especially in cloud environments. It focuses on optimizing the total cost of ownership (TCO) of a system by considering financial implications at every stage of the architecture and development lifecycle. This approach is not merely about reducing costs but also about ensuring that the system’s performance, scalability, and reliability align with the organization’s financial goals.

Core Principles of Cost-Aware Architecture

Several core principles guide the design and implementation of a cost-aware architecture. Understanding these principles is essential for building efficient and financially responsible systems.

- Right-Sizing Resources: This principle involves accurately matching resource allocation (e.g., compute instances, storage, network bandwidth) to actual workload demands. Over-provisioning leads to unnecessary costs, while under-provisioning can impact performance and user experience.

- Automation and Optimization: Automating infrastructure provisioning, scaling, and management tasks is crucial. This minimizes manual effort, reduces operational overhead, and allows for dynamic resource adjustments based on real-time needs. Optimization includes techniques like code optimization, database query tuning, and efficient data storage strategies.

- Choosing the Right Services: Selecting the appropriate cloud services and pricing models is essential. This involves evaluating various options (e.g., serverless functions, container orchestration, managed databases) based on their cost, performance characteristics, and suitability for the specific application requirements.

- Monitoring and Analysis: Continuous monitoring of resource utilization, performance metrics, and cost data is vital. This enables proactive identification of cost inefficiencies, performance bottlenecks, and opportunities for optimization. Analyzing historical data provides valuable insights for future planning and decision-making.

- Governance and Policy Enforcement: Implementing cost governance policies and enforcing them through automated mechanisms helps control spending and ensure adherence to budgetary constraints. This includes setting spending limits, defining resource tagging conventions, and establishing approval workflows for resource provisioning.
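As one illustration of automated policy enforcement, the sketch below audits resources against a tagging convention. The required tag keys (`owner`, `cost-center`, `environment`) and the `Resource` type are assumptions made for this example, not a standard; a real audit would read resources from the provider's inventory API.

```python
from dataclasses import dataclass, field

# Hypothetical tagging convention; adjust to your organization's policy.
REQUIRED_TAGS = {"owner", "cost-center", "environment"}


@dataclass
class Resource:
    resource_id: str
    tags: dict[str, str] = field(default_factory=dict)


def missing_tags(resource: Resource, required: set[str] = REQUIRED_TAGS) -> set[str]:
    """Return the required tag keys that are absent or blank on the resource."""
    return {key for key in required if not resource.tags.get(key, "").strip()}


def audit(resources: list[Resource]) -> dict[str, set[str]]:
    """Map each non-compliant resource ID to the tag keys it is missing."""
    report = {}
    for resource in resources:
        gaps = missing_tags(resource)
        if gaps:
            report[resource.resource_id] = gaps
    return report
```

A report like this could feed an approval workflow or block provisioning until the gaps are fixed.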

Definition of Cost-Aware Architecture

For a technical audience, a concise definition is:

Cost-Aware Architecture is a system design approach that prioritizes the financial implications of architectural decisions throughout the software development lifecycle, optimizing for Total Cost of Ownership (TCO) while maintaining desired performance, scalability, and reliability. It encompasses proactive resource management, automation, and continuous monitoring to ensure efficient resource utilization and adherence to budgetary constraints.

Primary Goals of Implementing a Cost-Aware Architecture

The implementation of a cost-aware architecture aims to achieve several key goals, which ultimately contribute to the financial health and sustainability of the system.

- Reduce Total Cost of Ownership (TCO): The primary goal is to minimize the overall cost of running the system, including infrastructure, operations, and maintenance expenses. This involves optimizing resource utilization, leveraging cost-effective services, and automating cost-saving measures.

- Improve Resource Efficiency: Cost-aware architecture aims to maximize the value derived from allocated resources. This involves right-sizing resources, eliminating waste, and ensuring that resources are utilized efficiently based on actual demand. For example, a system might automatically scale down compute instances during off-peak hours to reduce costs.

- Enhance Predictability and Control: By implementing cost-aware practices, organizations gain better visibility into their spending patterns and can forecast future costs more accurately. This allows for proactive budgeting, financial planning, and the ability to control spending within defined limits.

- Enable Scalability and Flexibility: A cost-aware architecture should be designed to scale resources up or down dynamically based on changing demands. This flexibility allows the system to handle peak loads without overspending and adapt to evolving business needs. For instance, using auto-scaling groups for virtual machines allows for automatic adjustment of compute capacity based on real-time traffic.

- Promote Sustainability: Efficient resource utilization contributes to environmental sustainability by reducing energy consumption and minimizing the carbon footprint of the system. Choosing energy-efficient hardware and cloud services can further enhance sustainability efforts.

Key Components of Cost-Awareness

Cost-aware architecture is built on several fundamental components that work in concert to ensure efficient resource utilization and minimize operational expenses. These components are not isolated; they are interconnected and interdependent, forming a cohesive system for cost optimization throughout the entire lifecycle of a software system. Understanding these components is crucial for designing and implementing cost-effective solutions.

Resource Allocation

Resource allocation is a critical aspect of cost-aware architecture. It involves strategically assigning computing resources, such as CPU, memory, storage, and network bandwidth, to different components and services within the system. The goal is to ensure that resources are utilized efficiently, avoiding both over-provisioning (paying for idle capacity) and under-provisioning (degrading performance and user experience).

- Right-Sizing: Selecting the appropriate instance types and sizes for virtual machines, containers, and other compute resources. This involves analyzing the workload requirements and choosing resources that meet the performance needs without overspending. For example, a web server that experiences low traffic during off-peak hours can be scaled down to a smaller instance size to save costs.

- Auto-Scaling: Automatically adjusting the number of resources allocated based on real-time demand. This allows the system to handle fluctuations in traffic and workload, scaling up during peak periods and scaling down during periods of low activity. This prevents over-provisioning and ensures optimal resource utilization. Amazon Web Services (AWS) Auto Scaling and Azure Virtual Machine Scale Sets are examples of services that facilitate auto-scaling.

- Resource Pooling: Sharing resources among multiple applications or services. This can improve resource utilization and reduce costs by avoiding the need for dedicated resources for each application. Containers (for example, Docker) run under an orchestrator such as Kubernetes are frequently used to implement resource pooling.

- Capacity Planning: Forecasting future resource needs based on historical data, trends, and expected growth. This allows architects to proactively provision resources and avoid costly emergency expansions. This includes analyzing metrics such as CPU utilization, memory usage, and network traffic to predict future requirements.

- Choosing the Right Pricing Models: Utilizing different pricing models offered by cloud providers, such as on-demand, reserved instances, and spot instances, to optimize costs. Reserved instances provide significant discounts for long-term commitments, while spot instances offer substantial savings for workloads that can tolerate interruptions.
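A rough sketch of how a right-sizing recommendation might be derived from utilization data: size for the 95th-percentile CPU demand at a target utilization. The 60% target, the nearest-rank percentile, and the function names are assumptions for illustration; memory, I/O, and burst behavior would also need checking in practice.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile of the samples (pct between 1 and 100)."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_vcpus(cpu_util_pct: list[float], current_vcpus: int,
                    target_util_pct: float = 60.0) -> int:
    """Suggest a vCPU count so that p95 demand lands near the target utilization."""
    p95 = percentile(cpu_util_pct, 95)
    # vCPUs actually busy at the 95th percentile of observed load.
    demand_vcpus = current_vcpus * p95 / 100
    return max(1, math.ceil(demand_vcpus / (target_util_pct / 100)))
```

For example, an 8-vCPU instance whose p95 CPU utilization is 10% would be flagged as a candidate for a 2-vCPU size.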

Monitoring and Alerting Systems

Effective monitoring and alerting are essential for maintaining a cost-aware architecture. These systems provide real-time visibility into resource utilization, performance metrics, and cost trends. They enable proactive identification of cost anomalies, performance bottlenecks, and potential optimization opportunities.

- Performance Monitoring: Tracking key performance indicators (KPIs) such as CPU utilization, memory usage, disk I/O, and network latency. This helps identify performance bottlenecks that may be impacting resource efficiency and cost. Tools like Prometheus, Grafana, and Datadog are commonly used for performance monitoring.

- Cost Monitoring: Tracking the cost of individual resources, services, and applications. This allows for the identification of cost drivers and the detection of unexpected cost increases. Cloud providers typically offer cost monitoring dashboards and reporting tools.

- Anomaly Detection: Implementing systems that automatically detect unusual patterns in resource usage or cost data. These systems use statistical methods or machine learning algorithms to identify deviations from normal behavior. When anomalies are detected, alerts are triggered to notify the relevant teams.

- Alerting and Notifications: Configuring alerts to notify relevant teams when predefined thresholds are exceeded or when anomalies are detected. Alerts can be sent via email, SMS, or other communication channels. The alerts should provide detailed information about the issue, including the affected resources, the severity of the problem, and recommendations for remediation.

- Cost Optimization Recommendations: Utilizing tools that provide automated recommendations for cost optimization. These tools analyze resource usage patterns and suggest ways to reduce costs, such as right-sizing instances, eliminating unused resources, or leveraging reserved instances. Cloud providers often offer such tools as part of their cost management services.
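The statistical approach to anomaly detection mentioned above can be sketched with a trailing-window z-score over daily cost totals. The 7-day window and the threshold of 3 standard deviations are assumptions chosen for illustration; production systems often also account for seasonality.

```python
from statistics import mean, stdev


def find_cost_anomalies(daily_costs: list[float], window: int = 7,
                        threshold: float = 3.0) -> list[int]:
    """Return indices of days whose cost deviates strongly from the trailing window."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change as notable.
            if daily_costs[i] != mu:
                anomalies.append(i)
            continue
        if abs(daily_costs[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

Note that a spike stays in the trailing window for the following days and inflates the standard deviation, so a second spike shortly after the first is harder to detect with this simple scheme.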

Design Considerations for Cost Optimization

Cost optimization is a critical aspect of designing and operating any cloud-based architecture. By carefully considering design choices and leveraging cost-effective strategies, organizations can significantly reduce their cloud spending without compromising performance, scalability, or reliability. This section delves into specific design considerations, service selection strategies, and pricing model comparisons to help you build a cost-aware architecture.

Designing a Cost-Optimized Architecture for a Highly Scalable Web Application

Designing a cost-optimized architecture for a web application with high scalability requirements calls for a multifaceted approach that spans resource selection, architectural patterns, and automation. The goal is to balance performance needs with cost efficiency. Consider the following design elements:

- Choosing the Right Compute Instance Type: Select compute instances based on workload characteristics. For web applications, consider using a combination of instance types. For example, use burstable instances (like AWS’s T3 or GCP’s shared-core E2 machine types) for non-critical background tasks and tasks with fluctuating CPU needs, and general-purpose instances (like AWS’s M5 or GCP’s N1) for the application servers themselves.

- Leveraging Auto-Scaling: Implement auto-scaling to dynamically adjust the number of compute instances based on demand. This ensures that resources are available when needed while minimizing idle capacity and associated costs. Configure scaling policies based on metrics such as CPU utilization, network traffic, or custom application metrics.

- Database Optimization: Choose a database technology and configuration that aligns with the application’s data access patterns and scalability requirements. For instance, using a managed database service like Amazon RDS or Google Cloud SQL can reduce operational overhead and provide cost-effective scaling options. Consider database read replicas to offload read traffic and improve performance. For read-heavy workloads, consider caching frequently requested query results so that repeated reads do not reach the database at all.

- Using a Content Delivery Network (CDN): Implement a CDN to cache static assets (images, CSS, JavaScript) closer to users, reducing latency and offloading traffic from the origin servers. This can significantly reduce bandwidth costs and improve the user experience. Services like Amazon CloudFront, Google Cloud CDN, and Cloudflare provide robust CDN capabilities.

- Employing Serverless Technologies: Leverage serverless functions (e.g., AWS Lambda, Google Cloud Functions, Azure Functions) for event-driven tasks, such as image resizing, API endpoints, or background processing. Serverless functions offer a pay-per-use pricing model, which can be very cost-effective for workloads with intermittent or spiky traffic.

- Implementing Caching Strategies: Implement caching at multiple levels (browser, CDN, server-side) to reduce the load on the application servers and database. Use caching mechanisms like Redis or Memcached to store frequently accessed data in memory.

- Storage Optimization: Choose appropriate storage tiers based on data access frequency. For example, use cheaper storage tiers (e.g., AWS S3 Standard-IA or Google Cloud Storage Nearline) for infrequently accessed data. Regularly review storage usage and identify opportunities to archive or delete unnecessary data.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting to track resource utilization, identify performance bottlenecks, and detect cost anomalies. Set up alerts to notify you of any unexpected cost spikes or resource over-provisioning.

- Right-Sizing Resources: Regularly review resource utilization and adjust instance sizes and configurations as needed. Ensure that resources are not over-provisioned, leading to unnecessary costs.

- Automation: Automate infrastructure provisioning, scaling, and configuration using tools like Terraform, AWS CloudFormation, or Google Cloud Deployment Manager. Automation reduces operational overhead and enables consistent, repeatable deployments.
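Whether pay-per-use serverless functions are cheaper than an always-on instance depends on request volume. The sketch below estimates the break-even point under a simplified pricing model (a per-GB-second compute charge plus a per-million-requests charge). All parameter names are illustrative and any prices passed in are assumptions, not quotes from a provider; free tiers and data transfer are ignored.

```python
def serverless_cost_per_request(avg_duration_s: float, memory_gb: float,
                                price_per_gb_second: float,
                                price_per_million_requests: float) -> float:
    """Cost of one invocation: compute time plus the per-request fee."""
    compute = avg_duration_s * memory_gb * price_per_gb_second
    return compute + price_per_million_requests / 1_000_000


def monthly_serverless_cost(requests: int, **pricing: float) -> float:
    """Total monthly cost for the given number of invocations."""
    return requests * serverless_cost_per_request(**pricing)


def break_even_requests(instance_monthly_cost: float, **pricing: float) -> float:
    """Monthly request count at which serverless costs as much as the instance."""
    return instance_monthly_cost / serverless_cost_per_request(**pricing)


# Hypothetical prices for illustration only.
pricing = dict(avg_duration_s=0.1, memory_gb=0.5,
               price_per_gb_second=0.00002, price_per_million_requests=0.20)
print(f"Break-even: {break_even_requests(60.0, **pricing):,.0f} requests/month")
```

Below the break-even volume the pay-per-use model is cheaper; well above it, a right-sized instance or container is usually worth evaluating.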

Steps for Selecting Cost-Effective Cloud Services

Selecting cost-effective cloud services requires a structured approach that encompasses requirements gathering, service evaluation, pricing analysis, and ongoing optimization. Following these steps helps ensure the best value for your cloud investments:

- Define Requirements: Clearly define the application’s functional and non-functional requirements, including performance, scalability, security, and compliance needs. Identify the specific services required (compute, storage, database, etc.).

- Identify Potential Services: Research and identify potential cloud services that meet the defined requirements. Consider services from multiple cloud providers (AWS, Google Cloud, Azure) to broaden your options.

- Evaluate Service Features and Capabilities: Evaluate the features and capabilities of each service, comparing them against your requirements. Consider factors such as ease of use, integration with other services, and service level agreements (SLAs).

- Analyze Pricing Models: Thoroughly analyze the pricing models of each service. Understand the different pricing options (on-demand, reserved instances, spot instances, etc.) and their associated costs. Use cloud provider pricing calculators to estimate costs based on your expected usage.

- Compare Pricing Across Providers: Compare the pricing of services across different cloud providers to identify the most cost-effective options. Consider factors such as data transfer costs, storage costs, and any additional fees.

- Conduct Proof-of-Concept (POC) or Pilot Projects: Before making a final decision, conduct a proof-of-concept (POC) or pilot project to test the performance and cost-effectiveness of the selected services. This allows you to validate your assumptions and identify any potential issues.

- Optimize and Refine: After the POC or pilot project, refine your service selection based on the results. Optimize configurations, resource allocation, and pricing plans to further reduce costs.

- Implement and Monitor: Implement the selected services and continuously monitor resource utilization and costs. Use monitoring tools to identify any unexpected cost spikes or inefficiencies.

- Regularly Review and Optimize: Regularly review your cloud spending and identify opportunities for optimization. This may involve adjusting instance sizes, switching to more cost-effective pricing plans, or migrating to newer services.
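The pricing-analysis and cross-provider comparison steps can be sketched as a small estimator that prices one expected usage profile against several candidate offers. The `Offer` and `Usage` types and every price below are hypothetical placeholders; real estimates should come from the providers' pricing calculators.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    name: str
    compute_per_hour: float      # price per instance-hour
    storage_per_gb_month: float  # price per GB stored per month
    egress_per_gb: float         # price per GB transferred out


@dataclass(frozen=True)
class Usage:
    compute_hours: float
    storage_gb: float
    egress_gb: float


def monthly_estimate(offer: Offer, usage: Usage) -> float:
    """Estimated monthly bill for one offer under the expected usage."""
    return (offer.compute_per_hour * usage.compute_hours
            + offer.storage_per_gb_month * usage.storage_gb
            + offer.egress_per_gb * usage.egress_gb)


def rank_offers(offers: list[Offer], usage: Usage) -> list[tuple[str, float]]:
    """Return (name, estimate) pairs sorted from cheapest to most expensive."""
    estimates = [(o.name, round(monthly_estimate(o, usage), 2)) for o in offers]
    return sorted(estimates, key=lambda pair: pair[1])


# Hypothetical offers: B has cheaper compute but pricier storage and egress.
offers = [Offer("A", 0.10, 0.02, 0.09), Offer("B", 0.08, 0.03, 0.12)]
print(rank_offers(offers, Usage(compute_hours=730, storage_gb=500, egress_gb=1000)))
```

In this made-up example the offer with the lower compute price ends up more expensive once data transfer is included, which is why the comparison step above calls out data transfer and storage fees.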

Comparing Compute Pricing Models Across Cloud Providers

Different cloud providers offer various pricing models for compute instances, each designed to cater to different workload requirements and budget constraints. Understanding these pricing models is crucial for making informed decisions and optimizing cloud costs. This table provides a comparison of common pricing models for compute instances. The data presented is for illustrative purposes only and may vary based on the cloud provider, instance type, and region.

Always refer to the official cloud provider documentation for the most up-to-date pricing information.

| Pricing Model | Description | Use Cases | Advantages |
|---|---|---|---|
| On-Demand | Pay-as-you-go pricing with no upfront commitment. You pay for compute capacity by the hour or second (depending on the provider). | Ideal for workloads with unpredictable usage patterns, development and testing, or short-lived applications. | No long-term commitments. Flexibility to scale up or down as needed. Simple to understand. |
| Reserved Instances (AWS), Committed Use Discounts (GCP), Reserved Virtual Machine Instances (Azure) | Provide significant discounts compared to on-demand pricing in exchange for a commitment to use compute capacity for a specified duration (typically 1 or 3 years). Different types offer varying levels of commitment and discounts. | Suitable for workloads with predictable and consistent usage patterns, such as production applications. | Significant cost savings compared to on-demand. Predictable costs. |
| Spot Instances (AWS), Spot VMs / Preemptible VMs (GCP), Spot Virtual Machines (Azure) | Let you run on spare compute capacity at a significantly reduced price compared to on-demand. You pay the current spot price rather than placing a bid, and instances can be interrupted on short notice when the provider needs the capacity back. | Well-suited for fault-tolerant workloads that can withstand interruptions, such as batch processing, data analysis, and scientific computing. | Highly cost-effective for workloads that can tolerate interruptions. Significant discounts. |
| Savings Plans (AWS), savings plan for compute (Azure), spend-based Committed Use Discounts (GCP) | Commit to a consistent amount of compute spend (measured in dollars per hour) for a specific period. AWS Compute Savings Plans, for example, apply across instance families, sizes, operating systems, and regions. GCP Sustained Use Discounts and Azure Hybrid Benefit are separate mechanisms (an automatic usage-based discount and a licensing benefit, respectively) and require no spend commitment. | Best for workloads with steady overall usage whose instance mix may change over time. | Offers flexibility and significant savings compared to on-demand. Easier to manage than Reserved Instances. |
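The trade-off between on-demand and commitment-based pricing in the table comes down to utilization: a commitment is paid for every hour, used or not. A minimal sketch of that arithmetic follows, assuming a flat percentage discount and a 730-hour month; the rates and discounts in the usage note are illustrative, not actual provider prices.

```python
HOURS_PER_MONTH = 730  # common approximation for an average month


def on_demand_cost(hourly_rate: float, hours_used: float) -> float:
    """Pay only for the hours actually used."""
    return hourly_rate * hours_used


def committed_cost(hourly_rate: float, discount: float) -> float:
    """Pay the discounted rate for every hour of the month, used or not."""
    return hourly_rate * (1 - discount) * HOURS_PER_MONTH


def break_even_utilization(discount: float) -> float:
    """Fraction of the month above which the commitment is cheaper."""
    return 1 - discount


def cheaper_model(hourly_rate: float, discount: float, hours_used: float) -> str:
    """Name the cheaper model for the given monthly usage."""
    if committed_cost(hourly_rate, discount) < on_demand_cost(hourly_rate, hours_used):
        return "committed"
    return "on-demand"
```

With a hypothetical 40% discount, the commitment pays off only if the instance runs more than 60% of the month: `cheaper_model(0.10, 0.4, 500)` returns `"committed"`, while `cheaper_model(0.10, 0.4, 300)` returns `"on-demand"`.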

Cost-Awareness in Different Cloud Environments

Implementing cost-aware architecture requires a nuanced understanding of the specific tools and services offered by each cloud provider. The following sections will explore how cost-aware principles are applied across Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), highlighting key services and providing best practices for multi-cloud cost optimization.

Cost-Awareness in AWS

AWS provides a comprehensive suite of tools and services for managing and optimizing cloud costs. These tools allow users to gain visibility into their spending, identify cost-saving opportunities, and implement strategies to reduce overall expenses.

- AWS Cost Explorer: This service provides a visual interface for analyzing AWS spending over time. Users can filter and group data by various dimensions, such as service, region, and tags, to identify cost trends and potential areas for optimization. For example, a company can use Cost Explorer to identify that a particular EC2 instance type is consistently underutilized, leading to a recommendation to downsize or right-size the instance.

- AWS Budgets: Allows users to set custom budgets and receive alerts when spending exceeds predefined thresholds. This proactive approach helps prevent unexpected cost overruns. For instance, a team can set a monthly budget for its development environment and receive notifications if spending approaches the budget limit.

- AWS Cost and Usage Report (CUR): Delivers detailed cost and usage data in a structured format (CSV or Parquet) to an Amazon S3 bucket. This data can be used for advanced analysis and reporting, enabling users to build custom dashboards and gain deeper insights into their spending patterns. A company can analyze CUR data to identify the specific applications or teams that are driving the most significant costs.

- Reserved Instances (RIs) and Savings Plans: Both offer significant discounts on compute resources in exchange for a commitment over a one- or three-year term. Savings Plans commit to an hourly spend and apply flexibly across compute services, while RIs are tied to specific instance types. A company can use Compute Savings Plans to cover compute costs across EC2, Lambda, and Fargate, while a specific team can use RIs to secure discounted pricing for their frequently used EC2 instances.

- AWS Trusted Advisor: Provides real-time guidance to help optimize AWS resources, improve security, reduce costs, and improve performance. Trusted Advisor analyzes the AWS environment and provides recommendations based on best practices. For example, Trusted Advisor can identify idle or underutilized EC2 instances and recommend actions to reduce costs.
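To illustrate the kind of analysis the Cost and Usage Report enables, the sketch below totals cost per team from a CSV export. It assumes the report contains the `lineItem/UnblendedCost` column and a user-defined cost allocation tag exposed as `resourceTags/user:team`; the actual tag column depends on how tags and the report are configured in your account.

```python
import csv
import io
from collections import defaultdict


def cost_by_tag(csv_text: str,
                tag_column: str = "resourceTags/user:team",    # assumed tag column
                cost_column: str = "lineItem/UnblendedCost") -> dict[str, float]:
    """Sum the cost column per tag value; rows without a tag go to 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        team = row.get(tag_column) or "untagged"
        totals[team] += float(row[cost_column])
    return dict(totals)


# Tiny made-up sample in the assumed layout.
sample = (
    "lineItem/UnblendedCost,resourceTags/user:team\n"
    "1.5,payments\n"
    "2.25,payments\n"
    "4.0,search\n"
    "0.5,\n"
)
print(cost_by_tag(sample))
```

The size of the `untagged` bucket is itself a useful signal of how well the tagging convention is being followed.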

Cost-Awareness in Azure

Microsoft Azure offers a robust set of cost management tools and services designed to help users control and optimize their cloud spending. These tools enable detailed analysis, budgeting, and proactive cost management.

- Azure Cost Management + Billing: Provides a unified view of Azure spending, allowing users to track costs, analyze trends, and set budgets. This service includes features for cost analysis, budgeting, and cost alerts. Users can set up budgets to monitor spending and receive notifications when they approach or exceed their defined limits.

- Azure Advisor: Analyzes the Azure environment and provides personalized recommendations to optimize resources, improve security, and reduce costs. Azure Advisor offers specific cost recommendations, such as identifying idle virtual machines or recommending right-sizing.

- Azure Reserved Instances: Similar to AWS Reserved Instances, Azure Reserved Instances offer significant discounts on virtual machines, SQL Database compute capacity, and other resources in exchange for a one- or three-year commitment. Using reserved instances can substantially reduce compute costs for predictable workloads.

- Azure Hybrid Benefit: Allows users to use existing on-premises Windows Server or SQL Server licenses to run virtual machines in Azure at a reduced cost. This benefit can significantly reduce the cost of migrating existing workloads to the cloud.

- Azure Spot Virtual Machines: Offers access to unused Azure compute capacity at significantly discounted rates. However, these VMs can be evicted if Azure needs the capacity. This option is ideal for fault-tolerant workloads that can handle interruptions. For example, a batch processing job can leverage Spot VMs to complete tasks at a lower cost.

Cost-Awareness in Google Cloud Platform (GCP)

Google Cloud Platform (GCP) provides a suite of tools and services for cost management, enabling users to monitor, analyze, and optimize their cloud spending. These tools offer detailed insights and proactive cost control capabilities.

- Google Cloud Cost Management: Provides a comprehensive view of GCP spending, including cost breakdowns by project, service, and region. Users can set budgets, receive alerts, and analyze cost trends. The Cost Management dashboard offers visualizations and reporting capabilities to understand spending patterns.

- Google Cloud Billing Reports: Offers detailed cost and usage data in a variety of formats, including CSV and JSON. This data can be used for advanced analysis and custom reporting. Users can leverage BigQuery to analyze billing data and identify cost drivers.

- Google Cloud Pricing Calculator: Allows users to estimate the cost of GCP resources before they are deployed. This tool helps users plan their cloud deployments and make informed decisions about resource allocation.

- Committed Use Discounts (CUDs): Similar to Reserved Instances in AWS and Azure, CUDs offer significant discounts on compute resources in exchange for a commitment to use a specific amount of compute capacity for a one- or three-year term. CUDs can be applied to virtual machines, Cloud SQL, and other services.

- Sustained Use Discounts: Automatically applied to resources that are used for a significant portion of the month, providing a discount without any upfront commitment. This discount applies to services like Compute Engine.

Best Practices for Minimizing Cloud Spending in a Multi-Cloud Environment

Managing costs across multiple cloud providers requires a unified approach and a consistent set of best practices.

- Establish a Centralized Cost Management Strategy: Implement a single platform or set of tools to monitor and manage costs across all cloud providers. This can involve using third-party cost management tools or integrating the native cost management tools from each provider.

- Implement Consistent Tagging: Use a standardized tagging strategy across all cloud environments to categorize resources and track spending accurately. This enables cross-cloud cost allocation and reporting.

- Set Unified Budgets and Alerts: Define budgets and set up alerts across all cloud providers to monitor spending and proactively identify cost overruns.

- Optimize Resource Utilization: Regularly review resource utilization and right-size or downsize resources as needed. This includes identifying idle or underutilized resources across all cloud environments.

- Leverage Reserved Instances/Committed Use Discounts: Take advantage of reserved instances or committed use discounts where possible to reduce compute costs.

- Automate Cost Optimization: Automate cost optimization tasks, such as instance right-sizing and idle resource detection, to ensure continuous cost efficiency.

- Negotiate Pricing: Explore opportunities to negotiate pricing with cloud providers, especially for large-scale deployments.

- Regularly Review and Refine Strategies: Continuously monitor and refine cost management strategies based on spending patterns and evolving business needs.
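A unified budget check across providers can be as simple as summing normalized spend and comparing it with alert thresholds. In this sketch the provider names, the threshold fractions (50%, 80%, 100%), and the function name are assumptions; it also assumes all amounts have already been converted to one currency.

```python
from typing import Optional


def check_budget(spend_by_provider: dict[str, float], monthly_budget: float,
                 thresholds: tuple[float, ...] = (0.5, 0.8, 1.0)) -> dict:
    """Summarize combined spend and list the alert thresholds already crossed."""
    if monthly_budget <= 0:
        raise ValueError("monthly_budget must be positive")
    total = sum(spend_by_provider.values())
    used = total / monthly_budget
    top: Optional[str] = None
    if spend_by_provider:
        # Provider contributing the most to the combined bill.
        top = max(spend_by_provider, key=spend_by_provider.get)
    return {
        "total": total,
        "used_fraction": used,
        "crossed": [t for t in sorted(thresholds) if used >= t],
        "top_spender": top,
    }
```

For example, `check_budget({"aws": 4000.0, "azure": 2500.0, "gcp": 2000.0}, 10000.0)` reports 85% of the budget used, with the 50% and 80% thresholds crossed.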

Resource Management Strategies

Effective resource management is crucial for cost-aware architecture. It involves dynamically allocating and optimizing resources to meet application demands while minimizing unnecessary spending. This section details several strategies to achieve efficient resource utilization and cost reduction in cloud environments.

Implementing Auto-scaling

Auto-scaling automatically adjusts the number of resources allocated to an application based on real-time demand. This ensures optimal performance and cost efficiency.

- Scaling Out: When the application load increases, auto-scaling provisions additional resources, such as virtual machines or containers. This prevents performance degradation and ensures a positive user experience. For instance, if a website experiences a sudden surge in traffic during a promotional event, auto-scaling can automatically launch additional web servers to handle the increased requests.

- Scaling In: When the application load decreases, auto-scaling removes unused resources, such as shutting down idle virtual machines or containers. This helps reduce costs by eliminating the need to pay for underutilized resources. For example, during off-peak hours, an auto-scaling configuration can reduce the number of active web servers, thereby saving on compute costs.

- Metrics for Auto-scaling: Auto-scaling relies on various metrics to determine when to scale out or scale in. Common metrics include CPU utilization, memory usage, network traffic, and request queue length. These metrics are monitored continuously, and scaling actions are triggered based on predefined thresholds. For example, if CPU utilization exceeds 70% for a sustained period, auto-scaling can trigger the provisioning of additional resources.

- Configuration and Implementation: Auto-scaling is typically configured using cloud provider tools and services. This involves defining scaling policies, setting thresholds, and specifying the desired minimum and maximum number of resources. Proper configuration is crucial to ensure that auto-scaling responds appropriately to changing demands. For example, a scaling policy might specify that a new instance is launched when CPU utilization exceeds 75% and that the maximum number of instances is limited to 10.
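The threshold-based policy described above can be sketched as a pure decision function. The 75% scale-out threshold and the maximum of 10 instances follow the example in the text; the 30% scale-in threshold and the minimum of 2 instances are assumptions added for illustration.

```python
def desired_instances(current: int, cpu_pct: float,
                      scale_out_at: float = 75.0, scale_in_at: float = 30.0,
                      min_instances: int = 2, max_instances: int = 10) -> int:
    """Return the instance count a simple step-scaling policy would target."""
    if min_instances > max_instances:
        raise ValueError("min_instances must not exceed max_instances")
    if cpu_pct > scale_out_at:
        target = current + 1   # scale out by one instance
    elif cpu_pct < scale_in_at:
        target = current - 1   # scale in by one instance
    else:
        target = current       # inside the dead band: do nothing
    # Clamp to the configured bounds.
    return max(min_instances, min(max_instances, target))
```

Keeping a gap between the scale-in and scale-out thresholds helps avoid flapping, where the group repeatedly adds and removes the same instance.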

Utilizing Reserved Instances and Spot Instances

Cloud providers offer various pricing models to help reduce costs. Reserved instances and spot instances are two such models that can significantly impact resource expenses.

- Reserved Instances: Reserved instances provide a significant discount compared to on-demand pricing. Users commit to using a specific instance type for a defined period (typically one or three years). In exchange for this commitment, they receive a substantial discount on the hourly rate. Reserved instances are best suited for workloads with predictable resource requirements and a long-term usage pattern. For instance, a database server that runs 24/7 can benefit greatly from reserved instances.

- Spot Instances: Spot instances let users run workloads on spare compute capacity at steep discounts. They typically offer the lowest prices, but the cloud provider can reclaim them on short notice when it needs the capacity back (or, where the user has set a maximum price, when the spot price rises above it). Spot instances are ideal for fault-tolerant and flexible workloads, such as batch processing, testing, and development. For example, a data analysis job that can tolerate interruptions can utilize spot instances to significantly reduce its compute costs.

- Comparing Reserved and Spot Instances: Reserved instances offer predictable costs and are suitable for stable workloads. Spot instances provide the lowest prices but involve the risk of termination. Choosing between these options depends on the workload’s characteristics and the acceptable level of risk. For example, a production web server might benefit from reserved instances, while a development environment can leverage spot instances.

- Implementation and Management: Cloud providers offer tools to manage reserved and spot instances. This includes purchasing reserved instances, monitoring spot prices and interruption rates, and automating the handling of interruptions. Effective management involves analyzing resource usage patterns and selecting the appropriate instance types and durations to maximize cost savings.
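Interruptions make spot capacity less of a bargain than the headline discount suggests, because interrupted work may need to be redone. A simplified estimate follows, assuming a flat spot discount and a known fraction of work that must be repeated; both inputs are assumptions that would have to be measured for a real workload.

```python
def spot_job_cost(on_demand_rate: float, spot_discount: float,
                  job_hours: float, rework_fraction: float) -> float:
    """Expected cost of a job on spot capacity, including repeated work."""
    spot_rate = on_demand_rate * (1 - spot_discount)
    return spot_rate * job_hours * (1 + rework_fraction)


def spot_is_cheaper(on_demand_rate: float, spot_discount: float,
                    job_hours: float, rework_fraction: float) -> bool:
    """Compare the expected spot cost with running the job on-demand."""
    spot = spot_job_cost(on_demand_rate, spot_discount, job_hours, rework_fraction)
    return spot < on_demand_rate * job_hours
```

With a hypothetical 70% discount and 20% rework, a 100-hour job at an on-demand rate of 1.00 per hour costs about 36 on spot versus 100 on-demand. Checkpointing reduces the rework fraction and widens that gap.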

Optimizing Storage Costs

Storage costs can be a significant portion of cloud expenses. Optimizing storage involves selecting the appropriate storage tiers and implementing lifecycle management policies.

- Data Tiering: Data tiering involves storing data in different storage tiers based on its access frequency and performance requirements. This allows users to balance cost and performance.

- Hot Tier: For frequently accessed data, the hot tier offers the highest performance and is typically the most expensive.

- Cool Tier: The cool tier is suitable for less frequently accessed data and offers a lower cost per gigabyte.

- Cold Tier: The cold tier is designed for infrequently accessed data and provides the lowest storage cost, usually in exchange for higher retrieval charges and slower access.

- Lifecycle Management: Lifecycle management automates the movement of data between storage tiers based on predefined policies. This ensures that data is stored in the most cost-effective tier based on its access patterns. For example, a policy might automatically move data from the hot tier to the cool tier after 30 days of inactivity.

- Techniques for Storage Optimization:

- Data Compression: Compressing data reduces storage space and can lead to cost savings.

- Data Deduplication: Data deduplication identifies and eliminates duplicate data blocks, further reducing storage requirements.

- Object Storage: Utilizing object storage for storing unstructured data can often be more cost-effective than using block storage.

- Implementation Considerations:

- Storage Class Selection: Choose the appropriate storage classes based on access frequency, performance needs, and data retention requirements.

- Lifecycle Policy Configuration: Configure lifecycle policies to automate data movement between storage tiers.

- Monitoring and Optimization: Continuously monitor storage usage and access patterns to optimize storage costs.
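
A lifecycle policy is essentially a rule that maps how long data has been idle to a storage tier. The sketch below shows that rule in isolation. The 30-day threshold comes from the example above, the 180-day threshold is an assumption, and the function name `choose_tier` is hypothetical; real providers express the same idea through their own policy configuration.

```python
from datetime import date

# Thresholds in days of inactivity. 30 matches the example policy in the
# text; 180 is an assumed value for illustration.
COOL_AFTER_DAYS = 30
COLD_AFTER_DAYS = 180


def choose_tier(last_accessed: date, today: date) -> str:
    """Return the storage tier an object should live in, based on idle time."""
    idle_days = (today - last_accessed).days
    if idle_days >= COLD_AFTER_DAYS:
        return "cold"
    if idle_days >= COOL_AFTER_DAYS:
        return "cool"
    return "hot"


print(choose_tier(date(2024, 1, 1), date(2024, 1, 15)))  # recently used
print(choose_tier(date(2024, 1, 1), date(2024, 3, 1)))   # idle for two months
```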

Monitoring and Reporting for Cost Control

Implementing robust monitoring and reporting mechanisms is crucial for maintaining a cost-aware architecture. These systems provide visibility into resource consumption, enabling proactive cost management and optimization. By continuously tracking and analyzing cost-related data, organizations can identify areas for improvement, prevent unexpected expenses, and ensure alignment with budgetary constraints.

Setting Up Monitoring Dashboards to Track Resource Consumption

Establishing effective monitoring dashboards is fundamental to gaining insights into resource utilization and associated costs. These dashboards should present data in a clear, concise, and easily digestible format, allowing stakeholders to quickly identify trends, anomalies, and potential cost-saving opportunities.

- Choose a Monitoring Tool: Select a monitoring solution that integrates well with your cloud provider (AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) or a third-party tool (Datadog, New Relic, Prometheus with Grafana). Consider factors like ease of use, scalability, cost, and feature set.

- Define Key Performance Indicators (KPIs): Identify the most relevant metrics to track. These KPIs should provide a comprehensive view of resource consumption and associated costs. Examples include CPU utilization, memory usage, storage capacity, network traffic, and the cost of individual services.

- Configure Data Collection: Configure the monitoring tool to collect the defined KPIs from your cloud resources. This may involve installing agents, setting up integrations, or enabling specific logging features. Ensure data is collected at appropriate intervals (e.g., every minute, every hour) to provide timely insights.

- Design Visualizations: Create dashboards that visualize the collected data. Use charts, graphs, and tables to present the information in an easily understandable manner. Customize dashboards to meet the needs of different stakeholders (e.g., engineers, finance teams).

- Set Up Alerts: Configure alerts to notify relevant parties when specific thresholds are exceeded or when unusual patterns are detected. Alerts can help proactively address potential cost overruns or performance issues.

- Regular Review and Refinement: Regularly review and refine your monitoring dashboards based on your needs and evolving infrastructure. Add or remove metrics, adjust alert thresholds, and update visualizations as necessary to ensure they remain relevant and effective.
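
Alerting on "unusual patterns" needs a concrete definition of unusual. One simple option, sketched below, is to flag a day whose cost exceeds the recent average by several standard deviations. This is only one possible rule; the function name `is_cost_anomaly` and the default of three standard deviations are assumptions, and monitoring tools typically ship their own anomaly detection.

```python
from statistics import mean, stdev
from typing import Sequence


def is_cost_anomaly(history: Sequence[float], today: float, sigmas: float = 3.0) -> bool:
    """Flag today's cost if it is far above the recent daily average.

    history holds the daily costs of a trailing window (for example the
    last 14 days). With fewer than two data points there is no spread to
    measure, so nothing is flagged.
    """
    if len(history) < 2:
        return False
    baseline = mean(history)
    spread = stdev(history)
    return today > baseline + sigmas * spread


recent_daily_costs = [100.0, 102.0, 98.0, 101.0, 99.0]
print(is_cost_anomaly(recent_daily_costs, today=150.0))
print(is_cost_anomaly(recent_daily_costs, today=103.0))
```

A fixed threshold is easier to explain to stakeholders, while a rule like this adapts as normal spending grows. Many teams use both.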

Examples of Cost-Related Metrics That Should Be Monitored

Monitoring a comprehensive set of cost-related metrics provides a detailed understanding of resource consumption and associated expenses. These metrics allow for identifying cost drivers, optimizing resource allocation, and making informed decisions about infrastructure investments.

- Cost per Resource: Track the cost of individual resources (e.g., EC2 instances, storage volumes, database instances) to understand the cost contribution of each component.

- Cost per Service: Monitor the total cost associated with each cloud service (e.g., compute, storage, database, networking) to identify cost hotspots and areas for optimization.

- Resource Utilization: Measure the utilization of resources (e.g., CPU utilization, memory usage, storage capacity) to identify underutilized or over-provisioned resources.

- Cost per Transaction/Operation: Calculate the cost associated with specific transactions or operations (e.g., API calls, database queries) to understand the cost efficiency of different processes.

- Reserved Instance/Commitment Utilization: Monitor the utilization of reserved instances or committed use discounts to ensure they are being used effectively and maximize cost savings.

- Data Transfer Costs: Track data transfer costs (e.g., data transfer between regions, data egress) to identify potential areas for optimization and minimize network expenses.

- Storage Costs: Monitor storage costs, including storage capacity, storage class, and data retrieval costs, to optimize storage usage and minimize expenses.

- Idle Resource Costs: Identify and track the cost of idle resources (e.g., unused instances, unattached storage volumes) to eliminate unnecessary expenses.

- Cost Trends and Forecasts: Analyze cost trends over time and generate forecasts to predict future spending and proactively manage budgets.
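
Several of these metrics are simple ratios or filters over usage data. The sketch below computes cost per request and finds idle resources from a small in-memory inventory. The `ResourceUsage` record, the sample fleet, and the 5% CPU threshold are all hypothetical; in practice the figures would come from billing exports and monitoring data.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ResourceUsage:
    name: str
    monthly_cost: float
    avg_cpu_percent: float
    requests_served: int


# Assumed cutoff below which a resource is treated as idle.
IDLE_CPU_PERCENT = 5.0


def cost_per_request(resource: ResourceUsage) -> Optional[float]:
    """Monthly cost divided by requests served, or None if nothing was served."""
    if resource.requests_served == 0:
        return None
    return resource.monthly_cost / resource.requests_served


def idle_resources(resources: List[ResourceUsage],
                   cpu_threshold: float = IDLE_CPU_PERCENT) -> List[ResourceUsage]:
    """Resources whose average CPU utilization is below the threshold."""
    return [r for r in resources if r.avg_cpu_percent < cpu_threshold]


fleet = [
    ResourceUsage("api-server", 300.0, 45.0, 1_500_000),
    ResourceUsage("old-batch-worker", 120.0, 1.2, 0),
]

idle = idle_resources(fleet)
print("Idle resources:", [r.name for r in idle])
print("Monthly cost of idle resources:", sum(r.monthly_cost for r in idle))
```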

Designing a System for Generating Regular Cost Reports and Alerts

Establishing a system for generating regular cost reports and alerts is essential for proactively managing cloud spending. These reports and alerts provide timely insights into cost performance, enabling prompt action to address potential issues and optimize resource utilization.

- Define Reporting Requirements: Determine the specific cost information that needs to be included in the reports, such as cost breakdown by service, resource, and department. Identify the frequency of reports (e.g., daily, weekly, monthly) and the intended audience.

- Automate Data Extraction and Processing: Automate the process of extracting cost data from the cloud provider’s billing system and processing it into a usable format. This may involve using APIs, scripting, or dedicated cost management tools.

- Create Report Templates: Design report templates that present cost data in a clear and concise manner. Use charts, graphs, and tables to visualize the data and highlight key trends and insights.

- Schedule Report Generation and Delivery: Schedule the automated generation and delivery of cost reports to the relevant stakeholders. Ensure reports are delivered on the agreed-upon frequency (e.g., weekly reports delivered every Monday morning).

- Implement Alerting Rules: Define alert rules based on specific cost thresholds or unusual patterns. Configure the alerting system to notify relevant parties (e.g., engineers, finance teams) when these thresholds are exceeded.

- Integrate with Communication Channels: Integrate the reporting and alerting system with communication channels such as email, Slack, or Microsoft Teams to ensure timely dissemination of cost information.

- Review and Refine Reporting and Alerting: Regularly review and refine the reporting and alerting system based on feedback from stakeholders and evolving business needs. Add or modify reports, adjust alert thresholds, and update the communication channels as necessary.

- Example: A company using AWS might set up an automated system that generates a weekly cost report showing the costs associated with each AWS service (EC2, S3, RDS, etc.). This report could be sent via email to the finance team and the engineering leads. Simultaneously, alerts can be set up in CloudWatch to notify the engineering team if the cost of EC2 instances exceeds a predefined threshold, enabling them to take corrective action immediately.
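
The core of such a reporting system is small: aggregate billing line items by service, compare the totals to thresholds, and render a summary. The sketch below shows those three steps on hard-coded data. The line items, thresholds, and function names are hypothetical, and fetching data from a billing API and delivering the report are deliberately left out.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def summarize_by_service(line_items: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Sum (service, cost) billing line items into a total per service."""
    totals: Dict[str, float] = defaultdict(float)
    for service, cost in line_items:
        totals[service] += cost
    return dict(totals)


def over_threshold(totals: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Services whose total cost exceeds their configured threshold."""
    return sorted(
        service for service, cost in totals.items()
        if service in thresholds and cost > thresholds[service]
    )


def format_report(totals: Dict[str, float]) -> str:
    """Plain-text report, most expensive service first, with a total row."""
    rows = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    lines = [f"{service:<12}{cost:>12.2f}" for service, cost in rows]
    lines.append(f"{'TOTAL':<12}{sum(totals.values()):>12.2f}")
    return "\n".join(lines)


# Hypothetical weekly line items and alert thresholds.
weekly_items = [("EC2", 120.0), ("S3", 30.5), ("EC2", 80.0), ("RDS", 60.0)]
weekly_thresholds = {"EC2": 150.0, "RDS": 100.0}

weekly_totals = summarize_by_service(weekly_items)
print(format_report(weekly_totals))
print("Alert on:", over_threshold(weekly_totals, weekly_thresholds))
```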

Cost Optimization Techniques

Effectively managing cloud costs requires a proactive approach that goes beyond simple monitoring. This involves implementing specific optimization techniques across various areas of your architecture, from database management to network configurations and code efficiency. By strategically applying these methods, you can significantly reduce your cloud spending without compromising performance or availability.

Comparing and Contrasting Database Cost Optimization Methods

Database costs often represent a significant portion of cloud spending. Several strategies can be employed to optimize these costs, each with its own strengths and weaknesses. Understanding these differences allows for informed decision-making based on specific workload requirements.

Here’s a comparison of key database cost optimization methods:

- Right-Sizing Instances: This involves selecting the appropriate instance size for your database workload. Over-provisioning leads to unnecessary costs, while under-provisioning can impact performance.

- Example: If your database consistently uses only 20% of the CPU and memory of a large instance, consider downsizing to a smaller, more cost-effective instance. This can result in significant savings, particularly over time.

- Automated Scaling: Implementing automated scaling mechanisms allows your database to dynamically adjust resources based on demand. This ensures optimal resource utilization and cost efficiency, as you only pay for what you use.

- Example: Configure your database to automatically scale up CPU and memory during peak hours and scale down during off-peak hours. This approach is particularly effective for workloads with predictable usage patterns.

- Database Selection: Choosing the right database technology for your specific needs is crucial. Consider the features, performance characteristics, and pricing models of different database options.

- Example: For a simple key-value store, a NoSQL database like Amazon DynamoDB or Azure Cosmos DB might be more cost-effective than a traditional relational database.

- Data Tiering: Move less frequently accessed data to lower-cost storage tiers. This approach helps reduce storage costs without sacrificing data availability.

- Example: Archive older, infrequently accessed data to a cold storage tier like Amazon S3 Glacier or Azure Archive Storage. This can dramatically lower storage costs compared to storing all data in a hot storage tier.

- Reserved Instances/Committed Use Discounts: Take advantage of reserved instances or committed use discounts offered by cloud providers. These options provide significant cost savings for workloads with predictable resource needs.

- Example: If you know you will be running a database instance continuously for a year, purchasing a reserved instance can result in substantial discounts compared to on-demand pricing.

- Monitoring and Optimization Tools: Utilize database monitoring and optimization tools provided by cloud providers or third-party vendors. These tools provide insights into database performance, resource utilization, and cost optimization opportunities.

- Example: Amazon RDS Performance Insights or Azure SQL Database Advisor can help identify performance bottlenecks and recommend cost-saving measures.
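
The right-sizing example above can be turned into a simple rule: pick the smallest instance size that keeps projected peak CPU under a target utilization. The sketch below assumes CPU demand scales linearly with vCPU count, which is a simplification, and uses a hypothetical size ladder rather than any provider’s real instance catalog. Memory, I/O, and connection limits would need the same treatment in practice.

```python
# Hypothetical instance ladder, smallest to largest: (name, vCPUs).
SIZES = [("small", 2), ("medium", 4), ("large", 8), ("xlarge", 16)]


def recommend_size(current: str, peak_cpu_percent: float,
                   target_percent: float = 60.0) -> str:
    """Smallest size that keeps projected peak CPU at or below the target.

    Assumes the workload needs the same absolute amount of CPU on any
    size, so demand is expressed in vCPUs and compared to each size's
    usable capacity (vCPUs * target_percent).
    """
    vcpus_by_name = dict(SIZES)
    demand_vcpus = vcpus_by_name[current] * peak_cpu_percent / 100
    for name, vcpus in SIZES:
        if demand_vcpus <= vcpus * target_percent / 100:
            return name
    return SIZES[-1][0]  # nothing fits the target; stay on the largest size


# A "large" instance peaking at 20% CPU, as in the example above.
print(recommend_size("large", peak_cpu_percent=20.0))
```

Using peak rather than average utilization, and a target below 100%, leaves headroom so that a downsize does not trade cost for performance problems.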

Reducing Network Costs through Efficient Data Transfer Strategies

Network costs can accumulate rapidly, especially when transferring large volumes of data between regions, between availability zones, or out to the internet. Implementing efficient data transfer strategies is crucial for minimizing these expenses.

Strategies for reducing network costs include:

- Data Compression: Compress data before transferring it over the network. This reduces the amount of data transmitted, thereby lowering network costs.

- Example: Use tools like gzip or Brotli to compress files before uploading them to cloud storage or transferring them between servers.

- Data Caching: Implement caching mechanisms to reduce the frequency of data transfers. This is particularly effective for frequently accessed data.

- Example: Use a Content Delivery Network (CDN) to cache static content closer to users, reducing the need to retrieve data from the origin server repeatedly.

- Data Transfer Optimization Tools: Utilize cloud provider-specific tools and services designed to optimize data transfer performance and cost.

- Example: AWS DataSync can efficiently transfer large datasets between on-premises storage and Amazon S3, optimizing bandwidth utilization and minimizing transfer times.

- Region Selection: Choose the appropriate cloud region for your workloads. Transferring data across regions incurs additional costs.

- Example: If your users are primarily located in North America, deploy your application and data in a North American region to minimize data transfer costs.

- Private Network Connectivity: Utilize private network connections, such as AWS Direct Connect or Azure ExpressRoute, to transfer data between your on-premises infrastructure and the cloud. These connections can offer lower and more predictable costs compared to transferring data over the public internet.

- Example: Connecting your on-premises data center to your cloud environment via a private network connection ensures secure and cost-effective data transfer, especially for large datasets.

- Monitoring Network Traffic: Regularly monitor network traffic patterns to identify potential bottlenecks and areas for optimization. Use cloud provider monitoring tools or third-party solutions to track data transfer volumes and costs.

- Example: Monitor data transfer volumes between different services within your cloud environment. Identify services that are generating high data transfer costs and investigate potential optimization opportunities.
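
The effect of compression on transfer volume is easy to measure before committing to it. The sketch below uses Python’s standard `gzip` module on a made-up, highly repetitive JSON log payload. Real savings depend on the data: text and JSON usually compress well, while already-compressed formats such as JPEG or video gain little.

```python
import gzip


def compression_savings(payload: bytes) -> tuple:
    """Return (original_size, compressed_size, fraction_saved) for gzip."""
    if not payload:
        raise ValueError("payload must not be empty")
    compressed = gzip.compress(payload)
    saved = 1 - len(compressed) / len(payload)
    return len(payload), len(compressed), saved


# Hypothetical sample: the same log line repeated, so it compresses very well.
sample = b'{"user_id": 12345, "event": "page_view", "path": "/home"}\n' * 1000

original_size, compressed_size, saved = compression_savings(sample)
print(f"{original_size} bytes -> {compressed_size} bytes ({saved:.0%} saved)")
```

Compression spends CPU time to save bandwidth, so it pays off most where transfer is billed per gigabyte and compute has spare capacity.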

Providing Examples of Code Optimization to Improve Resource Utilization

Code optimization plays a critical role in improving resource utilization and reducing cloud costs. Efficient code consumes fewer resources, leading to lower compute, memory, and storage expenses.

Here are examples of code optimization techniques:

- Efficient Algorithms and Data Structures: Choose the most efficient algorithms and data structures for your specific tasks. This can significantly impact the amount of resources required to execute your code.

- Example: Use a hash table lookup instead of a linear scan through a list when searching large datasets. This reduces the average lookup time from linear to constant and cuts CPU usage.

- Code Profiling and Performance Tuning: Profile your code to identify performance bottlenecks and areas for optimization. Use profiling tools to analyze CPU usage, memory allocation, and I/O operations.

- Example: Use a profiler to identify slow-running functions or inefficient code sections. Optimize these sections to improve performance and reduce resource consumption.

- Lazy Loading: Implement lazy loading to load resources only when they are needed. This can reduce initial load times and resource consumption.

- Example: Load images on a webpage only when they are visible in the viewport. This reduces the initial page load time and conserves bandwidth.

- Caching: Implement caching mechanisms to store frequently accessed data or results. This reduces the need to recompute or retrieve data repeatedly.

- Example: Cache the results of computationally expensive operations or database queries to improve response times and reduce server load.

- Connection Pooling: Utilize connection pooling to reuse database connections. This reduces the overhead of establishing and tearing down database connections repeatedly.

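- Example: Configure a fixed-size connection pool in your application so that request handlers borrow an open connection and return it afterwards, rather than opening a new one per request. This improves database performance and caps the number of connections the database must serve.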

- Minimize Resource Consumption: Write code that minimizes resource consumption. This includes using efficient memory management techniques, avoiding unnecessary object creation, and optimizing I/O operations.

- Example: Use object pooling to reuse objects instead of creating new ones repeatedly. This can reduce memory allocation and garbage collection overhead.
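
Two of the techniques above, hash-based lookups and caching, can be illustrated in a few lines. The sketch below is a toy example: `count_known_ids` replaces repeated list scans with a set, and `shipping_quote` is a hypothetical stand-in for an expensive computation whose results are memoized with `functools.lru_cache`.

```python
from functools import lru_cache
from typing import Iterable


def count_known_ids(incoming: Iterable[int], known: Iterable[int]) -> int:
    """Count how many incoming IDs are already known.

    Building the set costs one pass over `known`; after that each
    membership test is a constant-time hash lookup on average, instead
    of a scan through a list.
    """
    known_set = set(known)
    return sum(1 for item in incoming if item in known_set)


@lru_cache(maxsize=1024)
def shipping_quote(region: str, weight_kg: int) -> float:
    """Stand-in for an expensive calculation or remote call (hypothetical)."""
    base = {"eu": 5.0, "us": 7.0}.get(region, 10.0)
    return base + 0.5 * weight_kg


print(count_known_ids([1, 2, 3, 9], [2, 3, 4]))
print(shipping_quote("eu", 2))  # computed
print(shipping_quote("eu", 2))  # served from the cache
print(shipping_quote.cache_info())
```

Caching only helps when the same inputs recur and the result stays valid, so cached values that can go stale need an expiry or invalidation strategy.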

The Role of DevOps in Cost-Awareness

DevOps practices are crucial for achieving a cost-aware architecture by streamlining development and operational processes. This integration enables teams to proactively manage and optimize cloud spending. By fostering collaboration and automation, DevOps empowers organizations to make informed decisions about resource allocation and utilization, ultimately leading to significant cost savings.

How DevOps Practices Contribute to Cost Efficiency

DevOps promotes a culture of continuous improvement and collaboration, which directly benefits cost management. The focus on automation, monitoring, and feedback loops enables organizations to identify and address cost inefficiencies quickly. This proactive approach minimizes waste and ensures resources are utilized effectively.

Integrating Cost Considerations into the CI/CD Pipeline

Integrating cost considerations into the Continuous Integration and Continuous Delivery (CI/CD) pipeline is a core practice in DevOps. This involves incorporating cost checks at various stages of the pipeline, from code commit to deployment. By doing so, teams can identify and address potential cost issues early in the development lifecycle, preventing costly mistakes from reaching production. For example, integrating cost analysis tools within the CI/CD pipeline can automatically flag code changes that could increase cloud spending.

These tools might analyze infrastructure-as-code templates, providing cost estimates before deployment. Similarly, automated testing can include cost-related metrics, ensuring that new features or updates do not negatively impact the overall budget. This integration transforms the CI/CD pipeline into a cost-aware system, promoting financial accountability throughout the development process.
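
A cost check in a pipeline can be as simple as comparing the estimated monthly cost before and after a change and failing the build when the increase exceeds an agreed limit. The sketch below shows only that comparison. The function name `cost_gate` and the 10% default limit are assumptions, and the two cost figures are presumed to come from whatever estimation tool the team already uses.

```python
from typing import Tuple


def cost_gate(current_monthly: float, proposed_monthly: float,
              max_increase_percent: float = 10.0) -> Tuple[bool, str]:
    """Decide whether a change's estimated cost increase is acceptable.

    Returns (passed, message). A pipeline step would fail the build
    when passed is False.
    """
    if current_monthly <= 0:
        raise ValueError("current_monthly must be positive")
    increase_percent = (proposed_monthly - current_monthly) / current_monthly * 100
    passed = increase_percent <= max_increase_percent
    verdict = "PASS" if passed else "FAIL"
    message = (
        f"{verdict}: estimated monthly cost changes by {increase_percent:+.1f}% "
        f"(limit +{max_increase_percent:.1f}%)"
    )
    return passed, message


# Hypothetical estimates for the current and proposed infrastructure.
ok, summary = cost_gate(current_monthly=1000.0, proposed_monthly=1200.0)
print(summary)
```

In a real pipeline the step would exit with a non-zero status when the gate fails, and teams often allow an explicit override so that intentional cost increases can still be approved.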

Automation Techniques for Cost Management

Automation is key to effective cost management within a DevOps framework. Implementing automated processes reduces manual effort and improves accuracy in tracking and optimizing cloud spending.

- Automated Resource Provisioning and De-provisioning: Automate the creation and deletion of cloud resources based on application needs. This ensures that resources are only provisioned when required and de-provisioned when no longer in use, preventing unnecessary costs. For example, using Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation allows for the automated deployment and teardown of entire environments. This can be particularly useful for development and testing environments, where resources are only needed for a limited time.

- Automated Cost Monitoring and Alerting: Implement automated monitoring systems that track cloud spending in near real time. Set up alerts to notify teams when spending exceeds predefined thresholds or when anomalies are detected. This proactive approach enables timely intervention and prevents unexpected cost overruns. For instance, cloud providers offer built-in monitoring services, such as AWS CloudWatch or Azure Monitor, which can be configured to send alerts based on spending patterns.

- Automated Resource Optimization: Utilize automation to identify and implement resource optimization strategies, such as right-sizing instances, scheduling resource usage, and automatically scaling resources based on demand. For example, tools can automatically detect idle or underutilized virtual machines and suggest or implement resizing or termination actions.

- Automated Reporting and Analysis: Automate the generation of cost reports and dashboards to provide visibility into cloud spending. These reports should include key metrics, such as cost per service, cost per application, and cost trends over time. This data enables informed decision-making and facilitates continuous cost optimization efforts. Many cloud providers offer built-in reporting tools, but third-party tools can provide more detailed analysis and custom reporting capabilities.

- Automated Policy Enforcement: Implement automated policies to enforce cost management best practices, such as tagging resources, restricting instance types, and limiting spending on certain services. For example, policies can be implemented to automatically tag all newly provisioned resources with cost allocation tags, ensuring accurate cost tracking.
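
Policy enforcement usually starts with an audit. The sketch below checks an inventory of resources against a set of required cost allocation tags and reports what is missing. The tag names, resource IDs, and inventory structure are hypothetical; a real implementation would read the inventory from the cloud provider and might block or auto-tag non-compliant resources.

```python
from typing import Dict, List, Set

# Assumed tagging policy for illustration.
REQUIRED_TAGS = frozenset({"owner", "cost-center", "environment"})


def missing_tags(tags: Dict[str, str]) -> Set[str]:
    """Required tags that are absent or empty on a single resource."""
    present = {key for key, value in tags.items() if value}
    return set(REQUIRED_TAGS - present)


def audit_inventory(inventory: Dict[str, Dict[str, str]]) -> Dict[str, List[str]]:
    """Map each non-compliant resource ID to its sorted list of missing tags."""
    violations: Dict[str, List[str]] = {}
    for resource_id, tags in inventory.items():
        missing = missing_tags(tags)
        if missing:
            violations[resource_id] = sorted(missing)
    return violations


inventory = {
    "vm-001": {"owner": "team-a", "cost-center": "1234", "environment": "prod"},
    "vm-002": {"owner": "team-b"},
    "disk-007": {"owner": "", "cost-center": "1234", "environment": "dev"},
}

for resource_id, missing in audit_inventory(inventory).items():
    print(f"{resource_id} is missing tags: {', '.join(missing)}")
```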

Future Trends in Cost-Aware Architecture

The landscape of cloud cost management is constantly evolving, driven by technological advancements and the increasing complexity of cloud environments. Understanding these emerging trends is crucial for organizations aiming to optimize their cloud spending and maximize their return on investment. This section explores the key trends shaping cost-aware architecture, examines the impact of serverless computing, and closes with a five-year outlook.

Emerging Trends in Cloud Cost Management

Cloud cost management is moving beyond simple tracking and reporting. Organizations are increasingly seeking proactive and automated solutions to control their cloud expenses. Several trends are gaining momentum.

- AI-Powered Cost Optimization: Artificial intelligence and machine learning are being leveraged to analyze vast datasets of cloud usage, identify cost inefficiencies, and automate optimization recommendations. These systems can predict future spending patterns, detect anomalies, and proactively suggest resource adjustments to minimize costs. For example, such a system can identify underutilized instances and recommend resizing or termination.

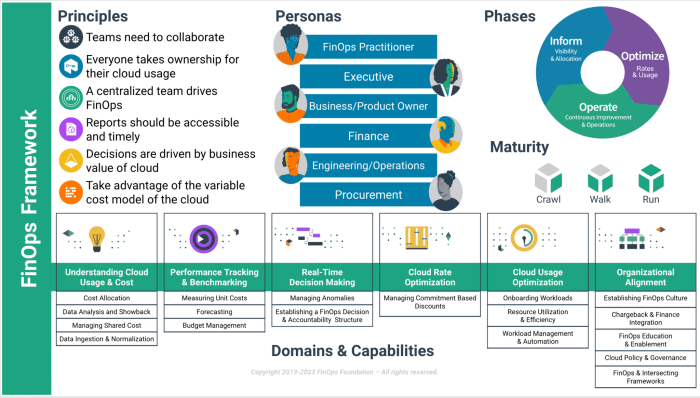

- FinOps Automation: The adoption of FinOps practices is driving the need for greater automation. Tools and platforms are emerging that automate cost allocation, budget tracking, and policy enforcement. This includes automating the shutdown of non-production environments outside of business hours or automatically scaling resources based on demand.

- Multi-Cloud Cost Management: As organizations adopt multi-cloud strategies, the complexity of managing costs across different providers increases. Solutions that provide a unified view of costs, regardless of the cloud provider, are becoming essential. These tools enable consistent cost tracking, reporting, and optimization across various cloud environments.

- Serverless Optimization Tools: The rise of serverless computing has created new challenges and opportunities for cost optimization. Specialized tools are emerging to monitor and optimize serverless function invocations, identify inefficient code, and suggest resource adjustments to minimize costs. These tools analyze function performance and cost to help developers write more efficient code.

- Sustainability and Green IT: There is a growing focus on the environmental impact of cloud computing. Organizations are seeking ways to reduce their carbon footprint by optimizing their cloud usage. This includes selecting cloud providers with sustainable practices, optimizing resource utilization to reduce energy consumption, and implementing policies that encourage energy-efficient coding practices.

Impact of Serverless Computing on Cost Optimization

Serverless computing offers significant potential for cost optimization, but it also introduces new complexities. Understanding the cost model of serverless services is crucial for effective cost management.

- Pay-per-Use Pricing: Serverless platforms typically operate on a pay-per-use model, where users are charged only for the resources they consume. This can lead to significant cost savings compared to traditional infrastructure, especially for workloads with variable demand. For example, a website with infrequent traffic may only pay for the function invocations it receives, resulting in minimal costs during periods of low activity.

- Granular Resource Allocation: Serverless platforms often provide fine-grained control over resource allocation, allowing users to optimize the resources used by their functions. By tuning parameters such as memory and execution time, developers can minimize the cost of each function invocation.

- Automated Scaling: Serverless platforms automatically scale resources based on demand, eliminating the need for manual capacity planning. This can help to avoid over-provisioning and ensure that resources are only consumed when needed.

- Monitoring and Optimization Tools: Specialized monitoring and optimization tools are essential for managing the costs of serverless applications. These tools provide insights into function performance, invocation patterns, and cost metrics, allowing developers to identify and address cost inefficiencies. For example, tools can help identify functions that are invoked frequently but do little useful work, or that are allocated far more memory than they use, making them candidates for optimization.

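- Cold Start Considerations: Cold starts, where a new function instance must initialize before it can handle a request, can impact performance and potentially increase costs. Optimizing code and using techniques such as provisioned concurrency can help mitigate the impact of cold starts, although provisioned capacity is itself billed while idle and should be weighed against the latency benefit.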
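
Pay-per-use pricing for functions is commonly modeled as a compute charge proportional to memory and duration (GB-seconds) plus a per-request charge. The sketch below implements that model so different configurations can be compared. The prices are hypothetical placeholders, not any provider’s actual rates, and free tiers and provisioned capacity are ignored.

```python
def invocation_cost(invocations: int, avg_duration_ms: float, memory_mb: int,
                    price_per_gb_second: float,
                    price_per_million_requests: float) -> float:
    """Estimated monthly cost of a function under a GB-second pricing model."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return compute_cost + request_cost


# Hypothetical prices for illustration only.
PRICE_PER_GB_SECOND = 0.00002
PRICE_PER_MILLION_REQUESTS = 0.20

# Same workload at two memory settings. More memory often shortens the
# run time, so the cheaper option is not obvious without measuring.
small = invocation_cost(1_000_000, 400, 512, PRICE_PER_GB_SECOND, PRICE_PER_MILLION_REQUESTS)
large = invocation_cost(1_000_000, 150, 1024, PRICE_PER_GB_SECOND, PRICE_PER_MILLION_REQUESTS)
print(f"512 MB at 400 ms: {small:.2f}")
print(f"1024 MB at 150 ms: {large:.2f}")
```

Because cost depends on the product of memory and duration, a larger memory setting can be cheaper overall if it shortens execution enough, which is why tuning should be based on measured durations.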

The Evolution of Cost-Aware Architecture: A Five-Year Outlook

Year 1-2: Consolidation and Automation. Focus on automating basic cost management tasks, such as resource tagging, budget alerts, and basic rightsizing recommendations. Increased adoption of FinOps principles and tools.

Year 3-4: AI-Driven Optimization and Predictive Analytics. AI and machine learning become integral to cost optimization. Predictive analytics are used to forecast spending, identify anomalies, and automate resource adjustments. Increased focus on multi-cloud cost management and serverless optimization.

Year 5: Autonomous Cost Management and Sustainability. Cost management becomes largely autonomous, with systems automatically optimizing resource allocation and making real-time adjustments based on performance and cost data. Emphasis on sustainable cloud practices, including carbon footprint reduction and green IT initiatives.

Epilogue

In conclusion, embracing a cost-aware architecture is no longer a luxury but a necessity in today’s dynamic technological landscape. By integrating financial considerations into every phase of the software development lifecycle, organizations can unlock significant cost savings, improve resource utilization, and enhance overall operational efficiency. From understanding the fundamentals to mastering advanced optimization techniques, the journey towards a cost-aware architecture is a continuous process of learning and adaptation.

The future of cloud computing hinges on the ability to balance innovation with fiscal responsibility, and cost-aware architecture provides the blueprint for achieving this balance.

Clarifying Questions

What is the primary goal of a cost-aware architecture?

The primary goal is to minimize the total cost of ownership (TCO) of a system or application while maintaining or improving its performance, scalability, and reliability.

How does cost-aware architecture differ from traditional architecture?

Traditional architecture often prioritizes functionality and performance without explicitly considering cost. Cost-aware architecture integrates cost considerations into the design, development, and operational phases, leading to more efficient resource utilization and reduced spending.

What are some common challenges in implementing a cost-aware architecture?

Challenges include the complexity of cloud pricing models, the need for cross-functional collaboration, and the difficulty in accurately forecasting resource needs. Monitoring and alerting systems must also be carefully designed to detect and address cost anomalies promptly.

How can I measure the success of a cost-aware architecture?

Success can be measured by tracking key performance indicators (KPIs) such as reduced cloud spending, improved resource utilization, and the alignment of costs with business value. Regular cost reports and analysis are crucial for monitoring and optimizing costs.

What skills are required to work with cost-aware architecture?

Skills include a strong understanding of cloud computing, resource management, DevOps principles, and cost optimization techniques. Data analysis, monitoring, and reporting skills are also essential.