This guide gives an overview of cloud native applications: the core concepts, the technologies behind them, and the ways they change how businesses build and run software. It covers the fundamental principles, the architecture and development practices, and the advantages cloud native applications bring to organizations of all sizes.

Cloud native applications are designed to leverage the full potential of the cloud computing model. They are built to be scalable, resilient, and easily adaptable to changing business needs. This involves a shift in mindset, embracing methodologies like microservices, DevOps, and CI/CD, all working together to enable faster development cycles, improved efficiency, and enhanced user experiences. Through this guide, you’ll gain a solid understanding of how cloud native applications are transforming the landscape of software development.

Defining Cloud Native Applications

Cloud native applications represent a paradigm shift in how software is designed, built, and deployed. They are specifically engineered to take full advantage of the cloud computing model, focusing on agility, scalability, and resilience. This approach enables organizations to respond rapidly to changing market demands and deliver value to users more efficiently.

Core Characteristics of Cloud Native Applications

Cloud native applications are defined by several key characteristics. These features work in concert to provide the benefits of cloud computing, such as improved resource utilization, faster deployment cycles, and enhanced application reliability.

- Microservices Architecture: Cloud native applications are typically built using a microservices architecture. This involves breaking down the application into small, independently deployable services that communicate with each other via APIs. This architecture promotes modularity, allowing teams to work independently on different parts of the application, and enabling easier scaling of individual services. For example, a large e-commerce platform might have separate microservices for user authentication, product catalog management, shopping cart functionality, and payment processing.

- Containerization: Containers, such as those created with Docker, are used to package and isolate application code and its dependencies. This ensures that the application runs consistently across different environments, from development to production. Containerization simplifies deployment and management, and makes it easier to scale applications horizontally.

- Dynamic Orchestration: Orchestration tools like Kubernetes are used to manage and automate the deployment, scaling, and management of containerized applications. Kubernetes automates tasks such as container deployment, scaling, and self-healing, ensuring that applications remain available and resilient even in the face of failures.

- DevOps Practices: Cloud native applications are built and managed using DevOps principles, which emphasize collaboration, automation, and continuous delivery. This approach enables faster release cycles, improved quality, and reduced operational costs. DevOps integrates development and operations teams to streamline the software development lifecycle.

- Automated CI/CD Pipelines: Continuous Integration and Continuous Delivery (CI/CD) pipelines automate the build, test, and deployment processes. This allows for frequent releases of new features and bug fixes, improving the agility of the development process. Automation minimizes manual intervention, reducing the risk of errors and accelerating the time to market.

- Infrastructure as Code (IaC): IaC involves managing infrastructure through code, allowing for automated provisioning and management of cloud resources. This enables consistent and repeatable infrastructure deployments, and facilitates version control and collaboration. Tools like Terraform are commonly used for IaC.

- Observability: Cloud native applications are designed with observability in mind, which involves collecting and analyzing data about the application’s performance and behavior. This includes logging, monitoring, and tracing, which help to identify and resolve issues quickly. Observability tools provide insights into the health and performance of the application.

Examples of Cloud Native Applications and Their Use Cases

Cloud native principles are applied across various industries and use cases. These examples illustrate the versatility and benefits of the cloud native approach.

- E-commerce Platforms: E-commerce platforms like Amazon, Shopify, and Etsy leverage cloud native technologies to handle large volumes of traffic, process transactions securely, and provide a seamless user experience. Microservices enable rapid scaling of specific features during peak seasons like Black Friday.

- Streaming Services: Streaming services like Netflix and Spotify utilize cloud native architectures to deliver content to millions of users globally. They rely on microservices for content delivery, recommendation engines, and user authentication, ensuring high availability and scalability. Netflix, for example, has a complex architecture with hundreds of microservices.

- Online Gaming: Online game developers employ cloud native applications to support large numbers of concurrent users, manage game servers, and provide real-time gameplay experiences. Technologies like Kubernetes and containerization enable them to scale their infrastructure dynamically based on player demand.

- Financial Services: Financial institutions use cloud native applications for various purposes, including fraud detection, algorithmic trading, and customer relationship management. Cloud native technologies allow them to process large amounts of data in real time and provide secure and reliable services.

- Healthcare Applications: Healthcare providers use cloud native technologies for systems such as electronic health records (EHR), telemedicine platforms, and medical imaging. These applications facilitate secure data storage, enable remote access, and improve patient care.

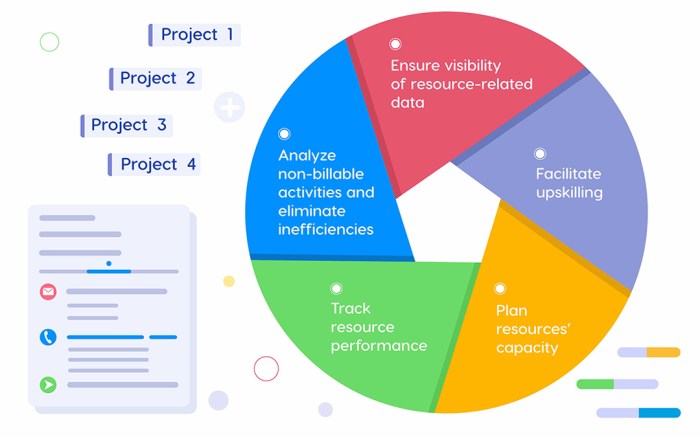

Key Components of a Cloud Native Application (Diagram)

A simplified diagram illustrates the interaction of key components in a typical cloud native application.

The diagram depicts a cloud native application’s architecture, showcasing its components and their interactions. At the center, a containerized application (represented by a container icon) is running. Surrounding the container are several key components:

- Users: Represented by a user icon, these are the end-users accessing the application through a web browser or mobile app.

- API Gateway: Illustrated by a gateway icon, this acts as the entry point for all user requests, routing them to the appropriate microservices.

- Microservices: Shown as multiple, distinct service icons (e.g., authentication, product catalog, payment processing), these represent the individual, independently deployable components of the application.

- Service Mesh: Represented by a mesh-like icon, this handles service-to-service communication, including traffic management, security, and observability.

- Container Orchestration (Kubernetes): Displayed as a Kubernetes logo, this component manages the deployment, scaling, and management of the containerized application.

- CI/CD Pipeline: Depicted by a pipeline icon, this automates the build, test, and deployment processes.

- Monitoring and Logging: Represented by a graph and log icon, this collects and analyzes data about the application’s performance and behavior.

- Infrastructure as Code (IaC): Displayed by a code icon, this manages the infrastructure through code, enabling automated provisioning and management of cloud resources.

- Cloud Provider: Illustrated by a cloud icon, this provides the underlying infrastructure, including compute, storage, and networking resources.

The arrows in the diagram indicate the flow of data and communication between the components. Users interact with the API gateway, which then routes requests to the appropriate microservices. The service mesh manages the communication between microservices. Kubernetes orchestrates the containers, and the CI/CD pipeline automates the deployment process. Monitoring and logging tools provide insights into the application’s performance, and IaC manages the infrastructure.
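The routing step performed by the API gateway can be sketched in a few lines. This is a minimal illustration, not a production gateway: the route table, the path prefixes, and the service names are all hypothetical, and a real gateway would also handle authentication, rate limiting, and forwarding of the actual request.

```python
from typing import Optional

# Hypothetical route table: URL path prefix -> microservice that owns it.
ROUTES = {
    "/auth": "authentication-service",
    "/products": "product-catalog-service",
    "/payments": "payment-service",
}


def resolve_service(path: str) -> Optional[str]:
    """Return the service for the longest matching path prefix, or None."""
    matches = [
        prefix for prefix in ROUTES
        if path == prefix or path.startswith(prefix + "/")
    ]
    if not matches:
        return None  # a real gateway would answer with HTTP 404 here
    return ROUTES[max(matches, key=len)]


print(resolve_service("/products/42"))
print(resolve_service("/unknown"))
```

Matching on a whole path segment (`prefix + "/"`) keeps a request such as `/authx` from being routed to the authentication service by accident.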

Core Principles of Cloud Native Development

Cloud native development hinges on a set of core principles that enable organizations to build and run applications that are resilient, scalable, and easily managed. These principles emphasize agility, automation, and the effective utilization of cloud resources. Embracing these principles allows businesses to rapidly innovate and respond to changing market demands.

Microservices Architecture in Cloud Native Applications

Microservices architecture is a cornerstone of cloud native development. It involves building applications as a collection of small, independently deployable services. Each service focuses on a specific business capability and communicates with other services through well-defined APIs.

- Independent Deployment: Each microservice can be deployed and updated independently, without affecting other parts of the application. This allows for faster release cycles and reduces the risk associated with changes. For example, an e-commerce platform might have separate microservices for product catalog, user authentication, and payment processing, each deployable independently.

- Technology Diversity: Microservices can be built using different programming languages, frameworks, and technologies, optimized for their specific tasks. This flexibility allows teams to choose the best tools for the job and leverage the latest advancements.

- Scalability and Resilience: Individual microservices can be scaled independently based on demand. If one service experiences high traffic, only that service needs to be scaled, not the entire application. Similarly, the failure of one service doesn’t necessarily bring down the entire application; other services can continue to function.

- Enhanced Agility: Smaller, more focused teams can develop and deploy microservices more quickly. This increased agility allows organizations to respond faster to market changes and customer needs.

DevOps Practices in Cloud Native Development

DevOps practices are essential for enabling the continuous delivery and operation of cloud native applications. DevOps emphasizes collaboration between development and operations teams, automating processes, and monitoring applications throughout their lifecycle.

- Continuous Integration and Continuous Delivery (CI/CD): CI/CD pipelines automate the build, testing, and deployment of code changes. This enables frequent and reliable releases, reducing the time it takes to deliver new features and bug fixes to users. Tools like Jenkins, GitLab CI, and CircleCI are commonly used for CI/CD.

- Infrastructure as Code (IaC): IaC involves managing infrastructure through code, allowing for automated provisioning, configuration, and scaling of resources. This ensures consistency and repeatability, making it easier to manage complex cloud environments. Tools like Terraform and AWS CloudFormation are examples of IaC solutions.

- Monitoring and Observability: Comprehensive monitoring and observability are crucial for understanding application behavior and identifying issues. This includes collecting metrics, logs, and traces to gain insights into performance, health, and user experience. Tools like Prometheus, Grafana, and the ELK stack (Elasticsearch, Logstash, Kibana) are frequently employed.

- Automation: Automating repetitive tasks, such as deployments, scaling, and backups, frees up development and operations teams to focus on more strategic initiatives. Automation reduces the risk of human error and improves efficiency.

Benefits of Using Containers in Cloud Native Applications

Containers, such as those managed by Docker and Kubernetes, are a key technology for building and deploying cloud native applications. They provide a consistent and portable environment for running applications, simplifying deployment and management.

| Benefit | Description | Example | Impact |
|---|---|---|---|
| Portability | Containers package an application and its dependencies into a single unit that can run consistently across different environments. | A containerized application built on a developer’s laptop can be deployed to a testing environment, and then to production, without modification. | Reduced “works on my machine” issues and streamlined deployment. |
| Efficiency | Containers share the host operating system kernel, making them lightweight and resource-efficient compared to virtual machines. | A single server can run many more containerized applications than virtual machines, maximizing resource utilization. | Lower infrastructure costs and improved scalability. |
| Scalability | Containers can be easily scaled up or down based on demand, using orchestration tools like Kubernetes. | Kubernetes can automatically spin up or down container instances based on CPU usage or other metrics. For instance, during a flash sale, the number of containers handling product searches can automatically increase. | Improved application responsiveness and ability to handle traffic spikes. |
| Isolation | Containers isolate applications from each other, preventing conflicts and ensuring that issues in one application do not affect others. | If one containerized service crashes, it does not take down other services running on the same host. | Enhanced application resilience and reduced downtime. |
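The metric-based scaling mentioned in the Scalability row can be made concrete with a little arithmetic. The sketch below follows the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, `ceil(currentReplicas * currentMetric / targetMetric)`. The function name and the default bounds are assumptions chosen for illustration, and the real autoscaler also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Estimate the replica count needed to bring the metric back to target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds, as an autoscaler would.
    return max(min_replicas, min(max_replicas, raw))


# 3 pods averaging 90% CPU against a 60% target
print(desired_replicas(3, 90, 60))
# 4 pods averaging 30% CPU against a 60% target
print(desired_replicas(4, 30, 60))
```

In the flash-sale example, rising CPU usage on the product search pods pushes the ratio above 1, so the computed replica count grows until the average returns to the target or the upper bound is reached.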

Microservices Architecture

Microservices architecture is a crucial element of cloud-native application development. This approach structures an application as a collection of loosely coupled services, which are independently deployable, and organized around business capabilities. This contrasts with the traditional monolithic approach where all functionalities are bundled into a single application. This shift offers significant advantages in terms of scalability, agility, and resilience.

Advantages of Using a Microservices Architecture

Microservices architecture provides several key advantages over monolithic applications, making it a preferred choice for cloud-native deployments. These benefits contribute to improved development velocity, system resilience, and overall application scalability.

- Independent Deployability: Each microservice can be deployed and updated independently without affecting other parts of the application. This accelerates the release cycle and reduces the risk of widespread failures. For example, a change to the “user profile” service doesn’t require redeploying the entire e-commerce platform.

- Technology Diversity: Different microservices can be built using different technologies, programming languages, and frameworks, allowing teams to choose the best tools for the job. This fosters innovation and enables the use of specialized technologies. For example, one microservice might be written in Python for machine learning tasks, while another uses Java for high-performance processing.

- Scalability: Microservices can be scaled independently based on their specific resource needs. This allows for efficient resource allocation and prevents over-provisioning. A high-traffic service, like a product catalog, can be scaled horizontally without impacting less-used services, such as order history.

- Fault Isolation: If one microservice fails, it doesn’t necessarily bring down the entire application. This improves system resilience and availability. For instance, a failure in the “payment processing” service only affects payment transactions, not the entire shopping experience.

- Improved Developer Productivity: Smaller, more focused services are easier for developers to understand, maintain, and debug. This leads to faster development cycles and reduced development costs. Small teams can focus on specific services, leading to increased specialization and efficiency.

Common Patterns for Designing Microservices

Designing effective microservices involves adhering to several established patterns. These patterns address common challenges in microservice architecture, such as service discovery, data consistency, and API gateway implementation.

- API Gateway: An API gateway acts as a single entry point for all client requests, routing them to the appropriate microservices. It can handle authentication, authorization, rate limiting, and other cross-cutting concerns. An example of an API gateway is a reverse proxy like Nginx or a dedicated API management solution.

- Service Discovery: Microservices dynamically discover each other’s locations, typically using a service registry. This allows services to find and communicate with each other even as their instances change. Examples include tools like Consul, etcd, or Kubernetes service discovery.

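- Circuit Breaker: A circuit breaker monitors calls to a remote service and prevents cascading failures. If the service starts failing or becomes unavailable, the circuit breaker stops sending requests to it for a period of time, which protects the caller from tying up resources on calls that are likely to fail and gives the failing service time to recover. Libraries like Resilience4j implement the circuit breaker pattern; Netflix’s Hystrix popularized it but is now in maintenance mode.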

- Eventual Consistency: Data consistency across microservices is often achieved through eventual consistency, where changes are propagated asynchronously. This allows for greater scalability and fault tolerance. For example, a change in the “user profile” service might trigger an event that updates the “order history” service.

- Database per Service: Each microservice typically has its own database, enforcing loose coupling and allowing services to choose the database technology best suited for their needs. This prevents a single point of failure and allows for independent scaling of each database.
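Of the patterns above, the circuit breaker is the easiest to show in code. The following is a minimal single-threaded sketch, not the API of Hystrix or Resilience4j: the class name, the thresholds, and the injectable `clock` parameter are assumptions made for illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the service."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock          # injectable so the timing can be tested
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call rejected")
            # Timeout elapsed: half-open, so one trial call is let through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            half_open = self.opened_at is not None
            if half_open or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller would wrap each remote request, for example `breaker.call(fetch_inventory, product_id)` with a hypothetical `fetch_inventory` function, and treat `CircuitOpenError` as a signal to fall back to a cached or default response.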

Communication Methods Between Microservices

Microservices communicate with each other using various methods, each with its own trade-offs. Choosing the right communication method depends on the specific requirements of the application, including performance, reliability, and coupling.

- Synchronous Communication (REST): Microservices can communicate synchronously using RESTful APIs over HTTP. This is suitable for request-response interactions, where a service needs an immediate response from another service.

- Asynchronous Communication (Message Queues): Microservices can communicate asynchronously using message queues like Kafka or RabbitMQ. This is ideal for decoupling services and handling high-volume, event-driven communication. One service publishes a message to a queue, and other services consume the message asynchronously.

- gRPC: gRPC is a high-performance, open-source remote procedure call (RPC) framework that runs over HTTP/2 and uses Protocol Buffers for data serialization. It’s well suited to internal service-to-service communication and often offers lower latency and higher throughput than JSON-based REST.

- Event-Driven Architecture (EDA): Services publish events when state changes occur, and other services subscribe to these events to react accordingly. This promotes loose coupling and enables building highly scalable and resilient systems.

Example: E-commerce Platform

Consider an e-commerce platform built using microservices. A customer places an order. This triggers a series of interactions between various microservices. The “Order Service” receives the order and creates an order record. It then communicates with the “Inventory Service” (synchronously via REST) to check product availability.

If the products are available, the “Order Service” updates the order status. The “Payment Service” (synchronously via REST) is then contacted to process the payment. Once the payment is confirmed, the “Order Service” publishes an event (asynchronously via a message queue) indicating a new order has been placed. This event is consumed by the “Shipping Service” and the “Notification Service” to initiate the shipping process and send order confirmation notifications to the customer.

The “Recommendation Service” might also be triggered to update product recommendations based on the new order. In this scenario, different communication methods are used depending on the requirements. For example, a synchronous REST call is suitable for the real-time inventory check, while an asynchronous message queue ensures that the shipping process is not directly coupled to the order placement.
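The asynchronous half of this flow can be sketched with an in-memory event bus. This is only a stand-in for a real broker such as Kafka or RabbitMQ: the `EventBus` class, the `order.placed` topic name, and the handler messages are hypothetical, and delivery here happens when `drain()` is called rather than continuously.

```python
from collections import defaultdict


class EventBus:
    """In-memory stand-in for a message broker."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self._queue = []

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # The publisher only enqueues; it never waits for consumers.
        self._queue.append((topic, event))

    def drain(self):
        """Deliver queued events to every subscriber of their topic."""
        while self._queue:
            topic, event = self._queue.pop(0)
            for handler in self._subscribers[topic]:
                handler(event)


bus = EventBus()
log = []
bus.subscribe("order.placed",
              lambda e: log.append(f"shipping: prepare order {e['order_id']}"))
bus.subscribe("order.placed",
              lambda e: log.append(f"notification: confirm order {e['order_id']}"))


def place_order(order_id):
    # The synchronous inventory and payment calls would happen here.
    bus.publish("order.placed", {"order_id": order_id})
    return "accepted"


status = place_order(1001)
bus.drain()
print(status, log)
```

The Order Service returns as soon as the event is queued, so a slow or unavailable Shipping Service delays shipping but does not block order placement.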

Containers and Orchestration

Cloud native applications thrive on efficient deployment and management, and containers and orchestration play a crucial role in achieving these goals. These technologies provide the foundation for portability, scalability, and resilience, allowing applications to run consistently across different environments. This section will delve into the specifics of containers and orchestration within the cloud native context.

The Role of Containers in Cloud Native Applications

Containers are a critical component of cloud native applications, providing a lightweight and portable way to package and run software. They encapsulate an application and all its dependencies – code, runtime, system tools, system libraries, and settings – into a single, self-contained unit. This approach offers significant advantages over traditional deployment methods.

- Portability: Containers help an application run consistently across environments that provide a compatible container runtime, largely independent of the underlying infrastructure. This “build once, run anywhere” capability simplifies development, testing, and deployment.

- Efficiency: Containers share the host operating system’s kernel, making them more lightweight and resource-efficient than virtual machines. This leads to faster startup times and reduced resource consumption.

- Isolation: Containers isolate applications from each other, preventing conflicts and ensuring that a problem in one application does not affect others. This improves security and stability.

- Immutability: Container images are immutable, meaning they cannot be changed after they are created. This simplifies version control and rollback processes.

- Scalability: Containers can be easily scaled up or down to meet changing demands. Orchestration tools automate this process.

Deploying a Simple Application Using Kubernetes

Kubernetes (K8s) is the leading container orchestration platform, automating the deployment, scaling, and management of containerized applications. Deploying a simple application with Kubernetes involves several key steps. This example will demonstrate the deployment of a basic “Hello World” application using a container image.

- Prerequisites:

  - A Kubernetes cluster: This can be a local cluster (e.g., Minikube, kind) or a managed Kubernetes service (e.g., Google Kubernetes Engine, Amazon Elastic Kubernetes Service, Azure Kubernetes Service).

  - kubectl: The command-line tool for interacting with the Kubernetes cluster.

  - A container image: This example assumes you have a container image available in a container registry. A simple “Hello World” application can be built using a Dockerfile.

- Create a Deployment: A Deployment manages the desired state for your application, including the number of replicas (instances) to run. Create a deployment configuration file (e.g., `hello-world-deployment.yaml`):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world-deployment
  labels:
    app: hello-world
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
        - name: hello-world-container
          image: your-image-repository/hello-world:latest  # Replace with your image
          ports:
            - containerPort: 8080
```

- Apply the Deployment: Use `kubectl` to apply the deployment configuration:

```bash
kubectl apply -f hello-world-deployment.yaml
```

- Create a Service: A Service provides a stable IP address and DNS name for accessing the application. Create a service configuration file (e.g., `hello-world-service.yaml`):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-world-service
spec:
  selector:
    app: hello-world
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: LoadBalancer  # Use NodePort or ClusterIP for local testing
```

- Apply the Service: Use `kubectl` to apply the service configuration:

```bash
kubectl apply -f hello-world-service.yaml
```

- Access the Application: Obtain the external IP address of the service (if using a LoadBalancer) and access the application through a web browser.

```bash
kubectl get service hello-world-service
```

This process outlines the fundamental steps involved in deploying a containerized application on Kubernetes. The actual commands and configurations might vary slightly depending on the specific application and the Kubernetes environment.
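When many similar services need to be deployed, the Deployment from the walkthrough above can also be generated programmatically rather than written by hand. The sketch below builds the same manifest as a Python dictionary and prints it as JSON. The `build_deployment` helper and its default values are assumptions for illustration, and the image name is the same placeholder used in the walkthrough.

```python
import json


def build_deployment(name: str, image: str, replicas: int = 3,
                     port: int = 8080) -> dict:
    """Return a Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{name}-deployment", "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": f"{name}-container",
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


manifest = build_deployment(
    "hello-world",
    "your-image-repository/hello-world:latest",  # placeholder image
)
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be saved to a file and applied in the same way as the hand-written configuration.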

Demonstrating the Benefits of Container Orchestration

Container orchestration, exemplified by Kubernetes, offers several significant benefits for cloud native applications. These benefits extend beyond simple deployment and include automated scaling, self-healing capabilities, and efficient resource utilization.

- Automated Scaling: Kubernetes can automatically scale the number of container instances based on resource utilization (CPU, memory) or custom metrics. For example, if the CPU usage of your application pods exceeds a certain threshold, Kubernetes can automatically create more pods to handle the increased load.

- Self-Healing: Kubernetes continuously monitors the health of container instances. If a container fails, Kubernetes automatically restarts it or replaces it with a new one, ensuring high availability. This automated recovery process minimizes downtime and improves application resilience.

- Declarative Configuration: Kubernetes uses a declarative approach, where you define the desired state of your application. Kubernetes then works to achieve that state, managing the complexities of container deployment, scaling, and networking. This simplifies operations and promotes infrastructure as code.

- Resource Optimization: Kubernetes efficiently allocates resources (CPU, memory) to containers, maximizing the utilization of the underlying infrastructure. This leads to cost savings and improved performance.

- Rolling Updates: Kubernetes supports rolling updates, where new versions of an application are deployed gradually by replacing pods in batches. This makes zero-downtime deployments possible, provided the application reports its readiness accurately, and reduces the risk of application outages. For example, a new version of an application can be rolled out to a subset of pods first, and if successful, then to the rest of the pods.

These benefits collectively contribute to the agility, scalability, and resilience of cloud native applications, making them well-suited for modern software development and deployment practices.
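Self-healing and declarative configuration both come down to a reconciliation loop: compare the desired state with the observed state and compute the actions that close the gap. The sketch below is a simplified illustration of that idea, not Kubernetes controller code. The pod dictionaries, the `healthy` flag, and the generated pod names are hypothetical.

```python
from typing import Dict, List, Tuple


def reconcile(desired: int, pods: List[Dict]) -> List[Tuple[str, str]]:
    """Return the actions needed to reach `desired` healthy pods."""
    actions = []
    healthy = []
    for pod in pods:
        if pod["healthy"]:
            healthy.append(pod["name"])
        else:
            # Self-healing: failed pods are removed and replaced below.
            actions.append(("delete", pod["name"]))
    diff = desired - len(healthy)
    if diff > 0:
        actions += [("create", f"pod-new-{i}") for i in range(diff)]
    elif diff < 0:
        # Scale down by removing the surplus healthy pods.
        actions += [("delete", name) for name in healthy[diff:]]
    return actions


observed = [
    {"name": "pod-a", "healthy": True},
    {"name": "pod-b", "healthy": False},
    {"name": "pod-c", "healthy": True},
]
print(reconcile(3, observed))
```

Running a function like this repeatedly against fresh observations is what lets an operator declare "three replicas" once and leave recovery from failures to the platform.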

Continuous Integration and Continuous Delivery (CI/CD)

Cloud native applications thrive on agility and rapid iteration. Continuous Integration and Continuous Delivery (CI/CD) pipelines are fundamental to achieving these goals, enabling frequent and reliable software releases. These pipelines automate the building, testing, and deployment processes, significantly reducing the time it takes to deliver new features and updates to users.

CI/CD Pipeline in Cloud Native Applications

The CI/CD pipeline in the context of cloud native applications automates the entire software release lifecycle, from code changes to deployment. It encompasses several stages, each with specific tasks and responsibilities. The goal is to ensure that code changes are integrated, tested, and deployed quickly and reliably, with minimal manual intervention.

Here’s a breakdown of the key stages:

- Code Commit: Developers commit code changes to a central repository (e.g., Git). This triggers the CI/CD pipeline.

- Build: The CI/CD system automatically builds the application from the source code. This typically involves compiling the code, resolving dependencies, and packaging the application into an artifact (e.g., a container image).

- Testing: Automated tests are run to verify the functionality and quality of the application. This includes unit tests, integration tests, and potentially end-to-end tests.

- Deployment: The application artifact is deployed to a staging or production environment. This often involves updating container images, configuring services, and scaling resources.

- Monitoring: The deployed application is continuously monitored for performance, errors, and other relevant metrics. Feedback from monitoring is used to improve the application and the CI/CD pipeline itself.
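The essential control flow of these stages is simple: run each stage in order and stop at the first failure so that a broken build or failing test never reaches deployment. The sketch below models that behavior. The stage names and the lambda placeholders are assumptions, and real tools such as Jenkins or GitHub Actions express the same idea in their own pipeline configuration formats.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stopping at the first one that fails."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later stages, such as deploy, are skipped
    return log


stages = [
    ("build", lambda: True),
    ("test", lambda: False),   # simulate a failing test suite
    ("deploy", lambda: True),
]
print(run_pipeline(stages))
```

With the simulated test failure, the log ends at the test stage and the deploy step is never invoked.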

CI/CD Workflow Diagram

Below is a diagram illustrating a typical CI/CD workflow. The diagram depicts the flow of code changes from development to production.

Diagram Description:

The diagram shows a cyclical process starting with developers committing code. This triggers the CI/CD pipeline, which then proceeds through the following stages:

- Source Code Repository (e.g., Git): This is where the code is stored and managed.

- Build Stage: The code is compiled, and dependencies are resolved. Container images are built here.

- Testing Stage: Automated tests are executed, including unit, integration, and end-to-end tests.

- Staging Environment: The application is deployed to a staging environment for further testing and validation.

- Production Environment: If the staging tests are successful, the application is deployed to the production environment.

- Monitoring: The application is continuously monitored in production, and feedback is provided to the developers.

The arrows indicate the flow of the process, starting from code commit and cycling through build, test, and deployment stages.

Tools Used for CI/CD in Cloud Native Environments

Several tools are used to implement CI/CD pipelines in cloud native environments. These tools automate various stages of the pipeline, from code integration to deployment and monitoring. The choice of tools often depends on the specific needs of the project and the cloud platform being used.

Here’s a look at some popular CI/CD tools:

- Version Control Systems: Git is the industry standard for version control, allowing developers to track changes to their code and collaborate effectively.

- CI/CD Orchestration Tools: These tools manage and automate the CI/CD pipeline. Examples include:

  - Jenkins: A widely used open-source automation server.

  - GitLab CI/CD: Integrated CI/CD features within GitLab.

  - GitHub Actions: CI/CD directly integrated into GitHub repositories.

  - CircleCI: A cloud-based CI/CD platform.

  - Azure DevOps: A comprehensive suite of DevOps tools from Microsoft.

- Containerization Tools: These tools are used to build and manage container images. Docker is the most popular.

- Container Orchestration Tools: These tools manage the deployment, scaling, and management of containerized applications. Kubernetes is the leading container orchestration platform.

- Testing Tools: Various testing tools are used for different types of tests, including unit tests (e.g., JUnit, pytest), integration tests, and end-to-end tests (e.g., Selenium, Cypress).

- Configuration Management Tools: Tools like Ansible, Chef, and Puppet automate the configuration of infrastructure and application components.

- Monitoring and Logging Tools: These tools provide insights into the performance and health of the application. Examples include Prometheus, Grafana, and the ELK stack (Elasticsearch, Logstash, Kibana).

Cloud Native Technologies and Tools

Cloud native application development relies on a diverse ecosystem of technologies and tools. These resources enable developers to build, deploy, and manage applications in a way that leverages the benefits of the cloud, such as scalability, resilience, and agility. Selecting the right tools is crucial for a successful cloud native journey, as they directly impact the efficiency and effectiveness of the development process.

Key Technologies and Tools

Several key technologies and tools are commonly used in cloud native application development. These tools span various aspects of the application lifecycle, from development and deployment to monitoring and security.

- Containerization Technologies: Containerization is fundamental to cloud native applications. Technologies like Docker are used to package applications and their dependencies into portable containers. These containers ensure consistency across different environments.

- Container Orchestration Platforms: Orchestration platforms, such as Kubernetes, manage and automate the deployment, scaling, and operation of containerized applications. They provide essential features like service discovery, load balancing, and automated rollouts.

- Service Meshes: Service meshes, like Istio and Linkerd, provide a dedicated infrastructure layer for handling service-to-service communication. They offer features like traffic management, security, and observability, improving the reliability and manageability of microservices.

- CI/CD Pipelines: Continuous Integration and Continuous Delivery (CI/CD) pipelines automate the build, test, and deployment processes. Tools like Jenkins, GitLab CI, and CircleCI facilitate frequent and reliable releases.

- Monitoring and Logging Tools: Monitoring and logging are critical for understanding application behavior and identifying issues. Tools like Prometheus, Grafana, and the ELK stack (Elasticsearch, Logstash, Kibana) provide insights into performance, health, and security.

- Serverless Computing: Serverless platforms, such as AWS Lambda, Google Cloud Functions, and Azure Functions, allow developers to run code without managing servers. They enable event-driven architectures and reduce operational overhead.

- API Gateways: API gateways, like Kong and Apigee, manage API traffic, providing security, routing, and rate limiting. They act as a central point of control for API interactions.

- Infrastructure as Code (IaC) Tools: IaC tools, such as Terraform and Ansible, automate the provisioning and management of infrastructure. They enable infrastructure to be treated as code, promoting consistency and repeatability.
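Rate limiting, mentioned above as an API gateway feature, is often explained with the token bucket algorithm. The sketch below is one illustrative way to implement it in Python. It is an assumption for teaching purposes, not a description of how Kong or Apigee work internally, and the `TokenBucket` class and its parameters are hypothetical names. The current time is passed in explicitly so the behavior is easy to follow and test.

```python
class TokenBucket:
    """Token bucket rate limiter (illustrative sketch, not a gateway API)."""

    def __init__(self, capacity: int, refill_per_second: float) -> None:
        self.capacity = capacity                    # maximum burst size
        self.refill_per_second = refill_per_second  # sustained request rate
        self.tokens = float(capacity)               # start with a full bucket
        self.last_refill = 0.0                      # time of the last refill

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` (seconds) may pass."""
        elapsed = max(0.0, now - self.last_refill)
        # Add tokens for the elapsed time, never exceeding the capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = max(self.last_refill, now)
        if self.tokens >= 1.0:
            self.tokens -= 1.0  # each accepted request consumes one token
            return True
        return False


# A bucket that allows bursts of 2 requests and refills 1 token per second.
bucket = TokenBucket(capacity=2, refill_per_second=1.0)
decisions = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)]
print(decisions)  # [True, True, False, True]
```

The capacity controls how large a burst is tolerated, while the refill rate sets the long-run request rate a client can sustain.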

Comparative Table of Container Orchestration Platforms

Container orchestration platforms are essential for managing containerized applications at scale. Different platforms offer varying features and capabilities. The following table provides a comparison of some popular options:

| Platform | Description | Key Features | Pros and Cons |
|---|---|---|---|
| Kubernetes | An open-source container orchestration platform, widely adopted and supported by a large community. | | |
| Docker Swarm | A native clustering and orchestration solution provided by Docker. | | |
| Amazon ECS (Elastic Container Service) | A fully managed container orchestration service provided by Amazon Web Services. | | |
| Azure Kubernetes Service (AKS) | A managed Kubernetes service offered by Microsoft Azure. | | |

Visual Representation of a Cloud Native Technology Stack

A cloud native technology stack is a layered architecture, with each layer building upon the one below it. This stack encompasses the various components and technologies that work together to build and operate cloud native applications.

Description of the Visual Representation:

The visual representation depicts a layered technology stack. The base layer is labeled “Infrastructure,” representing the underlying resources like servers, networking, and storage. Above that is the “Containerization” layer, where tools like Docker are used to package applications. The next layer is “Orchestration,” which includes Kubernetes, managing the containers. Then, there is a “Service Mesh” layer with tools like Istio.

The “CI/CD” layer sits above, with tools like Jenkins and GitLab CI. The “Application” layer contains the microservices and application logic. A “Monitoring & Logging” layer provides observability. The very top layer represents “Users” interacting with the application through an API gateway.

Benefits of Cloud Native Applications

Cloud native applications offer significant advantages for businesses seeking to improve efficiency, reduce costs, and accelerate innovation. By embracing the principles of cloud native development, organizations can unlock new levels of agility, scalability, and resilience, ultimately leading to a more competitive and responsive business model. The adoption of cloud native practices is no longer a futuristic concept; it’s a proven strategy driving business success across various industries.

Improved Scalability

Cloud native applications are designed to scale automatically, adapting to fluctuating demands in real-time. This elasticity is a core benefit, allowing businesses to handle peak loads without performance degradation and optimize resource utilization during periods of low activity. This scalability is often achieved through technologies like container orchestration and automated scaling mechanisms. For example:

- Horizontal Scaling: Cloud native applications can easily add or remove instances of an application based on demand. This means if traffic suddenly increases, the application can automatically deploy more instances to handle the load, preventing slowdowns or outages.

- Dynamic Resource Allocation: Cloud native platforms can dynamically allocate resources (CPU, memory, storage) to applications as needed. This ensures that applications always have the resources they require, improving performance and responsiveness.

- Geographic Distribution: Cloud native applications can be deployed across multiple geographic regions. This enables businesses to serve users globally with low latency and improve disaster recovery capabilities.
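Horizontal scaling decisions can be illustrated with the formula documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The Python sketch below applies that formula with minimum and maximum bounds. The function name and default bounds are assumptions for illustration, and the real autoscaler adds details such as a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Compute a replica count from the ratio of observed to target load.

    Follows desired = ceil(current_replicas * current_metric / target_metric),
    then clamps the result to [min_replicas, max_replicas].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 instances averaging 90% CPU against a 60% target: scale out to 6.
print(desired_replicas(4, 90.0, 60.0))  # 6
# The same 4 instances at 30% CPU: scale in to 2.
print(desired_replicas(4, 30.0, 60.0))  # 2
```

The upper bound matters for cost control as much as the lower bound matters for availability, since it caps how far a traffic spike can drive spending.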

Consider the case of Netflix, a leading streaming service. Their cloud native architecture, built on AWS, allows them to scale their services to handle massive traffic spikes during peak viewing hours, such as the release of a popular new series. This scalability helps deliver a consistent viewing experience for millions of users worldwide as demand rises and falls. Without this kind of elasticity, sustaining that level of performance and availability would be considerably harder.

Enhanced Agility and Speed to Market

Cloud native development empowers businesses to release new features and updates more frequently and efficiently. This agility is achieved through automation, continuous integration and continuous delivery (CI/CD) pipelines, and microservices architecture. These components enable faster iteration cycles and reduced time to market for new products and services. The core of this agility rests on several key aspects:

- Faster Development Cycles: Microservices allow developers to work on smaller, independent components of an application. This facilitates faster development, testing, and deployment cycles.

- Automated Deployments: CI/CD pipelines automate the build, test, and deployment processes, significantly reducing the time and effort required to release new versions of an application.

- Reduced Risk: Small, incremental releases of microservices reduce the risk of major disruptions. If a problem arises, it can be isolated to a specific service and quickly addressed without affecting the entire application.

A practical example of this is Spotify, which uses a cloud native approach to deploy updates multiple times a day. This constant stream of improvements, ranging from bug fixes to new features, is made possible by their CI/CD pipelines and microservices architecture. This rapid release cycle allows Spotify to quickly respond to user feedback and stay ahead of the competition in the rapidly evolving music streaming market.

This agility is a significant competitive advantage.

Challenges in Adopting Cloud Native Applications

Adopting cloud native applications offers significant benefits, but the transition is not without its hurdles. Successfully navigating these challenges requires careful planning, a strategic approach, and a commitment to continuous learning and improvement. Understanding the potential pitfalls and implementing effective strategies is crucial for realizing the full potential of cloud native architectures.

Common Challenges Faced During Adoption

Migrating to cloud native architectures presents several common obstacles. These challenges often stem from the shift in operational models, the need for new skill sets, and the complexities of managing distributed systems. Addressing these issues proactively is essential for a smooth and successful transition.

- Complexity of Distributed Systems: Cloud native applications are built on distributed architectures, which introduces complexities in areas such as service discovery, inter-service communication, and data consistency. Managing these distributed components requires robust monitoring, logging, and tracing capabilities. For example, troubleshooting a performance issue in a microservices-based application might involve analyzing logs from multiple services, correlating events across different components, and identifying the root cause of the problem.

- Security Concerns: Cloud native environments introduce new security considerations, including securing container images, managing secrets, and implementing robust access controls. Vulnerabilities in container images, misconfigured deployments, and inadequate network segmentation can expose applications to security threats. It’s important to implement security best practices, such as regularly scanning container images for vulnerabilities and using a zero-trust security model.

- Skills Gap: Cloud native technologies require specialized skills in areas such as containerization, orchestration, CI/CD, and cloud infrastructure. Many organizations struggle to find and retain talent with the necessary expertise. Investing in training programs, partnering with cloud providers, and fostering a culture of continuous learning are crucial for bridging the skills gap.

- Cost Management: Cloud native applications can lead to increased operational costs if not managed effectively. Optimizing resource utilization, implementing cost monitoring tools, and adopting cost-aware design patterns are essential for controlling cloud spending. For example, using auto-scaling to automatically adjust resources based on demand can help to avoid over-provisioning and reduce costs.

- Cultural and Organizational Changes: Adopting cloud native applications often requires significant changes to organizational structure, processes, and culture. Moving from traditional, monolithic architectures to microservices-based applications requires a shift towards agile development methodologies, DevOps practices, and a culture of collaboration. Resistance to change, lack of communication, and siloed teams can hinder the transition.

- Vendor Lock-in: Relying heavily on specific cloud provider services can lead to vendor lock-in, making it difficult to migrate to another provider or adopt a multi-cloud strategy. Choosing open-source technologies, designing applications with portability in mind, and using platform-agnostic tools can help mitigate this risk.
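The troubleshooting scenario described under distributed systems complexity, correlating events from several services to find a root cause, can be sketched in a few lines of Python. The example assumes each log record carries a shared trace identifier. The field names (`trace_id`, `service`, `timestamp`, `duration_ms`) and the sample records are hypothetical and not tied to any particular logging or tracing tool.

```python
from collections import defaultdict
from typing import Dict, List


def group_by_trace(records: List[dict]) -> Dict[str, List[dict]]:
    """Group log records by trace ID and order each group by timestamp."""
    traces: Dict[str, List[dict]] = defaultdict(list)
    for record in records:
        traces[record["trace_id"]].append(record)
    for events in traces.values():
        events.sort(key=lambda r: r["timestamp"])
    return dict(traces)


def slowest_service(events: List[dict]) -> str:
    """Return the service that spent the most time within one request."""
    return max(events, key=lambda r: r["duration_ms"])["service"]


# Hypothetical records collected from three services, arriving out of order.
records = [
    {"trace_id": "t1", "service": "cart", "timestamp": 2, "duration_ms": 40},
    {"trace_id": "t1", "service": "auth", "timestamp": 1, "duration_ms": 15},
    {"trace_id": "t2", "service": "auth", "timestamp": 3, "duration_ms": 12},
    {"trace_id": "t1", "service": "payment", "timestamp": 3, "duration_ms": 900},
]
traces = group_by_trace(records)
print([e["service"] for e in traces["t1"]])  # ['auth', 'cart', 'payment']
print(slowest_service(traces["t1"]))  # payment
```

Without a shared identifier propagated across services, this kind of correlation falls back to guessing from timestamps, which is why tracing is usually introduced early in a migration.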

Strategies for Addressing Challenges

Overcoming the challenges of cloud native adoption requires a proactive and strategic approach. Implementing these strategies can help organizations successfully navigate the transition and realize the benefits of cloud native architectures.

- Embrace DevOps Practices: Implementing DevOps practices, such as automation, continuous integration, and continuous delivery (CI/CD), is essential for streamlining development and deployment processes. Automation tools can be used to automate infrastructure provisioning, configuration management, and application deployments, reducing manual effort and improving efficiency.

- Implement Robust Monitoring and Logging: Monitoring application performance, collecting logs, and tracing requests across distributed systems are crucial for identifying and resolving issues. Using tools like Prometheus for monitoring, Elasticsearch for logging, and Jaeger for tracing can provide valuable insights into application behavior.

- Prioritize Security: Implementing robust security practices, such as container image scanning, vulnerability management, and access control, is essential for protecting cloud native applications. Using security tools, implementing a zero-trust security model, and regularly auditing security configurations can help to mitigate security risks.

- Invest in Training and Development: Providing training and development opportunities for developers and operations teams is essential for building the skills required for cloud native development. Investing in cloud certifications, offering hands-on workshops, and encouraging continuous learning can help to bridge the skills gap.

- Start Small and Iterate: Beginning with a pilot project or a small-scale implementation can help organizations gain experience and build confidence before undertaking a large-scale migration. Iterating on the implementation, gathering feedback, and making adjustments based on lessons learned can improve the overall outcome.

- Choose the Right Tools and Technologies: Selecting the appropriate tools and technologies for cloud native development is crucial for success. Consider factors such as ease of use, scalability, and community support when choosing tools for containerization, orchestration, CI/CD, and monitoring.

- Establish Clear Governance and Standards: Defining clear governance policies and establishing coding standards can help to ensure consistency and maintainability across cloud native applications. This includes defining best practices for container image creation, configuration management, and deployment processes.

Skills and Expertise Required for Cloud Native Development

Cloud native development requires a diverse set of skills and expertise. These skills span various areas, from software development and infrastructure management to security and operations. Building a team with the necessary skills is crucial for successful cloud native adoption.

- Containerization Technologies: Expertise in containerization technologies like Docker is essential for building and deploying applications in containers. Understanding container image creation, container networking, and container security is crucial.

- Orchestration Platforms: Proficiency in orchestration platforms like Kubernetes is required for managing and scaling containerized applications. Knowledge of Kubernetes concepts, such as pods, deployments, services, and namespaces, is essential.

- CI/CD Pipelines: Experience with CI/CD tools, such as Jenkins, GitLab CI, or CircleCI, is crucial for automating the build, test, and deployment processes. Understanding CI/CD principles and best practices is essential.

- Cloud Infrastructure: Familiarity with cloud infrastructure services, such as compute, storage, networking, and databases, is important for deploying and managing cloud native applications. Understanding cloud provider-specific services and their integration with cloud native technologies is crucial.

- Programming Languages: Proficiency in one or more programming languages, such as Go, Java, Python, or Node.js, is essential for developing cloud native applications. Understanding language-specific frameworks and libraries is also important.

- Monitoring and Logging Tools: Experience with monitoring and logging tools, such as Prometheus, Grafana, Elasticsearch, and Kibana, is essential for monitoring application performance and troubleshooting issues.

- Security Best Practices: Knowledge of security best practices, such as secure coding, vulnerability management, and access control, is crucial for securing cloud native applications.

- Networking Concepts: Understanding networking concepts, such as TCP/IP, DNS, and load balancing, is essential for designing and managing cloud native applications.

- DevOps Principles: Familiarity with DevOps principles, such as automation, collaboration, and continuous improvement, is important for fostering a culture of DevOps within the organization.

Security Considerations in Cloud Native Applications

Cloud native applications, while offering significant advantages in terms of scalability, agility, and cost-effectiveness, introduce a unique set of security challenges. The distributed nature of these applications, coupled with the use of microservices, containers, and orchestration tools, expands the attack surface and necessitates a shift in security thinking. Securing cloud native applications requires a holistic approach that addresses vulnerabilities at every stage of the application lifecycle, from development to deployment and runtime.

Security Considerations Specific to Cloud Native Environments

The cloud native environment presents distinct security considerations due to its dynamic and distributed nature. These considerations are critical for protecting applications and data from various threats.

- Container Security: Containers, the building blocks of cloud native applications, introduce new security challenges. Vulnerabilities in container images, misconfigurations, and runtime security threats must be addressed. A compromised container can lead to significant security breaches.

- Microservices Security: Microservices architecture, while promoting modularity, increases the complexity of security. Each microservice acts as a potential entry point for attackers. Secure communication between microservices, identity and access management, and API security are paramount.

- Orchestration Security: Orchestration platforms like Kubernetes manage the deployment, scaling, and networking of containers. Securing the orchestration layer is crucial. Misconfigured Kubernetes clusters, vulnerabilities in the orchestration software, and unauthorized access can expose the entire application to risks.

- CI/CD Pipeline Security: The CI/CD pipeline automates the build, test, and deployment processes. Securing this pipeline is essential to prevent attackers from injecting malicious code or compromising the application during deployment. Securing the pipeline involves securing the source code repository, build tools, and deployment infrastructure.

- Infrastructure Security: The underlying infrastructure, including the cloud provider’s services, must be secure. This involves proper configuration of virtual machines, networks, and storage, as well as implementing robust monitoring and logging.

- Identity and Access Management (IAM): Effective IAM is critical in cloud native environments. This includes implementing strong authentication and authorization mechanisms to control access to resources and services. Granular access control based on the principle of least privilege is essential.

Best Practices for Securing Cloud Native Applications

Implementing robust security practices is vital for protecting cloud native applications from various threats. These practices should be integrated throughout the application lifecycle.

- Shift-Left Security: Integrate security into the early stages of the development lifecycle. This includes security testing, vulnerability scanning, and static code analysis during development. This helps identify and address security issues early, reducing the cost and effort of remediation.

- Container Image Security: Use trusted base images, regularly scan container images for vulnerabilities, and implement image signing and verification to ensure image integrity. Tools like Trivy can scan images for known vulnerabilities, while Docker Bench for Security checks Docker host and daemon configuration against the CIS Docker Benchmark.

- Network Segmentation: Segment the network to isolate different microservices and applications. This limits the impact of a security breach by preventing attackers from moving laterally within the environment.

- Secure Microservice Communication: Implement secure communication protocols, such as TLS, to encrypt data in transit between microservices. Use service meshes like Istio or Linkerd to manage and secure microservice communication.

- Implement IAM Best Practices: Enforce strong authentication and authorization mechanisms. Utilize role-based access control (RBAC) to grant users only the necessary permissions. Regularly review and audit access controls.

- Automated Security Monitoring and Logging: Implement comprehensive monitoring and logging to detect and respond to security threats in real-time. Use security information and event management (SIEM) tools to analyze logs and identify suspicious activities.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify vulnerabilities and assess the effectiveness of security controls. This helps proactively address security weaknesses.

- Configuration Management: Implement configuration management tools to ensure consistent and secure configurations across the entire infrastructure. Automate configuration changes to reduce the risk of human error.

- Compliance and Governance: Adhere to relevant industry regulations and compliance standards, such as GDPR, HIPAA, and PCI DSS. Implement governance policies and procedures to ensure consistent security practices.
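Role-based access control and the principle of least privilege can be illustrated with a small Python sketch. Each role maps to an explicit set of permitted actions, and anything not listed is denied by default. The role names, resources, and verbs here are hypothetical examples, and the code is a conceptual model rather than the Kubernetes RBAC API or any cloud provider's IAM service.

```python
from typing import Dict, Iterable, Set, Tuple

Permission = Tuple[str, str]  # (resource, verb)

# Hypothetical roles: each one lists only the actions it needs.
ROLE_PERMISSIONS: Dict[str, Set[Permission]] = {
    "viewer": {("deployments", "get"), ("deployments", "list")},
    "deployer": {
        ("deployments", "get"),
        ("deployments", "list"),
        ("deployments", "update"),
    },
}


def is_allowed(roles: Iterable[str], resource: str, verb: str) -> bool:
    """Allow an action only if one of the caller's roles grants it.

    Unknown roles and unlisted actions are denied by default.
    """
    return any(
        (resource, verb) in ROLE_PERMISSIONS.get(role, set()) for role in roles
    )


print(is_allowed(["viewer"], "deployments", "get"))       # True
print(is_allowed(["viewer"], "deployments", "update"))    # False
print(is_allowed(["deployer"], "deployments", "update"))  # True
```

Default deny is the important design choice: adding a new resource type grants nobody access until a role explicitly includes it.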

Summary of Security Vulnerabilities and Mitigation Strategies

Vulnerability: Container Image Vulnerabilities

Mitigation: Use trusted base images, regularly scan images, and implement image signing.

Vulnerability: Misconfigured Kubernetes Clusters

Mitigation: Follow Kubernetes security best practices, use security scanning tools, and implement RBAC.

Vulnerability: Insecure Microservice Communication

Mitigation: Implement TLS encryption, use service meshes for secure communication, and enforce mutual TLS.

Vulnerability: Weak IAM Controls

Mitigation: Implement strong authentication, use RBAC, and regularly review access controls.

Vulnerability: Vulnerabilities in CI/CD Pipeline

Mitigation: Secure the source code repository, build tools, and deployment infrastructure. Implement automated security testing in the pipeline.
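One simple building block behind image integrity checks is comparing an artifact's cryptographic digest against a value pinned in advance. The Python sketch below shows that comparison using SHA-256 from the standard library. It is a simplified stand-in, not image signing: signing additionally proves who produced the artifact, which a digest alone does not. The function names and sample bytes are hypothetical.

```python
import hashlib
import hmac


def compute_digest(content: bytes) -> str:
    """Return a digest string in the common 'sha256:<hex>' form."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def matches_pinned_digest(content: bytes, pinned_digest: str) -> bool:
    """Check that content hashes to the digest recorded at build time."""
    actual = compute_digest(content).encode("utf-8")
    expected = pinned_digest.encode("utf-8")
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(actual, expected)


# Hypothetical artifact bytes; the pinned value is recorded when it is built.
artifact = b"example image layer bytes"
pinned = compute_digest(artifact)

print(matches_pinned_digest(artifact, pinned))             # True
print(matches_pinned_digest(b"tampered contents", pinned)) # False
```

A deployment step that refuses any artifact whose digest does not match the pinned value ensures that what runs in production is exactly what passed the pipeline's tests and scans.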

Summary

In conclusion, cloud native applications represent a significant evolution in software development, offering unparalleled advantages in terms of scalability, agility, and resilience. From microservices architecture to CI/CD pipelines, the principles and technologies discussed here provide a robust framework for building modern, cloud-optimized applications. By embracing cloud native practices, businesses can unlock new levels of innovation, improve time-to-market, and ultimately, gain a competitive edge in today’s dynamic digital landscape.

This exploration equips you with the knowledge to navigate the complexities and harness the power of cloud native applications.

Q&A

What is the main difference between cloud native and traditional applications?

Cloud native applications are designed specifically for the cloud, utilizing microservices, containers, and automation to be scalable, resilient, and easily updated. Traditional applications, on the other hand, are often monolithic and designed for on-premise infrastructure, making them less flexible and harder to scale.

Why is containerization important for cloud native applications?

Containerization, using technologies like Docker, allows cloud native applications to be packaged with all their dependencies, ensuring consistency across different environments. This portability simplifies deployment, scaling, and management, crucial for cloud environments.

What is the role of Kubernetes in cloud native development?

Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. It helps manage the lifecycle of cloud native applications, ensuring they are highly available and efficiently utilizing resources.

How does DevOps contribute to cloud native application success?

DevOps practices, such as continuous integration and continuous delivery (CI/CD), streamline the development process, enabling faster releases, improved collaboration, and quicker feedback loops. This agility is essential for cloud native applications, which require rapid iteration and adaptation.