Serverless computing, lauded for its scalability and cost-effectiveness, presents a paradigm shift in application development. However, a critical consideration within this model is the phenomenon of “cold starts.” These initial delays, where a serverless function takes time to initialize before executing, introduce a complex interplay of factors that directly impact operational expenses. This exploration delves into the often-overlooked financial ramifications of cold starts, examining how they influence resource consumption, execution time, monitoring costs, and capacity planning within a serverless architecture.

Understanding the economic consequences of cold starts is paramount for optimizing serverless deployments. By analyzing the various contributing factors and mitigation strategies, businesses can make informed decisions to minimize expenses and maximize the benefits of serverless technology. This analysis will dissect the nuances of cold start costs, from the direct expenses associated with resource usage to the indirect costs related to application performance and scalability, providing actionable insights for effective cost management.

Introduction to Serverless Cold Starts

Serverless computing offers a compelling model for application deployment, promising scalability and cost efficiency. However, a key challenge within this paradigm is the phenomenon of “cold starts.” Understanding cold starts is crucial for developers aiming to optimize serverless applications for performance and user experience.

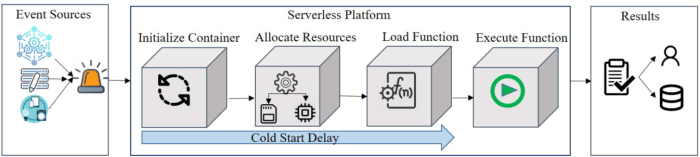

Definition of Serverless Cold Starts

In serverless computing, a cold start occurs when a function is invoked and the underlying infrastructure (the container or execution environment) needs to be initialized before the function code can begin executing. This contrasts with a “warm start,” where the function’s environment is already active and ready to process requests. The time taken for a cold start can significantly impact the perceived responsiveness of an application.
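The difference between the two cases can be sketched in a few lines of Python. This is a minimal illustration, assuming a Lambda-style `handler(event, context)` entry point and a runtime that executes module-level code once per execution environment; the names `_COLD`, `_CONFIG`, and `_INIT_SECONDS` are hypothetical and not part of any platform API.

```python
import time

# Module-level code runs once per execution environment, i.e. during a cold start.
_INIT_STARTED = time.perf_counter()
_CONFIG = {"greeting": "hello"}  # stand-in for expensive setup (clients, models, ...)
_INIT_SECONDS = time.perf_counter() - _INIT_STARTED

_COLD = True  # flipped after the first invocation served by this environment


def handler(event, context=None):
    """Report whether this invocation paid the initialization cost."""
    global _COLD
    was_cold = _COLD
    _COLD = False
    return {
        "cold_start": was_cold,
        "init_seconds": _INIT_SECONDS if was_cold else 0.0,
        "message": f"{_CONFIG['greeting']}, {event.get('name', 'world')}",
    }
```

The first call in a fresh environment reports `cold_start: True`; later calls served by the same environment reuse the already-initialized state and report `False`.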

Impact of Cold Starts on User Experience and Application Performance

Cold starts directly affect user experience and overall application performance. The delay caused by a cold start can lead to increased latency, potentially causing a frustrating experience for users. Furthermore, cold starts can also impact the application’s ability to handle traffic spikes effectively.

- Increased Latency: The primary impact is increased latency. When a user requests a resource that triggers a serverless function experiencing a cold start, the user must wait for the function’s environment to be provisioned and initialized before the code executes. This wait time, which can range from milliseconds to seconds, translates directly into a slower response time.

- Reduced Responsiveness: A slow response time can degrade user experience. Users may perceive the application as sluggish or unresponsive, particularly in interactive applications where quick feedback is essential.

- Performance Bottlenecks: In scenarios with high traffic, cold starts can create performance bottlenecks. If a serverless function is frequently invoked, and each invocation triggers a cold start, the overall throughput of the application can be significantly reduced. This can lead to service degradation or even failure under heavy load.

- Resource Consumption and Cost: Although serverless architecture is known for its cost-effectiveness, cold starts can indirectly increase resource consumption and, consequently, costs. While the function is initializing, it consumes resources, even if it’s not actively processing requests. If cold starts are frequent, these initialization periods can add up.

- Impact on Interactive Applications: Interactive applications, such as web applications or mobile apps, are particularly susceptible to the negative effects of cold starts. Users expect immediate feedback, and any delay caused by a cold start can disrupt the user experience.

Factors Influencing Cold Start Duration

The duration of cold starts in serverless environments is a complex phenomenon influenced by a multitude of factors. Understanding these factors is crucial for optimizing application performance and minimizing the impact of cold starts on user experience. Several key elements contribute significantly to the time it takes for a serverless function to become ready to handle requests.

Code Size Impact

The size of the code deployed for a serverless function directly correlates with cold start times. Larger codebases require more time to be loaded, initialized, and prepared for execution. This is because the platform needs to download the code package, unpack it, and set up the runtime environment.

For example, consider two serverless functions:

- Function A: A function with a minimal codebase (e.g., a few lines of code for a basic addition operation) might experience a cold start time of, let’s say, 200 milliseconds.

- Function B: A function performing complex data transformations and interacting with multiple external services, leading to a larger codebase, could have a cold start time of, for instance, 1 second or more.

The difference arises from the increased amount of data that needs to be transferred, processed, and initialized during the cold start process. Larger codebases often involve more dependencies, further exacerbating the issue.

Programming Language Impact

The choice of programming language significantly influences cold start performance. Different languages have varying startup times and runtime characteristics, directly affecting how quickly a function can begin executing.

Languages that are compiled ahead-of-time (AOT), like Go, typically exhibit faster cold starts compared to languages that are interpreted or compiled just-in-time (JIT), such as Python or JavaScript. This is because AOT-compiled code is already translated into machine code, reducing the overhead of compilation during function initialization.

Here’s a comparative overview:

- Go: Generally, Go functions benefit from relatively quick cold starts. The compilation process generates efficient and optimized binaries, leading to reduced initialization times. For instance, a simple HTTP handler in Go might cold start in under 100 milliseconds.

- Node.js (JavaScript): Node.js, using a JIT compiler, typically has longer cold start times. The JavaScript code must be parsed, interpreted, and potentially optimized during initialization. A simple Node.js function can take between 200 and 500 milliseconds to cold start.

- Python: Python’s interpreted nature also contributes to slower cold starts compared to Go. Python code needs to be interpreted at runtime. A basic Python function can cold start within 300 to 600 milliseconds, though this can vary based on dependencies.

These are estimations; the actual times can vary based on the complexity of the function, the platform used, and other influencing factors.

Dependencies and Libraries Impact

The inclusion of dependencies and libraries profoundly impacts cold start times. Each dependency added to a serverless function necessitates additional time for downloading, installing, and initializing.

Consider a serverless function that uses several external libraries:

- The function may depend on a large data processing library like Pandas, a popular library for data manipulation in Python. The library itself and its dependencies must be downloaded and loaded, significantly increasing cold start duration.

- A function interacting with a database might rely on a database client library. The client library’s initialization, including establishing connections, can add to the cold start overhead.

To illustrate the impact, consider a comparison:

- Function X: A function with no external dependencies, cold start: 100ms.

- Function Y: A function with several external dependencies, including a large data processing library and a database client, cold start: 800ms.

The difference highlights how dependencies contribute to increased cold start times. Minimizing dependencies, utilizing optimized library versions, and leveraging techniques like function packaging can help mitigate the impact of dependencies on cold start performance.
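One further technique, not named above but commonly used for the same purpose, is to defer a heavy import until a request actually needs it. The sketch below is illustrative only: the standard-library `statistics` module stands in for a large library such as Pandas, and the `handler`, `heavy`, and `HEAVY_MODULE` names are hypothetical.

```python
import importlib
from functools import lru_cache

# Hypothetical stand-in: `statistics` plays the role of a heavy library such as Pandas.
HEAVY_MODULE = "statistics"


@lru_cache(maxsize=None)
def heavy():
    """Import the heavy dependency on first use and cache the module object."""
    return importlib.import_module(HEAVY_MODULE)


def handler(event, context=None):
    # Cheap path: no heavy import is needed, so a cold start skips that cost.
    if event.get("action") == "ping":
        return {"status": "ok"}
    # Expensive path: the import happens here, once per execution environment.
    values = event["values"]
    return {"status": "ok", "mean": heavy().mean(values)}
```

The trade-off is that the import cost is not removed, only moved: the first request that takes the expensive path pays it instead of the cold start itself.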

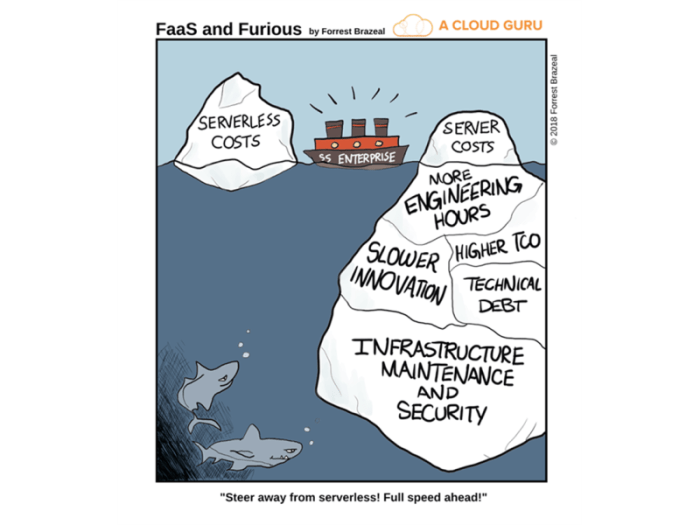

Cost Implications

The operational efficiency of serverless architectures hinges on understanding and mitigating the financial impact of various factors, including cold starts. While serverless platforms offer a pay-per-use model, the transient nature of cold starts can inadvertently lead to increased resource consumption and, consequently, higher costs. Analyzing these cost implications is crucial for optimizing serverless applications and ensuring cost-effectiveness.

Cost Implications: Resource Consumption

Cold starts directly contribute to increased resource consumption, primarily affecting CPU and memory usage. The process of initializing a serverless function, including loading code, establishing runtime environments, and connecting to dependencies, requires a non-trivial amount of computational resources. This resource demand, particularly during the cold start phase, translates into monetary costs proportional to the time and resources consumed.

Idle resources during cold starts directly contribute to costs. The serverless platform charges for the duration the function is active, even if the function is idle or waiting for dependencies to load. This means that the time spent in the cold start process, during which the function may not be actively processing requests, still incurs charges. This idle time, which can range from milliseconds to seconds depending on the function’s complexity and the platform, adds up over time, especially in applications with frequent invocations.

To illustrate the cost implications, consider a hypothetical serverless function, `ImageProcessor`, designed to resize images uploaded to a cloud storage service.

- Scenario: The `ImageProcessor` function is invoked infrequently, resulting in frequent cold starts.

- Resource Usage: During a cold start, the function requires 512MB of memory and consumes an average of 200ms of CPU time to initialize the runtime environment, load dependencies (such as image processing libraries), and establish connections to the storage service. Once initialized, the function processes each image resize operation in 100ms and consumes 128MB of memory.

- Cost Calculation: Assume the platform charges \$0.00001667 per GB-second for memory and \$0.0000002 per 100ms of CPU time.

Cold Start Cost = 512MB (0.5GB) × 200ms (0.2 seconds) × (\$0.00001667/GB-second) + 200ms × (\$0.0000002/100ms) = \$0.000001667 + \$0.0000004 = \$0.000002067

Image Processing Cost = 128MB (0.125GB) × 100ms (0.1 seconds) × (\$0.00001667/GB-second) + 100ms × (\$0.0000002/100ms) = \$0.000000208375 + \$0.0000002 = \$0.000000408375

If the function experiences a cold start for every invocation, the cost per image resize operation is \$0.000002067 (cold start) + \$0.000000408375 (processing) = \$0.000002475375.

If the function is already warm (no cold start), the cost per image resize operation is \$0.000000408375.

The cold start adds approximately \$0.000002067 to the cost of each image resize operation. While this may seem small on a per-operation basis, the cumulative cost can be significant, especially for applications with a high volume of invocations.

When cold starts are frequent, they account for a substantial share of the function’s total cost: in this example, roughly 83.5% of the cost of a cold invocation (\$0.000002067 of \$0.000002475375).

This illustrates that even small cold start durations can have a measurable impact on cost, especially when functions are invoked frequently. The longer the cold start duration, the greater the cost.
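The `ImageProcessor` arithmetic can be reproduced with a short script. This is a sketch of the example’s own pricing model (a memory charge per GB-second plus a CPU charge per 100ms), not of any real provider’s billing; the function and constant names are illustrative.

```python
MEMORY_PRICE_PER_GB_SECOND = 0.00001667  # rate assumed in the example
CPU_PRICE_PER_100MS = 0.0000002          # rate assumed in the example


def phase_cost(memory_gb, duration_ms):
    """Memory charge (GB-seconds) plus CPU charge (per 100 ms) for one phase."""
    memory_cost = memory_gb * (duration_ms / 1000) * MEMORY_PRICE_PER_GB_SECOND
    cpu_cost = (duration_ms / 100) * CPU_PRICE_PER_100MS
    return memory_cost + cpu_cost


cold_start = phase_cost(memory_gb=0.5, duration_ms=200)
processing = phase_cost(memory_gb=0.125, duration_ms=100)
cold_invocation = cold_start + processing
cold_share = cold_start / cold_invocation

print(f"cold start cost:      ${cold_start:.9f}")
print(f"processing cost:      ${processing:.12f}")
print(f"cold invocation cost: ${cold_invocation:.12f}")
print(f"cold start share:     {cold_share:.1%}")
```

Changing `duration_ms` or `memory_gb` for the cold start phase shows how quickly the cold start share grows relative to a short processing phase.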

Cost Implications

The execution time of serverless functions directly impacts the cost incurred by cloud providers. While the pay-per-use model of serverless computing offers significant advantages in terms of scalability and resource efficiency, the added latency introduced by cold starts can translate into increased expenses. Understanding the relationship between cold start duration and execution costs is crucial for optimizing serverless applications and controlling operational expenditure.

Execution Time

The primary cost driver in serverless computing is the duration for which a function is actively executing and consuming resources. Cloud providers typically charge based on the total execution time, measured in milliseconds or seconds, and the amount of memory allocated to the function. Cold starts, by their nature, extend this execution time, thereby increasing the overall cost.

Consider the following points:

- Increased Execution Duration: Cold starts necessitate the allocation of resources and the initialization of the function’s runtime environment, leading to a delay before the function’s code begins executing. This delay adds to the total execution time.

- Cost Per Millisecond/Second: Cloud providers establish a cost per unit of execution time, typically a very small fraction of a cent per millisecond, scaled by the allocated memory and the provider’s pricing model. Even short cold start durations can accumulate significant costs over time, especially for functions that are invoked frequently.

- Memory Allocation: The amount of memory allocated to a function influences both its performance and its cost. Functions with higher memory allocations can often execute faster, but they also incur a higher cost per unit of execution time. Cold starts can exacerbate the cost implications of memory allocation because the extra time spent initializing the function consumes the allocated memory resources.

Comparative Cost Analysis

Comparing functions with frequent cold starts to those with warm starts reveals the financial impact of cold start overhead. Consider two identical functions, Function A and Function B, designed to perform a simple calculation:

- Function A (Frequent Cold Starts): This function is invoked sporadically and experiences a cold start on each invocation. The cold start duration averages 500 milliseconds. The actual code execution takes 100 milliseconds. Therefore, the total execution time is 600 milliseconds.

- Function B (Warm Starts): This function is invoked frequently enough that it usually remains in a warm state. The execution time, without cold start overhead, is 100 milliseconds.

If the cost per 100 milliseconds of execution time is $0.001 (this is a simplified example), the cost calculation would be:

- Function A: 600 milliseconds / 100 milliseconds = 6 units × $0.001/unit = $0.006 per invocation.

- Function B: 100 milliseconds / 100 milliseconds = 1 unit × $0.001/unit = $0.001 per invocation.

In this simplified example, Function A costs six times more per invocation than Function B. Over thousands or millions of invocations, this difference can result in substantial cost discrepancies.
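The comparison can be scripted to see how the gap grows with volume. The sketch below uses the simplified rate from this example; rounding partial 100-millisecond units up is an added assumption about billing granularity, and the names are illustrative.

```python
import math

PRICE_PER_UNIT = 0.001  # dollars per 100 ms, the simplified rate used in this example
UNIT_MS = 100


def invocation_cost(cold_start_ms, execution_ms):
    """Bill the whole duration in 100 ms units, rounding partial units up (assumption)."""
    units = math.ceil((cold_start_ms + execution_ms) / UNIT_MS)
    return units * PRICE_PER_UNIT


function_a = invocation_cost(cold_start_ms=500, execution_ms=100)  # cold on every call
function_b = invocation_cost(cold_start_ms=0, execution_ms=100)    # always warm

for n in (1_000, 1_000_000):
    extra = (function_a - function_b) * n
    print(f"{n:>9,} invocations: extra cost from cold starts = ${extra:,.2f}")
```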

Scenario: Significant Execution Time Cost

In a high-volume, time-sensitive application, the execution time cost of cold starts becomes particularly significant. For example, consider a financial trading platform that uses serverless functions to process real-time market data and execute trades.

- High Frequency of Invocations: The trading platform might trigger functions thousands of times per second to react to price fluctuations.

- Stringent Latency Requirements: Even a slight delay in trade execution can lead to missed opportunities or financial losses.

- Cold Start Impact: If each function invocation experiences a cold start of 300 milliseconds, this adds up quickly. Over the course of a day, this additional latency, multiplied by the high invocation frequency, would substantially increase the execution time and, consequently, the costs.

The total cost can be estimated using the following formula, with durations expressed in milliseconds:

Total Cost = (Number of Invocations × (Cold Start Duration + Code Execution Time) / 1000) × Cost per Second

For instance, if the platform has 10,000 invocations per second, a 300-millisecond cold start, and a code execution time of 100 milliseconds, and the cost per second is $0.0001, the total cost would be:

Total Cost = (10,000 × (300 + 100) / 1000) × $0.0001 = $0.40 per second, or $34,560 per day.

Without cold starts, the same workload would cost (10,000 × 100 / 1000) × $0.0001 = $0.10 per second, or $8,640 per day.

If the cold start time could be reduced, or cold starts were avoided entirely, the cost would be lower. The financial impact of cold starts is thus amplified in scenarios with high invocation rates and stringent latency requirements.
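The formula is easy to get wrong by a factor of 1000 when mixing milliseconds and seconds, so it can help to evaluate it in code. The sketch below takes durations in milliseconds and converts them to seconds once; the function name and parameters are illustrative, and the inputs are the ones stated for the trading platform scenario.

```python
SECONDS_PER_DAY = 86_400


def cost_per_second(invocations_per_second, cold_start_ms, execution_ms, price_per_second):
    """Cost of the compute time started during one second of wall-clock time."""
    billed_seconds = invocations_per_second * (cold_start_ms + execution_ms) / 1000
    return billed_seconds * price_per_second


with_cold_starts = cost_per_second(10_000, 300, 100, 0.0001)
warm_only = cost_per_second(10_000, 0, 100, 0.0001)

print(f"with cold starts: ${with_cold_starts:.2f}/s, ${with_cold_starts * SECONDS_PER_DAY:,.0f}/day")
print(f"warm only:        ${warm_only:.2f}/s, ${warm_only * SECONDS_PER_DAY:,.0f}/day")
```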

Cost Implications

Monitoring and observability are crucial aspects of serverless applications, especially when considering the impact of cold starts. They provide insights into the performance and behavior of functions, allowing for identification and mitigation of issues. However, these tools themselves incur costs, and their usage must be carefully managed to avoid excessive spending, especially when dealing with the unpredictable nature of cold starts.

Monitoring and Observability Contribution to Cold Start Costs

Monitoring and observability tools contribute to cold start costs through several mechanisms. They generate data, which is then processed, stored, and analyzed, leading to resource consumption and associated expenses.

- Data Ingestion and Processing: Monitoring tools collect metrics, logs, and traces. The volume of this data increases during cold starts, as function invocations are often slower and more frequent. The processing of this increased data volume, including parsing, indexing, and aggregation, consumes CPU, memory, and network resources.

- Storage Costs: The collected data must be stored for analysis and historical tracking. Larger data volumes, as a result of more frequent cold starts, lead to higher storage costs. This is especially true for detailed tracing and logging, which can generate significant amounts of data.

- Analysis and Alerting: Sophisticated monitoring tools provide capabilities for analysis, alerting, and dashboards. These features require computational resources to perform calculations, run queries, and trigger notifications based on defined thresholds. Cold starts can trigger alerts, which, if not optimized, can generate unnecessary costs.

- Tooling Costs: The monitoring and observability tools themselves often come with subscription fees or usage-based pricing models. Increased data volume and the use of advanced features can lead to higher costs.

Cost Breakdown for Using Various Monitoring Tools to Track Cold Start Occurrences

The cost breakdown for monitoring cold starts varies depending on the chosen tools and their pricing models. It is crucial to understand the cost drivers for each tool.

Consider a hypothetical serverless application using three popular monitoring tools: CloudWatch (AWS), Datadog, and New Relic. The cost breakdown is illustrative and based on typical pricing structures as of late 2024, subject to change by the vendors. It assumes a scenario with 100,000 function invocations per month, and that 20% of these invocations experience a cold start, and the function generates 100KB of logs and metrics per invocation.

| Monitoring Tool | Cost Driver | Estimated Cost per Month | Notes |
|---|---|---|---|
| CloudWatch (AWS) | | | |
| Datadog | | | |
| New Relic | | | |
| Total Estimated Monthly Cost | | $1080 | |

Explanation: The total cost for monitoring cold starts using this combination of tools is $1080 per month. The cost breakdown illustrates that the cost varies greatly depending on the features used and the volume of data ingested. APM and detailed logging contribute significantly to the cost. These figures are estimates and may vary based on the specific usage and pricing tiers of each tool.
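Because most of these tools price by ingested volume, it can be useful to turn the scenario’s assumptions into a monthly data volume before comparing vendors. The sketch below only computes volume from the stated assumptions (100,000 invocations, 20% cold starts, 100KB per invocation); it uses decimal units and deliberately applies no vendor prices.

```python
INVOCATIONS_PER_MONTH = 100_000
COLD_START_RATE = 0.20
TELEMETRY_KB_PER_INVOCATION = 100


def monthly_telemetry_gb(invocations, kb_per_invocation):
    """Telemetry volume in decimal gigabytes (1 GB = 1,000,000 KB)."""
    return invocations * kb_per_invocation / 1_000_000


total_gb = monthly_telemetry_gb(INVOCATIONS_PER_MONTH, TELEMETRY_KB_PER_INVOCATION)
cold_starts = round(INVOCATIONS_PER_MONTH * COLD_START_RATE)
cold_start_gb = monthly_telemetry_gb(cold_starts, TELEMETRY_KB_PER_INVOCATION)

print(f"total telemetry:       {total_gb:.1f} GB/month")
print(f"cold start invocations: {cold_starts:,}")
print(f"cold start telemetry:  {cold_start_gb:.1f} GB/month")
```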

Methods to Optimize the Use of Monitoring Tools to Reduce Associated Costs

Several strategies can be employed to optimize the use of monitoring tools and reduce the associated costs, particularly related to cold starts.

- Data Sampling and Filtering: Implement data sampling to reduce the volume of data ingested. Filter out unnecessary logs and metrics that do not provide valuable insights into cold start behavior. For example, only log detailed information for functions experiencing cold starts, or filter logs based on specific request parameters.

- Custom Metrics and Aggregation: Define custom metrics that directly measure cold start duration and frequency. Aggregate data at the function level to reduce the granularity of data stored. This reduces the volume of data to be ingested and processed.

- Optimized Logging Levels: Use appropriate logging levels. Increase logging verbosity only when necessary for debugging or troubleshooting cold start issues. Reduce logging verbosity during normal operations to minimize data volume.

- Alerting Optimization: Fine-tune alert thresholds to avoid unnecessary notifications. Set alerts based on trends rather than individual cold start occurrences. Configure alerts to only trigger when the cold start duration exceeds a specific threshold for a certain period.

- Tool Selection: Choose monitoring tools that offer cost-effective pricing models. Consider tools with pay-as-you-go pricing, data retention policies, and features that are specifically designed for serverless applications.

- Automated Remediation: Implement automated remediation strategies. For example, automatically increase the provisioned concurrency of a function when cold start durations exceed a threshold. This reduces the impact of cold starts and the need for excessive monitoring.

- Regular Review and Optimization: Regularly review the configuration of monitoring tools and optimize them. Periodically analyze the data and adjust the logging levels, sampling rates, and alert thresholds based on the observed performance.
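The sampling and logging-level ideas above can be combined in a small helper. This is a sketch under stated assumptions: the 5% sample rate, the logger name, and the function names are hypothetical, and the caller is assumed to already know whether the invocation was a cold start.

```python
import logging
import random

logger = logging.getLogger("coldstart")

WARM_SAMPLE_RATE = 0.05  # hypothetical: keep detailed records for 5% of warm invocations


def should_log_detail(is_cold_start, rng=random):
    """Always keep detail for cold starts; sample warm invocations."""
    return is_cold_start or rng.random() < WARM_SAMPLE_RATE


def record_invocation(is_cold_start, init_ms, duration_ms, rng=random):
    """Emit one compact record; return True if a record was emitted."""
    if not should_log_detail(is_cold_start, rng):
        return False
    # Cold starts are logged at INFO, sampled warm invocations at DEBUG.
    level = logging.INFO if is_cold_start else logging.DEBUG
    logger.log(level, "cold=%s init_ms=%.1f duration_ms=%.1f",
               is_cold_start, init_ms, duration_ms)
    return True
```

Passing a seeded `random.Random` instance as `rng` makes the sampling reproducible in tests, while production code can rely on the default.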

Cost Implications

The financial impact of serverless cold starts extends beyond the immediate execution time. A comprehensive understanding of how cold starts affect resource allocation, scaling strategies, and overall capacity planning is crucial for optimizing costs and ensuring efficient application performance. In this section, we delve into the specific ways in which cold starts influence these aspects of serverless deployments.

Scaling and Capacity Planning

Cold starts introduce complexities into scaling and capacity planning strategies. Effectively managing the number of concurrent function instances to meet demand while minimizing the impact of cold starts is essential for cost optimization.

Auto-scaling mechanisms, driven by metrics such as invocation count and latency, attempt to dynamically adjust the number of function instances. However, the inherent variability in cold start duration adds a layer of uncertainty to this process. Over-provisioning, where more resources are allocated than strictly needed, can become necessary to mitigate the risk of cold starts causing service degradation during peak load. This, in turn, leads to increased costs. Under-provisioning, on the other hand, risks increased latency and potential service unavailability due to cold starts. The balance is delicate.

For example, consider an e-commerce application deployed using AWS Lambda. During a flash sale event, the application experiences a surge in user requests. If the scaling strategy is solely based on request volume, the system might struggle to handle the initial burst of traffic if cold starts are frequent. The auto-scaling mechanism would attempt to spin up new function instances to handle the increased load, but if each new instance takes a significant amount of time to become ready (due to cold starts), users may experience increased latency and potentially timeouts.

To illustrate the cost implications of auto-scaling in this scenario, let’s assume the following:

- Average cold start duration: 500 milliseconds.

- Average function execution time: 100 milliseconds.

- Cost per function execution: $0.0000002 per execution (hypothetical).

- Scaling trigger: Increase in concurrent requests above a threshold.

If the auto-scaling mechanism is configured to aggressively provision new instances in response to the increase in concurrent requests, the cost associated with those instances could increase. Furthermore, the increased resource usage of idle function instances, waiting to handle requests, can lead to additional costs.

The impact can be modeled mathematically. Let:

- `C_cs` = Cost incurred due to cold starts.

- `N_cs` = Number of cold starts.

- `C_ex` = Cost per execution.

- `T_cs` = Time spent in cold start (seconds).

The cost can be estimated as a factor of both the execution cost and the cost of the additional time spent waiting for the cold start to complete. The formula can be represented as:

`C_cs = N_cs × (C_ex + (C_ex × T_cs) / T_execution_time)`

Where `T_execution_time` is the average function execution time, in seconds.

This formula demonstrates that the longer the cold start duration (`T_cs`) and the greater the number of cold starts (`N_cs`), the higher the overall cost. Effective capacity planning and scaling strategies, which take cold start durations into account, are essential to minimize `N_cs` and, consequently, the overall cost.

As another example, suppose a video processing application runs on Google Cloud Functions. If the application uses a scaling policy based on the number of requests in the queue, it may create many instances in anticipation of requests. If the functions take a significant time to start due to cold starts, the application may spend more time in an idle state. This also translates to higher costs.
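The cold start cost model can be evaluated directly with the assumptions listed for the flash sale scenario. In this sketch the count of 10,000 cold starts is a hypothetical input chosen for illustration; the other values come from the assumptions above, and the function name is illustrative.

```python
def cold_start_cost(n_cs, c_ex, t_cs, t_execution_time):
    """C_cs = N_cs * (C_ex + (C_ex * T_cs) / T_execution_time), times in seconds."""
    if t_execution_time <= 0:
        raise ValueError("t_execution_time must be positive")
    return n_cs * (c_ex + (c_ex * t_cs) / t_execution_time)


# 500 ms cold start, 100 ms execution, $0.0000002 per execution (from the scenario).
# 10,000 cold starts is a hypothetical count for the surge.
surge_cost = cold_start_cost(n_cs=10_000, c_ex=0.0000002, t_cs=0.5, t_execution_time=0.1)
halved_cold_start = cold_start_cost(n_cs=10_000, c_ex=0.0000002, t_cs=0.25, t_execution_time=0.1)

print(f"C_cs with 500 ms cold starts: ${surge_cost:.4f}")
print(f"C_cs with 250 ms cold starts: ${halved_cold_start:.4f}")
```

Under this model, each cold start costs the base execution price scaled by `1 + T_cs / T_execution_time`, so shortening cold starts and reducing their number both lower `C_cs` linearly.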

Strategies to Mitigate Cold Starts

Mitigating cold starts is crucial for maintaining the performance and cost-effectiveness of serverless applications. Several strategies exist to reduce the frequency and impact of cold starts. This section will delve into one such strategy: the “keep-alive” mechanism.

Keep-alive Strategy: Function Instance Preservation

The “keep-alive” strategy aims to reduce cold start frequency by preserving function instances for a period of time after they have finished processing a request. Instead of completely terminating the function’s execution environment, the platform retains it in a “warm” state, ready to handle subsequent requests. This eliminates the need to re-initialize the function environment from scratch, significantly reducing the latency associated with cold starts.

The effectiveness of this strategy depends on the platform’s implementation and the function’s configuration. For example, AWS Lambda, Azure Functions, and Google Cloud Functions each employ different mechanisms and offer varying levels of control over the keep-alive behavior. How long an idle instance stays warm is largely decided by the platform; where related controls exist (for example, a configurable minimum number of warm instances), developers can use them to balance performance and cost.

Cost Considerations of Implementing Keep-alive Mechanisms

Implementing keep-alive mechanisms introduces new cost considerations. While keep-alive reduces the number of cold starts, it also incurs costs associated with the function instances remaining active. These costs are primarily related to the time the function instances are in a warm state, consuming resources even when not actively processing requests.

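- Resource Consumption: Warm function instances consume memory and potentially other resources, such as CPU cycles, and whether that consumption is billed depends on the platform’s pricing model. For instance, AWS Lambda charges for provisioned concurrency for as long as it is configured, even when the instances are idle, whereas on-demand environments that the platform keeps warm between invocations are not billed while idle.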

- Pricing Models: The specific pricing model for warm instances varies across cloud providers. Some providers charge based on the duration the instance is active, while others might have a minimum billing duration. Understanding the pricing model is crucial for accurately estimating the cost of keep-alive.

- Configuration and Optimization: The duration for which function instances are kept alive (the “keep-alive duration”) is a critical configuration parameter. A longer keep-alive duration reduces cold start frequency but increases the cost of idle resources. Conversely, a shorter keep-alive duration reduces idle resource costs but may increase cold start frequency. Optimizing this duration requires careful consideration of the application’s traffic patterns and performance requirements.

Comparative Analysis: Functions With and Without Keep-alive

Comparing the costs of functions with and without keep-alive requires a quantitative analysis that considers various factors, including request frequency, cold start frequency, cold start duration, and the pricing model of the serverless platform. The optimal choice depends on the specific application and its workload characteristics.

Consider two scenarios: a function with no keep-alive and a function with keep-alive enabled.

Scenario 1: No Keep-alive

In this scenario, each request triggers a cold start. The total cost will be the sum of the invocation costs and the cost of cold start latency.

Scenario 2: Keep-alive Enabled

In this scenario, some requests will benefit from a warm function instance, avoiding cold starts. The total cost includes invocation costs, the cost of the warm function instances (idle time), and a reduced cost due to fewer cold starts.

Let’s consider a simplified model. Assume a function receives an average of 100 requests per minute and the keep-alive duration is set to 5 minutes. The cold start duration is 500ms, the invocation cost is $0.0000002 per request, and the idle cost is $0.0000001 per second. Assume further that the cold start rate is 50% without keep-alive and 10% with keep-alive. We can then compare the estimated cost of both scenarios.

Scenario 1: No Keep-alive

- Cold start frequency = 50% × 100 requests/minute = 50 cold starts/minute.

- Total cold start duration = 50 cold starts/minute × 0.5 second/cold start = 25 seconds/minute.

- Cost = (100 requests × $0.0000002/request) + (25 seconds × $0.0000001/second) = $0.00002 + $0.0000025 = $0.0000225 per minute.

Scenario 2: Keep-alive Enabled

- Cold start frequency = 10% × 100 requests/minute = 10 cold starts/minute.

- Total cold start duration = 10 cold starts/minute × 0.5 second/cold start = 5 seconds/minute.

- Warm instances idle time = 5 minutes × 60 seconds/minute = 300 seconds.

- Cost = (100 requests × $0.0000002/request) + (5 seconds × $0.0000001/second) + (300 seconds × $0.0000001/second) = $0.00002 + $0.0000005 + $0.00003 = $0.0000505 per minute.

In this simplified example, the cost of the keep-alive enabled scenario is higher, because of the idle cost. However, the actual cost will depend on the specific parameters such as invocation frequency, cold start duration, and the pricing model of the serverless platform. If the cold start duration is very high and invocation is frequent, then keep-alive can lead to a lower cost and better performance.
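The two scenarios can be compared in code, which also makes it easy to find the point at which keep-alive stops paying for itself. The sketch below follows the simplified model’s assumption that cold start time and warm idle time are charged at the same per-second rate; the function names are illustrative.

```python
def cost_per_minute(requests, cold_start_rate, cold_start_s,
                    invocation_price, idle_price_per_s, idle_seconds=0.0):
    """Invocation charges plus time spent cold-starting plus time spent idle-warm."""
    cold_start_seconds = requests * cold_start_rate * cold_start_s
    billed_seconds = cold_start_seconds + idle_seconds
    return requests * invocation_price + billed_seconds * idle_price_per_s


def break_even_idle_seconds(requests, rate_without, rate_with, cold_start_s):
    """Idle seconds per minute at which keep-alive costs the same as no keep-alive.

    Valid only because the model prices cold start time and idle time identically.
    """
    return requests * (rate_without - rate_with) * cold_start_s


no_keep_alive = cost_per_minute(100, 0.50, 0.5, 0.0000002, 0.0000001)
keep_alive = cost_per_minute(100, 0.10, 0.5, 0.0000002, 0.0000001, idle_seconds=300)
break_even = break_even_idle_seconds(100, 0.50, 0.10, 0.5)

print(f"no keep-alive: ${no_keep_alive:.7f} per minute")
print(f"keep-alive:    ${keep_alive:.7f} per minute")
print(f"break-even idle time: {break_even:.0f} seconds per minute")
```

With these inputs, keep-alive only lowers cost when the billed idle time stays below the break-even value, which is why the outcome is so sensitive to traffic patterns and to how idle time is priced.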

Strategies to Mitigate Cold Starts

To address the performance impact of cold starts in serverless environments, several strategies can be employed. These techniques aim to minimize or eliminate the latency introduced by the function initialization process, ultimately improving the user experience and reducing operational costs. One such strategy involves proactively managing function instances to ensure they are readily available to handle incoming requests.

Provisioned Concurrency

Provisioned concurrency is a serverless function feature designed to keep a specified number of function instances initialized and ready to respond to requests. By pre-warming function instances, the system eliminates cold starts for a portion of the traffic, providing consistent and predictable performance. This proactive approach contrasts with the reactive scaling of standard serverless functions, which can lead to cold starts during periods of increased demand.

The cost structure associated with provisioned concurrency differs significantly from the standard pay-per-use model. Instead of being charged only for the duration of function execution, provisioned concurrency incurs charges based on the amount of concurrency provisioned and the duration for which it is provisioned. This means that even if the function is not actively processing requests, the provisioned instances still contribute to the overall cost.

The pricing model typically includes:

- Provisioned Concurrency Cost: A per-hour charge for the number of concurrent instances provisioned. This is independent of the number of invocations.

- Invocation Cost: A standard per-invocation charge, similar to the pay-per-use model, for requests that are actually served by the provisioned concurrency. This cost is only incurred when the function processes a request.

- Data Transfer Costs: Standard data transfer costs associated with the function’s interactions with other services or resources.

The following table illustrates the cost comparison of different provisioned concurrency configurations, using example figures. Note that these are illustrative and actual pricing may vary depending on the cloud provider, region, function resource requirements (memory, CPU), and the specific pricing model in effect.

| Provisioned Concurrency | Hourly Cost (Example) | Invocation Cost (Example, per million invocations) | Benefit |
|---|---|---|---|
| 0 (No Provisioned Concurrency) | $0 | $0.20 | No upfront cost, subject to cold starts |
| 10 | $1.00 | $0.20 | Eliminates cold starts for up to 10 concurrent requests, predictable performance |
| 100 | $10.00 | $0.20 | Handles significantly more concurrent requests without cold starts, suitable for higher traffic |
| 1000 | $100.00 | $0.20 | Maximizes cold start elimination, ideal for applications with very high and consistent traffic demands |

Data transfer costs are not included in the table, as they depend on the volume of data transferred and would be the same across all configurations. The example hourly and invocation costs are hypothetical and meant only to illustrate the cost implications. In this example, increasing provisioned concurrency raises the hourly cost and removes cold starts for requests served within the provisioned capacity, which improves performance.

The invocation cost is the same across all scenarios, as it only represents the cost of executing the function itself.
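To see how the fixed and variable parts of this pricing combine, the table's example figures can be turned into a small calculation. This is a sketch under stated assumptions: the per-instance hourly rate is derived from the table ($1.00 per hour for 10 instances), the 730-hour month is an approximation, and the function name `monthly_cost` is illustrative.

```python
HOURLY_PRICE_PER_INSTANCE = 0.10       # derived from the example table
PRICE_PER_MILLION_INVOCATIONS = 0.20   # example figure from the table
HOURS_PER_MONTH = 730                  # assumed average month length


def monthly_cost(provisioned: int, invocations: int,
                 hours: float = HOURS_PER_MONTH) -> dict:
    """Split the monthly bill into the fixed and the per-invocation part."""
    fixed = provisioned * HOURLY_PRICE_PER_INSTANCE * hours
    variable = invocations / 1_000_000 * PRICE_PER_MILLION_INVOCATIONS
    return {"fixed": fixed, "variable": variable, "total": fixed + variable}


# Compare the table's configurations for a workload of 5 million invocations.
for level in (0, 10, 100, 1000):
    bill = monthly_cost(level, invocations=5_000_000)
    print(f"{level:>4} provisioned: ${bill['total']:.2f} "
          f"(fixed ${bill['fixed']:.2f}, invocations ${bill['variable']:.2f})")
```

With these example numbers the fixed charge dominates quickly, which is why the provisioned level is usually sized to expected concurrency rather than to peak traffic.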

Strategies to Mitigate Cold Starts

Mitigating cold starts is crucial for maintaining application performance and user experience in serverless environments. Several strategies can be employed to reduce the frequency and duration of cold starts, each with its own trade-offs in terms of cost, complexity, and effectiveness. This section will explore one such strategy: the use of warm-up scripts.

Warm-up Scripts

Warm-up scripts are automated processes designed to proactively invoke serverless functions, thereby keeping them warm and reducing the likelihood of cold starts. They function by periodically triggering the function, ensuring that an instance remains active and ready to serve requests.

To understand the implementation, consider the following steps:

- Script Creation: The first step involves creating a script, typically written in a language supported by your serverless platform (e.g., Python, Node.js). This script’s primary function is to invoke the target serverless function. The script needs to be configured with the necessary authentication credentials and the function’s endpoint or trigger mechanism (e.g., API Gateway URL, event source mapping). For example, a Python script using the `requests` library could send a GET request to the function’s API endpoint.

- Deployment: The warm-up script itself needs to be deployed. This usually involves deploying the script to a platform that can execute it on a schedule. A common approach is to use a serverless function, scheduled by a service like AWS CloudWatch Events (now EventBridge), Google Cloud Scheduler, or Azure Functions Timer Trigger. This allows the script to run periodically without requiring dedicated infrastructure.

- Scheduling: Configure the scheduler to trigger the warm-up script at regular intervals. The frequency of the invocations depends on the function’s expected traffic and the desired cold start mitigation level. More frequent invocations lead to a higher probability of keeping the function warm but also increase the associated costs. A common practice is to schedule the warm-up script to run every few minutes (e.g., every 5 minutes). The specific interval needs to be determined based on the function’s usage patterns and performance requirements.

- Monitoring: Implement monitoring to track the effectiveness of the warm-up script. This involves monitoring the function’s cold start metrics (e.g., duration, frequency) and the warm-up script’s execution logs. Analyzing these metrics allows you to fine-tune the scheduling frequency and ensure that the script is achieving its intended purpose.
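The steps above can be sketched as a small Python script. This is an illustrative outline rather than a definitive implementation: the URL, the `X-Warmup` header, and the `handler(event, context)` entry point are all assumptions, and the standard library's `urllib` is used in place of `requests` so the example has no third-party dependencies.

```python
import time
import urllib.request
from typing import Callable

# Hypothetical endpoint; replace with the target function's real URL.
TARGET_URL = "https://example.com/my-function"
# Assumed header name that the target function could check to skip real work.
WARMUP_HEADER = "X-Warmup"


def http_get(url: str, timeout: float) -> int:
    """Send a GET request marked as a warm-up ping and return the HTTP status."""
    request = urllib.request.Request(url, headers={WARMUP_HEADER: "1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


def warm_up(url: str = TARGET_URL,
            fetch: Callable[[str, float], int] = http_get,
            timeout: float = 5.0) -> dict:
    """Ping the target once and report status and round-trip latency."""
    started = time.perf_counter()
    try:
        status = fetch(url, timeout)
        ok = 200 <= status < 300
    except OSError:  # urllib's URLError and HTTPError both derive from OSError
        status, ok = None, False
    latency_ms = (time.perf_counter() - started) * 1000
    return {"ok": ok, "status": status, "latency_ms": latency_ms}


def handler(event, context):
    """Entry point for a scheduler-triggered function (signature is an assumption)."""
    result = warm_up()
    print(result)  # logged so that latency can be tracked over time
    return result
```

Passing the fetch function as a parameter keeps the script testable without network access. Note that a single periodic ping of this kind keeps at most one instance warm.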

Cost Implications of Warm-up Scripts

The cost of using warm-up scripts is primarily associated with the resources consumed by the warm-up script itself and the function invocations it triggers.

These costs are dependent on several factors:

- Function Invocations: Each invocation of the serverless function by the warm-up script incurs the same cost as a regular function invocation. This includes compute time, memory usage, and any associated platform fees.

- Warm-up Script Execution: The warm-up script also consumes resources when it runs. The cost of this is usually minimal, depending on the script’s complexity and the platform used to host it (e.g., the cost of running a small serverless function or the cost of running a scheduled task).

- Frequency of Invocations: The more frequently the warm-up script runs, the higher the associated costs. A higher frequency will result in more function invocations, increasing the overall expenditure.

Compared to other cold start mitigation strategies, such as provisioned concurrency, warm-up scripts can be more cost-effective for workloads with variable traffic patterns or relatively low traffic. Provisioned concurrency, while potentially reducing cold starts to zero, incurs a fixed cost based on the provisioned capacity, regardless of the actual traffic. In contrast, warm-up scripts only incur costs when they run.

However, for high-traffic applications where cold starts must be avoided, provisioned concurrency might be more appropriate despite the higher cost.

Consider the following example:

A serverless function receives approximately 1,000 invocations per day, and the average cold start duration is 500 ms. A warm-up script invokes the function every 5 minutes. The cost of a single function invocation is $0.0000002, and the warm-up script itself consumes negligible resources. The script therefore triggers 288 invocations per day (60 minutes / 5 minutes × 24 hours), at a cost of 288 × $0.0000002 = $0.0000576 per day. This is a relatively small cost compared with the degraded user experience that frequent cold starts would cause without the warm-up script.
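The same calculation can be parameterized to compare different warm-up intervals. This is a minimal sketch using the per-invocation price from the example above; the function name `warmup_cost_per_day` is illustrative, and compute-duration charges are ignored, as they are in the example.

```python
MINUTES_PER_DAY = 24 * 60


def warmup_cost_per_day(interval_minutes: int,
                        price_per_invocation: float = 0.0000002) -> tuple[int, float]:
    """Return (pings per day, daily cost) for a given warm-up interval."""
    pings = MINUTES_PER_DAY // interval_minutes
    return pings, pings * price_per_invocation


# Compare a few candidate intervals, including the 5-minute one from the text.
for interval in (1, 5, 15):
    pings, cost = warmup_cost_per_day(interval)
    print(f"every {interval:>2} min: {pings:>4} pings/day, ${cost:.7f}/day")
```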

Cost Optimization: Code Optimization and Refactoring

Optimizing code directly impacts the performance of serverless functions, including cold start times. While not a direct fix, efficient code execution minimizes the time a function spends initializing, leading to faster cold start times. This, in turn, reduces the overall resource consumption and associated costs.

Indirect Impact of Code Optimization on Cold Start Times

Code optimization indirectly affects cold start times by reducing the amount of work the function needs to perform during initialization. A smaller, more efficient codebase loads faster, leading to quicker function readiness. This is particularly crucial for serverless environments where resources are provisioned on demand. Faster initialization translates to reduced latency and lower resource consumption, which contributes to cost savings.

Code Refactoring Techniques for Performance Improvement

Several code refactoring techniques can significantly improve performance, contributing to faster cold starts. These techniques focus on streamlining code execution and minimizing resource utilization.

- Lazy Loading: Implement lazy loading for dependencies. Instead of loading all dependencies at function initialization, load them only when they are needed. This reduces the initial loading time. For example, if a function only uses a specific library under certain conditions, load the library only when those conditions are met.

- Code Splitting: Break down large codebases into smaller, modular components. This allows for selective loading of code, only fetching the necessary parts during initialization. This approach is particularly useful for functions with multiple functionalities.

- Optimized Algorithm Selection: Choose the most efficient algorithms for specific tasks. For example, when sorting large datasets, use an algorithm with a lower time complexity (e.g., merge sort or quicksort) compared to less efficient algorithms (e.g., bubble sort).

- Reduce External Dependencies: Minimize the number of external libraries and dependencies. Each dependency adds to the function’s size and initialization time. Evaluate dependencies carefully and remove any unnecessary ones.

- Caching: Implement caching mechanisms to store frequently accessed data or results. This reduces the need to recompute or retrieve data from external sources, improving performance. For example, cache the results of database queries.

- Minification and Bundling: Minify and bundle the code, especially for languages like JavaScript, to reduce file sizes and improve loading times. This involves removing unnecessary characters (minification) and combining multiple files into a single file (bundling).

- Profiling and Performance Testing: Regularly profile the code to identify performance bottlenecks. Use profiling tools to pinpoint areas where the code is slow and then optimize those sections. Performance testing ensures that code changes improve performance.
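Two of the techniques above, lazy loading and caching, can be illustrated together in a short Python sketch. The handler signature, the `slow_lookup` stand-in, and the `CALLS` counter are hypothetical and exist only to make the behavior visible; `csv` stands in for a heavier dependency that only one code path needs.

```python
import functools
import importlib
import io

CALLS = {"slow_lookup": 0}  # counter used only to make the caching visible


@functools.lru_cache(maxsize=None)
def lazy_module(name: str):
    """Import a module on first use and reuse it on later calls."""
    return importlib.import_module(name)


@functools.lru_cache(maxsize=256)
def slow_lookup(key: str) -> str:
    """Stand-in for an expensive query; results are cached per warm instance."""
    CALLS["slow_lookup"] += 1
    return key.upper()


def handler(event: dict, context=None) -> str:
    value = slow_lookup(event["key"])
    if event.get("format") == "csv":
        csv = lazy_module("csv")  # loaded only when the CSV path is taken
        buffer = io.StringIO()
        csv.writer(buffer).writerow([event["key"], value])
        return buffer.getvalue().strip()
    return value
```

The cache lives in the instance's memory, so it helps only while that instance stays warm; a cold start begins with an empty cache.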

Benefits of Code Optimization for Cost Savings

Code optimization translates directly into cost savings in several ways, particularly within a serverless architecture. These benefits are quantifiable and contribute to the overall efficiency of the function.

- Reduced Execution Time: Faster function execution leads to lower compute costs. Serverless platforms typically charge based on execution time, so reducing this time directly translates to cost savings.

- Lower Resource Consumption: Optimized code consumes fewer resources (CPU, memory). This can result in lower costs, especially if the serverless platform charges based on resource usage.

- Decreased Cold Start Frequency: While optimization does not eliminate cold starts, faster execution frees instances sooner, so fewer new instances need to be initialized during periods of high demand. This helps in maintaining consistent performance and predictable costs.

- Improved Scalability: Optimized code scales more efficiently. As the workload increases, the function can handle more requests with the same resources, reducing the need to scale up and incur additional costs.

- Enhanced Overall Efficiency: Code optimization improves the overall efficiency of the function. This allows for better resource utilization, resulting in lower operational costs.
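The effect of reduced execution time on the bill can be estimated with the GB-second formula that most pay-per-use platforms apply. The sketch below uses made-up workload numbers (10 million invocations, 512 MB, 400 ms reduced to 250 ms) and an illustrative price per GB-second; the function name `compute_cost` is hypothetical.

```python
def compute_cost(invocations: int,
                 avg_duration_ms: float,
                 memory_mb: int,
                 price_per_gb_second: float = 0.0000166667) -> float:
    """Estimate compute cost as invocations x duration (s) x memory (GB) x rate."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return gb_seconds * price_per_gb_second


# Hypothetical workload before and after optimization.
before = compute_cost(10_000_000, avg_duration_ms=400, memory_mb=512)
after = compute_cost(10_000_000, avg_duration_ms=250, memory_mb=512)
print(f"before: ${before:.2f}, after: ${after:.2f}, saved: ${before - after:.2f}")
```

Because cost is linear in duration under this model, a 37.5% cut in average duration yields the same percentage cut in compute cost.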

Cost Optimization

The choice of serverless platform significantly impacts the cost implications of cold starts. Different providers offer varying pricing models, cold start performance characteristics, and resource allocation strategies, leading to substantial differences in overall expenses. Careful selection of the appropriate platform is crucial for minimizing cold start-related costs and optimizing the efficiency of serverless applications.

Choosing the Right Platform

The selection of a serverless platform directly influences cold start performance and associated costs. Providers implement diverse architectures and pricing structures, impacting both the frequency and duration of cold starts, which directly translates to financial expenditures. Understanding these differences is essential for informed decision-making.

- Platform Architecture: The underlying infrastructure of each provider impacts cold start times. Some platforms utilize container-based environments, which can experience longer cold start durations due to the overhead of container initialization. Others leverage pre-warmed execution environments or optimized runtime configurations to mitigate this issue.

- Pricing Models: Pricing models vary considerably. Some providers charge per execution duration, while others incorporate factors like memory allocation and request volume. Cold starts, which contribute to execution time, directly affect these costs. The pricing model’s impact can be magnified by frequent cold starts.

- Resource Allocation: The ability to control resource allocation (e.g., memory, CPU) influences both performance and cost. Allocating more resources might reduce cold start times but also increase costs. Conversely, under-allocating resources could prolong cold starts and potentially lead to performance degradation.

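- Concurrency Limits: Concurrency limits, the maximum number of concurrent function instances, also play a crucial role. When traffic exceeds the number of warm instances, new requests trigger cold starts as additional instances are initialized, increasing costs. Once the concurrency limit itself is reached, further requests are typically throttled or queued rather than served. Some platforms provide features to manage concurrency, such as provisioned concurrency, to mitigate this.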

Comparing Serverless Provider Cost Models

Serverless providers offer different cost models and cold start characteristics. A comparative analysis reveals the varying trade-offs between performance, cost, and resource management. The following table provides a high-level overview of key considerations for different platforms.

| Provider | Cold Start Performance (Typical) | Pricing Model (Example) | Cost Considerations |
|---|---|---|---|
| AWS Lambda | Variable, dependent on language, memory allocation, and runtime environment. Generally, 100ms to several seconds. | Pay-per-use: Price per GB-second of execution time and per request. Free tier available. | |
| Google Cloud Functions | Variable, influenced by region, language, and resource allocation. Typically, 100ms to a few seconds. | Pay-per-use: Price per GB-second of execution time and per invocation. Free tier available. | |
| Azure Functions | Variable, influenced by the chosen hosting plan (Consumption Plan, Premium Plan, App Service Plan). Typically, 100ms to several seconds. | Pay-per-use: Price per GB-second of execution time and per execution. Free tier available. Premium Plan offers pre-warmed instances. | |
| Cloudflare Workers | Fast, often in the tens of milliseconds. Designed for low-latency execution. | Pay-per-use: Price per 1 million requests and per GB-second of execution time. Free tier available. | |

Last Recap

In conclusion, the cost implications of serverless cold starts are multifaceted, extending beyond mere execution time to encompass resource utilization, monitoring overhead, and scaling strategies. Effectively managing these costs requires a comprehensive approach, including code optimization, platform selection, and the strategic implementation of mitigation techniques such as keep-alive mechanisms and provisioned concurrency. By carefully analyzing these factors and employing appropriate strategies, organizations can harness the full potential of serverless computing while maintaining cost-efficiency and ensuring optimal application performance.

General Inquiries

What is a serverless cold start?

A serverless cold start is the initial delay experienced when a serverless function is invoked while no initialized instance is available, so the underlying infrastructure must be provisioned and initialized before the function code can begin executing. Depending on the platform and runtime, this delay typically ranges from around 100 ms to several seconds and adds directly to request latency.

How does code size affect cold start times?

Larger codebases typically result in longer cold start times. The serverless platform needs to download, unpack, and initialize more code, and the runtime environment must load the function’s code together with all of its dependencies before the first request can be handled.

What are some common strategies to mitigate cold starts?

Common mitigation strategies include keep-alive mechanisms, provisioned concurrency, and warm-up scripts. These approaches aim to pre-initialize function instances, reducing or eliminating the need for cold starts.

How does the choice of programming language impact cold start performance?

Different programming languages have varying cold start performance characteristics. Runtimes such as Java and .NET often have longer cold start times than Node.js or Python because of the overhead of initializing the Java Virtual Machine (JVM) or the .NET runtime.

Can monitoring tools help reduce cold start costs?

Yes, monitoring tools provide valuable insights into cold start frequency and duration. By identifying functions with frequent cold starts, developers can prioritize optimization efforts and select appropriate mitigation strategies, thus reducing associated costs.
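As a rough illustration of the data such monitoring can build on, a function can tag its own invocations as cold or warm. The sketch below relies on the fact that module-level code runs once per execution environment; the handler signature and the log field names are assumptions, not a platform API.

```python
import time

# Module-level code runs once, when a new instance is initialized.
_INSTANCE_STARTED = time.time()
_STATE = {"cold": True}


def handler(event, context=None) -> dict:
    """Report whether this invocation was the first one on its instance."""
    cold = _STATE["cold"]
    _STATE["cold"] = False  # every later call on this instance is warm
    record = {
        "cold_start": cold,
        "instance_age_s": round(time.time() - _INSTANCE_STARTED, 3),
    }
    print(record)  # structured log line that a monitoring tool can aggregate
    return record
```

Counting log records with `cold_start` set to true gives a per-function cold start rate that can guide the choice of mitigation strategy.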