Serverless computing, a paradigm shift in software development, has revolutionized how applications are built and deployed. Moving beyond traditional infrastructure management, serverless allows developers to focus on writing code without the overhead of server provisioning and maintenance. This shift has unlocked unprecedented levels of agility, scalability, and cost efficiency, leading to its widespread adoption across various industries. This analysis delves into the common use cases that exemplify the power and versatility of serverless, exploring its impact on modern software development practices.

This exploration will cover diverse applications, from web application development and API management to data processing and IoT applications. We’ll dissect how serverless architectures streamline operations, reduce costs, and accelerate time-to-market. Furthermore, we’ll examine how serverless empowers developers to build scalable, resilient, and cost-effective solutions across a wide range of use cases, ultimately transforming the landscape of software engineering.

Introduction to Serverless Computing

Serverless computing represents a paradigm shift in cloud computing, abstracting away server management from developers and focusing on code execution. This allows for increased agility, scalability, and cost efficiency, changing the way applications are built and deployed. This introduction will explore the fundamental concepts, historical context, and key advantages of adopting a serverless architecture.

Core Concept of Serverless Computing

Serverless computing, despite its name, does not eliminate servers. Instead, it removes the need for developers to manage them. The underlying infrastructure, including servers, operating systems, and scaling, is handled by the cloud provider. Developers focus solely on writing and deploying code, which is executed in response to events, such as HTTP requests, database updates, or scheduled triggers. This event-driven approach allows for automatic scaling; the cloud provider dynamically allocates resources based on the workload.

Brief History of Serverless Computing’s Evolution

The evolution of serverless computing is tied to the progression of cloud computing itself. It started with the concept of Function-as-a-Service (FaaS) around the early 2010s.The progression is marked by:

- Early Cloud Computing (2000s): The initial stages involved Infrastructure-as-a-Service (IaaS), providing virtualized computing resources. This gave developers more control but still required significant server management.

- Platform-as-a-Service (PaaS) Emergence (Mid-2000s): PaaS simplified application deployment and management, but developers still had to manage the underlying platform.

- Function-as-a-Service (FaaS) Revolution (Early 2010s): FaaS, a key component of serverless, emerged. Amazon Web Services (AWS) Lambda, launched in 2014, was a pioneering FaaS platform, allowing developers to execute code without managing servers.

- Serverless Architecture Expansion (Mid-2010s – Present): The concept of serverless expanded beyond FaaS to encompass other services, such as serverless databases, storage, and API gateways. This created a complete serverless ecosystem.

- Continuous Evolution and Maturity (Present): Serverless technologies are continually evolving, with improvements in performance, security, and support for various programming languages and frameworks. The adoption rate is increasing across industries.

Main Advantages of Adopting a Serverless Architecture

Serverless architecture offers several advantages over traditional server-based approaches. These benefits contribute to increased efficiency, reduced costs, and improved developer productivity. The key advantages are:

- Reduced Operational Overhead: With serverless, the cloud provider manages the infrastructure, including servers, operating systems, and scaling. Developers no longer need to provision, configure, or maintain servers, reducing operational overhead and freeing up time for coding.

- Automatic Scaling: Serverless platforms automatically scale resources based on demand. This means applications can handle sudden spikes in traffic without manual intervention. The platform automatically allocates more resources when needed and scales down when the demand decreases.

- Cost Efficiency: Serverless computing typically employs a pay-per-use model. You are charged only for the actual compute time and resources consumed by your code. This can lead to significant cost savings, especially for applications with variable or unpredictable workloads. The absence of idle server costs is a key factor.

- Increased Developer Productivity: Developers can focus solely on writing and deploying code, as the underlying infrastructure is managed by the cloud provider. This streamlines the development process, reduces deployment times, and allows developers to iterate faster.

- Improved Agility and Faster Time-to-Market: Serverless architecture allows for rapid development and deployment. The ease of scaling and the reduced operational overhead enable faster time-to-market for new features and applications.

- Enhanced Fault Tolerance: Serverless platforms are designed to be highly available and fault-tolerant. The underlying infrastructure is often distributed across multiple availability zones, ensuring that applications remain available even if a single zone experiences an outage.

Web Application Development

Serverless computing has revolutionized web application development, offering a more agile and cost-effective approach compared to traditional methods. It allows developers to focus on writing code without managing the underlying infrastructure, leading to faster development cycles and reduced operational overhead. This section explores how serverless is utilized in web application development, contrasting it with traditional approaches and highlighting its benefits, particularly in user authentication and authorization.

Serverless Implementation in Web Application Development

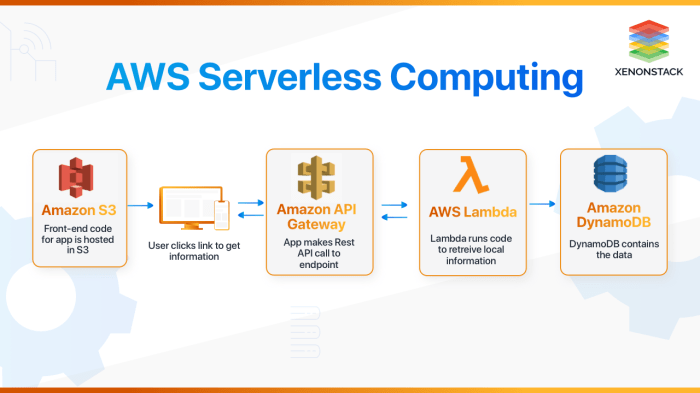

Serverless architectures for web applications typically involve a combination of services. These services work together to provide the necessary functionality. For example, a static website can be hosted on a service like Amazon S3 or Google Cloud Storage, while dynamic content is generated by serverless functions triggered by API Gateway or other events. Databases, such as Amazon DynamoDB or Google Cloud Firestore, are often used for data storage, and services like AWS Lambda or Google Cloud Functions execute the application logic.

Comparison: Serverless vs. Traditional Web App Development

Traditional web application development typically involves managing servers, either on-premises or in the cloud using virtual machines (VMs). This requires significant effort in server provisioning, configuration, scaling, and maintenance. Serverless, on the other hand, abstracts away these infrastructure concerns, allowing developers to deploy code directly. The key differences are summarized below:

- Infrastructure Management: Traditional methods require managing servers, including operating system updates, security patches, and scaling. Serverless eliminates this, as the cloud provider manages the infrastructure.

- Scaling: Traditional applications require manual scaling or auto-scaling configurations. Serverless automatically scales based on demand, often scaling up and down rapidly.

- Cost: Traditional applications have fixed costs for server resources, even when idle. Serverless uses a pay-per-use model, charging only for the actual compute time and resources consumed. This can lead to significant cost savings, especially for applications with variable traffic.

- Deployment: Traditional deployments often involve complex procedures and downtime. Serverless deployments are typically faster and less disruptive, with features like blue/green deployments available.

- Development Speed: Serverless enables faster development cycles. Developers can focus on writing code and business logic rather than managing infrastructure.

Benefits of Serverless for User Authentication and Authorization

Serverless offers significant advantages in implementing user authentication and authorization systems. Services like AWS Cognito, Google Cloud Identity Platform, and Azure Active Directory B2C provide pre-built, scalable solutions for managing user identities and access control.

- Scalability: Serverless authentication services can handle massive user loads without requiring manual scaling. They automatically scale to accommodate peak traffic.

- Security: These services offer robust security features, including multi-factor authentication, protection against common attacks, and compliance with industry standards. They often handle complex tasks like password hashing and storage securely.

- Cost-Effectiveness: The pay-per-use model applies to authentication services as well, reducing costs compared to managing a traditional authentication system. You only pay for the number of users and authentication requests.

- Simplified Integration: Serverless authentication services are designed to integrate seamlessly with other serverless components, making it easy to secure APIs and other application resources.

- Reduced Development Effort: Using pre-built authentication services significantly reduces the development effort required to implement user authentication and authorization. Developers can focus on the application’s core functionality.

API Development and Management

Serverless computing significantly streamlines API development and management by abstracting away the complexities of server infrastructure. This approach allows developers to focus on building API logic rather than managing servers, leading to faster development cycles, reduced operational overhead, and improved scalability. Serverless APIs are particularly well-suited for handling unpredictable workloads and scaling on demand.

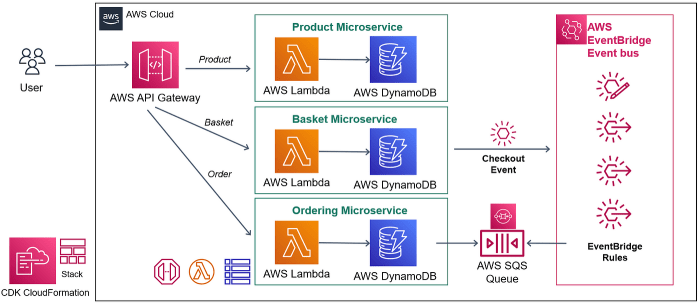

Design a serverless API architecture for a specific scenario (e.g., a food delivery service)

A serverless API architecture for a food delivery service can efficiently handle various functionalities, from order placement to delivery tracking and payment processing. This design leverages several serverless components working in concert.The core components include:* API Gateway: Acts as the entry point for all API requests, handling routing, authentication, authorization, and request transformation.

AWS Lambda (or similar)

Executes the backend logic for various API endpoints, such as order creation, order retrieval, menu browsing, and delivery status updates.

Database (e.g., Amazon DynamoDB, MongoDB Atlas)

Stores and manages data related to orders, users, restaurants, menus, and delivery personnel.

Event Bus (e.g., Amazon EventBridge, Google Cloud Pub/Sub)

Enables asynchronous communication between different parts of the system, such as notifying restaurants about new orders, updating delivery personnel about assignments, and sending notifications to customers.

Authentication and Authorization (e.g., Amazon Cognito, Auth0)

Secures the API by handling user authentication and authorization, controlling access to resources.

Object Storage (e.g., Amazon S3, Google Cloud Storage)

Used for storing static assets like restaurant menu images, user profile pictures, and other media files.The workflow could be visualized as follows:

1. User Interaction

A user interacts with the food delivery application (mobile app or web app).

2. API Request

The application sends an API request to the API Gateway, for example, to place an order.

3. Request Routing

The API Gateway routes the request to the appropriate Lambda function based on the API endpoint (e.g., `/orders`).

4. Lambda Function Execution

The Lambda function executes the logic for creating the order. This function interacts with the database to store the order details.

5. Event Emission

After successfully creating the order, the Lambda function emits an event to the event bus (e.g., “order created”).

6. Asynchronous Processing

Other Lambda functions are triggered by the event bus to perform asynchronous tasks, such as notifying the restaurant about the new order and sending a confirmation notification to the user.

7. Data Retrieval

For requests like viewing order history, the API Gateway routes requests to Lambda functions, which query the database and return the relevant data.This architecture offers several advantages:* Scalability: The serverless components automatically scale to handle fluctuating traffic.

Cost-Effectiveness

Pay-per-use pricing model for Lambda functions and other services reduces operational costs.

Reduced Operational Overhead

The serverless provider manages the underlying infrastructure, freeing up developers to focus on code.

Faster Development

Serverless allows for rapid prototyping and deployment of new features.

Organize the steps involved in deploying and managing serverless APIs

Deploying and managing serverless APIs involves a structured process that encompasses several key steps, from initial development to ongoing monitoring and maintenance. A well-defined process ensures efficient API operation and continuous improvement.The steps are as follows:

1. Development

Code the API logic

Develop the backend logic for each API endpoint using serverless functions (e.g., Lambda functions).

Define API endpoints

Design the API endpoints, including the request methods (GET, POST, PUT, DELETE), request parameters, and response formats.

Implement authentication and authorization

Integrate security measures to protect the API and control access to resources.

Test the API locally

Use local testing tools or emulators to test the API logic before deployment.

2. Deployment

Configure the API Gateway

Configure the API Gateway to route requests to the appropriate Lambda functions.

Deploy serverless functions

Deploy the Lambda functions to the cloud provider (e.g., AWS, Google Cloud, Azure).

Set up database and other resources

Provision the necessary databases, storage, and other resources required by the API.

Configure environment variables

Configure environment variables for sensitive information such as API keys, database connection strings, and other configurations.

3. Testing

Functional testing

Test the API endpoints to ensure they function correctly and return the expected results.

Performance testing

Evaluate the API’s performance under different load conditions to ensure it can handle the expected traffic.

Security testing

Conduct security testing to identify and address vulnerabilities in the API.

4. Monitoring and Logging

Implement logging

Implement detailed logging to capture API requests, responses, and errors.

Set up monitoring

Monitor the API’s performance, availability, and error rates using monitoring tools.

Configure alerts

Configure alerts to notify developers of any issues or anomalies.

5. Management and Maintenance

API versioning

Implement API versioning to manage changes to the API over time.

Update API code

Regularly update the API code to fix bugs, add new features, and improve performance.

Scale the API

Adjust the resources allocated to the API to handle increasing traffic.

Optimize API performance

Analyze API performance and make optimizations to improve response times and reduce latency.

6. Security

Regular security audits

Perform regular security audits to identify and address vulnerabilities.

Implement security best practices

Follow security best practices, such as using strong authentication and authorization mechanisms, protecting sensitive data, and regularly patching dependencies.These steps, when followed systematically, enable the creation, deployment, and management of robust and scalable serverless APIs.

Create a table comparing different API gateway services suitable for serverless applications

API gateways are crucial for serverless applications, acting as the front door for all API requests. Different API gateway services offer varying features, pricing models, and integrations. The choice of an API gateway depends on the specific requirements of the application, considering factors such as scalability, security, and ease of use.The table below compares some API gateway services:

| Feature | AWS API Gateway | Google Cloud API Gateway | Azure API Management |

|---|---|---|---|

| Provider | Amazon Web Services (AWS) | Google Cloud Platform (GCP) | Microsoft Azure |

| Key Features |

|

|

|

| Pricing Model | Pay-per-use (based on requests and data transfer) | Pay-per-use (based on requests) | Pay-per-use (based on tiers with different features and request limits) |

| Integration with Serverless Services | Excellent integration with AWS Lambda, DynamoDB, S3, etc. | Excellent integration with Cloud Functions, Cloud Run, Cloud Storage, etc. | Excellent integration with Azure Functions, Azure Logic Apps, Azure Blob Storage, etc. |

| Security Features |

|

|

|

| Ease of Use | Can be complex to configure initially but offers a wide range of features. | Relatively easy to set up and manage, especially with OpenAPI support. | User-friendly interface and developer portal, but can have a steeper learning curve. |

| Scalability | Highly scalable, automatically scales to handle high traffic volumes. | Highly scalable, automatically scales to handle high traffic volumes. | Highly scalable, automatically scales to handle high traffic volumes. |

This table provides a comparative overview, highlighting the key features, pricing, integration capabilities, and security aspects of each API gateway. The best choice depends on the specific needs and the cloud provider already used. For example, if you are already heavily invested in the AWS ecosystem, AWS API Gateway might be the most straightforward option. Similarly, Google Cloud API Gateway is the logical choice for GCP users, and Azure API Management for those on Azure.

Data Processing and ETL Pipelines

Serverless computing offers a compelling paradigm for automating data processing and building Extract, Transform, Load (ETL) pipelines. The inherent scalability, cost-effectiveness, and event-driven nature of serverless functions make them ideally suited for handling the dynamic and often unpredictable workloads associated with data ingestion, transformation, and loading. This section will delve into the specifics of how serverless functions automate data processing and how they are utilized in the construction of robust and efficient ETL pipelines.

Automating Data Processing with Serverless Functions

Serverless functions provide a streamlined approach to automating data processing tasks. They operate on an event-driven model, meaning that function execution is triggered by specific events, such as the arrival of a new file in a storage bucket, a message in a queue, or a schedule. This contrasts with traditional approaches that often require constantly running servers, which can be inefficient, particularly during periods of low activity.

- Event-Driven Architecture: The core principle of serverless data processing is its event-driven architecture. Events, such as file uploads to cloud storage (e.g., Amazon S3, Google Cloud Storage, Azure Blob Storage), database updates, or messages from message queues (e.g., Amazon SQS, Google Cloud Pub/Sub, Azure Service Bus), trigger the execution of serverless functions. This model ensures that processing resources are only consumed when needed, optimizing resource utilization and cost.

- Scalability and Elasticity: Serverless platforms automatically scale function instances based on the incoming workload. When the volume of data increases, the platform automatically provisions more function instances to handle the load. Conversely, during periods of low activity, the platform scales down the number of instances, minimizing costs. This elasticity is crucial for data processing, as workloads can fluctuate significantly.

- Cost Optimization: Serverless computing operates on a pay-per-use model. You are only charged for the actual compute time consumed by your functions. This eliminates the need to provision and maintain servers, leading to significant cost savings, especially for workloads with intermittent or unpredictable processing needs. Furthermore, the automatic scaling capabilities ensure that you are not paying for idle resources.

- Simplified Operations: Serverless platforms abstract away the complexities of server management, including patching, scaling, and infrastructure provisioning. Developers can focus on writing the code that performs the data processing logic without worrying about the underlying infrastructure. This simplifies operations and accelerates development cycles.

- Integration with Data Services: Serverless functions seamlessly integrate with various data services offered by cloud providers. For example, functions can read data from object storage, query databases, and write data to data warehouses. This integration streamlines data pipelines and simplifies the overall architecture.

Building Extract, Transform, Load (ETL) Pipelines with Serverless

Serverless functions are frequently used to build ETL pipelines, which involve extracting data from various sources, transforming it to meet specific requirements, and loading it into a target data store. This process can be broken down into three distinct stages: Extract, Transform, and Load.

- Extract: In the extract stage, data is retrieved from its source systems. These sources can include databases, APIs, files stored in object storage, or streaming data sources. Serverless functions can be triggered by events such as the arrival of a new file in an object storage bucket or a scheduled trigger to fetch data from an API.

- Transform: The transform stage involves cleaning, validating, and transforming the extracted data. This can include tasks such as data type conversions, filtering, joining data from multiple sources, and applying business rules. Serverless functions can perform these transformations, often using libraries and frameworks suited for data manipulation.

- Load: In the load stage, the transformed data is loaded into a target data store, such as a data warehouse, a database, or an object storage bucket. Serverless functions can handle the loading process, ensuring data is written to the target store in the appropriate format and with the required integrity.

Setting up a Serverless Data Pipeline: Example with AWS

Using Amazon Web Services (AWS) as an example, the following steps illustrate how to set up a serverless data pipeline. This simplified example will extract data from an S3 bucket, transform it using a Lambda function, and load the transformed data into another S3 bucket.

- Data Source (S3 Bucket): Imagine an S3 bucket containing CSV files of customer data. This represents the source data.

- Trigger: A trigger is configured, for example, S3 Event Notifications, to activate an AWS Lambda function whenever a new CSV file is uploaded to the source S3 bucket.

- Lambda Function (Transformation): An AWS Lambda function is created, written in Python (or another supported language). This function performs the following actions:

- Reads the CSV file from the source S3 bucket.

- Parses the CSV data.

- Applies transformations (e.g., cleaning data, filtering rows, adding calculated columns).

- Formats the transformed data into a new CSV file.

- Writes the transformed CSV file to a destination S3 bucket.

- Destination (S3 Bucket): Another S3 bucket is designated as the destination where the transformed data will be stored.

- Monitoring and Logging: AWS CloudWatch is used to monitor the Lambda function’s execution, track performance metrics (e.g., execution time, memory usage), and view logs for debugging purposes.

This architecture demonstrates a basic ETL pipeline. More complex pipelines can incorporate additional services such as AWS Glue for data cataloging and transformation, Amazon Kinesis for real-time data streaming, and Amazon Redshift for data warehousing. The core principle remains the same: serverless functions orchestrated by event triggers, perform the data processing tasks, offering scalability, cost-efficiency, and operational simplicity.

Mobile Backend Services

Serverless computing has fundamentally reshaped the landscape of mobile application development by providing a flexible and scalable backend infrastructure. This approach allows developers to focus on the user-facing aspects of their applications, such as user interfaces and features, while offloading the complexities of server management to the cloud provider. The inherent characteristics of serverless, including automatic scaling, pay-per-use pricing, and reduced operational overhead, make it a compelling choice for powering mobile applications that need to handle varying workloads and user traffic.Serverless functions excel at handling backend operations critical for mobile applications.

They enable the efficient implementation of features like push notifications, data synchronization, and user authentication, all without the need to provision and manage dedicated servers. This streamlined approach not only reduces development time but also minimizes the operational costs associated with traditional server-based architectures.

Push Notifications and Data Synchronization

Serverless functions are frequently employed to manage push notifications and data synchronization in mobile applications. Cloud providers offer services that integrate seamlessly with serverless functions, enabling developers to trigger notifications based on various events, such as new content updates, user actions, or real-time data changes. Data synchronization, crucial for maintaining data consistency across multiple devices and platforms, can also be efficiently handled using serverless functions.

These functions can be designed to listen for data changes, process them, and synchronize the updated data with relevant devices, ensuring a consistent user experience.

- Push Notification Delivery: Serverless functions can be triggered by events like new user sign-ups, product availability updates, or social interactions within the app. When an event occurs, the function invokes the cloud provider’s notification service (e.g., Firebase Cloud Messaging, Amazon SNS, or Azure Notification Hubs) to send targeted push notifications to the appropriate devices.

- Data Synchronization Implementation: When a user updates data on their device (e.g., a profile change or new content creation), the app sends the data to a serverless function. This function then validates the data, stores it in a database (e.g., Amazon DynamoDB, Google Cloud Firestore, or Azure Cosmos DB), and synchronizes the changes with other devices logged into the same account.

- Real-time Data Updates: For applications requiring real-time updates (e.g., chat applications, live sports scores, or collaborative document editing), serverless functions can be used in conjunction with services like WebSockets or real-time databases. The functions listen for data changes and then broadcast those changes to connected devices in real-time.

Common Mobile Backend Use Cases

Serverless computing offers several benefits for a variety of mobile backend use cases. These benefits include automatic scaling, cost optimization, and simplified deployment, making it a popular choice for developers.

- User Authentication and Authorization: Serverless functions can be used to handle user registration, login, and authentication processes. They can integrate with identity providers (e.g., Amazon Cognito, Firebase Authentication, or Azure Active Directory B2C) to securely manage user credentials and access control.

- Data Storage and Retrieval: Serverless functions can interact with cloud-based databases (e.g., DynamoDB, Firestore, or Cosmos DB) to store and retrieve user data, application data, and other information required by the mobile application.

- Image and File Processing: Serverless functions can be used to resize, optimize, and process images and files uploaded by users. This can improve performance and reduce storage costs. For example, when a user uploads a profile picture, a serverless function could automatically resize it to different dimensions for various display contexts.

- Location-Based Services: Serverless functions can be used to implement location-based features, such as geofencing, nearby search, and real-time location tracking. They can integrate with mapping services (e.g., Google Maps API, Mapbox) to provide location-aware functionality.

- Payment Processing: Serverless functions can be used to integrate with payment gateways (e.g., Stripe, PayPal) to process payments within the mobile application. They handle tasks such as creating payment requests, managing subscriptions, and handling refunds.

- Social Media Integration: Serverless functions can be used to integrate with social media platforms (e.g., Facebook, Twitter) to allow users to share content, log in with their social accounts, and access social features within the app.

- API Gateways: Serverless functions can be deployed behind API gateways (e.g., Amazon API Gateway, Google Cloud API Gateway, or Azure API Management) to provide a secure and scalable entry point for mobile applications to interact with backend services. This allows for versioning, rate limiting, and other API management features.

IoT (Internet of Things) Applications

Serverless computing provides a compelling architecture for building and deploying IoT applications, addressing the challenges of scalability, cost-efficiency, and real-time data processing. The distributed nature of IoT devices, coupled with the often-unpredictable data volumes they generate, makes serverless an ideal fit. Its ability to automatically scale resources based on demand ensures optimal performance without requiring constant infrastructure management.

IoT Device Data Ingestion and Processing Support

Serverless platforms excel at handling the influx of data from a multitude of IoT devices. They offer event-driven architectures, enabling the immediate triggering of functions in response to incoming data streams. This allows for real-time data ingestion, processing, and analysis.

- Event Triggers: Serverless functions can be triggered by various IoT events, such as device data uploads, status updates, or error notifications. Cloud providers offer services like AWS IoT Core, Azure IoT Hub, and Google Cloud IoT Core, which act as the initial ingestion points, routing data to serverless functions.

- Data Transformation and Filtering: Once ingested, serverless functions can transform, filter, and enrich the incoming data. For example, they can convert sensor readings from raw formats to standardized formats, filter out irrelevant data points, or aggregate data for analysis.

- Data Storage: Processed data can be stored in various storage solutions, such as databases (e.g., DynamoDB, Cosmos DB, Cloud SQL) or object storage (e.g., S3, Azure Blob Storage, Google Cloud Storage), depending on the data volume, access patterns, and analysis requirements.

- Real-time Analytics: Serverless functions can also integrate with real-time analytics services, enabling immediate insights. For instance, they can trigger alerts when sensor readings exceed predefined thresholds or calculate real-time statistics on device performance.

Advantages of Serverless for Handling Large Volumes of IoT Data

Serverless architecture offers significant advantages in managing the substantial and often variable data streams generated by IoT devices. These benefits contribute to improved efficiency, reduced costs, and enhanced scalability.

- Scalability: Serverless platforms automatically scale resources based on demand. This is crucial for IoT applications, where data volumes can fluctuate significantly. Serverless functions can handle sudden spikes in data ingestion without requiring manual intervention or pre-provisioned capacity.

- Cost-Effectiveness: Serverless follows a pay-per-use pricing model, meaning you only pay for the compute time and resources consumed. This is particularly advantageous for IoT applications, where data ingestion may be intermittent or seasonal. This can lead to significant cost savings compared to traditional server-based infrastructure, especially for applications with fluctuating workloads.

- Reduced Operational Overhead: Serverless platforms abstract away the underlying infrastructure management. Developers do not need to provision, manage, or scale servers. This reduces operational overhead, allowing them to focus on application logic and business value.

- Faster Development Cycles: Serverless architectures promote rapid development and deployment. The ease of use and streamlined deployment processes enable developers to iterate quickly and bring IoT applications to market faster.

- Improved Reliability and Availability: Serverless platforms are designed for high availability and fault tolerance. They automatically handle failures and distribute workloads across multiple availability zones, ensuring that IoT applications remain operational even during infrastructure disruptions.

Serverless Solution for Smart Agriculture

A smart agriculture application can leverage serverless computing to optimize crop yields and resource utilization. This use case involves the deployment of sensors in agricultural fields to collect data on environmental conditions.

Use Case Overview:

The system monitors soil moisture, temperature, humidity, and sunlight levels in real-time. This data is then used to automate irrigation, fertilization, and other agricultural processes. The application aims to provide farmers with actionable insights, improve efficiency, and reduce resource waste.

Serverless Architecture Design:

The architecture comprises several key components:

- IoT Devices: Soil moisture sensors, temperature sensors, humidity sensors, and light sensors deployed throughout the fields collect data at regular intervals (e.g., every 5 minutes). These devices transmit data wirelessly.

- Ingestion: The data from the sensors is sent to a central IoT platform (e.g., AWS IoT Core, Azure IoT Hub, Google Cloud IoT Core). These platforms securely receive the data and act as the entry point for the serverless architecture.

- Data Processing: Serverless functions (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) are triggered by events generated by the IoT platform upon receiving data. These functions perform the following tasks:

- Data Validation: Verify the data integrity and filter out erroneous readings.

- Data Transformation: Convert raw sensor readings to a standardized format and perform unit conversions.

- Data Enrichment: Add metadata to the data, such as the sensor location and timestamp.

- Data Analysis: Calculate key metrics, such as average soil moisture levels, temperature variations, and potential crop stress indicators.

- Data Storage: Processed data is stored in a time-series database (e.g., Amazon Timestream, Azure Data Explorer, Google Cloud Bigtable) optimized for storing and querying time-stamped data. This database enables efficient analysis of historical data and trend identification.

- Real-time Alerts: Serverless functions can be triggered based on specific conditions (e.g., soil moisture levels below a threshold). These functions send alerts to farmers via SMS, email, or push notifications, allowing them to take immediate action.

- Dashboard and Visualization: A web application (also potentially serverless) provides a user-friendly interface for farmers to view real-time sensor data, historical trends, and alerts. The application utilizes the data stored in the time-series database to generate visualizations and insights.

- Automation: The system can be integrated with irrigation systems and other farm equipment to automate processes based on sensor data. For example, if the soil moisture level drops below a certain threshold, the system can automatically trigger the irrigation system to water the crops.

Benefits:

This serverless architecture offers several benefits:

- Scalability: The system can handle a large number of sensors and fluctuating data volumes.

- Cost-Effectiveness: Pay-per-use pricing minimizes costs, especially during off-peak seasons.

- Real-time Insights: Farmers receive real-time data and alerts, enabling timely decision-making.

- Automation: Automation capabilities optimize resource utilization and improve efficiency.

- Reduced Operational Overhead: The serverless architecture simplifies infrastructure management, allowing the focus to remain on the agricultural aspects of the system.

Chatbots and Conversational Interfaces

Serverless computing provides a compelling architecture for building and deploying chatbots and conversational interfaces. Its inherent scalability, cost-effectiveness, and ease of management make it an ideal solution for handling the fluctuating demands of user interactions. This section delves into how serverless functions, in conjunction with various cloud services, can be leveraged to create intelligent and responsive conversational experiences.

Role of Serverless in Chatbot Implementation

Serverless functions play a crucial role in the modular design and efficient operation of chatbots. By breaking down chatbot functionality into discrete, event-driven units, serverless architectures allow developers to focus on specific tasks, such as natural language processing (NLP), context management, and database interaction, without the overhead of managing underlying infrastructure. This approach enables rapid development cycles, simplified scaling, and optimized resource utilization, directly translating into reduced operational costs.

Serverless platforms typically offer pre-built integrations with popular chatbot platforms and NLP services, further streamlining the development process.

Using Serverless Functions for NLP Tasks

Natural Language Processing (NLP) is a core component of any intelligent chatbot. Serverless functions can be employed to perform a variety of NLP tasks, including intent recognition, entity extraction, and sentiment analysis. The following list details specific NLP tasks and their implementation using serverless functions:

- Intent Recognition: Serverless functions can be triggered by user input to determine the user’s intent. This involves analyzing the text to understand the underlying purpose of the message. For example, a function might be designed to identify if a user is trying to place an order, ask for support, or check their account balance. Cloud providers like AWS, Azure, and Google Cloud offer NLP services (e.g., AWS Lex, Azure Bot Service, Dialogflow) that can be integrated with serverless functions to perform intent recognition, simplifying the process.

- Entity Extraction: Serverless functions can extract relevant information, or entities, from user input. This involves identifying key pieces of information such as dates, locations, products, or names. For instance, a chatbot for a travel agency could use a serverless function to extract the destination, travel dates, and number of travelers from a user’s query.

- Sentiment Analysis: Sentiment analysis involves determining the emotional tone of the user’s message. Serverless functions can use sentiment analysis APIs to assess whether the user’s message is positive, negative, or neutral. This information can be used to tailor the chatbot’s response, such as escalating the conversation to a human agent if the user expresses dissatisfaction.

- Language Translation: If the chatbot needs to support multiple languages, serverless functions can be used to translate user input and chatbot responses. Translation APIs can be integrated within the serverless function to handle the language conversion.

Chatbot Flow Design Using Serverless Functions

Designing a chatbot flow with serverless functions involves defining the different stages of a conversation and the actions that need to be performed at each stage. A simplified example of a chatbot flow is provided below:

Example Scenario: A customer service chatbot for an online store.

Chatbot Flow Steps:

- User Input: The user types a message, such as “I need help with my order.”

- Trigger Function: An event (e.g., a message received) triggers a serverless function.

- Intent Recognition: The serverless function uses an NLP service (e.g., AWS Lex) to determine the user’s intent (e.g., “get_help”).

- Entity Extraction: If the intent requires it, the function extracts relevant entities (e.g., order number).

- Decision Logic: Based on the intent and entities, the function determines the appropriate response.

- Action Execution: The function might:

- Query a database to retrieve order information.

- Send an email to a support agent.

- Provide a canned response.

- Response: The function sends a response back to the user via the chatbot interface.

Implementation Details:

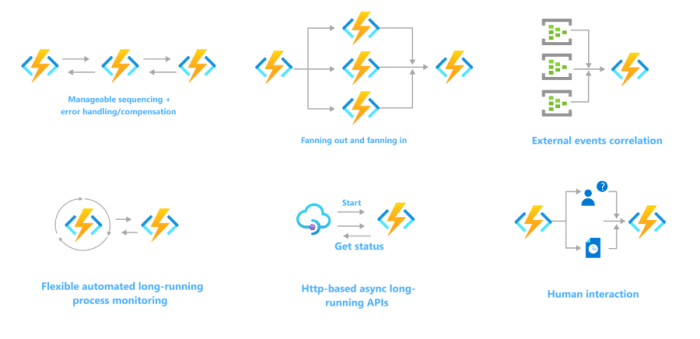

Each step in the flow could be handled by a separate serverless function or a sequence of functions orchestrated by a state machine (e.g., AWS Step Functions). For instance, one function could handle intent recognition, another could extract entities, and a third could query a database. The functions can be chained together, with the output of one function triggering the next.

Example: If the user’s intent is “track_order,” the function might extract the order number, query the order database (using another serverless function), and then present the tracking information to the user. The database interaction would also likely be serverless, perhaps using a serverless database service like DynamoDB or a database service that can be accessed through serverless functions.

Orchestration: Services like AWS Step Functions can orchestrate the flow, managing the state of the conversation and ensuring that the appropriate functions are triggered in the correct sequence. This allows for complex conversational flows to be built without the need to manage the underlying infrastructure.

Event-Driven Architectures

Event-driven architectures represent a paradigm shift in software design, emphasizing asynchronous communication and decoupling of system components. This architectural style leverages events—significant occurrences within a system—to trigger actions and orchestrate workflows. Serverless computing provides an ideal environment for implementing event-driven architectures, enabling scalable, resilient, and cost-effective solutions.

Concept of Event-Driven Architectures and Serverless Integration

Event-driven architectures are built around the principle of reacting to events. These events can originate from various sources, such as user actions, system updates, or scheduled tasks. Serverless functions, by their nature, are well-suited to this architecture. They are designed to execute in response to events, eliminating the need for managing underlying infrastructure. This integration allows for the creation of highly responsive and scalable systems.Serverless platforms provide built-in mechanisms for event triggering, such as:

- Event Sources: Platforms offer integrations with various event sources, including databases, object storage, message queues, and custom events.

- Event Triggers: These triggers define the conditions under which a serverless function is invoked, such as a new file being uploaded to object storage or a message arriving in a queue.

- Function Execution: When an event occurs and a trigger condition is met, the serverless platform automatically invokes the associated function, providing it with the event data.

This architecture allows for independent scaling of individual components, as functions are only invoked when needed, optimizing resource utilization and reducing operational costs. Furthermore, the asynchronous nature of event processing enhances system resilience, as failures in one component do not necessarily impact others.

Examples of Serverless Functions Triggered by Various Event Sources

Serverless functions can be triggered by a wide array of event sources, demonstrating the versatility of this approach.

- Object Storage Events: When a file is uploaded to cloud storage (e.g., Amazon S3, Google Cloud Storage, Azure Blob Storage), a serverless function can be triggered to process the file, such as resizing an image, extracting metadata, or indexing the content.

- Database Events: Changes in a database (e.g., new record creation, updates, deletions) can trigger serverless functions to perform tasks like sending notifications, updating search indexes, or triggering data transformations.

- API Gateway Events: API requests received by an API gateway can trigger serverless functions to handle request processing, authentication, authorization, and response generation.

- Scheduled Events: Serverless functions can be triggered by scheduled events, such as cron jobs, to perform periodic tasks like generating reports, running backups, or cleaning up data.

- Message Queue Events: Messages published to a message queue (e.g., Amazon SQS, Google Cloud Pub/Sub, Azure Service Bus) can trigger serverless functions to process those messages, enabling asynchronous communication and decoupling of components.

These examples highlight the flexibility of serverless event-driven architectures in adapting to diverse use cases.

Scenario: Serverless Functions Responding to Events from a Message Queue

Consider a scenario where a retail company processes customer orders. Orders are initially placed through a web application and then sent to a message queue for further processing.

- Order Submission: When a customer places an order, the web application publishes an order creation event to a message queue (e.g., Amazon SQS). This event includes order details such as customer information, items ordered, and shipping address.

- Message Queue: The message queue stores the order creation event until it is processed by a consumer.

- Serverless Function Trigger: A serverless function is configured to be triggered by messages arriving in the message queue.

- Function Processing: When a new message arrives in the queue, the serverless function is invoked. The function retrieves the order details from the event payload.

- Workflow Execution: The serverless function then performs several tasks, such as:

- Validating the order details.

- Checking inventory availability.

- Updating the order status in the database.

- Sending a confirmation email to the customer.

- Asynchronous Processing: The use of a message queue ensures that the order processing is asynchronous, preventing the web application from being blocked while waiting for the order to be processed. This enhances the responsiveness of the web application.

- Scalability and Resilience: If the volume of orders increases, the serverless function can automatically scale to handle the increased load. The message queue provides resilience, ensuring that orders are not lost even if a processing function fails.

This scenario exemplifies the power of serverless functions in combination with message queues to build scalable, reliable, and responsive systems for handling complex workflows. The asynchronous nature of this architecture allows for efficient resource utilization and improved user experience.

Background Task Processing

Serverless computing provides a powerful paradigm for handling background tasks, offering scalability, cost-effectiveness, and simplified management compared to traditional approaches. These tasks, which often run asynchronously, are essential for various applications, from media processing to data manipulation and notification services. The serverless model excels in this domain by automatically scaling resources based on demand, eliminating the need for manual server provisioning and maintenance.

Asynchronous Execution and Event Triggers

Serverless functions excel at asynchronous execution, a crucial aspect of background task processing. Events, such as file uploads, database updates, or scheduled triggers, initiate the execution of these functions. This event-driven architecture allows for decoupling tasks, improving responsiveness and fault tolerance. Functions are triggered only when needed, optimizing resource utilization and reducing costs.

Image Resizing, Video Encoding, and Email Sending

Serverless functions offer an efficient approach to common background tasks.

- Image Resizing: When a user uploads an image, a serverless function can automatically resize it to multiple resolutions for different devices or uses. This process ensures optimal image display across various platforms and devices, improving user experience and reducing bandwidth consumption.

- Video Encoding: Serverless functions can encode uploaded videos into different formats and resolutions. This process ensures compatibility across various devices and streaming platforms, allowing users to access the video content seamlessly. The serverless model allows for efficient handling of potentially large files and parallel processing, significantly reducing processing time.

- Email Sending: Triggering email sending through serverless functions enables applications to send notifications, confirmations, or newsletters. The event-driven nature allows these functions to be triggered in response to user actions or scheduled events, providing automated communication capabilities without requiring dedicated server resources.

Code Example: Serverless Image Resizing Function

The following code snippet illustrates a simplified serverless function written in JavaScript (using Node.js and the AWS SDK) for resizing an image uploaded to an Amazon S3 bucket. The function retrieves the image from S3, resizes it using a library like Sharp, and saves the resized image back to S3. This is a common scenario where the serverless model simplifies image manipulation tasks.

const AWS = require('aws-sdk'); const sharp = require('sharp'); const s3 = new AWS.S3(); exports.handler = async (event) => try const bucket = event.Records[0].s3.bucket.name; const key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, ' ')); const params = Bucket: bucket, Key: key, ; const data = await s3.getObject(params).promise(); const image = await sharp(data.Body).resize(200, 200).toBuffer(); // Resize to 200x200 pixels const filename = key.split('.')[0] + '-resized.jpg'; // Modified filename const uploadParams = Bucket: bucket, Key: filename, Body: image, ContentType: 'image/jpeg', // Setting content type ; await s3.putObject(uploadParams).promise(); return statusCode: 200, body: JSON.stringify('Image resized and uploaded successfully!'), ; catch (error) console.error(error); return statusCode: 500, body: JSON.stringify('Error resizing image.'), ; ;

Automation and DevOps

Serverless computing significantly streamlines Automation and DevOps practices, offering capabilities that enhance efficiency, scalability, and cost-effectiveness. By eliminating the need for server management, serverless architectures allow development teams to focus on code and deployment, accelerating the software delivery lifecycle. This paradigm shift fosters agility and reduces operational overhead, making it a compelling choice for modern application development.

Role of Serverless in Automating DevOps Tasks

Serverless functions can automate various DevOps tasks, providing a flexible and scalable solution for operational needs. This automation encompasses several key areas, streamlining processes and minimizing manual intervention.

- Infrastructure Provisioning: Serverless functions can be triggered by events (e.g., a new code commit) to automatically provision and configure infrastructure resources, such as databases, storage buckets, and networking components. This infrastructure-as-code approach ensures consistency and repeatability. For example, a function could be triggered by a new code push to create a new development environment with the necessary resources, ready for testing.

- Deployment Automation: Serverless functions can automate the deployment process, from building and packaging code to deploying it to the target environment. This can include tasks like running tests, updating configuration files, and rolling out updates. This automation significantly reduces the time and effort required to deploy new versions of applications.

- Monitoring and Alerting: Serverless functions can be used to monitor application performance, detect anomalies, and trigger alerts. These functions can collect metrics from various sources, analyze them, and send notifications to the appropriate teams. For instance, a function could monitor the error rate of an API and send an alert if it exceeds a predefined threshold.

- Configuration Management: Serverless functions can manage configuration settings, ensuring consistency across different environments. These functions can retrieve configuration data from a central repository and apply it to the deployed applications. This reduces the risk of configuration errors and simplifies environment management.

- Security Automation: Serverless functions can automate security tasks, such as vulnerability scanning, access control management, and security audits. This automation helps to proactively identify and address security risks.

Advantages of Using Serverless for CI/CD Pipelines

Serverless architectures provide several advantages when integrated into Continuous Integration and Continuous Delivery (CI/CD) pipelines, resulting in faster development cycles, improved scalability, and reduced operational costs.

- Reduced Operational Overhead: Serverless CI/CD pipelines eliminate the need to manage and maintain servers for build, test, and deployment processes. This reduces the operational burden on the development team, allowing them to focus on writing and deploying code.

- Scalability and Elasticity: Serverless functions automatically scale based on demand, ensuring that CI/CD pipelines can handle varying workloads. This elasticity eliminates the need to provision and manage infrastructure resources manually.

- Cost-Effectiveness: Serverless CI/CD pipelines typically operate on a pay-per-use model, meaning that you only pay for the resources consumed. This can significantly reduce costs compared to traditional CI/CD solutions that require dedicated infrastructure.

- Faster Deployment Cycles: Serverless CI/CD pipelines automate many of the tasks involved in building, testing, and deploying applications, resulting in faster deployment cycles. This enables development teams to release new features and updates more frequently.

- Improved Developer Productivity: Serverless CI/CD pipelines streamline the development process, freeing up developers to focus on writing code. This can lead to increased productivity and faster time-to-market.

Illustration of a Serverless CI/CD Pipeline for a Web Application Deployment

A serverless CI/CD pipeline for a web application deployment typically involves several stages, each automated using serverless functions. This example illustrates a simplified pipeline:

Stage 1: Code Commit and Build.

Description: A developer commits code to a Git repository. This event triggers a serverless function (e.g., an AWS Lambda function or Google Cloud Function) via a webhook or event trigger. The function pulls the code from the repository and initiates the build process.

Details:

- Function Trigger: Git commit event (e.g., using GitHub Actions, GitLab CI/CD, or a similar service).

- Function Action: Pulls code from the repository, runs build commands (e.g., `npm install`, `npm run build`), packages the application.

- Output: Application artifact (e.g., a ZIP file containing the built web application) stored in an object storage service (e.g., AWS S3, Google Cloud Storage).

Stage 2: Testing.

Description: The built application artifact triggers another serverless function. This function executes automated tests, such as unit tests, integration tests, and end-to-end tests. The results of the tests are recorded and reported.

Details:

- Function Trigger: Completion of the build stage (e.g., object creation event in object storage).

- Function Action: Downloads the application artifact, executes tests (using tools like Jest, Mocha, Cypress), and records test results.

- Output: Test results (e.g., JUnit XML files, test reports) stored in object storage or a monitoring service.

Stage 3: Deployment.

Description: If the tests pass, another serverless function is triggered to deploy the application to a staging or production environment. This function updates the application’s configuration and deploys the new version. This might involve updating files on a web server, or deploying to a serverless hosting platform.

Details:

- Function Trigger: Successful completion of the testing stage (e.g., a test report indicating all tests passed).

- Function Action: Downloads the application artifact, updates the deployment environment configuration (e.g., database connection strings, API keys), and deploys the application. This could involve updating files on a web server (e.g., using rsync), deploying to a serverless hosting platform (e.g., AWS Amplify, Netlify, Vercel), or updating container images in a container registry and triggering a deployment.

- Output: Deployed application available at a public URL.

Stage 4: Monitoring and Rollback.

Description: After deployment, serverless functions monitor the application’s performance and health. If any issues are detected (e.g., increased error rates, high latency), the pipeline can automatically trigger a rollback to the previous version.

Details:

- Function Trigger: Metrics and logs from monitoring services (e.g., AWS CloudWatch, Google Cloud Monitoring, New Relic).

- Function Action: Analyzes the metrics and logs to detect anomalies. If issues are detected, it triggers a rollback to the previous version of the application, or sends notifications.

- Output: Application running in a stable state, and notifications.

This serverless CI/CD pipeline leverages event triggers, object storage, and serverless functions to automate the entire process, from code commit to deployment and monitoring. This approach significantly reduces manual effort, improves deployment speed, and increases the reliability of the web application.

Cost Optimization and Scalability

Serverless computing presents a compelling shift in how applications are deployed and managed, offering significant advantages in terms of cost optimization and scalability. This approach fundamentally alters the traditional infrastructure paradigm by abstracting away server management and allowing developers to focus solely on code. The benefits extend beyond mere convenience, impacting the financial and operational aspects of software development.

Cost Optimization

The economic advantages of serverless stem from its pay-per-use model. This contrasts sharply with traditional infrastructure, where resources are provisioned and paid for regardless of actual utilization. Serverless platforms only charge for the compute time consumed by the execution of code, measured in milliseconds or seconds. This granular pricing model allows for significant cost savings, especially for applications with intermittent or unpredictable traffic patterns.

- Elimination of Idle Resources: Traditional servers often sit idle, consuming resources and incurring costs even when not actively processing requests. Serverless eliminates this waste by automatically scaling down to zero when no requests are received. This is in stark contrast to a virtual machine, which requires constant upkeep.

- Reduced Operational Overhead: Serverless platforms manage the underlying infrastructure, including server provisioning, patching, and scaling. This frees up development teams from these time-consuming tasks, reducing operational costs and allowing them to focus on building features and delivering value.

- Optimized Resource Allocation: Serverless platforms automatically allocate resources based on demand. This dynamic allocation ensures that applications have the necessary resources to handle peak loads without over-provisioning or incurring unnecessary costs during periods of low traffic.

- Granular Pricing: The pay-per-use model, often measured in milliseconds or seconds, allows for highly efficient resource utilization. Developers pay only for the actual compute time consumed, leading to significant cost savings compared to paying for reserved instances or virtual machines that might be underutilized.

Automatic Scalability

Scalability is a core characteristic of serverless computing. The platform automatically handles scaling, adjusting the number of instances to meet the current demand. This inherent scalability eliminates the need for manual intervention and allows applications to handle traffic spikes without performance degradation.

- Event-Driven Scaling: Serverless functions are typically triggered by events, such as HTTP requests, database updates, or scheduled timers. When an event occurs, the platform automatically provisions and scales the necessary resources to handle the workload. This event-driven architecture ensures that resources are allocated only when needed.

- Horizontal Scaling: Serverless platforms scale horizontally by adding more instances of the function to handle increased load. This approach allows applications to handle massive traffic spikes without the need for vertical scaling, which can be limited by hardware constraints.

- Automatic Provisioning: The platform automatically provisions and manages the underlying infrastructure, including servers, networking, and storage. Developers do not need to worry about the complexities of infrastructure management, allowing them to focus on writing code.

- Elasticity: Serverless provides elasticity, meaning that resources are dynamically scaled up or down based on demand. This ensures that applications can handle peak loads without performance degradation and that costs are optimized during periods of low traffic.

Cost Model Comparison

The following table provides a comparison of the cost models for serverless and traditional infrastructure:

| Feature | Serverless | Traditional Infrastructure (e.g., VMs) | Description |

|---|---|---|---|

| Pricing Model | Pay-per-use (e.g., per millisecond/second of compute time, per invocation, per data transfer) | Fixed cost (e.g., hourly or monthly fees for virtual machines, reserved instances) | Serverless pricing is based on actual resource consumption. Traditional infrastructure often involves fixed costs regardless of usage. |

| Resource Allocation | Automatic, dynamic scaling based on demand | Manual provisioning and scaling, often involving over-provisioning to handle peak loads | Serverless automatically scales resources based on demand. Traditional infrastructure requires manual scaling, which can lead to over-provisioning and wasted resources. |

| Idle Time Costs | Zero cost when no code is running | Costs incurred even when servers are idle | Serverless eliminates costs associated with idle resources. Traditional infrastructure incurs costs even when servers are not actively processing requests. |

| Operational Overhead | Minimal, platform manages infrastructure | Significant, requiring server management, patching, and scaling | Serverless reduces operational overhead by abstracting away infrastructure management. Traditional infrastructure requires significant operational effort. |

Closure

In conclusion, the common use cases for serverless computing highlight its transformative potential. From streamlining web applications and APIs to enabling sophisticated data processing and IoT solutions, serverless architectures offer compelling advantages in terms of scalability, cost optimization, and developer productivity. The ability to focus on code rather than infrastructure has unlocked new possibilities for innovation and efficiency. As the technology continues to evolve, serverless computing is poised to remain a cornerstone of modern software development, driving future advancements in the cloud-native landscape.

Helpful Answers

What are the primary cost benefits of using serverless computing?

Serverless computing offers cost benefits through a pay-per-use model. You only pay for the actual compute time and resources consumed by your code, eliminating the costs associated with idle servers and over-provisioning. This results in significant cost savings, especially for applications with variable workloads.

How does serverless improve scalability?

Serverless platforms automatically scale your application based on demand. They dynamically allocate and manage the necessary resources, ensuring that your application can handle traffic spikes without manual intervention. This auto-scaling feature contributes to high availability and performance.

What are the security considerations when using serverless?

Serverless platforms often provide built-in security features like isolation and encryption. However, it’s crucial to implement robust security practices, including secure coding, proper access control, and regular security audits, to protect your serverless applications from vulnerabilities.

How does serverless facilitate CI/CD pipelines?

Serverless environments integrate well with CI/CD pipelines. They enable automated deployments, testing, and version control, streamlining the development lifecycle. This leads to faster release cycles, improved code quality, and reduced manual effort.

What are the limitations of serverless computing?

Serverless computing has limitations, including vendor lock-in, cold starts, and execution time limits. Cold starts, the delay when a function is invoked for the first time, can impact latency. Execution time limits may require application refactoring for long-running processes. Choosing the right platform and designing your architecture carefully can mitigate these limitations.