Understanding the serverless pay-as-you-go pricing model is crucial in today’s cloud-centric landscape. This model, a cornerstone of serverless computing, revolutionizes how businesses approach infrastructure costs. It offers a paradigm shift from traditional fixed-cost models, allowing for dynamic resource allocation and granular cost control. Serverless computing, which has evolved significantly since its inception, allows developers to focus on code without managing servers, leading to increased agility and efficiency.

This introduction will explore the nuances of this pricing strategy, offering a comprehensive understanding of its implications.

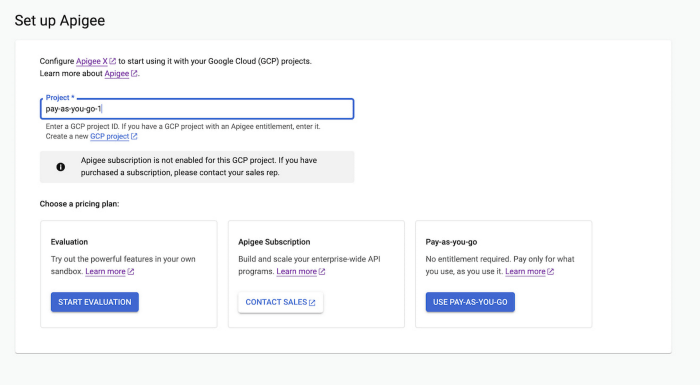

The pay-as-you-go model in serverless computing operates on a simple principle: you pay only for the resources you consume. This contrasts sharply with the traditional model where upfront investment in infrastructure is necessary. This model is particularly attractive for applications with fluctuating workloads, as it enables automatic scaling and cost optimization. Various cloud providers offer different pricing structures, each with its metrics such as compute time, requests, and data transfer.

The following sections will analyze these pricing models, providing insights into their comparative advantages and disadvantages.

Introduction to Serverless Computing and Pay-as-you-Go

Serverless computing represents a significant paradigm shift in cloud computing, moving away from the traditional model of managing and provisioning servers to a model where developers focus solely on writing and deploying code. This approach allows for greater scalability, reduced operational overhead, and a more efficient use of resources, often leading to significant cost savings. The pay-as-you-go pricing model, a core component of serverless, further enhances these benefits by aligning costs directly with actual usage.

Core Concept of Serverless Computing

Serverless computing, at its heart, allows developers to execute code without managing the underlying infrastructure. The cloud provider handles all aspects of server management, including provisioning, scaling, and maintenance. Developers simply upload their code (often in the form of functions) and specify the triggers that will invoke them. This trigger can be anything from an HTTP request to a database update or a scheduled event.

The cloud provider then automatically allocates resources as needed, scaling up or down based on the demand, and charges only for the actual compute time consumed by the function. This contrasts sharply with traditional server-based architectures where resources are provisioned in advance, regardless of actual utilization.
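As a rough illustration of what "uploading a function" means, here is a minimal sketch of an HTTP-triggered function in Python. The `handler(event, context)` signature and the `statusCode`/`body` response shape follow the convention AWS Lambda uses behind an API Gateway proxy integration; other providers use different signatures, and the `name` query parameter is a made-up example.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per trigger (here, an HTTP request)."""
    # Query parameters may be absent, in which case the event carries None.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical parameter for illustration

    # The platform turns this dictionary into an HTTP response.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Nothing in the function refers to servers, ports, or processes. The platform decides when and where it runs, and billing starts and stops with each call.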

History and Evolution of Serverless Computing

The genesis of serverless computing can be traced back to the early days of cloud computing, with the emergence of Platform-as-a-Service (PaaS) offerings. PaaS provided a higher level of abstraction than Infrastructure-as-a-Service (IaaS), allowing developers to deploy applications without managing the underlying operating system or hardware. However, PaaS still often involved managing the application’s runtime environment and scaling infrastructure. Serverless in its current form emerged with the introduction of Function-as-a-Service (FaaS) platforms.

- Early FaaS Platforms: AWS Lambda, launched in 2014, is often considered the pioneering FaaS platform. It allowed developers to execute code in response to events, marking a significant step towards serverless architecture. Other early entrants included Google Cloud Functions and Microsoft Azure Functions, which followed suit.

- Growth and Maturity: Over the years, FaaS platforms have matured, offering support for a wider range of programming languages, event sources, and integrations with other cloud services. The ecosystem around serverless has also expanded, with the development of tools and frameworks to simplify deployment, monitoring, and management.

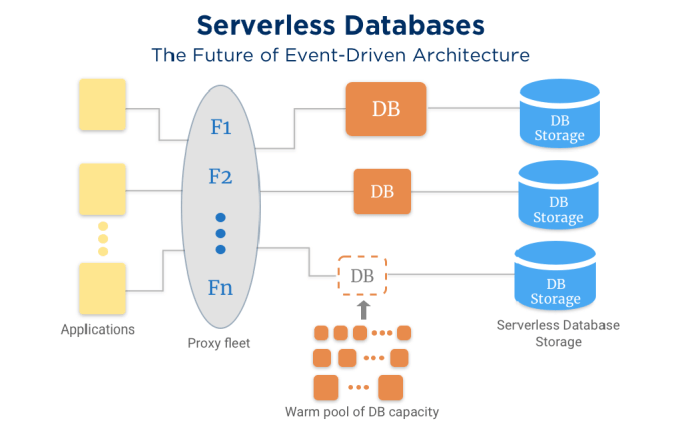

- Beyond FaaS: The term “serverless” has expanded to encompass a broader set of services, including serverless databases, object storage, and API gateways. These services are designed to be fully managed and scale automatically, further reducing the need for infrastructure management.

Advantages of Serverless Architecture

Serverless architecture offers several compelling advantages that have contributed to its growing popularity. These benefits include:

- Reduced Operational Overhead: The cloud provider handles all aspects of server management, freeing developers from the burden of provisioning, scaling, and maintaining infrastructure. This allows developers to focus on writing code and delivering value to the business.

- Automatic Scaling: Serverless platforms automatically scale resources up or down based on demand, ensuring that applications can handle traffic spikes without manual intervention. This elasticity is crucial for building resilient and responsive applications.

- Pay-as-you-go Pricing: Serverless pricing models typically charge only for the actual compute time consumed by the functions. This can lead to significant cost savings compared to traditional server-based architectures, especially for applications with variable workloads.

- Increased Developer Productivity: Serverless platforms simplify the development process by providing a managed runtime environment and a variety of pre-built services. This can lead to faster development cycles and reduced time-to-market.

- Improved Scalability and Reliability: The distributed nature of serverless platforms, combined with automatic scaling, enhances application scalability and reliability. Applications can handle large amounts of traffic and recover from failures more gracefully.

Defining the Pay-as-you-Go Pricing Model

The pay-as-you-go pricing model is a cornerstone of serverless computing, fundamentally altering how cloud resources are consumed and paid for. This model aligns costs directly with actual resource consumption, eliminating upfront commitments and enabling granular control over spending. Understanding its intricacies is crucial for effectively leveraging serverless architectures and optimizing cloud expenditure.

Fundamental Principles of Pay-as-you-Go

The core principle of pay-as-you-go in serverless is that users only pay for the resources they actively use. This contrasts sharply with traditional cloud models, where users often provision and pay for resources regardless of actual utilization. This on-demand approach offers significant advantages in terms of cost efficiency, scalability, and agility. The billing is typically calculated based on several factors, and it is usually automated and transparent.

Usage Measurement in Serverless Computing

Usage measurement in serverless is meticulously tracked to ensure accurate billing. Cloud providers employ various metrics to quantify resource consumption. These metrics provide the basis for calculating the final cost.

- Compute Time: This is often measured in milliseconds or seconds, depending on the specific service and provider. The amount of time a function is actively executing is a primary cost driver.

- Requests: The number of times a serverless function is invoked or triggered. Each invocation typically incurs a cost, even if the function completes quickly.

- Data Transfer: The volume of data transferred into and out of the serverless environment. This includes data ingress (receiving data) and egress (sending data), with egress often being more expensive.

- Memory Consumption: The amount of memory allocated to a function during execution. Many providers bill compute in gigabyte-seconds, that is, the memory allocated (in GB) multiplied by the execution time (in seconds).

- Storage: The amount of storage space used to store function code, dependencies, and any associated data.

- API Gateway Calls: The number of calls made to the API gateway, if used, to access serverless functions.

Examples of Metrics Used by Cloud Providers

Different cloud providers use various metrics to bill for serverless services. These metrics can vary in granularity and pricing structure. Understanding these nuances is essential for cost optimization.

- Amazon Web Services (AWS) Lambda: AWS Lambda charges based on the number of requests, the duration of the function execution (measured in milliseconds), and the memory allocated. Data transfer costs also apply.

For example, a function that runs for 500 milliseconds with 128MB of memory allocated and processes 10,000 requests will be billed according to the Lambda pricing structure, which includes a free tier and then per-request and per-duration charges.

- Google Cloud Functions: Google Cloud Functions charges based on the number of function invocations and on compute time, which combines the duration of the function execution (measured in milliseconds) with the memory allocated and is expressed in gigabyte-seconds. Network egress charges also apply.

A function that receives 1,000,000 invocations, runs for an average of 200 milliseconds with 256MB of memory, and transfers 100GB of data out would be charged based on the Function’s pricing, considering the invocation cost, compute time, and network usage.

- Microsoft Azure Functions: Azure Functions pricing is based on the number of executions, resource consumption (measured in gigabyte-seconds), and outbound data transfer. Pricing tiers and free tiers are available.

If an Azure Function executes 50,000 times, utilizes 512MB of memory on average for 1 second, and transfers 50 GB of data out, the cost would be calculated based on the number of executions, the memory usage, and the data transfer charges.
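The three examples above share one unit, the gigabyte-second, so their compute usage can be compared before any prices are applied. The following is a minimal sketch; the helper name `gb_seconds` is made up, and the conversion of 1,000 MB per GB is an assumption chosen for round numbers (some providers use 1,024).

```python
def gb_seconds(invocations, avg_duration_ms, memory_mb, mb_per_gb=1000):
    """Total billable compute: invocations x seconds x gigabytes.

    mb_per_gb=1000 is an assumption chosen for round numbers; pass 1024
    if your provider defines a gigabyte that way.
    """
    seconds = avg_duration_ms / 1000
    gigabytes = memory_mb / mb_per_gb
    return invocations * seconds * gigabytes


# Workloads taken from the three examples above:
# (invocations, average duration in ms, memory in MB).
workloads = {
    "AWS Lambda": (10_000, 500, 128),
    "Google Cloud Functions": (1_000_000, 200, 256),
    "Azure Functions": (50_000, 1_000, 512),
}

for provider, (invocations, duration_ms, memory_mb) in workloads.items():
    total = gb_seconds(invocations, duration_ms, memory_mb)
    print(f"{provider}: {total:,.0f} GB-seconds")
```

Under these assumptions the workloads come to roughly 640, 51,200, and 25,600 GB-seconds respectively. Multiplying each by the provider's current per-GB-second rate, and adding request and egress charges, gives the bill.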

Cost Components in Serverless Pay-as-You-Go

Understanding the cost structure of serverless pay-as-you-go models is crucial for effective resource management and cost optimization. This pricing paradigm breaks down costs into granular components, allowing for precise tracking and control of spending. This section explores the various elements contributing to the overall cost, with a focus on compute time and its impact.

Compute Time and Its Impact on Cost

Compute time, the duration for which a serverless function executes, is a primary driver of cost. The pricing model typically charges based on the function’s execution time, often measured in milliseconds or seconds, and the amount of memory allocated to the function. The following factors influence compute time costs:

- Function Execution Time: The longer a function runs, the more it costs. This emphasizes the importance of optimizing function code for efficiency and minimizing execution time. For example, a function that takes 500ms to complete will cost significantly less than one that takes 2 seconds, assuming all other factors are equal.

- Memory Allocation: Serverless platforms allow you to configure the memory allocated to your functions. Higher memory allocation can sometimes improve performance, but it also increases the cost per execution. Choosing the right memory allocation involves a trade-off between performance and cost.

- Number of Invocations: The number of times a function is invoked directly impacts cost. Each invocation incurs a charge, even if the function executes very quickly. Frequent invocations, driven by high user traffic or automated processes, lead to higher costs.

Optimizing compute time involves several strategies:

- Code Optimization: Writing efficient code that minimizes processing time is critical. This includes choosing appropriate algorithms, avoiding unnecessary operations, and using optimized libraries.

- Resource Allocation: Carefully selecting the appropriate memory allocation for each function can improve performance without overspending. Monitoring function performance metrics helps determine the optimal memory configuration.

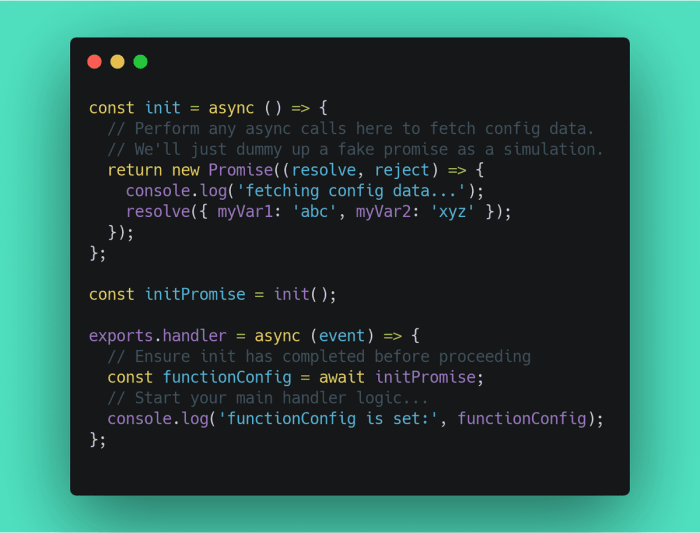

- Caching: Implementing caching mechanisms can reduce the need for repeated function executions, especially for frequently accessed data.
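The memory trade-off can be made concrete by comparing billable GB-seconds across configurations. The sketch below assumes you have measured average durations at several memory settings; the measurements are invented for illustration, the helper name `cheapest_memory` is hypothetical, and per-request charges are ignored because they do not depend on memory.

```python
def cheapest_memory(measurements, mb_per_gb=1000):
    """Return (memory_mb, gb_seconds) for the configuration with the lowest
    compute usage per invocation.

    measurements maps memory in MB to the measured average duration in ms.
    """
    usage = {
        memory_mb: (memory_mb / mb_per_gb) * (duration_ms / 1000)
        for memory_mb, duration_ms in measurements.items()
    }
    best = min(usage, key=usage.get)
    return best, usage[best]


# Hypothetical measurements: more memory speeds the function up,
# but with diminishing returns.
measured = {128: 2400, 256: 1000, 512: 450, 1024: 400}

memory_mb, usage_per_call = cheapest_memory(measured)
print(f"Cheapest setting: {memory_mb} MB ({usage_per_call:.4f} GB-seconds per call)")
```

In this made-up data set, 512 MB wins even though it is not the smallest allocation, because the shorter run time more than offsets the higher per-second rate. Real functions need real measurements, since the curve depends on the workload.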

Cost Factors Table

The following table summarizes the key cost factors in a serverless pay-as-you-go model, with a description, the impact on cost, and an example for each.

| Cost Factor | Description | Impact on Cost | Example |
|---|---|---|---|
| Compute Time | The duration for which a function executes. | Directly proportional: longer execution = higher cost. | A function that runs for 100ms costs less than a function that runs for 1 second, assuming the same memory allocation. |
| Memory Allocation | The amount of memory allocated to the function during execution. | Higher memory = higher cost per execution, but potentially faster execution. | Allocating 512MB of memory to a function will generally cost more per execution than allocating 128MB. |
| Number of Invocations | The number of times a function is triggered or executed. | Directly proportional: more invocations = higher cost. | A function invoked 1,000 times costs more than the same function invoked 100 times. |
| Requests/Data Transfer | The amount of data transferred in and out of the serverless function. | Higher data transfer = higher cost, typically for egress (outgoing) data. | A function that processes and returns large files will incur higher data transfer costs than a function that returns small JSON payloads. |

Comparing Serverless Pricing Across Providers

The serverless landscape is characterized by diverse pay-as-you-go pricing models, varying significantly between cloud providers. Understanding these differences is crucial for making informed decisions about cost optimization and service selection. This comparison examines the pricing structures of AWS, Azure, and Google Cloud, focusing on common serverless services.

Pricing Breakdown for Serverless Functions

The cost of serverless functions, a cornerstone of serverless computing, is typically determined by factors like execution time, memory allocation, and the number of invocations. Each provider offers a unique approach to metering these components.

- AWS Lambda: AWS Lambda pricing is based on the number of requests and the duration of the function’s execution. There is a free tier that includes a certain number of free requests and compute time each month. Beyond the free tier, compute is billed in gigabyte-seconds (GB-seconds): the memory allocated multiplied by the duration of the function’s execution. The cost is calculated as:

(total GB-seconds × price per GB-second) + (number of requests × price per request)

where total GB-seconds is the GB-seconds per request multiplied by the number of requests. For example, if a function runs for 100 milliseconds (0.1 seconds) with 128MB (0.128GB) of memory allocated, the GB-seconds used per request is 0.0128 GB-seconds (0.128 GB × 0.1 seconds). The exact price depends on the region, but it would be a fraction of a cent per request.

- Azure Functions: Azure Functions pricing also follows a pay-per-use model. The consumption plan is the most common, where you pay only for the resources your function consumes. The pricing is based on the number of executions and on resource consumption, which combines execution time and memory and is measured in GB-seconds. Azure also offers a Premium plan, which provides more features, like longer execution times and VNet integration, at a higher price.

- Google Cloud Functions: Google Cloud Functions charges based on the number of invocations and on compute time, which depends on the memory allocated and is measured in GB-seconds. There is a free tier offering a certain amount of free invocations, compute time, and egress data each month. The cost structure is similar to AWS, with a per-invocation charge and a charge based on resource usage. The price is calculated as:

(total GB-seconds × price per GB-second) + (number of requests × price per request)

where total GB-seconds is the GB-seconds per request multiplied by the number of requests. For instance, a function with 256MB (0.256GB) of memory that runs for 200 milliseconds (0.2 seconds) would consume 0.0512 GB-seconds per request (0.256 GB × 0.2 seconds).
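The request-plus-compute formula used by AWS Lambda and Google Cloud Functions can be sketched in a few lines of Python, including the free-tier deduction. This is a sketch rather than a billing tool: every price and allowance below is a placeholder, the function name `monthly_function_cost` is made up, and memory is converted at 1,000 MB per GB to match the worked figures above.

```python
def monthly_function_cost(
    requests,
    avg_duration_ms,
    memory_mb,
    price_per_gb_second,
    price_per_request,
    free_gb_seconds=0.0,
    free_requests=0,
    mb_per_gb=1000,
):
    """Estimate a monthly bill: compute charge plus request charge,
    after subtracting any free-tier allowance."""
    gb_seconds_per_request = (memory_mb / mb_per_gb) * (avg_duration_ms / 1000)
    total_gb_seconds = gb_seconds_per_request * requests

    # Usage inside the free tier is not billed, so clamp at zero.
    billable_gb_seconds = max(0.0, total_gb_seconds - free_gb_seconds)
    billable_requests = max(0, requests - free_requests)

    return (billable_gb_seconds * price_per_gb_second
            + billable_requests * price_per_request)


# All prices and allowances below are placeholders, not real provider rates.
cost = monthly_function_cost(
    requests=5_000_000,
    avg_duration_ms=100,
    memory_mb=128,
    price_per_gb_second=0.00002,
    price_per_request=0.0000002,
    free_gb_seconds=10_000,
    free_requests=1_000_000,
)
print(f"Estimated monthly cost: ${cost:.2f}")
```

With these placeholder numbers, each request uses 0.0128 GB-seconds, the month totals 64,000 GB-seconds, and the free tier removes 10,000 of them before pricing is applied. Substituting the current rates from a provider's pricing page turns the sketch into a first-order estimate.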

Pricing for Serverless Databases

Serverless databases provide scalability and cost efficiency by automatically scaling resources based on demand. However, the pricing models can vary.

- AWS DynamoDB: DynamoDB offers two capacity modes. In provisioned mode, you pay for the read and write capacity units (RCUs and WCUs) you provision. In on-demand mode, you pay only for the reads and writes your application actually performs, charged per million read requests and per million write requests. Data storage and data transfer are billed separately, and prices vary by region.

- Azure Cosmos DB: Azure Cosmos DB offers a serverless option in which you are charged for the request units (RUs) your database operations consume and for the storage your data occupies, rather than for provisioned throughput. Cosmos DB offers multiple APIs (SQL, MongoDB, Cassandra, Gremlin, and Table), and the pricing structure varies slightly depending on the API used.

- Google Cloud Firestore: Firestore uses a pay-as-you-go model, charging for reads, writes, deletes, and storage. The pricing is based on the number of operations and the amount of storage used. Firestore also charges for network egress. The cost is calculated as:

(number of reads × price per read) + (number of writes × price per write) + (number of deletes × price per delete) + (storage GB × price per GB) + (network egress GB × price per GB)

For example, a project that performs 100,000 reads, 10,000 writes, and stores 1GB of data will incur costs based on the price per read, write, storage, and network egress in the relevant region.
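The Firestore example can be worked through with a small helper. This is a sketch only: the function name `firestore_cost`, the dictionary keys, and all unit prices are placeholders chosen for illustration, and free quotas are ignored.

```python
def firestore_cost(reads, writes, deletes, storage_gb, egress_gb, prices):
    """Sum of per-operation, storage, and egress charges."""
    return (
        reads * prices["read"]
        + writes * prices["write"]
        + deletes * prices["delete"]
        + storage_gb * prices["storage_gb"]
        + egress_gb * prices["egress_gb"]
    )


# Placeholder unit prices in USD; check the provider's pricing page for real ones.
placeholder_prices = {
    "read": 0.0000005,
    "write": 0.0000015,
    "delete": 0.0000002,
    "storage_gb": 0.20,
    "egress_gb": 0.10,
}

# The workload from the example above: 100,000 reads, 10,000 writes, 1 GB stored.
monthly = firestore_cost(
    reads=100_000,
    writes=10_000,
    deletes=0,
    storage_gb=1,
    egress_gb=0,
    prices=placeholder_prices,
)
print(f"Estimated monthly cost: ${monthly:.3f}")
```

Keeping the prices in a dictionary makes it easy to swap in the rates for a different region without touching the formula.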

Pricing for Serverless Storage

Serverless storage solutions provide scalable and durable storage for various data types. The pricing models are generally based on storage capacity, data transfer, and the number of requests.

- AWS S3: Amazon S3 pricing is based on storage used, the number of requests, and data transfer out. Different storage classes (e.g., Standard, Infrequent Access, Glacier) offer different pricing tiers based on access frequency and availability requirements. Data transfer pricing also varies by region. The cost is calculated as:

(storage GB × price per GB) + (number of requests × price per request) + (data transfer out GB × price per GB)

For instance, storing 1 TB of data in the S3 Standard storage class, making 100,000 requests, and transferring out 100 GB of data would incur costs for storage, requests, and data transfer, each based on their respective pricing tiers.

- Azure Blob Storage: Azure Blob Storage pricing is based on storage capacity, transactions (read, write, and delete operations), and data egress. Different access tiers (Hot, Cool, Archive) offer different pricing structures. The Hot tier is for frequently accessed data, Cool for infrequently accessed data, and Archive for long-term archival storage. The pricing varies depending on the region and the access tier selected.

- Google Cloud Storage: Google Cloud Storage pricing is based on storage used, the number of operations, and data transfer. Different storage classes (Standard, Nearline, Coldline, Archive) offer different pricing based on access frequency. The pricing varies depending on the region and the storage class selected. The cost is calculated as:

(storage GB × price per GB) + (number of operations × price per operation) + (data transfer out GB × price per GB)

For example, storing 1 TB of data in the Standard storage class, performing 10,000 operations, and transferring out 50 GB of data would incur costs for storage, operations, and data transfer, according to the respective pricing tiers.
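For object storage it is often more useful to see which component dominates the bill than to see only the total. The sketch below returns the breakdown for the first storage example above (1 TB stored, 100,000 requests, 100 GB transferred out). The function name `storage_bill`, the price keys, and the prices themselves are placeholders, and 1 TB is treated as 1,000 GB for simplicity.

```python
def storage_bill(storage_gb, requests, egress_gb, prices):
    """Return each cost component separately, plus the total."""
    items = {
        "storage": storage_gb * prices["per_gb_month"],
        "requests": requests * prices["per_request"],
        "egress": egress_gb * prices["per_gb_egress"],
    }
    items["total"] = sum(items.values())
    return items


# Placeholder unit prices in USD, not real provider rates.
prices = {"per_gb_month": 0.02, "per_request": 0.000005, "per_gb_egress": 0.09}

bill = storage_bill(storage_gb=1000, requests=100_000, egress_gb=100, prices=prices)
largest = max(("storage", "requests", "egress"), key=bill.get)

for name, amount in bill.items():
    print(f"{name:>8}: ${amount:.2f}")
print(f"Largest component: {largest}")
```

With these placeholder prices, storage and egress account for almost all of the cost and request charges are negligible. A workload with many small objects and frequent access could easily reverse that ordering.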

Optimizing Costs in Serverless Environments

Serverless computing offers significant cost advantages due to its pay-as-you-go model. However, these benefits are only realized through careful planning and execution. Cost optimization in serverless environments involves a multi-faceted approach, encompassing efficient resource management, function design, and monitoring. Proactive cost optimization ensures that the benefits of serverless, such as scalability and agility, are achieved without incurring unnecessary expenses.

Strategies for Optimizing Costs in a Serverless Environment

Several strategies can be employed to optimize costs in a serverless environment. These strategies, when implemented effectively, can lead to substantial savings and improved resource utilization.

- Right-Sizing Functions and Services: This involves accurately estimating the resource requirements (memory, CPU) of each function and service. Over-provisioning leads to wasted resources and increased costs. Conversely, under-provisioning can result in performance issues and poor user experience.

- Monitoring and Alerting: Implementing robust monitoring and alerting systems is crucial. Monitoring tools provide insights into function performance, invocation counts, execution times, and error rates. Alerts should be configured to notify administrators of any anomalies or deviations from expected behavior, allowing for timely intervention and cost adjustments.

- Code Optimization: Optimizing function code can reduce execution time and resource consumption. This includes techniques such as efficient data processing, minimizing dependencies, and avoiding unnecessary operations. The more efficient the code, the less time it takes to execute, and the lower the associated costs.

- Choosing the Right Services: Serverless platforms offer a variety of services, each with its own pricing model. Selecting the most appropriate services for specific tasks is essential. For example, using a serverless database like Amazon DynamoDB can be more cost-effective than a traditional database for certain workloads.

- Implementing Cost-Aware Design: Designing applications with cost in mind from the outset can significantly impact overall expenses. This includes architectural decisions such as using event-driven architectures to reduce idle time and optimizing data transfer patterns.
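The monitoring-and-alerting strategy can be reduced to a simple rule: flag any day whose spend is well above the recent average. The sketch below shows that rule in isolation. It calls no provider API; the daily figures are invented, the helper name `cost_alert` is hypothetical, and in practice the numbers would come from a billing export or monitoring service.

```python
from statistics import mean


def cost_alert(daily_costs, today_cost, threshold=1.5):
    """Return True when today's spend exceeds the recent average by the
    given factor (1.5 means 50% above the trailing mean)."""
    if not daily_costs:
        return False  # no history yet, nothing to compare against
    baseline = mean(daily_costs)
    return today_cost > baseline * threshold


# Hypothetical daily spend in USD for the past week.
history = [4.10, 3.95, 4.30, 4.05, 4.20, 3.90, 4.15]

print(cost_alert(history, today_cost=4.40))  # within the normal range
print(cost_alert(history, today_cost=9.80))  # a spike worth investigating
```

A fixed multiplier is the simplest possible rule. Workloads with strong weekly cycles may need a baseline that compares against the same weekday instead of a plain average.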

Best Practices for Resource Management

Effective resource management is critical for cost optimization in serverless environments. This includes practices that ensure resources are utilized efficiently and costs are minimized.

- Leveraging Autoscaling: Autoscaling allows services to automatically adjust their capacity based on demand. This ensures that resources are available when needed without over-provisioning during periods of low activity. Autoscaling policies should be carefully configured to respond appropriately to changes in workload.

- Using Efficient Data Storage and Retrieval: Data storage and retrieval can be a significant cost component. Optimizing data storage formats, using appropriate caching mechanisms, and minimizing data transfer operations can reduce costs. For example, using compressed data formats can decrease storage costs.

- Implementing Throttling and Rate Limiting: Throttling and rate limiting can help control the number of requests processed by a function or service. This prevents resource exhaustion and unexpected cost spikes, especially during periods of high traffic or potential denial-of-service attacks.

- Regularly Reviewing and Cleaning Up Resources: Regularly reviewing and removing unused or underutilized resources is essential. This includes deleting inactive functions, orphaned storage buckets, and other unnecessary components. Automation can be used to identify and clean up these resources.

- Using Serverless Database Optimization: Serverless databases such as DynamoDB offer features for cost optimization. Using appropriate indexing strategies, choosing the right capacity mode (on-demand or provisioned), and optimizing data access patterns can minimize database costs.
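Throttling and rate limiting are usually configured through the platform's own settings, but the underlying idea is often a token bucket. The following sketch shows it for a single process. The class and parameter names are made up, and because function instances do not share memory, a real deployment would need the platform's built-in limits or a shared store rather than this in-memory version.

```python
import time


class TokenBucket:
    """Allow at most `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the bucket can be tested without sleeping
        self.tokens = float(capacity)
        self.last_refill = clock()

    def allow(self):
        """Consume one token if available; return False to reject the request."""
        now = self.clock()
        elapsed = now - self.last_refill
        self.last_refill = now
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(rate=5, capacity=10)
accepted = sum(bucket.allow() for _ in range(25))
print(f"{accepted} of 25 back-to-back requests accepted")
```

Rejected requests never reach the billed function, which is what caps the cost of a traffic spike. The `capacity` value sets how large a burst is tolerated before rejections begin.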

Reducing Costs by Right-Sizing Functions and Services

Right-sizing functions and services involves accurately estimating and allocating the appropriate resources needed for each task. This is a critical step in cost optimization.

- Analyzing Function Metrics: Monitoring function metrics such as memory usage, execution time, and invocation counts provides valuable insights into resource requirements. Tools like AWS Lambda Insights or Azure Monitor can be used to collect and analyze these metrics.

- Testing and Iteration: Thorough testing and iterative refinement are essential. Testing functions with various workloads and data sizes allows for accurate assessment of resource needs. Adjustments can then be made based on performance data.

- Using Memory Optimization Techniques: Optimizing memory usage can reduce costs. This includes techniques such as using efficient data structures, minimizing object creation, and avoiding memory leaks.

- Considering Execution Time: Execution time directly impacts costs. Reducing execution time through code optimization and efficient resource allocation can significantly lower expenses.

- Example: AWS Lambda Memory Allocation:

Imagine a Lambda function processing image thumbnails. Initially, the function is allocated 1024 MB of memory. Monitoring reveals that the function consistently uses only 256 MB during execution. Reducing the allocated memory to 256 MB lowers the cost per invocation, provided the execution time does not increase noticeably, and the savings add up with a high volume of image processing requests. For instance, taking illustrative prices of $0.0000002 per 100ms at 1024MB and $0.00000005 per 100ms at 256MB, a function that runs for 500ms would drop from $0.000001 to $0.00000025 per invocation.
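The arithmetic in this example can be checked, and extended to a monthly figure, with a short script. It is a sketch under the example's own assumptions: the per-100ms prices are the illustrative ones quoted above, the run time is assumed to stay at 500ms after the memory reduction, and the monthly volume is a hypothetical number.

```python
def invocation_cost(price_per_100ms, duration_ms):
    """Cost of one invocation, priced per 100 ms of execution."""
    return price_per_100ms * (duration_ms / 100)


# Illustrative per-100 ms prices from the example above (not real rates).
before = invocation_cost(0.0000002, 500)    # 1024 MB
after = invocation_cost(0.00000005, 500)    # 256 MB

invocations_per_month = 10_000_000  # hypothetical volume
monthly_saving = (before - after) * invocations_per_month

print(f"Per invocation: ${before:.8f} -> ${after:.8f}")
print(f"Monthly saving at {invocations_per_month:,} invocations: ${monthly_saving:.2f}")
```

The per-invocation difference looks negligible, but at ten million invocations it amounts to several dollars a month for one function. If the smaller allocation makes the function slower, the measured duration should replace the 500ms assumption for the "after" case.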

The Impact of Usage Patterns on Costs

Serverless computing’s pay-as-you-go model makes cost highly dependent on the application’s usage patterns. Understanding these patterns is critical for predicting and controlling expenses. In essence, the more resources consumed, the higher the cost. However, the timing and nature of resource consumption significantly influence the final bill.

Influence of Usage Patterns on Serverless Costs

The fluctuating nature of application traffic directly translates into fluctuating serverless costs. Applications with consistent, predictable workloads are easier to budget for, while those with variable or spiky traffic patterns present more complex cost management challenges. Serverless providers charge based on factors such as the number of function invocations, the duration of execution, the memory allocated, and the data transferred.

Each of these is directly impacted by how users interact with the application.

Effects of Peak and Off-Peak Traffic on Pricing

Peak traffic periods, characterized by surges in user activity, cause the platform to allocate more resources: more function instances run and a greater volume of requests is handled, so costs rise. Conversely, off-peak periods, with reduced user activity, result in lower resource consumption and, consequently, lower costs. The unit prices themselves do not change with the time of day; the bill differs because usage differs, which directly reflects the elasticity of the serverless infrastructure.

The following points detail the impact of peak and off-peak traffic on serverless costs:

- Increased Function Invocations: During peak hours, the number of function invocations rises significantly. Each invocation incurs a cost, and a higher volume of invocations directly translates to a higher bill.

- Extended Execution Duration: Peak loads may cause functions to execute for longer durations due to increased processing demands. This extended duration leads to higher charges based on execution time.

- Elevated Memory Consumption: Under peak load, functions might require more memory to handle the increased workload efficiently. Allocating more memory also increases costs.

- Data Transfer Volume: The volume of data transferred, both inbound and outbound, typically increases during peak times as more users access and generate data. This increased data transfer results in higher bandwidth charges.

Relationship Between Usage and Cost: A Visual Representation

The relationship between usage and cost can be illustrated with a simplified example, shown in the following table. Note that actual pricing varies based on the provider, region, and specific services used.

| Traffic Level | Function Invocations (per minute) | Average Execution Time (ms) | Cost per Minute (USD) |
|---|---|---|---|
| Off-Peak | 100 | 100 | 0.001 |
| Moderate | 500 | 150 | 0.0075 |
| Peak | 1000 | 200 | 0.02 |

The table shows that as the traffic level increases, the number of function invocations and the average execution time both increase, so the cost per minute grows faster than traffic alone: ten times the invocations at peak cost twenty times as much as off-peak. This demonstrates the direct correlation between usage patterns and serverless expenses.
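The figures in the table are consistent with a flat rate of $0.0000001 per millisecond of execution, which makes them easy to reproduce. The sketch below derives each row from that implied rate; the rate is inferred from the table itself and is not a real provider price.

```python
# Effective rate implied by the table: USD per millisecond of execution.
RATE_PER_MS = 0.0000001

# (invocations per minute, average execution time in ms) for each traffic level.
traffic = {
    "Off-Peak": (100, 100),
    "Moderate": (500, 150),
    "Peak": (1000, 200),
}


def cost_per_minute(invocations, avg_ms, rate_per_ms=RATE_PER_MS):
    """Invocations per minute x average duration x price per millisecond."""
    return invocations * avg_ms * rate_per_ms


baseline = cost_per_minute(*traffic["Off-Peak"])
for level, (invocations, avg_ms) in traffic.items():
    cost = cost_per_minute(invocations, avg_ms)
    print(f"{level}: ${cost:.4f}/min ({cost / baseline:.1f}x off-peak)")
```

Because the cost is the product of invocation count and duration, any slowdown under load compounds the effect of higher traffic. That is why keeping execution time stable under load is a cost concern as well as a performance one.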

Serverless Pricing for Different Use Cases

The pay-as-you-go pricing model in serverless computing exhibits significant variability based on the specific application scenario. This is due to the fluctuating resource demands, execution times, and the complexity of the underlying operations. Understanding these nuances is crucial for accurately predicting and managing costs. The cost structure is highly dependent on the nature of the workload, including factors such as the number of requests, the amount of data processed, the duration of function execution, and the memory consumed.

Different use cases have unique cost drivers.

Web Applications and APIs

Web applications and APIs represent a common use case for serverless architectures, often involving handling HTTP requests, processing data, and interacting with databases. The pricing model is heavily influenced by the number of requests and the data transfer costs. The following points detail the cost considerations:

- API Gateway: The cost is typically determined by the number of API calls and data transfer. For example, Amazon API Gateway charges per million API calls and per gigabyte of data transferred.

- Compute (Functions): The cost is driven by the execution time (in milliseconds or seconds), memory allocated, and the number of invocations; AWS Lambda, for instance, charges based on these parameters. A function that processes a small amount of data will therefore usually cost less than one that handles large file uploads and complex transformations.

- Database Interactions: Interactions with databases (e.g., DynamoDB, MongoDB Atlas Serverless) contribute to costs based on read/write operations, data storage, and provisioned throughput. The pricing model often involves pay-per-request or pay-per-operation charges.

- Data Transfer: Data transfer costs arise when data is transferred in or out of the serverless environment. This is a key consideration for applications that serve media files or large datasets.

Data Processing and ETL Pipelines

Data processing and Extract, Transform, Load (ETL) pipelines benefit from serverless architectures due to their ability to scale automatically based on data volume. The pricing is closely tied to compute time, data processing volume, and data transfer costs. Here are some crucial aspects of this use case:

- Data Ingestion: Services like AWS S3 or Azure Blob Storage store the initial data. Storage costs depend on the volume of data stored.

- Compute (Functions): Functions are responsible for data transformation and processing. The cost is proportional to the processing time and memory consumption. The more complex the transformations, the longer the function execution time, and the higher the cost.

- Data Storage: Intermediate data storage (e.g., data lakes) and final data storage (e.g., data warehouses) incur costs based on storage capacity and data retrieval operations.

- Data Transfer: Moving data between storage locations and processing functions incurs data transfer charges.

Event-Driven Applications

Event-driven applications respond to events in real time. Pricing is heavily influenced by the number of events processed, the compute time required to handle each event, and the use of any integrated services. The following details are important to consider:

- Event Source: Services such as Amazon EventBridge or Azure Event Grid are priced based on the number of events ingested and delivered.

- Compute (Functions): Functions triggered by events are charged based on execution time, memory usage, and the number of invocations.

- Database Interactions: Updating databases in response to events contributes to costs based on read/write operations.

- Notifications and Integrations: Integrating with services like email or SMS providers adds to the cost. For example, sending an email via Amazon SES involves charges per email sent.

Mobile Backend

Mobile backend applications utilize serverless services to handle tasks such as authentication, data storage, and push notifications. Pricing is driven by the number of users, API requests, and the volume of data transferred. Consider these elements:

- Authentication and Authorization: Services like Amazon Cognito or Azure Active Directory B2C offer authentication services. The cost depends on the number of active users and authentication requests.

- API Gateway: API calls from mobile devices are managed by an API gateway, which incurs costs based on the number of requests and data transfer.

- Data Storage: Storing user data in services like DynamoDB or Azure Cosmos DB contributes to costs based on storage capacity, read/write operations, and provisioned throughput.

- Push Notifications: Sending push notifications via services like Amazon SNS or Firebase Cloud Messaging is priced based on the number of notifications sent.

Monitoring and Managing Serverless Costs

Monitoring and managing serverless costs is crucial for maintaining financial control and optimizing resource utilization within a serverless architecture. Proactive cost management ensures that applications remain cost-effective as they scale and evolve, preventing unexpected expenses and enabling efficient resource allocation. Ignoring cost monitoring can lead to uncontrolled spending, especially with the pay-as-you-go model, where costs are directly proportional to usage.

Importance of Monitoring Serverless Costs

The dynamic nature of serverless environments, with their automatic scaling and event-driven architectures, necessitates continuous cost monitoring. Understanding cost drivers and identifying areas for optimization are essential for budget adherence. Failure to monitor costs can result in overspending, especially during periods of high traffic or unexpected events that trigger increased function invocations or data processing.

Tools and Techniques for Cost Management

Various tools and techniques are available for managing serverless costs. These include native cloud provider tools, third-party monitoring solutions, and custom dashboards. Effective cost management requires a multi-faceted approach.

- Cloud Provider Native Tools: Most cloud providers, such as AWS, Azure, and Google Cloud, offer built-in cost management tools. These tools provide detailed cost breakdowns, usage reports, and budgeting features. For instance, AWS Cost Explorer allows users to visualize spending trends, identify cost drivers, and set up cost alerts. Azure Cost Management + Billing offers similar functionalities, including cost analysis, budget management, and recommendations for optimization. Google Cloud’s Cloud Billing console provides detailed cost reports and budgeting capabilities.

- Third-Party Monitoring Solutions: Several third-party solutions specialize in serverless cost management. These tools often provide more advanced features than native cloud tools, such as real-time cost tracking, anomaly detection, and automated optimization recommendations. Examples include tools that integrate with multiple cloud providers, providing a unified view of costs across different platforms.

- Custom Dashboards and Alerts: Creating custom dashboards and setting up cost alerts are essential for proactive cost management. Dashboards visualize key cost metrics and reveal spending patterns. Cost alerts notify users when spending exceeds predefined thresholds, allowing immediate action to mitigate overruns; they can also be integrated with existing monitoring tools.

- Resource Tagging and Cost Allocation: Tagging resources with relevant metadata, such as application name, environment (e.g., production, staging), or team, enables detailed cost allocation. This allows for the accurate attribution of costs to specific projects or teams, facilitating accountability and informed decision-making. This is critical for understanding the cost breakdown of complex applications.

- Automated Optimization: Implementing automated optimization strategies can help reduce costs. This includes right-sizing resources, optimizing function code for efficiency, and leveraging cost-effective storage options. For example, automatically adjusting memory allocation for serverless functions based on observed performance can significantly reduce costs.
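
Tag-based cost allocation and threshold alerts can be combined in a few lines. The sketch below assumes billing line items have already been exported as dictionaries with a `cost` and a `tags` field; that shape, the `team` tag, and the helper names `allocate_costs` and `over_budget` are hypothetical, since real billing exports use provider-specific schemas.

```python
from collections import defaultdict


def allocate_costs(line_items: list[dict], tag_key: str) -> dict[str, float]:
    """Sum cost per value of one tag; untagged items go to 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


def over_budget(totals: dict[str, float], budgets: dict[str, float]) -> list[str]:
    """Return the tag values whose spend exceeds their budget threshold."""
    return sorted(
        name for name, spent in totals.items()
        if name in budgets and spent > budgets[name]
    )


# Hypothetical billing rows for illustration only.
items = [
    {"service": "functions", "cost": 40.0, "tags": {"team": "checkout"}},
    {"service": "database", "cost": 75.0, "tags": {"team": "checkout"}},
    {"service": "functions", "cost": 20.0, "tags": {"team": "search"}},
    {"service": "storage", "cost": 5.0, "tags": {}},
]
totals = allocate_costs(items, "team")
alerts = over_budget(totals, {"checkout": 100.0, "search": 50.0})
print(totals)
print(alerts)
```

Surfacing an explicit `untagged` bucket is a deliberate choice here: spend that nobody owns is usually the first thing worth investigating.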

Key Metrics to Monitor

Monitoring key metrics is critical for understanding and controlling serverless costs. These metrics provide insights into resource consumption, performance, and cost efficiency.

- Function Invocations: The number of times a serverless function is executed. This is a primary driver of costs, especially for functions with high traffic or frequent triggers. Monitoring this metric helps to identify functions with excessive invocations, which may indicate inefficiencies or potential optimization opportunities.

- Function Execution Time: The duration of each function execution. Longer execution times translate to higher costs. Monitoring execution time helps to identify slow-performing functions that may benefit from code optimization or resource adjustments.

- Memory Consumption: The amount of memory a function actually uses compared with the amount allocated to it. Over-provisioning memory can lead to unnecessary costs. Monitoring memory consumption helps to identify functions that can be downsized without impacting performance.

- Data Transfer: The amount of data transferred in and out of the serverless environment. This is a significant cost component, especially for applications that handle large volumes of data. Monitoring data transfer helps to identify potential bandwidth bottlenecks and optimize data transfer patterns.

- Storage Costs: The cost of storing data associated with serverless applications. Monitoring storage costs helps to identify opportunities for data archiving, lifecycle management, and optimization of storage tiers.

- Error Rates: The percentage of function invocations that result in errors. High error rates can lead to increased costs due to retries and unnecessary resource consumption. Monitoring error rates helps to identify and address issues that can negatively impact cost efficiency.

- Cost per Request/Transaction: This metric provides a more granular view of cost efficiency. It allows for the calculation of the cost associated with each request or transaction processed by the serverless application. Monitoring this metric helps to identify areas where cost optimization efforts can have the greatest impact.
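
Several of these metrics are simple ratios over numbers that monitoring tools already report. The following sketch derives error rate, cost per request, and memory utilization from raw totals. The function name `summarize_metrics` and the 50% utilization cut-off used to flag a downsizing candidate are assumptions made for illustration, not recommended thresholds.

```python
def summarize_metrics(
    invocations: int,
    errors: int,
    total_cost: float,
    avg_memory_used_mb: float,
    memory_allocated_mb: float,
    downsize_below: float = 0.5,  # hypothetical utilization cut-off
) -> dict:
    """Derive cost-efficiency ratios from raw monitoring totals."""
    if invocations <= 0 or memory_allocated_mb <= 0:
        raise ValueError("invocations and memory_allocated_mb must be positive")
    utilization = avg_memory_used_mb / memory_allocated_mb
    return {
        "error_rate": errors / invocations,
        "cost_per_request": total_cost / invocations,
        "memory_utilization": utilization,
        # Low utilization suggests the allocation could be reduced.
        "downsize_candidate": utilization < downsize_below,
    }


report = summarize_metrics(
    invocations=2_000_000,
    errors=40_000,
    total_cost=50.0,
    avg_memory_used_mb=180,
    memory_allocated_mb=1024,
)
print(report)
```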

Benefits and Drawbacks of Pay-as-You-Go

The pay-as-you-go pricing model in serverless computing offers a compelling value proposition, attracting businesses with its promise of cost efficiency and scalability. However, it’s crucial to understand both the advantages and disadvantages to make informed decisions. This section explores the benefits and drawbacks of the pay-as-you-go model and contrasts it with alternative pricing strategies.

Benefits of Pay-as-You-Go for Businesses

The pay-as-you-go model presents several key advantages that can significantly benefit businesses of various sizes. These benefits primarily revolve around cost optimization, resource utilization, and operational agility.

- Cost Efficiency and Reduced Upfront Investment: The most significant benefit is the elimination of upfront infrastructure costs. Businesses only pay for the resources they consume, leading to potentially significant savings, especially for applications with variable or unpredictable workloads. This is particularly advantageous for startups and businesses with limited capital. For example, a small e-commerce business experiences peak traffic during sales events. With pay-as-you-go, they only pay for the increased compute and storage during those periods, avoiding the cost of over-provisioning resources year-round.

- Scalability and Flexibility: Serverless environments automatically scale resources based on demand. The pay-as-you-go model perfectly complements this scalability. As traffic increases, the infrastructure scales up seamlessly, and the business is billed only for the additional usage. Conversely, when demand decreases, resources scale down, reducing costs. This dynamic scaling eliminates the need for manual intervention and ensures optimal resource utilization. Consider a mobile gaming application. The number of active users can fluctuate dramatically. Pay-as-you-go allows the application to scale resources up during peak hours and down during off-peak hours, optimizing costs.

- Improved Resource Utilization: With traditional infrastructure, resources often sit idle, leading to wasted spending. Pay-as-you-go ensures that businesses pay only for the resources they actively use. This is especially beneficial for applications with infrequent or spiky workloads. For instance, a batch processing job that runs once a day or a web application with seasonal traffic patterns can significantly benefit from this model.

- Faster Time to Market and Increased Agility: Serverless architectures, combined with the pay-as-you-go model, enable faster development cycles. Developers can focus on writing code instead of managing infrastructure. This agility allows businesses to quickly prototype, deploy, and iterate on applications, leading to faster time to market and increased responsiveness to changing market demands.

- Reduced Operational Overhead: Serverless providers handle infrastructure management, including server provisioning, patching, and scaling. This reduces the operational burden on IT teams, freeing them to focus on core business activities. The pay-as-you-go model also simplifies cost management by providing detailed usage metrics and billing information.

Drawbacks of the Pay-as-You-Go Pricing Model

While offering numerous advantages, the pay-as-you-go model also presents potential drawbacks that businesses must consider. These drawbacks primarily relate to cost predictability, vendor lock-in, and debugging complexity.

- Cost Unpredictability: The primary disadvantage is the potential for unpredictable costs. Without careful monitoring and cost optimization strategies, usage can quickly escalate, leading to unexpected bills. This is particularly true for applications with complex dependencies or poorly optimized code. For instance, a poorly written function that runs inefficiently can consume excessive resources, driving up costs.

- Vendor Lock-in: Migrating serverless applications between providers can be complex and time-consuming, creating a vendor lock-in situation. This can limit a business’s negotiating power and flexibility. Different providers have different services and features, and moving an application from one platform to another may require significant code modifications.

- Debugging and Monitoring Challenges: Debugging serverless applications can be more complex than debugging traditional applications. Distributed architectures and the lack of direct access to the underlying infrastructure can make it challenging to identify and resolve performance issues. Comprehensive monitoring and logging are crucial for troubleshooting.

- Performance Variability: Performance can be less predictable than with dedicated infrastructure. Serverless functions run on shared resources, and the performance can vary depending on the workload and resource availability. This variability can be problematic for latency-sensitive applications.

- Security Considerations: Managing security in a serverless environment requires careful attention. Businesses are responsible for securing their code and data, and they must understand the security implications of the chosen serverless services. Incorrectly configured security settings can lead to vulnerabilities.

Comparison of Pay-as-You-Go with Other Pricing Models

Understanding how the pay-as-you-go model compares to other pricing models helps businesses make informed decisions about their infrastructure. This comparison highlights the key differences between pay-as-you-go and alternative pricing models.

| Pricing Model | Description | Advantages | Disadvantages | Suitable for |
|---|---|---|---|---|
| Pay-as-You-Go (Serverless) | Businesses pay only for the resources they consume, typically measured in compute time, storage, and requests. | Cost-effective for variable workloads, automatic scaling, no upfront investment. | Cost unpredictability, potential for vendor lock-in, debugging complexity. | Applications with unpredictable traffic, event-driven architectures, and development teams focused on code rather than infrastructure. |
| Reserved Instances (Serverless) | Customers reserve compute capacity for a specific period (e.g., one or three years) at a discounted rate. | Significantly lower cost compared to on-demand, predictable costs. | Requires upfront commitment, less flexible for fluctuating workloads. | Applications with stable and predictable workloads that can forecast resource needs accurately. |
| Subscription-Based Pricing | Users pay a fixed monthly or annual fee for access to a service, with varying tiers based on features or usage limits. | Predictable costs, simplified budgeting. | May not be cost-effective for low usage, limited scalability. | Businesses that require a certain level of functionality or usage, with relatively predictable needs. |
| On-Premise Infrastructure | Businesses own and manage their own hardware and software. | Full control over the infrastructure, potentially lower long-term costs for high and predictable workloads. | High upfront investment, ongoing maintenance costs, limited scalability. | Businesses with very specific needs, highly regulated environments, or large, predictable workloads. |
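
The trade-off in the table between usage-based and fixed-cost models comes down to a break-even volume. The sketch below is a deliberately simplified comparison, assuming the fixed alternative (reserved, subscription, or on-premise) can be reduced to one flat monthly figure and that usage pricing is linear per million requests. The function names and all prices are hypothetical.

```python
import math


def break_even_requests(fixed_monthly_cost: float,
                        cost_per_million_requests: float) -> int:
    """Monthly request count at which usage-based cost reaches the fixed cost."""
    if cost_per_million_requests <= 0:
        raise ValueError("cost_per_million_requests must be positive")
    millions = fixed_monthly_cost / cost_per_million_requests
    return math.ceil(millions * 1_000_000)


def cheaper_model(monthly_requests: int,
                  fixed_monthly_cost: float,
                  cost_per_million_requests: float) -> str:
    """Name the cheaper option for a given monthly volume."""
    usage_cost = monthly_requests / 1_000_000 * cost_per_million_requests
    return "pay-as-you-go" if usage_cost < fixed_monthly_cost else "fixed"


# Hypothetical figures: a 150/month fixed plan versus 5 per million requests.
threshold = break_even_requests(150.0, 5.0)
print(threshold)
print(cheaper_model(10_000_000, 150.0, 5.0))
print(cheaper_model(50_000_000, 150.0, 5.0))
```

Below the break-even volume the usage-based model wins; above it, a workload that is both high and steady starts to favor a fixed commitment.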

Real-World Examples and Case Studies

The adoption of serverless computing and its associated pay-as-you-go pricing model has been widespread, spanning various industries and applications. Analyzing real-world examples provides valuable insights into the practical application of these concepts, highlighting both the benefits and challenges encountered during implementation. These case studies demonstrate the diverse ways organizations leverage serverless architectures to optimize costs, improve scalability, and accelerate development cycles.

Companies Utilizing Serverless and Pay-as-You-Go

Numerous companies across different sectors have successfully adopted serverless architectures and pay-as-you-go pricing models. These examples showcase the versatility and adaptability of serverless computing.

- Netflix: Netflix uses serverless functions, primarily AWS Lambda, for various tasks, including video encoding, data processing, and backend services. The scalability and cost-effectiveness of serverless infrastructure are crucial for handling Netflix’s massive streaming volumes. This is achieved through the automatic scaling capabilities of the serverless model, ensuring that resources are allocated only when needed, directly influencing cost efficiency.

- Coca-Cola: Coca-Cola has employed serverless technology to enhance its customer engagement platform. By utilizing serverless functions, Coca-Cola can quickly deploy new features and scale their platform based on demand. The pay-as-you-go model allows them to manage costs effectively, particularly during peak seasons and promotional campaigns. This model offers the flexibility to scale resources up or down dynamically, aligning infrastructure costs with actual usage patterns.

- The New York Times: The New York Times leverages serverless computing for its content delivery and data processing pipelines. They use AWS Lambda and other serverless services to process articles, manage image resizing, and handle website traffic. The ability to scale resources on demand is vital for handling the fluctuating traffic demands of a major news website, optimizing resource allocation and controlling costs.

- Pinterest: Pinterest utilizes serverless functions to power its backend services, including image processing, search indexing, and API gateways. The pay-as-you-go model enables Pinterest to scale its infrastructure to meet the demands of its rapidly growing user base while maintaining cost efficiency. Pinterest’s adoption demonstrates how serverless can support the requirements of high-traffic, data-intensive applications.

- iRobot: iRobot has adopted serverless architectures to process data from its robotic vacuum cleaners, Roomba. By using serverless functions, iRobot can efficiently analyze data, manage updates, and provide enhanced customer services. The pay-as-you-go pricing model aligns well with the intermittent nature of data processing tasks, optimizing costs and resource utilization.

Case Studies Illustrating Cost Savings Achieved

Quantifiable cost savings are a key driver for serverless adoption. Analyzing specific case studies demonstrates the tangible financial benefits of this approach.

- Serverless Transformation at a Financial Institution: A large financial institution migrated its batch processing workloads to a serverless environment using AWS Lambda and S3. The institution reported a 60% reduction in infrastructure costs compared to its previous on-premises infrastructure. This reduction was primarily due to the pay-as-you-go pricing model, which eliminated the need to provision and maintain idle servers. The financial institution was able to dynamically scale its resources, optimizing resource utilization and minimizing over-provisioning, leading to significant cost savings.

- E-commerce Platform Optimization: An e-commerce platform adopted serverless functions to handle its product catalog, order processing, and payment gateway integrations. By leveraging serverless, the platform experienced a 40% decrease in operational expenses, including reduced server maintenance and monitoring costs. The pay-as-you-go model enabled the platform to scale its resources during peak shopping seasons and reduce costs during off-peak periods. This flexibility in resource allocation allowed for a more efficient use of resources and optimized spending.

- Healthcare Application Development: A healthcare provider developed a new application using serverless technologies to manage patient data and appointment scheduling. The provider reported a 50% reduction in development costs compared to a traditional architecture, primarily due to the elimination of server management overhead. The pay-as-you-go pricing model further contributed to cost savings by enabling the provider to pay only for the compute resources consumed. This resulted in improved resource utilization and minimized infrastructure expenses.

Challenges and Successes of Implementing Serverless Solutions

Implementing serverless solutions presents both challenges and opportunities. Understanding these aspects is crucial for successful adoption.

- Challenges:

  - Vendor Lock-in: Serverless architectures often tie applications to specific cloud providers. Migrating between providers can be complex and time-consuming, leading to vendor lock-in. Mitigation strategies include utilizing open-source frameworks and adopting platform-agnostic coding practices.

  - Monitoring and Debugging: Monitoring and debugging serverless applications can be challenging due to the distributed nature of the architecture. Tools and strategies for distributed tracing and logging are essential for identifying and resolving issues.

  - Cold Starts: Cold starts, the initial latency when a serverless function is invoked, can impact performance. Techniques like function pre-warming and optimizing code can mitigate this issue.

  - Security Considerations: Securing serverless applications requires a different approach than traditional architectures. Managing access control, securing API gateways, and protecting against common web vulnerabilities are critical.

- Successes:

  - Increased Agility: Serverless allows for faster development cycles and quicker deployments. This increased agility allows organizations to respond rapidly to market changes and innovate more effectively.

  - Improved Scalability: Serverless architectures automatically scale to handle fluctuating workloads, ensuring optimal performance. This scalability is crucial for applications with unpredictable traffic patterns.

  - Reduced Operational Overhead: Serverless reduces the need for infrastructure management, freeing up developers to focus on building applications. This reduces the operational burden on IT teams.

  - Cost Optimization: The pay-as-you-go pricing model allows for efficient resource utilization and cost savings. This model aligns infrastructure costs with actual usage, optimizing spending.

Conclusion

In conclusion, the serverless pay-as-you-go pricing model presents a transformative approach to cloud computing economics. By understanding the intricacies of this model, businesses can optimize their cloud spending, improve resource utilization, and achieve greater operational agility. The dynamic nature of serverless environments, combined with the pay-as-you-go approach, demands continuous monitoring and strategic cost management. As cloud adoption continues to grow, mastering this pricing model will be essential for achieving cost-effective and scalable solutions. The insights gained from this exploration will empower informed decision-making, ensuring efficient and optimized resource utilization in the cloud.

Questions and Answers

What are the key metrics used to calculate serverless costs?

Key metrics typically include compute time (measured in milliseconds or seconds), the number of requests, memory consumption, and data transfer volume. Different providers may include additional metrics based on specific services.

How does the “cold start” affect serverless costs?

Cold starts, the initial latency when a function is invoked, can impact costs if the function takes longer to initialize, consuming more compute time. Optimizing function code and using provisioned concurrency can mitigate this effect.
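
A rough way to size this effect is to compare the extra initialization time against the baseline execution time. The sketch below assumes initialization is billed like normal execution, which depends on the provider and runtime. The function name `cold_start_overhead` and the sample rates are hypothetical.

```python
def cold_start_overhead(
    invocations: int,
    cold_start_rate: float,
    init_ms: float,
    warm_ms: float,
) -> dict:
    """Estimate extra billed time caused by cold starts.

    Assumes initialization time is billed like execution time, which
    is not true for every provider or configuration.
    """
    if not 0.0 <= cold_start_rate <= 1.0:
        raise ValueError("cold_start_rate must be between 0 and 1")
    baseline_ms = invocations * warm_ms
    # Only the cold fraction of invocations pays the initialization time.
    extra_ms = invocations * cold_start_rate * init_ms
    return {
        "extra_billed_ms": extra_ms,
        "overhead_ratio": extra_ms / baseline_ms if baseline_ms else 0.0,
    }


# Hypothetical workload: 1% cold starts, 800 ms init, 100 ms warm execution.
result = cold_start_overhead(1_000_000, 0.01, 800, 100)
print(result)
```

Under these assumptions the overhead ratio depends only on the cold-start rate and the ratio of initialization time to warm execution time, so short functions with heavy initialization are affected most.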

Can I predict my serverless costs?

Yes, while costs are variable, you can estimate them by analyzing historical usage patterns, forecasting future demand, and using cost management tools. Cloud providers often offer calculators to assist in cost estimation.
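
As a minimal illustration of estimating from historical usage, the sketch below projects next month's bill from the average month-over-month change. It is a naive linear extrapolation under the assumption that recent trends continue, not a substitute for a provider's pricing calculator. The function name `forecast_next_month` and the sample history are hypothetical.

```python
def forecast_next_month(monthly_costs: list[float]) -> float:
    """Project next month's cost from the average month-over-month change."""
    if len(monthly_costs) < 2:
        raise ValueError("need at least two months of history")
    deltas = [later - earlier
              for earlier, later in zip(monthly_costs, monthly_costs[1:])]
    avg_delta = sum(deltas) / len(deltas)
    # A bill cannot be negative, so clamp a falling trend at zero.
    return max(0.0, monthly_costs[-1] + avg_delta)


history = [100.0, 110.0, 125.0, 130.0]  # hypothetical monthly bills
projection = forecast_next_month(history)
print(projection)
```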

What are some best practices for managing serverless costs?

Best practices include right-sizing functions, monitoring resource utilization, implementing autoscaling, using cost allocation tags, and leveraging cost optimization tools provided by cloud providers.

How do I choose between different serverless providers based on pricing?

Compare pricing models across providers, considering your specific workload requirements. Evaluate the cost of core services (functions, databases, storage), the pricing structure (per request, per compute time), and any free tiers or discounts offered.