Setting up a CI/CD pipeline for microservices is a practical step toward faster and more reliable software delivery. This guide covers the concepts, tools, and practices needed to build such a pipeline: automated builds, testing, deployment, and monitoring, with attention to security and scalability throughout.

Microservices are widely adopted for their scalability, maintainability, and independent deployability. Managing many independently deployable services, however, requires a well-defined CI/CD pipeline. This guide offers a roadmap for implementing one, covering tool selection, version control, testing strategies, and security considerations.

Introduction to CI/CD for Microservices

Implementing Continuous Integration and Continuous Delivery (CI/CD) pipelines is crucial for modern software development, especially when dealing with microservices. This approach streamlines the software development lifecycle, enabling faster releases, improved quality, and enhanced collaboration. Understanding CI/CD principles and their application to microservices is essential for building robust and scalable applications.

Core Concepts of CI/CD in Microservices

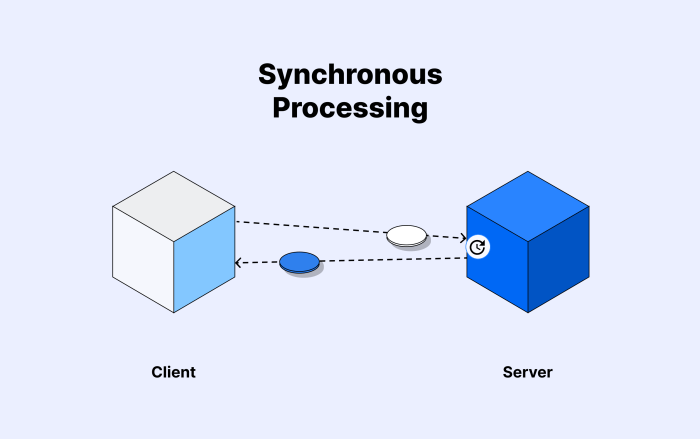

CI/CD, in the context of microservices, involves automating the build, test, and deployment processes. This automation allows developers to frequently integrate code changes, test them thoroughly, and deploy them to production environments with minimal manual intervention. The primary goals are to reduce the time it takes to release new features and bug fixes, and to minimize the risk of errors.

Benefits of Implementing a CI/CD Pipeline for Microservices

Adopting CI/CD for microservices provides several significant advantages. These benefits contribute to greater agility and operational efficiency, ultimately leading to a more responsive and resilient software development process.

- Faster Time-to-Market: CI/CD pipelines automate the build, test, and deployment phases, significantly reducing the time it takes to release new features and bug fixes. Frequent releases allow businesses to respond quickly to market demands and gain a competitive edge. For example, a retail company using CI/CD for its e-commerce platform can deploy new promotions or features within hours instead of days or weeks, capitalizing on seasonal opportunities or addressing customer feedback promptly.

- Improved Software Quality: Automated testing, a core component of CI/CD, ensures that code changes are thoroughly tested before deployment. This helps to identify and fix bugs early in the development cycle, leading to higher-quality software and reduced risk of production issues. Different testing methodologies like unit tests, integration tests, and end-to-end tests are integrated into the pipeline to validate the functionality of individual microservices and their interactions.

- Increased Efficiency and Productivity: Automating repetitive tasks, such as building, testing, and deployment, frees up developers and operations teams to focus on more strategic work. Automation reduces manual errors and allows for more efficient resource utilization. For example, a development team can spend more time on coding and less time on manual deployment tasks, leading to increased productivity.

- Reduced Risk: CI/CD pipelines enable incremental changes and frequent releases, reducing the risk associated with large, infrequent deployments. If a deployment fails, the impact is contained, and the rollback process is streamlined. This approach minimizes the blast radius of any issue.

- Enhanced Collaboration: CI/CD promotes collaboration between development, operations, and quality assurance teams. Shared pipelines and automated processes foster a culture of transparency and communication, leading to faster problem resolution and improved team performance.

Microservices and their Relationship to CI/CD Pipelines

Microservices are small, independent services that work together to form a larger application. Each microservice is developed, deployed, and scaled independently, which is a key driver for adopting CI/CD. The architectural design of microservices complements CI/CD principles.

- Independent Deployability: Each microservice can be deployed independently, allowing for faster and more frequent releases. This contrasts with monolithic applications, where a change in any part of the application requires the entire application to be redeployed.

- Technology Diversity: Microservices can be developed using different technologies and programming languages, allowing teams to choose the best tools for the job. CI/CD pipelines need to accommodate this diversity, ensuring that each microservice is built, tested, and deployed correctly regardless of its technology stack.

- Scalability and Resilience: Microservices can be scaled independently based on their resource needs. CI/CD pipelines can roll out the scaling configuration (for example, replica counts or autoscaling rules) alongside the code, so that each microservice can handle increased traffic and maintain high availability.

- Faster Development Cycles: The smaller size and independent nature of microservices enable faster development cycles. Developers can make changes to a single microservice without affecting other parts of the application.

Choosing the Right Tools: Build Tools

Selecting the appropriate tools is a critical step in establishing an efficient and effective CI/CD pipeline for microservices. This involves careful consideration of various aspects, from build processes to deployment strategies. The choice of tools directly impacts the speed, reliability, and maintainability of your microservices architecture.

A robust build process is fundamental to a successful CI/CD pipeline. Build tools automate the compilation, testing, and packaging of code, ensuring consistency and reducing the potential for human error.

Several popular build tools are well-suited for microservices projects, each with its own strengths and weaknesses.

Popular Build Tools for Microservices Projects

Several build tools are commonly employed in microservices development, offering different features and capabilities. These tools streamline the build process, enabling developers to create, test, and package their code efficiently.

- Maven: Primarily used for Java projects, Maven excels at managing dependencies and automating the build lifecycle. It follows a convention-over-configuration approach, simplifying project setup and maintenance.

- Gradle: Another popular choice for Java projects, Gradle provides more flexibility than Maven. It utilizes a Groovy or Kotlin-based Domain Specific Language (DSL) for defining build scripts, offering fine-grained control over the build process.

- npm (Node Package Manager): The default package manager for JavaScript and Node.js projects. npm manages dependencies and facilitates the building and packaging of front-end and back-end components written in JavaScript.

- Bazel: Developed by Google, Bazel is a powerful, multi-language build tool known for its speed and scalability. It excels at building large and complex projects, making it suitable for microservices architectures with numerous components.

Configuring a Build Tool for Building a Microservice (Maven Example)

Configuring a build tool, such as Maven, involves defining the project structure, dependencies, and build tasks within a configuration file (pom.xml). This file acts as a blueprint for the build process.

To configure Maven for a microservice:

1. Project Structure

Create a standard Maven project structure, including directories for source code (src/main/java), resources (src/main/resources), and test code (src/test/java).

2. pom.xml Configuration

Create a pom.xml file in the root directory of your microservice project. This file will define the project’s metadata, dependencies, and build plugins.

3. Dependencies: Declare the necessary dependencies for your microservice within the `<dependencies>` section of pom.xml.

4. Plugins: Configure build plugins within the `<build>` section of pom.xml.

5. Build Execution: Execute the build process using the Maven command-line interface (CLI). For example, `mvn clean install` will clean the project, compile the code, run tests, package the application, and install the resulting artifact into the local Maven repository.

6. Testing: Write unit and integration tests using frameworks like JUnit or TestNG. Maven will automatically execute these tests during the build process, ensuring code quality.

7. Packaging: Maven packages the microservice into an artifact, such as a JAR or WAR file, which can then be deployed to a container or server.

By following these steps, you can effectively configure Maven to build your microservice, ensuring a consistent and automated build process. The specific configuration will vary depending on the technology stack and requirements of your microservice.

Comparing Build Tools for Microservices

Different build tools offer varying features and functionalities, making it essential to choose the tool that best suits your project’s needs. The following table compares some key features of popular build tools:

| Feature | Maven | Gradle | npm | Bazel |
|---|---|---|---|---|
| Language Support | Java | Java, Groovy, Kotlin | JavaScript, TypeScript | Multi-language (Java, C++, Go, etc.) |
| Dependency Management | Excellent, uses Maven Central repository | Excellent, uses Maven Central and other repositories | Excellent, uses npm registry | Uses external repositories and internal workspaces |
| Configuration | XML (pom.xml) | Groovy/Kotlin DSL (build.gradle) | JSON (package.json) | Starlark (BUILD files) |
| Build Speed | Generally slower for large projects | Faster than Maven, uses incremental builds | Fast for JavaScript projects | Very fast, uses caching and parallel builds |
| Flexibility | Convention-over-configuration, less flexible | Highly flexible, customizable build scripts | Flexible for JavaScript projects | Highly flexible, supports complex build graphs |

The choice of build tool depends on factors such as the programming language used, the project’s complexity, and the desired level of flexibility. Maven is a solid choice for Java projects, while Gradle offers more flexibility. npm is ideal for JavaScript projects, and Bazel excels in building large and complex multi-language projects.

Choosing the Right Tools: CI/CD Platforms

Selecting the appropriate tools is crucial for building an efficient and effective CI/CD pipeline for microservices. The tools chosen directly impact the speed of development, the reliability of deployments, and the overall maintainability of the system. This section focuses on CI/CD platforms, examining various options and providing guidance on making informed decisions.

CI/CD Platforms

Choosing the right CI/CD platform is a critical decision, as it forms the backbone of your automation strategy. Several platforms offer comprehensive features for automating the build, test, and deployment phases of the software development lifecycle. The best choice depends on factors like project size, budget, team expertise, and the specific requirements of your microservices architecture.

Here’s an overview of some popular CI/CD platforms:

- Jenkins: An open-source automation server, highly extensible through plugins. It’s a versatile platform suitable for various project sizes.

- GitLab CI: Integrated directly within the GitLab platform, offering a seamless experience for projects already using GitLab for version control and project management.

- CircleCI: A cloud-based CI/CD platform known for its ease of use and fast build times. It integrates well with various version control systems.

- GitHub Actions: Integrated directly into GitHub, allowing for automated workflows triggered by events within a repository. It’s a good choice for projects hosted on GitHub.

When selecting a CI/CD platform, consider these factors:

- Ease of Use: Evaluate the platform’s user interface and learning curve. Consider how easy it is to set up and configure pipelines.

- Integration Capabilities: Ensure the platform integrates seamlessly with your existing tools, such as version control systems (GitLab, GitHub, Bitbucket), container registries (Docker Hub, Amazon ECR, Google Container Registry), and cloud providers (AWS, Google Cloud, Azure).

- Scalability: Consider the platform’s ability to handle the growing complexity of your microservices architecture and the increasing number of builds and deployments.

- Cost: Evaluate the pricing model, including any associated costs for build minutes, storage, or support. Consider whether the platform is open-source or commercial.

- Community and Support: Look for a platform with an active community and readily available documentation and support resources.

- Features: Assess whether the platform provides essential features such as parallel builds, automated testing, artifact management, and deployment strategies (e.g., blue/green deployments, canary releases).

Jenkins: Pros and Cons

Jenkins, due to its widespread adoption and flexibility, is a frequently chosen CI/CD platform. However, it’s important to understand its strengths and weaknesses before making a decision.

Here’s a breakdown of the pros and cons of using Jenkins:

- Pros:

  - Open Source and Free: Jenkins is open-source, making it a cost-effective solution.

  - Extensive Plugin Ecosystem: A vast library of plugins extends Jenkins’ functionality, supporting a wide range of tools and integrations. For example, plugins exist for Docker, Kubernetes, various cloud providers, and testing frameworks.

  - Highly Customizable: Jenkins allows for extensive customization through its configuration files and scripting capabilities.

  - Large and Active Community: A large and active community provides ample support, documentation, and readily available solutions to common problems.

  - Mature and Stable: Jenkins has been around for many years and is a mature and stable platform.

  - Versatile: Can be used for a wide variety of projects, from simple applications to complex microservices architectures.

- Cons:

  - Complex Setup and Configuration: Setting up and configuring Jenkins can be complex, especially for beginners, requiring significant initial effort.

  - Maintenance Overhead: Maintaining Jenkins, including plugin updates and server administration, can be time-consuming.

  - Security Concerns: Securing Jenkins requires careful attention to access control and plugin security vulnerabilities.

  - Resource Intensive: Jenkins can be resource-intensive, particularly with a large number of builds or complex pipelines, potentially requiring significant server resources.

  - UI Can Be Dated: The user interface, while functional, may appear less modern compared to some cloud-based CI/CD platforms.

Version Control and Code Management

Version control is the cornerstone of any successful CI/CD pipeline, especially when dealing with the distributed nature of microservices. It allows teams to track changes to the codebase, collaborate effectively, and revert to previous versions if necessary. This section delves into the critical role of version control, specifically Git, in the context of microservices, providing practical guidance on setting up repositories and implementing effective branching strategies.

Importance of Version Control (Git) in CI/CD

Version control, particularly Git, is indispensable for managing the complexities inherent in microservices architectures. It offers a robust system for tracking code changes, facilitating collaboration, and enabling efficient rollbacks. Its distributed nature ensures that developers can work independently on their respective microservices without interfering with each other’s progress.

- Code Tracking and History: Git maintains a complete history of all code changes, including who made the changes, when, and why. This audit trail is invaluable for debugging, understanding code evolution, and identifying the source of issues.

- Collaboration and Teamwork: Git provides a platform for developers to work together on the same codebase. Features like branching, merging, and pull requests enable parallel development and code review processes.

- Rollback and Recovery: In case of a bug or deployment failure, Git allows teams to easily revert to a previous, stable version of the code, minimizing downtime and impact.

- Automation and CI/CD Integration: Git is the central point of integration for CI/CD pipelines. CI/CD tools automatically trigger builds, tests, and deployments based on changes pushed to the Git repository.

- Branching and Feature Development: Git’s branching capabilities enable developers to work on new features in isolation, without affecting the main codebase. This promotes a clean and organized development process.

Setting Up a Git Repository for a Microservices Project

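Setting up a Git repository for a microservices project starts with deciding where the code for each microservice lives. This walkthrough uses a single central repository that stores the code for all microservices (a monorepo); a separate repository per service is a common alternative. Either way, the structure of the repository and the organization of the code are critical for maintainability and scalability.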

Here’s a breakdown of the process:

- Choose a Hosting Provider: Select a Git hosting provider like GitHub, GitLab, or Bitbucket. These platforms provide features like repository hosting, access control, and CI/CD integration.

- Create the Repository: Create a new repository on your chosen platform. Give it a descriptive name that reflects the overall project. For example, if the project is an e-commerce platform, the repository name could be `ecommerce-platform`.

- Initialize the Repository: If you’re starting from scratch, initialize a Git repository locally using the command `git init`. If you are using an existing project, you will clone the remote repository with the command `git clone [repository URL]`.

- Organize Microservice Code: Decide on a directory structure for your microservices. A common approach is to have a separate directory for each microservice within the main repository. For example:

      ecommerce-platform/
      ecommerce-platform/user-service/
      ecommerce-platform/product-service/
      ecommerce-platform/order-service/

- Add Code and Commit: Add the code for each microservice to its respective directory. Then, stage the changes using `git add .` (to stage all new and modified files under the current directory) and commit them with a descriptive message using `git commit -m "Initial commit for user-service"`.

- Configure Remote: Link your local repository to the remote repository on your chosen hosting provider using the command `git remote add origin [repository URL]`.

- Push to Remote: Push your local commits to the remote repository using `git push -u origin main` (or `git push -u origin master`, depending on the default branch).

- Configure Access Control: Configure access control settings on your Git hosting provider to manage who can access and modify the repository.
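With every service in one repository, a pipeline usually should rebuild only the service whose files changed. The following is a minimal GitLab CI sketch of that idea, assuming the directory layout above and Maven-based services. The job names, the `SERVICE_DIR` variable, and the image tag are illustrative assumptions, not part of the original project.

```yaml
# .gitlab-ci.yml (sketch): one build job per service directory.
# Job names, SERVICE_DIR, and the Maven image tag are illustrative assumptions.
stages:
  - build

# Hidden template job (leading dot), reused by the per-service jobs via `extends`.
.build_service:
  stage: build
  image: maven:3.9-eclipse-temurin-17
  script:
    - cd "$SERVICE_DIR"
    - mvn clean install

build_user_service:
  extends: .build_service
  variables:
    SERVICE_DIR: user-service
  rules:
    - changes:
        - user-service/**/*      # run only when files in this directory change

build_product_service:
  extends: .build_service
  variables:
    SERVICE_DIR: product-service
  rules:
    - changes:
        - product-service/**/*

build_order_service:
  extends: .build_service
  variables:
    SERVICE_DIR: order-service
  rules:
    - changes:
        - order-service/**/*
```

Keeping the shared steps in one template means a change to the build procedure is made once, while each service keeps its own trigger condition.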

Effective Branching Strategies for Microservices Development

Effective branching strategies are essential for managing code changes, enabling parallel development, and ensuring code quality in a microservices environment. They allow developers to work on features, bug fixes, and releases in isolation, minimizing the risk of conflicts and ensuring a smooth CI/CD process.

Here are some popular branching strategies suitable for microservices:

- Gitflow: Gitflow is a well-defined branching model that works well for larger projects. It involves a `main` (or `master`) branch for production-ready code, a `develop` branch for ongoing development, feature branches for new features, release branches for preparing releases, and hotfix branches for urgent bug fixes.

  - Feature Branches: Created from `develop`, these branches are used to develop new features. They are merged back into `develop` when the feature is complete.

  - Release Branches: Created from `develop`, these branches are used to prepare for a release. They allow for minor bug fixes and final preparations before merging into `main` and tagging the release.

  - Hotfix Branches: Created from `main`, these branches are used to quickly address critical bugs in production. They are then merged back into both `main` and `develop`.

  Gitflow provides a structured and organized workflow, but it can be slightly more complex to manage.

- GitHub Flow: GitHub Flow is a simpler branching model that’s well-suited for smaller projects and continuous delivery. It uses a single `main` (or `master`) branch and feature branches.

  - Feature Branches: Created from `main`, these branches are used to develop new features. They are merged back into `main` using pull requests.

  GitHub Flow is easy to understand and implement, making it a good choice for teams that want a streamlined workflow.

- Trunk-Based Development: Trunk-based development involves frequent, small commits to the `main` (or `master`) branch, either directly or through short-lived feature branches that are merged frequently.

  This strategy promotes continuous integration and delivery, but requires a strong focus on testing and code reviews.

Regardless of the chosen strategy, it is important to:

- Use Descriptive Branch Names: Use clear and concise branch names that reflect the purpose of the branch (e.g., `feature/user-authentication`, `bugfix/order-processing`).

- Use Pull Requests: Use pull requests for all merges, allowing for code reviews and discussions before merging changes.

- Automate Testing: Integrate automated testing into your CI/CD pipeline to ensure that code changes do not introduce regressions.

- Adopt a Consistent Strategy: Ensure that all team members follow the chosen branching strategy consistently to maintain a clean and organized codebase.
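The pull request and automated testing guidelines can be enforced by the pipeline itself. Below is a minimal GitLab CI sketch (GitLab calls pull requests "merge requests") that tests every merge request and packages only what lands on the default branch. The job names and image tag are assumptions chosen for illustration.

```yaml
# .gitlab-ci.yml (sketch): test merge requests, package only the default branch.
# Job names and the Maven image tag are illustrative assumptions.
stages:
  - test
  - package

test_job:
  stage: test
  image: maven:3.9-eclipse-temurin-17
  script:
    - mvn test
  rules:
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"   # every merge request
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH         # and again after the merge

package_job:
  stage: package
  image: maven:3.9-eclipse-temurin-17
  script:
    - mvn package -DskipTests    # tests already ran in the previous stage
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
```

Combined with a repository setting that blocks merging while the pipeline is failing, this keeps untested changes out of the main branch regardless of which branching strategy the team follows.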

Implementing Continuous Integration

Continuous Integration (CI) is a crucial practice in modern software development, especially for microservices. It involves frequently merging code changes from multiple developers into a central repository and then automatically building and testing the application. This process helps to identify and address integration issues early, leading to more stable and reliable software. Implementing CI effectively streamlines the development workflow and reduces the risk of costly errors.

Setting Up Automated Builds and Tests

Automated builds and tests are the cornerstone of a CI pipeline. They ensure that every code change, no matter how small, is validated before being integrated into the main codebase. This process involves several key steps.

- Triggering Builds: The CI process starts when a developer pushes code changes to the version control system (e.g., Git). This push triggers the CI system to initiate a build.

- Build Process: The build process involves several actions:

  - Fetching Code: The CI system retrieves the latest code from the repository.

  - Dependency Management: The system installs all the necessary dependencies required to build the project. This often involves using package managers like npm (for JavaScript), Maven (for Java), or pip (for Python).

  - Compilation: The code is compiled; any compilation error fails the build at this point, before tests run.

  - Artifact Creation: The compiled code and any necessary resources are packaged into an artifact, such as a JAR file (for Java) or a Docker image.

- Automated Testing: Once the build is successful, the CI system runs automated tests. This includes:

  - Unit Tests: These tests verify the functionality of individual components or modules in isolation.

  - Integration Tests: These tests verify the interaction between different components or modules.

  - End-to-End Tests: These tests simulate user interactions with the application to ensure the entire system works as expected.

- Reporting: The CI system generates reports on the build and test results. These reports indicate whether the build was successful and if any tests failed.

- Notifications: Developers receive notifications about the build and test results, allowing them to address any issues promptly.
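Two of these steps, dependency management and reporting, map directly onto pipeline configuration. The sketch below assumes a Maven project built with GitLab CI: it caches downloaded dependencies between runs and publishes the JUnit XML reports so that test results appear in the pipeline view. The job name and image tag are illustrative assumptions.

```yaml
# .gitlab-ci.yml (sketch): cached dependencies plus published test reports.
# The job name and Maven image tag are illustrative assumptions.
stages:
  - test

test_job:
  stage: test
  image: maven:3.9-eclipse-temurin-17
  variables:
    # Keep the local Maven repository inside the project directory so it can be cached.
    MAVEN_OPTS: "-Dmaven.repo.local=$CI_PROJECT_DIR/.m2/repository"
  cache:
    key: "$CI_COMMIT_REF_SLUG"     # one cache per branch
    paths:
      - .m2/repository
  script:
    - mvn verify                   # compiles, then runs unit and integration tests
  artifacts:
    when: always                   # upload reports even when tests fail
    reports:
      junit:
        - target/surefire-reports/TEST-*.xml
        - target/failsafe-reports/TEST-*.xml
```

Uploading reports with `when: always` matters most on failing builds, since that is when developers need the per-test results.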

Integrating Code Quality Checks

Integrating code quality checks into the CI process helps maintain code quality and prevent technical debt from accumulating. Tools like SonarQube are commonly used for this purpose.

- SonarQube Integration: SonarQube analyzes the code for potential bugs, code smells, vulnerabilities, and code duplication. It provides metrics and reports on code quality.

- Configuration: Configure SonarQube to analyze the code during the CI process. This usually involves setting up a SonarQube server and configuring the CI pipeline to run a SonarQube analysis as part of the build process.

- Analysis Process: The CI pipeline runs SonarQube analysis after the code is built. SonarQube scans the code and generates a report with quality metrics.

- Failing Builds Based on Quality: Configure the CI pipeline to fail the build if the code quality does not meet certain thresholds. For example, the build can fail if the code has too many bugs, code smells, or vulnerabilities.

- Reporting and Remediation: SonarQube generates detailed reports on the code quality, highlighting the issues that need to be addressed. Developers can use these reports to identify and fix code quality problems.
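As a rough illustration of the steps above, a SonarQube analysis job in GitLab CI for a Maven project might look like the following. It assumes that `SONAR_HOST_URL` and `SONAR_TOKEN` are defined as CI/CD variables pointing at an existing SonarQube server, and the job name and image tag are illustrative.

```yaml
# .gitlab-ci.yml (sketch): SonarQube analysis that fails on a failed quality gate.
# Assumes SONAR_HOST_URL and SONAR_TOKEN are defined as CI/CD variables.
stages:
  - test

sonarqube_check:
  stage: test
  image: maven:3.9-eclipse-temurin-17
  variables:
    GIT_DEPTH: "0"     # fetch full history so issues can be attributed to commits
  script:
    # sonar.qualitygate.wait makes the scanner wait for the server-side result
    # and exit with an error when the quality gate is not passed.
    - mvn verify sonar:sonar -Dsonar.qualitygate.wait=true
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
```

The thresholds themselves (allowed bugs, coverage, duplication) are defined in the quality gate on the SonarQube server, not in the pipeline file.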

Creating a CI Pipeline Using GitLab CI

GitLab CI is a powerful and integrated CI/CD tool that is part of the GitLab platform. It uses a YAML file named `.gitlab-ci.yml` to define the CI/CD pipeline.

- `.gitlab-ci.yml` Configuration: The `.gitlab-ci.yml` file is placed in the root directory of the project repository. It defines the stages, jobs, and scripts that make up the CI pipeline.

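- Stages: Stages define the overall structure of the pipeline. Common stages include `build`, `test`, and `deploy`. Stages run sequentially in the order listed, while jobs within the same stage can run in parallel; by default, a stage starts only after every job in the previous stage has succeeded.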

- Jobs: Jobs define the individual tasks within each stage. Each job specifies the scripts to run, the environment to use (e.g., a specific Docker image), and any dependencies.

Example `.gitlab-ci.yml` structure:

    stages:
      - build
      - test
      - deploy

    build_job:
      stage: build
      image: maven:latest  # Example Docker image
      script:
        - mvn clean install  # Example Maven command
      artifacts:
        paths:
          - target/*.jar  # Example artifact path

    test_job:
      stage: test
      image: maven:3.9-eclipse-temurin-17  # Example image with Maven and JDK 17
      script:
        - mvn test  # Example Maven command

    deploy_job:
      stage: deploy
      image: docker:latest
      services:
        - docker:dind  # Docker-in-Docker
      script:
        # Example Docker login
        - docker login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
        # Example Docker build
        - docker build -t $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA .
        # Example Docker push
        - docker push $CI_REGISTRY_IMAGE:$CI_COMMIT_SHA

In this example:

- `stages` defines the order of execution (build, test, deploy).

- `build_job` uses the `maven:latest` Docker image, executes Maven commands to build the project, and stores the generated JAR file as an artifact.

- `test_job` uses a Maven image based on JDK 17 and runs the Maven tests. A plain JDK image such as `openjdk:17-jdk` would not work here, because it does not include the `mvn` command.

- `deploy_job` uses Docker to build and push a Docker image to a container registry (e.g., GitLab Container Registry).

- GitLab Runner: GitLab Runner is an application that executes the jobs defined in the `.gitlab-ci.yml` file. Runners can be configured to run on various platforms (e.g., Linux, Windows, macOS) and can use different executors (e.g., Docker, shell).

- Pipeline Execution: When a developer pushes code changes to the repository, GitLab automatically detects the `.gitlab-ci.yml` file and starts a new pipeline. The pipeline runs through the defined stages and jobs.

- Pipeline Visualization: GitLab provides a visual representation of the pipeline, showing the status of each stage and job. This makes it easy to monitor the progress of the CI process and identify any issues.

Implementing Continuous Delivery/Deployment

After successfully implementing Continuous Integration, the next crucial phase in your CI/CD pipeline is Continuous Delivery and/or Continuous Deployment. These practices automate the release process, ensuring that validated code changes are readily available for deployment to various environments. This section will detail the differences between Continuous Delivery and Continuous Deployment, outline the steps to automate microservice deployments, and demonstrate how to configure effective deployment strategies.

Distinguishing Continuous Delivery and Continuous Deployment

Understanding the distinctions between Continuous Delivery and Continuous Deployment is fundamental to choosing the right approach for your microservices. While both aim to automate the release process, they differ in their level of automation and the involvement of manual steps.

- Continuous Delivery: This practice focuses on automating the release process up to the point where the code is ready for deployment to production. The deployment to production is a manual step, often triggered by a human decision. Continuous Delivery ensures that code is always in a deployable state. This approach provides flexibility, allowing businesses to control when and how new features are released to end-users.

- Continuous Deployment: This practice takes automation a step further by automatically deploying every code change that passes all stages of the CI pipeline directly to production. There’s no manual intervention required for deployment. Continuous Deployment accelerates the release cycle, enabling faster feedback loops and quicker responses to market demands.
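In pipeline terms, the difference between the two practices can come down to a single setting. The GitLab CI sketch below shows a production job gated behind a manual action, which corresponds to Continuous Delivery. The `deploy.sh` script is a hypothetical placeholder for whatever performs the actual deployment.

```yaml
# .gitlab-ci.yml (sketch): Continuous Delivery with a manual production gate.
# deploy.sh is a hypothetical placeholder for the real deployment step.
stages:
  - deploy

deploy_production:
  stage: deploy
  environment: production
  script:
    - ./deploy.sh production
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
      when: manual      # a person triggers the release; remove this line
                        # to get Continuous Deployment instead
```

Without `when: manual`, the same job runs automatically for every change that passed the earlier stages, so the quality of the automated tests becomes the only gate before production.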

Automating Microservice Deployment to Environments

Automating the deployment of microservices across different environments (staging, production, etc.) is a critical step in achieving true CI/CD. This process ensures consistency, reduces errors, and streamlines the release cycle.

- Environment Configuration: Define and configure your target environments (e.g., staging, production). This includes setting up infrastructure (servers, databases, etc.) and configuring environment-specific variables. Tools like Terraform, Ansible, or cloud provider-specific services (e.g., AWS CloudFormation, Google Cloud Deployment Manager) are commonly used for infrastructure as code (IaC), allowing you to manage your infrastructure through code.

- Build and Package Microservices: During the CI phase, the microservices are built and packaged into deployable artifacts (e.g., Docker images, JAR files). These artifacts are then stored in a repository (e.g., Docker Hub, Nexus, Artifactory).

- Deployment Pipelines: Create deployment pipelines that automate the deployment process to each environment. These pipelines typically involve the following steps:

  - Pull Artifacts: Retrieve the packaged microservice artifact from the repository.

  - Environment-Specific Configuration: Apply environment-specific configurations (e.g., database connection strings, API keys). These configurations can be managed using configuration management tools or environment variables.

  - Deployment: Deploy the microservice artifact to the target environment. This can involve deploying containers to a container orchestration platform like Kubernetes, deploying JAR files to application servers, or deploying code to serverless platforms.

  - Testing: Run automated post-deployment tests (smoke tests, integration tests, end-to-end tests) to validate the deployment.

  - Monitoring and Logging: Configure monitoring and logging to track the health and performance of the deployed microservice.

- Deployment Automation Tools: Utilize tools like Jenkins, GitLab CI, CircleCI, or Azure DevOps to define and execute these deployment pipelines. These tools provide features for orchestrating the deployment process, managing dependencies, and providing visibility into the deployment status.

- Rollback Strategy: Define a rollback strategy in case of deployment failures. This may involve automatically reverting to a previous version of the microservice or using a blue/green deployment strategy.
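
The pipeline steps and the rollback strategy described above can be sketched in plain Java. This is a minimal illustration under stated assumptions, not the API of Jenkins, GitLab CI, or any other tool: `DeploymentPipeline`, `addStep`, and the step names are hypothetical, and each step is reduced to a function that reports success or failure.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;

// Hypothetical sketch: DeploymentPipeline and Step are illustrative names,
// not part of any CI/CD tool's API.
class DeploymentPipeline {

    /** One named pipeline step; the action returns true on success. */
    static final class Step {
        final String name;
        final BooleanSupplier action;

        Step(String name, BooleanSupplier action) {
            this.name = name;
            this.action = action;
        }
    }

    private final List<Step> steps = new ArrayList<>();
    private final List<String> log = new ArrayList<>();

    DeploymentPipeline addStep(String name, BooleanSupplier action) {
        steps.add(new Step(name, action));
        return this;
    }

    /**
     * Runs the steps in order. On the first failure it stops, invokes the
     * rollback action once, and returns false. Returns true if all steps pass.
     */
    boolean run(Runnable rollback) {
        for (Step step : steps) {
            if (!step.action.getAsBoolean()) {
                log.add("FAILED " + step.name);
                rollback.run();
                log.add("ROLLED BACK");
                return false;
            }
            log.add("OK " + step.name);
        }
        return true;
    }

    List<String> log() {
        return log;
    }

    public static void main(String[] args) {
        DeploymentPipeline pipeline = new DeploymentPipeline()
            .addStep("pull artifact", () -> true)
            .addStep("apply staging config", () -> true)
            .addStep("deploy", () -> true)
            .addStep("smoke tests", () -> false); // simulate a failing test stage

        boolean succeeded = pipeline.run(
            () -> System.out.println("reverting to previous version"));
        System.out.println("succeeded = " + succeeded);
        pipeline.log().forEach(System.out::println);
    }
}
```

Running `main` simulates a failing test stage: the rollback action runs once, later steps are skipped, and `run` returns `false`. A real pipeline would express the same ordering in the CI tool's own configuration.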

Configuring Deployment Strategies

Choosing the right deployment strategy is crucial for minimizing risk and ensuring a smooth transition to new versions of your microservices. Several strategies are available, each with its advantages and disadvantages.

- Blue/Green Deployments: This strategy involves maintaining two identical environments: “blue” (current production) and “green” (new version). When deploying a new version, the “green” environment is updated with the new code. After testing the “green” environment, traffic is switched from “blue” to “green”. If issues arise, traffic can be quickly switched back to the “blue” environment. This minimizes downtime and provides a quick rollback option.

For example, a team might run two Kubernetes Deployments, one labeled “blue” and the other “green.” After verifying the “green” Deployment, the selector of the Kubernetes Service in front of them is updated to match the “green” label, which switches traffic to the new version.

- Canary Releases: This strategy involves gradually rolling out a new version of a microservice to a small subset of users (the “canary” group) while the majority of users continue to use the current version. The canary group’s experience is closely monitored. If no issues are detected, the new version is gradually rolled out to a larger audience. This strategy minimizes the impact of potential issues and allows for early detection of problems.

A practical example is using a traffic management tool like Istio to direct 1% of traffic to a new version of a microservice. Based on the performance and error rates observed, the traffic can be increased or the release can be rolled back.

- Rolling Updates: This strategy involves replacing instances of a microservice incrementally, one or a few at a time. New instances are brought up with the new version, and old instances are taken down as the new ones become ready. This minimizes downtime, but both versions serve traffic during the update, so they must be compatible with each other. Kubernetes supports rolling updates natively.

- Immutable Infrastructure: This approach emphasizes building and deploying new instances of infrastructure (e.g., containers) rather than updating existing ones. When a new version of a microservice is released, new instances are created with the new version, and old instances are terminated. This ensures consistency and simplifies rollback. Tools like Docker and Kubernetes are well-suited for implementing immutable infrastructure.
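
To make the canary idea concrete, here is a small in-process sketch of how a fixed percentage of users could be routed to a new version and how the next traffic weight might be chosen from observed error rates. It is a stand-in for what a traffic management tool does, not Istio or Kubernetes configuration: `CanaryRouter`, `bucketOf`, and `nextPercent` are hypothetical names, and doubling the weight on each healthy step is an assumed policy.

```java
// Hypothetical sketch: CanaryRouter is an illustrative name, not an Istio or Kubernetes API.
class CanaryRouter {
    private final int canaryPercent; // share of users sent to the canary, 0..100

    CanaryRouter(int canaryPercent) {
        if (canaryPercent < 0 || canaryPercent > 100) {
            throw new IllegalArgumentException("canaryPercent must be between 0 and 100");
        }
        this.canaryPercent = canaryPercent;
    }

    /** Maps a user to a stable bucket in [0, 100) so the same user always gets the same version. */
    static int bucketOf(String userId) {
        return Math.floorMod(userId.hashCode(), 100);
    }

    String versionFor(String userId) {
        return bucketOf(userId) < canaryPercent ? "canary" : "stable";
    }

    /**
     * Assumed promotion policy: drop to 0 (roll back) when the canary's error rate
     * exceeds the allowed rate, otherwise double the weight, capped at 100.
     */
    static int nextPercent(int currentPercent, double canaryErrorRate, double maxErrorRate) {
        if (canaryErrorRate > maxErrorRate) {
            return 0;
        }
        return Math.min(100, currentPercent * 2);
    }

    public static void main(String[] args) {
        CanaryRouter router = new CanaryRouter(1); // 1% canary, as in the example above
        int canaryUsers = 0;
        for (int i = 0; i < 10_000; i++) {
            if (router.versionFor("user-" + i).equals("canary")) {
                canaryUsers++;
            }
        }
        System.out.println("canary users out of 10000: " + canaryUsers);
        System.out.println("next weight if healthy: " + nextPercent(1, 0.001, 0.01));
        System.out.println("next weight if failing: " + nextPercent(1, 0.20, 0.01));
    }
}
```

Hashing the user ID keeps each user on one version for the whole rollout, which makes their experience easier to monitor. Per-request random weighting is an equally valid choice when user stickiness does not matter.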

Testing Strategies for Microservices

Testing is a critical component of a successful CI/CD pipeline for microservices. Rigorous testing ensures that each microservice functions as expected, integrates correctly with other services, and meets the overall application requirements. A well-defined testing strategy reduces the risk of bugs and deployment failures, and increases confidence in each release.

Different Types of Testing Suitable for Microservices

Microservices architecture necessitates a multi-faceted testing approach to ensure the reliability and functionality of the entire system. This involves a variety of testing types, each with a specific focus and scope. The following are some key testing strategies commonly employed in microservices environments:

- Unit Testing: Unit tests are the foundation of any testing strategy. They focus on testing individual components or units of code in isolation. The primary goal is to verify that each unit functions correctly according to its specifications. These tests are typically fast to execute and provide immediate feedback on code changes.

- Integration Testing: Integration tests verify the interactions between different components or microservices. They ensure that services communicate and exchange data as intended. This testing type often involves testing the APIs and data flow between services.

- End-to-End (E2E) Testing: E2E tests simulate user interactions with the entire application, from the front-end to the back-end services. They validate the complete workflow and ensure that all components work together seamlessly. These tests are the most comprehensive but also the slowest to execute.

- Contract Testing: Contract tests verify that the communication between a service consumer and a service provider adheres to a defined contract. This ensures that changes in one service do not break the functionality of dependent services.

- Performance Testing: Performance tests assess the speed, stability, and scalability of microservices under various load conditions. They help identify performance bottlenecks and ensure that the system can handle the expected traffic.

- Security Testing: Security tests focus on identifying vulnerabilities and ensuring that microservices are protected against common security threats. This includes testing for authentication, authorization, and data protection.
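
Of the test types above, contract testing is the one that is hardest to picture without code. The sketch below shows the core idea only: the consumer declares which fields it needs and of which type, and the check reports where a provider response falls short. `ContractCheck`, the field names, and the map-based response are assumptions made for illustration. Dedicated contract-testing tools work against real HTTP or message payloads.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: a consumer-side contract reduced to "field name -> expected type".
class ContractCheck {

    /** Returns one entry per contract field that is missing or has the wrong type, sorted by field name. */
    static List<String> violations(Map<String, Class<?>> contract, Map<String, Object> response) {
        Map<String, Class<?>> sorted = new TreeMap<>(contract); // deterministic order
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, Class<?>> field : sorted.entrySet()) {
            Object value = response.get(field.getKey());
            if (value == null) {
                problems.add("missing: " + field.getKey());
            } else if (!field.getValue().isInstance(value)) {
                problems.add("wrong type: " + field.getKey());
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        Map<String, Class<?>> contract = Map.of("id", Integer.class, "email", String.class);

        // Extra fields are allowed: the consumer only cares about what it uses.
        Map<String, Object> compatible = Map.of("id", 7, "email", "a@example.com", "extra", true);
        System.out.println(violations(contract, compatible));

        // A provider change that renames "email" and turns "id" into a string breaks the contract.
        Map<String, Object> breaking = Map.of("id", "7", "mail", "a@example.com");
        System.out.println(violations(contract, breaking));
    }
}
```

Run in the provider's pipeline, a check like this fails the build before an incompatible change reaches consumers.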

Writing Effective Unit Tests for a Microservice

Unit tests are crucial for verifying the functionality of individual components within a microservice. Well-written unit tests are concise, focused, and easy to understand. They should cover a wide range of scenarios, including positive and negative test cases, to ensure the robustness of the code.

Here’s how to write effective unit tests:

- Isolate the Unit: Unit tests should focus on testing a single unit of code in isolation. This means mocking or stubbing any dependencies, such as external services or databases, to control the test environment.

- Arrange, Act, Assert: Follow the “Arrange, Act, Assert” pattern for structuring unit tests.

  - Arrange: Set up the test environment, including any necessary data or objects.

  - Act: Execute the code being tested.

  - Assert: Verify the results of the execution against the expected outcomes.

- Test for all Scenarios: Write tests for both positive and negative scenarios. Positive tests verify that the code works as expected under normal conditions. Negative tests check how the code handles invalid inputs or unexpected situations.

- Keep Tests Simple: Unit tests should be easy to read and understand. Avoid complex logic or excessive setup.

- Use a Testing Framework: Utilize a testing framework, such as JUnit (for Java), pytest (for Python), or Jest (for JavaScript), to streamline the testing process. These frameworks provide tools for writing, running, and reporting on tests.

Example (Java with JUnit):

Consider a simple microservice that calculates the sum of two numbers. A unit test for this service might look like this:

```java
import org.junit.jupiter.api.Test;

import static org.junit.jupiter.api.Assertions.assertEquals;

public class CalculatorTest {

    @Test
    public void testAdd() {
        // Arrange
        Calculator calculator = new Calculator();
        // Act
        int result = calculator.add(2, 3);
        // Assert
        assertEquals(5, result, "The sum should be 5");
    }

    @Test
    public void testAddNegativeNumbers() {
        Calculator calculator = new Calculator();
        int result = calculator.add(-2, -3);
        assertEquals(-5, result, "The sum should be -5");
    }
}
```
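
The "Isolate the Unit" guideline is easier to see with a dependency in play. The following sketch replaces a remote pricing service with a hand-written stub so the test exercises only the unit's own logic. `PriceClient`, `CheckoutService`, and the prices are hypothetical, and plain checks are used instead of JUnit so the example runs on its own.

```java
// Hypothetical sketch: CheckoutService depends on a remote pricing service through PriceClient.
class CheckoutServiceCheck {
    public static void main(String[] args) {
        // Arrange: stub the remote dependency with a fixed price of 250 cents.
        PriceClient stub = sku -> 250;
        CheckoutService service = new CheckoutService(stub);

        // Act
        int total = service.total("sku-1", 3);

        // Assert (positive case)
        if (total != 750) {
            throw new AssertionError("expected 750 but got " + total);
        }

        // Negative case: an invalid quantity must be rejected.
        try {
            service.total("sku-1", 0);
            throw new AssertionError("expected IllegalArgumentException");
        } catch (IllegalArgumentException expected) {
            System.out.println("all checks passed");
        }
    }
}

/** The unit's only dependency; in production this would call another service. */
interface PriceClient {
    int priceInCents(String sku);
}

class CheckoutService {
    private final PriceClient prices;

    CheckoutService(PriceClient prices) {
        this.prices = prices;
    }

    int total(String sku, int quantity) {
        if (quantity <= 0) {
            throw new IllegalArgumentException("quantity must be positive");
        }
        return prices.priceInCents(sku) * quantity;
    }
}
```

Because the dependency is passed in through the constructor, the test needs no network and no mocking library. A mocking framework becomes useful once the stubbed behavior gets more elaborate.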

Incorporating Integration Tests into the CI/CD Pipeline

Integration tests are essential for validating the interactions between different microservices and components. They ensure that services communicate correctly and that data flows as expected across the system. Integration tests should be automated and incorporated into the CI/CD pipeline to provide feedback on code changes early in the development process.

Here’s how to incorporate integration tests into the CI/CD pipeline:

- Automate Integration Tests: Write automated integration tests using tools like Postman or specialized testing frameworks. These tests should be designed to run automatically as part of the CI/CD pipeline.

- Define Test Environments: Set up dedicated test environments that closely resemble the production environment. This ensures that the tests are run in a realistic setting. Consider using containerization technologies like Docker to create consistent and reproducible test environments.

- Integrate with CI/CD Tools: Integrate the integration tests into the CI/CD pipeline using tools such as Jenkins, GitLab CI, or CircleCI. Configure the pipeline to run the integration tests automatically after code changes are merged.

- Monitor and Analyze Results: Monitor the results of the integration tests and analyze any failures. Use the test results to identify and fix issues early in the development process. Implement a reporting mechanism to provide visibility into test results.

- Use Service Virtualization/Mocking: When necessary, use service virtualization or mocking to simulate dependencies, such as external APIs or databases. This allows integration tests to be run without relying on the availability of external services.

Example of Integration Test within a CI/CD Pipeline:

Assume a microservice (Service A) calls another microservice (Service B) to retrieve data. An integration test within the CI/CD pipeline would:

- Deploy both Service A and Service B to a test environment.

- Use a tool like Postman or a testing library to send a request to Service A.

- Verify that Service A successfully calls Service B.

- Assert that Service A receives the correct data from Service B.

- Report the test results (pass/fail) back to the CI/CD pipeline.
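
The scenario above can be approximated in a single Java file using only the JDK (Java 11 or later). This is a sketch under simplifying assumptions: Service B is replaced by a local stand-in started with the JDK's built-in `HttpServer`, Service A is reduced to the hypothetical `greet` method, and the `/user` endpoint and its response are invented for the example. In a real pipeline both services would be deployed to the test environment instead.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: Service A calls Service B over HTTP and the test checks the combined result.
class ServiceIntegrationCheck {

    /** Stand-in for Service A: fetches a user name from Service B and builds a greeting. */
    static String greet(String serviceBBaseUrl) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(serviceBBaseUrl + "/user")).GET().build();
        HttpResponse<String> response =
            HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("Service B returned " + response.statusCode());
        }
        return "Hello, " + response.body();
    }

    /** Stand-in for Service B: answers GET /user with a fixed name on an ephemeral local port. */
    static HttpServer startServiceB(String name) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/user", exchange -> {
            byte[] body = name.getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    /** Runs the scenario end to end and returns what Service A produced. */
    static String runScenario() {
        try {
            HttpServer serviceB = startServiceB("Ada");
            try {
                return greet("http://127.0.0.1:" + serviceB.getAddress().getPort());
            } finally {
                serviceB.stop(0);
            }
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException("integration scenario failed", e);
        }
    }

    public static void main(String[] args) {
        String result = runScenario();
        if (!"Hello, Ada".equals(result)) {
            throw new AssertionError("unexpected result: " + result);
        }
        System.out.println("PASS: " + result);
    }
}
```

An uncaught exception makes the JVM exit with a non-zero status, which is how most CI tools detect that a test step has failed.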

Monitoring and Logging

In a microservices architecture, the distributed nature of the system introduces significant complexity. Monitoring and logging become crucial for maintaining system health, identifying issues, and ensuring optimal performance. Effective monitoring and logging practices enable rapid detection of problems, facilitate root cause analysis, and provide valuable insights into the behavior of individual services and the overall system.

Importance of Monitoring and Logging in Microservices

Microservices architectures, by their distributed nature, demand robust monitoring and logging strategies. The independent deployment and scaling of services, coupled with inter-service communication, can lead to intricate interactions that are difficult to manage without proper tooling.

- Real-time Visibility: Monitoring tools provide real-time insights into the performance and health of each microservice, enabling immediate detection of issues like latency spikes, error rates, or resource exhaustion.

- Proactive Issue Identification: Logging provides detailed information about service behavior, allowing for the identification of patterns and anomalies that may indicate potential problems before they impact users. This proactive approach can prevent outages and performance degradation.

- Faster Troubleshooting: When issues arise, comprehensive logging and monitoring data facilitate rapid root cause analysis. By correlating logs and metrics across services, developers can quickly pinpoint the source of the problem and implement effective solutions.

- Performance Optimization: Monitoring provides valuable data for performance optimization. By analyzing metrics such as response times, throughput, and resource utilization, developers can identify bottlenecks and improve the efficiency of individual services and the overall system.

- Capacity Planning: Monitoring data can be used to understand resource consumption patterns and predict future capacity needs. This allows for proactive scaling of services to ensure optimal performance under varying loads.

Examples of Monitoring Tools

Several tools are available for monitoring microservices, each offering different features and capabilities. The choice of tool often depends on the specific requirements of the project, the existing infrastructure, and the team’s preferences.

- Prometheus: Prometheus is a popular open-source monitoring system that excels at collecting and storing time-series data. It uses a pull-based model to scrape metrics from configured targets. Prometheus is well-suited for monitoring the health and performance of microservices. It offers a flexible query language (PromQL) for analyzing metrics and creating custom dashboards. Its integration with Grafana makes it a powerful monitoring solution.

For example, a Prometheus setup might monitor the number of requests to a specific service, the average response time, and the CPU utilization of the service’s instances.

- Grafana: Grafana is a powerful data visualization and dashboarding tool that can be used to visualize data from various sources, including Prometheus, Elasticsearch, and InfluxDB. It allows users to create interactive dashboards that display real-time metrics, logs, and alerts. Grafana is often used in conjunction with Prometheus to provide a comprehensive monitoring solution. It allows the creation of visually rich dashboards to display key performance indicators (KPIs) such as error rates, latency, and throughput.

- Jaeger/Zipkin: These are distributed tracing systems. They are crucial for understanding the flow of requests across multiple microservices. By tracing requests, developers can identify performance bottlenecks and understand the dependencies between services. They visualize request paths and the time spent in each service, which aids in pinpointing performance issues within complex service interactions.

- Datadog: Datadog is a commercial monitoring and analytics platform that provides comprehensive monitoring capabilities for infrastructure, applications, and logs. It offers integrations with a wide range of technologies and provides features such as real-time dashboards, alerting, and anomaly detection. Datadog is a good option for teams that need a fully managed monitoring solution.

- New Relic: Similar to Datadog, New Relic is another commercial platform that provides monitoring, logging, and application performance management (APM) capabilities. It offers features like real-time dashboards, transaction tracing, and error analysis. New Relic is a comprehensive solution for monitoring the entire application stack.

Examples of Logging Tools

Effective logging is critical for understanding the behavior of microservices and troubleshooting issues. Log management tools help collect, store, and analyze logs from various sources.

- ELK Stack (Elasticsearch, Logstash, Kibana): The ELK Stack (now known as the Elastic Stack) is a popular open-source log management solution. Elasticsearch is a distributed search and analytics engine used for storing and indexing logs. Logstash is a data processing pipeline that collects, parses, and transforms logs. Kibana is a data visualization and dashboarding tool that allows users to explore and analyze logs stored in Elasticsearch.

The ELK stack is widely used for centralized log management and analysis. For instance, Logstash can parse log entries from various microservices, extract relevant information (e.g., timestamps, service names, error codes), and send the structured data to Elasticsearch for indexing. Kibana can then be used to search and visualize these logs, enabling developers to quickly identify patterns and troubleshoot issues.

- Splunk: Splunk is a commercial log management and analytics platform that provides similar functionality to the ELK Stack. It offers powerful search and analysis capabilities, as well as features such as alerting and reporting. Splunk is a good option for organizations that need a scalable and feature-rich log management solution.

- Graylog: Graylog is an open-source log management platform that offers a user-friendly interface for collecting, processing, and analyzing logs. It provides features such as log aggregation, search, and alerting. Graylog is a good alternative to the ELK Stack, particularly for smaller teams or organizations.
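
Whichever log platform is chosen, parsing becomes simpler when each service emits structured lines with consistent fields such as timestamp, service name, and level. The following is a minimal sketch of a JSON log line built by hand. `JsonLogLine` and the field names are assumptions, and the escaping covers only backslashes, double quotes, and newlines, so a real service would normally rely on a logging library with a JSON encoder.

```java
import java.time.Instant;

// Hypothetical sketch: one JSON object per log line, with a fixed set of fields.
class JsonLogLine {

    /** Escapes only the characters this sketch handles: backslash, double quote, newline. */
    static String escape(String text) {
        return text.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    static String format(Instant timestamp, String service, String level, String message) {
        return "{\"timestamp\":\"" + timestamp + "\""
            + ",\"service\":\"" + escape(service) + "\""
            + ",\"level\":\"" + escape(level) + "\""
            + ",\"message\":\"" + escape(message) + "\"}";
    }

    public static void main(String[] args) {
        System.out.println(format(Instant.now(), "orders", "ERROR", "payment \"declined\" for order 42"));
    }
}
```

With every service using the same field names, a central log store can filter by `service` or `level` without per-service parsing rules.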

Integrating Monitoring and Logging into the CI/CD Pipeline

Integrating monitoring and logging into the CI/CD pipeline ensures that monitoring and logging configurations are deployed along with the application code. This integration allows for real-time feedback on the performance and health of the deployed services.

- Configuration Management: Monitoring and logging configurations should be treated as code and managed within the version control system alongside the application code. This ensures that the configurations are tracked, versioned, and deployed consistently across all environments. Tools like Terraform or Ansible can be used to manage the infrastructure and deploy the monitoring and logging configurations.

- Automated Deployment: During the CI/CD process, the monitoring and logging configurations should be automatically deployed to the target environment. This ensures that the necessary monitoring and logging infrastructure is available when the application code is deployed.

- Health Checks and Status Pages: Integrate health checks into the CI/CD pipeline. These checks verify the health and availability of the deployed microservices. Status pages can provide a real-time overview of the health of all services, which is valuable for operations teams and stakeholders.

- Automated Testing: Incorporate monitoring and logging into automated tests. This includes testing that logs are being generated correctly, that metrics are being collected, and that alerts are being triggered under the expected conditions. For instance, integration tests could verify that specific events generate the correct log entries and that the corresponding metrics are updated as expected.

- Real-time Feedback Loops: The CI/CD pipeline should provide real-time feedback on the performance and health of the deployed services. This can be achieved by integrating the CI/CD pipeline with the monitoring and logging tools. For example, if the monitoring system detects an error, the CI/CD pipeline can be automatically stopped or rolled back.

- Alerting and Notifications: Configure alerts based on the metrics and logs collected. These alerts should notify the relevant teams when critical issues are detected, enabling rapid response and resolution. Integrate these alerts with communication channels like Slack or email to ensure timely notifications.
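
A health check gate of the kind described above can be sketched as a small polling loop. This is an illustration, not a feature of any particular CI tool: `HealthGate`, the probe, and the "N successes in a row" rule are assumptions, and the probe would typically wrap an HTTP call to the service's health endpoint, with a pause between attempts.

```java
import java.util.function.BooleanSupplier;

// Hypothetical sketch: decide after a deployment whether the service is healthy enough to keep.
class HealthGate {

    /**
     * Polls the probe up to maxAttempts times and reports healthy as soon as it
     * has succeeded requiredSuccesses times in a row. A real pipeline would
     * sleep between attempts; that is omitted to keep the sketch deterministic.
     */
    static boolean isHealthy(BooleanSupplier probe, int maxAttempts, int requiredSuccesses) {
        int streak = 0;
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            streak = probe.getAsBoolean() ? streak + 1 : 0;
            if (streak >= requiredSuccesses) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated service that fails its first probe while starting up, then recovers.
        boolean healthy = isHealthy(() -> ++calls[0] >= 2, 5, 3);
        System.out.println(healthy ? "keep deployment" : "trigger rollback");
    }
}
```

Requiring several consecutive successes avoids promoting a service that answers once and then crashes, at the cost of a slightly longer pipeline run.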

Security Considerations

Implementing a robust CI/CD pipeline for microservices requires a proactive approach to security. This is crucial because the speed and automation of CI/CD can inadvertently introduce vulnerabilities if security is not integrated from the outset. Failing to address security concerns throughout the pipeline can lead to severe consequences, including data breaches, service disruptions, and reputational damage. Therefore, security must be a core tenet of your CI/CD strategy, ensuring that every stage, from code commit to deployment, incorporates security best practices.

Security Best Practices in CI/CD Pipelines

Integrating security best practices within a CI/CD pipeline for microservices involves several key areas, which are essential for safeguarding the entire software development lifecycle.

- Secure Code Development: Enforce secure coding standards and conduct regular code reviews to identify and eliminate vulnerabilities early in the development process. Developers should be trained in secure coding practices to minimize the introduction of vulnerabilities.

- Secrets Management: Securely manage sensitive information such as API keys, passwords, and database credentials. Utilize secrets management tools like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault to store and retrieve secrets, avoiding hardcoding them in the code or configuration files.

- Dependency Management: Regularly scan and update dependencies to mitigate risks associated with known vulnerabilities in third-party libraries and frameworks. Use tools like Snyk, Dependabot, or OWASP Dependency-Check to automate dependency scanning and update processes.

- Infrastructure as Code (IaC) Security: Securely manage infrastructure configurations. Implement security best practices when defining infrastructure configurations using tools like Terraform, CloudFormation, or Ansible. Ensure that IaC templates are scanned for security vulnerabilities before deployment.

- Container Security: Securely build and manage container images by implementing practices like image scanning and vulnerability assessments. Use tools like Trivy or Clair to scan container images for known vulnerabilities, and Docker Bench for Security to check the Docker host and daemon configuration against common best practices.

- Network Security: Implement network security measures, such as firewalls, intrusion detection systems, and micro-segmentation, to protect microservices from unauthorized access and attacks. Configure network policies to restrict communication between microservices and limit the attack surface.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration tests to identify and address vulnerabilities in the CI/CD pipeline and microservices. Penetration testing should simulate real-world attacks to evaluate the effectiveness of security controls.

- Compliance and Governance: Ensure compliance with relevant industry standards and regulations. Establish clear security policies and procedures and enforce them throughout the CI/CD pipeline. Regularly review and update security policies to address evolving threats and regulatory requirements.

Integrating Security Scanning Tools

Integrating security scanning tools into the CI/CD pipeline automates security checks, detects vulnerabilities early, and reduces the risk of deploying insecure code. Several tools can be integrated at various stages of the pipeline.

- Static Application Security Testing (SAST): SAST tools, such as SonarQube, analyze source code for vulnerabilities, coding errors, and security flaws during the build process. Integrate SAST tools into the CI stage to scan code after each commit.

- Dynamic Application Security Testing (DAST): DAST tools, such as OWASP ZAP, scan running applications for vulnerabilities by simulating attacks. Integrate DAST tools into the testing or staging environments after deployment.

- Software Composition Analysis (SCA): SCA tools, such as Snyk and OWASP Dependency-Check, analyze dependencies for known vulnerabilities. Integrate SCA tools into the build stage to scan dependencies and alert on any security risks.

- Container Image Scanning: Tools like Trivy, Clair, and Anchore scan container images for vulnerabilities and misconfigurations. Integrate container image scanning into the build or deployment stages to ensure that only secure images are deployed.

- Infrastructure as Code (IaC) Scanning: Tools like tfsec and Checkov scan IaC templates (e.g., Terraform, CloudFormation) for security vulnerabilities and misconfigurations. Integrate IaC scanning into the IaC build and deployment stages to prevent insecure infrastructure configurations.

- Example: Snyk Integration: Snyk is a popular tool for SCA and container image scanning. Integrating Snyk into a CI/CD pipeline involves the following steps:

  - Authentication: Authenticate with Snyk by providing an API token.

  - Build Stage Integration: Configure Snyk to run as part of the build process. This often involves adding a Snyk CLI command to the build script.

  - Scan and Report: Snyk scans the project for vulnerabilities and generates a report. The report is then analyzed to determine whether the build should continue.

  - Automated Remediation: Snyk provides automated fixes for many vulnerabilities, which can be integrated into the CI/CD pipeline.

- Example: OWASP ZAP Integration: OWASP ZAP is a widely used DAST tool. Integrating ZAP into a CI/CD pipeline typically involves the following steps:

  - Deployment of Application: Deploy the application to a testing or staging environment.

  - ZAP Configuration: Configure ZAP to scan the deployed application. This can involve setting up a proxy and specifying the target URL.

  - Automated Scan: Automate ZAP scans using the ZAP API or command-line interface.

  - Report Analysis: Analyze the ZAP scan report to identify vulnerabilities. The report can be integrated into the CI/CD pipeline for continuous monitoring.
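
Whatever scanner produces the report, the pipeline still has to decide whether the build may continue. The sketch below shows one possible gate: fail when any finding is at or above a chosen severity. `ScanGate`, `Finding`, the severity scale, and the `EXAMPLE-` identifiers are hypothetical and do not reflect the report format of Snyk, ZAP, or any other tool.

```java
import java.util.List;

// Hypothetical sketch: turn a list of scanner findings into a pass/fail decision.
class ScanGate {

    /** Declared from least to most severe so that enum ordering can be compared. */
    enum Severity { LOW, MEDIUM, HIGH, CRITICAL }

    static final class Finding {
        final String id;
        final Severity severity;

        Finding(String id, Severity severity) {
            this.id = id;
            this.severity = severity;
        }
    }

    /** The build should fail when any finding is at or above the threshold. */
    static boolean shouldFailBuild(List<Finding> findings, Severity threshold) {
        return findings.stream().anyMatch(f -> f.severity.compareTo(threshold) >= 0);
    }

    public static void main(String[] args) {
        List<Finding> findings = List.of(
            new Finding("EXAMPLE-001", Severity.LOW),
            new Finding("EXAMPLE-002", Severity.HIGH));

        boolean blocked = shouldFailBuild(findings, Severity.HIGH);
        // A real pipeline step would exit with a non-zero status here to stop the build.
        System.out.println(blocked ? "build blocked" : "build may continue");
    }
}
```

Starting with a strict threshold such as `CRITICAL` and lowering it over time is a common way to introduce a gate without blocking every build on day one.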

Common Security Vulnerabilities and Mitigation Strategies

The following table outlines common security vulnerabilities in CI/CD pipelines for microservices and their corresponding mitigation strategies:

| Vulnerability | Description | Impact | Mitigation Strategy |
|---|---|---|---|
| Unsecured Secrets Management | Sensitive information (API keys, passwords, etc.) stored insecurely. | Unauthorized access to sensitive data, system compromise. | Use secrets management tools (HashiCorp Vault, AWS Secrets Manager), encrypt secrets, restrict access. |
| Insecure Dependencies | Use of outdated or vulnerable third-party libraries. | Exploitation of known vulnerabilities, data breaches. | Regularly scan and update dependencies (Snyk, Dependabot), enforce dependency policies. |
| Lack of Input Validation | Failure to validate user input, allowing malicious code injection. | Code injection (SQLi, XSS), data corruption, system compromise. | Implement input validation, sanitize user input, use parameterized queries. |
| Insufficient Access Controls | Inadequate permissions and access controls in the CI/CD pipeline. | Unauthorized access to the pipeline, code tampering, system compromise. | Implement role-based access control (RBAC), enforce least privilege, regularly review access permissions. |
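
As a small illustration of the first row, the sketch below reads a secret from the process environment instead of hardcoding it, fails fast when it is missing, and masks it before logging. `SecretResolver` and the `DB_PASSWORD` variable name are assumptions. In practice the environment would be populated by the pipeline or a secrets manager, and the sample value here exists only so the example is deterministic.

```java
import java.util.Map;

// Hypothetical sketch: resolve secrets from the environment rather than from source code.
class SecretResolver {

    /** Returns the named secret or fails fast with a message that does not leak any value. */
    static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException("missing required secret: " + name);
        }
        return value;
    }

    /** Shows at most the last four characters so logs never contain the full secret. */
    static String masked(String secret) {
        return secret.length() <= 4 ? "****" : "****" + secret.substring(secret.length() - 4);
    }

    public static void main(String[] args) {
        // In a real service this map would be System.getenv(); a fixed map keeps the sketch deterministic.
        Map<String, String> env = Map.of("DB_PASSWORD", "s3cr3t-value");
        String password = require(env, "DB_PASSWORD");
        System.out.println("DB_PASSWORD loaded: " + masked(password));
    }
}
```

Failing at startup when a secret is absent surfaces misconfigured environments during deployment rather than on the first database call.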

Concluding Remarks

In conclusion, establishing a robust CI/CD pipeline is paramount for harnessing the full potential of microservices. By integrating the principles and practices outlined in this guide, you can empower your development teams to release software faster, reduce errors, and improve overall application quality. From selecting the appropriate tools to implementing automated testing and security measures, the journey toward CI/CD for microservices is a transformative one.

Embrace the practices, and watch your software delivery process evolve into a well-oiled machine, ready to adapt to the ever-changing demands of the digital world.

FAQ Corner

What is the difference between Continuous Delivery and Continuous Deployment?

Continuous Delivery ensures that code changes are automatically built, tested, and prepared for release, so that a production release can be triggered at any time, usually through a manual approval step. Continuous Deployment goes a step further by automatically deploying every change that passes testing to production, without manual intervention.

Which CI/CD platform is best for microservices?

The best platform depends on your specific needs. Jenkins offers flexibility and extensive plugin support. GitLab CI and GitHub Actions integrate seamlessly with their respective version control systems. CircleCI is known for its speed and ease of use. Consider factors like project size, team expertise, and budget when making your decision.

How often should I run tests in my CI/CD pipeline?

Tests should be run frequently, ideally with every code change. Automated tests, including unit, integration, and end-to-end tests, should be executed as part of your CI process to catch issues early and prevent them from reaching production.

What is the role of monitoring and logging in a microservices CI/CD pipeline?

Monitoring and logging are essential for gaining real-time insights into the health and performance of your microservices. They enable you to identify and resolve issues quickly, ensuring a stable and reliable application. Integration into the CI/CD pipeline allows for immediate feedback on deployments and changes.