Understanding how to plan for network connectivity to the cloud is critical in today’s digital landscape, where efficient and secure data transfer is paramount. The transition to cloud-based infrastructure demands a thorough understanding of network fundamentals, architecture, and security protocols. This guide provides a comprehensive overview, ensuring a well-informed approach to establishing and maintaining optimal cloud connectivity.

The complexity of cloud networking stems from its multifaceted nature, encompassing various network models (public, private, hybrid), protocols (TCP/IP, UDP), and security measures. This exploration delves into these elements, guiding the reader through assessing network requirements, selecting appropriate cloud providers, designing resilient architectures, and implementing robust security practices. Furthermore, the importance of cost optimization, disaster recovery planning, and continuous monitoring is examined to ensure operational efficiency and business continuity.

Understanding Cloud Network Connectivity Fundamentals

Cloud network connectivity is the cornerstone of cloud computing, enabling access to cloud resources and services. Understanding the fundamentals is crucial for effective cloud adoption, optimization, and security. This involves grasping the core concepts, differentiating between various network models, and recognizing the protocols that facilitate communication.

Core Concepts of Cloud Network Connectivity

Cloud network connectivity hinges on several fundamental concepts that govern how data travels between on-premises networks, the cloud provider’s infrastructure, and the internet. These concepts dictate performance, security, and the overall user experience.

- Virtual Networks: Cloud providers create virtual networks, which are logically isolated networks within the cloud infrastructure. These virtual networks function similarly to traditional networks, allowing users to define IP address ranges, subnets, and routing rules. This isolation is critical for security and allows for customized network configurations. For example, Amazon Web Services (AWS) uses Virtual Private Clouds (VPCs) to provide isolated networks.

- Subnets: Within a virtual network, subnets are created to segment the network into smaller, manageable parts. Subnets are defined by an IP address range and a subnet mask. This segmentation improves network organization, security, and performance. Different subnets can be used for different purposes, such as public-facing web servers, private database servers, or management interfaces.

- Routing: Routing is the process of directing network traffic between different networks or subnets. Routing tables define the paths that data packets take to reach their destination. Cloud providers offer various routing options, including static routing (manually configured routes) and dynamic routing (routes automatically learned through routing protocols).

- Firewalls: Cloud providers offer firewalls to control network traffic and enforce security policies. Firewalls filter traffic based on predefined rules, such as source and destination IP addresses, ports, and protocols. This helps to protect cloud resources from unauthorized access and malicious attacks. Cloud firewalls often offer advanced features like intrusion detection and prevention systems.

- Load Balancing: Load balancing distributes network traffic across multiple servers to improve performance, availability, and scalability. Load balancers can distribute traffic based on various algorithms, such as round robin, least connections, or weighted response times. This ensures that no single server is overwhelmed and that applications remain responsive even during peak traffic periods.

- Network Address Translation (NAT): NAT allows multiple instances within a private subnet to share a single public IP address. This conserves public IP addresses and enhances security by hiding the internal IP addresses of instances. Cloud providers offer NAT gateways or NAT instances for this purpose.
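To make the relationship between a virtual network and its subnets concrete, the following minimal sketch uses Python's standard `ipaddress` module to carve one address range into smaller subnets. The `10.0.0.0/16` range, the tier names, and the helper `subnet_for` are illustrative assumptions, not values prescribed by any provider.

```python
import ipaddress
from typing import Optional

# Hypothetical address space for one virtual network (an RFC 1918 private range).
vnet = ipaddress.ip_network("10.0.0.0/16")

# Split the /16 into /24 subnets and assign the first three to example tiers.
subnets = list(vnet.subnets(new_prefix=24))
plan = {
    "public-web": subnets[0],
    "private-app": subnets[1],
    "private-db": subnets[2],
}

def subnet_for(address: str) -> Optional[str]:
    """Return the name of the planned subnet that contains the address, if any."""
    ip = ipaddress.ip_address(address)
    for name, net in plan.items():
        if ip in net:
            return name
    return None

for name, net in plan.items():
    print(f"{name}: {net} ({net.num_addresses} addresses)")
```

Note that providers typically reserve a few addresses in every subnet, so the usable count is slightly lower than `num_addresses` suggests.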

Differences Between Public, Private, and Hybrid Cloud Network Models

The choice of cloud network model significantly impacts security, cost, and control. Each model offers distinct advantages and disadvantages depending on the specific needs of an organization.

- Public Cloud: In the public cloud model, resources are provisioned and managed by a third-party cloud provider (e.g., AWS, Microsoft Azure, Google Cloud Platform). The network infrastructure is shared among multiple tenants.

  - Advantages: Cost-effective (pay-as-you-go), highly scalable, readily available resources, and minimal upfront investment.

  - Disadvantages: Limited control over the underlying infrastructure, potential security concerns (shared environment), and dependence on the provider’s availability.

  - Example: A small startup might use a public cloud to host its website and applications, leveraging its scalability and cost-effectiveness.

- Private Cloud: In the private cloud model, resources are dedicated to a single organization. The network infrastructure can be located on-premises or hosted by a third-party provider, but it is not shared with other tenants.

  - Advantages: Enhanced security (dedicated infrastructure), greater control over the environment, and the ability to customize the network to meet specific needs.

  - Disadvantages: Higher costs (due to dedicated resources), more complex management, and potentially lower scalability compared to the public cloud.

  - Example: A financial institution might choose a private cloud to host sensitive data and applications, prioritizing security and compliance.

- Hybrid Cloud: The hybrid cloud model combines public and private cloud resources, allowing organizations to leverage the advantages of both. Workloads can be distributed across both environments based on factors such as cost, performance, and security requirements.

  - Advantages: Flexibility (choosing the best environment for each workload), scalability (using public cloud for peak demands), and cost optimization.

  - Disadvantages: Increased complexity (managing multiple environments), the need for robust integration and orchestration tools, and potential security challenges.

  - Example: A retail company might use a hybrid cloud to host its e-commerce platform (public cloud) and its customer data (private cloud), ensuring both scalability and data security.

Common Network Protocols Utilized for Cloud Connectivity

Several network protocols are fundamental to cloud connectivity, ensuring reliable and secure communication between various components. These protocols operate at different layers of the network stack and address specific aspects of data transmission.

- TCP/IP (Transmission Control Protocol/Internet Protocol): TCP/IP is the foundational protocol suite for the internet and cloud communication.

  - TCP (Transmission Control Protocol): Provides reliable, connection-oriented communication. It ensures data delivery in the correct order and handles error checking and retransmission. TCP is used for applications that require reliable data transfer, such as web browsing (HTTP/HTTPS), email (SMTP), and file transfer (FTP).

  - IP (Internet Protocol): Provides the addressing and routing of data packets across the internet. It is responsible for delivering packets from source to destination, regardless of the underlying network infrastructure. IPv4 remains widely deployed, while its successor, IPv6, offers a much larger address space and is used alongside it.

- UDP (User Datagram Protocol): UDP is a connectionless protocol that provides a faster, but less reliable, form of communication. It does not guarantee data delivery or order, making it suitable for applications where speed is more important than reliability, such as video streaming, online gaming, and DNS lookups.

- HTTP/HTTPS (Hypertext Transfer Protocol/Hypertext Transfer Protocol Secure): These protocols are used for web communication. HTTPS runs HTTP over TLS, encrypting the data transmitted between a web browser and a web server.

- DNS (Domain Name System): DNS translates human-readable domain names (e.g., example.com) into IP addresses, allowing users to access cloud resources using familiar names.

- VPN (Virtual Private Network): VPNs create secure, encrypted connections over a public network, such as the internet. They are commonly used to connect on-premises networks to the cloud securely.

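- SSL/TLS (Secure Sockets Layer/Transport Layer Security): These protocols encrypt data transmitted between a client and a server, providing secure communication channels for applications like web browsing and email. SSL is the deprecated predecessor of TLS; current deployments should use TLS, even though the name SSL persists in common usage.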
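The difference between connection-oriented TCP and connectionless UDP described above can be seen in a small loopback experiment. This is a minimal sketch using Python's standard `socket` module; the function names are hypothetical, and loopback traffic hides the packet loss and reordering that UDP would not correct on a real network.

```python
import socket

LOOPBACK = "127.0.0.1"

def tcp_round_trip(payload: bytes) -> bytes:
    """Send a payload over a TCP connection on loopback and return what arrives."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind((LOOPBACK, 0))   # port 0 lets the OS pick a free port
        server.listen(1)
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
            # connect() performs the handshake; TCP needs a connection first.
            client.connect(server.getsockname())
            conn, _ = server.accept()
            with conn:
                conn.settimeout(2.0)
                client.sendall(payload)
                return conn.recv(1024)

def udp_round_trip(payload: bytes) -> bytes:
    """Send one datagram on loopback; no connection setup, no delivery guarantee."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
        receiver.bind((LOOPBACK, 0))
        receiver.settimeout(2.0)     # a lost datagram would surface as a timeout
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
            sender.sendto(payload, receiver.getsockname())
            data, _ = receiver.recvfrom(1024)
            return data

print(tcp_round_trip(b"hello over tcp"))
print(udp_round_trip(b"hello over udp"))
```

The TCP path requires `listen`, `connect`, and `accept` before any data moves, while the UDP sender simply addresses a datagram to the receiver.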

Assessing Network Requirements

Network requirements assessment is a crucial phase in planning cloud connectivity. Accurately determining these needs ensures optimal application performance, cost-effectiveness, and a positive user experience. This process involves a thorough examination of bandwidth, latency, and other network parameters, tailored to the specific applications and workloads intended for the cloud environment.

Identifying Bandwidth Needs for Cloud Access

Bandwidth requirements are a function of the volume of data transferred between the on-premises network and the cloud environment. Several factors influence these needs, and understanding them is essential for capacity planning.

- Number of Users and Devices: The total number of users and devices accessing cloud resources directly impacts bandwidth consumption. A larger user base, or a network with numerous connected devices (e.g., IoT devices), will require higher bandwidth capacity to prevent bottlenecks and maintain performance.

- Application Type and Data Transfer Patterns: Different applications generate varying levels of network traffic. For instance, video streaming applications consume significantly more bandwidth than basic email clients. Data transfer patterns, such as the frequency and size of file transfers, also contribute to bandwidth demands.

- Data Transfer Volume: The total volume of data that needs to be transferred to and from the cloud is a critical factor. This includes data backups, large file uploads and downloads, and the synchronization of data between on-premises and cloud-based systems.

- Peak Usage Times: Analyzing traffic patterns to identify peak usage periods is essential. These periods, when demand is highest, determine the maximum bandwidth capacity needed to accommodate all users and applications without performance degradation.

- Network Protocols and Overhead: The choice of network protocols and their associated overhead affects bandwidth utilization. For example, protocols with higher overhead, such as certain VPN configurations, may consume more bandwidth than more efficient alternatives.

- Cloud Service Provider (CSP) Data Transfer Costs: CSPs often charge for data egress (data leaving the cloud). Assessing bandwidth needs allows for more accurate cost projections and can influence decisions on data storage and transfer strategies to minimize these costs.
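The factors above can be combined into a rough first-pass estimate. The sketch below is only one possible way to do the arithmetic: the concurrency ratio, protocol overhead, headroom, and the per-GB egress price are all hypothetical inputs that should be replaced with measured traffic data and the provider's actual price list.

```python
def required_bandwidth_mbps(
    users: int,
    per_user_mbps: float,
    peak_concurrency: float,
    protocol_overhead: float = 0.10,  # assumed extra traffic from VPN/encapsulation
    headroom: float = 0.25,           # assumed safety margin above the peak
) -> float:
    """Estimate link capacity needed at peak, including overhead and headroom."""
    active_users = users * peak_concurrency
    raw_mbps = active_users * per_user_mbps
    return raw_mbps * (1 + protocol_overhead) * (1 + headroom)

def monthly_egress_cost(egress_gb: float, price_per_gb: float) -> float:
    """Estimate the monthly charge for data leaving the cloud."""
    return egress_gb * price_per_gb

# Example: 500 users, 2 Mbps each, 40% active at the busiest time.
estimate = required_bandwidth_mbps(users=500, per_user_mbps=2.0, peak_concurrency=0.4)
print(f"Estimated peak capacity: {estimate:.0f} Mbps")

# Example: 2,000 GB of egress at a hypothetical 0.09 per GB.
print(f"Estimated egress cost: {monthly_egress_cost(2000, 0.09):.2f}")
```

Sizing against the peak rather than the average reflects the point made under Peak Usage Times: the busiest period determines whether users see degradation.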

Determining Latency Requirements Based on Application Types

Latency, the delay in data transmission, significantly impacts application performance. The acceptable latency threshold varies considerably depending on the application type.

- Application Sensitivity: Some applications are more sensitive to latency than others. Real-time applications, such as voice over IP (VoIP) and online gaming, require low latency for an acceptable user experience. Less latency-sensitive applications, like batch processing, can tolerate higher delays.

- Geographic Distance: The physical distance between the on-premises network and the cloud data center directly influences latency. Data must travel a longer distance, increasing the delay. Selecting a cloud region closer to users can help mitigate this.

- Network Infrastructure: The quality and configuration of the network infrastructure, including routers, switches, and the internet service provider (ISP) network, contribute to latency. Optimized network paths and high-performance equipment are crucial for minimizing delays.

- Application Design: Application design can impact latency. For example, applications designed with efficient data retrieval methods and optimized database queries can reduce the perceived impact of latency.

- Network Congestion: Congestion on the network, whether on the ISP’s network or within the cloud provider’s infrastructure, can increase latency. Monitoring network performance and implementing traffic management techniques can help mitigate congestion.
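The effect of geographic distance can be approximated before any measurement is taken. The sketch below assumes that light travels through optical fiber at roughly 200 km per millisecond (about two thirds of its speed in a vacuum); the `route_factor` parameter is a hypothetical multiplier for cable paths that are longer than the straight-line distance. The result is a lower bound only, since queuing, routing, and processing delays add to it.

```python
FIBER_KM_PER_MS = 200.0  # approximate distance light covers in fiber per millisecond

def min_round_trip_ms(distance_km: float, route_factor: float = 1.0) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    one_way_ms = distance_km * route_factor / FIBER_KM_PER_MS
    return 2 * one_way_ms

# A cloud region 1,000 km away cannot respond faster than about 10 ms.
print(min_round_trip_ms(1000))

# The same distance over a path assumed to be 50% longer than the straight line.
print(min_round_trip_ms(1000, route_factor=1.5))
```

Comparing this floor with an application's latency budget is a quick way to rule out regions that are simply too far away.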

Network Performance Needs for Different Application Types

The following table outlines the network performance needs of different application types.

| Application Type | Latency Requirement (ms) | Bandwidth Requirement (Mbps) | Notes |
|---|---|---|---|
| Real-time Communication (VoIP, Video Conferencing) | < 150 | 1-5 per concurrent user (depending on codec and resolution) | Requires low and consistent latency. Jitter and packet loss must be minimized. |
| Interactive Applications (Web Browsing, CRM) | < 200 | 1-10 per user (depending on application complexity) | Requires relatively low latency for a responsive user experience. |
| Database Access | < 100 (for frequent queries), < 200 (for less frequent queries) | Variable, depends on data volume and query complexity. Could be 10+ Mbps | Latency is critical for database queries. Bandwidth needs increase with data size and query frequency. |
| File Transfer and Backup | Not as critical, acceptable up to 500 | High bandwidth is desirable. Could be 10+ Mbps, or even 100+ Mbps depending on the size and frequency of the transfers. | High bandwidth is the primary concern for efficient data transfer. |
| Batch Processing (Data Analysis, Reporting) | Not critical, can tolerate delays up to 500+ | Variable, depends on data volume. Often less critical than interactive applications. | Latency is less critical. High bandwidth can speed up processing times. |
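The latency column of the table can be turned into a simple check against measured values. The following sketch encodes the thresholds as upper bounds; the dictionary keys and the function name are hypothetical, and batch processing is left out because the table gives it no hard limit.

```python
# Latency budgets in milliseconds, taken from the table above.
LATENCY_BUDGET_MS = {
    "real_time": 150,
    "interactive": 200,
    "database_frequent": 100,
    "database_infrequent": 200,
    "file_transfer": 500,
}

def meets_latency_budget(app_type: str, measured_ms: float) -> bool:
    """Return True if the measured latency is below the budget for the app type."""
    return measured_ms < LATENCY_BUDGET_MS[app_type]

# The same 120 ms path is acceptable for VoIP but not for frequent database queries.
for app in ("real_time", "database_frequent"):
    print(app, meets_latency_budget(app, 120))
```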

Choosing the Right Cloud Provider and Services

Selecting the appropriate cloud provider and services is a critical decision that significantly impacts network connectivity, performance, security, and cost-effectiveness. This choice necessitates a thorough evaluation of the specific requirements, the available options, and the long-term strategic goals of the organization. A mismatch can lead to inefficiencies, increased latency, security vulnerabilities, and ultimately, hinder the organization’s ability to achieve its objectives.

Comparing Network Connectivity Options of Major Cloud Providers

Each major cloud provider—Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP)—offers a diverse set of network connectivity options, each with its own strengths and weaknesses. Understanding these differences is crucial for making an informed decision that aligns with the organization’s specific needs. The following analysis provides a comparative overview of the key connectivity options offered by each provider.

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| Virtual Private Cloud (VPC) / Virtual Network | AWS VPC: Isolated network within the AWS cloud, customizable subnets, routing, and security groups. | Azure Virtual Network: Similar to AWS VPC, providing isolation and control over network resources. | GCP Virtual Private Cloud (VPC): Global, scalable, and customizable network with flexible subnetting and routing options. |
| VPN Connectivity | AWS Site-to-Site VPN: Establishes secure connections between on-premises networks and AWS VPCs. Supports various VPN protocols and encryption standards. | Azure VPN Gateway: Securely connects on-premises networks to Azure Virtual Networks. Supports various VPN types and protocols. | Cloud VPN: Provides secure VPN connections between on-premises networks and GCP VPCs. Supports both route-based and policy-based VPNs. |
| Direct Connect / ExpressRoute / Cloud Interconnect | AWS Direct Connect: Provides dedicated network connections between on-premises networks and AWS. Offers higher bandwidth and lower latency than internet-based connections. | Azure ExpressRoute: Establishes private connections between on-premises networks and Azure. Offers predictable performance and enhanced security. | Cloud Interconnect: Enables private connectivity between on-premises networks and GCP. Supports both dedicated and partner interconnect options. |
| Content Delivery Network (CDN) | Amazon CloudFront: A global CDN service that accelerates content delivery. | Azure CDN: A global CDN service for delivering content with high availability and performance. | Cloud CDN: A global CDN service that integrates with GCP storage services. |
| Load Balancing | Elastic Load Balancing (ELB): Distributes traffic across multiple instances. | Azure Load Balancer: Distributes traffic across virtual machines. | Cloud Load Balancing: Distributes traffic across instances. |

Selecting the Appropriate Virtual Private Network (VPN) Solution

Choosing the right VPN solution is essential for establishing secure connections between on-premises networks and the cloud. The selection process should consider factors such as security requirements, performance needs, and cost considerations. Different VPN solutions offer varying levels of security, bandwidth, and latency.

- Site-to-Site VPN: This type of VPN establishes a secure tunnel between an on-premises network and a cloud provider’s VPC/Virtual Network. It is suitable for organizations that need to connect their entire network to the cloud. It is ideal when you require continuous and secure connectivity between your on-premises infrastructure and the cloud. For example, a company with a large data center might use a site-to-site VPN to connect to AWS.

- Client VPN: Client VPNs allow individual users or devices to connect to the cloud securely. It’s designed for remote access scenarios, allowing users to securely connect to the cloud from anywhere with an internet connection. This is particularly useful for remote workers or mobile users. For instance, a software development company might use a client VPN to allow its developers to access cloud-based resources securely from their homes or other remote locations.

- Considerations for Selection:

  - Security Protocols: Choose a VPN solution that supports strong encryption protocols like IPsec or TLS to protect data in transit.

  - Bandwidth Requirements: Evaluate the expected data transfer volume and select a VPN solution that can handle the required bandwidth. Insufficient bandwidth can lead to performance bottlenecks.

  - Latency Sensitivity: Consider the sensitivity of applications to latency. For real-time applications, choose a VPN solution that minimizes latency.

  - Cost: Evaluate the cost of different VPN solutions, including hardware, software, and bandwidth charges.

  - Compatibility: Ensure the chosen VPN solution is compatible with both the on-premises network and the cloud provider’s services.

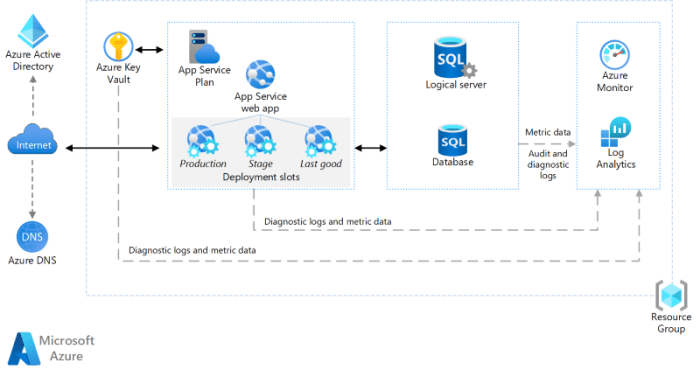

Planning Network Architecture for the Cloud

Designing a robust and efficient cloud network architecture is crucial for ensuring optimal performance, security, and scalability of cloud-based applications and services. A well-planned architecture minimizes latency, enhances security posture, and provides the flexibility to adapt to changing business needs. This section outlines a step-by-step procedure for designing cloud network architecture, illustrating the use of key components and providing a practical example.

Step-by-Step Procedure for Designing Cloud Network Architecture

Developing a cloud network architecture is a systematic process. It involves careful consideration of various factors, including network requirements, security considerations, and scalability needs. The following steps provide a structured approach:

- Define Requirements and Objectives: This initial phase establishes the foundation for the entire architecture. Clearly identify the business requirements, application needs, and performance goals. Consider factors like anticipated traffic volume, latency sensitivity, and geographical distribution of users.

- Assess Network Topology Options: Evaluate different network topology options suitable for the cloud environment. This involves determining the optimal structure for connecting various components, such as virtual machines, databases, and other services. Common topologies include hub-and-spoke, mesh, and hybrid configurations. The choice depends on factors like scalability requirements, security needs, and the complexity of the application.

- Select Cloud Provider and Services: Choose the cloud provider and the specific network services based on the defined requirements and topology. Different cloud providers offer various networking services, including Virtual Private Clouds (VPCs), subnets, load balancers, firewalls, and VPN gateways. Consider the provider’s geographical reach, pricing models, and service level agreements (SLAs).

- Design Virtual Networks and Subnets: Design the virtual network (e.g., VPC) and subnets to segment the network logically. Subnets are used to isolate resources and control traffic flow. Consider the IP address range, subnet size, and the allocation of resources within each subnet. Plan for future growth by reserving sufficient IP address space.

- Implement Security Groups and Network Access Control Lists (ACLs): Implement security groups (or their equivalent in the chosen cloud provider) and network ACLs to control network traffic. Security groups operate at the instance level, while network ACLs operate at the subnet level. Define rules to allow or deny traffic based on source, destination, port, and protocol. This helps to create a layered security approach.

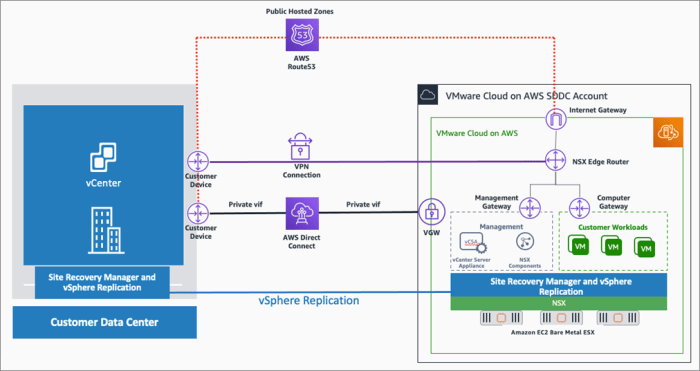

- Configure Network Connectivity: Establish network connectivity between different components, including virtual machines, databases, and external networks. This involves configuring routing tables, VPN connections, and direct connections (e.g., AWS Direct Connect, Azure ExpressRoute, Google Cloud Interconnect) if required.

- Implement Load Balancing: Implement load balancing to distribute traffic across multiple instances or services. This improves application availability and performance. Choose the appropriate load balancer type (e.g., Application Load Balancer, Network Load Balancer) based on the application requirements.

- Monitor and Optimize Network Performance: Continuously monitor network performance metrics, such as latency, throughput, and error rates. Use monitoring tools provided by the cloud provider to identify and troubleshoot network issues. Optimize network configuration and resource allocation based on performance data.
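For the load balancing step, two of the commonly used distribution algorithms, round robin and least connections, can be sketched in a few lines. This is a simplified illustration with hypothetical class and backend names; managed cloud load balancers add health checks, connection draining, and other behavior that is not modeled here.

```python
import itertools

class RoundRobinBalancer:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self):
        return next(self._cycle)

class LeastConnectionsBalancer:
    """Send each new connection to the backend with the fewest active ones."""

    def __init__(self, backends):
        self.active = {backend: 0 for backend in backends}

    def acquire(self):
        # Ties resolve to the backend listed first.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        self.active[backend] -= 1

rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([rr.pick() for _ in range(4)])

lc = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
first, second, third = lc.acquire(), lc.acquire(), lc.acquire()
lc.release(second)   # app-2 finishes its request
print(lc.acquire())  # the next connection goes to the idle backend
```

Round robin suits requests of similar cost, while least connections adapts better when some requests hold a connection much longer than others.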

Illustrating the Use of Subnets, Virtual Networks, and Security Groups

Subnets, virtual networks, and security groups are fundamental building blocks of cloud network architecture. Understanding their roles and interactions is crucial for designing a secure and efficient network.

- Virtual Networks (VPCs): A virtual network (e.g., Amazon VPC, Azure Virtual Network, Google Cloud VPC) provides an isolated network environment within the cloud provider’s infrastructure. It allows you to define your own IP address space, create subnets, and control network access. Think of it as a private network within the public cloud.

- Subnets: Subnets are subdivisions of the virtual network. They provide logical segmentation and isolation of resources. Each subnet is assigned a specific IP address range. Resources within the same subnet can communicate directly, while communication between different subnets requires routing. Subnets can be public (accessible from the internet) or private (not directly accessible from the internet).

- Security Groups: Security groups act as virtual firewalls at the instance level (e.g., virtual machine). They control inbound and outbound traffic based on rules that specify source, destination, port, and protocol. Security groups are stateful, meaning that if a connection is allowed inbound, the return traffic is automatically allowed outbound.

- Network ACLs: Network ACLs provide an additional layer of security at the subnet level. They control inbound and outbound traffic for all resources within a subnet. Unlike security groups, network ACLs are stateless, meaning that both inbound and outbound rules must be explicitly defined.
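The practical difference between stateful security groups and stateless network ACLs is easiest to see with return traffic. The toy model below is an assumption-laden sketch, not a provider API: the class names are hypothetical, rules are reduced to port numbers, and the ephemeral port range `1024-65535` is an illustrative choice for client-side reply ports.

```python
class StatefulFilter:
    """Security-group-like filter: remembers allowed inbound connections."""

    def __init__(self, allowed_inbound_ports):
        self.allowed_inbound_ports = set(allowed_inbound_ports)
        self.tracked = set()

    def inbound(self, client_ip, client_port, server_port):
        if server_port in self.allowed_inbound_ports:
            self.tracked.add((client_ip, client_port, server_port))
            return True
        return False

    def outbound_reply(self, client_ip, client_port, server_port):
        # Replies pass only because the connection was tracked on the way in.
        return (client_ip, client_port, server_port) in self.tracked

class StatelessFilter:
    """Network-ACL-like filter: every direction needs its own explicit rule."""

    def __init__(self, inbound_ports, outbound_port_ranges):
        self.inbound_ports = set(inbound_ports)
        self.outbound_port_ranges = list(outbound_port_ranges)

    def inbound(self, client_ip, client_port, server_port):
        return server_port in self.inbound_ports

    def outbound_reply(self, client_ip, client_port, server_port):
        # The reply is addressed to the client's ephemeral port.
        return any(low <= client_port <= high for low, high in self.outbound_port_ranges)

request = ("203.0.113.10", 50000, 443)

sg = StatefulFilter({443})
print(sg.inbound(*request), sg.outbound_reply(*request))

acl_without_outbound = StatelessFilter({443}, [])
print(acl_without_outbound.inbound(*request), acl_without_outbound.outbound_reply(*request))

acl_with_outbound = StatelessFilter({443}, [(1024, 65535)])
print(acl_with_outbound.outbound_reply(*request))
```

The stateless filter drops the reply until an outbound rule covering the client's port is added, which is a common cause of connections that appear to hang after a network ACL change.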

Example of a Diagram Detailing a Multi-Region Cloud Network Architecture

A multi-region cloud network architecture typically involves deploying applications and services across multiple geographic regions to improve availability, disaster recovery, and reduce latency for users in different locations.

The diagram below illustrates a simplified multi-region cloud network architecture. The architecture utilizes two regions (e.g., Region A and Region B), connected via a virtual private network (VPN) or a direct connection.

Diagram Description:

The diagram showcases two distinct regions, Region A and Region B, interconnected through a secure network. Within each region, there’s a VPC that represents the virtual network. Each VPC is further divided into subnets, categorized as public and private. The public subnets are designed to host resources that require direct internet access, such as load balancers, while the private subnets house resources like application servers and databases, protected from direct internet exposure.

Region A and Region B are connected via a VPN gateway. This connection facilitates secure communication between the two regions, allowing applications and services in one region to access resources in the other.

Within each region, there’s a load balancer positioned in the public subnet. The load balancer distributes incoming traffic across multiple application servers residing in the private subnet. Security groups are applied to both the load balancer and the application servers, controlling the traffic flow and enforcing security policies.

A database instance is placed in a private subnet within each region. This ensures the database is isolated and protected from direct internet access. A direct connection or VPN connects the database instances in different regions for data replication or failover purposes.

This architecture provides redundancy and resilience. If one region experiences an outage, traffic can be routed to the other region. This design also allows for distributing content geographically, reducing latency for users accessing the applications. The architecture employs security groups and subnetting to provide a layered security model.
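The routing behavior described for this architecture, preferring the closest region and failing over when a region is unavailable, can be sketched as a small selection function. The region names, latency figures, and the function `choose_region` are hypothetical; in practice this decision is usually made by a DNS-based or global load balancing service rather than application code.

```python
from typing import Dict, Optional, Set

def choose_region(latency_ms: Dict[str, float], healthy: Set[str]) -> Optional[str]:
    """Pick the healthy region with the lowest measured latency for a user."""
    candidates = {region: ms for region, ms in latency_ms.items() if region in healthy}
    if not candidates:
        return None  # no region can serve the request
    return min(candidates, key=candidates.get)

user_latency = {"region-a": 20.0, "region-b": 80.0}

# Normal operation: the nearer region serves the user.
print(choose_region(user_latency, {"region-a", "region-b"}))

# Region A outage: traffic fails over to Region B.
print(choose_region(user_latency, {"region-b"}))
```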

Implementing Network Security Measures

Securing network connections to the cloud is paramount for protecting sensitive data and ensuring business continuity. A comprehensive security strategy encompasses multiple layers, from access control and encryption to intrusion detection and threat management. This layered approach minimizes vulnerabilities and mitigates the impact of potential security breaches.

Best Practices for Securing Network Connections to the Cloud

Adhering to established best practices is crucial for fortifying cloud network security. These practices, when implemented diligently, significantly reduce the attack surface and bolster the overall security posture.

- Implement Strong Authentication and Authorization: Enforce multi-factor authentication (MFA) for all user accounts and access points. Regularly review and update access permissions based on the principle of least privilege, granting users only the necessary access rights to perform their duties. This limits the potential damage from compromised credentials.

- Encrypt Data in Transit and at Rest: Employ robust encryption, such as TLS for data in transit and AES-256 for data at rest, to protect sensitive information from unauthorized access. Regularly rotate encryption keys and ensure proper key management practices.

- Use Firewalls and Intrusion Detection/Prevention Systems (IDS/IPS): Deploy firewalls to control network traffic and restrict access based on predefined rules. Implement IDS/IPS to monitor network activity for malicious behavior and automatically block or alert on suspicious events.

- Regularly Patch and Update Systems: Keep all operating systems, applications, and security software up to date with the latest patches and security updates to address known vulnerabilities. Automate the patching process whenever possible.

- Monitor and Log Network Activity: Implement comprehensive logging and monitoring solutions to track network traffic, user activity, and security events. Analyze logs regularly to identify and respond to security incidents promptly.

- Conduct Regular Security Audits and Penetration Testing: Perform periodic security audits and penetration testing to identify vulnerabilities and weaknesses in the cloud environment. This helps proactively address potential security risks before they can be exploited.

- Implement Network Segmentation: Segment the network into logical zones to isolate critical resources and limit the impact of a security breach. This prevents attackers from easily moving laterally across the network.

- Educate and Train Employees: Provide regular security awareness training to employees to educate them about common threats, phishing scams, and best practices for secure cloud usage.

Configuring Firewalls and Intrusion Detection Systems

Proper configuration of firewalls and intrusion detection systems is essential for effective security. These systems act as the first line of defense against malicious traffic and unauthorized access.

- Firewall Configuration: Firewalls should be configured to allow only necessary traffic and block all other traffic by default. Create specific rules to permit inbound and outbound traffic based on source/destination IP addresses, ports, and protocols. Regularly review and update firewall rules to reflect changes in network infrastructure and security requirements. Implement stateful inspection to track the state of network connections and allow only legitimate traffic.

Consider using web application firewalls (WAFs) to protect against web-based attacks, such as cross-site scripting (XSS) and SQL injection. For example, an organization using AWS might utilize the AWS Web Application Firewall (WAF) to protect its web applications, specifying rules to block malicious requests based on criteria such as IP addresses, HTTP headers, and URI patterns.

- Intrusion Detection/Prevention System (IDS/IPS) Configuration: Deploy IDS/IPS solutions to monitor network traffic for malicious activity and automatically block or alert on suspicious events. Configure IDS/IPS with appropriate signatures and rules to detect known threats and vulnerabilities. Regularly update the signature database to stay current with the latest threats. Fine-tune the IDS/IPS to reduce false positives and ensure that alerts are relevant and actionable. Consider using behavioral analysis techniques to detect anomalies and unknown threats.

For instance, a company using Azure could use Azure Firewall to filter and block malicious traffic, and Azure Network Watcher to monitor network traffic and diagnose suspicious patterns.
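The default-deny approach to firewall configuration described above can be illustrated with a small rule evaluator. This is a minimal sketch under stated assumptions: the `Rule` structure, the example rule set, and first-match semantics are illustrative choices and do not reproduce any particular provider's firewall engine.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    action: str    # "allow" or "deny"
    source: str    # source range in CIDR notation
    port: int      # destination port
    protocol: str  # "tcp" or "udp"

# Hypothetical policy: HTTPS from anywhere, SSH only from the internal network.
RULES = [
    Rule("allow", "0.0.0.0/0", 443, "tcp"),
    Rule("allow", "10.0.0.0/16", 22, "tcp"),
]

def evaluate(rules, src_ip: str, port: int, protocol: str) -> str:
    """Return the action of the first matching rule, or 'deny' if none match."""
    ip = ipaddress.ip_address(src_ip)
    for rule in rules:
        in_range = ip in ipaddress.ip_network(rule.source)
        if in_range and port == rule.port and protocol == rule.protocol:
            return rule.action
    return "deny"  # default deny: anything not explicitly allowed is blocked

print(evaluate(RULES, "198.51.100.7", 443, "tcp"))  # public HTTPS request
print(evaluate(RULES, "198.51.100.7", 22, "tcp"))   # SSH from the internet
print(evaluate(RULES, "10.0.3.4", 22, "tcp"))       # SSH from the internal range
```

Because the fallback is `deny`, forgetting a rule fails closed rather than exposing a service.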

Encryption is fundamental to cloud security, ensuring data confidentiality and integrity. Encryption in transit protects data as it moves between locations, while encryption at rest safeguards data stored in cloud storage. Without encryption, data is vulnerable to unauthorized access and compromise.
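For encryption in transit, a client can enforce certificate verification and a minimum protocol version. The sketch below uses Python's standard `ssl` module; the function name is hypothetical, and the choice of TLS 1.2 as the floor is an assumption that should follow the organization's own policy.

```python
import ssl

def strict_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the server and refuses old versions."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse anything older than TLS 1.2 (assumed policy floor).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context

context = strict_client_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

A connection would then be protected by passing a connected socket to `context.wrap_socket(sock, server_hostname=host)`, where `host` is the name the server's certificate must match.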

Establishing Secure Connections: VPNs and Direct Connect

Establishing secure network connections is paramount when migrating to or operating within the cloud. This involves implementing robust methods to protect data in transit and ensure the confidentiality, integrity, and availability of network resources. Two primary approaches for achieving this are Virtual Private Networks (VPNs) and Direct Connect services. The choice between these depends on specific needs and requirements, involving careful consideration of security, performance, and cost.

Site-to-Site VPN Setup Process

Site-to-site VPNs establish a secure, encrypted tunnel between an on-premises network and a cloud provider’s network. The process typically includes:

- Configuration of VPN Gateways: Both the on-premises network and the cloud provider require VPN gateways. These gateways are responsible for encrypting and decrypting network traffic. The on-premises gateway is usually a physical or virtual appliance, while the cloud provider’s gateway is a service provided within the cloud environment.

- IPsec Tunnel Configuration: IPsec (Internet Protocol Security) is a common protocol suite used to secure VPN connections. Configuring IPsec involves defining parameters such as:

- Encryption Algorithms: Algorithms like AES (Advanced Encryption Standard) are used to encrypt the data.

- Hashing Algorithms: Algorithms like SHA-256 (Secure Hash Algorithm 256-bit) are used to verify the integrity of the data.

- Key Exchange Methods: Methods like IKE (Internet Key Exchange) are used to securely exchange cryptographic keys.

- Establishing the VPN Tunnel: Once the gateways and IPsec parameters are configured, the gateways negotiate the security parameters and bring up the tunnel. In IKEv1 terms, phase 1 authenticates the peers and establishes a protected channel for key exchange, and phase 2 negotiates the IPsec security associations that protect the data traffic.

- Routing Configuration: Routing must be configured on both sides to ensure that traffic is correctly routed through the VPN tunnel. This involves defining static routes or using dynamic routing protocols such as BGP (Border Gateway Protocol) to exchange routing information.

- Testing and Monitoring: After the tunnel is established, thorough testing is necessary to ensure proper functionality. Monitoring tools are used to track the VPN’s performance, including latency, throughput, and availability. Alerts are configured to notify administrators of any issues.
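One routing detail worth checking before a tunnel is built is that the on-premises and cloud address ranges do not overlap, since overlapping prefixes make routing through the tunnel ambiguous. The sketch below uses Python's standard `ipaddress` module; the function names and the `next_hop` label are illustrative assumptions rather than any provider's configuration format.

```python
from ipaddress import ip_network


def find_overlaps(on_prem_cidrs, cloud_cidrs):
    """Return (on_prem, cloud) pairs whose address ranges overlap."""
    overlaps = []
    for a in on_prem_cidrs:
        for b in cloud_cidrs:
            if ip_network(a).overlaps(ip_network(b)):
                overlaps.append((a, b))
    return overlaps


def static_routes(remote_cidrs, next_hop):
    """Build one static route entry per remote prefix, all via the tunnel."""
    return [{"destination": cidr, "next_hop": next_hop} for cidr in remote_cidrs]


# Hypothetical address plan for illustration.
on_prem = ["192.168.0.0/24", "10.0.0.0/16"]
cloud = ["10.0.1.0/24", "172.16.0.0/20"]
print(find_overlaps(on_prem, cloud))
```

With this sample plan the check reports that `10.0.0.0/16` and `10.0.1.0/24` collide, which would need to be resolved (for example by renumbering or NAT) before static routes or BGP are configured.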

Benefits of Using Direct Connect or Similar Services

Direct Connect services provide a dedicated network connection between an on-premises network and a cloud provider’s network, bypassing the public internet. This offers several advantages over VPNs, particularly in terms of performance and reliability. Key benefits include:

- Increased Bandwidth: Direct Connect typically offers higher bandwidth options compared to VPNs, which can be crucial for applications requiring high data transfer rates. This is especially important for large-scale data migrations, disaster recovery scenarios, and applications that generate significant network traffic.

- Reduced Latency: By bypassing the public internet, Direct Connect minimizes latency, leading to improved application performance and a better user experience. This is particularly beneficial for latency-sensitive applications such as real-time video conferencing, online gaming, and financial trading platforms.

- Enhanced Reliability: Dedicated connections provide a more stable and reliable network connection compared to the internet. Direct Connect services often include service level agreements (SLAs) that guarantee uptime and performance.

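- Improved Security: While VPNs provide encryption, Direct Connect can enhance security by keeping traffic off the public internet and reducing the attack surface, supported by physical security measures at the colocation facilities where the connection terminates. A dedicated connection is private but not necessarily encrypted by default, so sensitive workloads often still run IPsec or application-layer encryption over it.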

- Cost Optimization: While the initial setup cost might be higher, Direct Connect can be cost-effective for high-volume data transfer and long-term use, especially when considering the reduced egress charges offered by some cloud providers.

An example of a real-world application is a financial institution using Direct Connect to connect its on-premises trading platform to a cloud-based data analytics service. The reduced latency and increased bandwidth provided by Direct Connect are critical for processing real-time market data and executing trades efficiently.

Considerations for Choosing Between VPNs and Direct Connect

The decision between VPNs and Direct Connect depends on several factors that should be weighed together. Key considerations include:

- Performance Requirements: If applications require high bandwidth and low latency, Direct Connect is generally the preferred option. VPNs might suffice for less demanding applications.

- Security Requirements: Both VPNs and Direct Connect offer robust security. However, Direct Connect can provide an additional layer of security by isolating network traffic from the public internet.

- Cost: Direct Connect typically involves higher initial setup costs and recurring charges. VPNs are generally less expensive to set up initially, but may incur costs related to hardware or software.

- Data Transfer Volume: For high-volume data transfers, Direct Connect can be more cost-effective due to potentially lower egress charges.

- Business Continuity and Disaster Recovery: Direct Connect can be critical for ensuring business continuity, providing a reliable connection for critical applications during disaster recovery scenarios. VPNs can also be used, but might have limitations in terms of performance and reliability.

- Technical Expertise: Setting up and managing Direct Connect connections can be more complex than setting up a VPN. It often requires specialized networking expertise.

For example, a small business with limited IT resources and modest bandwidth requirements might opt for a VPN solution. In contrast, a large enterprise with demanding performance needs and stringent security requirements would likely choose Direct Connect.
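The cost and data-volume considerations above can be made concrete with a rough break-even calculation. The sketch assumes a simple model in which each option has a fixed monthly fee plus a per-gigabyte egress rate; the function names and all prices in the example are hypothetical and should be replaced with figures from the provider's current price list.

```python
def monthly_cost(fixed_fee, per_gb_rate, gb_transferred):
    """Total monthly cost under a fixed-fee-plus-usage model."""
    return fixed_fee + per_gb_rate * gb_transferred


def break_even_gb(vpn_fixed, vpn_per_gb, dedicated_fixed, dedicated_per_gb):
    """Monthly volume (GB) above which the dedicated link is cheaper.

    Returns None when the dedicated link has no per-GB advantage,
    because it then never catches up on its higher fixed fee.
    """
    rate_saving = vpn_per_gb - dedicated_per_gb
    if rate_saving <= 0:
        return None
    return max(0.0, (dedicated_fixed - vpn_fixed) / rate_saving)


# Hypothetical prices: VPN 100/month + 0.10 per GB,
# dedicated link 500/month + 0.02 per GB.
print(break_even_gb(100, 0.10, 500, 0.02))
```

Under these assumed prices the dedicated connection becomes cheaper at roughly 5,000 GB per month; below that volume the VPN costs less.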

Optimizing Network Performance

Effective network performance is critical for a positive cloud experience. Slow network speeds, high latency, and unreliable connections can severely impact application performance, user experience, and overall business productivity. Therefore, optimizing network performance is a continuous process involving proactive monitoring, strategic configuration, and ongoing adjustments to meet evolving demands. This section details techniques for improving latency and throughput, configuring Quality of Service (QoS), and monitoring and troubleshooting network performance issues in the cloud environment.

Improving Network Latency and Throughput

Network latency, the delay in data transfer, and throughput, the rate at which data is transferred, are fundamental performance metrics. Optimizing both is crucial for efficient cloud operations. Several strategies can be employed to minimize latency and maximize throughput, improving the responsiveness and performance of cloud-based applications.

- Choosing the Right Region: Proximity to end-users significantly impacts latency. Selecting a cloud region geographically closest to the majority of users minimizes the physical distance data must travel, thereby reducing latency. For instance, a company serving customers primarily in Europe would experience lower latency by deploying its applications in a European cloud region compared to a region in North America.

- Optimizing Network Topology: A well-designed network topology reduces the number of hops data must traverse. This includes strategically placing resources within the cloud environment, such as placing database servers closer to application servers. This minimizes the time data takes to travel between components.

- Using Content Delivery Networks (CDNs): CDNs cache content closer to users, reducing the distance data must travel. This is particularly effective for static content like images, videos, and JavaScript files. Popular CDNs like Amazon CloudFront, Cloudflare, and Akamai distribute content across geographically dispersed servers, ensuring faster content delivery for users worldwide. For example, a news website can leverage a CDN to ensure that images load quickly for users in different countries, improving their browsing experience.

- Implementing Network Optimization Techniques: Several network optimization techniques can be implemented, including:

- TCP Optimization: Tuning TCP parameters, such as the TCP window size and Maximum Segment Size (MSS), can improve throughput. A larger window size allows more data to be sent before an acknowledgment is required, leading to higher throughput.

- Path MTU Discovery: Discovering the Maximum Transmission Unit (MTU) along the network path and adjusting the packet size accordingly prevents fragmentation and reassembly, which can increase latency.

- Load Balancing: Distributing network traffic across multiple servers or instances using load balancers prevents any single server from becoming a bottleneck, which improves overall performance.

- Upgrading Network Infrastructure: Utilizing high-bandwidth network connections and upgrading network devices, such as routers and switches, can increase throughput. For example, migrating from a 1 Gbps connection to a 10 Gbps connection can significantly improve data transfer rates.

- Data Compression: Compressing data before transmission reduces the amount of data that needs to be transferred, which can improve throughput. Tools like gzip can compress web content before sending it to the user’s browser.
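The relationship between TCP window size, latency, and throughput mentioned above follows from the bandwidth-delay product: a sender can have at most one window of unacknowledged data in flight per round trip. The helper names below are illustrative, and the figures are examples rather than measurements.

```python
def bandwidth_delay_product_bytes(bandwidth_bps, rtt_ms):
    """Bytes that must be in flight to keep a link of this speed full."""
    return bandwidth_bps * rtt_ms / 8000  # bits -> bytes, ms -> s


def max_throughput_bps(window_bytes, rtt_ms):
    """Upper bound on throughput for a given window and round-trip time."""
    return window_bytes * 8 * 1000 / rtt_ms


# Example: a 1 Gbps path with a 50 ms round-trip time.
print(bandwidth_delay_product_bytes(1_000_000_000, 50))  # 6250000.0 bytes
# A 65,535-byte window (no window scaling) on the same path:
print(max_throughput_bps(65_535, 50))  # 10485600.0 bps, about 10.5 Mbps
```

The example shows why window scaling matters on long paths: without it, a single connection on a 1 Gbps link with 50 ms of round-trip delay is limited to about 1% of the link capacity.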

Configuring Quality of Service (QoS) for Cloud Traffic

Quality of Service (QoS) allows prioritizing specific types of network traffic to ensure critical applications receive the necessary bandwidth and resources. This is particularly important in cloud environments where multiple applications and services share the same network infrastructure. Properly configuring QoS can prevent performance degradation for critical applications during periods of high network congestion.

- Traffic Classification: QoS starts with classifying network traffic based on various criteria, such as the application type, source and destination IP addresses, port numbers, and DSCP (Differentiated Services Code Point) values. For instance, voice over IP (VoIP) traffic can be classified and prioritized over less critical traffic, ensuring call quality even during network congestion.

- Traffic Prioritization: Once traffic is classified, QoS policies are applied to prioritize certain types of traffic over others. This can involve assigning different priority levels to different traffic classes. For example, VoIP traffic might be assigned a higher priority than web browsing traffic.

- Bandwidth Allocation: QoS can be used to allocate specific bandwidth to different traffic classes, ensuring that critical applications receive sufficient bandwidth even during periods of high network utilization. This can prevent bandwidth starvation for critical applications.

- Congestion Management: QoS mechanisms, such as queuing and traffic shaping, can be used to manage network congestion. Queuing manages the order in which packets are sent, while traffic shaping regulates the rate at which traffic is sent to prevent congestion.

- Example: Configuring QoS for VoIP Traffic:

- Classify VoIP traffic by identifying the source and destination IP addresses, port numbers (typically UDP ports), and DSCP values.

- Assign a high-priority queue to the VoIP traffic.

- Allocate a specific amount of bandwidth to the VoIP traffic to ensure sufficient capacity.

- Implement traffic shaping to regulate the rate at which VoIP traffic is sent, preventing congestion.
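The classification and rate-regulation steps above can be sketched with a token bucket, a mechanism commonly used for both shaping and policing. This is a simplified illustration: the class and function names are hypothetical, time is passed in explicitly to keep the example deterministic, and a real shaper would queue a non-conforming packet for later instead of simply reporting it. DSCP 46 (Expedited Forwarding) is the value conventionally used for voice.

```python
DSCP_EF = 46  # Expedited Forwarding, conventionally used for VoIP media


def classify(dscp):
    """Map a packet's DSCP value to a traffic class."""
    return "voice" if dscp == DSCP_EF else "best_effort"


class TokenBucket:
    """Permit traffic at a sustained rate with a bounded burst."""

    def __init__(self, rate_bytes_per_s, burst_bytes):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start with a full bucket
        self.last = 0.0

    def allow(self, packet_bytes, now):
        """Return True if the packet conforms to the configured rate."""
        elapsed = now - self.last
        self.last = now
        # Refill tokens for the elapsed time, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False


bucket = TokenBucket(rate_bytes_per_s=100, burst_bytes=300)
print(classify(46), bucket.allow(300, now=0.0), bucket.allow(200, now=1.0))
```

In this run the initial 300-byte burst is accepted, but the 200-byte packet one second later is not, because only 100 bytes of tokens have been refilled by then.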

Monitoring and Troubleshooting Network Performance Issues

Continuous monitoring is essential for identifying and resolving network performance issues in the cloud. Monitoring tools provide real-time insights into network performance, allowing for proactive identification of bottlenecks and performance degradations. Proactive troubleshooting is essential to maintaining a healthy and performant cloud environment.

- Network Monitoring Tools: Several tools can be used to monitor network performance. These tools collect data on various metrics, such as latency, throughput, packet loss, and error rates.

- Cloud Provider’s Monitoring Services: Cloud providers offer built-in monitoring services, such as Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring. These services provide comprehensive monitoring capabilities for network resources.

- Third-Party Monitoring Tools: Third-party tools, such as SolarWinds Network Performance Monitor, Datadog, and New Relic, offer advanced monitoring capabilities and integrations with various cloud platforms.

- Key Performance Indicators (KPIs): Monitoring specific KPIs provides a clear picture of network performance. Important KPIs include:

- Latency: Measured in milliseconds (ms), latency indicates the delay in data transfer. High latency can impact application responsiveness.

- Throughput: Measured in bits per second (bps), throughput indicates the rate at which data is transferred. Low throughput can lead to slow application performance.

- Packet Loss: Measured as a percentage, packet loss indicates the share of packets that are lost during transmission. High packet loss forces retransmissions, which reduces throughput and degrades real-time traffic such as voice and video.

- Error Rates: Monitoring error rates, such as interface errors and CRC errors, helps identify potential hardware or configuration issues.

- Troubleshooting Techniques: When network performance issues arise, several troubleshooting techniques can be employed.

- Identify the Problem: Determine the scope and impact of the problem. Is it affecting all users or only a subset? Is it impacting a specific application or service?

- Gather Data: Collect data from monitoring tools to identify the root cause of the problem. This includes reviewing latency, throughput, packet loss, and error rates.

- Isolate the Problem: Identify the component causing the issue. This may involve testing network connectivity, checking server resources, and reviewing application logs.

- Implement a Solution: Implement a solution based on the identified root cause. This may involve optimizing network configuration, upgrading network infrastructure, or resolving application issues.

- Test and Verify: After implementing a solution, test and verify that the problem has been resolved. Monitor network performance to ensure the issue does not reoccur.

- Example: Troubleshooting High Latency:

- Symptom: Users report slow application performance and high latency.

- Data Gathering: Use a network monitoring tool to check latency, packet loss, and network utilization. If latency is high, examine the path data is taking using tools like traceroute.

- Analysis: Analyze the data to determine the source of the high latency. Is the latency due to network congestion, geographical distance, or a specific network device?

- Solution: Implement a solution based on the root cause. This could involve optimizing network configuration, implementing a CDN, or moving resources closer to users.
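The analysis step in the example above often comes down to finding where along the path the delay is introduced. The following sketch takes per-hop round-trip times, such as those reported by traceroute, and returns the hop with the largest increase over the previous one. The function name and the sample hops are hypothetical, and per-hop figures should be read with care, since routers may deprioritize replies to probe packets.

```python
def largest_latency_jump(hop_rtts_ms):
    """Find the hop with the biggest RTT increase over the previous hop.

    hop_rtts_ms is a list of (hop_name, rtt_ms) tuples in path order.
    Returns (hop_name, increase_ms), or (None, 0.0) for an empty path.
    """
    worst_hop, worst_delta = None, 0.0
    previous = 0.0
    for name, rtt in hop_rtts_ms:
        delta = rtt - previous
        if delta > worst_delta:
            worst_hop, worst_delta = name, delta
        previous = rtt
    return worst_hop, worst_delta


# Hypothetical traceroute summary for illustration.
path = [("gateway", 1.0), ("isp-edge", 9.0), ("transit", 95.0), ("cloud-edge", 98.0)]
print(largest_latency_jump(path))  # ('transit', 86.0)
```

A large jump at a transit hop that persists to the destination points toward distance or congestion on that segment, which suggests remedies such as a CDN, a closer region, or a dedicated connection.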

Managing Network Costs

Effective cloud network cost management is crucial for maximizing the return on investment (ROI) and preventing unexpected expenses. The dynamic nature of cloud environments, with their pay-as-you-go pricing models and variable resource consumption, demands proactive monitoring and optimization strategies. Understanding the factors that influence cloud network costs and implementing cost-saving measures are essential for financial sustainability.

Factors Influencing Cloud Network Costs

Several factors significantly impact the overall cost of network connectivity in the cloud. These elements are often interconnected and require careful consideration during the planning and implementation phases. Understanding these drivers allows for more informed decisions regarding network architecture and service selection.

- Data Transfer Costs: Data transfer charges are a significant cost component. Fees can apply to data moving into the cloud (ingress), out of the cloud (egress), and between regions or availability zones within the cloud provider’s infrastructure. Ingress is often free or inexpensive, while egress is typically billed per gigabyte and tends to dominate the data transfer bill.

- Network Services Usage: The consumption of network services, such as load balancers, virtual private networks (VPNs), content delivery networks (CDNs), and firewalls, contributes to the overall network costs. The pricing models for these services vary depending on the provider and the features utilized (e.g., number of requests, data processed, or bandwidth consumed).

- Network Hardware and Infrastructure: While cloud providers handle the underlying physical infrastructure, the choice of virtual network components, such as virtual routers and gateways, and their configurations (e.g., bandwidth allocation) directly affect costs. Selecting higher-performance or redundant components increases expenses.

- Storage Costs: The storage used to store data, particularly data frequently accessed, influences network costs. Data transfer from storage to other services or users incurs charges. Choosing appropriate storage tiers based on access frequency can optimize costs.

- Region Selection: The geographic region in which cloud resources are deployed can impact network costs. Pricing varies between regions, with some regions being more expensive than others. Data transfer between regions also incurs charges.

- Monitoring and Logging: Implementing comprehensive monitoring and logging solutions to track network performance and resource utilization is essential for cost management. While these tools themselves may incur costs, they provide valuable insights that can lead to optimization and cost savings.

- Data Processing and Compute Costs: Network traffic is often linked to data processing and compute operations. The volume of data processed, and the compute resources utilized to handle network traffic, contribute to overall costs.
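To see how the data transfer factors above combine, a simple estimator can multiply monthly volumes by per-gigabyte rates for each traffic direction. The rates in `RATES_PER_GB` are placeholders chosen for illustration, not any provider's published pricing, and the function name is likewise an assumption.

```python
# Hypothetical per-GB rates; substitute values from the provider's price list.
RATES_PER_GB = {"ingress": 0.00, "egress": 0.09, "inter_region": 0.02}


def estimate_transfer_cost(usage_gb, rates=RATES_PER_GB):
    """Return (per-category cost breakdown, total) for monthly usage in GB."""
    breakdown = {kind: gb * rates[kind] for kind, gb in usage_gb.items()}
    return breakdown, sum(breakdown.values())


usage = {"ingress": 500, "egress": 1000, "inter_region": 200}
breakdown, total = estimate_transfer_cost(usage)
print(breakdown, round(total, 2))
```

Even with these made-up rates, the breakdown makes the usual pattern visible: egress accounts for most of the total, so it is the first place to look for savings.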

Strategies for Cost Optimization in Cloud Networking

Implementing proactive cost optimization strategies is critical for controlling and reducing cloud network expenses. These strategies involve a combination of careful planning, efficient resource utilization, and continuous monitoring.

- Right-Sizing Resources: Accurately assess network bandwidth and capacity requirements. Avoid over-provisioning resources, as this leads to unnecessary costs. Regularly review and adjust resource allocations based on actual usage patterns.

- Data Transfer Optimization: Minimize data transfer costs by optimizing data movement patterns. Implement data compression techniques to reduce the size of data transferred. Leverage caching mechanisms to reduce the frequency of data retrieval from the cloud.

- Utilizing Content Delivery Networks (CDNs): Deploying CDNs can significantly reduce data egress costs, especially for content-heavy applications. CDNs cache content closer to users, reducing the need to retrieve data from the origin servers.

- Choosing Cost-Effective Services: Evaluate different network service options offered by cloud providers. Select services that best meet the application’s needs while minimizing costs. For example, use managed load balancers instead of self-managed solutions if they meet the performance requirements.

- Automating Network Management: Automate network tasks, such as scaling resources and managing network configurations. Automation reduces manual effort and can prevent human errors that might lead to inefficient resource allocation.

- Monitoring and Analyzing Network Traffic: Implement comprehensive network monitoring tools to track traffic patterns, identify bottlenecks, and detect anomalies. Analyze network traffic data to identify areas for optimization and cost reduction.

- Implementing Reserved Instances and Committed Use Discounts: Cloud providers offer various pricing discounts, such as reserved instances or committed use discounts, for long-term resource commitments. These discounts can significantly reduce the cost of network resources.

- Leveraging Data Compression and Optimization Techniques: Employ data compression techniques (e.g., gzip) to reduce the size of data transferred, minimizing egress costs. Optimize images and other media files to reduce bandwidth consumption.
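The effect of compression on transferred volume can be measured directly with Python's standard `gzip` module. The helper name and the sample payload below are illustrative; actual savings depend heavily on the data, and already-compressed media such as JPEG images or video gain little.

```python
import gzip


def compression_savings(payload):
    """Return (original_size, compressed_size, fraction_saved) for gzip."""
    compressed = gzip.compress(payload)
    saved = 1 - len(compressed) / len(payload)
    return len(payload), len(compressed), saved


# Repetitive JSON-like text compresses well; this payload is made up.
sample = b'{"status": "ok", "region": "eu-west"}\n' * 500
original, compressed, saved = compression_savings(sample)
print(f"{original} bytes -> {compressed} bytes ({saved:.0%} saved)")
```

Because egress is billed on bytes sent, the fraction saved here translates roughly into the same fraction off the egress charge for that traffic.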

Cost-Saving Tips for Network Connectivity

The following are practical tips for minimizing network connectivity expenses. These tips can be implemented across different stages of cloud network planning and operation.

- Regularly Review and Optimize Network Configurations: Periodically assess network configurations, such as firewall rules, routing tables, and security groups. Remove unnecessary rules and streamline configurations to reduce resource consumption.

- Utilize Private Network Connections: Consider using private network connections, such as Direct Connect or ExpressRoute, for high-volume data transfers. Private connections often offer lower and more predictable costs compared to public internet connections.

- Implement Network Segmentation: Segment the network to isolate critical workloads and reduce the attack surface. This can also help optimize network traffic flow and reduce data transfer costs.

- Use Auto-Scaling: Implement auto-scaling to automatically adjust resources based on demand. Auto-scaling helps to avoid over-provisioning resources during periods of low traffic.

- Monitor and Alert on Network Usage: Set up monitoring and alerting to track network usage and identify unexpected spikes in traffic or resource consumption. This allows for timely intervention to prevent cost overruns.

- Optimize Data Storage and Retrieval: Choose the appropriate storage tiers for data based on access frequency. Optimize data retrieval patterns to minimize data transfer costs.

- Negotiate with Cloud Providers: Cloud providers are often willing to negotiate pricing, especially for large-scale deployments or long-term commitments.

- Consider Data Residency Requirements: Select cloud regions that meet data residency requirements while optimizing for cost. Consider the implications of data transfer charges between regions.

- Leverage Cloud Provider Tools and Features: Utilize the cost management tools and features offered by cloud providers to track and analyze network spending.

Disaster Recovery and Business Continuity Planning

Planning for disaster recovery (DR) and business continuity (BC) is critical for ensuring the availability and resilience of cloud-based network infrastructure. This proactive approach minimizes downtime, data loss, and the associated financial and reputational damage that can result from unforeseen events. A well-designed DR/BC plan outlines strategies for restoring network functionality and critical business operations in the event of a disruption, ranging from natural disasters to human error.

Designing a Network for Disaster Recovery in the Cloud

Designing a network for disaster recovery in the cloud requires a comprehensive strategy that addresses data replication, failover mechanisms, and recovery time objectives (RTOs) and recovery point objectives (RPOs). These objectives dictate the maximum acceptable downtime and data loss, respectively, guiding the selection of appropriate DR solutions.

- Geographic Redundancy: Deploying network resources across multiple geographic regions is fundamental. This ensures that if one region experiences an outage, traffic can be automatically rerouted to a healthy region. Cloud providers offer services that facilitate this, allowing for rapid failover.

- Data Replication: Continuous data replication to a secondary region is essential. This involves replicating data across regions using services like database replication, storage replication, or object storage replication. The choice of replication method depends on the specific application requirements, with options ranging from synchronous to asynchronous replication.

- Automated Failover: Implement automated failover mechanisms to minimize downtime. This involves monitoring the health of network resources and automatically switching to backup resources in a different region when a failure is detected. Services like DNS failover and load balancers play a crucial role in this process.

- Regular Testing: Conduct regular DR testing to validate the effectiveness of the plan. This involves simulating failure scenarios and verifying that the failover mechanisms work as expected. Testing should include failover of critical applications, data recovery, and network connectivity.

- Scalability and Elasticity: Design the network to be scalable and elastic to accommodate fluctuating workloads. Cloud environments allow for the rapid scaling of resources, which is crucial for handling increased traffic during failover or recovery.
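A DR design can be sanity-checked against its RTO and RPO with simple arithmetic before any failover test is run. The model below is a deliberate simplification and an assumption of this sketch: recovery time is taken as failure detection time (health check interval times failure threshold) plus DNS time-to-live plus application warm-up, and data loss is approximated by replication lag. All function and parameter names are hypothetical.

```python
def estimated_recovery_time_s(check_interval_s, failure_threshold, dns_ttl_s, warmup_s):
    """Rough recovery time: detect the failure, wait out DNS caches, warm up."""
    detection_s = check_interval_s * failure_threshold
    return detection_s + dns_ttl_s + warmup_s


def evaluate_dr_plan(rto_s, rpo_s, replication_lag_s,
                     check_interval_s, failure_threshold, dns_ttl_s, warmup_s):
    """Compare estimated recovery time and replication lag with RTO and RPO."""
    recovery_s = estimated_recovery_time_s(
        check_interval_s, failure_threshold, dns_ttl_s, warmup_s
    )
    return {
        "estimated_recovery_s": recovery_s,
        "meets_rto": recovery_s <= rto_s,
        "meets_rpo": replication_lag_s <= rpo_s,
    }


print(evaluate_dr_plan(rto_s=300, rpo_s=60, replication_lag_s=5,
                       check_interval_s=30, failure_threshold=3,
                       dns_ttl_s=60, warmup_s=120))
```

With these example values the estimate is 270 seconds, just inside a 5-minute RTO. Raising the DNS TTL to 300 seconds would push the estimate to 510 seconds and miss the target, which illustrates why short TTLs are common on failover records.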

The Role of Redundancy and Failover Mechanisms

Redundancy and failover mechanisms are the cornerstones of a robust DR strategy. They provide the means to maintain network availability and minimize the impact of disruptions.

- Redundancy: Implementing redundant components across the network, such as redundant firewalls, load balancers, and network connections, mitigates single points of failure. Redundancy ensures that if one component fails, another is available to take over the workload.

- Failover: Failover mechanisms automatically redirect traffic to backup resources in the event of a failure. This can involve DNS failover, which directs traffic to a different IP address, or load balancer failover, which shifts traffic to a different instance of an application.

- Health Checks: Regular health checks are essential for detecting failures and triggering failover. These checks monitor the status of network resources and applications, and when a failure is detected, the failover mechanism is initiated.

- Recovery Time Objective (RTO): RTO defines the maximum acceptable downtime. This drives the selection of appropriate failover mechanisms and recovery procedures. Lower RTOs require more sophisticated and often more costly DR solutions.

- Recovery Point Objective (RPO): RPO defines the maximum acceptable data loss. This influences the choice of data replication strategies. Lower RPOs require more frequent data replication, which can impact performance and cost.
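The interaction between health checks and failover described above can be sketched as a small state machine: the primary is considered failed only after several consecutive failed checks, which avoids failing over on a single dropped probe. The `FailoverController` class and region names are hypothetical, and the sketch intentionally does not fail back automatically, since failback is often a manual, verified step.

```python
class FailoverController:
    """Switch to the secondary after N consecutive failed health checks."""

    def __init__(self, primary, secondary, failure_threshold=3):
        self.primary = primary
        self.secondary = secondary
        self.failure_threshold = failure_threshold
        self.active = primary
        self.consecutive_failures = 0

    def record_health_check(self, primary_healthy):
        """Record one health check result and return the active target."""
        if primary_healthy:
            self.consecutive_failures = 0
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failure_threshold:
                self.active = self.secondary  # no automatic failback
        return self.active


controller = FailoverController("region-a", "region-b", failure_threshold=3)
results = [False, False, True, False, False, False]
print([controller.record_health_check(ok) for ok in results])
```

In this run the single successful check resets the counter, so traffic moves to `region-b` only after the final three consecutive failures. A lower threshold shortens detection time and helps the RTO, at the cost of more false failovers.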

Cloud-Based Disaster Recovery Setup Diagram

The following diagram illustrates a typical cloud-based disaster recovery setup. The diagram depicts two geographically separate regions, Region A (Primary) and Region B (Secondary/DR). This setup leverages cloud services to ensure business continuity.

Diagram Description: The diagram shows a two-region cloud setup for disaster recovery. Region A (Primary) hosts the primary application and data. Region B (Secondary/DR) acts as the disaster recovery site.

Region A (Primary):

- User Access: Users access the application through the internet, utilizing a DNS service to direct traffic.

- Load Balancer: A load balancer distributes traffic across multiple application instances.

- Application Tier: The application instances are hosted within a virtual network, communicating with the database.

- Database: The primary database stores the application’s data. Data is replicated to Region B.

- Network Connectivity: Uses a virtual private network (VPN) or Direct Connect for secure access and connectivity.

Region B (Secondary/DR):

- Application Tier (Replicated): Replicated application instances are pre-configured and ready to take over.

- Database (Replicated): A replicated database instance receives data updates from Region A, ensuring data consistency.

- Load Balancer (Pre-configured): A load balancer is pre-configured, ready to accept traffic.

- Network Connectivity: Has a similar setup as Region A, with VPN or Direct Connect for secure access.

Failover Mechanism:

- DNS Failover: If Region A fails, DNS automatically redirects traffic to the load balancer in Region B.

- Automated Failover of Application and Database: The load balancer in Region B detects the failure in Region A and automatically directs traffic to the replicated application instances. The replicated database in Region B is available to serve the traffic.

Data Replication:

- Continuous data replication from Region A to Region B ensures that data is synchronized and data loss is minimized.

This setup utilizes redundancy at multiple levels, from the application and database instances to the network infrastructure, providing a resilient and reliable architecture.

Monitoring and Troubleshooting Network Connectivity

Effective monitoring and troubleshooting are critical for maintaining optimal cloud network performance and ensuring application availability. Proactive monitoring allows for early detection of issues, enabling timely resolution and minimizing downtime. Comprehensive troubleshooting methodologies help identify the root cause of network problems, leading to effective solutions and preventing recurrence.

Key Metrics for Cloud Network Health

Monitoring key metrics provides insights into network performance, security, and overall health. These metrics should be tracked regularly to establish baselines, identify trends, and trigger alerts when thresholds are exceeded.

- Latency: Latency measures the time it takes for a data packet to travel from the source to the destination. High latency can degrade application performance. It is typically measured in milliseconds (ms).

- Packet Loss: Packet loss refers to the percentage of data packets that fail to reach their destination. High packet loss indicates network congestion or other issues. It is expressed as a percentage.

- Throughput: Throughput measures the amount of data successfully transferred over a network connection in a given period. Low throughput can indicate bandwidth limitations or network bottlenecks. It is usually measured in bits per second (bps) or bytes per second (Bps).

- Jitter: Jitter refers to the variation in the delay of packet delivery. High jitter can impact real-time applications like voice and video. It is also measured in milliseconds (ms).

- Bandwidth Utilization: Bandwidth utilization tracks the percentage of available bandwidth being used. Monitoring bandwidth utilization helps identify potential bottlenecks and capacity planning needs. It is expressed as a percentage.

- Error Rates: Error rates track the frequency of network errors, such as CRC errors or interface errors. High error rates often indicate faulty hardware or configuration issues.

- Connection Counts: Connection counts monitor the number of active connections, both inbound and outbound. Unusual spikes or drops in connection counts can indicate denial-of-service (DoS) attacks or application issues.

- CPU and Memory Utilization (on Network Devices): Monitoring the CPU and memory utilization of network devices, such as virtual routers and firewalls, helps identify performance bottlenecks and resource constraints.

- Security Metrics: Monitoring security metrics, such as the number of blocked connections, intrusion attempts, and suspicious activity, is crucial for maintaining a secure network environment.
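Several of the metrics above can be derived from a series of probe results. The sketch below computes packet loss, average latency, and a simple jitter figure from round-trip times, with lost probes recorded as `None`. The function name is hypothetical, and jitter is calculated here as the mean absolute difference between consecutive round-trip times, which is a simplification of the smoothed estimators used by real-time protocols.

```python
from statistics import mean


def summarize_probes(rtts_ms):
    """Summarize probe results; rtts_ms holds RTTs in ms, None for a lost probe."""
    if not rtts_ms:
        raise ValueError("at least one probe result is required")
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    avg_latency = mean(received) if received else None
    # Jitter: average change in RTT between consecutive received probes.
    deltas = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = mean(deltas) if deltas else 0.0
    return {
        "loss_pct": loss_pct,
        "avg_latency_ms": avg_latency,
        "jitter_ms": jitter,
    }


# Hypothetical probe results: five probes, one lost.
print(summarize_probes([20, 22, None, 30, 28]))
```

For the sample series this yields 20% loss, 25 ms average latency, and 4 ms jitter. Comparing such figures against a recorded baseline is what allows alert thresholds to be set meaningfully.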

Common Network Connectivity Issues and Solutions

Cloud network connectivity issues can arise from various sources, requiring a systematic approach to diagnosis and resolution. Understanding the root causes of these issues and implementing appropriate solutions is essential.

- Latency Issues: High latency can be caused by distance, network congestion, or routing problems.

- Solution: Implement content delivery networks (CDNs) to cache content closer to users, optimize routing paths, and increase bandwidth. Consider upgrading network infrastructure if necessary.

- Packet Loss: Packet loss is often caused by network congestion, faulty hardware, or misconfigured firewalls.

- Solution: Increase bandwidth, implement Quality of Service (QoS) to prioritize critical traffic, and investigate hardware failures. Review firewall rules to ensure they are not inadvertently dropping packets.

- Throughput Bottlenecks: Low throughput can be due to bandwidth limitations, network congestion, or misconfigured network devices.

- Solution: Increase bandwidth, optimize network device configurations, and identify and resolve congestion points. Use network performance monitoring tools to identify bottlenecks.

- DNS Resolution Problems: DNS resolution issues can prevent users from accessing cloud resources.

- Solution: Verify DNS server configurations, ensure DNS records are correctly configured, and check for DNS server outages. Use a different DNS provider if needed.

- Routing Issues: Routing problems can prevent traffic from reaching its destination.

- Solution: Review routing tables, verify network configurations, and ensure routing protocols are functioning correctly. Use traceroute or similar tools to diagnose routing paths.

- Firewall Issues: Firewall misconfigurations can block legitimate traffic.

- Solution: Review firewall rules to ensure they allow necessary traffic, verify security group configurations, and check for any blocked ports or protocols.

- VPN Connection Problems: VPN connection failures can disrupt secure access to cloud resources.

- Solution: Verify VPN configurations, check for connectivity issues at both ends of the VPN tunnel, and ensure the VPN service is operational. Examine logs for authentication failures or other errors.

- Network Device Failures: Hardware failures can disrupt network connectivity.

- Solution: Monitor network device health, implement redundancy, and promptly replace faulty hardware. Implement proactive maintenance to prevent failures.

Troubleshooting Checklist for Cloud Network Problems

A structured troubleshooting checklist can help systematically diagnose and resolve cloud network connectivity issues. Following a methodical approach ensures a comprehensive analysis and minimizes the risk of overlooking critical factors.

- Verify Basic Connectivity:

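- Ping the target resource to check for basic connectivity. A failed ping is not conclusive on its own, because firewalls and security groups often block ICMP.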

- Use `traceroute` (Linux/macOS) or `tracert` (Windows) to identify the network path and any potential bottlenecks.

- Check DNS Resolution:

- Use `nslookup` or `dig` to verify that DNS is resolving the correct IP address for the target resource.

- Check for DNS server outages or configuration errors.

- Review Network Configurations:

- Verify network configurations, including IP addresses, subnet masks, and gateway settings.

- Check for misconfigured firewall rules or security group settings that might be blocking traffic.

- Examine Network Device Logs:

- Review logs from routers, firewalls, and other network devices for error messages or suspicious activity.

- Analyze logs for any unusual events or patterns that might indicate a problem.

- Monitor Network Performance Metrics:

- Check latency, packet loss, throughput, and other relevant metrics using network monitoring tools.

- Identify any performance bottlenecks or unusual trends.

- Test VPN and Direct Connect Connections:

- Verify the status of VPN and Direct Connect connections.

- Check for authentication failures, connectivity issues, and bandwidth limitations.

- Isolate the Problem:

- Isolate the problem by testing from different locations or using different network paths.

- Identify whether the issue is local to the user, within the cloud provider’s network, or somewhere in between.

- Consult with the Cloud Provider:

- Contact the cloud provider’s support team for assistance if the issue cannot be resolved independently.

- Provide detailed information about the problem, including logs, configurations, and troubleshooting steps taken.
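The first two checklist steps (DNS resolution, then basic reachability) can be scripted. The sketch below uses only Python's standard `socket` module and substitutes a TCP handshake for ping, since ICMP is frequently filtered. The function names and return labels are hypothetical, and it assumes the target exposes a TCP port.

```python
import socket
import time


def resolve(hostname):
    """Return the sorted, de-duplicated IP addresses for a hostname."""
    try:
        infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return []  # resolution failed
    return sorted({info[4][0] for info in infos})


def tcp_check(address, port, timeout_s=3.0):
    """Attempt a TCP handshake; return (reachable, elapsed_ms)."""
    start = time.perf_counter()
    try:
        with socket.create_connection((address, port), timeout=timeout_s):
            reachable = True
    except OSError:
        reachable = False  # refused, timed out, or unroutable
    return reachable, (time.perf_counter() - start) * 1000.0


def diagnose(hostname, port):
    """Classify the failure stage: DNS, TCP reachability, or none."""
    addresses = resolve(hostname)
    if not addresses:
        return "dns_failure"
    # Succeed if any resolved address accepts the connection.
    for address in addresses:
        reachable, _ = tcp_check(address, port)
        if reachable:
            return "ok"
    return "tcp_unreachable"
```

Calling `diagnose("app.example.internal", 443)` with a placeholder hostname like this one would return `"dns_failure"`, `"tcp_unreachable"`, or `"ok"`. A `"tcp_unreachable"` result points toward the firewall, security group, and routing steps of the checklist rather than DNS.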

Future Trends in Cloud Networking

The landscape of cloud networking is constantly evolving, driven by advancements in technology and the increasing demands of modern applications. Several key trends are poised to reshape how organizations connect to and operate within the cloud, leading to greater agility, efficiency, and security. These trends involve the evolution of existing technologies and the emergence of new paradigms that will define the future of cloud connectivity.

Software-Defined Networking (SDN) in Cloud Environments

Software-defined networking (SDN) is becoming increasingly important in cloud environments. It separates the control plane from the data plane, enabling centralized management and automation of network infrastructure. This architectural shift provides greater flexibility and control over network resources, optimizing performance and security.

SDN’s impact manifests in several key areas:

- Automated Network Provisioning: SDN allows for the automated provisioning of network resources, such as virtual networks and firewalls, which significantly reduces the time required to deploy new applications and services. This automation is particularly valuable in dynamic cloud environments where resources need to be scaled up or down rapidly based on demand.

- Centralized Network Management: SDN enables centralized management of the entire network infrastructure from a single point of control. This simplifies network administration, making it easier to monitor and troubleshoot network issues. This centralized control facilitates the enforcement of consistent security policies across the network.

- Network Programmability: SDN facilitates network programmability, allowing network administrators to write custom applications to control and manage network behavior. This enables the creation of highly customized network solutions that meet specific application requirements.

- Enhanced Security: SDN can enhance network security through features such as micro-segmentation, which isolates workloads and limits the blast radius of security breaches. By creating granular security policies, SDN reduces the attack surface and improves overall security posture.

For example, a large financial institution might leverage SDN to dynamically allocate network bandwidth to its trading applications during peak hours, ensuring optimal performance and minimizing latency. The ability to dynamically adjust network resources based on real-time demand is a significant advantage of SDN.
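The control-plane and data-plane split, along with default-deny micro-segmentation, can be illustrated with a toy model. This is a conceptual sketch only: `Controller`, `Switch`, and `Rule` are hypothetical classes written for illustration and do not correspond to any real SDN controller API or protocol such as OpenFlow.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    """Allow traffic from one segment to another on a single port."""
    src_segment: str
    dst_segment: str
    port: int


@dataclass
class Switch:
    """Data plane: forwards only what its installed rules allow."""
    name: str
    rules: set = field(default_factory=set)

    def forward(self, src_segment, dst_segment, port):
        # Default deny: traffic passes only if a matching rule is installed.
        return Rule(src_segment, dst_segment, port) in self.rules


class Controller:
    """Control plane: holds the policy and pushes it to every switch."""

    def __init__(self):
        self.switches = []
        self.policy = set()

    def register(self, switch):
        self.switches.append(switch)
        switch.rules = set(self.policy)

    def allow(self, src_segment, dst_segment, port):
        self.policy.add(Rule(src_segment, dst_segment, port))
        self._push()

    def revoke(self, src_segment, dst_segment, port):
        self.policy.discard(Rule(src_segment, dst_segment, port))
        self._push()

    def _push(self):
        # One policy change reaches every switch, keeping them consistent.
        for switch in self.switches:
            switch.rules = set(self.policy)


controller = Controller()
edge_a, edge_b = Switch("edge-a"), Switch("edge-b")
controller.register(edge_a)
controller.register(edge_b)
controller.allow("web", "db", 5432)

print(edge_b.forward("web", "db", 5432))  # allowed by the pushed rule
print(edge_b.forward("db", "web", 22))    # denied: no matching rule
```

The switches never decide policy themselves. They only look up rules the controller installed, which is what makes centralized, programmable enforcement possible.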

5G and Edge Computing’s Influence on Cloud Networking

The convergence of 5G and edge computing is creating new opportunities and challenges for cloud networking. 5G’s high bandwidth and low latency capabilities are essential for supporting edge computing applications, which require real-time processing and analysis of data at the network’s edge.

The interplay between 5G, edge computing, and cloud networking will be characterized by:

- Enhanced Connectivity for Edge Devices: 5G provides the high-speed, low-latency connectivity required for edge devices to communicate with cloud services. This enables real-time data processing and analysis at the edge, reducing the need to transmit large amounts of data to the cloud.

- Distributed Cloud Architectures: Edge computing extends cloud infrastructure to the network edge, enabling organizations to deploy applications and services closer to end-users and data sources. This distributed architecture improves application performance and reduces latency.

- New Application Opportunities: The combination of 5G and edge computing unlocks new application opportunities in areas such as autonomous vehicles, industrial automation, and augmented reality. These applications rely on real-time data processing and low-latency connectivity.

- Network Optimization for Edge Workloads: Cloud networking technologies must adapt to optimize performance for edge workloads. This includes the development of new network protocols and architectures that can handle the unique requirements of edge computing.

Consider a smart factory deploying sensors and AI-powered analytics at the edge. 5G connectivity would enable real-time data transmission from the sensors to edge computing servers for immediate analysis and control of manufacturing processes. This avoids the round-trip latency of sending data to a central cloud, enabling quicker responses to potential issues and improving operational efficiency.
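One way to picture the edge pattern is a node that reacts to raw readings locally and forwards only a compact summary upstream. The sketch below is illustrative: the function name, the threshold, and the temperature readings are all hypothetical.

```python
from statistics import mean


def summarize_at_edge(readings, alarm_threshold):
    """Reduce a batch of raw sensor readings to a small summary.

    Readings above the threshold are kept verbatim so the edge node can
    act on them at once; everything else is collapsed into aggregates.
    """
    if not readings:
        return {"count": 0, "mean": None, "max": None, "alarms": []}
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alarms": [r for r in readings if r > alarm_threshold],
    }


# Hypothetical batch of temperature readings from one machine.
batch = [70.0, 71.0, 69.0, 95.0]
summary = summarize_at_edge(batch, alarm_threshold=90.0)
print(summary)
```

Only the summary needs to cross the wide-area link to the central cloud, while any alarm can be handled at the edge without waiting on that round trip.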

Conclusion

In conclusion, successful cloud adoption hinges on a well-defined network strategy. By meticulously planning network connectivity, from understanding foundational concepts to implementing advanced security and optimization techniques, organizations can harness the full potential of cloud services. This comprehensive guide provides the necessary framework for navigating the complexities of cloud networking, ensuring secure, efficient, and cost-effective connectivity that supports business objectives and future scalability.

Frequently Asked Questions

What is the difference between a VPN and Direct Connect?

A VPN (Virtual Private Network) establishes an encrypted tunnel over the public internet, while a dedicated connection such as AWS Direct Connect provides a private network link directly to the cloud provider, typically offering higher bandwidth and lower, more consistent latency.

How do I determine my bandwidth needs for cloud connectivity?

Bandwidth requirements depend on factors such as the number of users, application types, data transfer volume, and peak usage times. Analyzing these factors is essential to determine the necessary bandwidth capacity.
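As a rough illustration of how those factors combine, the sketch below adds interactive user traffic to scheduled bulk transfers and applies a headroom margin for peaks. The function name, the 30% default headroom, and all input figures are assumptions chosen for the example, not recommendations.

```python
def required_bandwidth_mbps(concurrent_users, per_user_mbps,
                            bulk_gb_per_day, transfer_window_hours,
                            headroom=0.3):
    """Estimate link capacity in Mbps (decimal units: 1 GB = 8000 megabits)."""
    interactive = concurrent_users * per_user_mbps
    # Spread the daily bulk volume over the window in which it must finish.
    bulk = bulk_gb_per_day * 8000 / (transfer_window_hours * 3600)
    return (interactive + bulk) * (1 + headroom)


# Hypothetical inputs: 200 peak users at 0.5 Mbps each, plus 450 GB of
# backups that must complete within a 10-hour window.
estimate = required_bandwidth_mbps(200, 0.5, 450, 10)
print(round(estimate, 1))
```

Here the interactive and bulk components each come to 100 Mbps, and the margin raises the estimate to about 260 Mbps. Measured traffic from an existing environment should replace these guesses wherever it is available.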

What security measures should I implement for cloud network connectivity?

Essential security measures include firewalls, intrusion detection systems, encryption (both in transit and at rest), access control lists (ACLs), and regular security audits.

How can I optimize network costs in the cloud?

Cost optimization strategies include choosing the right instance sizes, utilizing reserved instances, implementing auto-scaling, leveraging content delivery networks (CDNs), and monitoring network traffic for inefficiencies such as unnecessary cross-region or internet-bound data transfer.