Serverless computing offers strong scalability and efficiency, but realizing those benefits depends on effective concurrency management. This guide explains how to manage and monitor serverless concurrency, and gives a structured approach to optimizing performance and resource utilization in serverless architectures. Benefits such as automatic scaling and pay-per-use pricing go furthest when concurrency is managed well, leading to cost-effective and resilient applications.

The core of this discussion will explore the fundamentals of serverless concurrency, including understanding platform-specific limits, identifying and mitigating bottlenecks, and implementing effective scaling strategies. We will examine the critical role of monitoring in maintaining optimal performance, focusing on key metrics and visualization techniques. Furthermore, we will dissect various techniques for optimizing code, leveraging queues, and implementing robust error handling, all essential for building reliable and scalable serverless applications.

Finally, we’ll explore security considerations and highlight the tools and technologies available to assist in managing and monitoring serverless concurrency effectively.

Understanding Serverless Concurrency Fundamentals

Serverless concurrency is a fundamental aspect of modern cloud computing, allowing applications to handle multiple requests simultaneously without the need to manage underlying infrastructure. This approach offers significant advantages in terms of scalability, cost-efficiency, and development agility. The following sections will delve into the core concepts of serverless concurrency, highlighting its benefits, providing illustrative examples, and contrasting it with traditional concurrency models.

Serverless Concurrency Explained

Serverless concurrency refers to the ability of a serverless application to execute multiple function invocations concurrently in response to incoming events or requests. This concurrency is managed by the serverless provider, which automatically provisions and scales the necessary resources to handle the workload. The developer focuses on writing the code and configuring the function’s triggers and execution environment, without needing to manage servers, operating systems, or scaling infrastructure.

The provider takes responsibility for the underlying infrastructure, including the allocation of compute resources, memory management, and request routing. This allows applications to scale automatically based on demand.
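A common rule of thumb for how much concurrency a workload needs is to multiply the request rate by the average function duration. The sketch below assumes steady traffic. The function name and the sample numbers are illustrative and do not come from any provider's API.

```python
import math


def estimate_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Estimate concurrent executions as arrival rate x average duration."""
    if requests_per_second < 0 or avg_duration_seconds < 0:
        raise ValueError("rate and duration must be non-negative")
    return math.ceil(requests_per_second * avg_duration_seconds)


# Illustrative numbers: 200 requests/s, each taking 0.5 s on average.
print(estimate_concurrency(200, 0.5))
```

The same estimate shows why shortening function duration lowers the concurrency a workload consumes at a given request rate.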

Benefits of Serverless Concurrency

Serverless concurrency offers several advantages, including:

- Automatic Scaling: Serverless platforms automatically scale function instances based on incoming traffic. This ensures that applications can handle peak loads without manual intervention, eliminating the need for capacity planning and manual scaling configurations. The platform monitors incoming requests and dynamically allocates more function instances as needed. For example, if a web application experiences a sudden surge in user activity during a promotional event, the serverless platform will automatically spin up additional function instances to handle the increased load.

- Cost Optimization: With serverless concurrency, you only pay for the compute time used by your functions. This pay-per-use model contrasts with traditional server-based architectures, where you often pay for idle resources. The cost is determined by the number of function invocations, the execution time, and the memory allocated. This model enables cost savings, especially for applications with variable workloads. For instance, an image processing service that only processes images when new ones are uploaded to a storage bucket would only incur costs when images are uploaded.

- Improved Developer Productivity: Developers can focus on writing code and business logic instead of managing infrastructure. Serverless platforms handle the complexities of scaling, security, and operational tasks. This allows developers to iterate quickly, deploy updates rapidly, and focus on delivering value to the users. Tools and services provided by serverless platforms, such as automated deployments and monitoring dashboards, further streamline the development process.

- Increased Agility: Serverless concurrency promotes agility by allowing developers to quickly deploy and update applications. The ability to independently deploy and scale individual functions allows for rapid experimentation and faster time-to-market. This agility is especially important for businesses that need to respond quickly to market changes or customer feedback.
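The pay-per-use model described above can be approximated with a small calculation. This is a minimal sketch that assumes billing has a per-request charge and a compute charge measured in GB-seconds. The prices passed in are placeholders, not real provider rates, and free tiers and rounding rules are ignored.

```python
def estimate_monthly_cost(
    invocations: int,
    avg_duration_seconds: float,
    memory_mb: int,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Pay-per-use estimate: request charge plus compute (GB-second) charge."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    gb_seconds = invocations * avg_duration_seconds * (memory_mb / 1024)
    return request_cost + gb_seconds * price_per_gb_second


# Placeholder prices for illustration only; check your provider's price list.
cost = estimate_monthly_cost(
    invocations=2_000_000,
    avg_duration_seconds=0.5,
    memory_mb=512,
    price_per_million_requests=0.20,
    price_per_gb_second=0.00002,
)
print(round(cost, 2))
```

With zero invocations the estimate is zero, which is the key difference from paying for idle provisioned servers.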

Scenarios Where Serverless Concurrency is Beneficial

Serverless concurrency is particularly well-suited for a variety of use cases, including:

- Web Applications: Serverless functions can handle API requests, serve web content, and process user interactions. For example, a serverless application can process user authentication, retrieve data from a database, and render web pages. The platform automatically scales to handle traffic spikes, ensuring a responsive user experience.

- Data Processing: Serverless functions can be used to process large datasets, transform data, and run batch jobs. For instance, a serverless function can be triggered when a new file is uploaded to a storage bucket, automatically processing the data and storing the results in a database.

- Event-Driven Architectures: Serverless functions are ideal for building event-driven systems, where functions are triggered by events such as messages in a queue, updates in a database, or changes in a storage bucket. This allows for building highly scalable and responsive applications.

- IoT Applications: Serverless functions can process data from IoT devices, such as sensors and actuators. For example, a serverless function can be triggered when a sensor reading exceeds a threshold, sending an alert or taking corrective action.

Serverless Concurrency vs. Traditional Concurrency

Serverless concurrency differs significantly from traditional concurrency models, which typically involve managing servers, operating systems, and application containers. The core differences include:

- Infrastructure Management: In traditional concurrency models, developers are responsible for provisioning, configuring, and managing the underlying infrastructure, including servers, operating systems, and networking. Serverless platforms abstract away infrastructure management, allowing developers to focus solely on code.

- Scaling: Traditional concurrency often requires manual scaling or the use of auto-scaling groups, which can be complex to configure and manage. Serverless platforms automatically scale function instances based on demand, without any manual configuration.

- Cost Model: Traditional concurrency models typically involve paying for provisioned resources, regardless of usage. Serverless concurrency uses a pay-per-use model, where you only pay for the compute time used by your functions.

- Development Workflow: Traditional concurrency often involves more complex deployment and management processes. Serverless platforms simplify the development workflow by providing automated deployments, monitoring, and logging tools.

- Resource Allocation: In traditional concurrency, resources (CPU, memory) are typically allocated at the server level, potentially leading to underutilization if the application’s workload is uneven. Serverless platforms allocate resources at the function level, optimizing resource utilization and allowing for fine-grained control over resource allocation.

Identifying Concurrency Bottlenecks in Serverless Applications

Serverless applications, by their nature, are designed to scale automatically. However, several factors can impede concurrency, leading to performance degradation and increased latency. Understanding these bottlenecks is crucial for building resilient and efficient serverless architectures. This section delves into the common impediments to concurrency in serverless environments, along with practical methods for their identification and diagnosis.

Common Bottlenecks Limiting Serverless Concurrency

Serverless applications, while highly scalable, are not immune to concurrency limitations. Several common bottlenecks can restrict the number of concurrent executions, impacting overall performance.

- Function Resource Limits: Each serverless function has resource limits, including memory, CPU, and execution time. Hitting these limits causes invocations to fail or time out, and the resulting errors and retries occupy concurrency that healthy requests could have used. For example, a function configured with 128 MB of memory that frequently reaches that limit will see invocations terminated, which shows up as rising error rates and retries under load.

- Platform-Level Concurrency Limits: Cloud providers impose concurrency limits at the account or region level. These limits restrict the total number of concurrent function invocations allowed. For instance, AWS Lambda has a default concurrency limit that can be adjusted. Exceeding these limits results in throttling, delaying function execution.

- Database Connection Limits: Serverless functions often interact with databases. Databases have connection limits, and if a function rapidly creates connections without releasing them, it can exhaust the available connections, blocking subsequent requests. For example, a function might open a new database connection for each request, leading to connection pool exhaustion under high load.

- External Service Rate Limits: Serverless functions may call external services or APIs. These services often have rate limits that restrict the number of requests allowed within a specific timeframe. If a function exceeds these rate limits, it will be throttled, impacting its concurrency.

- Code-Level Issues: Inefficient code can also limit concurrency. For instance, blocking operations, such as synchronous network calls or CPU-bound tasks, can tie up function resources, preventing other requests from being processed concurrently. This includes inefficient looping constructs and excessive data processing.

- Dependencies and Package Size: Large function packages increase cold start time (the time a function takes to initialize), which slows how quickly new instances can absorb load. This delay can create bottlenecks, especially when traffic rises quickly and many new instances must start at once. Dependencies that are not optimized for serverless can add further latency.
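The database connection bottleneck above is often reduced by opening the connection once per function instance and reusing it across invocations. The sketch below uses the standard-library `sqlite3` module as a stand-in for a real database driver. The `handler(event, context)` signature and the helper name are assumptions for illustration.

```python
import sqlite3

# Created once per execution environment and reused across warm invocations.
_connection = None


def get_connection() -> sqlite3.Connection:
    """Return the cached connection, opening it only on first use."""
    global _connection
    if _connection is None:
        # sqlite3 stands in for a real database driver in this sketch.
        _connection = sqlite3.connect(":memory:")
    return _connection


def handler(event, context):
    """Hypothetical function entry point; reuses the connection on each call."""
    conn = get_connection()
    row = conn.execute("SELECT 1").fetchone()
    return {"ok": row[0] == 1}
```

Reuse caps each instance at one connection, but the total still grows with the number of concurrent instances, so a connection pooler or proxy in front of the database may still be needed at high concurrency.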

Methods for Recognizing Concurrency Issues in Real-Time Serverless Applications

Identifying concurrency issues in real-time serverless applications requires a proactive approach. Several methods and monitoring strategies can help pinpoint performance bottlenecks.

- Monitoring Function Metrics: Cloud providers offer comprehensive monitoring tools that track critical function metrics, such as invocation count, error rate, latency, and memory utilization. Analyzing these metrics provides insights into concurrency issues. For example, a sudden spike in error rates or increased latency during periods of high traffic might indicate throttling due to resource limits.

- Observing Throttling Errors: Serverless platforms often provide error messages that indicate throttling. Monitoring these errors is crucial for detecting concurrency issues. Frequent “TooManyRequestsException” errors from AWS Lambda, for instance, signal that the function is exceeding concurrency limits.

- Analyzing Request Patterns: Examining the patterns of incoming requests can reveal concurrency bottlenecks. A sudden increase in requests, followed by increased latency or errors, suggests potential concurrency limitations. Analyzing request logs and tracing can help identify which functions are experiencing issues.

- Using Distributed Tracing: Distributed tracing tools allow you to trace requests across multiple functions and services, providing a comprehensive view of performance bottlenecks. By tracing the flow of requests, you can pinpoint the functions and external services that are causing delays or throttling.

- Load Testing: Simulating realistic traffic loads through load testing can identify concurrency limitations. By gradually increasing the number of concurrent requests, you can observe the function’s performance under stress and identify bottlenecks.

- Analyzing Database Performance: Monitoring database metrics, such as connection usage, query execution times, and CPU utilization, can reveal concurrency issues. If database connection limits are being reached, or queries are taking too long, it may indicate a bottleneck in the database interaction.
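One way to act on these signals is to compute a throttle ratio per time window and flag the windows that exceed a threshold. The sketch below works on plain data points rather than a monitoring API. The `Sample` type, the 1% default threshold, and the assumption that throttled requests are not counted as invocations are all illustrative.

```python
from typing import Iterable, List, NamedTuple


class Sample(NamedTuple):
    minute: str
    invocations: int  # requests that actually ran
    throttles: int    # requests rejected by the platform


def throttled_minutes(samples: Iterable[Sample], max_ratio: float = 0.01) -> List[str]:
    """Return the minutes whose throttle ratio exceeds max_ratio."""
    flagged = []
    for s in samples:
        attempts = s.invocations + s.throttles
        if attempts and s.throttles / attempts > max_ratio:
            flagged.append(s.minute)
    return flagged


data = [
    Sample("12:00", 1000, 0),
    Sample("12:01", 4000, 250),  # traffic spike with throttling
    Sample("12:02", 995, 5),
]
print(throttled_minutes(data))
```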

Checklist for Diagnosing Concurrency Problems in Serverless Functions

Diagnosing concurrency problems in serverless functions requires a systematic approach. This checklist provides a structured method for identifying and resolving concurrency issues.

- Monitor Function Metrics:
  - Track invocation count, error rate, latency, and memory utilization.
  - Establish baseline metrics for normal operation.
  - Set up alerts for metric thresholds.

- Review Throttling Errors:
  - Check logs for throttling errors from the serverless platform.
  - Analyze the frequency and severity of throttling errors.
  - Investigate the root cause of the throttling.

- Examine Request Patterns:
  - Analyze request logs for unusual spikes or patterns.
  - Correlate request patterns with performance issues.
  - Identify functions experiencing high load.

- Analyze Distributed Tracing Data:
  - Use distributed tracing to visualize request flows.
  - Identify functions and services contributing to latency.
  - Pinpoint slow operations and bottlenecks.

- Evaluate Resource Limits:
  - Verify function resource configurations (memory, CPU, execution time).
  - Check for platform-level concurrency limits.
  - Adjust resource limits as needed.

- Assess Database Connection Management:
  - Monitor database connection usage.
  - Ensure connections are properly opened and closed.
  - Optimize database queries for performance.

- Check External Service Interactions:
  - Verify external service rate limits.
  - Implement retry mechanisms for transient errors.
  - Optimize API calls for efficiency.

- Review Code for Inefficiencies:
  - Identify blocking operations (synchronous network calls).
  - Optimize code for concurrency.
  - Reduce package size to minimize cold start times.

- Perform Load Testing:
  - Simulate realistic traffic loads.
  - Monitor performance under stress.
  - Identify concurrency limits.
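The checklist item on retrying transient errors can be sketched as exponential backoff with jitter. This is a minimal example, not a provider SDK feature. `TransientError`, `call_with_retries`, and the default delays are hypothetical names and values chosen for illustration.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for errors worth retrying (e.g. throttling, timeouts)."""


def call_with_retries(operation, max_attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call operation(), retrying TransientError with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            # Full jitter: wait a random time up to base_delay * 2^(attempt - 1).
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
```

The random jitter spreads retries out so that many throttled callers do not all retry at the same instant and trigger another round of throttling.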

Serverless Platform Concurrency Limits and Quotas

Serverless platforms, while offering scalability benefits, impose concurrency limits and quotas to manage resource allocation, prevent abuse, and ensure fair usage across all users. These limits, often varying based on the platform and the specific service used, directly impact an application’s ability to handle concurrent requests and can become a critical bottleneck if not properly understood and managed. Exceeding these limits can lead to request throttling, increased latency, and ultimately, application downtime.

Default Concurrency Limits by Platform

Each serverless platform enforces its own set of default concurrency limits, which are often tiered based on the account type, region, and the specific service being used. These limits are designed to provide a balance between allowing sufficient capacity for most applications and preventing resource exhaustion.

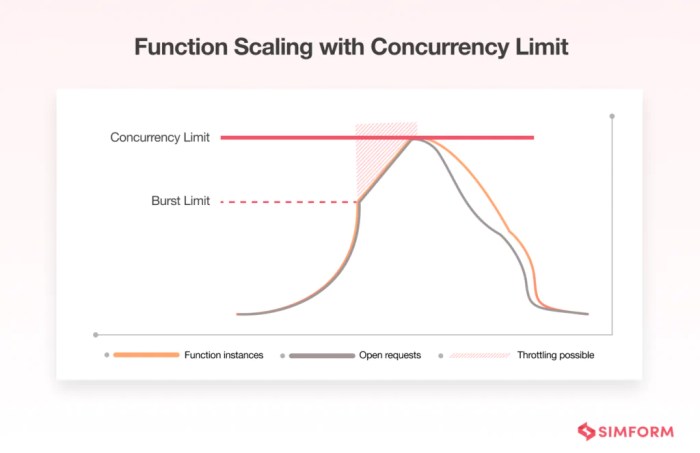

- AWS Lambda: AWS Lambda employs a combination of concurrency limits. The most fundamental is the account-level concurrency limit, which represents the total number of concurrent function executions allowed across all Lambda functions within an AWS account in a given region. The default is typically 1,000 concurrent executions per region (new accounts may start lower, with the quota raised as usage grows), and higher limits can be requested.

There’s also a function-level concurrency setting, allowing users to reserve a specific amount of concurrency for a particular function, thus isolating it from the broader account-level limits and preventing it from being starved of resources by other functions. This reservation feature is particularly useful for mission-critical functions or those with predictable traffic patterns. AWS also applies burst concurrency limits, which determine the rate at which new function instances can be created.

- Azure Functions: Azure Functions offers several hosting plans, including a consumption plan and a dedicated plan. For the consumption plan, concurrency is managed dynamically, scaling up and down based on demand. The default concurrency is relatively high, but it’s subject to the overall resource availability in the Azure region. In the dedicated plan, the concurrency is determined by the compute resources provisioned (e.g., the number of virtual machines).

Azure Functions also has limits related to the number of function instances that can be created and the duration of function executions.

- Google Cloud Functions: Google Cloud Functions manages concurrency dynamically. By default, Google Cloud Functions scales function instances automatically to handle incoming requests. The platform aims to scale up to meet the demand. However, there are implicit limits on the number of function instances that can be created, and these limits can be influenced by factors like the available resources in the Google Cloud region and the account’s usage history.

Google Cloud Functions also allows users to configure a maximum number of instances to control costs and prevent excessive scaling.

Comparison of Concurrency Limits

A direct comparison of concurrency limits across serverless providers reveals significant differences in their approaches. These variations impact the scalability, cost, and overall design of serverless applications.

| Feature | AWS Lambda | Azure Functions | Google Cloud Functions |
|---|---|---|---|
| Account-Level Concurrency (Default) | Starts at 1,000 (varies by region, and is automatically increased) | Managed dynamically, based on resource availability | Managed dynamically, based on resource availability |
| Function-Level Concurrency | Yes (reservation) | Not directly configurable in consumption plan | Yes (maximum instance configuration) |
| Scaling Behavior | Automatic, with burst and account limits | Automatic, scaling based on demand (consumption plan) | Automatic, scaling based on demand |
| Cost Model | Pay-per-use (execution time and requests) | Pay-per-use (execution time and requests) | Pay-per-use (execution time and requests) |

The AWS Lambda approach, with its account-level and function-level concurrency controls, provides the most granular control over resource allocation. Azure Functions’ consumption plan offers a simpler, more hands-off approach, but the lack of explicit concurrency controls can make it challenging to manage resource usage in high-traffic scenarios. Google Cloud Functions provides a balance between automatic scaling and control through maximum instance configuration.
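As an illustration of AWS Lambda's function-level control, the sketch below reserves concurrency for one function after checking the account's remaining unreserved pool. It expects a boto3 Lambda client to be passed in and uses its `get_account_settings` and `put_function_concurrency` calls. The helper name, the function name in the usage comment, and the 100-execution floor are assumptions to verify against current AWS documentation.

```python
MIN_UNRESERVED = 100  # assumption: Lambda keeps at least this much unreserved


def reserve_concurrency(lambda_client, function_name: str, reserved: int) -> int:
    """Reserve concurrency for one function if the account has headroom.

    lambda_client is expected to be a boto3 Lambda client.
    Returns the unreserved concurrency left after the reservation.
    """
    settings = lambda_client.get_account_settings()
    unreserved = settings["AccountLimit"]["UnreservedConcurrentExecutions"]
    if unreserved - reserved < MIN_UNRESERVED:
        raise ValueError(
            f"only {unreserved} unreserved executions available; cannot reserve {reserved}"
        )
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=reserved,
    )
    return unreserved - reserved


# Usage (requires boto3 and AWS credentials; "order-processing" is hypothetical):
#   import boto3
#   reserve_concurrency(boto3.client("lambda"), "order-processing", 200)
```

The check is a simplification: it does not account for a reservation the function may already hold, so treat it as a guard against obvious over-reservation rather than an exact calculation.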

Strategies for Requesting and Managing Increased Concurrency Limits

Managing concurrency limits effectively requires proactive strategies, including requesting increased limits and designing applications to operate within the available resources.

- Requesting Increased Limits: All serverless providers offer mechanisms for requesting increases to the default concurrency limits. The process typically involves submitting a support ticket or contacting the provider’s customer support. The approval process usually considers factors such as the account’s usage history, the projected workload, and the application’s architecture. It’s crucial to provide detailed information about the application’s needs, including the expected traffic volume, the function execution times, and the types of requests being processed.

- Optimizing Function Code: Efficient function code is essential for maximizing concurrency within the existing limits. This involves optimizing the code for performance, reducing execution times, and minimizing resource consumption. Techniques such as code profiling, caching frequently accessed data, and using efficient data structures can significantly improve function performance and reduce the load on the serverless platform.

- Implementing Throttling and Rate Limiting: In cases where the application’s traffic exceeds the available concurrency, implementing throttling and rate limiting mechanisms can help to prevent the application from being overwhelmed. This involves controlling the rate at which requests are accepted and processed, ensuring that the application can handle the incoming traffic without exceeding the concurrency limits. This can be achieved using API Gateway features, middleware, or custom logic within the functions.

- Using Queues and Event-Driven Architectures: Queues can decouple the request processing from the function execution, enabling the application to handle a large volume of requests asynchronously. This allows the functions to process requests at their own pace, even if the concurrency limits are reached. Event-driven architectures, where functions are triggered by events such as messages in a queue or changes in a database, can also help to improve concurrency by allowing functions to be triggered in response to specific events, rather than being directly invoked by users.

- Monitoring and Alerting: Continuous monitoring of the application’s concurrency usage is crucial for identifying potential bottlenecks and proactively managing resource allocation. Monitoring tools should track metrics such as the number of concurrent function executions, the function execution times, and the number of throttled requests. Alerts should be configured to notify developers when the concurrency limits are approaching, allowing them to take corrective action before the application is impacted.

For example, consider an e-commerce platform using AWS Lambda. During a flash sale, the expected traffic could surge dramatically. To handle this, the platform might request an increase in the account-level concurrency limit, reserve concurrency for critical functions (e.g., order processing), and implement rate limiting to protect against excessive requests. Monitoring dashboards would track the number of concurrent executions, identify any throttling, and alert the operations team if limits are being approached.

This proactive approach ensures the application remains responsive and handles the increased load without performance degradation.
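The rate limiting mentioned above is commonly implemented as a token bucket. The sketch below is an in-process version with an injectable clock. The class name and parameters are illustrative, and it is not a feature of any particular API gateway.

```python
import time


class TokenBucket:
    """Allow up to `rate` requests per second with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.updated = clock()

    def allow(self) -> bool:
        """Consume one token if available; otherwise reject the request."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Because the state lives in memory, this limits only a single function instance. Limiting across all instances needs a shared store or throttling at the gateway in front of the functions.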

Scaling Serverless Functions for Concurrency

Serverless architectures, by their nature, necessitate robust scaling mechanisms to handle fluctuating workloads and maintain optimal performance. Efficiently managing concurrency is crucial for preventing bottlenecks and ensuring applications remain responsive, particularly during periods of high demand. This section explores the core principles of scaling serverless functions for concurrency, encompassing auto-scaling strategies, policy configuration, and burst traffic management.

The Role of Auto-Scaling in Serverless Function Concurrency

Auto-scaling is a pivotal feature in serverless environments, dynamically adjusting the number of function instances to meet the demands of incoming requests. This automated process leverages monitoring metrics to proactively scale resources up or down, ensuring optimal resource utilization and cost efficiency. The primary goal is to maintain performance by minimizing latency and preventing request throttling.

The benefits of auto-scaling in serverless concurrency include:

- Automatic Capacity Adjustment: Auto-scaling dynamically adjusts the number of function instances based on real-time demand, ensuring sufficient capacity to handle concurrent requests.

- Improved Resource Utilization: By scaling resources up or down as needed, auto-scaling minimizes idle resources, optimizing cost efficiency.

- Enhanced Application Performance: Auto-scaling prevents throttling and reduces latency by ensuring adequate resources are available to process requests promptly.

- Simplified Management: Auto-scaling eliminates the need for manual capacity provisioning, simplifying operational tasks and reducing the potential for human error.

Designing Auto-Scaling Policies Based on Metrics

Configuring effective auto-scaling policies requires careful consideration of relevant metrics. The choice of metrics directly impacts the responsiveness and efficiency of the scaling mechanism. The process involves selecting appropriate metrics, defining scaling thresholds, and specifying the scaling actions to be taken.

Here’s a procedure for configuring auto-scaling policies based on concurrent executions:

- Metric Selection: The most crucial metric for scaling based on concurrency is “ConcurrentExecutions” (or a similar metric provided by the serverless platform). This metric represents the number of function instances actively processing requests at a given time. Other relevant metrics include:
  - Invocation Count: The number of times a function is invoked.
  - Throttled Invocations: The number of requests that were throttled due to concurrency limits.
  - Error Count: The number of function invocations that resulted in errors.
  - Latency: The time taken for a function to execute.

- Threshold Definition: Establish thresholds for the selected metrics to trigger scaling actions. For example:
  - Scale-out threshold: When ConcurrentExecutions exceeds a certain value (e.g., 80% of the concurrency limit), scale out (increase the number of function instances).
  - Scale-in threshold: When ConcurrentExecutions falls below a certain value (e.g., 20% of the concurrency limit) for a sustained period, scale in (decrease the number of function instances).

- Scaling Actions: Define the actions to be taken when a threshold is breached. This includes:
  - Scale-out action: Increase the provisioned concurrency or the maximum number of instances allowed.
  - Scale-in action: Decrease the provisioned concurrency or the number of active instances.

- Cooldown Period: Implement a cooldown period after a scaling action to prevent rapid oscillations. This period allows the newly provisioned instances to warm up or the removed instances to gracefully terminate before further scaling decisions are made.

- Testing and Monitoring: Thoroughly test the auto-scaling policy under various load conditions and continuously monitor its performance. Refine the thresholds and actions based on observed behavior.

For instance, consider an e-commerce application. During a flash sale, the number of concurrent function executions may surge dramatically. An auto-scaling policy configured to increase the concurrency limit when concurrent executions exceed 80% of the current limit, and to scale back down when usage drops below 20% for a sustained period, can ensure that the application remains responsive even during peak traffic.
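The threshold and cooldown logic in this procedure can be expressed as a small decision function. The sketch below uses the 80% and 20% thresholds from the example and a hypothetical 300-second cooldown. It returns only a decision string and leaves the actual scaling call to the platform's own tooling.

```python
def scaling_decision(concurrent, limit, seconds_since_last_action,
                     scale_out_at=0.8, scale_in_at=0.2, cooldown_seconds=300):
    """Return 'scale_out', 'scale_in', or 'hold' for the current utilization."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    if seconds_since_last_action < cooldown_seconds:
        return "hold"  # still cooling down from the previous action
    utilization = concurrent / limit
    if utilization > scale_out_at:
        return "scale_out"
    if utilization < scale_in_at:
        return "scale_in"
    return "hold"


print(scaling_decision(concurrent=850, limit=1000, seconds_since_last_action=600))
```

To honor the "sustained period" condition for scaling in, pass a value for `concurrent` that is averaged over several minutes rather than a single reading.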

Implementing Scaling Strategies for Burst Traffic Scenarios

Burst traffic scenarios, characterized by sudden and significant increases in request volume, pose a unique challenge to serverless concurrency management. Effective scaling strategies must be implemented to handle these surges without causing performance degradation or request failures.

Here are several scaling strategies for burst traffic scenarios:

- Provisioned Concurrency: Proactively provision a specific number of function instances to handle anticipated peak loads. This strategy minimizes cold starts and ensures that capacity is readily available.

- Asynchronous Invocation: Utilize asynchronous invocation patterns (e.g., message queues) to decouple request processing from the initial invocation. This allows the function to handle a large volume of requests without being directly impacted by the initial burst.

- Rate Limiting: Implement rate limiting mechanisms to control the number of requests processed within a given timeframe. This helps prevent the function from being overwhelmed and allows for graceful degradation.

- Circuit Breakers: Employ circuit breakers to automatically stop requests from reaching a function if it’s experiencing errors or high latency. This protects the function from being overloaded and prevents cascading failures.

- Gradual Scaling: Configure the auto-scaling policy to scale out gradually in response to increasing traffic. This prevents sudden spikes in resource consumption and provides time for the system to adapt.

- Event-Driven Architectures: Utilize event-driven architectures to handle burst traffic by decoupling components. For example, an event queue can buffer incoming requests and distribute them to function instances at a sustainable rate.

An example of burst traffic handling involves a social media platform. During a viral event, a specific function responsible for updating user profiles might experience a sudden increase in invocations. The platform could employ a combination of strategies: provisioned concurrency to ensure a baseline capacity, rate limiting to protect the function from being overwhelmed, and asynchronous processing using a message queue to handle the influx of requests.

These measures work together to ensure a smooth user experience even during periods of extreme traffic.
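Of the strategies above, the circuit breaker is the least obvious to implement, so a minimal sketch follows. It opens after a run of consecutive failures, rejects calls while open, and allows a single trial call once the reset window has passed. The class names, thresholds, and injectable clock are assumptions for illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when calls are rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_seconds=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_seconds = reset_seconds
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_seconds:
                raise CircuitOpenError("circuit open; request rejected")
            # Reset window elapsed: let one trial call through (half-open).
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        self.failures = 0  # success closes the circuit fully
        return result
```

Like the other in-memory patterns, this state is local to one function instance, so each instance discovers a failing dependency on its own unless the state is shared externally.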

Monitoring Concurrency Metrics

Monitoring concurrency metrics is crucial for ensuring the stability, performance, and cost-effectiveness of serverless applications. Proactive monitoring allows for early detection of potential issues, such as throttling or resource exhaustion, enabling timely intervention and optimization. This proactive approach minimizes the impact of concurrency bottlenecks on application performance and user experience.

Key Metrics to Monitor for Serverless Concurrency

Identifying the right metrics is paramount for effective monitoring of serverless concurrency. These metrics provide insights into the behavior of serverless functions under load and help in diagnosing potential problems.

- Concurrent Executions: This metric represents the number of function instances actively processing requests at any given time. Monitoring concurrent executions provides a direct measure of the load on the serverless application. A sustained high number of concurrent executions might indicate that the application is experiencing high traffic or that functions are taking longer to complete. Tracking this metric helps to determine if the function is reaching its concurrency limits.

For example, if a function’s configured concurrency limit is 100 and the concurrent executions consistently approach this number, it suggests that the limit should be raised or the function’s duration reduced.

- Throttled Requests: Throttling occurs when the serverless platform limits the rate at which requests are processed, usually due to concurrency limits being reached or other resource constraints. This metric indicates the number of requests that were rejected or delayed. High numbers of throttled requests directly impact user experience, as they result in increased latency and potential errors. Monitoring throttled requests is critical for identifying concurrency bottlenecks and ensuring that the application can handle the expected load.

A significant increase in throttled requests, particularly during peak hours, warrants immediate investigation and scaling adjustments.

- Errors: Monitoring errors is essential for understanding the health and stability of serverless functions. Error metrics provide insight into the frequency and type of failures occurring within the application. Common error types to monitor include invocation errors, timeout errors, and internal server errors. A sudden spike in errors can indicate problems related to concurrency, such as resource exhaustion or dependencies failing under load.

By analyzing error patterns, developers can identify the root causes of failures and implement appropriate fixes, such as optimizing function code or increasing resource allocation.

- Function Duration: The duration of function executions is a critical performance indicator. Longer function durations can contribute to concurrency issues, as functions consume resources for extended periods. Monitoring function duration allows for the identification of performance bottlenecks within the function code. Tracking this metric can highlight functions that are taking longer than expected to complete, prompting optimization efforts. Monitoring the average and 99th percentile durations provides a comprehensive view of performance, revealing both typical execution times and outliers that might indicate concurrency issues.

- Invocation Count: This metric tracks the number of times a function is invoked. Monitoring invocation count provides insights into the traffic patterns and load on the application. By correlating invocation count with other metrics, such as concurrent executions and throttled requests, developers can gain a better understanding of how the application responds to varying levels of traffic. Monitoring invocation counts helps to identify trends, such as increasing or decreasing traffic over time, and can inform capacity planning and scaling decisions.

- Cold Starts: A cold start occurs when the platform must initialize a new execution environment before a function can run, typically on first invocation, after a period of inactivity, or when scaling out to serve additional concurrent requests. Excessive cold start times can negatively impact performance and user experience, especially under high concurrency, because each new concurrent instance pays the initialization cost. Monitoring cold start frequency and duration helps identify functions with slow initialization and guides optimization of function code, configuration, or platform-specific mitigation techniques.
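The metrics above are usually read as ratios rather than raw counts. The following is a minimal sketch of how they might be derived from one monitoring window, assuming the raw counters have already been exported from the platform. The `WindowStats` fields and the `summarize` helper are hypothetical names used for illustration, not part of any provider's API.

```python
import math
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Raw counters for one monitoring window (hypothetical field names)."""
    invocations: int
    throttles: int
    errors: int
    peak_concurrency: int
    concurrency_limit: int
    durations_ms: list[float]


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile; returns 0.0 for an empty list."""
    if not values:
        return 0.0
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(stats: WindowStats) -> dict[str, float]:
    """Derive utilization, throttle rate, error rate, and duration figures."""
    attempted = stats.invocations + stats.throttles
    durations = stats.durations_ms
    return {
        "concurrency_utilization": stats.peak_concurrency / stats.concurrency_limit,
        "throttle_rate": stats.throttles / attempted if attempted else 0.0,
        "error_rate": stats.errors / stats.invocations if stats.invocations else 0.0,
        "avg_duration_ms": sum(durations) / len(durations) if durations else 0.0,
        "p99_duration_ms": percentile(durations, 99),
    }
```

Comparing the average with the 99th percentile makes outliers visible: a handful of slow invocations barely moves the average but dominates the p99 figure.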

Creating a Dashboard Visualization for Displaying Concurrency Metrics in Real-Time

Creating a real-time dashboard is essential for visualizing concurrency metrics and gaining actionable insights into the performance of serverless applications. A well-designed dashboard provides a centralized view of key metrics, enabling developers and operations teams to quickly identify and address potential issues.

- Dashboard Components: A comprehensive dashboard should include several key components to effectively monitor concurrency metrics. These components typically include:

  - Line Charts: Used to visualize trends over time for metrics such as concurrent executions, throttled requests, invocation count, and function duration. These charts help identify patterns and anomalies.

  - Gauge Charts: Used to display the current value of key metrics, such as concurrent executions and the percentage of throttled requests. Gauges provide a quick visual indication of the application’s health.

  - Tables: Used to display detailed information, such as error logs and function execution details. Tables allow for deeper investigation of specific issues.

  - Alerting Indicators: Visual cues, such as color-coded indicators, to highlight metrics that exceed predefined thresholds. These indicators immediately draw attention to potential problems.

- Data Sources and Integration: The dashboard must integrate with the serverless platform’s monitoring tools, such as Amazon CloudWatch (AWS), Azure Monitor (Azure), or Cloud Monitoring, formerly Stackdriver (Google Cloud). Data should be ingested from these sources and processed in near real time to populate the dashboard components. This typically involves using APIs provided by the serverless platform or third-party monitoring services.

- Real-time Updates: The dashboard should refresh automatically at regular intervals (e.g., every few seconds) to provide up-to-date information. This real-time view enables rapid identification of issues and proactive response. The update frequency should be balanced to ensure data accuracy without excessive resource consumption.

- Example Visualization: A dashboard could display a line chart showing the trend of concurrent executions over the past hour, a gauge chart displaying the current number of throttled requests, and a table listing the top 10 functions with the highest error rates.

Sharing Best Practices for Setting Up Alerts Based on Concurrency Thresholds

Setting up alerts based on concurrency thresholds is a critical component of proactive monitoring. Alerts notify operations teams of potential issues, allowing them to respond quickly and prevent service disruptions.

- Define Thresholds: Establish clear thresholds for each metric to trigger alerts. These thresholds should be based on the application’s performance requirements, historical data, and the platform’s concurrency limits. For example, an alert might be triggered if the number of concurrent executions exceeds 80% of the configured concurrency limit.

- Alerting Rules: Configure alerting rules within the monitoring platform to send notifications when thresholds are breached. These rules should be specific and well-defined to minimize false positives. Consider using a combination of static and dynamic thresholds. Static thresholds are fixed values, while dynamic thresholds adjust based on historical data.

- Notification Channels: Configure notification channels to ensure alerts reach the appropriate teams promptly. Common channels include email, SMS, Slack, and PagerDuty. Ensure the chosen channels are monitored regularly and that the right personnel are notified.

- Severity Levels: Assign severity levels to alerts based on the potential impact of the issue. This helps prioritize responses. For example, a high severity alert might indicate a critical problem requiring immediate attention, while a low severity alert might indicate a minor issue that can be addressed later.

- Examples of Alerting Scenarios:

  - High Concurrent Executions: Alert when the number of concurrent executions exceeds 90% of the function’s concurrency limit for more than 5 minutes.

  - High Throttled Requests: Alert when the number of throttled requests exceeds a predefined threshold (e.g., 100 per minute) for more than 1 minute.

  - High Error Rate: Alert when the error rate (errors per invocation) exceeds a specific percentage (e.g., 5%) for more than 1 minute.

  - Long Function Duration: Alert when the average function duration exceeds a predefined threshold (e.g., 2 seconds) for more than 1 minute.

- Testing and Refinement: Regularly test and refine the alerting configuration to ensure it is effective and minimizes false positives. Review alert logs and adjust thresholds as needed based on the application’s behavior and performance. Simulate different load scenarios to test the alerting system and ensure that it responds correctly.
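Most of the alerting scenarios above share one shape: a threshold that must be breached for a sustained period before the alert fires. A minimal sketch of that evaluation logic follows, assuming one datapoint per minute. `AlertRule` and `should_fire` are hypothetical names; managed monitoring services normally provide this evaluation as configuration rather than code.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    """A static-threshold rule (illustrative, not tied to any platform)."""
    name: str
    threshold: float
    sustained_points: int  # consecutive breaching datapoints required
    severity: str


def should_fire(rule: AlertRule, datapoints: list[float]) -> bool:
    """Fire only if the most recent datapoints all exceed the threshold."""
    if len(datapoints) < rule.sustained_points:
        return False  # not enough history to judge a sustained breach
    recent = datapoints[-rule.sustained_points:]
    return all(value > rule.threshold for value in recent)


# Example rule: more than 90 of 100 concurrent executions for 5 minutes.
high_concurrency = AlertRule(
    name="high-concurrent-executions",
    threshold=90.0,
    sustained_points=5,
    severity="high",
)
```

Requiring several consecutive breaches is one simple way to reduce false positives from short spikes, at the cost of slower detection.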

Serverless Concurrency Management Techniques

Optimizing serverless concurrency requires a multifaceted approach, encompassing code-level improvements, strategic task management, and careful resource allocation. This section details specific techniques to enhance the performance and scalability of serverless functions, ensuring efficient handling of concurrent requests and minimizing potential bottlenecks. The goal is to maximize throughput while adhering to platform limitations and cost constraints.

Optimizing Serverless Function Code for Concurrency

Improving function code directly impacts concurrency by reducing execution time and resource consumption. Efficient code allows more instances of a function to run concurrently within platform limits. Several code optimization strategies are particularly effective in serverless environments.

- Minimize Dependencies: Reducing the number and size of function dependencies lowers cold start times, which is crucial for concurrency. Every dependency adds to the package size, and larger packages take longer to load. Regularly review dependencies and remove any unnecessary ones.

- Optimize Code Execution Paths: Analyze code to identify and eliminate unnecessary operations or computations. Use profiling tools to pinpoint performance bottlenecks within the function code. Optimizing frequently executed code paths can yield significant performance gains.

- Efficient Data Serialization and Deserialization: Choose a serialization format and library suited to the payload (e.g., JSON for interoperability, Protocol Buffers for compact binary encoding). Serialization and deserialization can be resource-intensive operations, particularly for large or deeply nested data structures. Optimizing these processes directly impacts function execution time.

- Caching: Implement caching mechanisms to store frequently accessed data, reducing the need to repeatedly fetch data from external sources. This can significantly reduce execution time, especially for functions that interact with databases or APIs.

- Connection Pooling: For functions interacting with databases, use connection pooling to reuse database connections, avoiding the overhead of establishing new connections for each function invocation.

Examples of Code Optimizations for Reduced Execution Time

Specific code optimizations can dramatically reduce function execution time. These improvements directly translate into increased concurrency, as each function instance completes its tasks faster, freeing up resources for subsequent invocations.

- Database Query Optimization: Rewriting database queries to improve their efficiency.

Example: Using indexes on database columns used in `WHERE` clauses can significantly speed up query execution times. A query that initially took 5 seconds to execute could be optimized to run in 0.5 seconds.

- Code Profiling and Optimization: Employing code profiling tools to identify and address performance bottlenecks.

Example: A function might have a section of code that loops through a large dataset inefficiently. Profiling could reveal this, allowing developers to refactor the code to improve its performance. Replacing a nested loop with a more efficient algorithm (e.g., using a hash map) could reduce the execution time by 50% or more.

- Lazy Loading of Resources: Loading resources only when they are needed.

Example: Instead of loading all modules at the beginning of the function execution, load only the necessary modules when their functions are invoked. This minimizes the initial function startup time, allowing for quicker processing of concurrent requests.

- Asynchronous Operations: Performing non-blocking operations using asynchronous programming techniques.

Example: Using asynchronous calls for API requests or database operations lets a function issue several independent requests at once and wait for them together, rather than waiting for each in turn. The function spends less time idle on I/O, which reduces its overall execution time and frees its concurrency slot sooner.
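As a minimal sketch of the asynchronous approach, the following uses Python's `asyncio.gather` to overlap three independent I/O calls. `call_dependency` is a hypothetical stand-in that simulates latency with `asyncio.sleep`; a real function would use an async HTTP or database client instead.

```python
import asyncio


async def call_dependency(name: str, latency: float) -> str:
    """Hypothetical stand-in for an API request or database query."""
    await asyncio.sleep(latency)
    return f"{name}: ok"


async def handle_request() -> list[str]:
    # The three calls overlap, so the total wait is close to the slowest
    # call rather than the sum of all three.
    return await asyncio.gather(
        call_dependency("inventory", 0.05),
        call_dependency("pricing", 0.05),
        call_dependency("reviews", 0.05),
    )


def handler(event=None, context=None) -> list[str]:
    """Synchronous entry point that drives the async work to completion."""
    return asyncio.run(handle_request())
```

`asyncio.gather` returns results in the order the awaitables were passed, regardless of which finishes first, which keeps the calling code simple.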

Strategies for Managing Long-Running Tasks in a Concurrent Environment

Long-running tasks pose a challenge in serverless environments due to platform time limits. Effective management requires strategies that prevent functions from exceeding execution time limits and maximize concurrency.

- Breaking Down Tasks: Decomposing long-running tasks into smaller, independent units that can be executed by separate function invocations. This allows for parallel processing and reduces the execution time of each individual function.

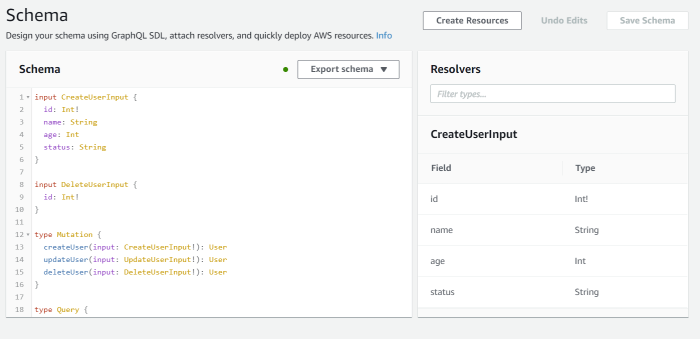

- Using Orchestration Services: Employing orchestration services (e.g., AWS Step Functions, Azure Durable Functions, Google Cloud Workflows) to manage the execution and coordination of multiple function invocations. These services provide features for state management, error handling, and task scheduling.

- Asynchronous Task Processing: Offloading long-running tasks to asynchronous queues (e.g., Amazon SQS, Azure Service Bus, Google Cloud Pub/Sub). This allows the function to return immediately after enqueuing the task, freeing up resources to handle other requests.

- Implement Checkpointing: For tasks that can be interrupted, implement a checkpointing mechanism to save the state of the task periodically. This allows the task to resume from the last checkpoint if it is interrupted due to a timeout or other issues.

- Resource Allocation: Carefully allocate resources to functions handling long-running tasks. This includes considering the memory and CPU requirements of the task and adjusting the function configuration accordingly.
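The task-splitting and checkpointing strategies above can be combined: process items one at a time, record progress after each, and stop before the platform's time limit so a later invocation can resume. The sketch below assumes the caller supplies a `time_left` callable reporting the remaining seconds. The in-memory `checkpoint` dictionary is a placeholder for durable storage such as a database or object store.

```python
from typing import Callable, Sequence


def process_with_checkpoint(
    items: Sequence,
    checkpoint: dict,
    process: Callable[[object], None],
    time_left: Callable[[], float],
    safety_margin: float = 1.0,
) -> bool:
    """Process items from the last checkpoint; return True when all are done.

    Stops early when fewer than `safety_margin` seconds remain, leaving
    `checkpoint["next_index"]` pointing at the first unprocessed item.
    """
    index = checkpoint.get("next_index", 0)
    while index < len(items):
        if time_left() < safety_margin:
            break  # leave the rest for the next invocation
        process(items[index])
        index += 1
        # In a real system this write would go to durable storage.
        checkpoint["next_index"] = index
    return index >= len(items)
```

If an invocation fails between `process` and the checkpoint write, the item is processed again on resume, so the `process` step should be idempotent.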

Using Queues and Event-Driven Architectures for Concurrency Control

Serverless architectures are inherently concurrent. However, managing this concurrency effectively is crucial for performance, cost optimization, and overall application stability. Queues and event-driven architectures provide powerful mechanisms for decoupling tasks, smoothing out load spikes, and controlling the rate at which functions are invoked, thereby directly addressing concurrency challenges. This approach allows for asynchronous processing, preventing bottlenecks and ensuring that serverless applications remain responsive even under heavy load.

Decoupling Serverless Functions with Queues

Queues, such as Amazon SQS, Google Cloud Pub/Sub, or Azure Service Bus, act as intermediaries between different parts of a serverless application. They buffer messages, allowing functions to process them independently and at their own pace. This decoupling is fundamental to managing concurrency. Queues provide several key benefits:

- Asynchronous Processing: Functions can enqueue tasks and immediately return a response to the client, without waiting for the task to complete. This prevents blocking operations and improves user experience.

- Rate Limiting and Throttling: Queues allow for controlling the rate at which messages are consumed by functions. This prevents overwhelming downstream services or exceeding concurrency limits.

- Load Balancing: When multiple function instances are available, the queue distributes messages across them, ensuring efficient resource utilization and parallel processing.

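- Fault Tolerance: Queues can store messages durably, ensuring that tasks are not lost if a function fails. A message that is not processed successfully remains in the queue and is retried later; after repeated failures it can be moved to a dead-letter queue for inspection.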

- Scalability: Queues scale automatically, allowing applications to handle increasing workloads without manual intervention.

For instance, consider an e-commerce application. When a user places an order, instead of synchronously processing payment, inventory updates, and shipping notifications within the same function, the order processing function can enqueue these tasks to separate queues. Each queue then triggers a dedicated function to handle the corresponding operation. This decouples the order placement process from the potentially slower operations, improving responsiveness.

Event-Driven Architectures for Concurrency Control

Event-driven architectures (EDAs) utilize events as the primary means of communication between different components. These architectures are inherently asynchronous and well-suited for managing concurrency in serverless applications. Events trigger functions, which then process the events and potentially emit new events, creating a chain of actions. Key characteristics of event-driven architectures include:

- Event Producers and Consumers: Components that generate events are event producers, and components that respond to events are event consumers.

- Event Buses: An event bus, such as Amazon EventBridge, Google Cloud Pub/Sub, or Azure Event Grid, acts as a central hub for event routing and management.

- Asynchronous Communication: Events are processed asynchronously, allowing producers and consumers to operate independently.

- Scalability and Flexibility: EDAs are highly scalable and flexible, allowing for easy addition or modification of components.

An example of an EDA in action is a media processing pipeline. When a new video is uploaded, an event is published to an event bus. This event triggers a serverless function that transcodes the video into multiple formats. Upon completion of the transcoding, another event is published, triggering functions that generate thumbnails, extract metadata, and update a database. This event-driven approach enables parallel processing of different video formats and tasks, improving the efficiency of the overall process.
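The media pipeline can be sketched with a small in-memory event bus. This is only an illustration of the producer, bus, and consumer roles. The `EventBus` class, the event names, and the handlers are all hypothetical, and a managed bus would deliver events to separately scaled functions rather than call them in one process.

```python
from collections import defaultdict, deque
from typing import Callable


class EventBus:
    """Minimal in-memory stand-in for a managed event bus."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable]] = defaultdict(list)
        self._pending: deque = deque()

    def subscribe(self, event_type: str, handler: Callable) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # Publishing only enqueues; producers never call consumers directly.
        self._pending.append((event_type, payload))

    def run(self) -> None:
        # Deliver events until none remain; handlers may publish follow-ups.
        while self._pending:
            event_type, payload = self._pending.popleft()
            for handler in self._subscribers[event_type]:
                handler(self, payload)


completed: list[str] = []


def transcode(bus: EventBus, payload: dict) -> None:
    completed.append(f"transcoded {payload['video']}")
    bus.publish("video.transcoded", payload)  # emit the follow-up event


def make_thumbnails(bus: EventBus, payload: dict) -> None:
    completed.append(f"thumbnails for {payload['video']}")


def extract_metadata(bus: EventBus, payload: dict) -> None:
    completed.append(f"metadata for {payload['video']}")


bus = EventBus()
bus.subscribe("video.uploaded", transcode)
bus.subscribe("video.transcoded", make_thumbnails)
bus.subscribe("video.transcoded", extract_metadata)
```

Calling `bus.publish("video.uploaded", {"video": "clip.mp4"})` followed by `bus.run()` drives the whole chain. Adding a new consumer of `video.transcoded` requires no change to `transcode`, which is the flexibility the list above describes.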

Handling Asynchronous Tasks and Managing Concurrency with Queues

Queues are instrumental in implementing asynchronous task processing within event-driven architectures. They act as a buffer, decoupling the event producer from the event consumer and enabling concurrency control. The typical workflow involves:

- Event Generation: An event is generated, for example, a user uploading a file.

- Message Enqueueing: A function triggered by the event enqueues a message containing information about the task (e.g., file location, processing instructions) to a queue.

- Message Consumption: One or more functions are subscribed to the queue. These functions consume messages from the queue.

- Task Execution: Each function processes a message, performing the necessary operation (e.g., image resizing, data transformation).

- Concurrency Control: The queue’s configuration, such as the number of concurrent consumers, controls the degree of parallelism.

For example, consider a photo-sharing platform. When a user uploads a photo, a function can enqueue a message to a queue. This message might contain the photo’s URL and desired thumbnail sizes. A separate function, subscribed to this queue, retrieves the message, downloads the photo, generates the thumbnails, and stores them. The queue controls the number of concurrent thumbnail generation tasks, preventing the platform from being overwhelmed by a sudden influx of uploads.

This approach ensures that photo uploads are processed efficiently, and the platform remains responsive.
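The concurrency-control step of this workflow can be sketched locally with a standard-library queue and a fixed number of consumers. On a real platform the equivalent knob is a setting on the queue trigger or the function. Here `drain_queue` and its `max_concurrency` parameter are hypothetical names, and threads stand in for function instances.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def drain_queue(tasks: queue.Queue, worker: Callable, max_concurrency: int) -> list:
    """Process every queued message with at most `max_concurrency` workers."""
    results: list = []
    lock = threading.Lock()

    def consume() -> None:
        while True:
            try:
                message = tasks.get_nowait()
            except queue.Empty:
                return  # queue drained; this consumer exits
            outcome = worker(message)
            with lock:
                results.append(outcome)

    # The pool size is the cap on how many messages are in flight at once.
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        futures = [pool.submit(consume) for _ in range(max_concurrency)]
        for future in futures:
            future.result()  # surface any worker exception
    return results
```

However many uploads arrive, no more than `max_concurrency` thumbnail jobs run at once; the rest wait in the queue instead of overwhelming downstream services.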

Error Handling and Retry Strategies in Concurrent Serverless Applications

Robust error handling is paramount in concurrent serverless applications. The ephemeral nature of serverless functions, coupled with the distributed execution environment, necessitates proactive strategies to manage failures gracefully. Without proper error handling, individual function failures can cascade, leading to application-wide instability, data inconsistencies, and ultimately, a poor user experience. Concurrency amplifies these risks, as a single failure can impact multiple concurrent function invocations.

Therefore, a well-defined error handling strategy is essential for ensuring the reliability, resilience, and maintainability of serverless applications operating under concurrent loads.

Importance of Robust Error Handling

The significance of comprehensive error handling in concurrent serverless applications stems from several key factors:

- Increased Failure Domains: Serverless applications are inherently distributed, increasing the potential points of failure. Network issues, external service unavailability, code bugs, and resource limitations can all trigger errors. Concurrency exacerbates these risks, as multiple function instances are running simultaneously, increasing the likelihood of encountering these issues.

- Data Consistency: In concurrent operations, data consistency is critical. If one function fails during a write operation, it can leave the data in an inconsistent state. Without proper error handling, subsequent functions might operate on corrupted or incomplete data, leading to inaccurate results.

- Cost Optimization: Uncontrolled failures can lead to unnecessary retries and resource consumption, increasing costs. Effective error handling can prevent cascading failures and reduce the number of retries, thus optimizing resource utilization and minimizing costs.

- Improved Observability: Comprehensive error handling, coupled with robust logging and monitoring, provides valuable insights into application behavior. Detailed error logs help identify the root causes of failures, allowing developers to proactively address issues and improve the overall stability of the application.

- Enhanced User Experience: Errors that are not handled properly can lead to service disruptions and a negative user experience. By implementing effective error handling strategies, developers can gracefully handle failures, provide informative error messages, and minimize the impact on users.

Design of a Retry Strategy for Transient Errors

A well-designed retry strategy is crucial for mitigating transient errors in serverless applications. Transient errors are temporary issues, such as network glitches or temporary service unavailability, that often resolve themselves with a short delay.

A typical retry strategy should incorporate the following elements:

- Error Identification: Implement a mechanism to identify transient errors. This can involve examining error codes, exception types, or error messages to determine if a retry is appropriate. For example, HTTP status codes like 503 (Service Unavailable) or 429 (Too Many Requests) often indicate transient issues.

- Retry Logic: Define the retry behavior, including the maximum number of retries and the delay between retries. Exponential backoff is a common and effective strategy, where the delay between retries increases exponentially. This allows the system to recover from transient issues while avoiding overwhelming the dependent services.

- Jitter: Introduce random variations (jitter) into the retry delay to prevent multiple function instances from retrying simultaneously. This helps to avoid overwhelming the dependent service and increases the chances of success. For example, a retry delay of 2^n seconds can be combined with a random offset of +/- 0.5 seconds.

- Circuit Breaker: Implement a circuit breaker pattern to prevent repeated retries if the dependent service is consistently unavailable. The circuit breaker monitors the number of failures and, after a threshold is reached, “opens” the circuit, preventing further requests to the failing service for a specified period. This protects the application from cascading failures and allows the service to recover.

- Retry Context: Ensure the retry logic is context-aware. For instance, if a function is interacting with a database, it should retry database connection errors, but not retry errors caused by invalid data.

Example of a retry strategy with exponential backoff and jitter (Python):

    import random
    import time


    def retry_with_backoff(func, max_retries=3, base_delay=1, jitter_range=0.5):
        """Retry a function with exponential backoff and jitter.

        Args:
            func: The function to retry.
            max_retries: The maximum number of retries.
            base_delay: The initial delay in seconds.
            jitter_range: The range for jitter (e.g., 0.5 for +/- 0.5 seconds).

        Returns:
            The result of the function if successful; otherwise the last
            exception is re-raised.
        """
        for attempt in range(max_retries + 1):
            try:
                return func()
            except Exception:
                if attempt == max_retries:
                    raise  # Re-raise the exception after the maximum retries
                # Exponential backoff: base_delay * 2^attempt, plus random jitter.
                delay = base_delay * (2 ** attempt) + random.uniform(-jitter_range, jitter_range)
                delay = max(0.0, delay)  # Jitter must never produce a negative sleep
                print(f"Attempt {attempt + 1} failed. Retrying in {delay:.2f} seconds...")
                time.sleep(delay)
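The circuit breaker element of the retry strategy can be sketched in a similar way. This is a minimal version under stated assumptions: the class and parameter names are hypothetical, the breaker state lives in one process, and the clock is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Callable


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_timeout: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func: Callable):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; skipping call")
            # Timeout elapsed: let one trial call through (half-open state).
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Because each function instance keeps its own breaker state in this sketch, a fleet of concurrent instances would each need to observe failures independently. Sharing the state through an external store is one option, with its own latency cost.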

Best Practices for Logging and Debugging Concurrency-Related Errors

Effective logging and debugging are essential for identifying and resolving concurrency-related errors in serverless applications.

- Detailed Logging: Implement comprehensive logging throughout the application, including function invocations, input parameters, output results, and any errors that occur. Include timestamps, function names, request IDs (if available), and relevant context information to facilitate debugging.

- Structured Logging: Use structured logging formats (e.g., JSON) to make logs easier to parse and analyze. This allows you to query and filter logs effectively. Include key-value pairs for important data points, such as error codes, timestamps, and function names.

- Correlation IDs: Use correlation IDs (e.g., request IDs) to track requests across multiple function invocations and services. This helps to trace the flow of execution and identify the root cause of errors that span multiple components.

- Error Aggregation and Alerting: Aggregate error logs and set up alerts to be notified of critical errors. This allows you to proactively address issues and minimize their impact. Use monitoring tools to track error rates, identify trends, and detect anomalies.

- Debugging Tools: Utilize debugging tools provided by your serverless platform (e.g., AWS CloudWatch Logs Insights, Azure Application Insights, Google Cloud Logging) to analyze logs, identify error patterns, and pinpoint the root causes of concurrency-related issues.

- Tracing: Implement distributed tracing to track requests across multiple services and function invocations. This provides a visual representation of the request flow and helps to identify performance bottlenecks and error propagation. Tools like AWS X-Ray, Jaeger, and OpenTelemetry can be used for distributed tracing.

- Simulate Concurrency: Test the application under concurrent load to identify potential issues. Use load testing tools to simulate high traffic and trigger concurrency-related errors. This helps to validate the effectiveness of error handling and retry strategies.
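Structured logging and correlation IDs from the list above can be combined in a few lines. The sketch below emits one JSON object per log line using only the standard library. The field names, the `orders` logger name, and the convention of passing `correlation_id` in the event are assumptions for illustration.

```python
import json
import logging
import time
import uuid

logger = logging.getLogger("orders")  # hypothetical logger name


def log_event(level: int, message: str, correlation_id: str, **fields) -> str:
    """Emit one JSON log line and return it (returned to ease testing)."""
    record = {
        "timestamp": time.time(),
        "level": logging.getLevelName(level),
        "message": message,
        "correlation_id": correlation_id,
        **fields,
    }
    line = json.dumps(record, default=str)
    logger.log(level, line)
    return line


def handler(event, context=None):
    # Reuse the caller's correlation ID if present so that logs from every
    # function in the chain can be joined; otherwise start a new one.
    correlation_id = event.get("correlation_id") or str(uuid.uuid4())
    log_event(logging.INFO, "invocation started", correlation_id,
              function="process_order")
    return {"correlation_id": correlation_id}
```

Passing the returned `correlation_id` along in any downstream message or request lets a log query on that single value reconstruct the full path of a request across concurrent invocations.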

Security Considerations for Serverless Concurrency

High concurrency in serverless applications, while offering significant benefits in terms of scalability and responsiveness, introduces a complex set of security challenges. The dynamic nature of serverless environments, coupled with the rapid scaling of function instances, expands the attack surface and necessitates robust security measures. Failing to address these vulnerabilities can lead to denial-of-service attacks, data breaches, and unauthorized access to sensitive resources.

A comprehensive security strategy must consider both the inherent characteristics of serverless platforms and the potential for malicious exploitation of concurrent function executions.

Security Risks Associated with High Concurrency

High concurrency exposes serverless applications to a range of security risks, demanding careful consideration and mitigation strategies. Understanding these vulnerabilities is crucial for designing and implementing effective security controls.

- Denial-of-Service (DoS) Attacks: Malicious actors can exploit concurrency to overwhelm serverless functions with requests, consuming all available resources and rendering the application unavailable. This can be achieved through various methods, including sending a large number of concurrent requests, triggering resource-intensive function executions, or exploiting vulnerabilities in function code.

- Resource Exhaustion: Excessive concurrency can lead to resource exhaustion, such as CPU, memory, and network bandwidth. If functions are not properly optimized or resource limits are not in place, attackers can exhaust these resources, causing performance degradation or complete service failure.

- Data Breaches: High concurrency can increase the risk of data breaches if access controls are not properly configured. For example, a compromised function instance could potentially access sensitive data stored in shared resources like databases or object storage. If functions are not properly isolated, a compromised instance could also access other functions.

- Code Injection and Execution: Concurrent function executions can create opportunities for code injection attacks. If input validation is inadequate, attackers can inject malicious code that is then executed within the function’s environment. This could allow them to gain control of the function and potentially compromise other resources.

- Unauthorized Access: Exploiting concurrency vulnerabilities can allow attackers to bypass access controls and gain unauthorized access to sensitive resources. For instance, if an authentication mechanism is not properly implemented, an attacker might be able to spoof requests and access data that they are not authorized to view.

- Side-Channel Attacks: Concurrency can also make serverless applications vulnerable to side-channel attacks. Attackers can exploit the shared resources and execution environment to infer information about the function’s execution, such as the cryptographic keys or other sensitive data.

Strategies for Securing Serverless Functions Against Malicious Concurrent Attacks

Securing serverless functions against malicious concurrent attacks requires a multi-layered approach, encompassing code hardening, access control, and proactive monitoring. These strategies aim to minimize the attack surface and protect against a variety of threats.

- Input Validation and Sanitization: Implement robust input validation and sanitization techniques to prevent code injection attacks. This involves validating all incoming data, ensuring it conforms to expected formats and lengths, and removing or escaping any potentially malicious characters.

- Authentication and Authorization: Enforce strong authentication and authorization mechanisms to control access to serverless functions and their resources. This includes using secure authentication protocols (e.g., OAuth, OpenID Connect), implementing role-based access control (RBAC), and regularly reviewing and updating access permissions.

- Code Isolation and Sandboxing: Isolate function executions to limit the impact of compromised instances. This can be achieved by using containerization technologies like Docker or dedicated sandboxing environments that restrict the resources and permissions available to each function.

- Resource Limits and Throttling: Implement resource limits and throttling mechanisms to prevent resource exhaustion attacks. This includes setting limits on CPU usage, memory allocation, network bandwidth, and concurrent function invocations.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify and address vulnerabilities in the application code, configuration, and infrastructure. This should include simulating concurrent attacks to assess the effectiveness of security controls.

- Use of Security Tools and Services: Leverage security tools and services provided by the serverless platform or third-party vendors. This includes tools for vulnerability scanning, intrusion detection, and security information and event management (SIEM).

- Implement Least Privilege Principle: Grant functions only the minimum necessary permissions to access resources. This minimizes the potential impact of a compromised function. Regularly review and update permissions as the application evolves.

- Secure Configuration Management: Implement secure configuration management practices to protect sensitive information such as API keys, database credentials, and other secrets. Utilize secure secret management services provided by the serverless platform or third-party vendors.

Implementing Rate Limiting and Access Control Mechanisms

Rate limiting and access control are critical for mitigating the impact of concurrent attacks and protecting serverless applications. These mechanisms restrict the rate at which requests are processed and control who can access the functions and their associated resources.

- Rate Limiting Techniques: Implement rate limiting to control the number of requests a function can process within a specific timeframe. This can prevent DoS attacks and ensure fair access to resources. Common rate limiting techniques include:

  - Fixed Window Rate Limiting: Allows a specific number of requests within a fixed time window.

  - Sliding Window Rate Limiting: Tracks requests over a sliding time window, providing more granular control.

  - Token Bucket Rate Limiting: Uses a token bucket to control the rate of request processing.

- API Gateway Integration: Utilize API gateways to enforce rate limits and access control policies. API gateways can be configured to throttle requests based on various criteria, such as IP address, API key, or user identity.

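- Function-Level Rate Limiting: Implement rate limiting directly within the serverless functions, providing more granular control over individual function invocations. This approach is particularly useful for functions that handle sensitive operations. Because each function instance keeps its own memory, a limit that must hold across concurrent instances needs its counters in a shared store rather than in local variables.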

- Access Control Lists (ACLs): Implement ACLs to restrict access to serverless functions based on the origin of the request, such as IP address or user identity.

- Role-Based Access Control (RBAC): Implement RBAC to define different roles with varying levels of access to resources. This ensures that users and functions only have the necessary permissions to perform their tasks.

- API Keys and Authentication: Require API keys or other authentication mechanisms to identify and authorize users. This allows you to track and control access to your functions.

- Regular Monitoring and Auditing: Regularly monitor rate limiting metrics and access control logs to detect and respond to potential security threats. Analyze the logs for unusual activity, such as a sudden spike in requests or unauthorized access attempts.
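The token bucket technique listed above can be sketched in a few lines. This is a minimal, single-process illustration and not a production limiter: the class name `TokenBucket` and its parameters are assumptions made for this example, and the state lives in memory, so in a real serverless deployment the counters would need to sit in a shared store to hold across concurrent instances.

```python
import time


class TokenBucket:
    """In-memory token bucket: refills at `rate` tokens/second up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = float(rate)          # tokens added per second
        self.capacity = float(capacity)  # maximum burst size
        self._clock = clock              # injectable so tests can control time
        self._tokens = float(capacity)   # start with a full bucket
        self._last = clock()

    def allow(self, cost=1.0):
        """Return True and spend `cost` tokens if enough are available."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill for the time that has passed, never above capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


# Usage: allow bursts of up to 10 requests, 5 requests/second sustained.
limiter = TokenBucket(rate=5, capacity=10)
if limiter.allow():
    print("request accepted")
else:
    print("request rejected (rate limit exceeded)")
```

A rejected request would typically be answered with an HTTP 429 status so that well-behaved clients can back off and retry.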

Tools and Technologies for Managing and Monitoring Serverless Concurrency

Effectively managing and monitoring serverless concurrency is crucial for maintaining application performance, cost optimization, and overall system stability. Several tools and technologies are available to provide visibility into function invocations, resource utilization, and potential bottlenecks. This section explores these tools and technologies, offering insights into their functionalities and how they contribute to efficient concurrency management.

Monitoring Tools for Serverless Concurrency

Monitoring tools are essential for gaining insights into the performance and behavior of serverless applications, particularly concerning concurrency. These tools provide real-time metrics, logs, and traces, enabling developers to identify and address concurrency-related issues proactively. The selection of the appropriate tool depends on factors such as the serverless platform used, the desired level of detail, and the specific monitoring needs.

The following table presents a comparison of various monitoring tools, highlighting their key features and pricing models. Note that pricing can vary depending on usage and specific configurations.

| Tool | Features | Pricing | Examples of Visualization |
|---|---|---|---|
| AWS CloudWatch | Real-time monitoring, logging, metrics, alarms, dashboards, Lambda Insights. | Pay-per-use based on data ingested, metrics stored, and alarms created. | Dashboards displaying function invocation counts, duration, error rates, and concurrent executions over time. Example: A line graph showing a spike in concurrent executions during peak hours, indicating potential scaling issues. |
| Google Cloud Monitoring (formerly Stackdriver) | Monitoring, logging, alerting, dashboards, custom metrics, trace integration. | Pay-per-use based on data volume, metric storage, and API usage. | Graphs showing function request latency, error rates, and resource utilization. Example: A heatmap visualization illustrating the distribution of function execution times across different regions. |
| Azure Monitor | Monitoring, logging, alerting, dashboards, Application Insights, Log Analytics. | Pay-per-use based on data ingested, log storage, and alert rules. | Dashboards depicting function invocation counts, duration, error rates, and resource utilization. Example: A bar chart showing the number of function invocations and the average execution time for each function version. |
| Datadog | Comprehensive monitoring, logging, APM, infrastructure monitoring, real-time dashboards, alerting, tracing. | Subscription-based pricing based on the number of hosts/functions monitored and data ingested. | Dashboards showing function performance metrics, resource utilization, and dependency mapping. Example: A service map visualizing the relationships between serverless functions and other services, highlighting performance bottlenecks. |

Examples of Visualizing Concurrency Metrics

Visualizing concurrency metrics is critical for understanding the behavior of serverless functions under load. The following examples illustrate how different tools can be used to create effective visualizations:

- CloudWatch Dashboards (AWS): CloudWatch allows users to create dashboards that display a variety of metrics related to Lambda functions. A common visualization is a line graph that plots the “ConcurrentExecutions” metric over time, showing how many function instances are processing events at the same moment. Another important visualization is the “Throttles” metric, which counts invocation requests that the platform rejected. A high value for “Throttles” indicates that the function is being throttled, often because it has exceeded its concurrency limits. The “Errors” metric can also be visualized to monitor the function’s error rate, which can increase under high concurrency.

- Google Cloud Monitoring Dashboards: Google Cloud Monitoring (formerly Stackdriver) offers similar capabilities for visualizing serverless function metrics. For instance, one can create a graph that displays the “function/execution_count” metric, representing the number of function invocations over a specific period. To approximate concurrency, one can graph the “function/active_instances” metric, which shows how many function instances are active at a given time. Alerting can be configured based on these metrics, for example, triggering an alert if the number of active instances exceeds a predefined threshold.

- Azure Monitor Dashboards: Azure Monitor provides similar functionalities to CloudWatch and Google Cloud Monitoring. For example, you can chart the “Function Execution Count” metric alongside the request duration and failure data collected through Application Insights to understand overall performance and spot issues that appear under concurrent load. The exact metric names available depend on the hosting plan, so check which concurrency-related or instance-count metrics your plan exposes, and set up alerts to be notified when they approach the configured limits.

- Datadog Dashboards: Datadog offers a more comprehensive approach, allowing users to combine metrics from various sources and create highly customized dashboards. Datadog can display detailed metrics like function invocation counts, latency, error rates, and resource utilization. A service map can visualize the dependencies between serverless functions and other services, helping to identify performance bottlenecks.
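The same data that feeds these dashboards can also be pulled programmatically. The sketch below assumes AWS and shows one way to build a query for the “ConcurrentExecutions” metric and flag the minutes in which it crossed a threshold. The helper names `concurrency_query` and `peaks_over` are hypothetical; the parameter names follow the CloudWatch `GetMetricStatistics` API, and the actual network call is left as a comment so the block runs without AWS credentials.

```python
from datetime import datetime, timedelta, timezone


def concurrency_query(function_name, minutes=60, period=60, now=None):
    """Build keyword arguments for CloudWatch GetMetricStatistics."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Lambda",
        "MetricName": "ConcurrentExecutions",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": period,            # seconds per datapoint
        "Statistics": ["Maximum"],   # peak concurrency within each period
    }


def peaks_over(datapoints, threshold):
    """Return (timestamp, value) pairs above `threshold`, oldest first."""
    hits = [(p["Timestamp"], p["Maximum"])
            for p in datapoints if p["Maximum"] > threshold]
    return sorted(hits)


# With boto3 installed and credentials configured, the query could be run as:
#   import boto3
#   response = boto3.client("cloudwatch").get_metric_statistics(
#       **concurrency_query("my-function"))
#   print(peaks_over(response["Datapoints"], threshold=800))
```

Using the `Maximum` statistic rather than `Average` matters here, because a short spike that causes throttling can disappear when values are averaged over the period.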

Outcome Summary

In conclusion, mastering serverless concurrency is paramount for unlocking the true power of serverless architectures. This comprehensive exploration has illuminated the key aspects of managing and monitoring concurrency, from understanding fundamental concepts to implementing advanced techniques. By applying the strategies outlined, developers can build robust, scalable, and cost-effective serverless applications. Continuous monitoring, strategic optimization, and proactive security measures are the cornerstones of success in this dynamic and rapidly evolving landscape, ensuring applications not only meet current demands but also adapt to future challenges with grace and efficiency.

Frequently Asked Questions

What is the difference between concurrency and parallelism in serverless?

Concurrency refers to the ability of a system to handle multiple tasks seemingly at the same time, often through time-slicing. Parallelism involves the actual simultaneous execution of multiple tasks, often utilizing multiple CPU cores or instances. In serverless, concurrency is the primary focus and usually means the number of invocations in flight at the same moment. Platforms typically serve these invocations from separate function instances on shared infrastructure, so the underlying parallelism is handled by the platform rather than by your code.

How does auto-scaling work in serverless functions?

Auto-scaling in serverless functions dynamically adjusts the number of function instances based on demand. Metrics like concurrent executions or queue lengths trigger scaling events. The platform automatically provisions more instances to handle increased load and scales down when demand decreases, optimizing resource utilization.
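A common rule of thumb for estimating how many concurrent executions a workload needs is to multiply the request rate by the average execution time (an application of Little's law). The sketch below works through that arithmetic; the function names and the example numbers are illustrative assumptions, not platform defaults.

```python
import math


def required_concurrency(requests_per_second, avg_duration_seconds):
    """Estimate steady-state concurrent executions: rate x average duration."""
    return requests_per_second * avg_duration_seconds


def headroom(requests_per_second, avg_duration_seconds, concurrency_limit):
    """Return how many concurrent executions remain below the limit.

    A negative result suggests the workload would be throttled at this rate.
    """
    needed = math.ceil(required_concurrency(requests_per_second,
                                            avg_duration_seconds))
    return concurrency_limit - needed


# Example: 200 requests/second at 0.5 s each needs about 100 concurrent
# executions, leaving 900 spare under a hypothetical limit of 1000.
print(required_concurrency(200, 0.5))
print(headroom(200, 0.5, 1000))
```

The estimate describes steady load only. Sudden bursts can need more instances than the average suggests, which is why it is usually combined with monitoring of the actual concurrency metrics.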

What are the common causes of throttled requests in serverless?

Throttled requests in serverless are typically caused by exceeding platform-specific concurrency limits or hitting resource quotas. These limits are in place to protect the underlying infrastructure and prevent abuse. Monitoring and adjusting concurrency limits, optimizing function code, and implementing retry strategies can help mitigate throttling.
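One widely used retry strategy for throttled calls is exponential backoff with jitter. The following is a minimal sketch of that idea: `ThrottledError` is a hypothetical stand-in for whatever throttling error your platform or SDK raises (for example an HTTP 429 response), and the default delays are arbitrary values chosen for illustration.

```python
import random
import time


class ThrottledError(Exception):
    """Hypothetical stand-in for a platform throttling error (e.g. HTTP 429)."""


def call_with_backoff(fn, max_attempts=5, base_delay=0.1, max_delay=5.0,
                      sleep=time.sleep, rng=random.random):
    """Call `fn`, retrying on ThrottledError with exponential backoff.

    The wait before retry k is a random fraction of
    min(max_delay, base_delay * 2**k), often called "full jitter".
    """
    for attempt in range(max_attempts):
        try:
            return fn()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng() * cap)
```

The random jitter spreads retries from many clients over time, so that they do not all return at the same instant and trigger another round of throttling.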

How can I test the concurrency of my serverless functions?

Concurrency testing involves simulating multiple concurrent requests to your serverless functions and observing their behavior. Tools like load testing frameworks (e.g., JMeter, Gatling) or platform-specific testing tools can be used to generate traffic and monitor metrics like concurrent executions, latency, and error rates. Analyzing these metrics will help identify performance bottlenecks.
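For a quick experiment before reaching for a full load testing framework, a small script can fire requests in parallel and record the basics. The sketch below is one possible shape for such a harness, using only the Python standard library; `run_load_test` and `fake_invoke` are hypothetical names, and `fake_invoke` is a local stand-in that you would replace with a real call to your function (for example an HTTP request to its endpoint). Note that it measures in-flight calls on the client side, which only approximates the concurrency the platform reports.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_load_test(invoke, total_requests, max_workers):
    """Call `invoke(i)` for i in range(total_requests) using a thread pool."""
    lock = threading.Lock()
    in_flight = 0
    peak = 0
    errors = 0
    latencies = []

    def one_call(i):
        nonlocal in_flight, peak, errors
        with lock:
            in_flight += 1
            peak = max(peak, in_flight)
        start = time.perf_counter()
        try:
            invoke(i)
            ok = True
        except Exception:
            ok = False
        elapsed = time.perf_counter() - start
        with lock:
            in_flight -= 1
            latencies.append(elapsed)
            if not ok:
                errors += 1

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        list(pool.map(one_call, range(total_requests)))

    latencies.sort()
    # Nearest-rank style 95th percentile; None when nothing was measured.
    p95 = latencies[min(len(latencies) - 1, int(0.95 * len(latencies)))] if latencies else None
    return {"requests": total_requests, "errors": errors,
            "peak_in_flight": peak, "p95_seconds": p95}


def fake_invoke(i):
    """Stand-in for a real function call: sleeps, and fails every 10th request."""
    time.sleep(0.01)
    if i % 10 == 0:
        raise RuntimeError("simulated failure")


result = run_load_test(fake_invoke, total_requests=50, max_workers=8)
print(result)
```

Raising `max_workers` step by step while watching the error count and the 95th percentile latency gives a rough picture of where the function starts to degrade.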