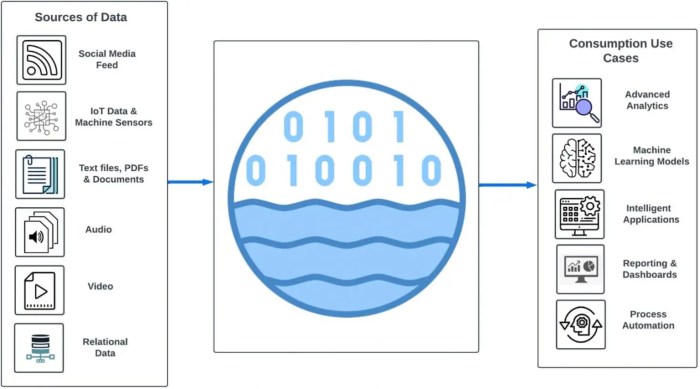

The modern data landscape demands efficient and scalable solutions for managing vast quantities of information. Ingesting data into a data lake, a centralized repository for diverse data, is a critical first step in harnessing the power of analytics and business intelligence. Serverless computing simplifies this step: it removes most infrastructure management, scales with demand, and bills by usage.

This guide delves into the intricacies of serverless data ingestion, exploring its core principles, practical implementations, and real-world applications.

We’ll explore the benefits of serverless architectures, examining how they streamline data pipelines and optimize resource utilization. This includes selecting appropriate serverless services from major cloud providers, designing ingestion patterns for various data sources, and implementing end-to-end pipelines. Furthermore, we’ll address crucial aspects such as data transformation, security, monitoring, error handling, and cost optimization, equipping you with the knowledge to build robust and efficient serverless data ingestion solutions.

Introduction to Serverless Data Ingestion into Data Lakes

Serverless data ingestion into data lakes represents a paradigm shift in how organizations handle the continuous flow of data. It leverages the principles of serverless computing to automate and optimize the process of collecting, transforming, and loading data into a data lake. This approach focuses on agility, scalability, and cost-effectiveness, making it an attractive option for modern data architectures.

Core Concepts of Serverless Computing in Data Ingestion

Serverless computing, at its core, is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. Developers write and deploy code without the need to manage underlying infrastructure, such as servers, operating systems, or virtual machines. This abstraction allows them to focus solely on the application logic. In the context of data ingestion, serverless functions are triggered by events, such as the arrival of a new file in a storage bucket or a message published to a queue.

These functions then execute the necessary data processing tasks, such as validation, transformation, and loading into the data lake. The cloud provider automatically scales the resources based on the workload demand, ensuring optimal performance and cost efficiency.
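As a rough illustration of the event-driven model described above, the following sketch inspects a Lambda-style event and reports which service produced it. The function name `describe_trigger` and the sample events are made up for this example; the `eventSource` field is what S3, SQS, and Kinesis records carry, while SNS records spell it `EventSource`.

```python
from typing import Any


def describe_trigger(event: dict[str, Any]) -> str:
    """Return a short label for the service that produced a Lambda-style event."""
    records = event.get("Records") or []
    if not records:
        # Scheduled rules and direct invocations carry no "Records" list.
        return "scheduled-or-direct"
    first = records[0]
    # S3, SQS, and Kinesis records use "eventSource"; SNS uses "EventSource".
    source = first.get("eventSource") or first.get("EventSource") or "unknown"
    return source.removeprefix("aws:")


# Trimmed-down sample events (real events contain many more fields).
s3_event = {"Records": [{"eventSource": "aws:s3",
                         "s3": {"bucket": {"name": "raw"}, "object": {"key": "a.csv"}}}]}
sqs_event = {"Records": [{"eventSource": "aws:sqs", "messageId": "1", "body": "{}"}]}

print(describe_trigger(s3_event))   # s3
print(describe_trigger(sqs_event))  # sqs
print(describe_trigger({}))         # scheduled-or-direct
```

In practice each trigger usually gets its own function, so a dispatcher like this is mostly useful for logging or for a single function that is deliberately shared between triggers.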

Serverless vs. Traditional Data Ingestion Methods

Traditional data ingestion methods typically involve setting up and maintaining dedicated infrastructure, such as on-premises servers or virtual machines, to handle the data pipeline. This approach often requires significant upfront investment, ongoing maintenance, and manual scaling efforts. Serverless data ingestion offers several advantages over traditional methods.

- Cost Efficiency: Serverless solutions often utilize a pay-per-use pricing model. Users are charged only for the actual compute time and resources consumed, leading to significant cost savings, especially during periods of low activity or when the workload is spiky. Traditional methods often involve paying for provisioned resources, even when they are idle.

- Scalability: Serverless platforms automatically scale resources based on demand. This ensures that the data ingestion pipeline can handle large volumes of data without manual intervention. Traditional methods often require manual scaling, which can be time-consuming and prone to errors.

- Reduced Operational Overhead: Serverless platforms manage the underlying infrastructure, including server provisioning, patching, and monitoring. This reduces the operational burden on the data engineering team, allowing them to focus on data-related tasks. Traditional methods require teams to manage the infrastructure, which can be complex and time-consuming.

- Faster Time-to-Market: Serverless solutions can be deployed quickly, allowing organizations to ingest data into the data lake faster. The reduced operational overhead and automated scaling capabilities also contribute to faster development cycles.

However, serverless data ingestion also has some potential drawbacks.

- Vendor Lock-in: Serverless platforms are often vendor-specific, which can lead to vendor lock-in. Organizations may find it difficult to migrate their data ingestion pipelines to another platform.

- Cold Starts: Serverless functions can experience cold starts, the extra delay incurred when the platform must initialize a new execution environment, for example on the first invocation or after a period of inactivity. This can impact the performance of data ingestion pipelines, especially when processing data in real-time.

- Limited Control: Serverless platforms provide limited control over the underlying infrastructure. This can make it difficult to optimize performance or troubleshoot issues.

Benefits of Serverless Approach for Data Lake Ingestion

Employing a serverless approach for data lake ingestion yields significant advantages, particularly in terms of scalability and cost-effectiveness. These benefits translate into a more agile and efficient data management strategy.

- Scalability: Serverless data ingestion platforms automatically scale resources based on demand. For example, if a large batch of data needs to be ingested, the platform automatically allocates more compute resources to handle the increased workload. This lets the data ingestion pipeline absorb large swings in volume without manual intervention, within the platform's concurrency and throughput quotas. This is particularly beneficial for organizations that experience fluctuating data volumes.

- Cost-Effectiveness: Serverless solutions typically employ a pay-per-use pricing model, so organizations pay only for the compute time and resources actually consumed. If a data ingestion pipeline is idle for a period, no compute charges are incurred, although stored data is still billed. This cost structure can lead to significant savings compared to traditional methods, especially for workloads with fluctuating demands. For example, a company ingesting sensor data may see significant cost reductions during off-peak hours.

- Reduced Operational Overhead: Serverless platforms manage the underlying infrastructure, reducing the burden on the data engineering team. This allows them to focus on tasks like data transformation and analysis, rather than infrastructure management.

- Improved Agility: Serverless solutions can be deployed quickly and easily, enabling organizations to rapidly ingest data into their data lakes. This agility allows for faster time-to-market for new data-driven initiatives.

- Simplified Monitoring and Management: Serverless platforms often provide built-in monitoring and management tools, simplifying the process of tracking performance and troubleshooting issues.

The combination of these benefits makes serverless data ingestion a compelling choice for organizations seeking to build scalable, cost-effective, and agile data pipelines.
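To make the pay-per-use model concrete, here is a small back-of-the-envelope calculation. It assumes the common function-as-a-service billing shape of a per-request charge plus a charge per GB-second of execution. The two prices used below are placeholders chosen for easy arithmetic, not any provider's actual rates.

```python
def estimate_monthly_compute_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Estimate compute cost as (GB-seconds * rate) + (requests * rate)."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return gb_seconds * price_per_gb_second + request_cost


# Hypothetical prices for illustration only; check the provider's pricing page.
PRICE_PER_GB_SECOND = 0.00002
PRICE_PER_MILLION_REQUESTS = 0.25

busy_month = estimate_monthly_compute_cost(
    invocations=2_000_000, avg_duration_ms=500, memory_mb=512,
    price_per_gb_second=PRICE_PER_GB_SECOND,
    price_per_million_requests=PRICE_PER_MILLION_REQUESTS,
)
idle_month = estimate_monthly_compute_cost(
    invocations=0, avg_duration_ms=500, memory_mb=512,
    price_per_gb_second=PRICE_PER_GB_SECOND,
    price_per_million_requests=PRICE_PER_MILLION_REQUESTS,
)
print(round(busy_month, 2), idle_month)
```

With these placeholder rates, two million half-second invocations at 512 MB amount to 500,000 GB-seconds, and an idle month costs nothing for compute. Storage and data transfer are billed separately and are not part of this estimate.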

Choosing the Right Serverless Services

Selecting the appropriate serverless services for data ingestion into a data lake is crucial for optimizing performance, minimizing costs, and ensuring scalability. This process involves carefully evaluating the characteristics of the data sources, the required transformation and processing steps, and the specific features offered by each cloud provider. Understanding the strengths and limitations of different serverless options allows for the creation of a robust and efficient data ingestion pipeline. Serverless computing offers a compelling approach to data ingestion, allowing developers to focus on the code and data processing logic rather than managing infrastructure.

The major cloud providers – Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) – each offer a suite of serverless services tailored for data ingestion, providing a range of options to suit diverse requirements.

Identifying Key Serverless Services

Each major cloud provider offers a comprehensive set of serverless services designed to facilitate data ingestion. These services cater to different aspects of the ingestion pipeline, from triggering events and processing data to storing and managing the ingested data.

- AWS: AWS provides a robust ecosystem of serverless services, including:

  - AWS Lambda: A compute service that allows you to run code without provisioning or managing servers. It is triggered by various events, such as object creation in S3, updates to DynamoDB tables, or messages in SQS queues.

  - Amazon S3: An object storage service designed for storing and retrieving any amount of data. It is a common landing zone for ingested data.

  - Amazon Kinesis Data Streams: A real-time data streaming service that enables you to collect, process, and analyze streaming data.

  - Amazon EventBridge: A serverless event bus that makes it easier to build event-driven applications.

  - Amazon Simple Queue Service (SQS): A fully managed message queuing service that enables you to decouple and scale microservices, distributed systems, and serverless applications.

- Azure: Microsoft Azure offers a comprehensive set of serverless services:

  - Azure Functions: A serverless compute service that allows you to run code on-demand without managing infrastructure. It can be triggered by various events, such as HTTP requests, messages in Azure Service Bus, or updates to Azure Blob Storage.

  - Azure Blob Storage: An object storage service for storing unstructured data. It is often used as a staging area for ingested data.

  - Azure Event Hubs: A big data streaming platform and event ingestion service, capable of receiving and processing millions of events per second.

  - Azure Service Bus: A fully managed enterprise integration service that enables you to build decoupled and scalable applications.

  - Azure Logic Apps: A cloud service that helps you automate tasks and integrate apps, data, systems, and services across enterprises or organizations.

- GCP: Google Cloud Platform provides a powerful set of serverless services:

  - Cloud Functions: A serverless compute service that allows you to run code on Google’s infrastructure without managing servers. It can be triggered by events from Cloud Storage, Pub/Sub, or HTTP requests.

  - Cloud Storage: An object storage service for storing unstructured data. Similar to S3 and Azure Blob Storage, it serves as a landing zone.

  - Cloud Pub/Sub: A real-time messaging service that allows you to send and receive messages between independent applications.

  - Cloud Dataflow: A fully managed, serverless stream and batch data processing service.

  - Cloud Scheduler: A fully managed cron job service that allows you to schedule tasks at regular intervals.

Factors in Service Selection

Choosing the right serverless services involves considering several key factors. These factors influence the efficiency, cost-effectiveness, and overall performance of the data ingestion pipeline.

- Data Source Characteristics: The nature of the data source plays a significant role in service selection.

  - Data Volume: High-volume data sources may require services capable of handling massive data streams, such as Kinesis Data Streams, Azure Event Hubs, or Cloud Pub/Sub.

  - Data Velocity: Real-time or near-real-time data streams necessitate services designed for high-throughput processing, such as AWS Lambda in conjunction with Kinesis, Azure Functions with Event Hubs, or Cloud Functions with Pub/Sub.

  - Data Variety: The format of the data (e.g., structured, semi-structured, unstructured) influences the choice of storage and processing services. S3, Azure Blob Storage, and Cloud Storage are suitable for various data formats.

- Ingestion Scenario: The specific requirements of the ingestion process guide service selection.

  - Batch Ingestion: For periodic data loads, services like AWS Lambda, Azure Functions, or Cloud Functions, triggered by scheduled events or file uploads, are appropriate.

  - Real-time Ingestion: For continuous data streams, streaming services like Kinesis Data Streams, Azure Event Hubs, or Cloud Pub/Sub are preferred, often used in conjunction with Lambda, Functions, or Cloud Functions for processing.

  - Event-Driven Ingestion: Services that respond to specific events, such as file uploads to S3 or Blob Storage, are suitable. This includes Lambda triggered by S3 events, Azure Functions triggered by Blob Storage events, or Cloud Functions triggered by Cloud Storage events.

- Data Transformation and Processing Requirements: The complexity of data transformations impacts the selection of compute services.

  - Simple Transformations: Basic data cleaning and formatting can be handled by Lambda, Functions, or Cloud Functions.

  - Complex Transformations: For intricate transformations, consider services like AWS Glue, Azure Data Factory, or Cloud Dataflow.

- Cost Considerations: Serverless services offer pay-per-use pricing models. The cost of a data ingestion pipeline depends on several factors.

  - Compute Time: The duration of code execution, which is a key cost driver for Lambda, Functions, and Cloud Functions.

  - Storage Costs: The amount of data stored in S3, Azure Blob Storage, or Cloud Storage.

  - Data Transfer Costs: The cost of transferring data between services.

- Scalability and Availability: Ensure the selected services can handle expected data volumes and provide high availability. Serverless services inherently offer scalability, but consider the service’s limitations.

- Integration Capabilities: Assess the ease with which services can be integrated with other components of the data lake, such as data processing engines (e.g., Spark, Hadoop), data warehouses, and reporting tools.
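The selection factors above can be condensed into a rule-of-thumb lookup. The sketch below is only a starting point for discussion: the function `suggest_services` and its two boolean inputs are invented for this example, and a real decision would also weigh cost, quotas, and integration needs.

```python
# Service names per provider, taken from the factors listed above.
STREAMING = {"aws": "Kinesis Data Streams", "azure": "Event Hubs", "gcp": "Pub/Sub"}
FUNCTIONS = {"aws": "Lambda", "azure": "Azure Functions", "gcp": "Cloud Functions"}
HEAVY_ETL = {"aws": "AWS Glue", "azure": "Azure Data Factory", "gcp": "Dataflow"}
STORAGE = {"aws": "S3", "azure": "Blob Storage", "gcp": "Cloud Storage"}


def suggest_services(provider: str, real_time: bool, complex_transforms: bool) -> list[str]:
    """Return a candidate service chain: [stream], processor, storage."""
    services = []
    if real_time:
        # Continuous streams go through a streaming service first.
        services.append(STREAMING[provider])
    # Simple cleaning fits a function; intricate transformations suggest an ETL service.
    services.append(HEAVY_ETL[provider] if complex_transforms else FUNCTIONS[provider])
    services.append(STORAGE[provider])
    return services


print(suggest_services("aws", real_time=True, complex_transforms=False))
print(suggest_services("gcp", real_time=False, complex_transforms=True))
```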

Comparison of Serverless Services

The following table provides a comparative overview of key serverless services offered by AWS, Azure, and GCP, highlighting their features, pricing models, and use cases. This comparison helps in selecting the most suitable services for different data ingestion requirements.

| Service | Provider | Features | Pricing Model | Use Cases |
|---|---|---|---|---|
| AWS Lambda | AWS | Event-driven compute service; supports multiple programming languages; integrates with various AWS services. | Pay-per-use based on compute time, memory allocation, and requests. | Data transformation, real-time processing, scheduled tasks, triggered by S3 uploads, Kinesis streams, etc. |
| Amazon S3 | AWS | Object storage service; highly scalable and durable; integrates with other AWS services. | Pay-per-use based on storage used, requests, and data transfer. | Data landing zone, archival, data lake storage. |
| Amazon Kinesis Data Streams | AWS | Real-time data streaming service; captures, processes, and analyzes streaming data. | Per shard-hour plus PUT payload units (provisioned mode), or per GB ingested and retrieved (on-demand mode). | Real-time data ingestion, log processing, clickstream analysis. |
| Azure Functions | Azure | Event-driven compute service; supports multiple programming languages; integrates with various Azure services. | Pay-per-use based on compute time, memory allocation, and executions. | Data transformation, real-time processing, scheduled tasks, triggered by Blob Storage uploads, Event Hubs, etc. |
| Azure Blob Storage | Azure | Object storage service; highly scalable and durable; integrates with other Azure services. | Pay-per-use based on storage used, requests, and data transfer. | Data landing zone, archival, data lake storage. |
| Azure Event Hubs | Azure | Big data streaming platform; ingests and processes high volumes of data. | Billed per provisioned throughput unit per hour, plus ingress events on some tiers. | Real-time data ingestion, IoT data processing, log aggregation. |
| Cloud Functions | GCP | Event-driven compute service; supports multiple programming languages; integrates with various GCP services. | Pay-per-use based on compute time, memory allocation, and invocations. | Data transformation, real-time processing, scheduled tasks, triggered by Cloud Storage uploads, Pub/Sub, etc. |
| Cloud Storage | GCP | Object storage service; highly scalable and durable; integrates with other GCP services. | Pay-per-use based on storage used, requests, and data transfer. | Data landing zone, archival, data lake storage. |
| Cloud Pub/Sub | GCP | Real-time messaging service; enables asynchronous communication between applications. | Pay-per-use based on the volume of data published and delivered. | Real-time data ingestion, event-driven architectures, IoT data processing. |

Data Sources and Serverless Ingestion Patterns

Ingesting data into a data lake requires understanding the various data sources and selecting the appropriate serverless ingestion patterns. The choice of pattern depends on factors such as data volume, velocity, variety, and the required latency. This section details different data source types and designs serverless architectures for ingesting data from them, emphasizing event-driven and scheduled approaches.

Streaming Data Ingestion

Streaming data, characterized by its continuous and real-time nature, demands specific ingestion patterns to ensure timely data availability within the data lake. These patterns focus on processing data as it arrives, enabling real-time analytics and insights.

- Data Source: Real-time event streams from various sources such as IoT devices, social media feeds, application logs, and clickstream data.

- Serverless Ingestion Method: Event-driven ingestion using services like Amazon Kinesis Data Streams or a managed Apache Kafka service (for example, Amazon MSK).

- Architecture:

  - Data streams are ingested into Kinesis Data Streams or Kafka topics.

  - AWS Lambda functions subscribe to these streams.

  - Lambda functions process the data, perform transformations (e.g., filtering, aggregation), and write the results to the data lake (e.g., Amazon S3).

  - Consider using Kinesis Data Firehose (now named Amazon Data Firehose) for direct ingestion into the data lake, providing options for data transformation and batching.

- Advantages: Low latency, real-time processing, scalability, and cost-effectiveness.

- Example: Analyzing sensor data from a manufacturing plant to detect anomalies in real-time, using AWS IoT Core to publish sensor data to Kinesis Data Streams, triggering a Lambda function for processing and writing to Amazon S3.
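The processing step in the sensor example above could look roughly like the following sketch. Kinesis delivers each record's payload base64-encoded under `record["kinesis"]["data"]`. The `temperature_c` field, the 90-degree threshold, and the helper names are assumptions made for illustration, and the final write to S3 is left out so the logic can run locally.

```python
import base64
import json


def decode_kinesis_records(event: dict) -> list[dict]:
    """Decode the base64-encoded JSON payload of every record in the event."""
    readings = []
    for record in event.get("Records", []):
        payload = base64.b64decode(record["kinesis"]["data"])
        readings.append(json.loads(payload))
    return readings


def find_anomalies(readings: list[dict], max_temperature_c: float = 90.0) -> list[dict]:
    """Keep readings whose (hypothetical) temperature field exceeds the threshold."""
    return [r for r in readings if r.get("temperature_c", 0.0) > max_temperature_c]


def to_ndjson(readings: list[dict]) -> str:
    """Serialize readings as newline-delimited JSON, a common data lake format."""
    return "".join(json.dumps(r, sort_keys=True) + "\n" for r in readings)


def make_record(reading: dict) -> dict:
    """Build a minimal Kinesis-style record for local testing."""
    data = base64.b64encode(json.dumps(reading).encode("utf-8")).decode("ascii")
    return {"kinesis": {"data": data}}


sample_event = {"Records": [
    make_record({"sensor": "press-1", "temperature_c": 71.2}),
    make_record({"sensor": "press-2", "temperature_c": 96.4}),
]}

readings = decode_kinesis_records(sample_event)
anomalies = find_anomalies(readings)
print(to_ndjson(anomalies), end="")
```

Inside a real handler, the NDJSON string would then be written to the data lake, for instance with a single `put_object` call per batch rather than one object per record.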

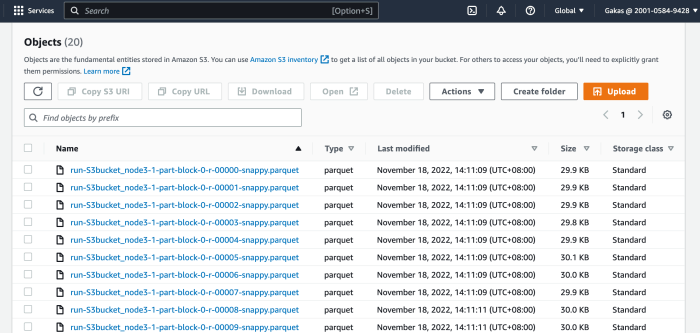

Batch File Ingestion

Batch file ingestion involves processing large files that are typically generated periodically. Efficiently handling batch files is crucial for loading historical data and regular updates.

- Data Source: Files stored in various formats (CSV, JSON, Parquet, etc.) from on-premises systems, external vendors, or other cloud storage services.

- Serverless Ingestion Method: Scheduled ingestion using services like AWS Lambda, AWS Step Functions, or AWS Glue.

- Architecture:

  - Files are uploaded to a designated location in the data lake (e.g., Amazon S3).

  - A scheduled trigger (e.g., an Amazon EventBridge rule, formerly CloudWatch Events) activates a Lambda function or starts a Step Functions workflow.

  - The Lambda function or Step Functions workflow processes the files: parsing, transforming, and loading data into the data lake. AWS Glue can be used for ETL (Extract, Transform, Load) operations, including data cataloging.

  - For large files, consider using AWS Glue with dynamic partitioning or Amazon EMR Serverless for optimized processing.

- Advantages: Scalable processing of large datasets, support for various file formats, and cost-effective for periodic data loads.

- Example: Loading daily sales data from a CSV file stored in an S3 bucket, using a scheduled EventBridge (formerly CloudWatch Events) rule to trigger a Lambda function that reads the file, transforms the data, and stores it in Parquet format in the data lake.
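A scheduled function first has to find the files that arrived for the period it is processing. The sketch below lists the CSV keys under a date-based prefix by following the continuation tokens of S3's `list_objects_v2` call. The prefix layout `incoming/sales/YYYY/MM/DD/` and the `FakeS3` class are assumptions so that the example runs without AWS credentials. In a real function the client would be `boto3.client("s3")`.

```python
from datetime import date


def daily_prefix(day: date, root: str = "incoming/sales") -> str:
    """Build the (assumed) date-partitioned prefix for one day's files."""
    return f"{root}/{day:%Y/%m/%d}/"


def list_keys(s3_client, bucket: str, prefix: str, suffix: str = ".csv") -> list[str]:
    """Collect all keys under a prefix, following pagination tokens."""
    keys = []
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        for obj in page.get("Contents", []):
            if obj["Key"].endswith(suffix):
                keys.append(obj["Key"])
        if not page.get("IsTruncated"):
            return keys
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


class FakeS3:
    """Hypothetical stand-in for boto3.client("s3") that serves keys in pages."""

    def __init__(self, pages: list[list[str]]):
        self.pages = pages

    def list_objects_v2(self, **kwargs):
        index = int(kwargs.get("ContinuationToken", "0"))
        response = {"Contents": [{"Key": key} for key in self.pages[index]]}
        response["IsTruncated"] = index + 1 < len(self.pages)
        if response["IsTruncated"]:
            response["NextContinuationToken"] = str(index + 1)
        return response


prefix = daily_prefix(date(2024, 3, 5))
fake = FakeS3([[prefix + "north.csv", prefix + "notes.txt"], [prefix + "south.csv"]])
print(list_keys(fake, "landing-bucket", prefix))
```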

Database Ingestion

Ingesting data from databases involves capturing changes made to the data within the database. This is often achieved using Change Data Capture (CDC) mechanisms.

- Data Source: Relational databases (e.g., PostgreSQL, MySQL, SQL Server) and NoSQL databases (e.g., MongoDB, Cassandra).

- Serverless Ingestion Method: Event-driven or scheduled ingestion using services like AWS Database Migration Service (DMS), AWS Lambda, and AWS Glue.

- Architecture:

  - Event-Driven:

    - Utilize CDC tools or database triggers to capture changes.

    - Changes are published to a message queue (e.g., Amazon SQS) or a stream (e.g., Kinesis Data Streams).

    - A Lambda function consumes the messages/events, transforms the data, and writes to the data lake.

  - Scheduled:

    - Use AWS DMS to replicate the data to the data lake.

    - Schedule a Lambda function to query the database and load data to the data lake.

    - AWS Glue can be used for ETL tasks, connecting to various databases and writing to the data lake.

- Advantages: Near real-time data replication, minimal impact on the source database, and scalability.

- Example: Capturing changes in a customer database, using a database trigger to publish changes to an SQS queue, and a Lambda function that reads the queue and updates customer information in the data lake.
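For the scheduled variant, where a function queries the database periodically, a simple way to avoid reloading everything is to remember a high-water mark and fetch only rows changed since then. This is timestamp-based polling rather than log-based CDC, so it does not see deletes. The sketch uses Python's built-in `sqlite3` as a stand-in for the source database, and the `customers` table and its `updated_at` column are assumptions.

```python
import sqlite3


def fetch_changes(conn: sqlite3.Connection, last_watermark: str):
    """Return rows modified after the watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # ISO 8601 timestamps sort correctly as plain strings.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


# In-memory database with a few sample rows, for local experimentation only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Ada", "2024-01-01T10:00:00"),
        (2, "Grace", "2024-01-02T09:30:00"),
        (3, "Linus", "2024-01-03T08:15:00"),
    ],
)

rows, watermark = fetch_changes(conn, "2024-01-01T23:59:59")
print([row[0] for row in rows], watermark)

# A second run with the stored watermark finds nothing new.
later_rows, later_watermark = fetch_changes(conn, watermark)
print(later_rows, later_watermark)
```

The watermark has to be stored somewhere durable between runs, for example in a small object in the data lake or a parameter store, since function instances do not keep state.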

API Ingestion

APIs provide a structured way to access data from various applications and services. Ingesting data from APIs often involves handling rate limits, authentication, and data transformation.

- Data Source: Third-party APIs (e.g., social media APIs, weather APIs, financial data APIs) and internal APIs.

- Serverless Ingestion Method: Scheduled ingestion or event-driven ingestion using AWS Lambda, AWS Step Functions, and API Gateway.

- Architecture:

  - Scheduled:

    - Use a scheduled Amazon EventBridge (formerly CloudWatch Events) rule to trigger a Lambda function periodically.

    - The Lambda function calls the API, handles authentication and rate limits, parses the API response, transforms the data, and writes it to the data lake.

  - Event-Driven:

    - Use API Gateway to expose the API.

    - Configure API Gateway to trigger a Lambda function upon API calls.

    - The Lambda function processes the request, calls the external API, transforms the response, and writes to the data lake.

- Advantages: Automation of API data retrieval, handling of API-specific complexities, and cost-effectiveness.

- Example: Fetching daily weather data from a weather API, using a scheduled EventBridge (formerly CloudWatch Events) rule to trigger a Lambda function that calls the API, parses the JSON response, and stores the data in an S3 bucket.
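Handling rate limits usually means retrying with increasing delays. The following sketch wraps any fetch function in exponential backoff and honors a server-supplied retry delay when one is available. The `RateLimited` exception and the `fetch` callable are placeholders: a real function would raise the exception after seeing an HTTP 429 response from whichever HTTP client it uses.

```python
import time


class RateLimited(Exception):
    """Raised by the caller-supplied fetch function on an HTTP 429 response."""

    def __init__(self, retry_after=None):
        super().__init__("rate limited")
        self.retry_after = retry_after  # seconds suggested by the API, if any


def call_with_backoff(fetch, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch(), retrying on RateLimited with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fetch()
        except RateLimited as exc:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the platform's retry/DLQ handling take over
            if exc.retry_after is not None:
                delay = exc.retry_after
            else:
                delay = base_delay * 2 ** attempt
            sleep(delay)


# Simulated API: two rate-limit responses, then a payload.
responses = iter([RateLimited(), RateLimited(retry_after=5), {"temp_c": 21.5}])


def fake_fetch():
    item = next(responses)
    if isinstance(item, Exception):
        raise item
    return item


waits = []
result = call_with_backoff(fake_fetch, sleep=waits.append)  # record delays instead of sleeping
print(result, waits)
```

Because waiting time is billed execution time in a function, the total backoff budget should stay well below the function timeout. For long waits, a workflow service with a wait state is a better fit than sleeping inside the function.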

Implementing Serverless Data Ingestion Pipelines

Building robust and efficient serverless data ingestion pipelines is crucial for organizations seeking to harness the power of their data lakes. This section provides a structured approach to implementing these pipelines, detailing the necessary steps and illustrating them with practical code examples. The focus is on creating scalable, cost-effective, and maintainable solutions for moving data from various sources into a data lake.

Organizing the Steps in Building a Serverless Data Ingestion Pipeline

The process of building a serverless data ingestion pipeline involves a series of well-defined steps. Each step plays a critical role in ensuring data integrity, performance, and overall pipeline success. These steps are typically iterative and may require adjustments based on specific data source characteristics and data lake requirements.

- Data Source Identification and Assessment: This initial step involves identifying the data sources, such as databases, APIs, or streaming services. The assessment includes evaluating the data volume, velocity, and variety (the “3 Vs” of big data) to determine the optimal ingestion strategy. For example, a high-volume, real-time streaming source might require a different approach than a batch-oriented database.

- Service Selection and Architecture Design: Choose the appropriate serverless services based on the data source and requirements. This may involve services like AWS Lambda, Azure Functions, or Google Cloud Functions for processing data, along with services like Amazon S3, Azure Data Lake Storage, or Google Cloud Storage for storing the data in the data lake. Design the architecture, including the workflow and data flow, considering factors like scalability, fault tolerance, and cost optimization.

- Function Development and Configuration: Develop the serverless functions that will handle data ingestion, transformation, and loading. Configure the functions with the necessary triggers (e.g., object creation in S3, scheduled events) and permissions (e.g., access to data sources and data lake storage).

- Data Transformation and Validation: Implement data transformation logic within the serverless functions. This might involve cleaning, filtering, enriching, and converting data to a suitable format for the data lake. Data validation ensures data quality and integrity by checking for missing values, incorrect data types, or other inconsistencies.

- Data Loading into the Data Lake: Load the transformed data into the data lake storage. This step typically involves writing the data in a format suited to querying and analysis (e.g., Parquet or Avro, or CSV where simplicity matters more than scan performance).

- Monitoring and Logging: Implement comprehensive monitoring and logging to track the pipeline’s performance, identify errors, and troubleshoot issues. Use tools like CloudWatch (AWS), Azure Monitor, or Google Cloud Logging to collect and analyze logs and metrics.

- Testing and Deployment: Thoroughly test the pipeline in a development or staging environment before deploying it to production. This includes testing the functionality, performance, and security of the pipeline. Deploy the pipeline using infrastructure-as-code tools or automated deployment processes.

- Optimization and Iteration: Continuously monitor and optimize the pipeline’s performance and cost. Iterate on the design and implementation based on feedback, changing data source characteristics, and evolving business requirements. This might involve adjusting function memory allocation, optimizing data transformation logic, or changing the data storage format.
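The validation step described above can be as simple as a function that returns a list of problems per record, so that bad rows can be routed to a quarantine location instead of failing the whole batch. The schema below (`order_id`, `amount`, `created_at`) is a made-up example.

```python
from datetime import datetime

# Hypothetical schema: field name -> accepted Python types.
REQUIRED_FIELDS = {
    "order_id": (int,),
    "amount": (int, float),
    "created_at": (str,),
}


def validate_record(record: dict) -> list[str]:
    """Return a list of validation errors (empty when the record is valid)."""
    errors = []
    for field, accepted_types in REQUIRED_FIELDS.items():
        value = record.get(field)
        if value is None:
            errors.append(f"missing {field}")
        elif not isinstance(value, accepted_types):
            errors.append(f"{field} has the wrong type")
    created_at = record.get("created_at")
    if isinstance(created_at, str):
        try:
            datetime.fromisoformat(created_at)
        except ValueError:
            errors.append("created_at is not an ISO 8601 timestamp")
    return errors


def split_valid_invalid(records: list[dict]):
    """Partition records into (valid, invalid-with-errors)."""
    valid, invalid = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            invalid.append({"record": record, "errors": errors})
        else:
            valid.append(record)
    return valid, invalid


good = {"order_id": 1, "amount": 9.5, "created_at": "2024-01-01T00:00:00"}
bad = {"order_id": "x", "created_at": "yesterday"}
valid, invalid = split_valid_invalid([good, bad])
print(len(valid), invalid[0]["errors"])
```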

Creating a Step-by-Step Guide for Configuring a Serverless Function

Configuring a serverless function to process and load data into a data lake involves several key steps. The specific implementation will vary depending on the chosen cloud provider and programming language, but the fundamental principles remain the same. The following guide provides a general overview using Python and AWS Lambda as an example.

- Choose a Cloud Provider and Service: Select a cloud provider (e.g., AWS, Azure, Google Cloud) and the corresponding serverless function service (e.g., AWS Lambda). This decision should align with the organization’s existing infrastructure and expertise.

- Create a Function: Using the cloud provider’s console or CLI, create a new function. Specify the function name, runtime (e.g., a currently supported Python or Node.js version), and the desired memory allocation.

- Configure the Trigger: Define the trigger that will initiate the function execution. Common triggers include:

  - Object creation in a storage service (e.g., S3): The function is triggered when a new object is uploaded to an S3 bucket.

  - Scheduled events: The function is triggered based on a schedule (e.g., hourly, daily).

  - API Gateway: The function is invoked through an API endpoint.

- Define IAM Permissions: Grant the function the necessary permissions to access resources, such as the data source (e.g., database) and the data lake storage (e.g., S3 bucket). This is typically done using IAM roles. Ensure least privilege access is used.

- Write the Code: Develop the function code to:

  - Read data from the data source.

  - Transform the data (e.g., clean, filter, format).

  - Load the transformed data into the data lake storage.

- Deploy the Function: Upload the function code and any dependencies (e.g., libraries) to the cloud provider.

- Test the Function: Test the function by triggering it manually or by uploading a test data file to the storage service. Verify that the function executes successfully and that the data is loaded into the data lake correctly.

- Monitor and Optimize: Monitor the function’s performance and resource usage. Optimize the function’s code and configuration to improve performance and reduce costs.
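For the IAM permissions step, least privilege for a simple S3-to-S3 ingestion function can mean read access to the landing bucket and write access to one prefix of the data lake bucket, and nothing else. The sketch below builds such a policy document as a Python dictionary. The bucket names and the `raw/` prefix are placeholders, and permissions for logging are omitted here (on AWS they are typically attached through a separate managed policy).

```python
import json


def build_ingest_policy(source_bucket: str, lake_bucket: str, lake_prefix: str = "raw/") -> dict:
    """Build an IAM policy allowing reads from the source and writes to one lake prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadLandingObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{source_bucket}/*",
            },
            {
                "Sid": "WriteLakeObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{lake_bucket}/{lake_prefix}*",
            },
        ],
    }


# Placeholder bucket names for illustration.
policy = build_ingest_policy("your-landing-bucket", "your-data-lake-bucket")
print(json.dumps(policy, indent=2))
```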

Sharing Code Snippets Demonstrating Data Transformation and Loading Logic

The following code snippets illustrate data transformation and loading logic within a serverless function. These examples use Python and AWS Lambda and provide a basic framework for handling common data ingestion tasks. The specifics of the data transformation and loading logic will vary depending on the data source, the data lake format, and the desired data transformations.

Example 1: Reading CSV Data from S3 and Loading to Parquet

This example demonstrates reading a CSV file from an S3 bucket, transforming the data, and loading it into a Parquet file in another S3 bucket. This is a common pattern for ingesting structured data.

```python
import boto3
import pandas as pd
from urllib.parse import unquote_plus

s3 = boto3.client('s3')

def lambda_handler(event, context):
    # Get the bucket and file key from the event (S3 URL-encodes object keys)
    bucket_name = event['Records'][0]['s3']['bucket']['name']
    file_key = unquote_plus(event['Records'][0]['s3']['object']['key'])

    try:
        # Read the CSV file from S3
        obj = s3.get_object(Bucket=bucket_name, Key=file_key)
        df = pd.read_csv(obj['Body'])

        # Data Transformation (Example: convert a column to datetime)
        if 'timestamp' in df.columns:
            df['timestamp'] = pd.to_datetime(df['timestamp'])

        # Define the output file name and path
        output_bucket = 'your-data-lake-bucket'
        output_key = file_key.replace('.csv', '.parquet')

        # Write the transformed data to Parquet format in S3
        # (writing to an s3:// path requires the s3fs and pyarrow packages)
        df.to_parquet(f's3://{output_bucket}/{output_key}', index=False)

        print(f"Successfully processed {file_key} and loaded to s3://{output_bucket}/{output_key}")
        return {'statusCode': 200, 'body': f'Successfully processed {file_key}'}
    except Exception as e:
        print(f"Error processing {file_key}: {str(e)}")
        return {'statusCode': 500, 'body': f'Error processing {file_key}: {str(e)}'}
```

Example 2: Processing JSON Data from a Queue

This example illustrates processing JSON data received from a queue (e.g., Amazon SQS). It demonstrates parsing the JSON data and writing it to a text file in S3. This pattern is suitable for handling streaming or event-driven data.

```python
import json
import boto3

s3 = boto3.client('s3')

def lambda_handler(event, context):
    for record in event['Records']:
        try:
            # Parse the JSON message from the queue
            message_body = json.loads(record['body'])

            # Data Transformation (Example: extract a specific field)
            data_to_write = f"The value is: {message_body.get('value', 'N/A')}\n"

            # Define the output file name and path
            output_bucket = 'your-data-lake-bucket'
            output_key = f"processed_data/{record['messageId']}.txt"

            # Write the transformed data to S3
            s3.put_object(Bucket=output_bucket, Key=output_key, Body=data_to_write.encode('utf-8'))

            print(f"Successfully processed message {record['messageId']} and loaded to s3://{output_bucket}/{output_key}")
        except Exception as e:
            print(f"Error processing message {record['messageId']}: {str(e)}")
```

Explanation of the code snippets:

- The code utilizes the `boto3` library, which is the AWS SDK for Python, to interact with AWS services such as S3.

- The `lambda_handler` function is the entry point for the serverless function. It receives an event object, which contains information about the trigger that invoked the function (e.g., an S3 object creation event or a queue message).

- The code extracts the necessary information from the event object, such as the bucket name and file key in the first example or the message body in the second.

- The code reads the data from the data source (an object in S3 in the first example, SQS messages delivered in the event in the second).

- The code performs data transformation operations, such as converting data types or extracting specific fields.

- The code loads the transformed data into the data lake storage (S3 in both examples), as Parquet in the first example and as plain text in the second.

- The code includes error handling to catch and log any exceptions that may occur during processing.
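One caveat about catching and logging every exception in a queue-triggered function: the invocation then counts as successful, so SQS deletes the failed messages along with the good ones. A possible refinement, sketched below, is to report the failed message IDs back to Lambda using its partial batch response format. This only takes effect when `ReportBatchItemFailures` is enabled on the SQS event source mapping. The `process_message` helper is hypothetical and stands in for the parse, transform, and write steps.

```python
import json


def process_message(body: str) -> str:
    """Hypothetical processing step: parse the JSON body and format one field."""
    payload = json.loads(body)
    return f"The value is: {payload['value']}\n"


def lambda_handler(event, context):
    failures = []
    for record in event["Records"]:
        try:
            process_message(record["body"])
        except Exception as exc:
            print(f"Error processing message {record['messageId']}: {exc}")
            # Only the listed messages become visible on the queue again for retry.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


sample_event = {"Records": [
    {"messageId": "1", "body": '{"value": 42}'},
    {"messageId": "2", "body": "not json"},
]}
print(lambda_handler(sample_event, None))
```

Combined with a dead-letter queue on the source queue, this keeps repeatedly failing messages from being retried forever while still preserving them for inspection.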

Data Transformation and Enrichment with Serverless

Data transformation and enrichment are critical steps in the data ingestion pipeline, ensuring that data is clean, consistent, and ready for analysis. Serverless architectures provide a flexible and scalable platform for performing these operations, allowing for efficient processing of large datasets without the overhead of managing infrastructure. This section explores common transformation techniques, data enrichment strategies, and provides a practical example of transforming data from JSON to Parquet using serverless functions.

Data Transformation Techniques in Serverless Pipelines

Data transformation encompasses a range of operations designed to clean, structure, and prepare data for downstream processing. Serverless functions excel in these tasks due to their ability to execute code on demand, scaling automatically to handle varying workloads. Common techniques include:

- Data Cleaning: This involves correcting errors, handling missing values, and removing inconsistencies within the data. This might include standardizing date formats, correcting typos in text fields, or imputing missing numerical values using statistical methods such as mean or median imputation. For example, a serverless function could be triggered whenever a new CSV file arrives in a data lake, and it can identify and correct invalid phone numbers using regular expressions and data validation libraries.

- Filtering: Filtering involves selecting a subset of data based on specific criteria. This is crucial for removing irrelevant or unwanted data, reducing storage costs, and improving query performance. For instance, a serverless function could filter out records with a `status` field set to “inactive” or “deleted” before storing them in the data lake.

- Aggregation: Aggregation involves summarizing data by grouping it based on one or more attributes. Common aggregation operations include calculating sums, averages, counts, and other statistical measures. For example, a serverless function could aggregate sales data by product category and month to generate monthly sales reports.

- Data Type Conversion: Data type conversion involves changing the data type of a field to ensure consistency and compatibility with downstream systems. This might involve converting strings to numbers, dates to timestamps, or boolean values to integers. For example, a serverless function could convert a string representation of a date (“YYYY-MM-DD”) to a timestamp format suitable for analysis.

- Data Validation: Data validation is the process of ensuring that data conforms to predefined rules and constraints. This includes checking for data type correctness, range checks, and format validation. For example, a serverless function could validate that a numerical field falls within a specific range (e.g., a score between 0 and 100) or that an email address conforms to a valid format.
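The techniques above can be combined in a single pass over the records. The following is a minimal sketch in plain Python, not a definitive implementation: the field names (`status`, `signup_date`, `score`, `email`) and the loose email pattern are assumptions chosen for illustration.

```python
import re
from datetime import datetime, timezone

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose format check


def transform(records):
    """Clean, filter, convert, and validate a list of dict records."""
    output = []
    for rec in records:
        # Filtering: drop inactive or deleted records.
        if rec.get("status") in ("inactive", "deleted"):
            continue
        # Data type conversion: "YYYY-MM-DD" string and numeric string.
        try:
            day = datetime.strptime(rec["signup_date"], "%Y-%m-%d")
            score = float(rec["score"])
        except (KeyError, TypeError, ValueError):
            continue  # Cleaning: skip rows that cannot be parsed.
        # Cleaning: normalize whitespace and case before validating.
        email = str(rec.get("email", "")).strip().lower()
        # Validation: range check and email format check.
        if not 0 <= score <= 100 or not EMAIL_RE.match(email):
            continue
        output.append({
            "email": email,
            "score": score,
            "signup_ts": int(day.replace(tzinfo=timezone.utc).timestamp()),
        })
    return output


def average_score(records):
    """Aggregation: mean score across the cleaned records."""
    return sum(r["score"] for r in records) / len(records) if records else None
```

This sketch silently drops rejected rows; a real pipeline would more likely log them or route them to a quarantine location.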

Data Enrichment Strategies Using Serverless Functions

Data enrichment involves augmenting existing data with additional information from external sources. Serverless functions provide a convenient way to integrate with various external services and APIs to enhance the value of the ingested data.

- API Integration: Serverless functions can easily integrate with external APIs to retrieve additional data. For example, a function could use an API to look up customer information based on an ID, add geolocation data based on an IP address, or retrieve product details based on a product code.

- Lookup Tables: Enriching data with lookup tables is a common practice. These tables can be stored in databases, cloud storage, or in-memory caches. Serverless functions can query these tables to retrieve related information and add it to the ingested data. For example, a function could look up a country name based on a country code from a lookup table stored in a cloud database.

- Real-time Data Enrichment: For real-time data streams, serverless functions can be used to enrich data as it arrives. This might involve looking up information from a real-time data feed or performing calculations based on the incoming data. For example, a function could enrich streaming data with sentiment scores from a Natural Language Processing (NLP) service.

- Data Augmentation: This involves generating new features or attributes based on existing data. This could involve creating derived fields such as calculating the age from a birthdate or calculating the total order value from individual item prices and quantities.
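As a rough illustration of lookup-table enrichment and data augmentation, the sketch below adds a country name, an order total, and a customer age to an order record. The `COUNTRY_NAMES` table, the record layout, and the `enrich_order` helper are all hypothetical; a real function would typically load the lookup table from a database or object storage.

```python
from datetime import date

# Hypothetical lookup table; in practice this might come from a database or cache.
COUNTRY_NAMES = {"US": "United States", "DE": "Germany", "JP": "Japan"}


def enrich_order(order, today):
    """Return a copy of `order` with lookup and derived fields added."""
    enriched = dict(order)
    # Lookup table: country code -> country name.
    enriched["country_name"] = COUNTRY_NAMES.get(order.get("country_code"), "Unknown")
    # Data augmentation: total order value from item prices and quantities.
    enriched["order_total"] = sum(i["price"] * i["quantity"] for i in order.get("items", []))
    # Data augmentation: age in whole years, minus one if the birthday has not occurred yet.
    born = date.fromisoformat(order["birthdate"])
    had_birthday = (today.month, today.day) >= (born.month, born.day)
    enriched["customer_age"] = today.year - born.year - (0 if had_birthday else 1)
    return enriched
```

Passing `today` in explicitly, rather than calling `date.today()` inside the function, keeps the result deterministic and easy to test.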

JSON to Parquet Transformation Process

Transforming data from JSON to Parquet is a common use case in data lake ingestion. Parquet is a columnar storage format optimized for analytical queries, offering significant performance improvements compared to row-based formats like JSON. This process can be efficiently implemented using serverless functions.

- Trigger: A trigger, such as an event notification from an object storage service (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage) upon the arrival of a new JSON file, initiates the process.

- Serverless Function Execution: A serverless function (e.g., AWS Lambda, Azure Functions, Google Cloud Functions) is invoked. The function receives the location of the JSON file as input.

- Data Reading: The function reads the JSON data from the specified location in the object storage service. Libraries like `json` in Python or equivalent libraries in other languages are used for parsing the JSON data.

- Data Transformation (if needed): The function performs any necessary transformations, such as data cleaning, filtering, or data type conversions. This is an optional step but crucial for data quality.

- Schema Definition: The function defines the schema for the Parquet file. This defines the data types and structure of the columns. This schema can be derived from the JSON data or defined explicitly based on requirements.

- Parquet File Creation: The function writes the transformed data to a Parquet file. Libraries like `pyarrow` in Python or equivalent libraries in other languages are used for writing Parquet files.

- Data Storage: The function stores the generated Parquet file in the data lake (e.g., S3, Azure Data Lake Storage, Google Cloud Storage).

- Error Handling and Monitoring: Implement robust error handling and monitoring to track the execution of the function, log any errors, and provide insights into the performance of the transformation pipeline. Logging is vital for debugging and performance optimization.

The process can be visualized as a sequence of steps: "JSON File Arrives in Object Storage" -> "Serverless Function Invoked" -> "Reads JSON Data" -> "Data Transformation (if needed)" -> "Schema Definition" -> "Parquet File Creation" -> "Data Lake (Parquet File)". Error handling and monitoring run alongside every step of the process.

For example, a Python function using `pyarrow` could perform this transformation.

```python
import io
import json
from datetime import datetime, timezone

import boto3  # For interacting with S3, if applicable
import pyarrow as pa
import pyarrow.parquet as pq

s3 = boto3.client('s3')


def lambda_handler(event, context):
    """Transforms a JSON file to Parquet."""
    bucket_name = event['Records'][0]['s3']['bucket']['name']
    object_key = event['Records'][0]['s3']['object']['key']

    # 1. Read JSON data from S3
    try:
        response = s3.get_object(Bucket=bucket_name, Key=object_key)
        json_data = json.loads(response['Body'].read().decode('utf-8'))
    except Exception as e:
        print(f"Error reading JSON from S3: {e}")
        raise

    # 2. Define Schema (adapt to your JSON structure)
    # This example assumes a simple JSON structure (a list of flat objects). Modify as needed.
    schema = pa.schema([
        pa.field("id", pa.int64()),
        pa.field("name", pa.string()),
        pa.field("value", pa.float64()),
        pa.field("timestamp", pa.timestamp('ms')),
    ])

    # 3. Create a list of dictionaries for pyarrow
    data = []
    for item in json_data:
        try:
            # Assumes the timestamp is given in epoch seconds; stored as naive UTC
            epoch_seconds = float(item.get('timestamp', 0.0))
            timestamp = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc).replace(tzinfo=None)
            data.append({
                'id': int(item.get('id', 0)),
                'name': item.get('name', ''),
                'value': float(item.get('value', 0.0)),
                'timestamp': timestamp,
            })
        except (TypeError, ValueError) as e:
            print(f"Error converting data: {e} for item: {item}")
            continue  # Skip the problematic item

    # 4. Convert the data to a pyarrow table
    try:
        table = pa.Table.from_pylist(data, schema=schema)
    except Exception as e:
        print(f"Error creating pyarrow table: {e}")
        raise

    # 5. Write the table to Parquet (in memory), then upload it to S3
    output_key = object_key.replace(".json", ".parquet")  # create the output filename
    try:
        buffer = io.BytesIO()
        pq.write_table(table, buffer)
        s3.put_object(Bucket=bucket_name, Key=output_key, Body=buffer.getvalue())
        print(f"Successfully converted {object_key} to {output_key} in S3")
    except Exception as e:
        print(f"Error writing Parquet to S3: {e}")
        raise

    return {
        'statusCode': 200,
        'body': json.dumps(f'Successfully converted {object_key} to Parquet')
    }
```

This example provides a basic framework. Error handling, schema inference, and data type conversions must be tailored to the specific structure and data types of the JSON files being processed. The `boto3` library is used for interacting with AWS S3; for other cloud providers, the respective SDKs would be used. This function can be triggered by an S3 object creation event, automatically converting new JSON files to Parquet. Because the output is written to the same bucket, the trigger should be limited to keys ending in `.json` so that the new Parquet file does not invoke the function again.

This illustrates how serverless functions can handle data transformation tasks efficiently and at scale.

Security and Access Control in Serverless Data Ingestion

Securing serverless data ingestion pipelines is paramount to protect sensitive data and maintain the integrity of the data lake. Serverless architectures, while offering agility and scalability, introduce unique security considerations due to their distributed nature and reliance on managed services. Implementing robust security measures across all stages of the ingestion process is crucial to prevent unauthorized access, data breaches, and compliance violations.

Authentication, Authorization, and Encryption

Authentication, authorization, and encryption are fundamental pillars of a secure serverless data ingestion pipeline. Properly implemented security measures protect data from unauthorized access, both during transit and at rest, ensuring data confidentiality and integrity.

- Authentication: This process verifies the identity of users or services attempting to access the data ingestion pipeline. Authentication methods vary depending on the serverless platform and the specific services used.

  - Service Accounts: For serverless functions, authentication often involves using service accounts with specific permissions. These accounts are assigned to the functions, allowing them to securely access other services within the cloud environment. For example, an AWS Lambda function might assume an IAM role to access an S3 bucket.

  - API Keys and Tokens: API keys or access tokens may be used to authenticate external sources that are sending data to the ingestion pipeline. These keys must be managed securely and rotated regularly to mitigate the risk of compromise.

  - Federated Identity: Using federated identity providers (IdPs) such as Active Directory or Okta allows for centralized user authentication and management, simplifying access control and improving security.

- Authorization: Once authenticated, authorization determines the actions a user or service is permitted to perform. This involves defining and enforcing access control policies that restrict access to specific resources based on the principle of least privilege.

  - IAM Roles (AWS), Service Accounts (GCP), and Similar Mechanisms: Serverless platforms provide mechanisms to define fine-grained access control policies. These policies specify which actions are allowed or denied on particular resources, such as data lake storage buckets, databases, and message queues.

  - Role-Based Access Control (RBAC): Implementing RBAC helps manage access permissions efficiently by assigning users to roles with predefined sets of permissions. This simplifies administration and reduces the risk of misconfigurations.

  - Attribute-Based Access Control (ABAC): ABAC allows for more granular control by evaluating attributes associated with users, resources, and the environment. This approach is particularly useful in complex scenarios where access decisions depend on multiple factors.

- Encryption: Encryption protects data confidentiality, both while in transit and at rest.

  - Data in Transit: Encrypting data in transit ensures that data is protected as it moves between different components of the ingestion pipeline. This typically involves using Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL), for communication between services and with external sources.

  - Data at Rest: Encrypting data at rest protects data stored in the data lake from unauthorized access. This can be achieved using server-side encryption (SSE) with keys managed by the cloud provider or by using customer-managed keys (CMK) for greater control.

  - Key Management: Securely managing encryption keys is crucial. Cloud providers offer key management services (KMS) that allow you to generate, store, and manage encryption keys, providing a centralized and secure way to control encryption.

Securing Data at Rest and in Transit

Securing data at rest and in transit requires a layered approach that addresses various potential vulnerabilities. Implementing appropriate security measures at each stage of the data ingestion pipeline minimizes the risk of data breaches and ensures compliance with relevant regulations.

- Data at Rest Security: This focuses on protecting data stored in the data lake from unauthorized access.

  - Encryption: Implement server-side encryption (SSE) with either provider-managed keys or customer-managed keys (CMK). CMKs provide greater control over encryption keys and allow for more granular access control. For example, AWS KMS can be used to encrypt data stored in Amazon S3.

  - Access Control Lists (ACLs) and Bucket Policies: Configure appropriate ACLs and bucket policies to restrict access to data lake storage buckets. These policies should follow the principle of least privilege, granting only the necessary permissions to authorized users and services.

  - Data Lake Storage Encryption: Leverage the built-in encryption features of the data lake storage platform, such as encryption at rest in Amazon S3, Azure Data Lake Storage, or Google Cloud Storage.

  - Regular Security Audits: Perform regular security audits to identify and address any vulnerabilities in the data lake storage configuration. These audits should include reviewing access control policies, encryption settings, and key management practices.

- Data in Transit Security: This ensures that data is protected as it moves through the ingestion pipeline.

  - TLS Encryption: Use TLS for all communication between services, including communication between serverless functions, data sources, and data lake storage. This protects data from eavesdropping and man-in-the-middle attacks.

  - API Gateway Security: If using an API gateway to expose the ingestion pipeline, implement security features such as API key authentication, request validation, and rate limiting to protect against malicious attacks.

  - Network Security: Consider using virtual private clouds (VPCs) and network security groups (NSGs) to isolate the ingestion pipeline from the public internet and restrict network traffic to authorized sources.

  - Data Source Security: Ensure that data sources are also secured. This may involve implementing authentication and authorization mechanisms, encrypting data at rest, and using secure communication protocols.
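As one possible illustration of encryption at rest with a customer-managed key, the sketch below uploads an object to S3 and asks for SSE-KMS encryption. `ServerSideEncryption` and `SSEKMSKeyId` are parameters of the boto3 `put_object` call; the `put_encrypted_object` helper, the bucket name, and the key alias are hypothetical. The client is passed in so the helper can be tested with a stub. boto3 clients connect to AWS endpoints over HTTPS by default, which covers the in-transit side of this call.

```python
def put_encrypted_object(s3_client, bucket, key, body, kms_key_id):
    """Upload `body` with server-side encryption using a customer-managed KMS key."""
    return s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=body,
        ServerSideEncryption="aws:kms",  # ask S3 to encrypt the object with AWS KMS
        SSEKMSKeyId=kms_key_id,          # customer-managed key ID, ARN, or alias
    )


# Example usage (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   put_encrypted_object(boto3.client("s3"), "your-data-lake-bucket",
#                        "processed_data/example.txt", b"hello", "alias/your-key")
```

The function's IAM role would also need permission to use the KMS key, which is an access control concern rather than something the upload call can grant itself.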

Configuring Access Control Policies

Configuring access control policies for serverless functions and data lake storage is critical for controlling who can access and modify data. These policies define the permissions granted to each service and user, ensuring that the principle of least privilege is followed.

- Serverless Function Permissions: Define the permissions required by each serverless function to perform its tasks.

  - IAM Roles: Assign IAM roles to serverless functions that define the actions the function is allowed to perform. These roles should be scoped to the specific resources and actions required by the function. For example, a Lambda function that reads data from a queue and writes it to an S3 bucket would need permissions to read from the queue and write to the bucket.

  - Least Privilege: Grant only the necessary permissions to each function. Avoid granting broad permissions that could allow a compromised function to access sensitive data or perform unauthorized actions.

  - Policy Updates: Regularly review and update IAM roles to ensure they reflect the current needs of the functions. Remove any unnecessary permissions to reduce the attack surface.

- Data Lake Storage Access Control: Configure access control policies for the data lake storage to control who can access the data.

  - Bucket Policies and ACLs: Use bucket policies and ACLs to control access to the data lake storage buckets. Bucket policies are more flexible and can be used to grant permissions to multiple users and services. ACLs are used for more granular control over individual objects.

  - IAM Policies: Define IAM policies that grant users and services access to the data lake storage. These policies should be scoped to specific buckets and objects, following the principle of least privilege.

  - Cross-Account Access: If accessing data from multiple accounts, configure cross-account access using IAM roles. This allows users and services in one account to assume a role in another account, granting them access to the data lake storage.

  - Regular Policy Audits: Regularly audit access control policies to ensure they are correctly configured and that no unauthorized access is granted. This includes reviewing IAM roles, bucket policies, and ACLs.
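To make the least-privilege idea concrete, here is a sketch of an IAM policy document for the queue-to-S3 function described above, built as a Python dictionary. The action names are standard SQS and S3 IAM actions; the `build_ingest_policy` helper, the ARN, the bucket name, and the prefix are placeholders, and a real function may need further permissions (for example, for logging or KMS).

```python
import json


def build_ingest_policy(queue_arn, bucket_name, prefix):
    """Return an IAM policy document for a queue-to-S3 ingestion function."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadFromQueue",
                "Effect": "Allow",
                "Action": [
                    "sqs:ReceiveMessage",
                    "sqs:DeleteMessage",
                    "sqs:GetQueueAttributes",
                ],
                "Resource": queue_arn,  # one specific queue, not "*"
            },
            {
                "Sid": "WriteToLakePrefix",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # Only objects under the given prefix, not the whole bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/{prefix}*",
            },
        ],
    }


policy = build_ingest_policy(
    "arn:aws:sqs:us-east-1:123456789012:ingest-queue",  # placeholder ARN
    "your-data-lake-bucket",
    "processed_data/",
)
print(json.dumps(policy, indent=2))
```

Scoping each statement to a single queue and a single key prefix limits what a compromised function could read or overwrite.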

Monitoring and Logging for Serverless Data Ingestion

Effective monitoring and logging are critical components of any serverless data ingestion pipeline. They provide insights into the health, performance, and behavior of the pipeline, enabling proactive identification and resolution of issues. This proactive approach ensures data integrity, reduces downtime, and facilitates continuous improvement. Without robust monitoring and logging, it becomes exceedingly difficult to diagnose problems, optimize performance, and maintain the reliability of the data ingestion process.

Importance of Monitoring and Logging

Monitoring and logging are fundamental to the operational success of serverless data ingestion pipelines. They offer real-time visibility into pipeline operations, allowing for rapid detection of anomalies and potential failures. This visibility is crucial for maintaining data quality, meeting service-level agreements (SLAs), and minimizing the impact of errors.

- Real-time Visibility: Monitoring dashboards provide a real-time view of pipeline performance, allowing operators to track key metrics such as data ingestion rates, error rates, and latency.

- Proactive Issue Detection: Logging captures detailed information about pipeline events, including function invocations, data transformations, and error messages. This information enables early detection of problems before they impact data delivery.

- Performance Optimization: By analyzing performance metrics, operators can identify bottlenecks and areas for optimization, such as scaling function resources or optimizing data transformation logic.

- Compliance and Auditing: Logs provide an audit trail of all pipeline activities, which is essential for compliance with regulatory requirements and for troubleshooting data quality issues.

- Cost Management: Monitoring helps track resource consumption and identify opportunities to optimize costs by scaling resources appropriately and eliminating unnecessary function invocations.

Best Practices for Implementing Monitoring and Alerting

Implementing effective monitoring and alerting requires a strategic approach to ensure that the right metrics are tracked and that appropriate alerts are configured. Leveraging cloud provider-specific tools is often the most efficient and cost-effective approach.

- Define Key Performance Indicators (KPIs): Identify the critical metrics that reflect the health and performance of the pipeline. These KPIs will vary depending on the specific pipeline, but typically include data ingestion rate, error rate, latency, and resource utilization.

- Utilize Cloud Provider Tools: Cloud providers such as AWS, Azure, and Google Cloud offer comprehensive monitoring and logging services that are designed to work seamlessly with serverless architectures. Examples include CloudWatch (AWS), Azure Monitor (Azure), and Google Cloud Monitoring (GCP).

- Implement Detailed Logging: Log events at different levels of granularity, including informational, warning, and error messages. Include relevant context in the logs, such as timestamps, function names, request IDs, and input/output data.

- Configure Alerts: Set up alerts based on thresholds for critical metrics. Alerts should be configured to notify relevant personnel when issues arise, enabling prompt investigation and resolution.

- Create Dashboards: Build dashboards to visualize key metrics and provide a consolidated view of pipeline health. Dashboards should be designed to facilitate rapid identification of issues and trends.

- Automate Monitoring and Alerting: Automate the configuration of monitoring and alerting infrastructure using infrastructure-as-code (IaC) tools to ensure consistency and repeatability.

- Regularly Review and Refine: Periodically review monitoring configurations and alert thresholds to ensure they remain relevant and effective. Adjust configurations as needed based on changing pipeline requirements and performance characteristics.
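One way to follow the detailed-logging practice is to emit each log entry as a single JSON line, so that log tooling can filter on individual fields. The sketch below uses only the standard library; the `log_event` helper and the field names are illustrative assumptions, not a required format.

```python
import json
import logging
import time

logger = logging.getLogger("ingestion")
logger.setLevel(logging.INFO)


def log_event(level, message, **context):
    """Emit one JSON log line carrying the supplied context fields."""
    entry = {
        "timestamp": time.time(),
        "level": logging.getLevelName(level),
        "message": message,
        **context,
    }
    line = json.dumps(entry, default=str)  # default=str keeps odd values loggable
    logger.log(level, line)
    return line


# Example: include identifiers that help correlate entries across a pipeline run.
log_event(logging.INFO, "file processed",
          function_name="json-to-parquet", request_id="req-123", records=250)
log_event(logging.ERROR, "conversion failed",
          function_name="json-to-parquet", request_id="req-124", error="bad timestamp")
```

Inside an AWS Lambda handler, the `context` argument exposes `aws_request_id` and `function_name`, which are natural values for these fields.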

Key Metrics to Monitor and Alerts to Trigger

Monitoring a serverless data ingestion pipeline involves tracking a variety of metrics and setting up alerts to proactively address potential issues. The table below lists key metrics to monitor and the alerts each should trigger, for a pipeline made up of a data source, API Gateway, AWS Lambda functions, Amazon S3, and AWS Glue, together with its monitoring and alerting components.

| Metric Category | Metric | Description | Alert Threshold | Alert Action |
|---|---|---|---|---|
| Data Ingestion Rate | Records Processed per Second | Number of records successfully processed by the pipeline per second. | Below a predefined minimum (e.g., 100 records/sec) or significantly lower than historical average. | Notify Operations Team; Investigate pipeline performance and potential bottlenecks. |
| Error Rate | Function Error Count | Number of errors encountered by the Lambda functions during data processing. | Exceeds a predefined percentage (e.g., 1% of invocations) or a specific number within a time window. | Notify Development Team; Investigate function code for errors and potential data issues. |
| Latency | End-to-End Latency | Time taken for data to flow through the entire pipeline, from source to destination. | Exceeds a predefined threshold (e.g., 5 seconds) for a sustained period. | Notify Operations Team; Investigate performance of each stage of the pipeline to identify bottlenecks. |
| Resource Utilization | Lambda Function Memory Utilization | Percentage of allocated memory used by Lambda functions. | Approaches the configured memory limit (e.g., 80% utilization). | Notify Operations Team; Consider increasing function memory allocation or optimizing function code. |
| Cost | AWS Lambda Cost | The total cost associated with running Lambda functions. | Exceeds a predefined budget threshold or significantly increases compared to previous periods. | Notify Finance Team; Investigate potential cost optimization strategies, such as reducing function execution time or optimizing data processing. |
| S3 Operations | S3 Upload Errors | Number of errors that occur during S3 object uploads. | Exceeds a predefined threshold (e.g., 10 errors) within a time window. | Notify Operations Team; Check S3 bucket permissions, connectivity, and overall health. |
| Glue Jobs | Glue Job Failure | Indicates the failure of a Glue ETL job. | Glue job fails or exceeds a specific duration threshold. | Notify Operations Team; Investigate Glue job logs for errors and data quality issues. |

This table illustrates how to configure alerts based on critical metrics, facilitating proactive identification and resolution of issues within the serverless data ingestion pipeline.
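As a sketch of how one row of the table might be turned into an actual alert, the helper below builds the keyword arguments for the CloudWatch `put_metric_alarm` call, targeting the built-in `Errors` metric in the `AWS/Lambda` namespace. The `lambda_error_alarm` helper, the threshold of 10 errors per 5 minutes, and the SNS topic ARN are illustrative assumptions.

```python
def lambda_error_alarm(function_name, sns_topic_arn, max_errors=10, period_seconds=300):
    """Build keyword arguments for CloudWatch `put_metric_alarm`."""
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",                  # total errors within each period
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": max_errors,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no invocations means no alarm
        "AlarmActions": [sns_topic_arn],     # e.g., a topic that notifies the team
    }


alarm = lambda_error_alarm(
    "json-to-parquet",
    "arn:aws:sns:us-east-1:123456789012:ops-alerts",  # placeholder ARN
)

# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("cloudwatch").put_metric_alarm(**alarm)
```

Building the arguments separately from the API call makes the thresholds easy to review and to keep under version control alongside other infrastructure definitions.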

Error Handling and Data Quality in Serverless Pipelines

Serverless data ingestion pipelines, while offering scalability and cost-effectiveness, necessitate robust error handling and data quality measures to ensure reliable data delivery to the data lake. The inherent distributed nature of serverless architectures introduces complexities in identifying and resolving failures. Simultaneously, maintaining data integrity throughout the ingestion process is crucial for deriving meaningful insights. This section delves into strategies for addressing these challenges, providing practical techniques for building resilient and high-quality serverless data pipelines.

Common Error Handling Strategies for Serverless Functions

Effective error handling is paramount in serverless architectures. Failures are inevitable in distributed systems, and a well-defined strategy is essential for mitigating their impact. The following strategies are frequently employed:

- Retries: Implementing retry mechanisms allows serverless functions to automatically reattempt failed operations. This is particularly useful for transient errors, such as temporary network glitches or service unavailability. Retry policies typically involve exponential backoff, where the time between retries increases with each attempt, preventing overwhelming downstream services.

- Dead-Letter Queues (DLQs): When an operation consistently fails after a defined number of retries, it’s crucial to move the failed message to a dead-letter queue. DLQs act as a holding area for messages that could not be processed successfully. This allows for manual inspection and resolution of the errors, preventing data loss and enabling debugging. The architecture also allows for the automated reprocessing of messages after the underlying issue is resolved.

- Error Notifications: Real-time error notifications are critical for proactive monitoring and incident response. Implementing alerts, often integrated with services like email, Slack, or PagerDuty, enables rapid identification of issues within the pipeline. These notifications should include details such as the function name, error message, and the triggering event, allowing for quick diagnosis and resolution.
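A dead-letter queue can be populated explicitly from function code, as in the hedged sketch below. It wraps the failed record together with the error text so that whoever inspects the DLQ has context. `send_message` with `QueueUrl` and `MessageBody` is the standard boto3 SQS call; the `send_to_dlq` helper, the payload layout, and the queue URL are hypothetical. SQS can also move messages to a DLQ automatically through a redrive policy, which may be preferable when no extra context is needed.

```python
import json


def send_to_dlq(sqs_client, dlq_url, record, error):
    """Forward a failed SQS record to a dead-letter queue with error context."""
    payload = {
        "original_message_id": record.get("messageId"),
        "original_body": record.get("body"),
        "error": str(error),
    }
    return sqs_client.send_message(QueueUrl=dlq_url, MessageBody=json.dumps(payload))


# Example usage inside a handler (requires boto3; the URL is a placeholder):
#   import boto3
#   sqs = boto3.client("sqs")
#   try:
#       process(record)
#   except Exception as exc:
#       send_to_dlq(sqs, "https://sqs.us-east-1.amazonaws.com/123456789012/ingest-dlq",
#                   record, exc)
```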

Techniques for Ensuring Data Quality During Ingestion

Data quality is a foundational aspect of any data lake. Ingestion pipelines should incorporate mechanisms to validate and ensure the accuracy and consistency of data entering the lake. These techniques include:

- Data Validation: Implementing data validation rules at various stages of the ingestion pipeline is crucial. This can involve checking data types, formats, and ranges against predefined schemas. Validation can occur within the serverless function itself or through the integration of dedicated validation services. Validation errors should be handled gracefully, potentially leading to the rejection of invalid data or its placement in a quarantine area for further investigation.

- Schema Enforcement: Enforcing a schema ensures data consistency and facilitates efficient querying and analysis. Schema enforcement can be implemented using various methods, such as schema validation libraries, schema registries, or data catalog services. The schema defines the expected structure and data types of the incoming data, ensuring that the data conforms to the defined standards.

- Data Transformation and Cleansing: Data transformation and cleansing are essential steps to address data quality issues. This includes operations like removing duplicates, standardizing data formats, and correcting inconsistencies. Serverless functions can be utilized to perform these transformations before the data is stored in the data lake.
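The validation and quarantine ideas above might be sketched as follows. The `SCHEMA` dictionary, the field names, and the `validate` and `partition` helpers are hypothetical; dedicated schema libraries or a schema registry would usually replace this hand-rolled check in a larger pipeline.

```python
# Hypothetical schema: field name -> (expected type, required?)
SCHEMA = {"id": (int, True), "name": (str, True), "value": (float, False)}


def validate(record, schema=SCHEMA):
    """Return a list of human-readable schema violations (empty if valid)."""
    problems = []
    for field, (expected_type, required) in schema.items():
        if field not in record or record[field] is None:
            if required:
                problems.append(f"missing required field '{field}'")
        elif not isinstance(record[field], expected_type):
            problems.append(f"field '{field}' should be {expected_type.__name__}")
    return problems


def partition(records):
    """Split records into (accepted, quarantined) lists."""
    accepted, quarantined = [], []
    for rec in records:
        problems = validate(rec)
        if problems:
            # Keep the original record and the reasons together for later review.
            quarantined.append({"record": rec, "problems": problems})
        else:
            accepted.append(rec)
    return accepted, quarantined
```

Accepted records continue to the data lake, while quarantined ones can be written to a separate prefix or queue for investigation instead of being discarded.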

Implementation of a Retry Mechanism in a Serverless Function

A practical example of a retry mechanism can be implemented using AWS Lambda and the AWS SDK. The following illustrates a simplified approach:

First, consider a Lambda function designed to process data from an SQS queue. The function attempts to write data to an Amazon S3 bucket. A basic implementation might look like this:

```python
import json
import time

import boto3

s3 = boto3.client('s3')
sqs = boto3.client('sqs')


def lambda_handler(event, context):
    for record in event['Records']:
        try:
            # Parse the message from SQS
            message = json.loads(record['body'])
            bucket_name = 'your-bucket-name'
            file_key = f"data/{message['id']}.json"

            # Write the data to S3
            s3.put_object(Bucket=bucket_name, Key=file_key, Body=json.dumps(message))
            print(f"Successfully wrote {file_key} to {bucket_name}")
        except Exception as e:
            print(f"Error processing message: {e}")
            # Implement retry logic here
            return {
                'statusCode': 500,
                'body': json.dumps({'message': 'Error processing message'})
            }

    return {
        'statusCode': 200,
        'body': json.dumps({'message': 'Successfully processed messages'})
    }
```

To add retries, you can rely on the platform's built-in retry behavior or implement custom retry logic. For asynchronous invocations, the built-in mechanism is configured through the “Retry attempts” setting in the Lambda configuration. For SQS-triggered functions, a batch is retried when the function raises an exception: its messages become visible on the queue again after the visibility timeout and are redelivered. Returning a 500 status, as in the code above, does not by itself mark a message as failed.

Here’s how to implement a basic custom retry mechanism with exponential backoff using the `time` module:

```python
import json
import time

import boto3

s3 = boto3.client('s3')
sqs = boto3.client('sqs')

MAX_RETRIES = 3
BACKOFF_FACTOR = 2


def lambda_handler(event, context):
    for record in event['Records']:
        message = json.loads(record['body'])
        bucket_name = 'your-bucket-name'
        file_key = f"data/{message['id']}.json"

        retries = 0
        while retries <= MAX_RETRIES:
            try:
                s3.put_object(Bucket=bucket_name, Key=file_key, Body=json.dumps(message))
                print(f"Successfully wrote {file_key} to {bucket_name}")
                break  # Exit the loop if successful
            except Exception as e:
                print(f"Attempt {retries + 1} failed: {e}")
                retries += 1
                if retries <= MAX_RETRIES:
                    # Exponential backoff: 1s, 2s, 4s, ...
                    sleep_time = BACKOFF_FACTOR ** (retries - 1)
                    print(f"Retrying in {sleep_time} seconds...")
                    time.sleep(sleep_time)
                else:
                    print(f"Max retries reached for {file_key}. Sending to DLQ.")
                    # Implement DLQ sending logic here (e.g., sending to another SQS queue)
                    # Or, you could raise the exception again to propagate the failure.
                    raise

    return {
        'statusCode': 200,
        'body': json.dumps({'message': 'Successfully processed messages'})
    }
```

In this enhanced version, the function attempts to write to S3. If an error occurs, the code retries the operation up to `MAX_RETRIES` times. The `time.sleep()` call pauses between attempts, and because the delay doubles each time, the retries follow an exponential backoff. If the operation still fails after the maximum number of retries, the code should implement a dead-letter queue (DLQ) mechanism.

The example includes a placeholder comment indicating where DLQ logic would be implemented.

The `BACKOFF_FACTOR` controls the rate at which the backoff increases. For example, with a `BACKOFF_FACTOR` of 2, the first retry will occur after 1 second, the second after 2 seconds, the third after 4 seconds, and so on. This exponential backoff prevents overwhelming the downstream service and provides time for transient issues to resolve.
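The delay schedule can be checked in isolation. The short sketch below computes the wait before each retry as `base * factor ** n`. The `backoff_delays` helper and its optional `jitter` flag are additions for illustration and are not part of the example above; randomizing each delay is a common refinement that keeps many concurrent functions from retrying at the same instant.

```python
import random


def backoff_delays(max_retries, factor=2, base=1.0, jitter=False, rng=random):
    """Return the delay in seconds before each retry: base * factor**n."""
    delays = []
    for attempt in range(max_retries):
        delay = base * factor ** attempt
        if jitter:
            # "Full jitter": pick a random delay between 0 and the computed cap.
            delay = rng.uniform(0, delay)
        delays.append(delay)
    return delays


print(backoff_delays(3))  # [1.0, 2.0, 4.0]
```

Since `time.sleep()` counts toward a function's billed execution time and timeout, the total of these delays should stay well below the configured function timeout.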

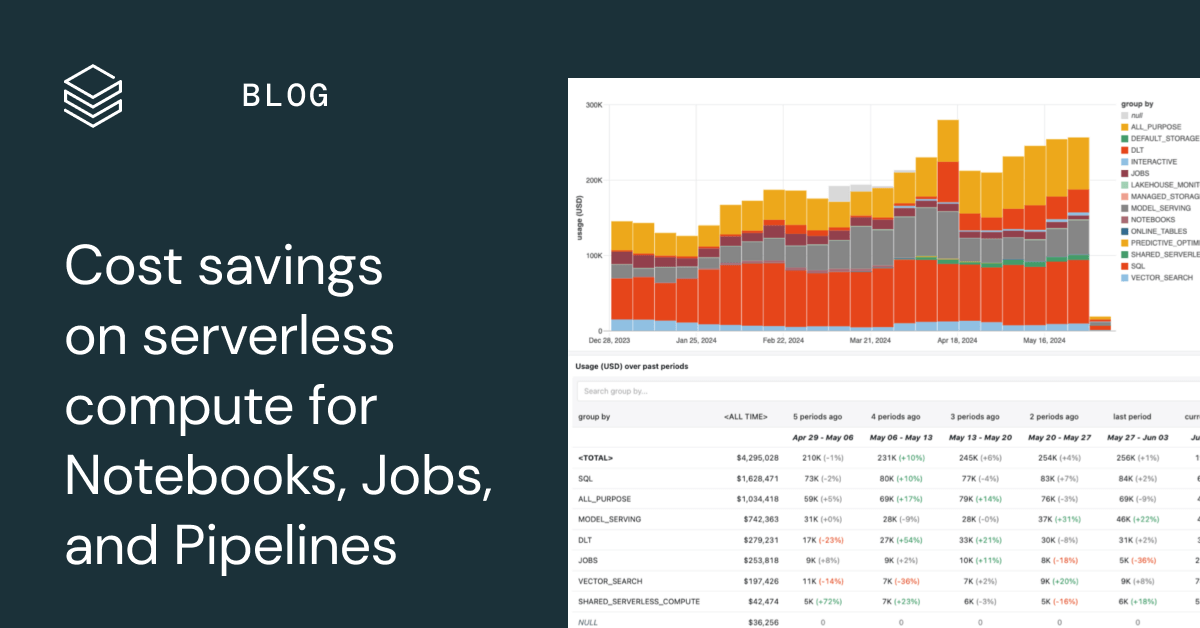

Cost Optimization Strategies for Serverless Data Ingestion

Optimizing the cost of serverless data ingestion is crucial for maximizing the return on investment and ensuring the long-term viability of data lake projects. Serverless architectures, while offering scalability and flexibility, can quickly become expensive if not managed effectively. This section explores the key factors influencing costs, provides practical optimization techniques, and details how to monitor and estimate costs using cloud provider tools.

Factors Impacting the Cost of Serverless Data Ingestion

Several factors contribute to the overall cost of a serverless data ingestion pipeline. Understanding these drivers is the first step toward effective cost management.

- Function Execution Time: The duration for which serverless functions run directly impacts cost. Longer execution times translate to higher charges, especially for functions processing large datasets or complex transformations.

- Memory Allocation: The amount of memory allocated to a serverless function influences both its performance and cost. Allocating more memory can improve performance but also increases the cost per execution. Conversely, under-allocating memory can lead to slower execution and potentially higher costs due to increased execution time.

- Number of Function Invocations: The frequency with which functions are triggered directly affects the cost. High-volume data ingestion pipelines will naturally incur more invocation charges.

- Data Storage Costs: Data storage within the data lake is a significant cost component. The type of storage (e.g., object storage, data warehouses) and the amount of data stored influence the overall expenditure.

- Network Transfer Costs: Data transfer between different cloud services, regions, or to external sources incurs network charges. Large datasets and frequent data transfers can result in substantial network costs.

- Service-Specific Pricing: Each cloud provider offers different pricing models for serverless services. For example, pricing can vary for compute, storage, and data transfer, with pay-per-use models often employed.

- Data Transformation Complexity: Complex data transformations involving computationally intensive operations increase function execution time and memory consumption, leading to higher costs.
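The interaction between invocation count, execution time, and memory allocation can be made concrete with a rough estimate. The sketch below assumes a pay-per-use model billed per request and per GB-second. The function name is hypothetical, and the default prices are illustrative placeholders that should be replaced with the provider's current published rates. Free tiers, storage, and network transfer are ignored.

```python
def estimate_function_monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float = 0.20,   # assumed rate, USD
    price_per_gb_second: float = 0.0000166667,  # assumed rate, USD
) -> float:
    """Estimate monthly compute cost for one function, in USD."""
    # Billed compute = invocations x duration (s) x memory (GB)
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return round(request_cost + compute_cost, 2)


# Compare two configurations of the same workload (10 million invocations).
small = estimate_function_monthly_cost(10_000_000, avg_duration_ms=200, memory_mb=512)
large = estimate_function_monthly_cost(10_000_000, avg_duration_ms=120, memory_mb=1024)
print(f"512 MB at 200 ms: ${small}, 1024 MB at 120 ms: ${large}")
```

Under these assumed rates, doubling memory only pays off if it cuts execution time by more than half, which is why measuring duration at each memory setting matters.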

Techniques for Optimizing Costs

Implementing effective cost optimization strategies requires a multi-faceted approach, encompassing function configuration, storage management, and service selection.

- Right-Sizing Functions: Accurately estimating the memory and compute resources each function needs is critical. Over-provisioning leads to unnecessary cost, while under-provisioning hurts performance. Monitoring execution times and memory utilization allows for fine-tuning the allocation. For example, if a function's peak usage is consistently around 256 MB but far more is allocated, consider lowering the allocation, then confirm that execution time does not rise, since some platforms (AWS Lambda, for example) scale CPU with memory.

- Optimizing Data Storage: Choosing the appropriate storage class for data based on its access frequency can significantly reduce costs. For example, frequently accessed data can be stored in a standard storage class, while less frequently accessed data can be moved to a cheaper storage class like "infrequent access" or "cold storage." Data lifecycle policies can automate the migration of data to different storage tiers based on its age and access patterns.

- Batch Processing: Grouping multiple data events into a single batch before processing can reduce the number of function invocations and associated costs. For example, instead of triggering a function for each individual log event, aggregate the events over a specific time window and process them as a batch.

- Efficient Code: Writing optimized code that minimizes execution time and memory consumption is crucial. Using efficient libraries, minimizing data processing steps, and avoiding unnecessary computations can improve function performance and reduce costs.

- Using Cost-Effective Services: Comparing the pricing models of different serverless services from various cloud providers allows for selecting the most cost-effective options. For instance, choosing a service that offers a free tier or lower per-request charges can reduce costs. Evaluate services that offer more cost-effective pricing for data transformation and analysis tasks.

- Data Compression: Compressing data before storing it in the data lake reduces storage costs and potentially network transfer costs. Common compression algorithms, such as GZIP or Snappy, can significantly reduce data size.

- Event Source Optimization: Optimizing the event sources that trigger serverless functions can minimize costs. For example, adjusting the batch size and polling frequency of event sources can reduce the number of function invocations.

- Automated Scaling: Utilizing auto-scaling features provided by cloud providers ensures that resources are automatically scaled up or down based on demand. This prevents over-provisioning and ensures resources are available when needed.
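Two of the techniques above, batch processing and data compression, combine naturally: events are grouped into fixed-size batches and each batch is stored as one gzip-compressed, newline-delimited JSON object. The following is a minimal sketch using only the standard library. The helper names and the batch size are assumptions for illustration.

```python
import gzip
import json
from typing import Dict, Iterable, Iterator, List


def chunked(events: Iterable[Dict], batch_size: int) -> Iterator[List[Dict]]:
    """Yield successive batches of at most batch_size events."""
    batch: List[Dict] = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # Emit the final partial batch
        yield batch


def to_gzipped_ndjson(batch: List[Dict]) -> bytes:
    """Serialize a batch as newline-delimited JSON and gzip it."""
    ndjson = "\n".join(json.dumps(e, separators=(",", ":")) for e in batch)
    return gzip.compress(ndjson.encode("utf-8"))


def from_gzipped_ndjson(payload: bytes) -> List[Dict]:
    """Inverse of to_gzipped_ndjson, useful for verifying a round trip."""
    text = gzip.decompress(payload).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]
```

With a batch size of 250, a thousand log events become four objects instead of a thousand, and each object can be passed as the `Body` of a single storage write.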

Estimating and Monitoring Costs with Cloud Provider Tools

Cloud providers offer a range of tools and services to estimate, monitor, and manage the costs of serverless data ingestion pipelines.

- Cost Estimation Tools: Most cloud providers offer cost estimation tools that allow users to input expected workloads, function configurations, and data storage requirements to estimate the potential costs of a serverless data ingestion pipeline. These tools provide insights into the cost implications of different design choices.

- Cost Monitoring Dashboards: Cloud providers offer cost monitoring dashboards that display recent and historical cost data. These dashboards enable users to track spending, identify cost trends, and set up budget alerts to prevent unexpected expenses. They typically visualize cost breakdowns by service, region, and resource.

- Cost Allocation Tags: Using cost allocation tags allows for categorizing and tracking costs associated with specific resources, projects, or teams. This facilitates a more granular understanding of spending and enables better cost management. For example, tagging all resources related to a specific data ingestion pipeline allows for tracking the cost of that pipeline independently.

- Cost Anomaly Detection: Cloud providers often offer features for detecting cost anomalies. These features automatically identify unexpected spikes in spending, allowing users to investigate and address potential issues promptly.

- Budget Alerts: Setting up budget alerts allows users to receive notifications when spending exceeds predefined thresholds. This helps to proactively manage costs and prevent overspending.

- Regular Cost Reviews: Regularly reviewing cost reports and dashboards is essential for identifying areas for optimization and ensuring that the data ingestion pipeline remains cost-effective. Analyzing cost trends and comparing them against expected workloads can help to identify potential cost-saving opportunities.
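The idea behind cost allocation tags and budget alerts can be illustrated without any provider API. The sketch below assumes cost records have already been exported as `(service, pipeline_tag, cost_usd)` tuples. That record shape, the function names, and the budget figures are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

CostRecord = Tuple[str, str, float]  # (service, pipeline tag, cost in USD)


def cost_by_tag(records: Iterable[CostRecord]) -> Dict[str, float]:
    """Sum cost per pipeline tag across all services."""
    totals: Dict[str, float] = defaultdict(float)
    for _service, pipeline_tag, cost_usd in records:
        totals[pipeline_tag] += cost_usd
    return dict(totals)


def over_budget(totals: Dict[str, float], budgets: Dict[str, float]) -> List[str]:
    """Return the tags whose spend exceeds their budget (untracked tags never alert)."""
    return sorted(
        tag for tag, spent in totals.items()
        if spent > budgets.get(tag, float("inf"))
    )


records = [
    ("functions", "clickstream", 12.5),
    ("storage", "clickstream", 3.25),
    ("functions", "iot", 40.0),
]
totals = cost_by_tag(records)
print(over_budget(totals, {"clickstream": 20.0, "iot": 30.0}))
```

In practice the provider's own budgeting service performs this comparison and sends the notification. The value of consistent tagging is that it makes a per-pipeline total like this possible at all.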

Real-World Use Cases and Examples

Serverless data ingestion has gained significant traction across various industries, enabling organizations to efficiently and cost-effectively collect, process, and store large volumes of data. These use cases highlight the versatility and adaptability of serverless architectures, showcasing their ability to address diverse data ingestion challenges. The following sections will delve into specific examples and a detailed case study to illustrate the practical applications of serverless data ingestion.

E-commerce Analytics and Personalization

E-commerce businesses generate vast amounts of data from various sources, including website clicks, purchase transactions, customer interactions, and marketing campaigns. Serverless data ingestion provides a scalable and cost-effective solution for processing this data in real time.

- Data Sources: Website clickstream data, transaction logs from point-of-sale systems, customer relationship management (CRM) data, and marketing campaign performance data.

- Serverless Services:

  - Amazon Kinesis Data Streams/Azure Event Hubs: For real-time ingestion of clickstream data.

  - AWS Lambda/Azure Functions: To process and transform incoming data, such as enriching it with customer information.

  - Amazon S3/Azure Blob Storage: For storing raw and processed data.

  - Amazon Athena/Azure Synapse Analytics: For querying the data.

- Benefits: Real-time insights into customer behavior, personalized product recommendations, fraud detection, and improved marketing campaign performance.
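The processing step in this pipeline can be sketched as a function that decodes clickstream records and enriches them with customer information. The example assumes the AWS variant, where a Kinesis-triggered Lambda receives each record's payload base64-encoded under `record["kinesis"]["data"]`. The `CUSTOMER_SEGMENTS` lookup, the field names, and `enrich_clicks` are hypothetical stand-ins for a real reference store.

```python
import base64
import json
from typing import Any, Dict, List

# Hypothetical in-memory lookup; a real pipeline might query a database or cache.
CUSTOMER_SEGMENTS = {"c-100": "loyalty", "c-200": "new"}


def decode_kinesis_record(record: Dict[str, Any]) -> Dict[str, Any]:
    """Decode the base64-encoded JSON payload of one Kinesis record."""
    payload = base64.b64decode(record["kinesis"]["data"])
    return json.loads(payload)


def enrich_clicks(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Attach a customer segment to every click event in the batch."""
    enriched = []
    for record in event["Records"]:
        click = decode_kinesis_record(record)
        click["segment"] = CUSTOMER_SEGMENTS.get(click.get("customer_id"), "unknown")
        enriched.append(click)
    return enriched


def lambda_handler(event, context):
    enriched = enrich_clicks(event)
    # A real function would write `enriched` to object storage here.
    return {"processed": len(enriched)}
```

Keeping the enrichment in a plain function, separate from the handler, makes it testable with a hand-built event and no cloud credentials.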

IoT Data Ingestion and Analysis

The Internet of Things (IoT) generates massive amounts of data from connected devices, requiring scalable and reliable ingestion pipelines. Serverless architectures are ideally suited for handling the fluctuating data volumes and diverse data formats common in IoT deployments.

- Data Sources: Sensor data from devices such as temperature sensors, pressure sensors, and location trackers; device logs; and telemetry data.

- Serverless Services:

  - AWS IoT Core/Azure IoT Hub: For secure device connectivity and data ingestion.

  - AWS Lambda/Azure Functions: To process and filter device data.

  - Amazon Kinesis Data Firehose/Azure Data Explorer: For batch and real-time data ingestion into a data lake.

  - Amazon S3/Azure Data Lake Storage: For storing the data.

  - Amazon QuickSight/Microsoft Power BI: For visualizing the data.

- Benefits: Real-time monitoring of device health, predictive maintenance, anomaly detection, and improved operational efficiency.
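Filtering device data, the role assigned to the function service above, often amounts to discarding readings that are malformed or physically implausible before they reach the data lake. This is a minimal sketch under assumed conventions: the `sensor_type` and `value` field names and the ranges in `VALID_RANGES` are illustrative, not taken from any device specification.

```python
from typing import Any, Dict, Iterable, List

# Hypothetical plausible ranges per sensor type (inclusive bounds).
VALID_RANGES = {
    "temperature_c": (-40.0, 125.0),
    "pressure_kpa": (0.0, 1000.0),
}


def filter_readings(readings: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Keep readings from known sensor types whose value is within range."""
    kept = []
    for reading in readings:
        bounds = VALID_RANGES.get(reading.get("sensor_type"))
        value = reading.get("value")
        if bounds is None or not isinstance(value, (int, float)):
            continue  # Unknown sensor type or non-numeric value
        low, high = bounds
        if low <= value <= high:
            kept.append(reading)
    return kept
```

Dropping bad readings early reduces both storage volume and the work done by downstream analytics, although some pipelines prefer to route rejected readings to a separate location for diagnosis.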

Financial Transaction Processing

Financial institutions handle high volumes of transactions that require secure and reliable data ingestion pipelines. Serverless solutions offer the scalability and security features necessary to meet these demands.

- Data Sources: Transaction records from banking systems, payment gateways, and stock exchanges.

- Serverless Services:

  - Amazon SQS/Azure Service Bus: For queuing transaction data.

  - AWS Lambda/Azure Functions: For processing and validating transactions.

  - Amazon DynamoDB/Azure Cosmos DB: For storing transaction details.

  - AWS Glue/Azure Data Factory: For data transformation and ETL processes.

- Benefits: Fraud detection, regulatory compliance, real-time transaction monitoring, and improved customer service.
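For the queue-to-function step, one way to validate transactions without failing an entire batch is SQS partial batch responses: the function returns the IDs of the messages that failed, and only those are redelivered. The sketch below assumes the AWS variant with `ReportBatchItemFailures` enabled on the event source mapping. The required fields and validation rules are a hypothetical schema.

```python
import json
from typing import Any, Dict, List

REQUIRED_FIELDS = ("transaction_id", "amount", "currency")  # hypothetical schema


def validate_transaction(txn: Any) -> None:
    """Raise ValueError if the transaction is structurally invalid."""
    if not isinstance(txn, dict):
        raise ValueError("transaction must be a JSON object")
    missing = [field for field in REQUIRED_FIELDS if field not in txn]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    if not isinstance(txn["amount"], (int, float)) or txn["amount"] <= 0:
        raise ValueError("amount must be a positive number")


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, List[Dict[str, str]]]:
    failures = []
    for record in event["Records"]:
        try:
            validate_transaction(json.loads(record["body"]))
            # A real function would persist the validated transaction here.
        except ValueError as exc:  # json.JSONDecodeError is a ValueError subclass
            print(f"Rejected message {record['messageId']}: {exc}")
            failures.append({"itemIdentifier": record["messageId"]})
    # Only the listed messages return to the queue; the rest are deleted.
    return {"batchItemFailures": failures}
```

Messages reported this way are retried and, once the queue's maximum receive count is reached, move to its dead-letter queue if one is configured, where they can be reviewed for compliance purposes.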

Case Study: Serverless Data Ingestion for a Healthcare Provider

A large healthcare provider sought to modernize its data infrastructure to improve patient care, streamline operations, and gain deeper insights into healthcare trends. They chose a serverless data ingestion architecture to address these goals.

- Challenge: The healthcare provider had data scattered across various systems, including electronic health records (EHRs), medical devices, claims processing systems, and research databases. They needed a centralized data lake to consolidate this information for analytics.

- Solution: The provider implemented a serverless data ingestion pipeline using the following architecture.

Serverless Data Ingestion Pipeline Architecture for a Healthcare Provider

The data ingestion pipeline is designed to ingest data from various sources, transform it, and load it into a data lake for analysis.

Data Flow Description:

The pipeline starts with data sources such as EHR systems, medical devices, and claims processing systems. Data is ingested into the cloud environment using various methods.

Data Ingestion Stage:

- EHR Systems: Data from EHR systems is extracted using scheduled batch jobs or real-time streaming through APIs. Data is then sent to Amazon S3 or Azure Blob Storage.

- Medical Devices: Data from medical devices is streamed in real time using AWS IoT Core or Azure IoT Hub. The data is then sent to Amazon Kinesis Data Streams or Azure Event Hubs.

- Claims Processing Systems: Data from claims processing systems is ingested through batch processes, and files are sent to Amazon S3 or Azure Blob Storage.

Data Transformation and Enrichment Stage:

- AWS Lambda/Azure Functions: Triggered by events from data sources (e.g., new files in S3 or messages in Kinesis/Event Hubs), Lambda functions or Azure Functions perform data transformation tasks, such as data cleansing, data format conversion, and data enrichment.

- Data Enrichment: Lambda functions can enrich data by looking up information from reference data stores, such as patient demographic data or medical code databases.

- Data Validation: Functions validate the data against predefined rules to ensure data quality.
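The cleansing, enrichment, and validation tasks in this stage can be sketched as plain functions that a storage-triggered handler would call. The example assumes the AWS variant, where an S3 event notification carries the bucket name and a URL-encoded object key. The `DIAGNOSIS_CODES` table, the record fields, and the function names are illustrative, not the provider's actual schema.

```python
from typing import Any, Dict, List, Tuple
from urllib.parse import unquote_plus

# Illustrative reference data; a real pipeline would query a medical code database.
DIAGNOSIS_CODES = {
    "E11.9": "Type 2 diabetes mellitus without complications",
    "I10": "Essential (primary) hypertension",
}


def s3_objects_from_event(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification."""
    return [
        (r["s3"]["bucket"]["name"], unquote_plus(r["s3"]["object"]["key"]))
        for r in event.get("Records", [])
    ]


def clean_and_enrich(record: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Return the cleansed, enriched record plus any validation errors."""
    errors: List[str] = []
    # Cleansing: trim stray whitespace from string fields
    cleaned = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
    # Validation: a patient identifier is mandatory
    if not cleaned.get("patient_id"):
        errors.append("patient_id is required")
    # Enrichment: normalize the code and look up its description
    code = str(cleaned.get("diagnosis_code", "")).upper()
    cleaned["diagnosis_code"] = code
    description = DIAGNOSIS_CODES.get(code)
    if description is None:
        errors.append(f"unknown diagnosis code: {code!r}")
    else:
        cleaned["diagnosis_description"] = description
    return cleaned, errors
```

Returning errors alongside the record, instead of raising, lets the caller decide whether to quarantine invalid records or load them with a quality flag.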

Data Storage Stage:

- Amazon S3/Azure Data Lake Storage: Transformed and enriched data is stored in a data lake, typically organized into layers (e.g., raw, curated, and analytical).
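One common way to realize the raw, curated, and analytical layers is through object key prefixes with date partitions. The helper below is a sketch under assumed naming conventions: the layer names come from the text, while the `year=/month=/day=` partition layout and the `lake_key` function are illustrative choices.

```python
from datetime import date

LAYERS = ("raw", "curated", "analytical")


def lake_key(layer: str, source: str, day: date, filename: str) -> str:
    """Build a partitioned object key such as raw/ehr/year=2024/month=03/day=05/a.json."""
    if layer not in LAYERS:
        raise ValueError(f"unknown layer {layer!r}; expected one of {LAYERS}")
    return f"{layer}/{source}/year={day:%Y}/month={day:%m}/day={day:%d}/{filename}"


print(lake_key("raw", "ehr", date(2024, 3, 5), "visits.json"))
```

Partitioning by date lets query engines skip irrelevant objects, and keeping each layer under its own prefix makes it straightforward to apply different access policies and lifecycle rules per layer.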

Data Analytics Stage:

- Amazon Athena/Azure Synapse Analytics: These services are used to query the data lake.

- Amazon QuickSight/Microsoft Power BI: Used for data visualization and reporting.

Visual Representation:

The diagram illustrates the data flow with the following components:

Data Sources: Represented by icons, including EHR Systems, Medical Devices (connected to a cloud-shaped icon), and Claims Processing Systems.

Ingestion Components: Include Amazon S3 and Azure Blob Storage (for batch), AWS IoT Core and Azure IoT Hub, and Amazon Kinesis Data Streams and Azure Event Hubs (for real-time data). The components are connected by arrows indicating data flow.

Processing Components: AWS Lambda and Azure Functions are represented as function icons. They are connected to the ingestion components, demonstrating how they are triggered by data events.