Durable Functions in Azure offer a practical way to build stateful serverless applications. Unlike ordinary serverless functions, Durable Functions provide a framework for orchestrating complex workflows, managing state, and handling long-running operations. Developers can use them to build resilient, scalable, and maintainable solutions for use cases that range from business process automation to complex data processing pipelines.

This article covers the core concepts of Durable Functions and the orchestration patterns that define their capabilities. It walks through setting up the Azure environment, shows code examples in multiple languages, and addresses state management, monitoring, and scaling. With these elements in hand, developers can use Durable Functions to build sophisticated and robust applications on the Azure platform.

Introduction to Durable Functions

Durable Functions provide a powerful framework for orchestrating long-running, stateful, and reliable workflows within Azure Functions. They represent a significant advancement over traditional serverless approaches, particularly when dealing with complex application logic and intricate state management requirements. By abstracting away the complexities of state persistence and workflow coordination, Durable Functions enable developers to build resilient and scalable solutions that were previously difficult or impossible to achieve efficiently within a serverless context.

Core Concept and Benefits

Durable Functions are an extension of Azure Functions that allow you to write stateful functions in a serverless compute environment. At its core, a Durable Functions application consists of three primary function types: activity functions, orchestrator functions, and client functions. Activity functions are the basic units of work, the tasks that the orchestrator schedules and executes. Orchestrator functions define the workflow and coordinate the execution of activity functions. Client functions are responsible for starting, monitoring, and interacting with the orchestrations. The key benefit lies in the automatic management of state, checkpoints, and reliable execution.

The advantages of using Durable Functions are numerous:

- Stateful Orchestration: Durable Functions automatically manage the state of an orchestration, allowing for complex workflows that span multiple function invocations. This eliminates the need for developers to manually manage state using external storage mechanisms like databases or caches, simplifying the development process and reducing the potential for errors.

- Reliable Execution: Built-in mechanisms for retries, error handling, and checkpointing make orchestrations resilient to failures. When an activity is called with a retry policy, Durable Functions retries it automatically until it succeeds or the configured number of attempts is exhausted.

- Asynchronous Operations: Durable Functions excel at orchestrating asynchronous operations, such as waiting for external events or long-running tasks. Orchestrator functions can pause execution and wait for events to occur before resuming, allowing for efficient use of resources.

- Scalability: Durable Functions are designed to scale automatically, handling large volumes of requests and complex workflows without requiring manual scaling efforts. The underlying infrastructure of Azure Functions provides the necessary resources to accommodate the demands of the application.

- Simplified Development: Durable Functions significantly reduce the complexity of building and managing complex workflows. The framework provides a clear and concise programming model, making it easier to develop, test, and deploy applications.
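The checkpointing behind the first two benefits can be pictured with a small, plain-Python model. This is only a sketch under stated assumptions: it does not use the Durable Functions library, `run_with_replay`, `double`, and `sample_orchestrator` are hypothetical names, and the `history` list stands in for the persisted execution log. The idea it illustrates is that results already recorded are fed back to the orchestrator instead of re-running the activity.

```python
from typing import Any, Callable, Dict, Generator, List


def run_with_replay(
    orchestrator: Callable[[], Generator],
    activities: Dict[str, Callable[[Any], Any]],
    history: List[Any],
) -> Any:
    """Drive a generator-based orchestrator, replaying recorded results first.

    `history` plays the role of the persisted checkpoint log: results already
    in it are reused without calling the activity; new results are appended.
    """
    gen = orchestrator()
    step = 0
    try:
        request = next(gen)  # first (activity_name, input) pair
        while True:
            if step < len(history):
                result = history[step]          # replay a checkpointed result
            else:
                name, arg = request
                result = activities[name](arg)  # first execution: do the work
                history.append(result)          # checkpoint the new result
            step += 1
            request = gen.send(result)
    except StopIteration as done:
        return done.value


def double(x: int) -> int:
    """Hypothetical activity."""
    return x * 2


def sample_orchestrator():
    """Hypothetical two-step workflow: each yield requests one activity."""
    first = yield ("double", 1)
    second = yield ("double", first)
    return second


if __name__ == "__main__":
    log: List[Any] = []
    print(run_with_replay(sample_orchestrator, {"double": double}, log))
```

Running the orchestrator a second time with a partially filled `history` (as after a restart) skips the steps that were already recorded and executes only the remaining ones.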

Orchestration Patterns

Durable Functions support several built-in orchestration patterns that address common workflow scenarios. These patterns provide pre-built solutions for complex tasks, simplifying the development process and ensuring best practices.

- Function Chaining: This pattern involves executing a series of functions in a specific order, where the output of one function serves as the input to the next. It is the most basic orchestration pattern.

- Fan-out/Fan-in: This pattern is used to execute multiple functions in parallel and then aggregate their results. It is useful for parallelizing tasks and improving performance. The fan-out phase involves starting multiple instances of an activity function, and the fan-in phase involves collecting and processing the results.

- Async HTTP APIs: This pattern is useful for creating long-running HTTP APIs that can handle asynchronous operations. The orchestrator function manages the state and provides a way to track the progress of the operation.

- Monitor: This pattern is used to periodically check the status of a long-running operation. The orchestrator function repeatedly checks the status of an activity function or external system and takes action based on the result.

- Human Interaction: This pattern allows for the integration of human interaction into the workflow. The orchestrator function can pause execution and wait for human input before continuing.

- External Events: This pattern is useful for waiting for external events to occur. The orchestrator function can subscribe to events and resume execution when an event is received.

Each of these patterns provides a structured way to build and manage complex workflows, allowing developers to focus on the business logic rather than the underlying infrastructure.

Advantages Over Traditional Serverless Approaches

Traditional serverless approaches, using only Azure Functions, can struggle with complex workflows that involve state management, long-running operations, and reliable execution. Durable Functions address these limitations by providing a dedicated framework for orchestrating these types of workflows.

The benefits of using Durable Functions over traditional serverless approaches include:

- Simplified State Management: Durable Functions automatically manage the state of the orchestration, eliminating the need for developers to manually manage state using external storage mechanisms. This simplifies the development process and reduces the potential for errors. Traditional serverless approaches require developers to explicitly manage state, which can become complex and error-prone.

- Reliable Execution and Error Handling: Durable Functions provide built-in mechanisms for automatic retries, error handling, and checkpointing, ensuring that orchestrations are resilient to failures. Traditional serverless approaches require developers to implement these mechanisms manually, which can be time-consuming and complex.

- Support for Long-Running Operations: Durable Functions are designed to handle long-running operations, such as waiting for external events or long-running tasks. Traditional serverless approaches can struggle with these types of operations, often requiring workarounds such as polling or complex state management.

- Improved Code Organization and Readability: Durable Functions promote a more structured and organized approach to building complex workflows, leading to improved code readability and maintainability. Traditional serverless approaches can lead to spaghetti code, especially when dealing with complex workflows.

- Scalability and Performance: Durable Functions are designed to scale automatically, handling large volumes of requests and complex workflows without requiring manual scaling efforts. The underlying infrastructure of Azure Functions provides the necessary resources to accommodate the demands of the application. While traditional serverless approaches can also scale, the overhead of managing state and implementing reliability mechanisms can impact performance.

For example, consider a scenario involving processing a large number of files. Using traditional serverless functions, you might need to trigger a function for each file, store the processing status in a database, and implement a separate function to monitor the progress and handle failures. With Durable Functions, you can create an orchestrator function that manages the entire process, automatically handles retries, and provides a clear view of the workflow’s progress. This reduces complexity, improves reliability, and simplifies development.

Another example is an e-commerce platform that requires order processing. Using Durable Functions, one can create an orchestrator function to manage all the steps of an order, such as validation, payment, inventory, and shipping.

Setting up the Azure Environment

Establishing the correct Azure environment is paramount for deploying and operating Durable Functions effectively. This process involves creating and configuring several Azure resources, each playing a crucial role in the orchestration and execution of workflows. Failure to properly set up these resources can lead to deployment errors, performance bottlenecks, and even complete failure of the function application. The following sections detail the steps and requirements necessary to configure an Azure environment tailored for Durable Functions.

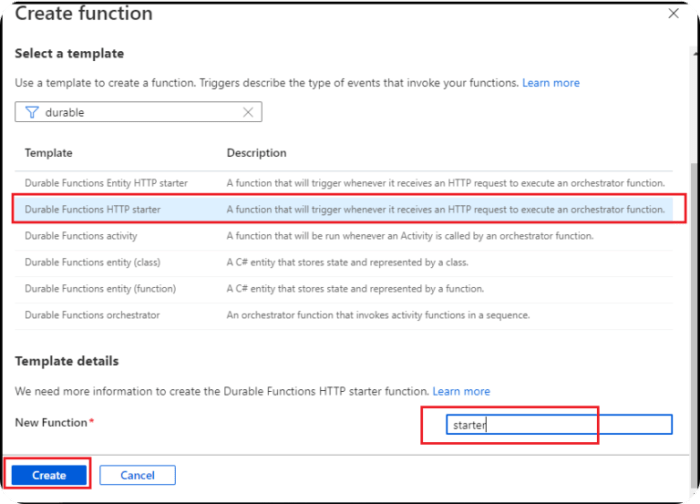

Creating an Azure Function App with Durable Functions Enabled

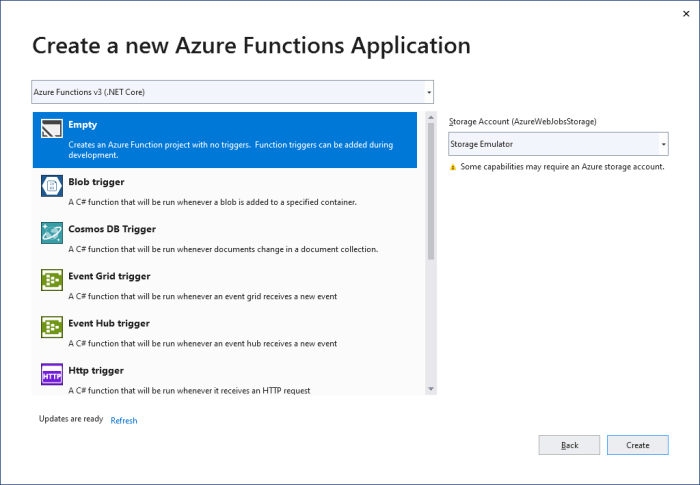

Deploying a Function App is the foundational step. This application will host the Durable Functions and provide the execution environment.

To create an Azure Function App with Durable Functions, follow these steps:

- Access the Azure Portal: Navigate to the Azure portal ([https://portal.azure.com/](https://portal.azure.com/)). Sign in with your Azure account credentials.

- Create a new Function App: Search for “Function App” in the search bar and select the service. Click on “+ Create” to start the creation process.

- Provide Basic Information:

  - Subscription: Select your Azure subscription.

  - Resource Group: Choose an existing resource group or create a new one. Resource groups logically organize your Azure resources.

  - Function App name: Provide a globally unique name for your Function App.

  - Publish: Select “Code” or “Docker Container,” depending on your deployment method. For most scenarios, “Code” is the preferred choice.

  - Runtime stack: Choose your preferred programming language runtime stack (e.g., .NET, Node.js, Python, Java).

  - Region: Select the Azure region where you want to deploy your Function App. Consider factors such as latency and data residency.

- Select Hosting Plan:

  - Consumption Plan: Suitable for workloads with variable traffic. You only pay for the resources consumed by your function executions. This is often the most cost-effective option for development and testing.

  - Premium Plan: Provides pre-warmed instances, improved performance, and advanced features like VNet integration.

  - App Service Plan: Offers dedicated virtual machines. This plan is appropriate for applications with predictable traffic patterns or requiring specific resource guarantees.

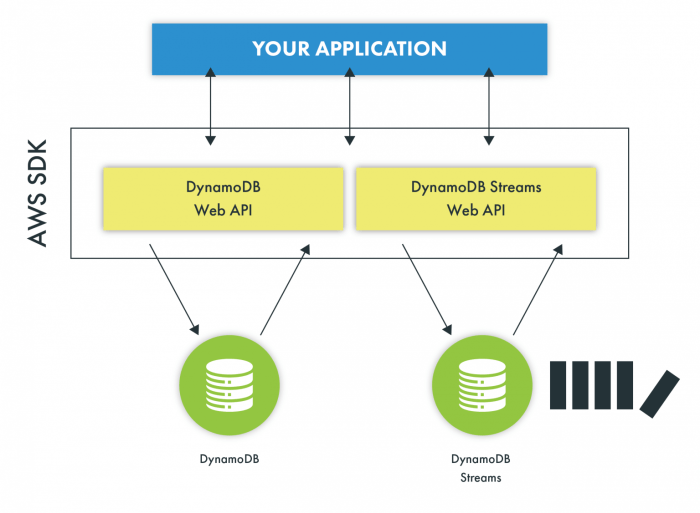

- Storage Account: Either select an existing storage account or create a new one. The Function App uses the storage account to store function code, configuration files, and other relevant data, and Durable Functions uses it by default to persist orchestration state. This makes it a critical step for enabling Durable Functions.

- Review and Create: Review the configuration and click “Create” to deploy the Function App. The deployment process can take a few minutes.

- Verify Deployment: Once the deployment is complete, navigate to your Function App in the Azure portal. Verify that the Function App has been created successfully and is running.

Requirements for Setting up the Necessary Azure Storage Account

The Azure Storage account is the backbone of Durable Functions. It provides the infrastructure for storing orchestration state, queue messages, and other necessary data. The proper configuration of the Storage account is critical for performance, reliability, and cost optimization.

The Storage account requirements include:

- Account Type:

  - General-purpose v2 (GPv2): This is the recommended account type for Durable Functions. GPv2 accounts support all storage services (blobs, queues, tables, and files).

  - General-purpose v1 (GPv1): This is an older account type and is generally not recommended. It may have limitations in terms of performance and features.

  - Blob Storage: Blob-only accounts cannot be used, because they lack the queue and table storage that Durable Functions requires.

- Performance Tier:

  - Standard: This is the tier to use with Durable Functions. It supports blobs, queues, and tables, and it is also the most cost-effective option.

  - Premium: Premium storage accounts do not support queues and tables, so they cannot serve as the storage account for Durable Functions, even for high-volume production workloads.

- Replication:

  - Locally Redundant Storage (LRS): Data is replicated three times within a single data center. This is the least expensive option.

  - Zone-Redundant Storage (ZRS): Data is replicated across multiple availability zones within a single region. This provides higher availability than LRS.

  - Geo-Redundant Storage (GRS): Data is replicated to a secondary region. This protects against a regional outage and supports disaster recovery.

- Access Tier (applies to blob data only, not to the queues and tables that hold most Durable Functions state):

  - Hot: Optimized for frequently accessed data. This is the appropriate default for a Function App's storage account.

  - Cool: Optimized for infrequently accessed data.

  - Archive: Optimized for rarely accessed data. It is set on individual blobs and cannot be chosen as the account default.

- Naming Conventions: Follow Azure naming conventions to ensure the Storage account name is globally unique. Storage account names must be between 3 and 24 characters long and can only contain lowercase letters and numbers.

- Security: Secure your Storage account using best practices, such as:

  - Network security: Configure firewalls and virtual network (VNet) integration to restrict access to your Storage account.

  - Authentication: Use managed identities or access keys to authenticate your Function App to the Storage account.

  - Encryption: Enable encryption to protect your data at rest.
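The naming rule in the list above (3 to 24 characters, lowercase letters and numbers only) is easy to check before attempting a deployment. The following is a minimal sketch; `is_valid_storage_account_name` is a hypothetical helper, and it checks only the format, since global uniqueness can only be verified by Azure itself.

```python
import re

# 3 to 24 characters, lowercase letters and digits only.
_STORAGE_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_storage_account_name(name: str) -> bool:
    """Return True if `name` satisfies the format rule for storage account names."""
    return _STORAGE_ACCOUNT_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ("mydurablestore01", "My-Store", "ab"):
        print(candidate, is_valid_storage_account_name(candidate))
```

A check like this fits naturally into a provisioning script, where a malformed name would otherwise fail only after the deployment request has been submitted.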

Configuring the Required Dependencies and NuGet Packages

The necessary dependencies and NuGet packages must be installed within the Function App project to enable Durable Functions. This step ensures that the runtime environment can correctly interpret and execute the orchestration logic.

The following steps outline the configuration for .NET (C#) projects:

- Create a new Function App project: Using Visual Studio or the .NET CLI, create a new Azure Functions project.

- Install the Durable Functions NuGet package: Add the `Microsoft.Azure.WebJobs.Extensions.DurableTask` NuGet package to your project. This package provides the necessary classes and interfaces for building Durable Functions. You can install it via the NuGet Package Manager in Visual Studio or by using the .NET CLI:

  `dotnet add package Microsoft.Azure.WebJobs.Extensions.DurableTask`

- Install other required packages: Based on the programming language and specific needs of the Function App, install other relevant NuGet packages. For example, install packages for Azure Storage, logging, or other Azure services.

- Configure the `host.json` file: The `host.json` file is used to configure the Function App. The following settings are particularly important for Durable Functions:

  - `extensions.durableTask.hubName`: Defines the name of the Durable Functions task hub. This value is typically set to the name of the Function App or a similar identifier.

  - `extensions.durableTask.storageProvider.connectionStringName`: Names the app setting that holds the Azure Storage connection string (for example, `AzureWebJobsStorage`). Durable Functions reads the connection string from that setting; the connection string itself does not go in `host.json`.

  - `extensions.durableTask.tracing.traceInputsAndOutputs`: Controls whether the inputs and outputs of function calls are logged. This setting can be helpful for debugging.

- Configure `local.settings.json` (for local development): For local development, configure the `local.settings.json` file to store the connection string to the Azure Storage account. This file is used by the Function App when running locally.

- Deploy the Function App: Deploy the Function App to Azure. The NuGet packages and configurations are automatically deployed along with the code.
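The configuration steps above can be sketched as a small script that writes out both files. This is an illustration under assumptions rather than a required tool: `build_host_json` and `build_local_settings` are hypothetical helpers, the hub name is a placeholder, and the storage setting is referenced through `connectionStringName`, which names an app setting rather than holding the connection string itself. `UseDevelopmentStorage=true` points local runs at the storage emulator.

```python
import json


def build_host_json(hub_name: str, connection_setting: str = "AzureWebJobsStorage") -> dict:
    """Build a host.json document with the Durable Functions settings discussed above."""
    return {
        "version": "2.0",
        "extensions": {
            "durableTask": {
                "hubName": hub_name,
                # Name of the app setting that contains the connection string.
                "storageProvider": {"connectionStringName": connection_setting},
                # Logging inputs and outputs helps debugging but may expose data.
                "tracing": {"traceInputsAndOutputs": False},
            }
        },
    }


def build_local_settings(worker_runtime: str = "dotnet") -> dict:
    """Build a local.settings.json document for local development."""
    return {
        "IsEncrypted": False,
        "Values": {
            "AzureWebJobsStorage": "UseDevelopmentStorage=true",
            "FUNCTIONS_WORKER_RUNTIME": worker_runtime,
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_host_json("MyDurableFunctionsHub"), indent=2))
    print(json.dumps(build_local_settings(), indent=2))
```

Keeping the connection string in app settings (or `local.settings.json` locally) rather than in `host.json` means the same `host.json` can be deployed unchanged to every environment.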

Orchestration Patterns

Orchestration patterns are fundamental building blocks for designing and implementing complex workflows within Durable Functions. They define how different function activities are coordinated and executed. Understanding and utilizing these patterns allows for the creation of robust, scalable, and maintainable applications. These patterns address common scenarios, such as function chaining, fan-out/fan-in, and human interaction, each offering a structured approach to solve specific challenges in distributed systems.

Function Chaining

Function chaining is a core orchestration pattern that allows the sequential execution of a series of functions. Each function in the chain receives the output of the preceding function as its input. This pattern is particularly useful for scenarios where a process requires multiple steps, with each step building upon the result of the previous one. Because the steps run strictly in order, no step starts before the result it depends on is available.

To understand function chaining, consider a step-by-step example involving processing an order:

- Order Placement: A function receives an order request. This function might validate the order details and save them to a database.

- Payment Processing: The output of the order placement function (e.g., order ID) is passed to a payment processing function. This function charges the customer’s credit card.

- Inventory Update: The output of the payment processing function (e.g., payment confirmation) is passed to an inventory update function. This function reduces the stock levels for the ordered items.

- Shipping Notification: The output of the inventory update function (e.g., shipment details) is passed to a shipping notification function. This function sends a notification to the customer, indicating the order has shipped.

This sequence of activities demonstrates how the output of one function serves as the input for the next, creating a cohesive workflow.

Code examples for function chaining are provided below, demonstrating its implementation in C#, Python, and JavaScript.

C# Example

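This C# example illustrates function chaining. The orchestration function calls several activity functions sequentially. Each activity function receives the output of the previous activity.

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class FunctionChainingExample
{
    [FunctionName("OrderProcessingOrchestrator")]
    public static async Task<string> RunOrchestrator(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        // The source listing is cut off after `Task`; the body below is a
        // reconstruction that mirrors the Python example. Activity names are assumed.
        string orderId = await context.CallActivityAsync<string>("PlaceOrder", null);
        string payment = await context.CallActivityAsync<string>("ProcessPayment", orderId);
        string inventory = await context.CallActivityAsync<string>("UpdateInventory", payment);
        return await context.CallActivityAsync<string>("SendShippingNotification", inventory);
    }
}
```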

Python Example

The following Python example demonstrates function chaining using the Azure Durable Functions Python library. It shows the same order processing workflow as the C# example.

```python
import logging

import azure.durable_functions as df


def orchestrator_function(context: df.DurableOrchestrationContext):
    order_id = yield context.call_activity("place_order", None)
    payment_confirmation = yield context.call_activity("process_payment", order_id)
    inventory_update_result = yield context.call_activity("update_inventory", payment_confirmation)
    shipping_notification_result = yield context.call_activity(
        "send_shipping_notification", inventory_update_result
    )
    return shipping_notification_result


# Entry point of the orchestrator function.
main = df.Orchestrator.create(orchestrator_function)


# Activity functions. In the folder-per-function Python model each activity lives
# in its own function folder, is bound with an activityTrigger, and is exposed as
# `main`; they are shown together here for brevity.
def place_order(_input: str) -> str:
    logging.info("Placing order...")
    return "Order placed successfully!"


def process_payment(order_id: str) -> str:
    logging.info(f"Processing payment for order: {order_id}...")
    return "Payment processed successfully!"


def update_inventory(payment_confirmation: str) -> str:
    logging.info("Updating inventory...")
    return "Inventory updated successfully!"


def send_shipping_notification(inventory_update_result: str) -> str:
    logging.info("Sending shipping notification...")
    return "Shipping notification sent!"
```

This Python code defines an orchestrator function `orchestrator_function` that calls the activity functions `place_order`, `process_payment`, `update_inventory`, and `send_shipping_notification`. Each `yield` hands an activity call to the runtime and resumes with its result, which is then passed as input to the next activity.
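Because the orchestrator is an ordinary generator, its chaining logic can be exercised locally without the Functions runtime. The sketch below assumes a hypothetical `FakeContext` that stands in for `df.DurableOrchestrationContext` and a hypothetical `run_locally` driver; the stub activities and their return values are invented for illustration and are not part of the Durable Functions library.

```python
from typing import Any, Callable, Dict


class FakeContext:
    """Hypothetical stand-in for df.DurableOrchestrationContext (local use only)."""

    def call_activity(self, name: str, input_: Any = None):
        # Describe the requested activity instead of scheduling it.
        return (name, input_)


def orchestrator_function(context):
    """Same chain as the example above: each result feeds the next activity."""
    order_id = yield context.call_activity("place_order", None)
    payment = yield context.call_activity("process_payment", order_id)
    inventory = yield context.call_activity("update_inventory", payment)
    shipping = yield context.call_activity("send_shipping_notification", inventory)
    return shipping


def run_locally(orchestrator: Callable, activities: Dict[str, Callable[[Any], Any]]) -> Any:
    """Run the generator to completion, resolving each request with a stub activity."""
    gen = orchestrator(FakeContext())
    try:
        name, arg = next(gen)
        while True:
            name, arg = gen.send(activities[name](arg))
    except StopIteration as done:
        return done.value


# Stub activities with made-up return values.
STUBS: Dict[str, Callable[[Any], Any]] = {
    "place_order": lambda _: "order-42",
    "process_payment": lambda order_id: f"paid:{order_id}",
    "update_inventory": lambda payment: f"reserved:{payment}",
    "send_shipping_notification": lambda inventory: f"shipped:{inventory}",
}

if __name__ == "__main__":
    print(run_locally(orchestrator_function, STUBS))
```

The nested result string makes the data flow visible: every stage wraps the output of the stage before it, which is exactly the property that defines function chaining.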

JavaScript Example

The JavaScript example provides another perspective on function chaining using the Azure Durable Functions JavaScript library. This code performs the same order processing workflow as the C# and Python examples.

```javascript
// OrderProcessingOrchestrator/index.js
const df = require("durable-functions");

module.exports = df.orchestrator(function* (context) {
    const orderId = yield context.df.callActivity("placeOrder", null);
    const paymentConfirmation = yield context.df.callActivity("processPayment", orderId);
    const inventoryUpdateResult = yield context.df.callActivity("updateInventory", paymentConfirmation);
    const shippingNotificationResult = yield context.df.callActivity("sendShippingNotification", inventoryUpdateResult);
    return shippingNotificationResult;
});

// placeOrder/index.js
// In this programming model each activity is its own function with an activityTrigger binding.
module.exports = async function (context) {
    // Simulate order placement
    context.log("Placing order...");
    return "Order placed successfully!";
};

// processPayment/index.js (assumes the activityTrigger binding is named "orderId")
module.exports = async function (context) {
    // Simulate payment processing
    context.log(`Processing payment for order: ${context.bindings.orderId}...`);
    return "Payment processed successfully!";
};

// updateInventory/index.js
module.exports = async function (context) {
    // Simulate inventory update
    context.log("Updating inventory...");
    return "Inventory updated successfully!";
};

// sendShippingNotification/index.js
module.exports = async function (context) {
    // Simulate shipping notification
    context.log("Sending shipping notification...");
    return "Shipping notification sent!";
};
```

In this JavaScript example, the orchestrator function uses `yield` to call the activity functions `placeOrder`, `processPayment`, `updateInventory`, and `sendShippingNotification`.

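Each activity function performs its specific task, and the output of each is passed to the subsequent function.

Error handling is a crucial aspect of any robust workflow. Function chaining can incorporate error handling to manage exceptions that may occur during the execution of the chain. Implementing try-catch blocks within the orchestrator function allows for catching exceptions and executing alternative actions, such as retrying the failed activity or logging the error.

The following is an example of how to handle errors in the C# example.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Microsoft.Extensions.Logging;

public static class FunctionChainingErrorHandling
{
    [FunctionName("OrderProcessingOrchestratorWithErrorHandling")]
    public static async Task<string> RunOrchestrator(
        [OrchestrationTrigger] IDurableOrchestrationContext context,
        ILogger log)
    {
        // The source listing is cut off after `Task`; this body is a reconstruction.
        // Activity names and the failure message are assumed.
        log = context.CreateReplaySafeLogger(log);
        try
        {
            string orderId = await context.CallActivityAsync<string>("PlaceOrder", null);
            string payment = await context.CallActivityAsync<string>("ProcessPayment", orderId);
            return await context.CallActivityAsync<string>("UpdateInventory", payment);
        }
        catch (Exception ex)
        {
            // Log the failure and finish the orchestration with an error result.
            log.LogError(ex, "Order processing failed.");
            return "Order processing failed.";
        }
    }
}
```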

Orchestration Patterns: Fan-out/Fan-in

Durable Functions provide a powerful framework for implementing various orchestration patterns, enabling developers to build complex, stateful, and reliable workflows. Among these patterns, Fan-out/Fan-in is particularly useful for parallel processing of tasks, aggregating results, and optimizing execution time. This pattern allows for the concurrent execution of multiple activities and then consolidates their outputs into a final result.

Fan-out/Fan-in Implementation

The Fan-out/Fan-in pattern in Durable Functions involves two primary stages: fan-out, where multiple activities are initiated in parallel, and fan-in, where the results of these activities are aggregated. The orchestration function coordinates the execution of activities and handles their outputs.

The orchestration function initiates the fan-out phase by creating a list of activity function calls, which starts the activities concurrently. It then waits for all of them with `Task.WhenAll` or a similar mechanism. Each activity function performs a specific task, such as processing data, interacting with external services, or performing calculations.

Once all activities are finished, the fan-in phase begins. The orchestration function collects the results from each activity and aggregates them to produce a final result. This aggregation can involve various operations, such as summing values, merging data, or determining the maximum or minimum value. The final result is then returned by the orchestration function.

Example of the fan-out/fan-in pattern implementation:

```csharp
// Requires: System.Collections.Generic, System.Linq, System.Threading.Tasks,
// Microsoft.Azure.WebJobs, Microsoft.Azure.WebJobs.Extensions.DurableTask.
[FunctionName("FanOutFanInOrchestrator")]
public static async Task<int> RunOrchestrator(
    [OrchestrationTrigger] IDurableOrchestrationContext context)
{
    // The source listing is cut off after `Task`; this body is a reconstruction.
    // The "ProcessItem" activity and the int[] input are assumed.
    int[] workItems = context.GetInput<int[]>();

    // Fan-out: start one activity per work item.
    var tasks = new List<Task<int>>();
    foreach (int item in workItems)
    {
        tasks.Add(context.CallActivityAsync<int>("ProcessItem", item));
    }

    // Fan-in: wait for all activities, then aggregate.
    int[] results = await Task.WhenAll(tasks);
    return results.Sum();
}
```
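The same two stages can be tried out locally in Python. In the Python library the orchestrator collects tasks from `context.call_activity` and waits on them with `context.task_all`; the sketch below keeps that shape but runs against a hypothetical `FakeContext` and `run_locally` driver built on a thread pool, so it needs no Azure packages. The `square` activity and the input list are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List


class FakeContext:
    """Hypothetical stand-in for df.DurableOrchestrationContext (local use only)."""

    def __init__(self, work_items: List[Any]):
        self._work_items = work_items

    def get_input(self) -> List[Any]:
        return self._work_items

    def call_activity(self, name: str, input_: Any = None):
        return (name, input_)   # describes a pending activity

    def task_all(self, tasks):
        return list(tasks)      # describes "wait for all of these"


def fan_out_fan_in(context):
    items = context.get_input()
    tasks = [context.call_activity("square", n) for n in items]  # fan-out
    results = yield context.task_all(tasks)                      # wait for all
    return sum(results)                                          # fan-in


def run_locally(orchestrator: Callable, activities: Dict[str, Callable], work_items: List[Any]) -> Any:
    """Resolve each yielded batch by running its activities on a thread pool."""
    gen = orchestrator(FakeContext(work_items))
    try:
        pending = next(gen)
        while True:
            with ThreadPoolExecutor() as pool:
                futures = [pool.submit(activities[name], arg) for name, arg in pending]
                results = [f.result() for f in futures]  # keeps submission order
            pending = gen.send(results)
    except StopIteration as done:
        return done.value


if __name__ == "__main__":
    print(run_locally(fan_out_fan_in, {"square": lambda n: n * n}, [1, 2, 3, 4]))
```

Results are collected in submission order, so the aggregation step can rely on `results[i]` corresponding to `items[i]` even though the activities may finish in any order.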

Visual Representation of Fan-out/Fan-in

The Fan-out/Fan-in pattern can be visually represented using a diagram that illustrates the flow of execution and the relationship between the orchestration function and the activity functions.

The diagram starts with the orchestration function, which receives input. The orchestration function then branches out, spawning multiple activity functions that run concurrently. Each activity function performs its task independently. After all activity functions have completed, their results are gathered and fed back into the orchestration function. The orchestration function then aggregates these results to produce the final output.

The diagram includes the following elements:

- A box representing the orchestration function.

- Multiple boxes representing the activity functions.

- Arrows indicating the flow of execution, starting from the orchestration function and branching out to the activity functions, then converging back to the orchestration function.

- Labels indicating the input, the activity function results, and the final output.

This diagram visually explains the concurrent execution of activity functions and the aggregation of results, simplifying the understanding of the Fan-out/Fan-in pattern.

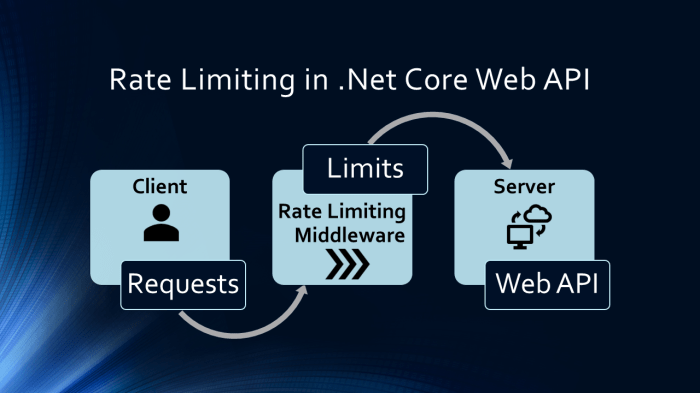

Best Practices for Managing Concurrent Executions

Effective management of concurrent executions in the Fan-out/Fan-in pattern requires careful consideration of several factors. Implementing these best practices ensures optimal performance, resource utilization, and overall reliability.

- Limit concurrency: Excessive concurrency can lead to resource exhaustion and performance degradation. Use the `maxConcurrentActivityFunctions` setting in the `host.json` file to cap the number of activity functions that run concurrently on a single host instance.

```json
{
  "extensions": {
    "durableTask": {
      "hubName": "MyDurableFunctionsHub",
      "storageProvider": {
        "type": "AzureStorage",
        "connectionStringName": "AzureWebJobsStorage"
      },
      "maxConcurrentActivityFunctions": 10,
      "maxConcurrentOrchestratorFunctions": 10
    }
  }
}
```

This configuration limits each host instance to 10 concurrent activity function executions and 10 concurrent orchestrator function executions.

- Implement error handling: Robust error handling is crucial in parallel workflows. Implement try-catch blocks within activity functions and orchestration functions to handle exceptions gracefully. Use retry policies (in C#, `CallActivityWithRetryAsync` with `RetryOptions`) to automatically retry failed activities.

```csharp
try
{
    // Call the activity
    await context.CallActivityAsync("MyActivity", input);
}
catch (Exception)
{
    // Handle the error; optionally, retry the activity
    context.SetCustomStatus("Activity Failed");
}
```

- Optimize activity function design: Activity functions should be designed to be idempotent and stateless. Idempotent functions can be safely retried without causing unintended side effects. Stateless functions keep nothing in memory between invocations, which reduces complexity and improves scalability.

- Monitor and log: Implement comprehensive monitoring and logging to track the execution of orchestration and activity functions. Monitor performance metrics, such as execution time, resource utilization, and error rates. Use logging to capture detailed information about the workflow’s execution, including input, output, and any errors that occur. This helps in debugging and identifying performance bottlenecks.

- Consider activity function timeouts: Set appropriate timeouts so that activity functions cannot run indefinitely. `function.json` has no per-function timeout property; the `functionTimeout` setting in the `host.json` file sets the limit, and it applies to every function in the app.

```json
{
  "functionTimeout": "00:05:00"
}
```

The example sets a 5-minute timeout, which prevents activity functions from running indefinitely if they encounter issues. To bound a single activity more tightly, the orchestrator can race the activity against a durable timer.

- Use appropriate data types: When passing data between orchestration and activity functions, choose appropriate data types to optimize serialization and deserialization performance. Avoid passing large objects unnecessarily.

- Scale resources appropriately: Ensure that the Azure resources, such as function app instances and storage accounts, are scaled appropriately to handle the workload. Monitor resource utilization and adjust scaling settings as needed.

These best practices help in building robust, efficient, and scalable Fan-out/Fan-in workflows using Durable Functions. By implementing these strategies, developers can maximize the benefits of parallel processing while minimizing the risks associated with concurrent execution.
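The advice that activities be idempotent matters most when retries are enabled, because a retried activity may run after its first attempt has already taken effect. One common way to achieve this is to key each side effect on an operation ID. The sketch below is a minimal illustration, assuming a hypothetical in-memory `InventoryStore` in place of a real database and a hypothetical `reserve_stock` activity body.

```python
from typing import Dict


class InventoryStore:
    """In-memory stand-in for a database table (hypothetical)."""

    def __init__(self, stock: int):
        self.stock = stock
        self.applied: Dict[str, int] = {}  # operation_id -> quantity already applied


def reserve_stock(store: InventoryStore, operation_id: str, quantity: int) -> int:
    """Reserve `quantity` units exactly once per `operation_id`.

    A retry with the same operation_id returns the current stock level
    without reserving again, so the call is safe to repeat.
    """
    if operation_id in store.applied:
        return store.stock
    if quantity > store.stock:
        raise ValueError("insufficient stock")
    store.stock -= quantity
    store.applied[operation_id] = quantity
    return store.stock


if __name__ == "__main__":
    store = InventoryStore(stock=10)
    print(reserve_stock(store, "order-1", 3))
    print(reserve_stock(store, "order-1", 3))  # simulated retry, no second deduction
```

In a real activity the operation ID could be derived from the orchestration instance ID plus a step name, and the check-and-update would need to be a single atomic database operation.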

Orchestration Patterns: Async HTTP APIs

Asynchronous HTTP APIs are a powerful application of Durable Functions, allowing the creation of long-running processes that can be initiated and managed through standard HTTP requests. This pattern is particularly useful for operations that exceed the typical timeout limits of a single HTTP request, providing a mechanism to track progress and retrieve results asynchronously.

Async HTTP APIs Design

Designing asynchronous HTTP APIs with Durable Functions involves several key steps to ensure a robust and scalable solution.

- HTTP Trigger: An HTTP trigger acts as the entry point for the orchestration. It receives the initial HTTP request, typically containing input data needed for the long-running operation. The trigger function initiates the Durable Function orchestration.

- Orchestration Function: The orchestration function defines the workflow of the long-running operation. It orchestrates the execution of activities, manages state, and handles error conditions. The orchestration function is responsible for defining the steps of the asynchronous process.

- Activity Functions: Activity functions perform the actual work. They can be thought of as the individual tasks within the larger orchestration. These functions are where the business logic resides.

- Status Endpoints: Status endpoints provide mechanisms to check the status of a running orchestration. These endpoints return information about the orchestration’s progress, including its current state (e.g., running, completed, failed), input, output, and execution history.

- Callback Mechanism: Accepting a callback URL allows the orchestration to notify the caller when the operation is complete or has reached a certain state. The workflow implements this itself, for example with a final activity function that posts to the URL. This eliminates the need for the client to poll the status endpoint continuously.

Triggering Orchestrations via HTTP Endpoints

Initiating a Durable Function orchestration through an HTTP endpoint involves creating an HTTP-triggered function that starts the orchestration.

The following code snippets, using Python and C#, illustrate how to trigger an orchestration.

Python Example:

```python
import logging

import azure.functions as func
import azure.durable_functions as df


async def http_start(req: func.HttpRequest, starter: str) -> func.HttpResponse:
    client = df.DurableOrchestrationClient(starter)
    # The orchestrator name comes from the route; the request body becomes its input.
    instance_id = await client.start_new(
        req.route_params["orchestration_name"], None, req.get_json()
    )
    logging.info(f"Started orchestration with ID = '{instance_id}'.")
    return client.create_check_status_response(req, instance_id)
```

C# Example:

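```csharp
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Extensions.Logging;

public static class HttpStart
{
    [FunctionName("HttpStart")]
    public static async Task<HttpResponseMessage> Run(
        [HttpTrigger(AuthorizationLevel.Function, "post", Route = "orchestrators/{functionName}")] HttpRequestMessage req,
        [DurableClient] IDurableOrchestrationClient starter,
        string functionName,
        ILogger log)
    {
        // Pass the raw request body to the orchestrator as its input.
        string body = await req.Content.ReadAsStringAsync();
        string instanceId = await starter.StartNewAsync<string>(functionName, body);

        log.LogInformation($"Started orchestration with ID = '{instanceId}'.");
        return starter.CreateCheckStatusResponse(req, instanceId);
    }
}
```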

These examples demonstrate the core functionality:

- The HTTP trigger receives the initial request.

- The Durable Function client is used to start a new orchestration instance.

- The client returns an HTTP response containing information about the orchestration, including the instance ID and status endpoints.

Handling Long-Running Operations and Status Updates

Managing long-running operations and providing status updates are crucial aspects of asynchronous HTTP APIs.

Implementing Status Updates:

Durable Functions provides built-in mechanisms for tracking the status of orchestrations. The `create_check_status_response` (Python) or `CreateCheckStatusResponse` (C#) methods generate an HTTP 202 (Accepted) response that includes URLs for monitoring and managing the orchestration. The status URL returns the current state of the orchestration, including its input, output, and (on request) execution history.

Polling vs. Webhooks:

- Polling: Clients periodically call the status endpoint to check the orchestration’s progress. This approach is simple to implement but can lead to unnecessary network traffic if the operation is not yet complete.

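- Webhooks: The client supplies a callback URL, and the orchestration calls it (for example, from a final activity function) when the operation completes or reaches a certain state. This eliminates the need for polling and delivers the result as soon as it is available, at the cost of requiring the client to expose a reachable endpoint.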
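The polling option above can be sketched on the client side as follows. This is a minimal illustration, not part of any SDK: `poll_until_done` and its parameters are hypothetical names, and `fetch_status` stands in for an HTTP GET against the status URL returned by the starter. The sketch assumes the status payload carries a `runtimeStatus` field and treats `Completed`, `Failed`, and `Terminated` as final.

```python
import time
from typing import Callable, Dict

# Runtime statuses after which the orchestration will not change any further.
TERMINAL_STATUSES = {"Completed", "Failed", "Terminated"}


def poll_until_done(
    fetch_status: Callable[[], Dict],
    interval_seconds: float = 2.0,
    max_attempts: int = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict:
    """Call fetch_status until it reports a terminal runtimeStatus."""
    for attempt in range(1, max_attempts + 1):
        status = fetch_status()
        if status.get("runtimeStatus") in TERMINAL_STATUSES:
            return status
        if attempt < max_attempts:
            sleep(interval_seconds)  # wait before asking again
    raise TimeoutError(f"orchestration still running after {max_attempts} polls")


# Usage with a fake status source standing in for the real status endpoint.
_responses = iter([
    {"runtimeStatus": "Pending"},
    {"runtimeStatus": "Running"},
    {"runtimeStatus": "Completed", "output": "done"},
])
final = poll_until_done(lambda: next(_responses), sleep=lambda s: None)
print(final["output"])  # prints: done
```

Injecting `sleep` keeps the loop testable without real delays; a production client would usually also honor any retry hint the server sends.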

Example of Status Updates in C#:

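```csharp
[FunctionName("OrchestrationFunction")]
public static async Task<string> RunOrchestrator(
    [OrchestrationTrigger] IDurableOrchestrationContext context)
{
    // "LongRunningActivity" is a placeholder name for the slow unit of work.
    string result = await context.CallActivityAsync<string>(
        "LongRunningActivity", context.GetInput<string>());
    return result;
}

[FunctionName("LongRunningActivity")]
public static async Task<string> LongRunningActivity([ActivityTrigger] string input)
{
    await Task.Delay(TimeSpan.FromSeconds(30)); // simulate a long-running operation
    return $"Processed: {input}";
}
```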

In this example, the orchestration function calls an activity function that simulates a long-running operation. The status endpoint can be used to monitor the progress of the orchestration, and the output of the activity function is returned when the orchestration completes.

Real-World Examples:

Consider a scenario where a user uploads a large video file for processing. The asynchronous HTTP API pattern can be used to:

- Accept the upload via an HTTP trigger.

- Start a Durable Function orchestration to handle video encoding, thumbnail generation, and content moderation.

- Return an HTTP response with an instance ID and status endpoints.

- Allow the user to check the processing status through the status endpoints.

- Optionally, notify the user via a webhook when the video processing is complete.

Durable Function Code Examples

Durable Functions provide a powerful framework for building stateful serverless applications. Understanding how to implement these functions across different programming languages is crucial for leveraging their capabilities effectively. This section delves into practical code examples, showcasing activity and orchestration functions in C#, Python, and JavaScript. These examples aim to illustrate the core concepts and common patterns used in Durable Functions.

C# Code Example

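This example demonstrates a simple Durable Function written in C#. It comprises an orchestration function, an activity function, and an HTTP starter. The orchestration function coordinates the execution of the activity function, demonstrating a basic fan-out/fan-in pattern.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Extensions.Logging;

public static class DurableFunctionExample
{
    [FunctionName("DurableFunctionOrchestrator")]
    public static async Task<List<string>> RunOrchestrator(
        [OrchestrationTrigger] IDurableOrchestrationContext context,
        ILogger log)
    {
        var input = context.GetInput<string[]>();

        // Fan out: schedule one activity per input string.
        var tasks = new List<Task<string>>();
        foreach (string name in input)
        {
            tasks.Add(context.CallActivityAsync<string>("DurableFunctionActivity", name));
        }

        // Fan in: wait for every activity, then aggregate the results.
        string[] results = await Task.WhenAll(tasks);
        return new List<string>(results);
    }

    [FunctionName("DurableFunctionActivity")]
    public static string SayHello([ActivityTrigger] string name, ILogger log)
    {
        log.LogInformation($"Saying hello to {name}.");
        return $"Hello, {name}!";
    }

    [FunctionName("DurableFunctionStarter")]
    public static async Task<HttpResponseMessage> HttpStart(
        [HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequestMessage req,
        [DurableClient] IDurableOrchestrationClient starter,
        ILogger log)
    {
        string[] names = { "Tokyo", "Seattle", "London" }; // sample input
        string instanceId = await starter.StartNewAsync("DurableFunctionOrchestrator", names);
        log.LogInformation($"Started orchestration with ID = '{instanceId}'.");
        return starter.CreateCheckStatusResponse(req, instanceId);
    }
}
```

The C# code comprises:

- `DurableFunctionOrchestrator`: This is the orchestration function. It receives an array of strings as input, calls the `DurableFunctionActivity` activity function for each string, and aggregates the results once all calls have completed.

- `DurableFunctionActivity`: This is the activity function. It takes a string (a name) as input and returns a greeting. It also logs an informational message.

- `DurableFunctionStarter`: This function acts as a starter function, triggered by an HTTP request. It initiates the orchestration and returns a response containing the instance ID for tracking.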

Python Code Example

This example showcases a Durable Function implemented in Python, demonstrating a similar fan-out/fan-in pattern.

```python
import logging

import azure.functions as func
import azure.durable_functions as df

# v2 (decorator-based) programming model: all functions live in one file.
app = df.DFApp(http_auth_level=func.AuthLevel.FUNCTION)


@app.orchestration_trigger(context_name="context")
def orchestrator_function(context: df.DurableOrchestrationContext):
    input_data = context.get_input()
    # Fan out: schedule one activity per item.
    tasks = [context.call_activity("activity_function", item) for item in input_data]
    # Fan in: resume once every activity has completed.
    results = yield context.task_all(tasks)
    return results


@app.activity_trigger(input_name="name")
def activity_function(name: str) -> str:
    return f"Hello, {name}!"


@app.route(route="start")
@app.durable_client_input(client_name="client")
async def main(req: func.HttpRequest, client) -> func.HttpResponse:
    input_data = ["Tokyo", "Seattle", "London"]
    instance_id = await client.start_new("orchestrator_function", None, input_data)
    logging.info(f"Started orchestration with ID = '{instance_id}'.")
    return client.create_check_status_response(req, instance_id)
```

The Python code illustrates:

- `orchestrator_function`: This orchestration function receives a list of strings as input. It calls `activity_function` for each item in the list and collects the results. It uses `context.task_all` to wait for all activity functions to complete.

- `activity_function`: This is the activity function, which takes a name as input and returns a greeting.

- `main`: This function serves as the entry point and starter. It initiates the orchestration, passing the input data and returning a response with the instance ID.

JavaScript Code Example

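This JavaScript example provides a Durable Function implementation, also demonstrating the core orchestration and activity function concepts.

```javascript
// Node.js v4 programming model (durable-functions v3 package).
const { app } = require("@azure/functions");
const df = require("durable-functions");

df.app.orchestration("orchestratorFunction", function* (context) {
    const input = context.df.getInput();
    const tasks = [];
    for (const item of input) {
        tasks.push(context.df.callActivity("activityFunction", item));
    }
    // Resume once every scheduled activity has completed.
    const results = yield context.df.Task.all(tasks);
    return results;
});

df.app.activity("activityFunction", {
    handler: (name) => `Hello, ${name}!`,
});

app.http("starter", {
    route: "orchestrators/{orchestratorName}",
    extraInputs: [df.input.durableClient()],
    handler: async (request, context) => {
        const client = df.getClient(context);
        const input = await request.json();
        const instanceId = await client.startNew(request.params.orchestratorName, { input });
        context.log(`Started orchestration with ID = '${instanceId}'.`);
        return client.createCheckStatusResponse(request, instanceId);
    },
});
```

The JavaScript code comprises: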

- The orchestrator function, which calls the activity function for each item in the input array and waits for all activities to complete using `Task.all`.

- The activity function, which receives a name as input and returns a greeting.

- A starter function, which initiates the orchestration via an HTTP trigger.

State Management and Data Handling

Managing state and handling data efficiently are crucial aspects of durable function design. These considerations directly impact performance, scalability, and the overall reliability of applications built on this framework. Understanding the various methods for state management, the strategies for handling large data payloads, and the best practices for dealing with timeouts and retries is essential for building robust and resilient durable functions.

State Management in Durable Functions

Durable Functions offer several mechanisms for managing state, each with its own characteristics and trade-offs. Choosing the appropriate method depends on the specific requirements of the application, considering factors such as data size, frequency of updates, and the need for transactional consistency.

- Function Input and Output: Functions, both orchestrator and activity, can directly receive input data and return output data. This is the simplest form of state management, suitable for passing small amounts of data between function calls. The data is automatically serialized and deserialized by the Durable Functions runtime. This approach is straightforward but has limitations regarding data size and the need for more complex state persistence.

- Orchestration History: The orchestration history, maintained by the Durable Functions runtime, serves as the primary source of truth for the state of an orchestration. Each event in the history represents a state transition, including function calls, inputs, outputs, and other events. The history is durable and fault-tolerant, ensuring that the state is preserved even in the event of failures. The runtime replays the history to reconstruct the state of an orchestration, which allows the function to recover from interruptions.

However, querying the history directly can be inefficient for complex scenarios.

- External Storage: For managing larger data sets or data that needs to be accessed by other services, external storage solutions, such as Azure Blob Storage, Azure Cosmos DB, or Azure SQL Database, can be utilized. The durable function can interact with these storage services to read and write data. This approach allows for handling large data payloads and enables data sharing with other applications.

However, it introduces additional complexity, requiring developers to manage the data storage and access logic explicitly. For example, if a function needs to process a large file, it can store the file in Azure Blob Storage and then pass a reference (e.g., a URL) to the activity functions.

- Entity Functions: Entity Functions provide a mechanism for managing state in a more granular and efficient manner. They are designed to encapsulate state and provide operations for interacting with that state. This is useful when you need to store and update small, well-defined pieces of state, as the framework optimizes the underlying storage and access patterns. They offer transactional consistency and are well-suited for scenarios like managing shopping carts or tracking user profiles.
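The replay behavior described under Orchestration History can be illustrated with a toy model. This is a sketch in plain Python, not the Durable Functions runtime: `orchestrator`, `run_step`, and the activity names are hypothetical, and the `history` list stands in for the durable event log. Each step re-runs the orchestrator from the top, feeds it the recorded results, and executes only the first activity that has no recorded result.

```python
from typing import Any, Callable, Dict, Generator, List, Tuple

Call = Tuple[str, Any]  # (activity name, input)


def orchestrator(order_id: str) -> Generator[Call, Any, str]:
    """A toy orchestrator: each yield asks the driver to run one activity."""
    reserved = yield ("reserve_stock", order_id)
    charged = yield ("charge_card", reserved)
    return f"{charged}:shipped"


def run_step(
    history: List[Any],
    activities: Dict[str, Callable[[Any], Any]],
    order_id: str,
) -> Tuple[bool, Any]:
    """Replay recorded results, then execute at most one new activity.

    Returns (finished, value). `history` is mutated in place.
    """
    gen = orchestrator(order_id)
    try:
        call = next(gen)
        for recorded in history:      # replay: no activity is re-run here
            call = gen.send(recorded)
        name, arg = call              # first call without a recorded result
        history.append(activities[name](arg))
        return False, None
    except StopIteration as done:     # orchestrator returned during replay
        return True, done.value


executed = []


def reserve_stock(order_id):
    executed.append("reserve_stock")
    return f"{order_id}-reserved"


def charge_card(reservation):
    executed.append("charge_card")
    return f"{reservation}-charged"


activities = {"reserve_stock": reserve_stock, "charge_card": charge_card}
history: List[Any] = []
finished, result = False, None
while not finished:
    finished, result = run_step(history, activities, "order-1")

print(result)    # order-1-reserved-charged:shipped
print(executed)  # ['reserve_stock', 'charge_card']
```

Although the orchestrator body ran three times, each activity ran once. This is also why orchestrator code must be deterministic: a replay has to make the same calls in the same order as the original run.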

Handling Large Data Payloads and Data Serialization

Efficiently handling large data payloads is essential for applications that process significant amounts of data. This involves selecting appropriate data serialization formats and implementing strategies for managing data transfer and storage.

- Serialization Formats: Durable Functions automatically serialize and deserialize data passed between functions, and the payloads are persisted as JSON. In .NET in-process apps the default serializer is Json.NET (Newtonsoft.Json), and its settings can be customized to control how types are represented. More compact formats (e.g., Protocol Buffers, Avro) are mainly relevant for data you write to external storage yourself and pass by reference; there they can significantly affect performance and storage efficiency.

Consider the size of the data, the complexity of the data structure, and the need for schema evolution.

- Data Transfer Optimization: For large data payloads, passing the entire data set directly between functions can be inefficient. Consider the following strategies:

- Reference-Based Passing: Instead of passing the data itself, pass a reference (e.g., a URL or key) to the data stored in external storage, like Azure Blob Storage. This reduces the amount of data transferred between functions.

- Chunking: Break down large data sets into smaller chunks, and process each chunk separately using multiple activity functions. This enables parallel processing and reduces the memory footprint of each function instance.

- Streaming: For very large data sets, consider streaming the data directly from the source to the destination, avoiding the need to load the entire data set into memory.

- Compression: Compressing large data payloads before serialization can reduce the amount of data transferred and stored. Consider using compression algorithms like Gzip or Deflate.
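Reference-based passing, chunking, and compression from the list above combine naturally. The sketch below uses only the standard library; the in-memory `_BLOBS` dictionary is a stand-in for a blob container, and `put_payload`, `get_payload`, and `chunked` are illustrative names rather than SDK functions. The idea is that only the short keys travel through the orchestration, while the payloads stay in storage.

```python
import gzip
import json
import uuid
from typing import Dict, Iterator, List, Sequence

# In-memory stand-in for a blob container (hypothetical; a real app would
# call a storage SDK here).
_BLOBS: Dict[str, bytes] = {}


def put_payload(payload: object) -> str:
    """Compress a JSON-serializable payload, store it, and return its key."""
    key = f"payloads/{uuid.uuid4()}.json.gz"
    _BLOBS[key] = gzip.compress(json.dumps(payload).encode("utf-8"))
    return key


def get_payload(key: str) -> object:
    """Load and decompress a payload previously stored with put_payload."""
    return json.loads(gzip.decompress(_BLOBS[key]).decode("utf-8"))


def chunked(items: Sequence, size: int) -> Iterator[List]:
    """Yield consecutive slices of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


# Usage: an orchestrator would hand one key to each activity call.
records = [{"id": i, "value": "x" * 50} for i in range(10)]
keys = [put_payload(chunk) for chunk in chunked(records, 4)]
print(len(keys))                   # 3
print(len(get_payload(keys[-1])))  # 2
```

Each activity would call `get_payload` with its key, process the chunk, and return a small result (or another key), which keeps the orchestration history compact.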

Best Practices for Timeouts and Retries in Long-Running Operations

Timeouts and retries are critical for building resilient durable functions that can handle transient failures and long-running operations.

- Timeout Configuration: Activity functions are ordinary functions and are subject to the function app's execution timeout, so set it longer than the expected execution time with some margin for delays. Orchestrations can run far longer than a single function execution; to bound how long an orchestrator waits on an activity or external event, race that task against a durable timer. Use different timeout values for different operations based on their criticality and expected duration.

- Retry Policies: Implement retry policies for activity functions that may experience transient failures. Durable Functions provides built-in retry options for activity calls, configured with parameters such as the first retry interval, the maximum number of attempts, and (in some language SDKs) a backoff coefficient. Use exponential backoff to avoid overwhelming dependent services.

- Idempotency: Design activity functions to be idempotent, meaning that they can be executed multiple times without changing the outcome. Activities are guaranteed at-least-once execution, so a retry or a recovery after failure can run the same activity again; idempotency ensures this does not lead to unintended side effects.

- Monitoring and Alerting: Implement monitoring and alerting to detect and respond to timeout and retry events. This can help identify performance bottlenecks and potential issues with dependent services. Set up alerts to notify you when retries are failing repeatedly or when functions are taking longer than expected to complete.

- Compensation Transactions: For complex workflows, consider implementing compensation transactions to undo the effects of partially completed operations in case of failures. This ensures data consistency and prevents data corruption. For example, if a workflow charges several payment methods and one of the charges fails, it should refund the charges that already succeeded.
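Two of the practices above, retries with exponential backoff and compensation, can be sketched in plain Python. This is an illustration of the techniques, not the Durable Functions retry implementation; `retry_delays`, `run_with_retry`, `run_saga`, and their parameter names are hypothetical and only loosely modeled on the first-interval, max-attempts, and backoff-coefficient options mentioned above.

```python
import time
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def retry_delays(first_interval: float, max_attempts: int, backoff: float) -> List[float]:
    """Delays between attempts: first_interval * backoff**n, one per retry."""
    return [first_interval * backoff ** n for n in range(max_attempts - 1)]


def run_with_retry(
    action: Callable[[], T],
    first_interval: float = 1.0,
    max_attempts: int = 3,
    backoff: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run action, retrying on any exception with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    delays = retry_delays(first_interval, max_attempts, backoff)
    for attempt in range(max_attempts):
        try:
            return action()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(delays[attempt])
    raise AssertionError("unreachable")


def run_saga(steps: List[Tuple[Callable[[], None], Callable[[], None]]]) -> bool:
    """Run (do, undo) pairs in order; on failure, undo completed steps in reverse."""
    completed: List[Callable[[], None]] = []
    for do, undo in steps:
        try:
            do()
        except Exception:
            for compensate in reversed(completed):
                compensate()
            return False
        completed.append(undo)
    return True


print(retry_delays(1.0, 4, 2.0))  # [1.0, 2.0, 4.0]
```

Because a retried `action` may already have taken effect once, the retry helper is only safe with idempotent operations; the compensation helper likewise assumes each `undo` can be called safely after its `do` succeeded.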

Monitoring and Debugging Durable Functions

Effective monitoring and debugging are crucial for maintaining the health, performance, and reliability of Durable Functions applications. Azure provides a comprehensive suite of tools and services designed to help developers observe, diagnose, and resolve issues within their function orchestrations. This section details the key aspects of monitoring and debugging Durable Functions.

Tools and Techniques for Monitoring Durable Functions

Azure offers several tools and techniques to monitor Durable Functions, providing insights into their execution, performance, and overall health. Understanding and utilizing these tools is essential for proactively identifying and addressing potential issues.

- Azure Application Insights: Application Insights is a powerful application performance management (APM) service. It collects telemetry data from your Durable Functions, including requests, dependencies, exceptions, and performance metrics. It provides features such as:

- Live Metrics Stream: Real-time monitoring of function execution, allowing you to observe function performance and resource consumption as they occur.

- Performance Charts: Visualizations of function execution times, throughput, and error rates.

- Dependency Mapping: Visual representation of the interactions between your Durable Functions and external services.

- Failure Analysis: Automated detection and analysis of exceptions and errors, helping you quickly identify the root cause of issues.

Application Insights integrates seamlessly with Durable Functions, automatically collecting and displaying key metrics. For instance, you can monitor the number of function executions, execution duration, and the number of failed function calls.

- Azure Monitor: Azure Monitor provides a unified monitoring experience across all Azure services. It collects metrics and logs from your Durable Functions, allowing you to:

- Create Alerts: Set up alerts based on specific metrics, such as function execution time exceeding a threshold or the number of failed executions.

- Build Dashboards: Customize dashboards to visualize key performance indicators (KPIs) for your Durable Functions.

- Analyze Logs: Use log queries to investigate function execution details, including input parameters, output values, and any errors that occurred.

Azure Monitor integrates with Application Insights, providing a consolidated view of your function’s health and performance. For example, you can use Azure Monitor to create an alert that triggers when the average execution time of an activity function exceeds a defined limit, indicating a potential performance bottleneck.

- Azure Storage Explorer: While not a direct monitoring tool, Azure Storage Explorer can be used to inspect the internal storage used by Durable Functions, providing insights into orchestration state and history. This can be helpful for understanding the state of long-running orchestrations and diagnosing issues.

- Function App Logs: Function App logs provide detailed information about function executions, including function triggers, activity function calls, and any errors or exceptions that occur. These logs are essential for debugging and troubleshooting issues within your functions.

Methods for Debugging Durable Function Orchestrations

Debugging Durable Function orchestrations requires a different approach than debugging standard functions due to their distributed and stateful nature. Several methods can be employed to effectively debug these applications.

- Local Debugging: Debugging Durable Functions locally allows you to step through your code and inspect variables as your functions execute.

- Visual Studio Code with Azure Functions Extension: This provides a comprehensive debugging experience, allowing you to set breakpoints, step through code, and inspect variables.

- Azure Functions Core Tools: These tools can be used to run and debug your functions locally.

- Remote Debugging: Remote debugging allows you to debug your functions running in Azure.

- Visual Studio: Visual Studio provides remote debugging capabilities, allowing you to connect to your function app running in Azure and debug your functions.

- Azure Portal: The Azure portal allows you to monitor and debug your function apps, including the ability to view logs and metrics.

- Logging and Tracing: Implementing robust logging and tracing is crucial for debugging Durable Functions.

- Structured Logging: Use structured logging to log relevant information, such as function input parameters, output values, and any errors or exceptions that occur.

- Activity Function Context: Access the activity function context to obtain the activity function’s input and output.

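- Orchestration Context: Use the orchestration context to log the orchestration state. Because orchestrator code is replayed, guard log statements with the context's replay flag (or use a replay-safe logger) so that the same entry is not written on every replay.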

- Using the Durable Functions History: Durable Functions maintains a history of all orchestration events. This history can be used to reconstruct the state of an orchestration and diagnose issues. This history is accessible through:

- Application Insights: Application Insights provides access to the function execution history, allowing you to view the events that occurred during an orchestration.

- Azure Storage Explorer: Azure Storage Explorer can be used to view the internal storage used by Durable Functions, including the orchestration history.
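Orchestrator code is re-executed during replay, so naive logging inside an orchestrator produces duplicate entries. The sketch below shows one way to combine structured logging with a replay guard. `FakeContext` and `log_once` are hypothetical helpers for illustration; the only assumption about the real context object is that it exposes an `is_replaying` flag and an `instance_id`.

```python
import logging
from dataclasses import dataclass


@dataclass
class FakeContext:
    """Stand-in for an orchestration context (hypothetical, for illustration)."""
    instance_id: str
    is_replaying: bool


def log_once(context, logger: logging.Logger, message: str, **fields) -> bool:
    """Emit a structured log line unless the orchestrator is replaying.

    Returns True when a line was written.
    """
    if context.is_replaying:
        return False
    details = " ".join(f"{key}={value!r}" for key, value in sorted(fields.items()))
    logger.info("%s instance_id=%s %s", message, context.instance_id, details)
    return True


logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("orchestrator")

# The first call is skipped (replay); only the second writes a line.
log_once(FakeContext("abc123", is_replaying=True), logger, "activity scheduled", name="Encode")
log_once(FakeContext("abc123", is_replaying=False), logger, "activity scheduled", name="Encode")
```

Including the instance ID in every entry makes it straightforward to filter the logs of a single orchestration when diagnosing an issue.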

Interpreting Logs and Metrics for Troubleshooting

Effective troubleshooting of Durable Functions relies on the ability to interpret logs and metrics to identify and resolve issues. Understanding the various types of logs and metrics and how to analyze them is essential.

- Log Analysis: Analyzing logs is a critical part of troubleshooting Durable Functions.

- Function App Logs: Examine the Function App logs for detailed information about function executions, including function triggers, activity function calls, and any errors or exceptions that occur.

- Application Insights Logs: Utilize Application Insights logs to view the execution history of your orchestrations and activity functions.

- Error Analysis: Identify and analyze errors and exceptions in the logs to understand the root cause of issues.

- Metric Analysis: Metrics provide valuable insights into the performance and health of your Durable Functions.

- Execution Time: Monitor the execution time of your functions to identify performance bottlenecks.

- Throughput: Track the throughput of your functions to understand how many executions are being processed.

- Error Rate: Monitor the error rate to identify and address issues that are causing failures.

- Common Issues and Their Resolution:

- Timeouts: Timeouts can occur if activity functions take too long to execute. Increase the timeout settings or optimize the activity function code.

- Exceptions: Exceptions can occur in activity functions or orchestrator functions. Analyze the logs to identify the cause of the exception and fix the code.

- Performance Bottlenecks: Performance bottlenecks can occur in activity functions or orchestrator functions. Optimize the code or scale the function app to improve performance.
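The metric analysis described above boils down to a few aggregates over execution records. The following sketch computes them from a list of dictionaries; the record shape (`name`, `duration_ms`, `success`) is an assumption made for this example and does not correspond to a specific Application Insights schema.

```python
import math
from statistics import mean
from typing import Dict, Iterable, List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records: Iterable[Dict]) -> Dict[str, float]:
    """Compute count, error rate, mean and p95 duration for non-empty records."""
    rows = list(records)
    durations = [row["duration_ms"] for row in rows]
    failures = sum(1 for row in rows if not row["success"])
    return {
        "count": len(rows),
        "error_rate": failures / len(rows),
        "mean_ms": mean(durations),
        "p95_ms": percentile(durations, 95),
    }


records = [
    {"name": "Encode", "duration_ms": 120, "success": True},
    {"name": "Encode", "duration_ms": 150, "success": True},
    {"name": "Encode", "duration_ms": 900, "success": False},
    {"name": "Encode", "duration_ms": 130, "success": True},
]
summary = summarize(records)
print(summary["error_rate"], summary["p95_ms"])  # 0.25 900
```

Here the mean (325 ms) hides the single slow, failing execution that the 95th percentile exposes, which is why tail percentiles are usually more useful than averages for spotting timeouts.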

Scaling and Performance Considerations

Durable Functions, built on Azure Functions, inherently benefit from the platform’s scalability. Understanding how to optimize function performance and leverage scaling capabilities is crucial for building robust and efficient applications. This section delves into the scaling mechanisms, performance characteristics, and optimization strategies specific to Durable Functions.

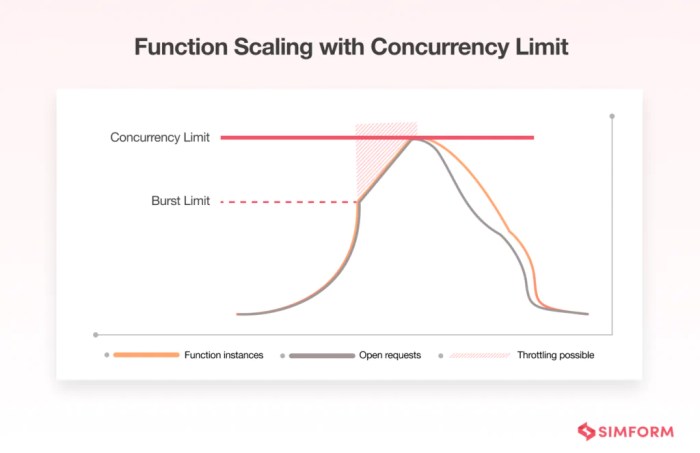

Scaling Capabilities of Durable Functions in Azure

Azure Functions, and consequently Durable Functions, offer automatic scaling. The underlying infrastructure dynamically adjusts resources based on incoming requests, ensuring optimal performance and cost-effectiveness.

Azure Functions scaling is primarily governed by the following factors:

- Event Hubs (or other event sources): For functions triggered by event sources like Event Hubs, the number of instances scales based on the volume of events.

- HTTP Triggers: HTTP-triggered functions scale based on the number of concurrent requests. Azure Functions automatically provisions more instances to handle increased load.

- Execution Time and Concurrency: Longer execution times or increased concurrency can impact scaling. Azure Functions attempts to scale out to accommodate these factors, but resource limitations may apply.

- Resource Limits: Each Azure Functions app has resource limits, such as memory and CPU usage. Reaching these limits can restrict scaling.

The scaling behavior of Durable Functions is influenced by the chosen orchestration pattern. For instance, fan-out/fan-in patterns can lead to rapid instance creation, while more sequential patterns might have a more gradual scaling profile. Monitoring and proper configuration of the function app are critical for effective scaling. For example, you can configure the maximum number of instances allowed for your function app, preventing runaway costs in the event of unexpected load spikes.

In a real-world scenario, consider a data processing pipeline using Durable Functions. If the input data volume doubles, Azure Functions will automatically scale the number of function instances to process the increased workload.

Performance Comparison of Orchestration Patterns

Different orchestration patterns exhibit varying performance characteristics. Understanding these differences is crucial for selecting the most appropriate pattern for a given workload.

- Fan-out/Fan-in: This pattern is generally highly performant for parallel processing. The orchestrator quickly schedules multiple activity functions to run concurrently. However, the orchestrator itself can become a bottleneck if the number of activities is extremely large or if the activity functions have significant overhead.

- Chaining: Chaining involves a series of sequential activity functions. The overall performance is limited by the slowest activity function and the overhead of orchestrator calls between activities. This pattern is less performant than fan-out/fan-in for parallelizable tasks.

- Human Interaction: This pattern involves waiting for human interaction, which introduces significant latency. The performance is heavily dependent on the response time of the human participants.

- Monitor: The monitor pattern involves repeatedly checking a condition. Performance depends on the frequency of the checks and the complexity of the condition. Frequent checks can consume resources, while infrequent checks can increase latency.

The choice of pattern should align with the application’s requirements. For computationally intensive tasks that can be parallelized, the fan-out/fan-in pattern is often preferred. For tasks that must be performed sequentially, the chaining pattern is more suitable. Consider a scenario where you need to process a large dataset. The fan-out/fan-in pattern allows you to process the data in parallel, significantly reducing the overall processing time compared to a sequential chaining pattern.
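The difference between chaining and fan-out/fan-in can be made concrete with a simple latency model. This is a back-of-the-envelope sketch, not a benchmark: it ignores orchestrator and queueing overhead, and `chaining_latency`, `fan_out_latency`, and `max_parallel` are names introduced here for illustration.

```python
import heapq
from typing import List


def chaining_latency(durations: List[float]) -> float:
    """Sequential chain: each activity starts when the previous one ends."""
    return sum(durations)


def fan_out_latency(durations: List[float], max_parallel: int) -> float:
    """Fan-out/fan-in: run up to max_parallel activities at once.

    Activities start in list order on whichever worker frees up first;
    the result is the time at which the last one finishes.
    """
    if max_parallel < 1:
        raise ValueError("max_parallel must be at least 1")
    workers = [0.0] * min(max_parallel, max(len(durations), 1))
    heapq.heapify(workers)
    for duration in durations:
        free_at = heapq.heappop(workers)        # earliest available worker
        heapq.heappush(workers, free_at + duration)
    return max(workers)


durations = [4.0, 2.0, 3.0, 1.0]
print(chaining_latency(durations))    # 10.0
print(fan_out_latency(durations, 2))  # 5.0
print(fan_out_latency(durations, 4))  # 4.0
```

With unlimited parallelism the fan-out latency approaches the duration of the slowest activity, whereas the chain always pays the sum of all durations.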

Optimizing Durable Function Performance

Optimizing Durable Function performance involves several key strategies, including efficient code, proper configuration, and resource management.

- Efficient Activity Functions: Activity functions should be designed to execute quickly and efficiently. Optimize the code within activity functions to minimize execution time. This includes using efficient algorithms, minimizing I/O operations, and avoiding unnecessary computations.

- Orchestrator Code Optimization: Orchestrator code should be lightweight and focused on scheduling and coordinating activities. Avoid performing heavy computations or complex logic within the orchestrator itself.

- Data Handling: Minimize the amount of data passed between activities and the orchestrator. Consider using data compression techniques or storing large data sets in Azure Blob Storage.

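- Concurrency Limits: Configure concurrency limits for activity functions to control the number of concurrent executions. In host.json, the `maxConcurrentActivityFunctions` and `maxConcurrentOrchestratorFunctions` settings under `extensions.durableTask` cap how many are processed at once on each instance. This can help prevent resource exhaustion and improve overall performance.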

- Monitoring and Logging: Implement robust monitoring and logging to identify performance bottlenecks and errors. Use Azure Monitor to track metrics such as execution time, function invocations, and resource utilization.

- Azure Storage Configuration: Durable Functions use Azure Storage for state management. Optimize Azure Storage configuration for performance. This includes using the appropriate storage account tier and enabling read-access geo-redundant storage (RA-GRS) for high availability.

- Code Profiling: Use profiling tools to identify performance bottlenecks within your code. This can help you pinpoint areas where optimization is needed.

Consider a scenario where an activity function is performing a database query. To optimize this, you could:

- Optimize the SQL query for faster execution.

- Use caching to reduce the number of database calls.

- Implement connection pooling to minimize connection overhead.

By applying these optimization techniques, you can significantly improve the performance of Durable Functions and build more responsive and scalable applications.

Advanced Topics and Best Practices

This section delves into advanced aspects of Durable Functions, equipping developers with the knowledge to build sophisticated, resilient, and scalable applications. We will explore Durable Entities for stateful workflows, integration with other Azure services, and best practices to avoid common pitfalls. The goal is to empower developers to leverage the full potential of Durable Functions for complex, real-world scenarios.

Using Durable Entities for Stateful Workflows

Durable Entities offer a powerful mechanism for managing stateful workflows within Durable Functions. They allow for the creation of stateful objects that can be accessed and modified concurrently, providing a robust alternative to traditional orchestration patterns when dealing with long-running, stateful computations. Durable Entities are essentially “actors” that encapsulate state and behavior, reacting to messages (operations) sent to them.

- Entity Definition: An entity is defined by a class or function that implements the entity interface. This definition includes the entity’s state (variables) and the operations it supports (methods).

- Entity Operations: Operations are the methods that can be invoked on an entity. Each operation defines a specific action to be performed on the entity’s state. These operations are invoked through entity client functions.

- Entity Client Functions: These are functions that interact with entities. They can read the state of an entity, send operations to modify its state, or signal events to an entity.

- Concurrency and Consistency: Durable Entities ensure atomicity and consistency. Operations on an entity are executed serially, preventing race conditions and ensuring that the entity’s state is always consistent.
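The serial execution guarantee described above is easiest to see in a toy model. The class below is not the Durable Entities API; `CounterEntity`, `signal`, and `drain` are hypothetical names. It shows the essential shape: callers enqueue operations, and the entity applies them one at a time in arrival order, so no two operations ever touch the state concurrently.

```python
from collections import deque
from typing import Deque, Optional, Tuple


class CounterEntity:
    """Toy entity: private state plus a mailbox of pending operations."""

    def __init__(self) -> None:
        self.state = 0
        self._mailbox: Deque[Tuple[str, Optional[int]]] = deque()

    def signal(self, operation: str, value: Optional[int] = None) -> None:
        """Enqueue an operation; the caller does not wait for a result."""
        self._mailbox.append((operation, value))

    def drain(self) -> None:
        """Apply queued operations one at a time, in arrival order."""
        while self._mailbox:
            operation, value = self._mailbox.popleft()
            if operation == "add":
                self.state += value or 0
            elif operation == "reset":
                self.state = 0
            else:
                raise ValueError(f"unknown operation: {operation}")


counter = CounterEntity()
counter.signal("add", 10)
counter.signal("add", 5)
counter.signal("reset")
counter.signal("add", 3)
counter.drain()
print(counter.state)  # 3
```

Because signals are only queued, a read issued immediately after a signal may still observe the previous state; callers that need the updated value have to wait for the operation to be processed.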

An example of a simple Durable Entity in C# representing a counter might look like this:

```csharp
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class CounterEntity
{
    [FunctionName("Counter")]
    public static void Run([EntityTrigger] IDurableEntityContext ctx)
    {
        switch (ctx.OperationName)
        {
            case "add":
                int amount = ctx.GetInput<int>();
                ctx.SetState(ctx.GetState<int>() + amount);
                break;
            case "reset":
                ctx.SetState(0);
                break;
            case "get":
                ctx.Return(ctx.GetState<int>());
                break;
        }
    }
}
```

In this example:

- `add`, `get`, and `reset` are the operations.

- `ctx.GetState<int>()` retrieves the current counter value.

- `ctx.SetState()` updates the counter value.

- `ctx.Return()` returns the counter value.

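A client function to interact with this entity would use the `IDurableEntityClient` to send operations:

```csharp
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Extensions.Logging;

public static class CounterClient
{
    [FunctionName("CounterClient")]
    public static async Task Run(
        [HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequestMessage req,
        [DurableClient] IDurableEntityClient client,
        ILogger log)
    {
        var entityId = new EntityId("Counter", "myCounter");

        // Signals are one-way: the call returns once the message is enqueued.
        await client.SignalEntityAsync(entityId, "add", 10); // Increment by 10

        // The read may not yet reflect the signal sent above.
        EntityStateResponse<int> current = await client.ReadEntityStateAsync<int>(entityId);
        if (current.EntityExists)
        {
            log.LogInformation($"Counter value: {current.EntityState}");
        }
    }
}
```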

Demonstrating Integration of Durable Functions with Other Azure Services

Integrating Durable Functions with other Azure services enhances their capabilities and allows for building more complex, event-driven applications. The integration typically uses triggers and bindings to connect Durable Functions with services such as Event Hubs and Service Bus, so that a function app can absorb large volumes of events, process messages, and start workflows in response to external events.

- Event Hubs Integration: Durable Functions can be triggered by events from Event Hubs. This is useful for processing high-volume data streams.

- Service Bus Integration: Durable Functions can interact with Service Bus queues and topics. This enables scenarios like message-driven workflows and asynchronous communication.

- Azure Storage Integration: Durable Functions can use Azure Storage for storing state, data, and results.

Consider an example of integrating Durable Functions with Event Hubs. A function can be triggered by events from an Event Hub, and the function can then start a Durable Function orchestration. This allows for real-time processing of events and the execution of complex workflows based on the data received from Event Hubs.

```csharp
using System.Text;
using System.Threading.Tasks;
using Microsoft.Azure.EventHubs;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class EventHubStarter
{
    [FunctionName("EventHubTrigger")]
    public static async Task Run(
        [EventHubTrigger("my-event-hub", Connection = "EventHubConnectionAppSetting")] EventData[] events,
        [DurableClient] IDurableClient starter)
    {
        foreach (EventData eventData in events)
        {
            string message = Encoding.UTF8.GetString(
                eventData.Body.Array, eventData.Body.Offset, eventData.Body.Count);

            // Start a new orchestration instance. The explicit <string> selects the
            // overload that treats `message` as input rather than as an instance ID.
            string instanceId = await starter.StartNewAsync<string>("MyOrchestration", message);
        }
    }
}
```

In this example:

- `EventHubTrigger` is an Event Hub trigger function that listens for events from an Event Hub.

- When an event is received, it starts a new Durable Function orchestration named `MyOrchestration`.

- The event data is passed as input to the orchestration.

For Service Bus integration, a function triggered by a Service Bus queue message can start an orchestration in the same way.

```csharp
using System.Text;
using System.Threading.Tasks;
using Microsoft.Azure.ServiceBus;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class ServiceBusTrigger
{
    [FunctionName("ServiceBusTrigger")]
    public static async Task Run(
        [ServiceBusTrigger("my-queue", Connection = "ServiceBusConnectionAppSetting")] Message message,
        [DurableClient] IDurableClient starter)
    {
        string messageBody = Encoding.UTF8.GetString(message.Body);

        // Start a new orchestration instance. The explicit <string> selects the
        // input overload; without it, a string argument is treated as the instance ID.
        string instanceId = await starter.StartNewAsync<string>("MyOrchestration", messageBody);
    }
}
```

In this example:

- `ServiceBusTrigger` is a Service Bus trigger function that listens for messages from a Service Bus queue.

- When a message is received, it starts a new Durable Function orchestration named `MyOrchestration`.

- The message body is passed as input to the orchestration.
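
Both trigger functions start an orchestration named `MyOrchestration` without showing it. A minimal sketch of what such an orchestrator might look like follows, assuming the same in-process C# model used in this section. The activity name `ProcessMessage` and its trimming logic are hypothetical placeholders for whatever processing the message actually needs.

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class MessageWorkflow
{
    // Orchestrator started by the Event Hub and Service Bus trigger functions.
    [FunctionName("MyOrchestration")]
    public static async Task<string> RunOrchestrator(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        // Read the input that was supplied when the instance was started.
        string message = context.GetInput<string>();

        // The orchestrator only coordinates; the actual work runs in an activity.
        return await context.CallActivityAsync<string>("ProcessMessage", message);
    }

    // Hypothetical activity: normalizes the message text and returns it.
    [FunctionName("ProcessMessage")]
    public static string ProcessMessage([ActivityTrigger] string message)
    {
        return (message ?? string.Empty).Trim().ToUpperInvariant();
    }
}
```

Keeping I/O and computation in the activity leaves the orchestrator free of side effects, which matters because orchestrator code is replayed whenever the instance resumes.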

Detailing the Common Pitfalls and Best Practices for Developing Robust Durable Function Applications

Developing robust Durable Function applications requires careful planning and attention to detail. Understanding and avoiding common pitfalls, while adhering to best practices, is crucial for building reliable and maintainable solutions. This includes aspects like handling exceptions, managing state, and optimizing performance.

- Exception Handling: Handle exceptions in both orchestrator and activity functions. Use try-catch blocks in the orchestrator to react to failed activities, and retry operations when the failure is likely to be transient. The `RetryOptions` class, passed to `CallActivityWithRetryAsync`, configures a retry policy declaratively.

- State Management: Carefully manage state in orchestrations. Avoid storing large amounts of data directly in orchestration variables. Use durable entities or external storage (e.g., Azure Blob Storage, Cosmos DB) for large data sets.

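- Idempotency: Design activity functions to be idempotent. Activities are executed with at-least-once semantics, so the same activity can run more than once after a retry or a host restart, and a repeated run must not cause unintended side effects. This is particularly important when interacting with external systems.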

- Versioning: Implement versioning for orchestrations to allow for updates without breaking existing workflows. This can be achieved by creating new orchestration functions with different names or using conditional logic within the orchestration.

- Monitoring and Logging: Implement comprehensive monitoring and logging. Use Application Insights to track function executions, identify performance bottlenecks, and diagnose errors. Log important events and data within your functions.

- Performance Optimization: Optimize for performance by minimizing the amount of data passed between activities and orchestrations. Consider using parallel execution for independent activities. Use durable entities to manage state efficiently.

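- Avoid Infinite Loops: Ensure that orchestrations do not contain unbounded loops. Every iteration adds to the orchestration history, so a loop that never ends grows that history without limit and incurs ongoing cost. Use explicit termination conditions, and for workflows that must run indefinitely, restart the instance with a fresh history by calling `ContinueAsNew`.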

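- Testing: Write unit and integration tests to verify the correctness of your Durable Function applications. The orchestration context and client are exposed as interfaces (`IDurableOrchestrationContext`, `IDurableOrchestrationClient`), so they can be replaced with mocks to simulate function executions and verify behavior.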
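
The performance advice above (run independent activities in parallel and keep the data passed between functions small) might look like the following fan-out/fan-in sketch. The function names `ProcessAll` and `ProcessItem` are hypothetical, as is the idea that the input is a list of short item names rather than the items themselves.

```csharp
using System.Linq;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class ParallelWorkflow
{
    [FunctionName("ProcessAll")]
    public static async Task<int> RunOrchestrator(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        // The input carries small references (names), not the data they point to.
        string[] itemNames = context.GetInput<string[]>() ?? new string[0];

        // Fan out: schedule one activity per item without awaiting each in turn.
        Task<int>[] tasks = itemNames
            .Select(name => context.CallActivityAsync<int>("ProcessItem", name))
            .ToArray();

        // Fan in: resume once every activity has completed.
        int[] results = await Task.WhenAll(tasks);
        return results.Sum();
    }

    // Hypothetical activity: returns a small summary value rather than bulk data.
    [FunctionName("ProcessItem")]
    public static int ProcessItem([ActivityTrigger] string itemName)
    {
        // Placeholder for real work; the "result" here is just the name length.
        return itemName.Length;
    }
}
```

Returning a small summary from each activity keeps the orchestration history compact, since every activity result is persisted there.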

For example, a common pitfall is not handling exceptions properly. If an activity function fails and the orchestrator does not catch the resulting exception, the orchestration instance ends in the `Failed` state.

```csharp
try
{
    // Call an activity function
    await context.CallActivityAsync("MyActivity", input);
}
catch (Exception ex)
{
    // Handle the exception (e.g., retry, log, or compensate)
    // Example: record the error in the instance's custom status
    context.SetCustomStatus($"Activity failed: {ex.Message}");
    throw; // Re-throw to propagate the error
}
```

This example wraps the activity function call in a `try-catch` block so that the orchestrator can react to the failure.

Re-throwing the exception marks this orchestration as failed and, if it was started as a sub-orchestration, lets the parent orchestrator handle the error.
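
For transient failures, a retry policy can replace some of the hand-written `try-catch` logic. The sketch below assumes the Durable Functions 2.x in-process API (`RetryOptions` and `CallActivityWithRetryAsync`). The orchestrator name `RetryingOrchestration` and the interval and attempt values are illustrative assumptions, not recommendations.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class RetryingWorkflow
{
    // Illustrative policy: at most 3 attempts, first retry after 5 seconds,
    // with the wait doubling before each further retry.
    public static RetryOptions TransientRetry => new RetryOptions(
        firstRetryInterval: TimeSpan.FromSeconds(5),
        maxNumberOfAttempts: 3)
    {
        BackoffCoefficient = 2.0
    };

    [FunctionName("RetryingOrchestration")]
    public static async Task Run(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        string input = context.GetInput<string>();
        try
        {
            // The runtime re-schedules "MyActivity" according to the policy.
            await context.CallActivityWithRetryAsync("MyActivity", TransientRetry, input);
        }
        catch (FunctionFailedException ex)
        {
            // Reached only after every attempt has failed.
            context.SetCustomStatus($"Activity failed after retries: {ex.Message}");
            throw;
        }
    }
}
```

Because a retried activity may already have run partially, this approach works best when the activity is idempotent.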

Concluding Thoughts

In conclusion, Durable Functions change how stateful serverless applications are built on Azure. They cover workflow orchestration, state management, and recovery from failures, which makes them a practical toolkit for scalable and maintainable solutions. Developers who understand the concepts and patterns discussed here can build applications that are robust and that adapt readily to evolving business needs.

FAQ Insights

What are the key differences between Durable Functions and regular Azure Functions?

Regular Azure Functions are stateless and designed for short-lived tasks. Durable Functions, on the other hand, are stateful, allowing for the creation of long-running, orchestrated workflows that can manage state, handle complex logic, and integrate with other Azure services.

What are the common orchestration patterns supported by Durable Functions?

Durable Functions support several orchestration patterns, including function chaining, fan-out/fan-in, async HTTP APIs, monitors, human interaction, and aggregation with durable entities. These patterns allow developers to model complex processes and manage dependencies effectively.

How does Durable Functions handle state management?

Durable Functions manage state through event sourcing. The orchestration engine appends each step of an orchestration to a history that is stored, by default, in Azure Storage. When an instance resumes, the orchestrator code is replayed against that history to rebuild its local state, so execution continues from the last checkpoint after a restart or failure.

What are Durable Entities and how do they differ from orchestration functions?

Durable Entities provide a way to manage small, explicitly addressed pieces of state within a Durable Function application. Each entity is identified by an entity ID and is read or modified through named operations, which are processed one at a time. Orchestrator functions, by contrast, hold their state implicitly in local variables and control flow.

How can I monitor and debug Durable Function orchestrations?

Each orchestration instance exposes its runtime status, custom status, and history through the orchestration client and the built-in HTTP management API. Azure Monitor and Application Insights add telemetry for tracking executions, identifying performance bottlenecks, and troubleshooting errors, and the history stored in Azure Storage can be inspected directly.