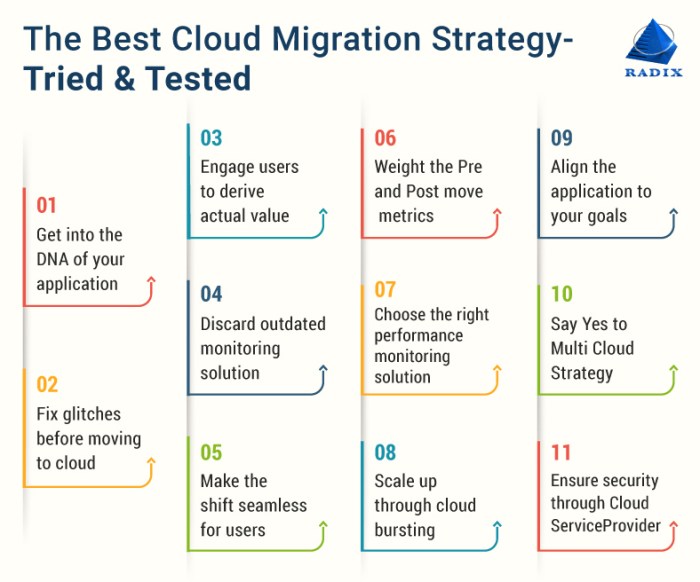

Cloud migration, the strategic transfer of data, applications, and IT processes to a cloud computing environment, presents a transformative opportunity for organizations. However, the complexity of this undertaking necessitates a meticulously planned approach. This document provides a comprehensive analysis of the critical steps involved in ensuring a successful cloud migration project, from initial planning and provider selection to post-migration optimization and ongoing management.

Success hinges on a deep understanding of pre-migration assessments, including application dependencies and infrastructure analysis, alongside the strategic selection of the right cloud provider. The process encompasses data and application migration techniques, security and compliance considerations, cost optimization strategies, and robust network and connectivity configurations. Furthermore, this document emphasizes the importance of monitoring and management, training and skill development, and the crucial role of change management in navigating this complex transition.

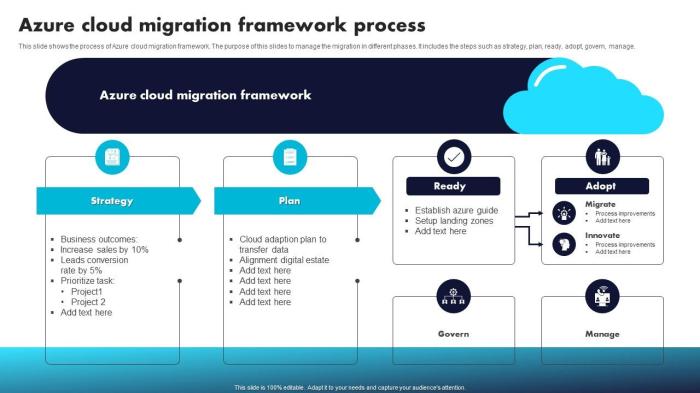

Planning and Strategy

Effective cloud migration depends on meticulous planning and a well-defined strategy. An approach that combines thorough assessment, phased implementation, and structured risk management minimizes disruptions, improves resource allocation, and reduces the likelihood of surprises during the transition.

Essential Pre-Migration Assessments

Before embarking on a cloud migration, a comprehensive assessment of the existing environment is paramount. This involves a detailed examination of application dependencies and infrastructure components.

- Application Dependency Mapping: Identifying interdependencies between applications is crucial. This involves mapping how different applications interact with each other, including data flows and communication protocols. Techniques such as static code analysis, dynamic tracing, and network traffic analysis can uncover these relationships. For example, dependency mapping for a retail application might reveal that the front-end website relies on the inventory management system, the payment gateway, and the customer database. Failure to account for these dependencies can lead to application downtime and data loss during the migration. The output of this process is typically a dependency graph that visually represents how the applications are interconnected.

- Infrastructure Analysis: A thorough analysis of the existing infrastructure is essential. This involves assessing hardware resources (CPU, memory, storage), network configurations (bandwidth, latency), and security protocols. Metrics like CPU utilization, memory usage, and storage I/O are critical in determining the optimal cloud instance sizes and configurations. This analysis also includes identifying the current operating systems, database versions, and middleware components. For instance, if an organization is running an on-premises database server with high I/O requirements, the cloud migration strategy might involve choosing a cloud database service optimized for performance.

- Performance Baseline: Establishing performance baselines is a critical component. This involves measuring key performance indicators (KPIs) such as response times, transaction throughput, and error rates before migration. These baselines serve as a benchmark for comparison after the migration, allowing for the identification of performance degradation or improvement. Tools like application performance monitoring (APM) and network monitoring systems are valuable for collecting and analyzing these metrics.
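A dependency graph can also suggest an order of migration. The sketch below is a minimal illustration that assumes the dependencies from the retail example are already known; the application names are hypothetical. It uses Python's standard `graphlib` module to group applications into waves so that every application's dependencies fall in an earlier wave. Migrating dependencies first is only one possible heuristic, and applications that form a dependency cycle (which makes `prepare()` raise `graphlib.CycleError`) are usually better moved together.

```python
from graphlib import TopologicalSorter

# Hypothetical map for the retail example: each key depends on the apps in its set.
dependencies: dict[str, set[str]] = {
    "frontend": {"inventory", "payments", "customer_db"},
    "inventory": set(),
    "payments": set(),
    "customer_db": set(),
}


def migration_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group applications into waves; each app's dependencies land in earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # apps whose dependencies are all placed
        waves.append(ready)
        sorter.done(*ready)
    return waves


print(migration_waves(dependencies))  # [['customer_db', 'inventory', 'payments'], ['frontend']]
```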

Designing a Phased Migration Approach

A phased migration approach minimizes risks and disruptions by allowing for incremental deployments and testing. This strategy often involves pilot programs and iterative rollouts.

- Pilot Programs: Pilot programs involve migrating a small subset of applications or a specific department to the cloud first. This provides an opportunity to test the migration process, identify potential issues, and refine the migration strategy before a full-scale rollout. The pilot should be chosen carefully so that it represents the broader application portfolio while remaining low-risk. For example, migrating a non-critical application, such as a document management system, can provide valuable insights without impacting core business operations. Lessons from the pilot then inform adjustments to the migration plan, tooling, and training.

- Iterative Rollouts: Once the pilot program is successful, an iterative rollout strategy is employed. This involves migrating applications in phases, prioritizing those that are less critical or easier to migrate, which allows for continuous learning and improvement throughout the migration process. For example, an organization might migrate its development and testing environments first, followed by less critical production applications, and finally its core business applications. Each phase should include thorough testing and validation to ensure that the migrated applications are functioning correctly.

- Data Migration Strategy: The data migration strategy is a crucial part of the phased approach. This includes selecting the appropriate data migration method (e.g., online, offline, or hybrid) and planning for data validation and reconciliation. The choice of method depends on factors such as data volume, network bandwidth, and downtime tolerance. For example, for very large datasets or constrained network links, offline migration using physical transfer appliances might be the preferred approach.
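Whether an online transfer is practical largely comes down to how long the data would take to cross the network. The function below is a back-of-the-envelope sketch, assuming decimal units (1 TB = 10^12 bytes, 1 Mbps = 10^6 bits per second) and a hypothetical `utilization` factor for the share of the link available to migration traffic; real throughput also depends on protocol overhead, latency, and contention.

```python
def transfer_days(data_tb: float, bandwidth_mbps: float, utilization: float = 0.8) -> float:
    """Estimate the days needed to copy `data_tb` terabytes over a `bandwidth_mbps` link.

    `utilization` is an assumed fraction of the link usable for migration traffic.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    bits = data_tb * 10**12 * 8                      # terabytes -> bits
    effective_bps = bandwidth_mbps * 10**6 * utilization
    seconds = bits / effective_bps
    return seconds / 86_400                          # seconds -> days


print(round(transfer_days(100, 1000), 1))  # 11.6
```

For example, 100 TB over a 1 Gbps link at 80% utilization takes roughly 11.6 days of continuous transfer; if the schedule cannot absorb that, an offline appliance or a hybrid approach becomes more attractive.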

Creating a Risk Assessment Framework

A robust risk assessment framework is vital for identifying and mitigating potential challenges during the cloud migration process. This involves identifying risks, assessing their likelihood and impact, and developing mitigation strategies.

- Risk Identification: Identifying potential risks is the first step. This involves considering various aspects of the migration, including application compatibility, data security, network connectivity, and vendor lock-in. Common risks include application incompatibility with the cloud environment, data breaches during migration, network latency, and the potential for vendor-specific features to limit flexibility.

- Risk Assessment: Each identified risk should be assessed based on its likelihood of occurrence and its potential impact. This can be done using a risk matrix, which plots the likelihood and impact on a scale (e.g., low, medium, high). This helps prioritize risks and focus mitigation efforts on the most critical ones. For example, a risk with a high likelihood and high impact, such as data loss during migration, should be given the highest priority.

- Mitigation Strategies: Developing mitigation strategies for each identified risk is essential. This involves creating plans to reduce the likelihood or impact of the risk. For example, to mitigate the risk of data loss, strategies might include creating data backups, using data encryption, and implementing robust data validation processes. Other mitigation strategies include using multiple cloud providers to avoid vendor lock-in, designing for failure, and implementing automated monitoring and alerting systems.

- Example Risk: Data Loss During Migration. Likelihood: Medium; impact: High. Mitigation strategies include: 1) creating data backups before migration, 2) encrypting data in transit, and 3) thoroughly validating the data after migration to ensure its integrity. The effectiveness of these mitigation strategies should be continuously monitored.
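A risk matrix is often turned into a numeric priority by mapping each level to a number and multiplying likelihood by impact. The sketch below follows that common convention; the 1 to 3 scale is an assumption, and apart from the data-loss example from the text, the risks in the register are hypothetical.

```python
from dataclasses import dataclass

# Assumed ordinal scale for the risk matrix.
LEVELS = {"Low": 1, "Medium": 2, "High": 3}


@dataclass
class Risk:
    name: str
    likelihood: str
    impact: str

    @property
    def score(self) -> int:
        """Likelihood x impact on the assumed 1-3 scale (1 to 9)."""
        return LEVELS[self.likelihood] * LEVELS[self.impact]


def prioritize(risks: list[Risk]) -> list[tuple[str, int]]:
    """Return (risk name, score) pairs, highest score first."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score) for r in ranked]


register = [
    Risk("Data loss during migration", "Medium", "High"),      # example from the text
    Risk("Network latency after cutover", "Medium", "Medium"),  # hypothetical
    Risk("Vendor lock-in", "Low", "Medium"),                    # hypothetical
]
print(prioritize(register))
# [('Data loss during migration', 6), ('Network latency after cutover', 4), ('Vendor lock-in', 2)]
```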

Selecting the Right Cloud Provider

Choosing the appropriate cloud provider is a pivotal decision in a successful cloud migration. This selection significantly impacts cost, performance, security, and the long-term flexibility of the migrated workloads. A thorough comparative analysis of the major providers, along with a clear understanding of specific workload requirements and potential vendor lock-in implications, is crucial for informed decision-making.

Comparative Analysis of Major Cloud Providers

A comparative assessment of Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) reveals distinct strengths and weaknesses across various dimensions. This analysis considers cost structures, security features, and the breadth of services offered.

| Provider | Cost Model | Security Features | Key Services |
|---|---|---|---|
| AWS | Pay-as-you-go, Reserved Instances, Savings Plans. Complex pricing structure with numerous options. | IAM, KMS, VPC, Shield, GuardDuty. Mature security ecosystem with extensive compliance certifications. | EC2, S3, RDS, Lambda, DynamoDB, CloudFront. Wide range of services across compute, storage, database, and content delivery. |
| Azure | Pay-as-you-go, Reserved Instances, Azure Hybrid Benefit (for existing on-premises licenses). Pricing is generally competitive with AWS. | Microsoft Entra ID (formerly Azure Active Directory), Key Vault, Virtual Network, Microsoft Defender for Cloud (formerly Azure Security Center), Microsoft Sentinel. Strong integration with Microsoft’s existing security products. | Virtual Machines, Blob Storage, SQL Database, Azure Functions, Cosmos DB, CDN. Focus on hybrid cloud capabilities and integration with Windows-based environments. |
| GCP | Pay-as-you-go, Sustained Use Discounts, Committed Use Discounts. Often offers competitive pricing, particularly for sustained workloads. | Cloud IAM, Cloud KMS, VPC, Cloud Armor, Security Command Center. Emphasizes data security and advanced threat detection. | Compute Engine, Cloud Storage, Cloud SQL, Cloud Functions, Cloud Spanner, Cloud CDN. Strong in data analytics, machine learning, and Kubernetes. |

The cost of cloud services varies significantly depending on the specific services used, the region, and the commitment level. All three providers offer discounts in exchange for commitment or sustained usage (Reserved Instances and Savings Plans on AWS, Reserved Instances on Azure, and committed and sustained use discounts on GCP), which can significantly reduce expenses, and each provides cost management tools for estimating and tracking spend. All three also offer robust core security capabilities, including identity and access management (IAM), encryption, and network security, although the specific services and integrations differ.

The choice of provider will depend on the specific security requirements of the workload and the existing security infrastructure. The range of services also differs: AWS is generally regarded as offering the broadest catalog, Azure is strongest in hybrid cloud scenarios and Microsoft-centric environments, and GCP is particularly well known for data analytics and machine learning.

Criteria for Selecting the Optimal Cloud Provider

Selecting the optimal cloud provider requires a detailed assessment of the workload’s specific needs and organizational priorities. Several criteria should be considered, including technical requirements, cost considerations, security and compliance mandates, and operational capabilities.

- Workload Requirements: Analyze the specific needs of the workload. This includes compute, storage, database, and network requirements. Consider factors like performance, scalability, and availability. For example, a high-performance computing (HPC) workload might benefit from GCP’s specialized compute instances and network capabilities.

- Cost Optimization: Evaluate the total cost of ownership (TCO). This involves comparing the pricing models of different providers, including pay-as-you-go, reserved instances, and savings plans. Use cost management tools to estimate and monitor cloud spending.

- Security and Compliance: Assess the provider’s security features, compliance certifications, and data residency options. Choose a provider that meets the organization’s security and compliance requirements. For example, organizations subject to HIPAA in the United States must use a provider that will sign a Business Associate Agreement (BAA), and should keep protected health information within the services that agreement covers.

- Operational Capabilities: Evaluate the provider’s management tools, monitoring capabilities, and support services. Consider the provider’s ability to integrate with existing IT infrastructure and the ease of managing the cloud environment.

For instance, a financial institution might prioritize Azure due to its strong integration with existing Microsoft infrastructure and its comprehensive compliance certifications for financial services. A startup focused on data analytics might choose GCP for its advanced data processing and machine learning capabilities. Organizations should conduct proof-of-concept (POC) testing to evaluate the performance and cost of different cloud providers before making a final decision. This involves deploying a representative workload on each platform and measuring its performance, cost, and ease of management.
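The selection criteria and POC results are often combined into a weighted scoring matrix. The sketch below shows the arithmetic only: the criterion names mirror the list above, but the weights and the 1 to 5 scores for the anonymous "Provider A" and "Provider B" are hypothetical placeholders, not assessments of any real provider.

```python
# Hypothetical weights (must sum to 1) for the selection criteria.
WEIGHTS = {"workload_fit": 0.35, "cost": 0.25, "security_compliance": 0.25, "operations": 0.15}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion scores (e.g. 1-5) into one weighted score."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


# Placeholder scores; replace with results from the organization's own POC testing.
candidates = {
    "Provider A": {"workload_fit": 4, "cost": 3, "security_compliance": 5, "operations": 4},
    "Provider B": {"workload_fit": 5, "cost": 4, "security_compliance": 4, "operations": 3},
}
ranking = sorted(candidates, key=lambda p: weighted_score(candidates[p]), reverse=True)
print(ranking)  # ['Provider B', 'Provider A']
```

With these placeholder numbers, Provider A scores about 4.0 and Provider B about 4.2. Making the weights explicit lets stakeholders debate priorities separately from the measurements.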

Implications of Vendor Lock-in and Mitigation Strategies

Vendor lock-in refers to the situation where a customer becomes overly dependent on a single cloud provider, making it difficult and costly to migrate to another provider or to bring the workload back on-premises. Several strategies can be employed to avoid or mitigate the effects of vendor lock-in.

- Use Open Standards and Technologies: Employ open-source technologies and standards-based APIs to reduce dependencies on proprietary vendor solutions. This allows for greater portability and flexibility. For example, using containerization technologies like Docker and orchestration platforms like Kubernetes facilitates application portability across different cloud providers.

- Design for Portability: Architect applications to be portable across different cloud environments. This includes decoupling applications from specific vendor services and using infrastructure-as-code (IaC) tools to manage infrastructure deployments consistently.

- Multi-Cloud Strategy: Distribute workloads across multiple cloud providers to reduce dependency on a single vendor. This approach can improve resilience, optimize costs, and provide flexibility. A multi-cloud strategy requires careful planning and management to ensure seamless operation and to keep the added operational complexity under control.

- Hybrid Cloud Approach: Combine public cloud services with on-premises infrastructure. This allows organizations to leverage the benefits of both environments and retain control over sensitive data and workloads. Hybrid cloud solutions require robust integration and management capabilities.

- Regular Cost and Performance Monitoring: Continuously monitor the costs and performance of workloads across different cloud providers. This allows for proactive optimization and helps identify potential vendor lock-in issues early on. Regularly assess and compare the costs and performance of different services to ensure that the organization is getting the best value for its investment.
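One way to apply the "Design for Portability" principle is to have application code depend on a small, provider-neutral interface, with a separate adapter per provider. The sketch below assumes a hypothetical `ObjectStore` interface and shows only a local filesystem implementation; adapters wrapping each provider's SDK would implement the same methods but are not shown, so no vendor API is implied here.

```python
import tempfile
from pathlib import Path
from typing import Protocol


class ObjectStore(Protocol):
    """Provider-neutral storage interface that application code depends on (hypothetical)."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class LocalObjectStore:
    """Filesystem-backed implementation, e.g. for on-premises hosting or tests.

    Keys are treated as flat file names; keys containing '/' are not supported.
    """

    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def put(self, key: str, data: bytes) -> None:
        (self.root / key).write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self.root / key).read_bytes()


# Adapters for Amazon S3, Azure Blob Storage, or Google Cloud Storage would
# implement the same two methods using each provider's SDK (omitted here).


def archive_invoice(store: ObjectStore, invoice_id: str, pdf: bytes) -> str:
    """Business logic sees only the ObjectStore interface, never a vendor SDK."""
    key = f"invoice-{invoice_id}.pdf"
    store.put(key, pdf)
    return key


with tempfile.TemporaryDirectory() as tmp:
    store = LocalObjectStore(Path(tmp))
    saved_key = archive_invoice(store, "42", b"%PDF-1.7")
    restored = store.get(saved_key)
```

Switching providers then means writing and testing one new adapter rather than touching every call site.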

By implementing these strategies, organizations can reduce their dependence on a single cloud provider and maintain greater control over their IT infrastructure. For example, a company using a multi-cloud strategy might run its web application on AWS, its database on Azure, and its data analytics platform on GCP. This approach provides greater flexibility and reduces the risk of vendor lock-in.

Another example is a financial services company that uses a hybrid cloud approach, running its sensitive financial applications on-premises while leveraging public cloud services for less critical workloads. This approach allows the company to maintain compliance with regulatory requirements while taking advantage of the scalability and cost-effectiveness of the public cloud.

Data Migration Techniques

Data migration is a critical phase in cloud migration, involving the transfer of data from on-premises systems or other cloud environments to the target cloud infrastructure. The selection of the appropriate data migration technique significantly impacts the project’s success, influencing factors such as downtime, cost, and the ability to leverage cloud-native services. Careful consideration of the existing data landscape, business requirements, and technical capabilities is essential for selecting the most effective approach.

Data Migration Strategies

Several data migration strategies exist, each tailored to specific needs and scenarios. Understanding these strategies and their suitability is crucial for informed decision-making.

- Lift-and-Shift (Rehosting): This approach involves migrating data and applications with minimal changes to the existing architecture. The data is essentially “lifted” from the source environment and “shifted” to the cloud. This is often the fastest method for migrating data, as it requires the least amount of refactoring. However, it may not fully leverage the benefits of the cloud, such as scalability and cost optimization. It is best suited for scenarios where speed of migration is the priority and the existing application architecture is compatible with the cloud environment. For example, moving a legacy database server to a cloud-based virtual machine with minimal configuration changes is a lift-and-shift migration.

- Re-platforming: Re-platforming involves making some modifications to the existing application to take advantage of cloud-based services, but the core architecture remains largely unchanged. This might include migrating to a different database system or using managed services. This strategy offers a balance between speed and cloud optimization. It can improve performance and reduce operational overhead. For instance, migrating a database from an on-premises SQL Server to a cloud-based managed database service, such as Azure SQL Database or Amazon RDS, would be considered re-platforming.

- Re-architecting: Re-architecting involves redesigning and rewriting the application to fully leverage cloud-native features and services. This approach typically involves significant changes to the application’s architecture and data model. While the most complex and time-consuming strategy, re-architecting offers the greatest potential for optimization, scalability, and cost savings. It is suitable for applications that require significant performance improvements, scalability, or integration with cloud-native services. An example would be migrating a monolithic application to a microservices architecture running on a cloud platform, using cloud-native databases and message queues.

Ensuring Data Integrity and Security

Data integrity and security are paramount during data migration. Implementing robust measures is essential to protect data from loss, corruption, and unauthorized access.

- Encryption: Data encryption protects data both in transit and at rest, rendering it unreadable to unauthorized parties.

  - Encryption in Transit: This involves encrypting data as it is transferred between the source and target environments, typically with Transport Layer Security (TLS). TLS superseded the older Secure Sockets Layer (SSL) protocol, all versions of which are deprecated and insecure, so migration tooling should be configured to use TLS 1.2 or later. Encryption in transit protects data from interception during migration.

  - Encryption at Rest: This involves encrypting data stored in the cloud environment. Cloud providers support server-side encryption, in which the provider encrypts data as it is written using either provider-managed or customer-managed keys, and client-side encryption, in which data is encrypted before it leaves the customer’s environment and the customer manages the keys.

- Access Controls: Implementing robust access controls restricts access to data to authorized users and systems. Access controls should be implemented at various levels, including network, storage, and application layers.

  - Identity and Access Management (IAM): IAM systems, such as AWS IAM, Microsoft Entra ID (formerly Azure Active Directory), and Google Cloud IAM, are used to manage user identities and permissions. These systems allow administrators to define roles and grant access to specific resources based on the principle of least privilege.

  - Network Security Groups (NSGs) and Firewalls: NSGs and firewalls control network traffic and restrict access to cloud resources. These security measures help prevent unauthorized access to data by blocking malicious traffic.

- Data Masking and Anonymization: Where migrated data is copied into test, development, or analytics environments, sensitive data such as personally identifiable information (PII) should be masked or anonymized to protect privacy. Data masking replaces sensitive data with realistic but fictitious data, while anonymization removes or alters data to prevent the identification of individuals.

- Regular Auditing and Monitoring: Implement regular auditing and monitoring to track data access, changes, and potential security breaches. Cloud providers offer various auditing and monitoring tools, such as AWS CloudTrail, Azure Monitor, and Google Cloud Logging. These tools provide valuable insights into data migration activities and help identify and address security vulnerabilities.
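Two simple techniques often used when preparing non-production copies of data are partial masking (redacting most of a value) and keyed pseudonymization (replacing an identifier with a stable token). The sketch below uses only the Python standard library; the record layout and key are hypothetical. Note that keyed pseudonymization is not anonymization: under GDPR, pseudonymized data is still personal data, because whoever holds the key can re-link it.

```python
import hashlib
import hmac


def mask_email(email: str) -> str:
    """Redact all but the first character of the local part of an email address."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        raise ValueError(f"not an email address: {email!r}")
    return local[0] + "*" * (len(local) - 1) + "@" + domain


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with an HMAC-SHA256 token.

    The same value and key always give the same token, so joins across tables
    still line up; without the key, a guessed value cannot be checked against it.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder only: a real key would come from a key management service.
KEY = b"example-key-do-not-use"

row = {"customer_id": "C-1001", "email": "jane.doe@example.com", "order_total": 42.5}
masked_row = {
    **row,
    "customer_id": pseudonymize(row["customer_id"], KEY),
    "email": mask_email(row["email"]),
}
print(masked_row["email"])  # j*******@example.com
```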

Validating Data Migration Success

Validating data migration success ensures that the migrated data is accurate, complete, and consistent with the source data. A comprehensive validation process is crucial to minimize data-related issues and ensure business continuity.

- Data Comparison: This involves comparing data between the source and target environments to identify any discrepancies. Data comparison can be performed using various techniques, including:

  - Checksum Verification: Calculate checksums for data files or database tables in both the source and target environments. Compare the checksums to verify data integrity.

  - Row Count Verification: Compare the number of rows in tables or datasets in both the source and target environments. This verifies that all data has been migrated.

  - Data Sampling: Randomly sample data from the source and target environments and compare the values to ensure data accuracy.

- Functional Testing: This involves testing the functionality of the applications and services that use the migrated data. Functional testing ensures that the applications can access and process the data correctly.

  - User Acceptance Testing (UAT): Involve end-users in the validation process to ensure that the migrated data meets their business requirements.

  - Application Testing: Test the application’s functionality, performance, and security using the migrated data.

- Performance Testing: Evaluate the performance of the applications and services using the migrated data. Performance testing identifies any performance bottlenecks or issues that may arise after migration.

- Data Completeness Checks: Verify that all required data has been migrated to the target environment.

  - Missing Data Analysis: Identify and address any missing data or incomplete records.

  - Data Validation Rules: Implement data validation rules to ensure data consistency and accuracy.

- Error Logging and Reporting: Implement error logging and reporting mechanisms to track and resolve any data migration issues. Detailed logs provide valuable insights into the migration process and facilitate troubleshooting.

- Post-Migration Auditing: Conduct regular audits after migration to ensure data integrity and security. These audits should include data comparison, functional testing, and performance testing.
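Row count and checksum verification can be combined into one "fingerprint" per table. The sketch below uses in-memory SQLite databases as stand-ins for the source and target, with a hypothetical `customers` table. It orders rows by a key column so that differences in load order do not matter, and it assumes both sides return values as the same Python types; comparisons across different database engines usually need a normalization step first.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> tuple[int, str]:
    """Return (row count, SHA-256 hex digest of all rows ordered by `key`)."""
    # Identifiers are interpolated into SQL, so they must come from a trusted list.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key}").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
        digest.update(b"\n")
    return len(rows), digest.hexdigest()


def compare(src: sqlite3.Connection, tgt: sqlite3.Connection, table: str, key: str) -> dict[str, bool]:
    """Report whether row counts and checksums match between source and target."""
    src_count, src_hash = table_fingerprint(src, table, key)
    tgt_count, tgt_hash = table_fingerprint(tgt, table, key)
    return {"row_count_match": src_count == tgt_count, "checksum_match": src_hash == tgt_hash}


def make_db(rows: list[tuple[int, str]]) -> sqlite3.Connection:
    """In-memory stand-in for a source or target database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    return conn


source = make_db([(1, "Ada"), (2, "Grace"), (3, "Edsger")])
target_ok = make_db([(3, "Edsger"), (1, "Ada"), (2, "Grace")])   # same rows, different load order
target_bad = make_db([(1, "Ada"), (2, "Grace"), (3, "Edgser")])  # one corrupted value

print(compare(source, target_ok, "customers", "id"))   # {'row_count_match': True, 'checksum_match': True}
print(compare(source, target_bad, "customers", "id"))  # {'row_count_match': True, 'checksum_match': False}
```

The second comparison shows why row counts alone are not enough: the counts match, but the checksum exposes the corrupted value.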

Application Migration Approaches

Application migration represents a critical phase in cloud adoption, requiring a systematic approach to ensure application functionality, performance, and security in the target cloud environment. The chosen approach significantly impacts the overall project timeline, cost, and the level of application refactoring required. This section delves into the various methods for migrating applications to the cloud, focusing on compatibility assessment, containerization and orchestration, and refactoring strategies for cloud-native architectures.

Assessing Application Compatibility

Evaluating application compatibility with the target cloud environment is the foundational step in any migration strategy. This assessment determines the feasibility of different migration approaches and identifies potential challenges. To effectively assess application compatibility, a combination of code analysis and testing is essential:

- Code Analysis: Code analysis involves examining the application’s source code to identify dependencies, technology stack components, and potential compatibility issues with the target cloud environment. Static code analysis tools can automate this process, scanning the codebase for deprecated libraries, incompatible APIs, and security vulnerabilities. This analysis provides insights into the application’s architecture and identifies areas requiring modification.

- Testing: Testing validates the application’s functionality and performance in the target cloud environment. This includes functional testing to ensure all features work as expected, performance testing to measure response times and resource utilization, and security testing to identify and address potential vulnerabilities. Testing should be conducted throughout the migration process to ensure the application remains functional and secure.

For example, an application built on an older version of a Java runtime environment might require significant refactoring to run on a modern cloud platform that supports newer versions. Code analysis tools can quickly identify such dependencies, allowing the migration team to plan accordingly. Similarly, performance testing can reveal bottlenecks that need to be addressed through resource scaling or code optimization.
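The same kind of check can be sketched for a Python codebase. The example below uses the standard `ast` module to flag imports of modules that are unavailable on a target runtime without executing the code. The deny-list here (standard-library modules removed in Python 3.12) is illustrative only; a real assessment would rely on a maintained ruleset for the actual target platform.

```python
import ast

# Illustrative deny-list: standard-library modules removed in Python 3.12.
REMOVED_IN_TARGET = {"imp", "distutils", "asynchat", "asyncore", "smtpd"}


def find_removed_imports(source: str, removed: set[str] = REMOVED_IN_TARGET) -> list[tuple[int, str]]:
    """Return (line number, module) for each import of a module in `removed`."""
    findings: list[tuple[int, str]] = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names = [node.module]  # skip relative imports such as "from . import x"
        else:
            continue
        for name in names:
            top_level = name.split(".")[0]
            if top_level in removed:
                findings.append((node.lineno, top_level))
    return sorted(findings)


legacy = """
import os
import imp
from distutils.core import setup
from . import helpers
"""
print(find_removed_imports(legacy))  # [(3, 'imp'), (4, 'distutils')]
```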

Containerization and Orchestration

Containerization and orchestration are essential technologies for deploying and managing applications in the cloud. Containerization packages applications and their dependencies into isolated units, while orchestration automates the deployment, scaling, and management of these containers. Docker is a leading containerization platform that allows developers to package applications and their dependencies into containers. These containers are lightweight, portable, and consistent across different environments.

Kubernetes is a popular container orchestration platform that automates the deployment, scaling, and management of containerized applications. The process involves the following steps:

- Containerization with Docker: The application and its dependencies are packaged into a Docker image. A Dockerfile defines the instructions for building the image, including the base operating system, required libraries, and application code.

- Orchestration with Kubernetes: The Docker images are deployed and managed using Kubernetes. Kubernetes manages the containers, ensuring they are running and available, scaling them based on demand, and providing networking and storage services.

For example, consider a web application that uses a database. With containerization, the web application, the database, and their dependencies can each be packaged into separate containers. Kubernetes can then orchestrate these containers, ensuring that the web application and database are deployed and running, and that they can communicate with each other. This approach simplifies deployment, scaling, and management of the application.
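As one concrete case of "scaling them based on demand", the Kubernetes Horizontal Pod Autoscaler documentation describes its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping changes when the ratio is within a tolerance (0.1 by default) and respecting the configured minimum and maximum replica counts. The function below reproduces only that formula in simplified form. The real controller also accounts for pod readiness, missing metrics, stabilization windows, and multiple metrics, and the `max_replicas=10` default is an arbitrary example value.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: leave replicas unchanged
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # clamp to configured bounds


print(desired_replicas(3, 90, 60))   # 5  (90% CPU vs. a 60% target: scale out)
print(desired_replicas(4, 20, 60))   # 2  (mostly idle: scale in)
print(desired_replicas(4, 62, 60))   # 4  (within tolerance: no change)
print(desired_replicas(8, 100, 50))  # 10 (capped at max_replicas)
```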

Refactoring Applications for Cloud-Native Architectures

Refactoring applications for cloud-native architectures involves modifying the application’s design and implementation to take advantage of the benefits of the cloud, such as scalability, elasticity, and cost efficiency. This often involves breaking down monolithic applications into microservices and leveraging serverless functions. Microservices are small, independent services that communicate with each other over a network. Each microservice focuses on a specific business function and can be developed, deployed, and scaled independently.

Serverless functions are small pieces of code that execute in response to events, such as HTTP requests or database updates. The process typically includes:

- Decomposition: Breaking down a monolithic application into smaller, independent microservices. This involves identifying the different functional components of the application and separating them into individual services.

- Implementation: Implementing each microservice using appropriate technologies, such as programming languages, frameworks, and databases. Each microservice should be designed to be independently deployable and scalable.

- Deployment and Management: Deploying and managing the microservices using containerization and orchestration technologies, such as Docker and Kubernetes. The platform should provide features like auto-scaling, load balancing, and service discovery.

- Leveraging Serverless Functions: Utilizing serverless functions for specific tasks, such as processing events or handling API requests. This can reduce operational overhead and improve cost efficiency.

For example, an e-commerce application might be refactored into microservices for product catalog, shopping cart, order processing, and payment processing. Each microservice can be developed and deployed independently, allowing for faster development cycles and improved scalability. Serverless functions can be used to handle tasks like image resizing or sending email notifications.
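A serverless function for the email-notification task might look roughly like the sketch below. The `(event, context)` parameters mirror the handler convention of AWS Lambda's Python runtime, but the event fields, the returned structure, and the absence of an actual sending step are all assumptions for illustration; a deployed function would hand the message to an email or queueing service.

```python
from typing import Any

REQUIRED_FIELDS = ("order_id", "email", "total")


def handle_order_placed(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Turn a hypothetical 'order placed' event into an order-confirmation email.

    The function keeps no state between invocations, so the platform can run
    as many copies in parallel as there are incoming events.
    """
    missing = [field for field in REQUIRED_FIELDS if field not in event]
    if missing:
        return {"status": "rejected", "missing": missing}
    message = {
        "to": event["email"],
        "subject": f"Order {event['order_id']} confirmed",
        "text": f"Thank you for your order. Total: {event['total']:.2f}",
    }
    # A deployed function would pass `message` to an email or queueing service here.
    return {"status": "queued", "message": message}


print(handle_order_placed({"order_id": "A17", "email": "sam@example.com", "total": 19.5}))
```

Keeping the handler stateless and limited to a single task is what lets the platform scale it per event and bill only for execution time.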

Security and Compliance

Migrating to the cloud necessitates a robust approach to security and compliance, encompassing data protection, access control, and adherence to relevant regulations. This section details best practices, compliance considerations, and frameworks for monitoring and auditing cloud security, ensuring a secure and compliant cloud environment.

Security Best Practices for Cloud Environments

Implementing security best practices is crucial for protecting data and resources in the cloud. This involves a layered approach that integrates various security controls.

- Identity and Access Management (IAM): IAM establishes and manages user identities and access privileges, forming the cornerstone of cloud security. Effective IAM practices include:

- Principle of Least Privilege: Granting users only the minimum necessary access to perform their tasks. This limits the potential damage from compromised credentials.

- Multi-Factor Authentication (MFA): Requiring users to verify their identity through multiple factors (e.g., password and a one-time code) significantly reduces the risk of unauthorized access.

- Regular Access Reviews: Periodically reviewing user access privileges to ensure they remain appropriate and aligned with current job roles.

- Role-Based Access Control (RBAC): Assigning users to predefined roles with specific permissions, simplifying access management and ensuring consistency.

- Data Protection: Protecting data at rest and in transit is paramount. This involves:

- Encryption: Employing encryption to protect sensitive data. Encryption is applied both while data is stored (at rest) and when it is being transmitted (in transit).

- Data Loss Prevention (DLP): Implementing DLP solutions to monitor and prevent sensitive data from leaving the cloud environment.

- Data Backup and Recovery: Establishing regular data backups and a robust recovery plan to ensure business continuity in the event of data loss or disaster.

- Data Classification: Categorizing data based on its sensitivity and criticality, allowing for appropriate security controls to be applied.

- Network Security: Securing the network infrastructure within the cloud environment.

- Virtual Private Cloud (VPC) Segmentation: Isolating different parts of the cloud environment using VPCs to restrict network traffic and limit the impact of security breaches.

- Firewalls: Implementing firewalls to control network traffic and prevent unauthorized access.

- Intrusion Detection and Prevention Systems (IDS/IPS): Deploying IDS/IPS to detect and prevent malicious activity on the network.

- Vulnerability Management: Regularly identifying and addressing security vulnerabilities.

- Vulnerability Scanning: Conducting regular vulnerability scans to identify weaknesses in systems and applications.

- Patch Management: Applying security patches promptly to address identified vulnerabilities.

- Security Information and Event Management (SIEM): Utilizing SIEM systems to collect, analyze, and correlate security events from various sources. This aids in detecting and responding to security threats.
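To make the access-control principles above concrete, here is a minimal Python sketch of role-based access control with deny-by-default checks, plus a helper that supports access reviews. The role names, permission strings, and the `is_allowed` and `unused_permissions` helpers are hypothetical illustrations, not any cloud provider’s IAM API. Real deployments would use the provider’s identity and access management service.

```python
from dataclasses import dataclass, field

# Hypothetical roles, each mapped to the minimal "action:resource"
# permissions that job function needs (principle of least privilege).
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "viewer": frozenset({"read:reports"}),
    "analyst": frozenset({"read:reports", "read:customer_db"}),
    "db_admin": frozenset({"read:customer_db", "write:customer_db"}),
}


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)


def granted_permissions(user: User) -> set[str]:
    """Union of permissions from all of the user's roles; unknown roles grant nothing."""
    granted: set[str] = set()
    for role in user.roles:
        granted |= ROLE_PERMISSIONS.get(role, frozenset())
    return granted


def is_allowed(user: User, action: str, resource: str) -> bool:
    """Deny by default: allow only if some assigned role grants the permission."""
    return f"{action}:{resource}" in granted_permissions(user)


def unused_permissions(user: User, used: set[str]) -> set[str]:
    """Support periodic access reviews: permissions granted but never exercised."""
    return granted_permissions(user) - used


alice = User("alice", {"analyst"})
print(is_allowed(alice, "read", "customer_db"))     # True
print(is_allowed(alice, "write", "customer_db"))    # False
print(unused_permissions(alice, {"read:reports"}))  # {'read:customer_db'}
```

Granting permissions through roles rather than to individuals keeps access consistent, and the review helper surfaces grants that are candidates for removal.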

Compliance Considerations

Cloud migrations must align with relevant compliance regulations. These regulations vary depending on the industry and the location of data and operations.

- General Data Protection Regulation (GDPR): GDPR applies to organizations that process the personal data of individuals within the European Union (EU). Compliance involves:

- Data Subject Rights: Ensuring individuals can exercise their rights over their personal data, including the rights to access, rectify, and erase it.

- Data Minimization: Collecting and processing only the data necessary for the specified purpose.

- Data Security: Implementing appropriate technical and organizational measures to protect personal data.

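- Data Breach Notification: Notifying the relevant supervisory authority of a personal data breach without undue delay and, where feasible, within 72 hours of becoming aware of it, and informing affected individuals without undue delay when the breach is likely to pose a high risk to their rights and freedoms.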

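- Health Insurance Portability and Accountability Act (HIPAA): HIPAA applies to covered entities in the United States (healthcare providers, health plans, and healthcare clearinghouses) and to their business associates, which can include cloud service providers that create, receive, maintain, or transmit health information on their behalf. Compliance involves: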

- Privacy Rule: Protecting the privacy of protected health information (PHI).

- Security Rule: Establishing administrative, physical, and technical safeguards to protect the confidentiality, integrity, and availability of electronic PHI.

- Breach Notification Rule: Notifying individuals, the Department of Health and Human Services (HHS), and, in some cases, the media of breaches of unsecured PHI.

- Payment Card Industry Data Security Standard (PCI DSS): PCI DSS applies to organizations that process, store, or transmit credit card information. Compliance involves:

- Securing Cardholder Data: Protecting cardholder data throughout the entire payment card lifecycle.

- Regular Security Testing: Conducting vulnerability scans and penetration testing to identify and address security vulnerabilities.

- Access Control: Restricting access to cardholder data.

- Other Compliance Requirements: Depending on the industry and location, other compliance requirements may apply, such as:

- State-Specific Regulations: In the United States, individual states have their own data privacy and security laws, such as the California Consumer Privacy Act (CCPA).

- Industry-Specific Regulations: Industries like finance and government have specific compliance requirements.

- Compliance as a Service (CaaS): Organizations can consider CaaS solutions, which provide tools and expertise for managing compliance requirements, to streamline their compliance efforts.
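Because the GDPR notification window is measured from the moment the organization becomes aware of a breach, incident tooling often tracks that deadline explicitly. The following Python sketch computes it from a timezone-aware timestamp. The function names are hypothetical, and whether a given incident is a notifiable breach remains a legal judgment that the code cannot make.

```python
from datetime import datetime, timedelta, timezone

# GDPR Article 33: notify the supervisory authority without undue delay and,
# where feasible, no later than 72 hours after becoming aware of the breach.
AUTHORITY_WINDOW = timedelta(hours=72)


def authority_deadline(aware_at: datetime) -> datetime:
    """Latest time to notify the supervisory authority."""
    if aware_at.tzinfo is None:
        # Naive timestamps are ambiguous across regions; require an explicit zone.
        raise ValueError("aware_at must be timezone-aware")
    return aware_at + AUTHORITY_WINDOW


def hours_remaining(aware_at: datetime, now: datetime) -> float:
    """Hours left before the deadline (negative once it has passed)."""
    return (authority_deadline(aware_at) - now).total_seconds() / 3600


aware = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
print(authority_deadline(aware))  # 2024-03-04 09:00:00+00:00
print(hours_remaining(aware, datetime(2024, 3, 2, 9, 0, tzinfo=timezone.utc)))  # 48.0
```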

Framework for Monitoring and Auditing Cloud Security

A robust framework for monitoring and auditing cloud security is essential for maintaining a secure and compliant environment. This framework includes continuous monitoring, regular audits, and incident response procedures.

- Continuous Monitoring: Implementing automated monitoring tools to track security events in real-time. This involves:

- Security Information and Event Management (SIEM): Utilizing SIEM systems to collect, analyze, and correlate security events from various sources.

- Log Management: Centralizing and analyzing logs from various cloud resources, including compute instances, storage, and network devices.

- Real-time Alerts: Configuring alerts to notify security teams of suspicious activities or potential security breaches.

- Regular Audits: Conducting regular audits to assess the effectiveness of security controls and ensure compliance with relevant regulations.

- Internal Audits: Performing internal audits to assess security practices and identify areas for improvement.

- External Audits: Engaging third-party auditors to conduct independent assessments of security posture.

- Compliance Reporting: Generating reports to demonstrate compliance with relevant regulations.

- Incident Response Procedures: Establishing well-defined incident response procedures to effectively handle security incidents.

- Incident Detection and Analysis: Identifying and analyzing security incidents.

- Containment: Isolating affected systems to prevent further damage.

- Eradication: Removing the cause of the incident.

- Recovery: Restoring affected systems to their pre-incident state.

- Post-Incident Activities: Conducting a post-incident review to identify lessons learned and improve security practices.

- Security Awareness Training: Providing security awareness training to employees to educate them about security threats and best practices.

- Third-Party Risk Management: Assessing and managing the security risks associated with third-party vendors who have access to cloud resources.
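Real-time alerting in a SIEM typically relies on correlation rules evaluated over log events. As an illustration, this Python sketch raises an alert when a single source IP produces a burst of failed logins within a sliding time window. The event format, the five-failures-in-ten-minutes rule, and `detect_failed_login_bursts` are assumptions chosen for the example, not any SIEM product’s rule syntax.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta
from typing import Iterable


def detect_failed_login_bursts(
    events: Iterable[tuple[datetime, str, str]],
    threshold: int = 5,
    window: timedelta = timedelta(minutes=10),
) -> list[tuple[datetime, str]]:
    """events: (timestamp, source_ip, outcome) tuples sorted by timestamp.

    Returns (timestamp, source_ip) for each alert raised."""
    recent: defaultdict[str, deque[datetime]] = defaultdict(deque)
    alerts: list[tuple[datetime, str]] = []
    for ts, ip, outcome in events:
        if outcome != "FAILURE":
            continue  # only failed attempts count toward the rule
        attempts = recent[ip]
        attempts.append(ts)
        # Drop failures that have slid out of the time window.
        while ts - attempts[0] > window:
            attempts.popleft()
        if len(attempts) >= threshold:
            alerts.append((ts, ip))
            attempts.clear()  # avoid re-alerting on the same burst
    return alerts


base = datetime(2024, 1, 1, 12, 0)
events = [(base + timedelta(minutes=i), "203.0.113.7", "FAILURE") for i in range(5)]
print(detect_failed_login_bursts(events))  # one alert for 203.0.113.7, at 12:04
```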

Cost Optimization

Optimizing cloud costs is crucial for maximizing the return on investment (ROI) of a cloud migration project. Proactive cost management ensures that cloud resources are utilized efficiently, preventing unnecessary expenses and enabling businesses to allocate their budgets effectively. Effective cost optimization requires a multifaceted approach, encompassing resource utilization, financial planning, and the strategic use of cloud provider tools and services.

Strategies for Optimizing Cloud Costs

Several strategies can be implemented to reduce cloud spending. These approaches focus on aligning resource allocation with actual needs and leveraging cost-saving mechanisms offered by cloud providers.

- Right-sizing Instances: Right-sizing involves selecting the appropriate instance type and size for each workload. Over-provisioning leads to wasted resources and higher costs, while under-provisioning can negatively impact performance. Analyzing resource utilization metrics, such as CPU usage, memory consumption, and network I/O, is essential. Cloud providers offer tools and services to assist in right-sizing, including recommendations based on historical data. For example, a web server consistently utilizing only 20% CPU capacity could be downsized to a smaller instance type, reducing costs without affecting performance.

- Reserved Instances: Reserved Instances (RIs) provide significant savings compared to on-demand instances. By committing to a specific instance type for a defined period (typically one or three years), organizations receive substantial discounts. The discount varies with the instance type, commitment term, and payment option (e.g., all upfront, partial upfront, or no upfront). RIs suit steady, predictable workloads such as a consistently running database; reserving a database instance for three years can yield savings of up to roughly 70% compared to on-demand pricing.

- Spot Instances: Spot Instances typically offer the lowest cost for compute resources because they use spare capacity in the cloud provider’s infrastructure. In exchange, the provider can interrupt them, mainly when it needs the capacity back or, where a maximum price has been set, when the spot price rises above it. Spot prices fluctuate with supply and demand, so Spot Instances suit fault-tolerant, non-critical workloads such as batch processing, testing, and development.

Using Spot Instances effectively requires choosing an appropriate maximum price where the provider supports one and, above all, designing applications to tolerate interruptions. For example, a large-scale image processing task can run on Spot Instances at significant cost savings, provided the application checkpoints its progress and resumes from the last checkpoint after an interruption.
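The checkpoint-and-resume pattern described for Spot Instances can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: work items are processed in a fixed order, the handler is idempotent (items after the last checkpoint may be processed twice), and the checkpoint path is hypothetical. On a real Spot Instance the checkpoint would need to live on durable storage such as object storage, because the instance and its local storage can disappear on interruption.

```python
import json
import os
import tempfile
from typing import Callable, Sequence


def load_checkpoint(path: str) -> int:
    """Return the index of the next unprocessed item (0 if no checkpoint exists)."""
    if not os.path.exists(path):
        return 0
    with open(path) as f:
        return json.load(f)["next_index"]


def save_checkpoint(path: str, next_index: int) -> None:
    """Write the checkpoint atomically (temp file, then rename over the old one),
    so an interruption mid-write never leaves a corrupt checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump({"next_index": next_index}, f)
    os.replace(tmp_path, path)


def process_with_checkpoints(
    items: Sequence, handle: Callable, checkpoint_path: str, every: int = 10
) -> None:
    """Process items in order, resuming after the last saved checkpoint."""
    start = load_checkpoint(checkpoint_path)
    for i in range(start, len(items)):
        handle(items[i])
        if (i + 1) % every == 0:
            save_checkpoint(checkpoint_path, i + 1)
    save_checkpoint(checkpoint_path, len(items))
```

If the instance is interrupted and the job is restarted, `process_with_checkpoints` picks up at the last saved index, so only the items since that checkpoint are redone. A smaller `every` reduces rework at the cost of more frequent writes.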

Cost Management Plan

A well-defined cost management plan is vital for controlling and optimizing cloud spending. This plan should encompass budgeting, forecasting, and continuous cost tracking.

- Budgeting: Establish a clear budget for cloud spending, defining the maximum amount that can be spent on cloud resources. The budget should be aligned with business objectives and the expected resource consumption. Categorize costs by service, department, or project to facilitate tracking and analysis.

- Forecasting: Develop a forecasting model to predict future cloud spending based on historical usage data, planned resource deployments, and anticipated growth. Regularly review and adjust forecasts to account for changes in resource consumption patterns and market conditions. Utilize cloud provider forecasting tools and incorporate them into the overall financial planning process.

- Cost Tracking Tools: Implement cost tracking tools to monitor cloud spending in near real time. These tools provide detailed insights into resource consumption, making it possible to identify cost drivers and anomalies. Cloud providers offer built-in cost management dashboards and reporting features, such as AWS Cost Explorer, Azure Cost Management + Billing, and Google Cloud Cost Management. Third-party cost management tools add advanced analytics, reporting, and optimization recommendations.
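As a starting point for the forecasting step, a least-squares trend line fitted to monthly spend can be compared against the budget. The Python sketch below assumes spend grows roughly linearly and uses hypothetical figures; provider forecasting tools, or models that account for seasonality and planned deployments, will generally be more accurate.

```python
def linear_forecast(monthly_costs: list[float], months_ahead: int) -> list[float]:
    """Fit cost = intercept + slope * month_index by least squares and extrapolate."""
    n = len(monthly_costs)
    if n < 2:
        raise ValueError("need at least two months of history")
    mean_x = (n - 1) / 2
    mean_y = sum(monthly_costs) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(monthly_costs))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Month indices n, n+1, ... are the months after the last observed one.
    return [intercept + slope * (n + k) for k in range(months_ahead)]


def months_over_budget(forecast: list[float], monthly_budget: float) -> list[int]:
    """Indices (0 = next month) of forecast months that exceed the budget."""
    return [i for i, cost in enumerate(forecast) if cost > monthly_budget]


history = [1000.0, 1100.0, 1200.0, 1300.0]  # hypothetical monthly spend
forecast = linear_forecast(history, 3)
print(forecast)                              # [1400.0, 1500.0, 1600.0]
print(months_over_budget(forecast, 1450.0))  # [1, 2]
```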

Leveraging Cloud Provider Cost Optimization Services and Tools

Cloud providers offer a range of services and tools designed to help organizations optimize their cloud costs. These tools provide insights into resource utilization, cost allocation, and optimization recommendations.

- Cost Analysis Dashboards: Utilize the cost analysis dashboards provided by the cloud provider. These dashboards offer visualizations of spending trends, cost breakdowns by service, and cost allocation by resource. This allows for identifying areas where costs can be reduced.

- Cost Optimization Recommendations: Cloud providers offer cost optimization recommendations based on resource utilization data. These recommendations may include right-sizing instances, utilizing reserved instances, and identifying idle resources. For example, AWS Cost Explorer provides recommendations for EC2 instance right-sizing based on CPU and memory utilization.

- Automation and Scripting: Automate cost optimization tasks using scripting and automation tools. This includes automatically right-sizing instances based on predefined thresholds, scheduling instance shutdowns during non-peak hours, and implementing cost-aware auto-scaling policies.

- Cost Allocation Tags: Implement cost allocation tags to track costs by project, department, or application. This allows for identifying the cost of individual projects and allocating costs accurately.
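The threshold-based right-sizing automation mentioned above could start from a rule like the following Python sketch. It uses the 95th percentile of CPU utilization rather than the average so that regular peaks are not ignored. The size ladder, the assumption that each step halves or doubles capacity, and the 40%/80% thresholds are hypothetical; provider recommendation services also weigh memory, network, and pricing.

```python
from statistics import quantiles

# Hypothetical sizes within one instance family, smallest first; each step is
# assumed to double capacity relative to the one before it.
SIZE_LADDER = ["large", "xlarge", "2xlarge", "4xlarge"]


def recommend_size(current: str, cpu_percent: list[float],
                   low: float = 40.0, high: float = 80.0) -> str:
    """Suggest one step down if p95 CPU < low, one step up if p95 CPU > high."""
    p95 = quantiles(cpu_percent, n=20)[-1]  # last of 19 cut points = 95th percentile
    idx = SIZE_LADDER.index(current)
    if p95 < low and idx > 0:
        # Halving capacity roughly doubles utilization, so a p95 below 40%
        # should stay below 80% after downsizing.
        return SIZE_LADDER[idx - 1]
    if p95 > high and idx < len(SIZE_LADDER) - 1:
        return SIZE_LADDER[idx + 1]
    return current


# A web server that consistently sits near 20% CPU is a downsizing candidate.
print(recommend_size("xlarge", [18.0, 20.0, 22.0, 19.0, 21.0, 20.0]))  # large
```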

Network and Connectivity

Successful cloud migration hinges on establishing robust and reliable network connectivity. This ensures seamless data transfer, application accessibility, and effective communication between on-premises resources and the cloud environment. Properly configured network infrastructure minimizes latency, enhances security, and supports high availability, critical for maintaining business continuity during and after the migration process.

Configuring Network Connectivity Between On-Premises and the Cloud

Establishing connectivity between on-premises environments and the cloud is a foundational step in any migration strategy. This involves configuring secure and efficient communication channels to facilitate data transfer, application access, and management operations. Two primary methods are commonly employed: Virtual Private Networks (VPNs) and Direct Connections.

- VPNs: VPNs create an encrypted tunnel over the public internet, allowing secure communication between on-premises networks and the cloud. They are cost-effective and relatively easy to set up, making them a popular choice for initial connectivity. However, VPN performance can be affected by internet congestion and latency.

- Configuration: VPN configuration typically involves setting up a VPN gateway on both the on-premises side and within the cloud provider’s infrastructure. Protocols like IPsec are commonly used to encrypt and secure the data transmitted through the VPN tunnel.

- Example: A company might use a site-to-site VPN to connect its on-premises data center to its AWS Virtual Private Cloud (VPC). This allows employees to securely access cloud-based applications and data as if they were on the local network.

- Direct Connections: Direct connections, also known as dedicated or private connections (for example, AWS Direct Connect, Azure ExpressRoute, or Google Cloud Interconnect), provide a dedicated physical connection between the on-premises network and the cloud provider’s network. This offers higher bandwidth, lower latency, and more consistent performance compared to VPNs.

- Configuration: Direct connections typically involve establishing a cross-connect at a colocation facility or directly connecting to the cloud provider’s network. This requires specialized hardware and configuration, and often involves a recurring monthly fee.

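- Example: A financial institution with stringent regulatory requirements might use a direct connection to its AWS VPC to obtain low, consistent latency and keep its sensitive financial traffic off the public internet. Because a dedicated connection is not encrypted by default, the institution would typically also encrypt the traffic it carries.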
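When weighing a VPN against a direct connection, a quick estimate of how long a bulk data transfer will take is often decisive. The Python sketch below converts data volume and link speed into days. The 80% efficiency factor is an assumption standing in for protocol overhead and contention; real throughput over a VPN on the public internet may be lower or less predictable.

```python
def transfer_days(data_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Days to move data_tb decimal terabytes over a link_gbps link.

    efficiency is the assumed fraction of nominal bandwidth actually sustained."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_tb * 1e12 * 8                   # TB -> bytes -> bits
    bits_per_second = link_gbps * 1e9 * efficiency
    return bits / bits_per_second / 86_400      # seconds -> days


for gbps in (0.5, 1, 10):
    print(f"100 TB over {gbps} Gbps: {transfer_days(100, gbps):.1f} days")
```

Under these assumptions, 100 TB takes about 11.6 days over a 1 Gbps link but about 1.2 days over 10 Gbps, which is often the deciding factor for large migrations.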

Designing a Network Architecture for High Availability and Disaster Recovery in the Cloud

Designing a resilient network architecture is crucial for ensuring high availability and disaster recovery capabilities within the cloud. This involves implementing redundancy, failover mechanisms, and geographically dispersed resources to minimize downtime and protect against data loss.

- Redundancy: Implement redundant components at every level of the network architecture, including routers, switches, firewalls, and load balancers. This ensures that if one component fails, another can take over, maintaining service availability.

- Example: Deploying multiple load balancers across different Availability Zones in AWS can distribute traffic and provide failover capabilities. If one load balancer fails, the others can continue to serve traffic.

- Failover Mechanisms: Configure automated failover mechanisms to quickly switch traffic to backup resources in the event of a failure. This can include DNS failover, automatic scaling, and cross-region replication.

- Example: Using Amazon Route 53 health checks with failover routing to monitor the health of application endpoints and automatically route traffic to a healthy endpoint in a different region.

- Geographic Dispersion: Distribute resources across multiple geographic regions to protect against regional outages and ensure business continuity. This involves replicating data and applications to different regions and configuring failover mechanisms to switch traffic to the backup region in case of a disaster.

- Example: A global e-commerce company might replicate its database and application servers across multiple AWS regions, such as US East and EU West. If the US East region experiences an outage, traffic can be automatically routed to the EU West region, ensuring the website remains accessible to customers.
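The failover behavior described above, where traffic moves to a backup region once health checks fail, can be modeled as a small state machine. In this Python sketch, an endpoint is marked unhealthy only after several consecutive failed checks, and healthy again only after several consecutive successes, to avoid flapping on a single dropped probe. The class, the threshold of three, and the region identifiers are illustrative assumptions and do not reproduce how Amazon Route 53 or any other DNS service is implemented.

```python
from typing import Optional


class HealthCheck:
    """Tracks consecutive probe results for one regional endpoint."""

    def __init__(self, region: str, threshold: int = 3) -> None:
        self.region = region
        self.threshold = threshold
        self.healthy = True
        self._failures = 0
        self._successes = 0

    def record(self, ok: bool) -> None:
        if ok:
            self._failures = 0
            self._successes += 1
            if self._successes >= self.threshold:
                self.healthy = True
        else:
            self._successes = 0
            self._failures += 1
            if self._failures >= self.threshold:
                self.healthy = False


def resolve(checks: list[HealthCheck]) -> Optional[str]:
    """Failover routing: first healthy endpoint in priority order, else None."""
    for check in checks:
        if check.healthy:
            return check.region
    return None


primary, backup = HealthCheck("us-east-1"), HealthCheck("eu-west-1")
for _ in range(3):
    primary.record(False)  # three consecutive failed probes
print(resolve([primary, backup]))  # eu-west-1
```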

Best Practices for Optimizing Network Performance and Minimizing Latency

Optimizing network performance and minimizing latency are critical for providing a responsive and efficient user experience. Several best practices can be implemented to achieve these goals, ensuring that applications and data are accessible quickly and reliably.

- Choose the Right Cloud Region: Select the cloud region closest to your users and on-premises resources to minimize the physical distance data must travel.

- Example: If your users are primarily located in Europe, deploying your applications in an EU-based cloud region will result in lower latency compared to deploying them in a region located in the United States.

- Optimize Network Routing: Configure efficient routing policies to minimize the number of hops data must traverse. This includes using static routes or dynamic routing with BGP (Border Gateway Protocol) so that traffic takes the most direct path.

- Example: Use AWS Transit Gateway to simplify network routing between multiple VPCs and on-premises networks. This allows for centralized connectivity and reduces the complexity of managing routing tables.

- Use Content Delivery Networks (CDNs): CDNs cache content closer to users, reducing latency and improving download speeds. CDNs are particularly effective for delivering static content, such as images, videos, and JavaScript files.

- Example: Using Amazon CloudFront to cache static content at edge locations around the world. When a user requests content, it is served from the closest edge location, resulting in faster loading times.

- Implement Network Monitoring and Performance Tuning: Continuously monitor network performance and identify bottlenecks. Use tools to measure latency, bandwidth utilization, and packet loss. Regularly review and adjust network configurations to optimize performance.

- Example: Utilize Amazon CloudWatch to monitor network metrics such as bandwidth utilization, latency, and error rates. This data can be used to identify performance issues and proactively address them.
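Choosing the region closest to users can be made data-driven by weighting measured round-trip times by where users actually are. The Python sketch below takes the median of the latency samples for each user location (medians resist outliers) and picks the region with the lowest user-weighted latency. The region names, locations, and sample values are hypothetical.

```python
from statistics import median


def best_region(latency_ms: dict[str, dict[str, list[float]]],
                user_share: dict[str, float]) -> str:
    """latency_ms[region][location] holds RTT samples from that user location;
    user_share[location] is the fraction of users there (shares sum to 1)."""
    def weighted_latency(region: str) -> float:
        samples = latency_ms[region]
        return sum(share * median(samples[loc]) for loc, share in user_share.items())

    return min(latency_ms, key=weighted_latency)


measurements = {  # hypothetical RTT samples in milliseconds
    "eu-west-1": {"Europe": [20, 22, 21], "US": [80, 85, 90]},
    "us-east-1": {"Europe": [85, 90, 88], "US": [15, 18, 20]},
}
print(best_region(measurements, {"Europe": 0.8, "US": 0.2}))  # eu-west-1
```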

Monitoring and Management

Effective monitoring and proactive management are critical for the ongoing success of a cloud migration. These processes ensure optimal performance, security, and cost efficiency throughout the lifecycle of cloud resources and applications. Implementing robust monitoring and management strategies allows organizations to quickly identify and resolve issues, maintain system availability, and optimize resource utilization.

Monitoring Cloud Resources and Applications

Comprehensive monitoring involves tracking various performance metrics and establishing alerting mechanisms to proactively address potential problems. Monitoring provides insights into system behavior and allows for timely intervention.

- Performance Metrics: Monitoring key performance indicators (KPIs) provides insights into resource utilization and application health. These metrics should be carefully selected based on the specific needs of the application and infrastructure. For example:

- CPU Utilization: Measures the percentage of time the CPU is actively processing tasks. High CPU utilization can indicate a need for scaling or optimization.

- Memory Usage: Tracks the amount of memory being used by applications and the operating system. Excessive memory usage can lead to performance degradation.

- Disk I/O: Monitors the read and write operations on storage devices. High disk I/O can indicate bottlenecks in data access.

- Network Traffic: Measures the amount of data transferred over the network. This is crucial for identifying network congestion or bandwidth limitations.

- Application Response Time: Measures the time it takes for an application to respond to user requests. Slow response times can indicate performance issues.

- Alerting Mechanisms: Establishing automated alerts is essential for promptly addressing issues. Alerts are triggered when specific thresholds are exceeded or anomalies are detected.

- Threshold-Based Alerts: These alerts are triggered when a metric exceeds a predefined threshold. For example, an alert could be configured to trigger when CPU utilization exceeds 90% for more than five minutes.

- Anomaly Detection: Anomaly detection systems use machine learning algorithms to identify unusual patterns in data. This can help detect issues that might not be apparent through threshold-based alerts. For example, a sudden spike in network traffic outside of normal business hours could indicate a security breach.

- Log Analysis: Analyzing logs from applications and infrastructure components is crucial for identifying errors, security threats, and performance issues. Centralized logging and log aggregation tools can simplify this process.

- Monitoring Tools: Various tools are available for monitoring cloud resources and applications. These tools provide dashboards, visualizations, and alerting capabilities. Examples include:

- Cloud Provider Native Tools: Most cloud providers offer native monitoring services. For example, Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring.

- Third-Party Monitoring Tools: Several third-party tools provide advanced monitoring capabilities. Examples include Datadog, New Relic, and Dynatrace.
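The threshold-based alert example (CPU above 90% for more than five minutes) requires the condition to hold continuously, not just on a single sample. This Python sketch evaluates a time-ordered series of samples; the sample format and function name are assumptions, and managed services such as Amazon CloudWatch express the same idea through their own alarm settings.

```python
from datetime import datetime, timedelta
from typing import Optional


def sustained_breach(samples: list[tuple[datetime, float]],
                     threshold: float = 90.0,
                     duration: timedelta = timedelta(minutes=5)) -> Optional[datetime]:
    """Return the first sample time at which the metric has stayed above
    threshold for more than duration, or None if that never happens."""
    breach_start: Optional[datetime] = None
    for ts, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = ts  # first sample of a new breach
            if ts - breach_start > duration:
                return ts
        else:
            breach_start = None  # any dip below the threshold resets the clock
    return None


start = datetime(2024, 1, 1, 12, 0)
cpu = [(start + timedelta(minutes=m), 95.0) for m in range(8)]  # one sample per minute
print(sustained_breach(cpu))  # 2024-01-01 12:06:00
```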

Automating Cloud Management Tasks

Automating cloud management tasks streamlines operations, reduces manual effort, and improves consistency. Automation is achieved through Infrastructure as Code (IaC) and configuration management.

- Infrastructure as Code (IaC): IaC involves defining and managing infrastructure using code. This allows for automated provisioning, configuration, and management of cloud resources.

- Benefits of IaC:

- Consistency: Ensures consistent infrastructure deployments across environments.

- Repeatability: Allows for the easy replication of infrastructure.

- Version Control: Enables tracking changes to infrastructure over time.

- Automation: Automates the provisioning and management of resources.

- IaC Tools:

- Terraform: A popular IaC tool that supports multiple cloud providers.

- AWS CloudFormation: A native IaC service for Amazon Web Services.

- Azure Resource Manager (ARM) Templates: A native IaC service for Microsoft Azure.

- Google Cloud Deployment Manager: A native IaC service for Google Cloud Platform.

- Configuration Management: Configuration management tools automate the configuration and maintenance of software and systems.

- Benefits of Configuration Management:

- Automation: Automates the configuration of systems.

- Compliance: Enforces compliance with security and configuration standards.

- Scalability: Enables easy scaling of infrastructure.

- Configuration Management Tools:

- Ansible: An open-source configuration management tool.

- Chef: A configuration management tool that uses a client-server architecture.

- Puppet: A configuration management tool that uses a declarative approach.

- Automation Workflows: Automation can be implemented through workflows that combine IaC and configuration management. For example, a workflow could automatically provision a new server using IaC, then configure the server using a configuration management tool.
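The declarative idea behind IaC tools such as Terraform is to compare a desired state, written as code, with the actual state and compute the changes needed to reconcile them. The Python sketch below shows only that comparison step, using plain dictionaries as a stand-in for resource definitions; it is a simplified illustration, not how Terraform or any other tool is implemented.

```python
Resources = dict[str, dict[str, object]]


def plan(desired: Resources, actual: Resources) -> tuple[list[str], list[str], list[str]]:
    """Return (to_create, to_update, to_delete) resource names."""
    to_create = sorted(desired.keys() - actual.keys())
    to_delete = sorted(actual.keys() - desired.keys())
    to_update = sorted(
        name for name in desired.keys() & actual.keys() if desired[name] != actual[name]
    )
    return to_create, to_update, to_delete


desired = {"web": {"size": "large", "count": 2}, "db": {"size": "xlarge"}}
actual = {"web": {"size": "large", "count": 1}, "cache": {"size": "small"}}
print(plan(desired, actual))   # (['db'], ['web'], ['cache'])
print(plan(desired, desired))  # ([], [], [])
```

An empty plan when the desired and actual states already match is what makes declarative deployments repeatable and safe to re-run.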

Disaster Recovery and Business Continuity in the Cloud

Implementing robust disaster recovery (DR) and business continuity (BC) strategies ensures the availability of critical applications and data in the event of an outage or disaster. These strategies involve backup and restore procedures, along with failover mechanisms.

- Backup and Restore Procedures: Regular backups are crucial for data protection and disaster recovery.

- Backup Strategies:

- Full Backups: Back up all data.

- Incremental Backups: Back up only the data that has changed since the last backup of any type.

- Differential Backups: Back up only the data that has changed since the last full backup.

- Backup Frequency: Backup frequency should be driven primarily by the Recovery Point Objective (RPO), while the Recovery Time Objective (RTO) determines how quickly backups must be restorable.

- RPO: The maximum acceptable data loss in the event of a disaster, expressed as the time between the last usable backup and the disaster.

- RTO: The maximum acceptable downtime in the event of a disaster.

- Backup Storage: Backups should be stored in a geographically diverse location to protect against regional disasters. Cloud providers offer various storage options for backups, such as Amazon S3, Azure Blob Storage, and Google Cloud Storage.

- Disaster Recovery Strategies:

- Pilot Light: A minimal environment is maintained in the cloud, and resources are scaled up when a disaster is declared.

- Warm Standby: A scaled-down version of the production environment is always running in the cloud.

- Hot Standby: A fully functional replica of the production environment is always running in the cloud, ready to take over in case of a disaster.

- Business Continuity Planning: Business continuity planning involves developing a comprehensive plan to ensure that critical business functions can continue to operate during and after a disaster. This plan should include:

- Risk Assessment: Identifying potential threats and vulnerabilities.

- Business Impact Analysis (BIA): Assessing the impact of a disaster on critical business functions.

- Recovery Strategies: Defining the steps to recover critical systems and data.

- Testing and Exercises: Regularly testing the DR plan to ensure its effectiveness.
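The difference between incremental and differential backups comes down to which reference point is used. The Python sketch below selects files by modification time. Relying on modification times is a simplifying assumption (production backup tools typically use snapshots, change tracking, or checksums), and the file names and timestamps are hypothetical. The `meets_rpo` helper reflects the RPO relationship: with backups every N hours, up to N hours of changes can be lost.

```python
def files_to_back_up(files: dict[str, float], mode: str,
                     last_full: float, last_backup: float) -> list[str]:
    """files maps path -> modification time (epoch seconds).

    mode: "full" copies everything; "incremental" copies changes since the
    most recent backup of any kind; "differential" copies changes since the
    most recent full backup."""
    if mode == "full":
        return sorted(files)
    if mode == "incremental":
        since = last_backup
    elif mode == "differential":
        since = last_full
    else:
        raise ValueError(f"unknown backup mode: {mode!r}")
    return sorted(path for path, mtime in files.items() if mtime > since)


def meets_rpo(backup_interval_hours: float, rpo_hours: float) -> bool:
    """In the worst case, everything since the last backup is lost."""
    return backup_interval_hours <= rpo_hours


files = {"orders.db": 100.0, "users.db": 250.0, "logs.db": 400.0}
# Hypothetical history: full backup at t=200, incremental backup at t=300.
print(files_to_back_up(files, "incremental", last_full=200.0, last_backup=300.0))   # ['logs.db']
print(files_to_back_up(files, "differential", last_full=200.0, last_backup=300.0))  # ['logs.db', 'users.db']
print(meets_rpo(24, rpo_hours=4))  # False
```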

Training and Skill Development

Successful cloud migration hinges on the ability of IT staff to effectively manage and maintain the new cloud environment. This necessitates a proactive approach to training and skill development, ensuring the team possesses the necessary expertise to navigate the complexities of cloud technologies and best practices. A well-structured training program, coupled with effective change management and ongoing support, is crucial for a smooth transition and long-term success.

Upskilling IT Staff on Cloud Technologies and Best Practices

The transition to the cloud requires a significant shift in IT staff’s skillset. Therefore, a comprehensive upskilling plan is essential. This plan should be tailored to the specific cloud provider and the organization’s cloud strategy, focusing on both technical and soft skills. The upskilling plan should encompass the following:

- Needs Assessment: Before implementing any training program, a thorough assessment of the current skill levels and knowledge gaps within the IT team is crucial. This assessment should identify the specific cloud technologies, services, and best practices that the team needs to learn. The assessment can include surveys, interviews, and hands-on skill evaluations.

- Curriculum Development: Based on the needs assessment, a detailed curriculum should be developed. This curriculum should cover a range of topics, including cloud fundamentals, specific cloud provider services (e.g., AWS, Azure, GCP), security best practices, DevOps methodologies, and automation tools. The curriculum should incorporate a mix of learning methods, such as instructor-led training, online courses, hands-on labs, and real-world case studies.

- Training Delivery: The training program should be delivered through various channels to cater to different learning styles and preferences. This can include:

- Formal Training: Instructor-led courses, workshops, and boot camps provide structured learning and opportunities for interaction with instructors and peers.

- Online Courses: Platforms like Coursera, Udemy, and A Cloud Guru offer a vast library of cloud-related courses.

- Hands-on Labs: Practical exercises and simulations allow IT staff to gain hands-on experience with cloud technologies.

- Mentorship Programs: Pairing experienced cloud professionals with less experienced team members can accelerate learning and knowledge transfer.

- Certification: Encouraging and supporting staff to obtain relevant cloud certifications (e.g., AWS Certified Solutions Architect, Microsoft Certified: Azure Solutions Architect Expert, Google Cloud Certified Professional Cloud Architect) can validate their skills and demonstrate their commitment to cloud expertise.

- Continuous Learning: The cloud landscape is constantly evolving, so continuous learning is essential. This can involve subscribing to industry publications, attending webinars and conferences, and participating in online communities.

- Budget Allocation: Adequate budget allocation is crucial to cover the costs associated with training materials, instructor fees, certification exams, and other resources. The budget should be allocated strategically to ensure that the training program meets the organization’s specific needs and goals.

Change Management and Communication Throughout the Migration Project

Cloud migration projects often involve significant organizational change. Effectively managing this change and maintaining open communication channels are crucial for mitigating resistance, ensuring stakeholder buy-in, and driving project success. Effective change management involves:

- Stakeholder Identification: Identifying all stakeholders who will be impacted by the cloud migration, including IT staff, business users, and executive leadership.

- Communication Plan: Developing a detailed communication plan that outlines the key messages, communication channels, frequency, and target audience. The plan should be proactive and transparent, keeping stakeholders informed about the project’s progress, challenges, and benefits.

- Impact Assessment: Assessing the potential impact of the cloud migration on different stakeholder groups. This includes understanding how their roles, responsibilities, and workflows will change.

- Training and Support: Providing adequate training and support to help stakeholders adapt to the new cloud environment. This includes offering user guides, FAQs, and help desk support.

- Resistance Management: Addressing resistance to change proactively. This involves identifying the root causes of resistance, addressing concerns, and involving stakeholders in the decision-making process.

- Feedback Mechanisms: Establishing feedback mechanisms to gather input from stakeholders and identify areas for improvement. This can include surveys, focus groups, and regular project meetings.

- Leadership Support: Securing strong leadership support for the cloud migration project. This includes demonstrating the benefits of cloud adoption, providing resources, and championing the change initiative.

Communication strategies should encompass:

- Regular Updates: Providing regular updates to stakeholders on the project’s progress, milestones, and any potential challenges.

- Transparency: Maintaining transparency throughout the migration process, sharing information openly and honestly.

- Clear Messaging: Using clear and concise language, avoiding technical jargon that may confuse stakeholders.

- Multiple Channels: Utilizing multiple communication channels, such as email, newsletters, intranet, and town hall meetings, to reach different audiences.

- Feedback Loops: Establishing feedback loops to gather input from stakeholders and address their concerns.

Post-Migration Support and Maintenance Guide

Post-migration support and maintenance are critical for ensuring the long-term success of the cloud environment. This includes providing ongoing support to users, monitoring the cloud infrastructure, and continuously optimizing the environment for performance, cost, and security. A comprehensive post-migration support and maintenance guide should include:

- Service Level Agreements (SLAs): Defining clear SLAs that specify the level of service and support that users can expect. These SLAs should cover availability, performance, and response times.

- Incident Management: Establishing a robust incident management process to quickly identify, diagnose, and resolve any issues that arise. This includes defining roles and responsibilities, establishing escalation procedures, and utilizing incident tracking tools.

- Monitoring and Alerting: Implementing comprehensive monitoring and alerting systems to proactively identify and address potential problems. This includes monitoring key performance indicators (KPIs) such as CPU utilization, memory usage, network latency, and error rates. Alerts should be configured to notify the appropriate personnel when thresholds are exceeded.

- Performance Optimization: Continuously optimizing the cloud environment for performance. This includes right-sizing instances, optimizing database queries, and utilizing caching mechanisms.

- Cost Optimization: Regularly reviewing and optimizing cloud costs. This includes identifying and eliminating unused resources, leveraging reserved instances or committed use discounts, and implementing cost allocation tagging.

- Security and Compliance: Maintaining the security and compliance of the cloud environment. This includes regularly reviewing security configurations, patching vulnerabilities, and conducting security audits.

- Backup and Disaster Recovery: Implementing a robust backup and disaster recovery plan to protect against data loss and ensure business continuity. This includes regularly backing up data and testing the disaster recovery plan.

- Documentation: Maintaining up-to-date documentation of the cloud environment, including architecture diagrams, configuration settings, and operational procedures.

- Ongoing Training: Providing ongoing training to IT staff to ensure they stay up-to-date on the latest cloud technologies and best practices.

- Regular Reviews: Conducting regular reviews of the cloud environment to identify areas for improvement. This includes reviewing performance, cost, security, and compliance.
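The monitoring and alerting item above can be illustrated in a few lines. The following is a minimal, provider-neutral sketch. It assumes metric samples have already been collected into a list per KPI. The threshold values and the `evaluate` helper are hypothetical, and a production setup would normally rely on the cloud provider's monitoring service rather than custom code. The sketch raises an alert only when several consecutive samples breach a threshold, which helps avoid paging staff over momentary spikes.

```python
from dataclasses import dataclass

# Hypothetical KPI thresholds; tune these to the workload and its SLAs.
THRESHOLDS = {
    "cpu_percent": 80.0,
    "memory_percent": 85.0,
    "latency_ms": 300.0,
    "error_rate_percent": 1.0,
}


@dataclass
class Alert:
    metric: str
    value: float
    threshold: float


def evaluate(samples: dict[str, list[float]],
             thresholds: dict[str, float],
             consecutive: int = 3) -> list[Alert]:
    """Alert on a metric only if its last `consecutive` samples all exceed the threshold."""
    if consecutive < 1:
        raise ValueError("consecutive must be at least 1")
    alerts = []
    for metric, threshold in thresholds.items():
        recent = samples.get(metric, [])[-consecutive:]
        # Too few samples, or any sample back under the threshold, means no alert.
        if len(recent) == consecutive and all(v > threshold for v in recent):
            alerts.append(Alert(metric, recent[-1], threshold))
    return alerts


if __name__ == "__main__":
    samples = {
        "cpu_percent": [70.0, 85.0, 90.0, 92.0],  # sustained breach -> alert
        "memory_percent": [60.0, 90.0, 62.0],     # single spike -> no alert
        "latency_ms": [120.0, 140.0, 130.0],      # healthy
        "error_rate_percent": [0.2, 0.3],         # not enough samples yet
    }
    for alert in evaluate(samples, THRESHOLDS):
        print(f"ALERT {alert.metric}={alert.value} exceeds {alert.threshold}")
```

With these sample values, only CPU utilization triggers an alert (`ALERT cpu_percent=92.0 exceeds 80.0`). The memory spike is ignored because the next sample falls back under the threshold.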

For example, consider a company migrating its e-commerce platform to AWS. After the migration, the team would implement a comprehensive monitoring solution to track key metrics such as website response time, transaction success rate, and server resource utilization. It would also establish an incident management process that defines clear escalation paths and response times for performance issues. In addition, it would adopt a cost optimization strategy, using AWS Reserved Instances for steady-state workloads to reduce infrastructure expenses by 30% compared to on-demand pricing.
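The reserved-versus-on-demand saving in this example is simple arithmetic. The sketch below uses hypothetical hourly rates (not actual AWS prices) chosen to reproduce a 30% discount. It also computes the break-even utilization, assuming the commitment is billed for every hour of the term whether or not the instance runs.

```python
HOURS_PER_MONTH = 730  # common approximation (8,760 hours / 12 months)


def monthly_cost(hourly_rate: float, instances: int, hours: float = HOURS_PER_MONTH) -> float:
    """Monthly cost of running `instances` machines at a flat hourly rate."""
    return hourly_rate * instances * hours


def savings_percent(on_demand_rate: float, reserved_rate: float) -> float:
    """Percentage saved by the committed rate relative to on-demand."""
    return (on_demand_rate - reserved_rate) / on_demand_rate * 100


def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    """Fraction of hours an instance must run for an always-billed commitment to beat on-demand."""
    return reserved_rate / on_demand_rate


if __name__ == "__main__":
    # Hypothetical rates for illustration only; they are not actual AWS prices.
    on_demand, reserved, fleet = 0.10, 0.07, 12  # USD per instance-hour, instance count
    print(f"On-demand: ${monthly_cost(on_demand, fleet):,.2f}/month")
    print(f"Reserved:  ${monthly_cost(reserved, fleet):,.2f}/month")
    print(f"Savings:   {savings_percent(on_demand, reserved):.0f}%")
    print(f"Break-even utilization: {break_even_utilization(on_demand, reserved):.0%}")
```

The break-even figure is why commitments suit steady workloads. Under these assumed rates, an instance that would run on demand for less than about 70% of the time is cheaper left on demand.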

Finally, the team would regularly review security configurations, patching any identified vulnerabilities promptly to maintain PCI DSS compliance. This proactive approach to post-migration support and maintenance ensures the platform’s stability, security, and cost-effectiveness.
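Part of such a security configuration review can be automated. The following is a minimal sketch that assumes firewall or security-group rules have been exported into a simple list. The `InboundRule` type and the port list are hypothetical, not a provider API. The check flags database and remote-administration ports that are reachable from the entire internet, which is the kind of exposure a review should catch before an audit does.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    """Hypothetical, provider-neutral view of one firewall or security-group rule."""
    resource: str
    port: int
    source_cidr: str


# Example ports that normally should not be reachable from the public internet.
SENSITIVE_PORTS = {22: "SSH", 3306: "MySQL", 3389: "RDP", 5432: "PostgreSQL"}


def is_open_to_internet(cidr: str) -> bool:
    """True for 0.0.0.0/0 or ::/0, i.e. a prefix length of zero."""
    return ipaddress.ip_network(cidr).prefixlen == 0


def find_exposed(rules: list[InboundRule]) -> list[str]:
    """Describe every rule that opens a sensitive port to the entire internet."""
    return [
        f"{r.resource}: {SENSITIVE_PORTS[r.port]} (port {r.port}) open to {r.source_cidr}"
        for r in rules
        if r.port in SENSITIVE_PORTS and is_open_to_internet(r.source_cidr)
    ]


if __name__ == "__main__":
    rules = [
        InboundRule("web-sg", 443, "0.0.0.0/0"),      # public HTTPS is expected
        InboundRule("db-sg", 5432, "0.0.0.0/0"),      # database exposed -> finding
        InboundRule("bastion-sg", 22, "10.0.0.0/8"),  # SSH from a private range only
    ]
    for finding in find_exposed(rules):
        print(finding)
```

Running it reports only `db-sg: PostgreSQL (port 5432) open to 0.0.0.0/0`. Public HTTPS and SSH restricted to a private range pass the check.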

Final Summary

In conclusion, a successful cloud migration requires a holistic strategy that combines meticulous planning, informed decision-making, and continuous optimization. By adhering to the principles outlined in this document, organizations can mitigate risks, optimize costs, and leverage the full potential of the cloud. A phased approach, robust security protocols, and ongoing monitoring are what keep the transition smooth and successful, fostering innovation and agility for years to come.

Key Questions Answered

What are the key benefits of cloud migration?

Cloud migration can offer several advantages, including lower costs through better resource utilization, greater scalability and agility, improved business continuity and disaster recovery capabilities, and access to advanced technologies and services.

How do I choose the right cloud migration strategy?

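The optimal cloud migration strategy depends on factors such as application complexity, business requirements, and budget constraints. Common strategies include rehosting (lift-and-shift), re-platforming, and refactoring or re-architecting, each with its own trade-offs. Rehosting is the fastest but changes the least, while refactoring demands the most effort but can take the fullest advantage of cloud-native services.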

What are the primary security concerns during cloud migration?

Security concerns include data breaches, unauthorized access, misconfiguration of security settings, and compliance violations. Implementing robust security measures, such as identity and access management, data encryption, and regular security audits, is crucial.
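One concrete form of the misconfiguration risk is an identity policy that grants far more than intended. The sketch below scans a policy written in the AWS IAM JSON policy format for `Allow` statements that combine a wildcard action (`*` or `service:*`) with `"Resource": "*"`. It is a deliberately simplified heuristic, assumed for illustration only. It ignores `NotAction`, `NotResource`, and `Condition`, so it complements rather than replaces the provider's own policy analysis tools.

```python
import json


def as_list(value) -> list:
    """IAM policy elements may hold a single value or a list of values."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def overly_permissive(policy: dict) -> list[str]:
    """Flag Allow statements that pair a wildcard action with Resource "*".

    Simplified heuristic: NotAction, NotResource, and Condition are ignored.
    """
    findings = []
    for i, stmt in enumerate(as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue
        actions = as_list(stmt.get("Action"))
        wildcards = [a for a in actions if a == "*" or a.endswith(":*")]
        if wildcards and "*" in as_list(stmt.get("Resource")):
            findings.append(f"Statement {i}: {', '.join(wildcards)} on all resources")
    return findings


if __name__ == "__main__":
    policy = json.loads("""
    {
      "Version": "2012-10-17",
      "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject", "Resource": "arn:aws:s3:::reports/*"},
        {"Effect": "Allow", "Action": ["ec2:*"], "Resource": "*"}
      ]
    }
    """)
    print(overly_permissive(policy))
```

Here the narrowly scoped S3 read passes, while the second statement is reported as `Statement 1: ec2:* on all resources`, a candidate for tightening under least privilege.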

How can I estimate the cost of a cloud migration project?

Cost estimation involves assessing the current IT infrastructure, selecting a cloud provider, choosing a migration strategy, and considering factors like data transfer costs, storage costs, and ongoing operational expenses. Utilizing cloud provider cost calculators and budgeting tools is recommended.
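The cost components listed above can be combined into a rough estimate. The sketch below breaks a monthly run-rate into compute, storage, data transfer, and operations, then adds a one-time migration cost for a first-year figure. All unit prices and workload numbers are hypothetical placeholders. In practice they would come from the chosen provider's pricing calculator and the pre-migration assessment.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation (8,760 hours / 12 months)


@dataclass
class Rates:
    """Hypothetical unit prices in USD; replace with figures from the provider's calculator."""
    compute_per_hour: float
    storage_per_gb_month: float
    egress_per_gb: float


@dataclass
class Workload:
    instances: int
    storage_gb: float
    egress_gb_per_month: float
    operations_per_month: float  # support plans, tooling, staff time, etc.


def monthly_estimate(w: Workload, r: Rates) -> dict[str, float]:
    """Break a monthly run-rate into compute, storage, data transfer, and operations."""
    items = {
        "compute": w.instances * HOURS_PER_MONTH * r.compute_per_hour,
        "storage": w.storage_gb * r.storage_per_gb_month,
        "data_transfer": w.egress_gb_per_month * r.egress_per_gb,
        "operations": w.operations_per_month,
    }
    items["total"] = sum(items.values())
    return items


def first_year_cost(monthly_total: float, one_time_migration: float) -> float:
    """Twelve months of run-rate plus one-time migration effort (tools, consultants, cutover)."""
    return 12 * monthly_total + one_time_migration


if __name__ == "__main__":
    rates = Rates(compute_per_hour=0.10, storage_per_gb_month=0.02, egress_per_gb=0.08)
    workload = Workload(instances=4, storage_gb=500, egress_gb_per_month=200,
                        operations_per_month=150)
    estimate = monthly_estimate(workload, rates)
    for item, cost in estimate.items():
        print(f"{item:>14}: ${cost:,.2f}")
    print(f"{'first year':>14}: ${first_year_cost(estimate['total'], 5_000):,.2f}")
```

With these placeholder inputs, the monthly total is $468.00 and the first-year figure is $10,616.00. Keeping each component separate makes it easy to see which assumption dominates the estimate.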

What is the role of automation in cloud migration?

Automation plays a critical role in streamlining cloud migration by automating tasks such as infrastructure provisioning, application deployment, and configuration management. Automation reduces manual effort, minimizes errors, and accelerates the migration process.
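Much infrastructure automation rests on a declarative, desired-state idea: describe the target environment, compare it with what currently exists, and apply only the difference. The sketch below shows that comparison step in isolation. It is similar in spirit to the plan stage of infrastructure-as-code tools such as Terraform, but it is a hypothetical illustration that models resources as plain dictionaries, not any tool's actual algorithm or API.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    create: list[str] = field(default_factory=list)
    update: list[str] = field(default_factory=list)
    delete: list[str] = field(default_factory=list)


def plan_changes(desired: dict[str, dict], actual: dict[str, dict]) -> Plan:
    """Compare declared resources (name -> settings) with existing ones and list needed changes."""
    plan = Plan()
    for name in sorted(desired):
        if name not in actual:
            plan.create.append(name)   # declared but missing
        elif desired[name] != actual[name]:
            plan.update.append(name)   # exists but has drifted from its declaration
    for name in sorted(actual):
        if name not in desired:
            plan.delete.append(name)   # exists but is no longer declared
    return plan


if __name__ == "__main__":
    desired = {"web-1": {"size": "medium"}, "web-2": {"size": "medium"}, "db-1": {"size": "large"}}
    actual = {"web-1": {"size": "small"}, "db-1": {"size": "large"}, "old-batch": {"size": "small"}}
    print(plan_changes(desired, actual))
```

This example plans to create `web-2`, update `web-1`, and delete `old-batch`. Comparing the desired state with itself yields an empty plan. That idempotence is what makes automated provisioning safe to rerun and less error-prone than repeated manual changes.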