This guide covers the design of scalable machine learning architectures. From defining scalability metrics to deploying models in the cloud, we’ll explore the key considerations for building robust and efficient systems capable of handling massive datasets and complex algorithms.

The journey through the design process encompasses crucial aspects like data handling, model deployment, system design patterns, and the selection of appropriate algorithms and libraries. We’ll also examine critical factors such as monitoring, security, fault tolerance, and cost optimization, all vital for successful implementation.

Defining Scalability in Machine Learning Architectures

Scalability in machine learning is a critical aspect of designing systems capable of handling increasing amounts of data, complexity, and user demand. A scalable machine learning architecture can adapt to evolving needs without compromising performance or efficiency. This adaptability is essential for long-term success in any machine learning application. Effective scalability is not merely about increasing the size of the system, but rather about maintaining performance and efficiency as the system grows.

It encompasses various aspects, from managing large datasets to handling complex models and high user traffic. This understanding is paramount to building robust and enduring machine learning solutions.

Defining Scalability in Machine Learning Systems

Scalability in machine learning refers to the ability of a system to handle increasing workloads, data volumes, and model complexities without significant performance degradation. This capacity is achieved through careful architectural design that allows for seamless adaptation to evolving demands. A scalable system can handle both increasing data input and more sophisticated machine learning models without hindering its responsiveness or accuracy.

Aspects of Scalability

Scalability in machine learning encompasses various dimensions, including both horizontal and vertical scaling. Understanding these different approaches is critical to architecting a system capable of meeting future demands.

- Horizontal Scaling: This approach involves distributing the workload across multiple interconnected servers. Each server handles a portion of the task, enabling parallel processing and increased throughput. This is often employed when dealing with massive datasets and high volumes of data ingestion. For example, a recommendation engine processing millions of user interactions could use a cluster of servers to handle individual user queries simultaneously, preventing performance bottlenecks.

- Vertical Scaling: This approach focuses on improving the resources of a single server to handle increased demands. It involves upgrading the hardware, such as adding more RAM or CPU cores, to accommodate larger models and more complex calculations. This is suitable for scenarios where the computational demands of a model or the dataset are relatively manageable. A model training process might benefit from upgrading the RAM and CPU of a single machine for faster processing if the model isn’t too large.
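The horizontal approach can be illustrated on a single machine. The following is a minimal sketch, not a production design: threads stand in for separate servers, and `split_into_shards`, `score_shard`, and `scale_out` are hypothetical names introduced only for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_shards(items, num_workers):
    """Assign items to workers round-robin so each gets a similar share."""
    return [items[i::num_workers] for i in range(num_workers)]


def score_shard(shard):
    """Hypothetical per-worker task: here, just square each value."""
    return [x * x for x in shard]


def scale_out(items, num_workers=4):
    """Process every shard in parallel and merge the partial results."""
    shards = split_into_shards(items, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(score_shard, shards))
    # Merge step: flatten the per-worker outputs into one sorted list.
    return sorted(value for part in partials for value in part)
```

Adding capacity in this model means raising `num_workers`. Vertical scaling would instead keep one worker and give it a faster CPU or more memory.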

Importance of Scalability in Machine Learning Applications

Scalability is paramount for machine learning applications because it ensures that the system can adapt to the ever-increasing volumes of data and the growing complexity of machine learning models. Without scalability, the system might become unresponsive or inaccurate, leading to a decline in user experience and potentially hindering the value derived from the application. For example, a fraud detection system that cannot scale to process the increasing number of transactions will fail to accurately identify fraudulent activities.

Metrics for Evaluating Scalability

Evaluating the scalability of a machine learning system requires using specific metrics. These metrics assess the system’s responsiveness, resource utilization, and ability to handle increasing workloads. Common metrics include throughput, latency, and resource consumption (CPU, memory, storage). Quantifiable metrics are essential for demonstrating the scalability of a system.

- Throughput: The rate at which the system can process requests or data points. A higher throughput indicates better scalability. For example, a system processing 1000 transactions per second has a higher throughput than one processing 500.

- Latency: The time it takes for the system to respond to a request or process a data point. Lower latency implies better responsiveness and scalability. For example, a system with a latency of 100 milliseconds is more responsive than one with a latency of 500 milliseconds.

- Resource Consumption: The amount of CPU, memory, and storage used by the system. Efficient resource utilization is crucial for scalability. For example, a system that serves a given load at 50% CPU utilization has more headroom to absorb growth than one that needs 90% for the same load.
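Throughput and latency can be collected with very little code. The sketch below assumes a synchronous `handler` callable (a stand-in for a model or service call). The helper names `measure` and `percentile` are illustrative and do not come from any particular monitoring library.

```python
import math
import time


def measure(handler, requests):
    """Run handler on each request and return (throughput, latencies)."""
    latencies = []
    start = time.perf_counter()
    for request in requests:
        t0 = time.perf_counter()
        handler(request)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    # Requests per second over the whole run.
    throughput = len(requests) / elapsed if elapsed > 0 else float("inf")
    return throughput, latencies


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]
```

Tail percentiles such as the 95th or 99th are usually more informative than the mean, because a small share of slow requests can dominate user experience.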

Comparison of Scaling Strategies

The choice between horizontal and vertical scaling depends on the specific requirements of the machine learning application.

| Scaling Strategy | Description | Advantages | Disadvantages |
|---|---|---|---|
| Horizontal Scaling | Distributing the workload across multiple servers. | High throughput, fault tolerance, and better ability to handle massive data volumes. | Increased complexity in system management, potential for data consistency issues. |
| Vertical Scaling | Improving the resources of a single server. | Simpler to implement, easier to manage. | Limited scalability, potential for bottlenecks if resources are insufficient. |

Data Handling and Storage Strategies

Effective data handling and storage are crucial for building scalable machine learning architectures. Robust systems must efficiently manage massive datasets, ensuring rapid access and retrieval for model training and inference. This section explores various strategies for storing and managing data, along with the different database technologies suitable for machine learning applications. Efficient data management is paramount for a scalable machine learning system.

Data handling strategies directly impact model performance and the overall system’s scalability. This section dives into the critical aspects of storing and managing massive datasets, from database technologies to data partitioning techniques.

Data Storage Technologies

Diverse database technologies cater to various machine learning needs. Choosing the right technology depends on factors such as data volume, velocity, variety, and the specific requirements of the machine learning tasks.

- Relational Databases (SQL): Relational databases, such as PostgreSQL and MySQL, are well-structured and offer strong ACID properties (Atomicity, Consistency, Isolation, Durability). They are suitable for structured data and support complex queries. However, they might not be optimal for handling extremely large datasets or unstructured data types.

- NoSQL Databases: NoSQL databases, including MongoDB and Cassandra, are designed to handle unstructured and semi-structured data. They are highly scalable and flexible, making them ideal for massive datasets and real-time applications. However, they may lack the sophisticated querying capabilities of relational databases.

- Cloud Storage Services: Cloud storage solutions like Amazon S3 and Google Cloud Storage are excellent for storing large volumes of data, often used as a staging area for data processing pipelines. They are highly scalable, cost-effective for large datasets, and offer robust data redundancy. These services often integrate well with other cloud-based machine learning platforms.

Data Partitioning and Sharding

Efficiently managing large datasets involves strategies like partitioning and sharding. These techniques distribute data across multiple storage units, enhancing scalability and performance.

- Data Partitioning: Partitioning divides a large dataset into smaller, more manageable parts. This improves query performance and reduces the load on individual storage units. Vertical partitioning separates data based on columns, while horizontal partitioning splits data based on rows.

- Data Sharding: Sharding further enhances scalability by distributing data across multiple servers. This approach is particularly useful for extremely large datasets. Sharding strategies can be based on various criteria, such as hashing, range-based partitioning, or consistent hashing.
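Of the sharding criteria mentioned above, consistent hashing is the least obvious, so a small sketch may help. It assumes shard names are plain strings and uses SHA-256 only as a stable hash. `HashRing` and its `replicas` parameter (the number of virtual points per shard) are illustrative choices, not a reference implementation.

```python
import bisect
import hashlib


def _hash(key):
    """Map a string to a stable integer position on the ring."""
    return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16)


class HashRing:
    def __init__(self, nodes, replicas=100):
        # Each node gets several virtual points to even out the distribution.
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def node_for(self, key):
        """Return the first node clockwise from the key's position."""
        index = bisect.bisect(self._points, _hash(key)) % len(self._ring)
        return self._ring[index][1]
```

The useful property is that adding a shard only moves keys onto the new shard, while every other key stays where it was. With plain `hash(key) % num_shards`, most keys would be reassigned.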

Optimizing Data Access and Retrieval

Efficient data access and retrieval are crucial for timely model training and inference. Techniques like indexing and caching can significantly speed up data access.

- Indexing: Creating indexes on frequently queried data columns significantly speeds up query performance. Indexes enable faster lookups and reduce the time needed to retrieve data. Choosing appropriate indexing strategies is crucial for optimal performance.

- Caching: Caching frequently accessed data in memory can dramatically reduce the time required for data retrieval. This technique is especially beneficial for data that is accessed repeatedly during model training or inference.
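The effect of an index can be observed directly with SQLite from the Python standard library. This is a small sketch on an in-memory database. The `events` table, its columns, and the index name are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, item_id INTEGER, score REAL)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [(u, i, 0.5) for u in range(100) for i in range(10)],
)


def query_plan(connection, sql):
    """Return SQLite's plan description for a query as one string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # The last column of each row holds the human-readable detail.
    return " ".join(row[-1] for row in rows)


lookup = "SELECT item_id FROM events WHERE user_id = 42"
plan_before = query_plan(conn, lookup)  # without an index: a table scan
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
plan_after = query_plan(conn, lookup)   # with the index: an index search
```

Printing `plan_before` and `plan_after` shows the planner switching from scanning the table to searching `idx_events_user`. The exact wording of the plan text varies between SQLite versions.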

Comparison of Data Storage Solutions

The following table summarizes the pros and cons of different data storage solutions for machine learning applications.

| Data Storage Solution | Pros | Cons |
|---|---|---|
| Relational Databases (SQL) | Structured data, ACID properties, complex queries | Limited scalability for massive datasets, not ideal for unstructured data |
| NoSQL Databases | Scalability, flexibility for unstructured data, high availability | Limited query capabilities, data consistency challenges |
| Cloud Storage Services | High scalability, cost-effectiveness, data redundancy | Potential for higher latency, need for careful data management |

Model Deployment and Serving

Deploying machine learning models into production environments is a crucial step for realizing the value of these models. A scalable deployment strategy ensures the model can handle increasing workloads and user demands efficiently. This involves choosing the right deployment method, optimizing model serving, and considering the role of cloud platforms. Successful deployment translates to reliable predictions and a positive user experience. Effective model deployment requires careful consideration of various factors.

These factors include the model’s complexity, the anticipated volume of requests, and the desired response time. The chosen deployment strategy must align with these factors, enabling the model to perform accurately and consistently under diverse operational conditions. Furthermore, a robust deployment strategy facilitates efficient model management, allowing for updates and maintenance without disrupting service.

Model Deployment Methods

Different deployment methods cater to varying needs and complexities. For instance, a simple model might be deployed directly onto a server, whereas a more intricate model might necessitate a dedicated containerized environment. Model deployment options include direct server deployment, containerization using Docker, and cloud-based platforms. Each method has its own strengths and weaknesses, impacting scalability and maintainability.

Containerization with Docker

Docker containers encapsulate the model and its dependencies, creating portable and reproducible deployments. This isolation minimizes dependency conflicts and keeps behavior consistent across environments. Because the model and its libraries are packaged into a single image, the same unit can be transferred to and run on different servers or cloud platforms.

Cloud Platforms for Model Deployment

Cloud platforms offer managed services for model deployment, significantly simplifying the process. These platforms often provide infrastructure, tools, and monitoring capabilities, freeing up resources for model development. Popular cloud platforms include Amazon SageMaker, Google Cloud AI Platform (since succeeded by Vertex AI), and Azure Machine Learning. These platforms offer various features, such as automated scaling, model monitoring, and integration with other cloud services.

Model Serving and Inference Optimization

Optimizing model serving and inference is crucial for efficient prediction generation. Techniques such as model quantization, where the numerical precision of weights and activations is reduced (for example, from 32-bit floats to 8-bit integers), can significantly improve inference speed, usually at the cost of a small loss in accuracy that should be measured. Another technique is caching frequently requested inputs or predictions, reducing processing time and enhancing responsiveness. Moreover, techniques like parallel inference processing allow for handling multiple requests simultaneously, increasing overall throughput.

This is especially relevant when dealing with a large volume of data and predictions.
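To make the quantization idea concrete, here is a minimal sketch of affine (scale plus offset) quantization on a plain list of floats. It is a teaching example under simplifying assumptions, not how any specific framework quantizes a model. `quantize` and `dequantize` are hypothetical helper names.

```python
def quantize(weights, num_bits=8):
    """Map floats to unsigned integer codes with an affine scheme."""
    lo, hi = min(weights), max(weights)
    levels = 2 ** num_bits - 1
    scale = (hi - lo) / levels or 1.0  # fall back to 1.0 for constant inputs
    quantized = [round((w - lo) / scale) for w in weights]
    return quantized, scale, lo


def dequantize(quantized, scale, lo):
    """Recover approximate floats from the integer codes."""
    return [q * scale + lo for q in quantized]
```

Each restored value differs from the original by at most about half of `scale`. That is the precision given up in exchange for smaller storage and cheaper integer arithmetic.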

Table of Model Deployment Platforms

| Deployment Platform | Key Features |
|---|---|
| Amazon SageMaker | Automated model training, hosting, and monitoring; seamless integration with AWS ecosystem; scalable infrastructure. |
| Google Cloud AI Platform | Managed environment for machine learning workflows; support for various model types; integration with Google Cloud services. |
| Azure Machine Learning | Comprehensive platform for building, deploying, and managing machine learning models; integrated with Azure ecosystem; robust monitoring and management tools. |
| Docker | Containerization for model deployment; consistent environment across different platforms; simplifies portability and reproducibility. |

System Design Patterns for Scalability

Effective machine learning systems require careful architectural design to ensure scalability and maintainability. This involves employing suitable design patterns that address the specific challenges of handling large datasets, complex models, and high volumes of requests. These patterns provide reusable solutions to common problems, promoting efficient resource utilization and system robustness. Common system design patterns, such as microservices, message queues, and caching, are instrumental in creating scalable machine learning architectures.

These patterns facilitate modularity, resilience, and performance optimization, crucial for handling the increasing complexity and demands of modern machine learning applications. Choosing the right pattern depends on the specific needs of the system, considering factors such as data volume, model complexity, and anticipated traffic.

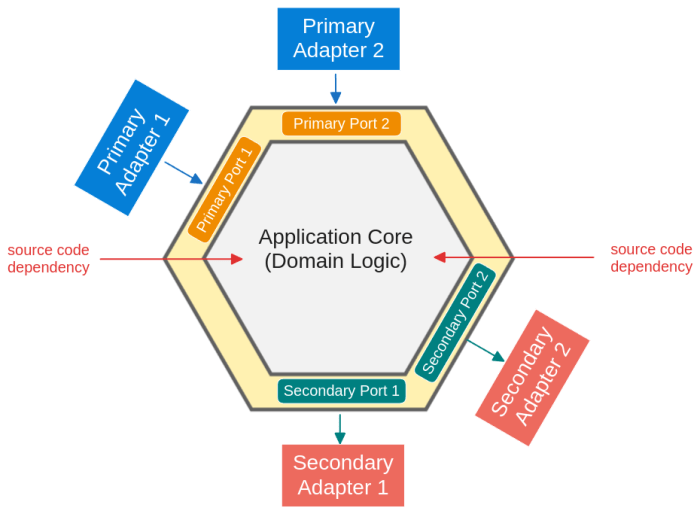

Microservices Architecture

Microservices architecture, a modular approach, allows breaking down a complex machine learning system into smaller, independent services. Each service focuses on a specific function, such as data preprocessing, model training, or prediction serving. This modularity facilitates independent scaling and deployment of different components. For example, a service dedicated to data ingestion can be scaled independently to handle increasing data volumes, while a separate service for model inference can be scaled to accommodate more requests.

This decentralized approach promotes resilience, as failure of one service does not necessarily bring down the entire system. Furthermore, each service can be built and maintained by different teams, fostering agility and faster development cycles.

Message Queue Pattern

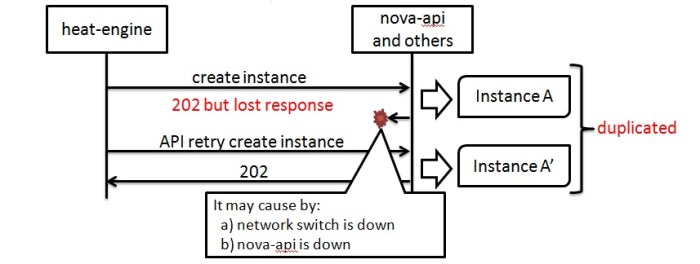

Message queues play a critical role in handling asynchronous tasks within a scalable machine learning system. They decouple components by allowing one component to send a request (e.g., a new dataset for training) to another without waiting for immediate response. This asynchronous communication pattern significantly improves system responsiveness and allows for parallel processing. A typical use case involves training a model using a new batch of data.

Instead of blocking the entire system, the data can be pushed into a queue, and the training process can proceed asynchronously. This decoupling also enhances fault tolerance; if a component fails during the training process, the rest of the system can continue functioning.
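The decoupling described above can be sketched in-process with the standard library. A real deployment would typically use a dedicated message broker. Here `queue.Queue` and a single worker thread stand in for it, and the "training step" is a placeholder that only computes a batch mean.

```python
import queue
import threading


def run_training_worker(jobs, results):
    """Consume batches until a None sentinel arrives."""
    while True:
        batch = jobs.get()
        if batch is None:
            break
        # Placeholder for a real training step: record the batch mean.
        results.append(sum(batch) / len(batch))


def submit_batches(batches):
    """Producer: enqueue batches without waiting for them to be processed."""
    jobs = queue.Queue()
    results = []
    worker = threading.Thread(target=run_training_worker, args=(jobs, results))
    worker.start()
    for batch in batches:
        jobs.put(batch)  # returns immediately; the worker picks it up later
    jobs.put(None)       # sentinel: no more work
    worker.join()
    return results
```

The producer never calls the training code directly. It only knows about the queue, which is what lets the two sides be scaled, restarted, or replaced independently.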

Caching Pattern

Caching significantly improves performance and reduces latency in machine learning systems. By storing frequently accessed data or results in a cache, subsequent requests can retrieve the data from the cache instead of querying the underlying data source, database, or model. This substantially reduces the load on the data source, improving the system’s responsiveness. For example, if a prediction service is frequently requested for the same data points, caching the results can drastically reduce latency.

The cache can be implemented using various technologies, such as Redis or Memcached, and can be designed to automatically expire cached data based on time or usage frequency.
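Time-based expiry can be sketched with an in-memory dictionary. This is only an illustration of the pattern. `TTLCache` and `get_or_compute` are hypothetical names, and a shared cache such as Redis would replace the dictionary in a multi-server setup.

```python
import time


class TTLCache:
    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = {}   # key -> (expiry time, value)

    def get_or_compute(self, key, compute):
        """Return a fresh cached value, or compute and store a new one."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = compute(key)
        self._entries[key] = (now + self._ttl, value)
        return value
```

A shorter TTL reduces the risk of serving stale predictions after a model update, at the price of more cache misses.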

Comparison of System Design Patterns

| Pattern | Advantages | Disadvantages |
|---|---|---|
| Microservices | Independent scaling, resilience, agility, faster development | Increased complexity in deployment and management, potential for communication overhead |
| Message Queue | Asynchronous communication, improved responsiveness, fault tolerance | Requires additional infrastructure, complexity in message routing and handling |
| Caching | Improved performance, reduced latency, reduced load on data sources | Increased memory usage, potential for stale data, cache invalidation challenges |

Choosing the Right Algorithms and Libraries

Selecting appropriate machine learning algorithms and libraries is crucial for building scalable models. The choice directly impacts the model’s performance, training time, and overall system efficiency. Carefully considering factors like data characteristics, model complexity, and available computational resources is essential. Optimized libraries provide tools and frameworks that streamline the development process and ensure scalability. Effective machine learning systems are built on a foundation of well-chosen algorithms and libraries.

Understanding the trade-offs between different options and the specific characteristics of your data is paramount. This allows you to leverage tools and frameworks that enhance efficiency and maintainability, leading to more robust and scalable solutions.

Considerations for Algorithm Selection

Choosing the right machine learning algorithm for a specific task depends on several key factors. The nature of the data, the desired prediction accuracy, and the computational resources available are critical considerations. For instance, linear models are often preferred for simpler datasets, whereas more complex algorithms like deep neural networks might be necessary for intricate patterns.

Libraries and Frameworks Optimized for Scalability

Several libraries and frameworks are specifically designed for building scalable machine learning models. These tools provide optimized implementations of algorithms, distributed computing capabilities, and robust management of large datasets. Examples include TensorFlow, PyTorch, and scikit-learn, each with strengths suited to different scenarios.

Performance Characteristics of Different Algorithms

The performance characteristics of various algorithms vary significantly. Linear models, such as logistic regression, generally offer faster training times and lower computational costs, making them a good first choice when the relationship in the data is simple. However, for complex, non-linear relationships, more sophisticated models like Support Vector Machines (SVMs) or deep neural networks may be necessary, despite their increased computational demands. Kernel SVMs in particular become expensive to train as the number of samples grows, so they fit medium-sized datasets better than very large ones. Choosing the right balance is vital.

Comparison of Popular Machine Learning Libraries

Different machine learning libraries provide distinct advantages in terms of scalability. For instance, TensorFlow excels in handling large-scale data and complex neural network architectures. PyTorch, on the other hand, offers a more flexible and dynamic programming experience, potentially useful for research and prototyping. Scikit-learn, known for its ease of use, is well-suited for smaller datasets and simpler models.

Performance Comparison Table

| Algorithm | Training Time (Estimated) | Scalability | Complexity | Use Cases |
|---|---|---|---|---|
| Logistic Regression | Fast | High | Low | Binary classification, prediction |
| Support Vector Machines (SVM) | Moderate | Moderate | Medium | Classification, regression |
| Decision Trees | Moderate | Moderate | Low | Classification, regression |
| Random Forest | Moderate | High | Medium | Classification, regression, ensemble methods |
| Gradient Boosting Machines (GBM) | Slow | High | High | Complex models, high accuracy |
| Deep Neural Networks (DNN) | Very Slow | High | High | Image recognition, natural language processing |

Note: Training times are estimations and vary depending on the dataset size and complexity. Scalability refers to the algorithm’s ability to handle increasing data volumes and model complexity.

Monitoring and Maintaining Scalable Systems

Maintaining a scalable machine learning architecture requires continuous monitoring and proactive maintenance to ensure optimal performance, stability, and resilience. This involves proactively identifying and addressing potential issues before they impact users or negatively affect the system’s overall efficiency. Regular monitoring and effective troubleshooting mechanisms are critical for long-term success.

Performance Monitoring Strategies

Effective monitoring of a scalable machine learning system necessitates the tracking of key performance indicators (KPIs). These KPIs provide insights into the system’s health, allowing for proactive identification of potential bottlenecks and performance degradation. Monitoring strategies should be designed to provide real-time feedback on critical metrics, enabling quick responses to emerging problems. This involves setting thresholds for acceptable performance levels, triggering alerts when these thresholds are breached, and providing clear visualizations of the data collected.

Monitoring Tools and Metrics

A variety of tools are available for monitoring the performance of a machine learning system. These tools allow for the collection and analysis of various metrics, facilitating a deep understanding of the system’s behavior. Crucially, these tools should be selected based on the specific needs of the architecture, encompassing factors such as scalability, ease of integration, and the type of metrics being monitored.

Examples of critical metrics include latency, throughput, resource utilization (CPU, memory, network), and error rates.

Latency, Throughput, and Resource Utilization Monitoring

Latency, or the time taken to process a request, is a critical metric in assessing system responsiveness. High latency can negatively impact user experience and operational efficiency. Throughput, measuring the number of requests processed per unit of time, signifies the system’s capacity to handle a load. Monitoring resource utilization, particularly CPU and memory, helps identify potential bottlenecks and prevent system crashes.

Monitoring tools should provide visualizations and alerts for exceeding predefined thresholds for these metrics.
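A threshold check of this kind reduces to a small comparison loop. The sketch below is illustrative only. The metric names and limits in `THRESHOLDS` are invented for the example and would come from service-level objectives in practice.

```python
# Hypothetical limits; real values depend on the service's objectives.
THRESHOLDS = {"p95_latency_ms": 250.0, "cpu_percent": 85.0, "error_rate": 0.01}


def check_thresholds(metrics, thresholds=THRESHOLDS):
    """Return one alert message per metric that exceeds its limit."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        # Metrics missing from this sample are skipped rather than alerted on.
        if value is not None and value > limit:
            alerts.append(f"{name}={value} exceeds {limit}")
    return alerts
```

For instance, `check_thresholds({"p95_latency_ms": 300.0, "cpu_percent": 40.0})` reports the latency breach and nothing else.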

System Stability and Resilience Strategies

Maintaining system stability and resilience is paramount for a scalable machine learning architecture. Strategies for achieving this include implementing robust error handling mechanisms, load balancing techniques, and redundant components. Monitoring tools should be configured to detect and report anomalies in the system’s behavior, such as unusual spikes in errors or resource consumption.

Troubleshooting and Issue Resolution

Troubleshooting in a large-scale system requires a systematic approach. A well-defined process should be in place for identifying the root cause of issues. This often involves using logs, monitoring data, and potentially using specialized debugging tools. Crucially, documentation and well-defined procedures are vital to facilitate rapid resolution of problems.

Monitoring Tool Comparison

The following table provides a comparative overview of common monitoring tools and their key features.

| Tool | Key Features | Strengths | Weaknesses |
|---|---|---|---|
| Prometheus | Open-source, highly customizable, supports multiple data sources, excellent for metrics collection and visualization. | Scalable, robust, open-source community support. | Requires some configuration knowledge. |
| Grafana | Open-source visualization tool, integrates with various data sources like Prometheus, allows for creating custom dashboards. | Intuitive dashboards, customizable visualizations. | Relies on data source for metrics collection. |
| Datadog | Cloud-based monitoring platform, offers comprehensive monitoring of various metrics, integrates with various services. | Wide range of integrations, comprehensive alerting capabilities. | Can be expensive for extensive use. |

Security Considerations in Scalable Architectures

Ensuring the security of machine learning systems is paramount, especially in scalable architectures. Protecting sensitive data, models, and communication channels is crucial to prevent unauthorized access, breaches, and malicious manipulation. Robust security measures are essential to maintain trust and reliability, preventing potential harm to individuals and organizations. Protecting machine learning systems from attacks requires a multi-faceted approach, addressing vulnerabilities at every stage, from data storage to model deployment.

A comprehensive security strategy is vital to maintain the integrity and confidentiality of the system.

Security Vulnerabilities in Machine Learning Systems

Machine learning systems, particularly those deployed in scalable environments, are susceptible to various security vulnerabilities. These vulnerabilities range from malicious data injection to model poisoning and unauthorized access. Compromised models can generate inaccurate or biased outputs, leading to detrimental consequences. Furthermore, poorly secured communication channels can expose sensitive information to eavesdropping and manipulation.

Protecting Data and Models

Robust data and model protection strategies are essential to prevent unauthorized access and modification. Data encryption, both at rest and in transit, is a fundamental security measure. Access controls, including role-based access management, are necessary to restrict access to sensitive data and models based on user roles and permissions. Regular security audits and vulnerability assessments are crucial for proactively identifying and addressing potential risks.

Securing Communication Channels

Securing communication channels in a distributed machine learning system is critical to prevent unauthorized access and manipulation. Employing secure protocols, such as HTTPS, is essential to protect data transmitted over networks. End-to-end encryption ensures that only authorized parties can access the data, even if the communication channel is compromised. Implementing intrusion detection systems and firewalls can further enhance security by monitoring network traffic and blocking malicious activity.

Security Best Practices for Machine Learning Systems

Implementing security best practices is crucial to mitigate risks and maintain the integrity of machine learning systems. Employing secure coding practices during model development, rigorous testing of models, and robust data validation are important to prevent vulnerabilities. Regular security updates and patches for libraries and frameworks help to address known security flaws. Finally, implementing a comprehensive incident response plan can help to mitigate the impact of security breaches.
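Data validation is the most code-shaped of these practices, so here is a minimal sketch. It assumes numeric features with known valid ranges. The function name `validate_features` and the schema layout (field name mapped to a `(low, high)` pair) are assumptions made for this example.

```python
import math


def validate_features(record, schema):
    """Return a list of problems; an empty list means the record is accepted."""
    problems = []
    for name, (low, high) in schema.items():
        value = record.get(name)
        # bool is a subclass of int in Python, so it is rejected explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            problems.append(f"{name}: missing or not numeric")
        elif math.isnan(value) or not low <= value <= high:
            problems.append(f"{name}: {value} outside [{low}, {high}]")
    # Fields that are not in the schema are reported instead of passed through.
    unexpected = sorted(set(record) - set(schema))
    problems.extend(f"{name}: unexpected field" for name in unexpected)
    return problems
```

Rejecting records at the boundary, before they reach training or inference, is one simple defense against the malicious data injection described earlier.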

Table of Potential Security Threats and Mitigation Strategies

| Potential Security Threat | Mitigation Strategy |
|---|---|
| Malicious Data Injection | Data validation and sanitization, input filtering, and robust data preprocessing techniques. |
| Model Poisoning | Secure model training environments, data integrity checks, and rigorous model evaluation. |
| Unauthorized Access | Strong access controls, encryption of sensitive data, and regular security audits. |
| Eavesdropping and Communication Interception | Secure communication protocols (e.g., TLS/SSL), end-to-end encryption, and network segmentation. |
| Denial-of-Service Attacks | Traffic filtering, load balancing, and intrusion detection systems. |

Fault Tolerance and Resilience

Designing fault-tolerant machine learning systems is crucial for ensuring reliable and consistent performance, especially in production environments. A system’s ability to withstand failures and continue operating is paramount, minimizing downtime and data loss. This involves implementing strategies that anticipate and mitigate potential issues, ensuring the system remains available and responsive even during unexpected events.

Strategies for Building Fault-Tolerant Systems

Robust machine learning systems require proactive strategies to handle failures. These strategies encompass various approaches, from redundancy techniques to automatic recovery mechanisms. Effective fault tolerance is vital for maintaining data integrity and preserving the system’s operational capacity in the face of diverse potential failures.

- Redundancy Techniques: Redundancy is a cornerstone of fault tolerance. Employing redundant components, such as multiple servers for data storage or multiple model replicas for prediction, ensures that if one component fails, the system can continue operating with minimal disruption. This approach is particularly important in distributed environments where failures are more likely.

- Distributed Systems Failure Handling: In a distributed machine learning system, failures can manifest in various ways. A critical element of designing fault-tolerant systems is identifying potential failure points and implementing mechanisms to gracefully handle failures. Techniques include monitoring system health, detecting failed components, and triggering failover procedures to maintain system operation.

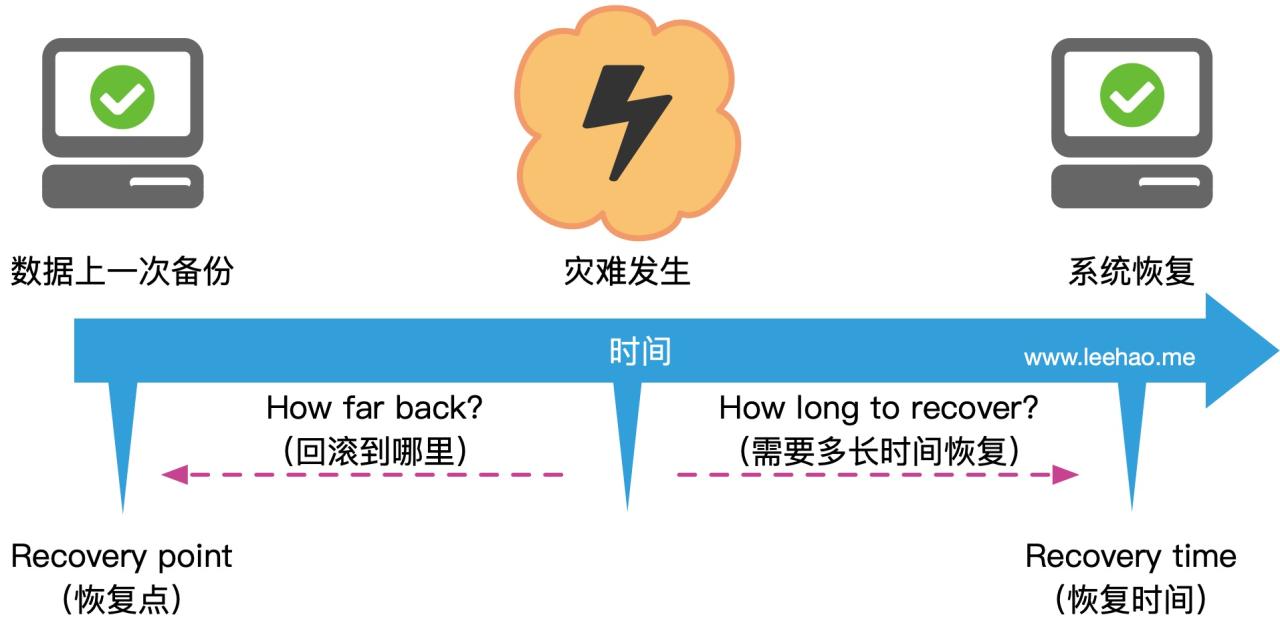

- Automatic Recovery from Failures: Designing automatic recovery mechanisms is essential to ensure that the system can resume operations as quickly as possible after a failure. This involves establishing clear procedures for identifying and addressing failures, triggering recovery actions, and verifying the restoration of system functionality. This process should be designed to minimize downtime and data loss.

Redundancy Techniques for System Availability

Redundancy ensures the system’s continuous operation despite component failures. Different forms of redundancy exist, each with specific advantages and disadvantages.

- Data Replication: Replicating data across multiple storage locations allows the system to access data even if one storage location fails. Techniques like mirroring or distributed databases facilitate data redundancy.

- Model Replication: Replicating machine learning models across multiple servers enables the system to continue predictions even if one server fails. This approach is crucial for high-throughput prediction systems.

- Component Redundancy: Implementing redundant hardware components, such as servers or network interfaces, provides a failover mechanism. If a component fails, the system can seamlessly switch to the backup component.

Techniques for Handling Failures in a Distributed Environment

Distributed machine learning environments introduce unique challenges in fault tolerance. Robust strategies are required to handle failures effectively.

- Monitoring System Health: Continuously monitoring system health, including resource utilization, network connectivity, and component status, allows for early detection of potential failures.

- Fault Detection and Isolation: Developing mechanisms to detect and isolate failures is critical. This includes implementing monitoring tools and algorithms that identify anomalies and pinpoint the source of the problem.

- Failover Mechanisms: Implementing failover mechanisms that automatically switch to backup components when failures occur is essential for maintaining system availability. These mechanisms should be designed to minimize disruption and data loss.
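The failover idea can be sketched for model serving as follows: try each model replica in order and return the first successful prediction. This is an illustrative sketch only; `predict_with_failover`, `AllReplicasFailed`, and the two toy replicas are hypothetical names, and a production system would typically delegate this to a load balancer or service mesh.

```python
from typing import Callable, List, Sequence

Replica = Callable[[Sequence[float]], float]


class AllReplicasFailed(RuntimeError):
    """Raised when no replica could serve the request."""


def predict_with_failover(replicas: Sequence[Replica],
                          features: Sequence[float]) -> float:
    """Return the prediction from the first replica that responds."""
    errors: List[str] = []
    for index, replica in enumerate(replicas):
        try:
            return replica(features)
        except Exception as exc:  # any failure triggers a switch to the next replica
            errors.append(f"replica {index}: {exc}")
    raise AllReplicasFailed("; ".join(errors))


def broken_replica(features: Sequence[float]) -> float:
    """Stand-in for a replica on an unreachable server."""
    raise ConnectionError("node unreachable")


def healthy_replica(features: Sequence[float]) -> float:
    """Stand-in for a working replica; the 'model' is just the mean."""
    return sum(features) / len(features)


if __name__ == "__main__":
    print(predict_with_failover([broken_replica, healthy_replica], [1.0, 2.0, 3.0]))
```

Collecting the per-replica errors before raising keeps the failure diagnosable, which supports the fault isolation step described above.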

Automatic Recovery from System Failures

Effective recovery from failures is crucial for minimizing downtime and maintaining data integrity.

- Rollback Strategies: Implementing rollback strategies allows the system to revert to a previous stable state if a failure occurs. This helps to restore system functionality without significant data loss.

- Restart Procedures: Developing and implementing procedures for restarting failed components or the entire system is critical. These procedures should be designed to minimize downtime and data loss.

- Data Consistency Restoration: Ensuring data consistency after a failure is crucial. Implementing mechanisms to recover and reconcile data from different components is essential to maintain data integrity and prevent data corruption.
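Rollback and restart can be combined through checkpointing: the job periodically saves its state, and a restarted job resumes from the last saved state instead of starting over. The sketch below assumes a single-process training loop with a JSON-serializable state. The function names, the `fail_at` switch used to simulate a crash, and the toy weight update are all hypothetical.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    """Write the state to a temp file, then atomically swap it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
        handle.flush()
        os.fsync(handle.fileno())
    # os.replace is atomic, so a crash never leaves a half-written checkpoint.
    os.replace(tmp_path, path)


def load_checkpoint(path: str, default: dict) -> dict:
    """Return the last saved state, or a copy of `default` on first start."""
    try:
        with open(path) as handle:
            return json.load(handle)
    except FileNotFoundError:
        return dict(default)


def train(path: str, total_steps: int, fail_at: Optional[int] = None) -> dict:
    """Toy training loop that resumes from the last checkpoint."""
    state = load_checkpoint(path, {"step": 0, "weight": 0.0})
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated crash")
        state = {"step": state["step"] + 1, "weight": state["weight"] + 0.5}
        save_checkpoint(path, state)
    return state
```

Calling `train` again with the same path after a crash picks up at the last completed step, so only the work since the last checkpoint is lost.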

Fault Tolerance Strategies

This table illustrates different fault tolerance strategies and their associated benefits.

| Strategy | Description | Benefits |
|---|---|---|
| Data Replication | Replicating data across multiple storage locations | High availability, data redundancy |
| Model Replication | Replicating models across multiple servers | High throughput, fault tolerance for prediction |
| Component Redundancy | Employing redundant hardware components | Failover capability, enhanced system reliability |

Cost Optimization Strategies

Effective cost optimization is crucial for the long-term sustainability and success of any machine learning project. Minimizing resource consumption while maintaining system performance and scalability is a key challenge in building robust and economically viable machine learning architectures. This involves strategic choices in resource allocation, cloud utilization, and algorithm selection.

Careful planning and implementation of cost optimization strategies keep the project within its budget. In practice, this means leveraging cloud services effectively, employing efficient algorithms, and proactively managing resource utilization. The strategies and examples in this section are intended to guide the development of a cost-conscious machine learning architecture.

Cloud Cost Optimization Techniques for Machine Learning Systems

Cloud platforms offer a range of tools and services for managing and optimizing costs associated with machine learning workloads. Leveraging these features can significantly reduce expenses.

- Spot Instances: Spot instances are a cost-effective alternative to on-demand instances. They draw on unused capacity in the cloud provider's infrastructure, which can result in substantial savings, particularly for batch processing and other fault-tolerant tasks with flexible timing. However, the provider can reclaim instances at short notice when it needs the capacity back, so jobs require checkpointing, careful scheduling, and monitoring of instance availability.

- Reserved Instances: Reserved instances offer a significant discount on on-demand pricing for consistent use of specific instance types. This is beneficial for workloads with predictable and consistent requirements. The discount depends on the duration of the commitment and the chosen instance type. Long-term commitments provide the greatest savings.

- Preemptible Instances: Preemptible instances are Google Cloud's counterpart to spot instances: discounted spare capacity that the provider may reclaim at any time. They are a suitable choice for non-critical tasks where interruptions are acceptable, and such tasks need to be designed with the possibility of interruption in mind.

- Optimized Instance Types: Selecting the appropriate instance type is crucial. Consider factors like the required compute power, memory, and network bandwidth. Selecting under-provisioned instances can lead to performance bottlenecks, while over-provisioning is costly. Thorough analysis of resource requirements is essential.

- Storage Optimization: Choosing cost-effective storage solutions, such as Amazon S3 Glacier or S3 Glacier Deep Archive for infrequently accessed data, can significantly reduce storage costs, at the price of slower retrieval. Tiered storage, which moves data between storage tiers based on access frequency, is also effective.
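Whether interruptible capacity actually saves money depends on how much work has to be repeated after interruptions. The following sketch makes that arithmetic explicit. All hourly rates and the rework fraction are made-up illustrative values, not real provider prices, and `job_cost` and `savings_fraction` are hypothetical helper names.

```python
def job_cost(compute_hours: float, hourly_rate: float,
             rework_fraction: float = 0.0) -> float:
    """Cost of a job, inflating compute hours by work repeated after interruptions."""
    if compute_hours < 0 or hourly_rate < 0 or rework_fraction < 0:
        raise ValueError("inputs must be non-negative")
    return compute_hours * (1.0 + rework_fraction) * hourly_rate


def savings_fraction(baseline_cost: float, alternative_cost: float) -> float:
    """Fraction of the baseline cost saved by the alternative (negative if it costs more)."""
    if baseline_cost <= 0:
        raise ValueError("baseline_cost must be positive")
    return 1.0 - alternative_cost / baseline_cost


if __name__ == "__main__":
    # Hypothetical prices: 3.00 per hour on demand, 1.00 per hour interruptible,
    # with 20% of the work assumed to be redone after interruptions.
    on_demand = job_cost(100, 3.00)
    interruptible = job_cost(100, 1.00, rework_fraction=0.20)
    print(f"on-demand: {on_demand:.2f}, interruptible: {interruptible:.2f}")
    print(f"savings: {savings_fraction(on_demand, interruptible):.0%}")
```

Under this simple model, interruptible capacity remains cheaper as long as `(1 + rework_fraction) * interruptible_rate` stays below the on-demand rate, which is why frequent checkpointing matters for these workloads.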

Strategies for Optimizing Resource Allocation

Efficient resource allocation is fundamental to cost-effective machine learning.

- Automated Scaling: Use the cloud provider's automated scaling capabilities to dynamically adjust resources based on demand. This prevents over-provisioning during periods of low activity and ensures sufficient capacity during peak usage. Scaling policies should be monitored and adjusted regularly to keep resource utilization close to the target.

- Efficient Algorithm Selection: Choosing algorithms with lower computational requirements can reduce processing time and resource consumption. Consider factors like model complexity, data size, and available computational resources. Algorithms with fewer parameters or less intensive calculations can be more cost-effective, especially for large-scale models.

- Data Compression and Caching: Compressing data and caching frequently accessed data can reduce storage requirements and improve access speeds, reducing the overall cost of storage and computation.
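To make the automated scaling item concrete, the sketch below computes a replica count with a proportional rule modeled on the one documented for the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current replicas × current metric / target metric)). The function name, the default bounds, and the use of CPU utilization percentages are illustrative assumptions; real autoscalers add tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to observed vs. target utilization."""
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    # Clamp so the system neither scales to zero nor grows without bound.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Four replicas running at 90% utilization against a 60% target.
    print(desired_replicas(4, current_utilization=90, target_utilization=60))
```

The upper bound doubles as a cost guard: it caps spending even if a traffic spike or a faulty metric would otherwise request far more capacity.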

Examples of Cost-Saving Strategies in Machine Learning Systems

Practical examples illustrate the impact of cost-saving strategies.

- Case Study 1: A company using spot instances for training models during off-peak hours saved 40% on training costs compared to on-demand instances. This required careful scheduling of training jobs and robust error handling in case of instance termination.

- Case Study 2: By migrating infrequently accessed data to a lower-cost storage tier, a company reduced storage costs by 25% without compromising data availability for frequently accessed data.

Cost Optimization Techniques

This table summarizes key cost optimization techniques for machine learning systems.

| Technique | Description | Benefits |
|---|---|---|
| Spot Instances | Utilize unused cloud capacity for cost-effective training. | Significant cost savings for batch jobs. |
| Reserved Instances | Pre-purchase instances at a discounted rate for consistent usage. | Significant cost savings for predictable workloads. |
| Preemptible Instances | Use low-cost instances for non-critical tasks. | Cost-effective option for less critical workloads. |
| Automated Scaling | Dynamically adjust resources based on demand. | Avoids over-provisioning and optimizes resource utilization. |
| Efficient Algorithm Selection | Choose algorithms with lower computational requirements. | Reduces processing time and resource consumption. |
| Data Compression/Caching | Reduce storage needs and improve access speeds. | Decreases storage and computation costs. |

Case Studies of Scalable Machine Learning Systems

Real-world applications of machine learning often demand scalability to handle massive datasets and complex models. Examining successful implementations provides valuable insights into the design choices, challenges, and lessons learned in building scalable machine learning systems. These case studies highlight best practices and demonstrate how these principles translate into practical solutions.

Examples of Real-World Applications

Numerous industries leverage scalable machine learning systems. E-commerce platforms, for instance, utilize these systems for personalized recommendations, fraud detection, and inventory management. Social media platforms employ them for content moderation, targeted advertising, and user engagement analysis. Financial institutions rely on them for risk assessment, algorithmic trading, and customer churn prediction. Healthcare organizations use them for disease diagnosis, drug discovery, and personalized treatment plans.

These applications, and many others, demand systems that can handle large volumes of data and, in many cases, execute complex models in real time.

Design Choices in Scalable Applications

Several crucial design choices are critical for building scalable machine learning systems. These include distributed computing frameworks, such as Apache Spark, for parallel data processing. Choosing the right storage solutions, such as cloud-based object storage, is also paramount for handling massive datasets. Furthermore, optimized model deployment strategies, such as containerization using Docker and orchestration with Kubernetes, are necessary for efficient model serving.

Caching frequently requested results and keeping hot data in in-memory data structures reduces database load and can dramatically improve performance. These design choices are not isolated; they work as integrated components of a comprehensive architecture.
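As a small illustration of in-memory caching for model serving, the sketch below memoizes predictions with Python's standard `functools.lru_cache`. The toy linear model, its weights, and the call counter are hypothetical stand-ins for an expensive model or database lookup.

```python
from functools import lru_cache
from typing import Tuple

# Counts calls to the underlying model so the cache's effect is observable.
MODEL_CALLS = {"count": 0}


def expensive_model(features: Tuple[float, ...]) -> float:
    """Stand-in for a costly inference call (here a fixed linear model)."""
    MODEL_CALLS["count"] += 1
    weights = (0.5, 0.25, 0.25)
    return sum(w * x for w, x in zip(weights, features))


@lru_cache(maxsize=1024)
def cached_predict(features: Tuple[float, ...]) -> float:
    """Serve repeated requests from memory; arguments must be hashable (a tuple)."""
    return expensive_model(features)


if __name__ == "__main__":
    cached_predict((1.0, 2.0, 3.0))
    cached_predict((1.0, 2.0, 3.0))  # second call is answered from the cache
    print(cached_predict.cache_info())
```

A cache like this only helps when identical inputs recur, and it must be cleared (`cached_predict.cache_clear()`) whenever a new model version is deployed so stale predictions are not served.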

Challenges Encountered and Solutions

Teams developing scalable machine learning systems encounter recurring challenges. One common issue is managing the sheer volume of data. Solutions include data partitioning, distributed storage, and optimized data pipelines. Another significant challenge is ensuring real-time performance for applications like fraud detection. Addressing this involves deploying models on specialized hardware, such as GPUs, and implementing efficient inference mechanisms.
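Data partitioning is often implemented by hashing a record key, so that every record with the same key lands on the same partition. The sketch below is a minimal version of that idea. The function names and the `user_id` field are hypothetical, and `hashlib` is used instead of Python's built-in `hash` because the latter is randomized per process for strings.

```python
import hashlib
from typing import Dict, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition index that is stable across processes and runs."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition_records(records: List[Dict], key_field: str,
                      num_partitions: int) -> List[List[Dict]]:
    """Group records into partitions by the hash of their key field."""
    partitions: List[List[Dict]] = [[] for _ in range(num_partitions)]
    for record in records:
        index = partition_for(str(record[key_field]), num_partitions)
        partitions[index].append(record)
    return partitions


if __name__ == "__main__":
    events = [{"user_id": f"user-{i % 5}", "amount": i} for i in range(20)]
    for index, part in enumerate(partition_records(events, "user_id", 4)):
        print(index, len(part))
```

Plain modulo hashing reshuffles most keys when the number of partitions changes; systems that resize often tend to use consistent hashing instead, which is outside the scope of this sketch.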

Maintaining model accuracy and preventing performance degradation over time requires continuous monitoring and retraining. Solutions involve careful model selection, regular data updates, and robust monitoring dashboards.
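Continuous monitoring can be as simple as tracking accuracy over a sliding window of recent labeled predictions and flagging the model for retraining when it drops below a threshold. The sketch below illustrates that idea; the class name `AccuracyMonitor`, the window size, and the threshold are hypothetical choices, and it assumes ground-truth labels eventually become available.

```python
from collections import deque
from typing import Deque, Optional


class AccuracyMonitor:
    """Sliding-window accuracy tracker that signals when retraining is due."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        self.outcomes: Deque[bool] = deque(maxlen=window)  # oldest results drop off
        self.threshold = threshold

    def record(self, prediction, label) -> None:
        """Store whether the latest prediction matched its true label."""
        self.outcomes.append(prediction == label)

    def accuracy(self) -> Optional[float]:
        """Accuracy over the current window, or None before any data arrives."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        """True once the window is full and accuracy is below the threshold."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.threshold


if __name__ == "__main__":
    monitor = AccuracyMonitor(window=4, threshold=0.75)
    for prediction, label in [(1, 1), (0, 0), (1, 0), (0, 1)]:
        monitor.record(prediction, label)
    print(monitor.accuracy(), monitor.needs_retraining())
```

Waiting for a full window before raising the flag avoids triggering retraining on the first few unlucky predictions after a deployment.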

Lessons Learned from Case Studies

Case studies provide valuable lessons on designing and implementing scalable machine learning systems. They highlight the importance of meticulous planning and the need for iterative development. Careful consideration of infrastructure, data pipelines, and model deployment is crucial. Furthermore, choosing the appropriate tools and technologies for the specific task is essential for optimal performance and cost-effectiveness. Finally, robust monitoring and maintenance are vital for ensuring continuous operation and performance.

Table Summarizing Key Features of Different Case Studies

| Case Study | Industry | Scalability Challenge | Solution | Key Lessons |
|---|---|---|---|---|
| Netflix Recommendation Engine | Media streaming | Handling massive user data and diverse content | Distributed computing frameworks, optimized data pipelines, and real-time recommendation algorithms | Prioritizing efficient data processing and model serving is critical. |
| Google Search | Information Retrieval | Querying and processing billions of web pages | Distributed indexing, parallel processing, and specialized hardware | Scalability is achieved through sophisticated distributed systems. |
| Fraud Detection at a Major Bank | Finance | Real-time detection of fraudulent transactions | Low-latency model deployment, GPU acceleration, and high-availability infrastructure | Real-time performance demands optimized infrastructure and algorithms. |

Summary

In conclusion, building a scalable machine learning architecture is a multifaceted endeavor requiring careful consideration of various factors. This guide has presented a comprehensive overview of the key stages involved, from data management to deployment and ongoing maintenance. By understanding and applying the strategies discussed, developers can create powerful, resilient, and cost-effective machine learning systems capable of handling ever-growing data volumes and sophisticated models.

FAQ

What are the key differences between horizontal and vertical scaling?

Horizontal scaling involves adding more machines to a system, distributing the workload across multiple nodes. Vertical scaling, on the other hand, focuses on increasing the resources of a single machine, such as processing power or memory.

What are some common security vulnerabilities in machine learning systems?

Potential vulnerabilities include poisoned data, model inversion attacks, and insecure access to models and data. Protecting these areas is crucial for maintaining system integrity.

How do you choose the right machine learning algorithms for scalability?

The choice depends on factors like dataset size, model complexity, and desired performance. Algorithms with lower computational complexity tend to be more scalable.

What are some cost-effective strategies for managing resources in a scalable machine learning architecture?

Optimizing cloud resource utilization, leveraging serverless computing where appropriate, and employing efficient data storage techniques are key cost-saving measures.