Containerizing a legacy application begins with acknowledging the inherent complexity of these systems. Legacy applications are often monolithic, burdened by accumulated technical debt, and crucial to business operations, and they are frequently characterized by intricate dependencies, outdated technologies, and limited documentation. Successful containerization therefore calls for a methodical approach, starting with a thorough assessment and planning phase.

This guide will explore the critical steps involved in transforming these legacy systems into containerized environments. We will examine the selection of appropriate containerization technologies, the necessary application refactoring techniques, and the intricacies of building, testing, and deploying container images. Furthermore, we will delve into crucial aspects like networking, data management, security, and monitoring, ensuring a holistic understanding of the containerization process.

The goal is to provide a pragmatic framework for migrating legacy applications to modern, scalable, and manageable containerized infrastructures.

Understanding Legacy Applications

Legacy applications, often characterized by their age and the technologies they employ, present unique challenges when considering containerization. These applications, critical to many businesses, have evolved over time, accumulating dependencies and complexities that can hinder modern deployment strategies. Understanding their inherent characteristics and the motivations behind containerizing them is crucial for a successful migration.

Common Characteristics of Legacy Applications Challenging Containerization

Several factors contribute to the difficulty of containerizing legacy applications. These challenges often stem from the application’s design, dependencies, and operational requirements.

- Monolithic Architecture: Many legacy applications are monolithic, meaning all functionalities are bundled into a single, large codebase. This can make it difficult to isolate components for containerization and scale individual services independently. For instance, modifying a small part of the application may require redeploying the entire monolith, leading to downtime and resource inefficiency.

- Complex Dependencies: Legacy applications frequently rely on a multitude of dependencies, including specific versions of operating systems, libraries, and middleware. These dependencies can be difficult to replicate in a containerized environment, requiring careful planning and potentially significant refactoring.

- Lack of Documentation: Over time, documentation for legacy applications can become outdated or non-existent. This lack of documentation complicates understanding the application’s architecture, dependencies, and operational requirements, making containerization more challenging. Reverse engineering may be necessary to understand the application’s behavior.

- Stateful Operations: Some legacy applications rely on storing data locally or on shared storage, making them difficult to scale and manage in a containerized environment. Container orchestration platforms often favor stateless applications, which are easier to scale and manage.

- Security Vulnerabilities: Legacy applications may contain security vulnerabilities due to outdated technologies and lack of security updates. Containerization can help mitigate these risks by providing a more isolated environment, but it does not eliminate the vulnerabilities themselves.

- Performance Bottlenecks: The performance of legacy applications can be constrained by the underlying infrastructure and design choices. Containerization can help improve performance by providing a more efficient resource allocation, but it may not solve all performance issues.

Examples of Legacy Application Types and Technologies

Legacy applications come in various forms, each employing different technologies and architectures. Understanding these different types is essential for tailoring the containerization approach.

- Monolithic Applications: These applications are characterized by a single, large codebase.

- Example: A large e-commerce platform built on Java EE (now Jakarta EE) running on an application server like JBoss or WebSphere, using a relational database such as Oracle or IBM DB2.

- Technologies: Java, .NET Framework, COBOL, C++, relational databases (e.g., Oracle, SQL Server, MySQL), application servers (e.g., WebLogic, Tomcat, JBoss).

- Client-Server Applications: These applications typically involve a client application communicating with a server application over a network.

- Example: A desktop application built with Visual Basic communicating with a database server like Microsoft SQL Server.

- Technologies: Visual Basic, Delphi, C++, client-server protocols (e.g., TCP/IP), database servers (e.g., SQL Server, Oracle).

- Mainframe Applications: These applications are designed to run on mainframe computers, often using legacy programming languages.

- Example: A financial transaction processing system written in COBOL running on an IBM mainframe.

- Technologies: COBOL, Assembler, JCL, VSAM, mainframe operating systems (e.g., z/OS).

- Web Applications: Early web applications built with older web technologies.

- Example: A website built with PHP 5 and using a MySQL database.

- Technologies: PHP, ASP, Perl, CGI, older versions of JavaScript, relational databases (e.g., MySQL, PostgreSQL).

Typical Business Reasons for Containerizing Legacy Applications

Containerizing legacy applications offers several business benefits, making it an attractive option for organizations seeking to modernize their IT infrastructure. These reasons often drive the decision to undertake such projects.

- Improved Resource Utilization: Containerization allows for more efficient use of server resources. Containers share the host operating system’s kernel, reducing overhead compared to virtual machines. This can lead to significant cost savings, especially in cloud environments where resources are often billed by usage.

- Increased Scalability: Containers can be easily scaled up or down based on demand. Container orchestration platforms like Kubernetes automate the process of deploying, scaling, and managing containers, allowing for greater flexibility and responsiveness to changing business needs. For example, during peak shopping seasons, an e-commerce application can automatically scale up its containerized web servers to handle increased traffic.

- Enhanced Portability: Containers provide a consistent runtime environment across different platforms. This portability simplifies application deployment and migration across various environments, including on-premises data centers, public clouds, and hybrid cloud setups.

- Simplified Deployment and Management: Containerization streamlines the deployment process. Containers package the application and its dependencies into a single unit, making it easier to deploy and manage. Container orchestration tools further simplify management tasks, such as updates, rollbacks, and monitoring.

- Reduced Operational Costs: By improving resource utilization, automating deployment, and simplifying management, containerization can significantly reduce operational costs. This includes lower infrastructure costs, reduced IT staff workload, and faster time to market for new features and updates.

- Improved Security: Containerization can enhance application security by isolating applications from each other and the underlying host operating system. This reduces the attack surface and makes it more difficult for attackers to compromise the system. However, it’s important to implement security best practices within the container environment as well.

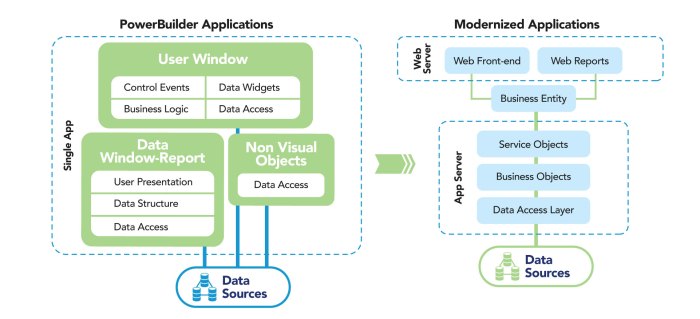

- Facilitating Modernization: Containerization can be a stepping stone towards more modern application architectures, such as microservices. By containerizing legacy applications, organizations can begin to break them down into smaller, more manageable components, paving the way for future modernization efforts.

Assessment and Planning

Containerizing a legacy application requires a methodical approach, beginning with a thorough assessment to determine feasibility and culminating in a detailed migration plan. This process minimizes risks and maximizes the chances of a successful transition, ensuring the application’s continued operation and potential for modernization. The assessment phase is crucial for understanding the application’s intricacies, dependencies, and resource needs.

Suitability Evaluation

The initial step involves evaluating the legacy application’s suitability for containerization. This assessment considers various factors, including the application’s architecture, dependencies, and operational environment. Determining the application’s characteristics helps to decide whether containerization is a viable approach.

- Application Architecture: Monolithic applications, while often challenging, can be containerized. However, applications designed with microservices architecture are generally better suited for containerization, as their individual components are already logically separated. For example, a monolithic Java application might be containerized as a single unit, while a microservices-based application can have each service running in its own container.

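- Dependencies: Identify all external dependencies, such as databases, message queues, and external APIs. Containerization can simplify dependency management by packaging libraries and runtimes with the application, while backing services such as databases typically run in their own containers or remain external.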

- Operating System Compatibility: Determine the operating system requirements of the application. This helps select the appropriate base image for the container. Applications originally designed for older operating systems might require specific container images or compatibility layers.

- Resource Consumption: Analyze the application’s resource usage (CPU, memory, disk I/O, network) to ensure efficient container resource allocation. This helps prevent performance bottlenecks and ensure the application can run smoothly within the containerized environment.

- Application State: Consider the application’s state management. Stateless applications are generally easier to containerize than stateful ones, as state can be managed outside the container. Stateful applications require careful consideration of data persistence and replication strategies.

Dependency and Resource Analysis

A comprehensive analysis of application dependencies and resource requirements is essential for effective containerization. This analysis involves utilizing various tools and techniques to gain a detailed understanding of the application’s operational characteristics. The goal is to identify all dependencies, resource consumption patterns, and potential bottlenecks.

- Dependency Analysis Tools: Tools such as `ldd` (Linux), Dependency Walker (Windows), and specialized dependency scanners can be used to identify the libraries, shared objects, and other external components that the application relies on. These tools help create a complete dependency graph.

- Resource Monitoring Tools: Monitoring tools, such as `top`, `htop`, `perf` (Linux), Task Manager (Windows), and third-party application performance monitoring (APM) solutions, are used to track CPU, memory, disk I/O, and network usage. These tools provide insights into the application’s resource consumption patterns under various workloads.

- Profiling Tools: Profiling tools, such as `gprof` (Linux), Visual Studio Profiler (Windows), and Java profilers (e.g., JProfiler, YourKit), are used to identify performance bottlenecks within the application code. These tools can pinpoint areas where the application spends the most time and resources.

- Network Analysis Tools: Network analysis tools, such as `tcpdump`, Wireshark, and network monitoring software, are used to analyze network traffic patterns. These tools can help identify network dependencies, communication protocols, and potential network bottlenecks.

- Static Code Analysis: Static code analysis tools can be used to examine the application’s source code for potential vulnerabilities, performance issues, and adherence to coding standards. These tools can identify potential issues early in the containerization process.
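As a rough illustration of the dependency-analysis step, the following Python sketch runs `ldd` against a binary and separates resolved libraries from missing ones. The function names are hypothetical, and the sketch assumes a Linux host where `ldd` is on the PATH and prints its usual `name => path (address)` format.

```python
import subprocess


def parse_ldd_output(text):
    """Split ldd output into ({library: resolved path}, [missing libraries])."""
    resolved, missing = {}, []
    for line in text.splitlines():
        line = line.strip()
        if "=>" not in line:
            # Lines without "=>" (vDSO, dynamic loader, "statically linked") are skipped.
            continue
        name, _, target = line.partition("=>")
        name, target = name.strip(), target.strip()
        if target.startswith("not found"):
            missing.append(name)
        elif target.startswith("("):
            # Some ldd versions print "name =>  (0x...)" for virtual objects.
            continue
        else:
            # Drop the trailing load address, e.g. " (0x00007f...)".
            resolved[name] = target.split(" (")[0]
    return resolved, missing


def list_shared_libraries(binary_path):
    """Run ldd on a binary (assumes ldd is installed) and parse the result."""
    result = subprocess.run(
        ["ldd", binary_path], capture_output=True, text=True, check=True
    )
    return parse_ldd_output(result.stdout)
```

Libraries reported as missing, or resolved to paths outside the standard system directories, are likely candidates for explicit installation in the container image.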

Migration Plan for a Web Application (Example)

The migration plan should be tailored to the specific type of legacy application. As an example, consider migrating a legacy web application written in PHP, using a MySQL database and running on an Apache web server. This plan outlines the steps, considerations, and potential challenges involved.

- Application Packaging: Create a Dockerfile that defines the container image. The Dockerfile would typically include:

- A base image (e.g., `php:7.4-apache` or `ubuntu:20.04`).

- Installation of necessary PHP extensions (e.g., `mysqli`, `curl`).

- Copying the application code into the container.

- Configuring the Apache web server (e.g., virtual hosts).

- Defining environment variables for database connection details.

Example Dockerfile snippet:

FROM php:7.4-apache

RUN apt-get update && apt-get install -y --no-install-recommends default-mysql-client && rm -rf /var/lib/apt/lists/* && docker-php-ext-install mysqli pdo pdo_mysql

COPY . /var/www/html

COPY 000-default.conf /etc/apache2/sites-available/000-default.conf

- Database Migration: Consider the database migration strategy.

- Data Migration: Export the database data from the legacy MySQL server. Use tools like `mysqldump` to create a SQL dump of the database. Import the data into a containerized MySQL instance (e.g., using `mysql:5.7` or `mysql:8.0` Docker image). Consider the size of the database and the downtime requirements when choosing a migration strategy.

For example, a large database might require a more sophisticated approach, such as using replication or a staged migration.

- Connection Configuration: Configure the PHP application within the container to connect to the containerized MySQL database. This often involves setting environment variables or modifying the application’s configuration files to specify the database host, port, username, and password.

- Network Configuration: Configure the network for container communication.

- Container Networking: Use Docker networks to enable communication between the application container and the database container. Create a Docker network (e.g., `docker network create my-network`) and attach both containers to it.

- Port Mapping: Expose the necessary ports on the application container (e.g., port 80 for HTTP). Use Docker’s port mapping feature (e.g., `-p 8080:80`) to map the container’s ports to the host machine’s ports.

- Testing and Validation: Thoroughly test the containerized application to ensure functionality and performance.

- Functional Testing: Perform functional testing to verify that all application features are working correctly.

- Performance Testing: Conduct performance testing to assess the application’s performance under load.

- Security Testing: Perform security testing to identify and address any vulnerabilities.

- Deployment and Monitoring: Deploy the containerized application and set up monitoring.

- Deployment Strategy: Choose a deployment strategy (e.g., rolling updates, blue/green deployments) to minimize downtime during deployment.

- Monitoring: Implement monitoring to track the application’s health, performance, and resource usage. Use tools like Prometheus and Grafana to collect and visualize metrics.
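The functional-testing step in this plan can start with a simple smoke test run against the container once it is up. The sketch below is one possible approach in Python using only the standard library; the function name is hypothetical, and the base URL and paths in the usage note are assumptions based on the `-p 8080:80` port mapping mentioned above.

```python
import urllib.error
import urllib.request


def smoke_test(base_url, paths, timeout=5.0):
    """Request each path and return {path: HTTP status, or None if unreachable}."""
    results = {}
    for path in paths:
        url = base_url.rstrip("/") + path
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                results[path] = response.status
        except urllib.error.HTTPError as err:
            # The server answered, but with a 4xx or 5xx status.
            results[path] = err.code
        except (urllib.error.URLError, OSError):
            # Connection refused, DNS failure, or timeout.
            results[path] = None
    return results
```

For example, `smoke_test("http://localhost:8080", ["/", "/index.php"])` would report the status of the front page and of a hypothetical `index.php` entry point. A check like this does not replace full functional testing, but it catches misconfigured ports, missing PHP extensions that cause 500 errors, and containers that fail to start.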

Choosing the Right Containerization Technology

The selection of the appropriate containerization technology is a pivotal decision in the process of containerizing legacy applications. This choice significantly impacts the ease of migration, the operational overhead, and the overall success of the project. Several platforms exist, each with its strengths and weaknesses, making a careful comparative analysis essential. The decision must align with the specific requirements and constraints of the legacy application, the existing infrastructure, and the desired future state.

Comparing Docker and Other Containerization Platforms

The containerization landscape extends beyond Docker, encompassing various platforms that offer distinct approaches to container management and orchestration. Understanding these differences is crucial for making an informed decision. Docker, often considered the de facto standard, provides a robust platform for building, shipping, and running containerized applications. However, other technologies, such as rkt (Rocket), containerd, and Podman, offer alternative solutions, each with its unique features and trade-offs.

Docker

Docker’s popularity stems from its user-friendly interface, extensive ecosystem, and broad community support. It utilizes a client-server architecture, where the Docker daemon manages container execution. Dockerfiles define the build process, and Docker Hub serves as a central repository for container images. Docker’s advantages include its ease of use, large community, and extensive tooling. It facilitates rapid application deployment and scaling. Docker’s disadvantages include its reliance on a daemon, which can introduce security concerns and resource overhead. It also has a more complex architecture compared to some alternatives.

rkt (Rocket)

rkt, developed by CoreOS (now part of Red Hat), aimed to provide a more secure and composable container runtime. It focused on integrating with existing infrastructure and adhering to open standards. rkt’s advantages included its focus on security and its compatibility with the Container Runtime Interface (CRI). It was designed to be lightweight and integrated well with systems like Kubernetes. rkt’s disadvantages were its smaller community compared to Docker and its potentially more complex configuration. The project is no longer maintained (the CNCF archived it in 2019), so it serves mainly as a point of comparison rather than a candidate for new migrations.

containerd

containerd is a container runtime that provides a low-level interface for managing containers. It is designed to be embedded in larger systems, such as Docker and Kubernetes. containerd’s advantages include its efficiency and its focus on container lifecycle management. It offers a stable and performant foundation for container orchestration. containerd’s disadvantages are its lower-level nature, which requires additional tooling for building and managing container images.

It is less user-friendly for direct interaction.

Podman

Podman is a daemonless container engine that offers a Docker-compatible command-line interface. It allows users to run containers without requiring a daemon, enhancing security and simplifying operations. Podman’s advantages include its daemonless architecture, which improves security and reduces resource consumption. It offers excellent compatibility with Docker commands.

Podman’s disadvantages include potential compatibility issues with some Docker features and a slightly steeper learning curve for users unfamiliar with daemonless container management.

Advantages and Disadvantages in the Context of Legacy Application Migration

The choice of containerization platform significantly impacts the migration process. Each platform presents unique benefits and drawbacks that must be carefully considered in the context of legacy applications.

Docker

Advantages:

- Ease of Use: Docker’s user-friendly interface and extensive documentation simplify the containerization process, which is particularly valuable for teams new to containerization.

- Large Community and Ecosystem: The vast Docker community and the availability of pre-built images on Docker Hub accelerate the migration process by providing readily available solutions and support.

- Mature Tooling: Docker’s mature tooling, including Docker Compose and Docker Swarm, simplifies application deployment, scaling, and management.

Disadvantages:

- Daemon Dependency: The Docker daemon can be a single point of failure, and because it typically runs with root privileges, a compromised container running a vulnerable legacy application poses a greater risk to the host.

- Resource Overhead: Docker’s architecture can consume more resources compared to more lightweight alternatives, potentially impacting the performance of legacy applications.

- Complexity for Specific Scenarios: While user-friendly overall, complex legacy applications might require more intricate Docker configurations, which can increase the learning curve.

rkt (Rocket)

Advantages:

- Security Focus: rkt’s security-centric design can be advantageous when migrating legacy applications that may have inherent security risks.

- Integration with Kubernetes: rkt’s compatibility with Kubernetes can be beneficial if the goal is to orchestrate the legacy application within a Kubernetes cluster.

- Composability: rkt’s focus on composability allows for easier integration with existing infrastructure components.

Disadvantages:

- Smaller Community: The smaller community support might make it more difficult to find solutions and assistance during the migration process.

- Less Mature Ecosystem: The ecosystem of tools and services around rkt is less mature compared to Docker.

- Potential Learning Curve: While not drastically different, the specific concepts of rkt may require additional training for the team.

containerd

Advantages:

- Efficiency: containerd’s focus on efficiency can be beneficial for resource-constrained legacy applications.

- Stability: containerd’s stable and reliable nature ensures a robust foundation for container execution.

- Integration with Kubernetes: containerd is one of the most widely used container runtimes in Kubernetes clusters, making it a natural choice if Kubernetes is part of the strategy.

Disadvantages:

- Lower-Level Interface: containerd’s lower-level interface requires additional tooling for building and managing container images.

- Less User-Friendly: Direct interaction with containerd is less user-friendly than with Docker, potentially requiring specialized expertise.

- Less Mature Ecosystem for Direct Use: While excellent as a foundation, the direct ecosystem for using containerd is less extensive compared to Docker.

Podman

Advantages:

- Daemonless Architecture: Podman’s daemonless architecture enhances security and reduces resource consumption, which can be critical for legacy applications.

- Docker Compatibility: Podman’s compatibility with Docker commands makes it easier for teams familiar with Docker to adopt it.

- Simplified Operations: The daemonless design simplifies operations and reduces the risk of security vulnerabilities.

Disadvantages:

- Compatibility Issues: Potential compatibility issues with certain Docker features might require adjustments during the migration.

- Learning Curve: While similar to Docker, the daemonless approach might require some adjustments to the team’s workflow.

- Maturity: Podman, while rapidly evolving, is still less mature than Docker, and some features might not be fully developed.

Designing a Decision Matrix for Selecting Containerization Technology

A decision matrix provides a structured approach to evaluate and compare different containerization technologies based on specific criteria relevant to the legacy application. The matrix should include key factors and a scoring system to facilitate the selection process.

Criteria

Define the critical criteria for evaluating each platform. These criteria should reflect the specific needs and constraints of the legacy application.

- Ease of Migration: How easy is it to containerize the legacy application using the platform?

- Security: Does the platform offer strong security features and mitigations for vulnerabilities?

- Performance: What is the expected performance impact on the legacy application?

- Community Support: What is the level of community support and available resources?

- Tooling and Ecosystem: What tools and services are available for managing the containers?

- Integration with Existing Infrastructure: How well does the platform integrate with the existing infrastructure (e.g., networking, storage)?

- Learning Curve: What is the expected learning curve for the team?

- Resource Consumption: How much resource overhead does the platform introduce?

- Licensing and Cost: Are there any licensing costs associated with the platform?

Scoring System

- Assign a numerical score to each platform for each criterion.

- Use a consistent scoring scale (e.g., 1-5, where 1 is the lowest and 5 is the highest).

- Write down what each score means so that evaluations stay consistent.

Weighting

- Assign weights to each criterion based on its importance.

- For example, security might be given a higher weight than ease of use.

- The weights should reflect the priorities of the project.

Evaluation

- Evaluate each platform based on the criteria and scoring system.

- For each criterion, assign a score to each platform.

- Multiply each score by the corresponding weight.

- Sum the weighted scores for each platform to obtain a total score.

Decision

- Select the platform with the highest total score.

- The decision matrix provides a clear and objective basis for selecting the most appropriate containerization technology.

- Document the decision-making process, including the criteria, scoring system, and weighting, for future reference.

Example Decision Matrix (Simplified):

| Criterion | Weight | Docker | rkt | containerd | Podman |
| --- | --- | --- | --- | --- | --- |
| Ease of Migration | 3 | 5 | 3 | 3 | 4 |
| Security | 4 | 3 | 4 | 4 | 5 |
| Performance | 3 | 4 | 4 | 5 | 4 |
| Community Support | 5 | 5 | 2 | 3 | 4 |
| Weighted Total | | 64 | 47 | 55 | 64 |

Each total is the sum of weight times score; for Docker, 3×5 + 4×3 + 3×4 + 5×5 = 64. In this simplified example, Docker and Podman tie for the highest total, so the choice between them would depend on criteria not captured here or on a finer adjustment of the weights.

The specific scores and weights should be adjusted based on the actual requirements of the legacy application and the project’s priorities. The decision matrix provides a systematic way to compare and contrast the platforms, leading to a more informed decision. For instance, if the legacy application is highly sensitive and security is paramount, a platform with a higher security score, such as Podman, might be preferred even when its total is not the highest.
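The evaluation steps (multiply each score by its weight, then sum per platform) are easy to get wrong by hand, so it can help to compute them. The following Python sketch is one way to do that; the function name and dictionary keys are illustrative, and the weights and scores are taken from the simplified example matrix rather than from any real assessment.

```python
def weighted_totals(weights, scores):
    """Return {platform: sum of weight * score over all criteria}."""
    totals = {}
    for platform, platform_scores in scores.items():
        missing = set(weights) - set(platform_scores)
        if missing:
            raise ValueError(f"{platform} has no score for: {sorted(missing)}")
        totals[platform] = sum(weights[c] * platform_scores[c] for c in weights)
    return totals


# Weights and scores copied from the simplified example matrix.
weights = {"ease_of_migration": 3, "security": 4, "performance": 3, "community_support": 5}
scores = {
    "Docker": {"ease_of_migration": 5, "security": 3, "performance": 4, "community_support": 5},
    "rkt": {"ease_of_migration": 3, "security": 4, "performance": 4, "community_support": 2},
    "containerd": {"ease_of_migration": 3, "security": 4, "performance": 5, "community_support": 3},
    "Podman": {"ease_of_migration": 4, "security": 5, "performance": 4, "community_support": 4},
}

totals = weighted_totals(weights, scores)
for platform, total in sorted(totals.items(), key=lambda item: item[1], reverse=True):
    print(f"{platform}: {total}")
```

Keeping the matrix in code also makes it straightforward to rerun the comparison with different weights, for example to see how the ranking shifts when security is weighted more heavily.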

Preparing the Application for Containerization

Containerizing a legacy application often necessitates adjustments to ensure its smooth operation within a containerized environment. This involves code modifications, infrastructure considerations, and the creation of a Dockerfile, the blueprint for building the container image. The complexity of this process varies depending on the application’s architecture, dependencies, and the chosen containerization technology. Careful planning and execution are crucial for a successful transition.

Refactoring Application Code for Container Compatibility

Refactoring the application code is often a critical step to improve its compatibility with containers. The goal is to minimize dependencies on the underlying operating system, improve portability, and optimize resource utilization.

- Dependency Management: Legacy applications frequently rely on system-level dependencies. Containerization isolates applications, and these dependencies must be explicitly managed within the container. This may involve:

- Identifying all external dependencies, including libraries, system utilities, and runtime environments.

- Packaging these dependencies within the container image, typically using the container’s package manager (e.g., `apt` for Debian-based systems, `yum` for CentOS-based systems).

- Using a dependency management tool like Maven or Gradle for Java applications, or NuGet for .NET applications, to manage dependencies and ensure consistent builds.

- Configuration Management: Hardcoded configurations are problematic in containerized environments. Containerized applications should be configurable through environment variables or configuration files mounted from outside the container. This allows for:

- Separation of configuration from the application code, enabling easy deployment across different environments (development, testing, production).

- Dynamic configuration updates without rebuilding the container image.

- Use of secrets management tools to securely store and manage sensitive information like database credentials.

- Statelessness and Shared Storage: Containers are designed to be ephemeral. Data persistence should be handled separately, ideally using external storage solutions. This can be achieved by:

- Storing persistent data in databases or object storage services.

- Using volumes to mount external storage directories into the container, allowing data to persist even if the container is stopped or removed.

- Avoiding storing data directly within the container’s filesystem.

- Process Management: Legacy applications might rely on init systems or custom scripts for process management. Containerized applications typically run a single process. Refactoring might involve:

- Modifying the application to run as a single process.

- Using a process manager like `supervisord` within the container to manage multiple processes if necessary (although this is generally discouraged).

- Health Checks: Implementing health checks allows container orchestration platforms to monitor the application’s health and automatically restart unhealthy containers. This can be achieved by:

- Exposing health check endpoints that return the application’s status.

- Configuring container platforms to periodically probe these endpoints.

- Responding to health check probes with appropriate HTTP status codes (e.g., 200 OK for healthy, 503 Service Unavailable for unhealthy).
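The configuration and health-check points above can be sketched together. The example below is written in Python purely for illustration; a legacy application would implement the same idea in its own language. The variable names `DB_HOST`, `DB_PORT`, and `APP_PORT`, the `/health` path, and the use of a plain TCP connection as a stand-in for a real database ping are all assumptions, not part of any particular application.

```python
import os
import socket
from http.server import BaseHTTPRequestHandler, HTTPServer


def load_config(env=None):
    """Read settings from environment variables, falling back to defaults."""
    env = os.environ if env is None else env
    return {
        "db_host": env.get("DB_HOST", "localhost"),      # hypothetical variable name
        "db_port": int(env.get("DB_PORT", "3306")),      # hypothetical variable name
        "listen_port": int(env.get("APP_PORT", "8080")),  # hypothetical variable name
    }


def database_reachable(config, timeout=2.0):
    """Stand-in readiness check: can a TCP connection to the database be opened?"""
    try:
        with socket.create_connection(
            (config["db_host"], config["db_port"]), timeout=timeout
        ):
            return True
    except OSError:
        return False


class HealthHandler(BaseHTTPRequestHandler):
    config = {}

    def do_GET(self):
        if self.path != "/health":
            self.send_error(404)
            return
        healthy = database_reachable(self.config)
        body = b"ok" if healthy else b"unavailable"
        # 200 signals healthy; 503 tells the platform the service is unavailable.
        self.send_response(200 if healthy else 503)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(config):
    """Build an HTTP server whose handler carries the loaded configuration."""
    handler = type("ConfiguredHealthHandler", (HealthHandler,), {"config": config})
    return HTTPServer(("0.0.0.0", config["listen_port"]), handler)
```

Calling `make_server(load_config()).serve_forever()` starts the endpoint. Because every setting comes from the environment, the same image can be pointed at a different database in each environment without a rebuild.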

Creating a Dockerfile for a Legacy Application

The Dockerfile is a text file containing instructions for building a Docker image. It defines the base image, dependencies, application code, and how the application should be executed. The process involves several key steps.

- Choosing a Base Image: Select a base image that provides the necessary runtime environment and dependencies. This could be a base image provided by the container registry (Docker Hub), a custom image, or an image from a third-party provider. Considerations include:

- The operating system (e.g., Debian, Ubuntu, CentOS).

- The programming language runtime (e.g., Java, .NET, Node.js).

- The size of the image (smaller images generally lead to faster builds and deployments).

- Installing Dependencies: Use the appropriate package manager (e.g., `apt-get`, `yum`) within the Dockerfile to install the application’s dependencies. This involves:

- Specifying the package manager in the `RUN` instruction.

- Updating the package lists.

- Installing the required packages.

- Copying Application Code: Copy the application code into the container’s filesystem. This is typically done using the `COPY` instruction. Ensure that the source directory is specified relative to the Dockerfile’s location.

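- Setting Environment Variables: Define environment variables that configure the application’s behavior. This can be done using the `ENV` instruction for non-sensitive defaults. Values set with `ENV` are stored in the image, so secrets such as passwords and API keys are better supplied at runtime. Settings commonly passed as environment variables include: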

- Database connection strings.

- API keys.

- Logging levels.

- Exposing Ports: Specify the ports that the application listens on using the `EXPOSE` instruction. This informs Docker about the ports the container exposes.

- Defining the Entrypoint and Command: The `ENTRYPOINT` and `CMD` instructions specify how the application is executed when the container starts. When both are present, `ENTRYPOINT` defines the executable and `CMD` provides its default arguments; when only `CMD` is present, it defines the full default command.

Sample Dockerfile for a Hypothetical Legacy Java Application

Consider a legacy Java application packaged as a WAR file, running on Apache Tomcat. This example demonstrates how to create a Dockerfile for such an application.

```dockerfile
# Use an official Tomcat runtime as the base image
FROM tomcat:9.0-jre11

# Record the context path as an environment variable (optional; Tomcat
# derives the served path from the WAR file name, not from this variable)
ENV CONTEXT_PATH /legacy-app

# Copy the WAR file to Tomcat's webapps directory
COPY legacy-app.war /usr/local/tomcat/webapps/legacy-app.war

# Expose port 8080 (Tomcat's default HTTP port)
EXPOSE 8080

# Start Tomcat in the foreground when the container starts
CMD ["catalina.sh", "run"]
```

The Dockerfile above provides the following instructions:

- `FROM tomcat:9.0-jre11`: This line specifies the base image. It uses an official Tomcat 9 image with Java 11. This base image includes the necessary Java runtime and Tomcat server.

- `ENV CONTEXT_PATH /legacy-app`: Sets an environment variable `CONTEXT_PATH`. Tomcat itself does not read this variable; the context path comes from the WAR file name, so `legacy-app.war` is served at `/legacy-app`. The variable is optional and only useful if the application or a startup script reads it.

- `COPY legacy-app.war /usr/local/tomcat/webapps/legacy-app.war`: Copies the `legacy-app.war` file (the packaged application) into the Tomcat webapps directory. The `COPY` instruction copies files or directories from the Docker build context (the directory passed to `docker build`) to the container’s filesystem.

- `EXPOSE 8080`: This instruction informs Docker that the container will listen on port 8080. This does not automatically publish the port; it merely documents it. The port must be published during container runtime to make it accessible from outside the container.

- `CMD ["catalina.sh", "run"]`: This line specifies the command to run when the container starts. `catalina.sh run` starts Tomcat in the foreground, so Tomcat is the container’s main process and the legacy application is started whenever the container is launched.

To build the image, navigate to the directory containing the Dockerfile and run the command `docker build -t legacy-java-app .`. This will build a Docker image tagged as `legacy-java-app`. Then, to run the container, use the command `docker run -p 8080:8080 legacy-java-app`. This command maps port 8080 on the host machine to port 8080 inside the container, making the application accessible through the host’s IP address.

Building and Testing the Container Image

Building and rigorously testing the container image is a critical phase in containerizing a legacy application. This process ensures the application functions as expected within the containerized environment and that any potential issues are identified and resolved before deployment. Proper image building and testing significantly contribute to the stability, reliability, and portability of the containerized application.

Building a Container Image from a Dockerfile

The Dockerfile serves as the blueprint for building a container image. It contains a set of instructions that Docker uses to assemble the image layer by layer. Understanding the Dockerfile structure and best practices for its creation is crucial for a successful containerization process.

The process of building an image from a Dockerfile involves the following steps:

- Dockerfile Creation: A Dockerfile is created in the root directory of the application. This file outlines the steps required to build the image, including specifying the base image, copying application code, installing dependencies, and configuring the application.

- Dockerfile Instructions: The Dockerfile utilizes various instructions, each serving a specific purpose:

- `FROM`: Specifies the base image to use. For example, `FROM ubuntu:latest`.

- `COPY`: Copies files or directories from the host machine into the container.

- `RUN`: Executes commands during the image build process, such as installing dependencies (e.g., `RUN apt-get update && apt-get install -y <package>`).

- `WORKDIR`: Sets the working directory within the container.

- `CMD`: Specifies the default command to execute when the container starts.

- `ENTRYPOINT`: Configures a command that will always be executed when the container starts.

- `ENV`: Sets environment variables within the container.

- `EXPOSE`: Declares which ports the container will listen on.

- Image Build Execution: The Docker build command is executed to build the image: `docker build -t <image_name> .`. The `-t` flag tags the image with a name, and the `.` specifies the build context (the current directory, which contains the Dockerfile).

- Image Layers: Docker builds images layer by layer, caching the results of each instruction. This allows for faster builds when changes are made, as only the modified layers need to be rebuilt.

- Image Tagging: Tagging an image is essential for versioning and managing different builds. Tags allow you to identify specific versions of your image (e.g., `my-legacy-app:1.0`, `my-legacy-app:latest`).

An example of a simplified Dockerfile for a legacy application might look like this:

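```dockerfile
FROM ubuntu:20.04
# Package names are omitted here; append the packages the application needs.
RUN apt-get update && apt-get install -y --no-install-recommends
WORKDIR /app
COPY . /app
EXPOSE 8080
CMD ["./run_application.sh"]
```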

This Dockerfile uses Ubuntu 20.04 as a base image, installs dependencies, sets the working directory to `/app`, copies the application code, exposes port 8080, and specifies the command to run the application. The package list is left out of this example; the actual dependencies depend on the legacy application. Using `--no-install-recommends` during installation skips recommended-but-optional packages, which can significantly reduce the image size.

Best Practices for Testing the Containerized Application

Comprehensive testing is crucial to validate the functionality and performance of the containerized legacy application. A robust testing strategy includes various types of tests to ensure the application behaves as expected in its new environment.

A testing strategy for containerized applications should include the following types of tests:

- Unit Tests: Unit tests verify the functionality of individual components or modules of the application in isolation. They help ensure that each unit of code functions correctly. They can run in a build stage or CI job before the image is produced, or inside the container to confirm the code behaves the same with the container’s runtime and libraries. For instance, if the legacy application includes a specific module for data processing, unit tests should be designed to validate that module’s behavior with different data inputs.

- Integration Tests: Integration tests verify the interaction between different components or modules of the application. These tests ensure that the components work together as expected. They might involve testing communication between different services or components within the container or with external dependencies. For example, integration tests could verify that the containerized application can successfully connect to a database or an external API.

- Performance Tests: Performance tests measure the application’s performance under different load conditions. They assess the application’s response time, throughput, and resource utilization. Performance tests help identify potential bottlenecks or performance issues that may arise after containerization. Load testing tools, such as JMeter or Locust, can be utilized to simulate realistic user traffic.

- End-to-End (E2E) Tests: E2E tests simulate user interactions with the entire application, from the user interface to the backend systems. They ensure that the application functions correctly from the user’s perspective. E2E tests often use tools like Selenium or Cypress to automate user interactions with the application.

- Security Tests: Security tests are critical to identify vulnerabilities and ensure the containerized application is secure. These tests include vulnerability scanning, penetration testing, and static code analysis. Tools like OWASP ZAP and SonarQube can be used to automate these tests.

An effective testing strategy should include a combination of these test types to provide comprehensive coverage and ensure the quality of the containerized application. Automation of these tests is highly recommended to enable continuous integration and continuous delivery (CI/CD) pipelines.
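Automated integration and end-to-end tests usually need to wait until the freshly started container is actually serving requests. The sketch below shows one way to do that with a retry loop; the function names and the example URL are assumptions for illustration, not part of any specific test framework.

```python
import http.client
import time
import urllib.request
from typing import Callable


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET on the URL answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException):
        # Covers connection refused, timeouts, and HTTP error statuses.
        return False


def wait_until_ready(
    probe: Callable[[], bool],
    attempts: int = 30,
    delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call the probe until it succeeds or the attempts are used up."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay)  # give the container time to finish starting
    return False
```

A test suite might call `wait_until_ready(lambda: http_ok("http://localhost:8080/legacy-app/"))` once before running its cases, and fail the whole run if it returns `False`.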

Common Troubleshooting Techniques for Resolving Issues

Troubleshooting during the image building and testing phases is often required. Common issues can range from dependency problems to configuration errors. Understanding common problems and how to resolve them is crucial for efficient containerization.

Troubleshooting techniques include:

- Build Logs Analysis: Docker build logs provide detailed information about the image building process. Analyzing these logs is the first step in troubleshooting build failures. Pay close attention to error messages, warning messages, and any unexpected behavior during the build.

- Dependency Issues: Ensure that all required dependencies are correctly installed in the Dockerfile. Check for missing packages, version conflicts, and compatibility issues. The use of a package manager (e.g., `apt-get`, `yum`) can help manage dependencies. For example, if a legacy application requires a specific version of a library, ensure that version is specified during installation.

- Permissions Issues: Incorrect file permissions can prevent the application from running correctly inside the container. Verify that the application code has the necessary permissions to access files and directories. Consider using the `USER` instruction in the Dockerfile to specify a non-root user for running the application, which enhances security.

- Network Configuration: Network issues can arise when the application needs to communicate with external services or other containers. Verify that the network configuration is correct, including port mappings and network settings. Use the `EXPOSE` instruction in the Dockerfile to declare the ports the application uses, and publish them at runtime (for example with `-p`), since `EXPOSE` alone does not make a port reachable from outside the container.

- Application Configuration: Configuration errors can prevent the application from starting or functioning correctly. Ensure that all necessary configuration files are correctly placed and that environment variables are properly set. Use environment variables within the Dockerfile to pass configuration values to the application, promoting flexibility and portability.

- Container Logging and Monitoring: Implement logging and monitoring to track the application’s behavior within the container. This will help you identify errors and performance bottlenecks. Use tools like `docker logs` to view container logs. Integrate the application with a logging system (e.g., ELK stack, Splunk) to collect and analyze logs centrally.

- Container Inspection: Use the `docker inspect` command to examine the container’s configuration, environment variables, and network settings. This can provide valuable information for troubleshooting.

- Iterative Approach: When troubleshooting, adopt an iterative approach. Make small changes, rebuild the image, and re-test the application. This approach helps isolate the root cause of the problem.
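When inspecting a container, the JSON printed by `docker inspect` can be summarized with a few lines of code. The sketch below extracts environment variables and published ports; the helper name is hypothetical, and the sample document is a heavily trimmed illustration of the output rather than a complete one.

```python
import json
from typing import Dict, List


def summarize_inspect(inspect_json: str) -> Dict[str, object]:
    """Extract environment variables and published ports from `docker inspect` output."""
    container = json.loads(inspect_json)[0]  # inspect prints a JSON array

    env: Dict[str, str] = {}
    for item in container["Config"].get("Env") or []:
        key, _, value = item.partition("=")  # entries look like KEY=VALUE
        env[key] = value

    published: Dict[str, List[str]] = {}
    ports = container["NetworkSettings"].get("Ports") or {}
    for container_port, bindings in ports.items():
        # An exposed but unpublished port has no bindings (null in the JSON).
        published[container_port] = [
            f'{binding["HostIp"]}:{binding["HostPort"]}' for binding in (bindings or [])
        ]
    return {"env": env, "published_ports": published}


# Trimmed, illustrative sample of what `docker inspect <container>` returns.
SAMPLE = """
[{"Config": {"Env": ["CONTEXT_PATH=/legacy-app", "LOG_LEVEL=INFO"]},
  "NetworkSettings": {"Ports": {
      "8080/tcp": [{"HostIp": "0.0.0.0", "HostPort": "8080"}],
      "8009/tcp": null}}}]
"""
```

An empty binding list for a port is a quick hint that the port was declared but never published, which is a common cause of "connection refused" from the host.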

Container Orchestration and Deployment

Containerization of legacy applications is not merely about packaging; it’s about enabling efficient management, scalability, and continuous delivery. This requires sophisticated tools and strategies to handle the lifecycle of containerized applications, from initial deployment to ongoing updates and scaling. Orchestration and deployment are crucial elements in realizing the full benefits of containerization, ensuring that legacy applications, often complex and stateful, can operate reliably and efficiently in a containerized environment.

Container Orchestration Tools

Container orchestration tools automate the deployment, scaling, and management of containerized applications. These tools address the challenges of managing a large number of containers, ensuring high availability, and optimizing resource utilization. Selecting the appropriate orchestration tool depends on factors such as application complexity, team expertise, and existing infrastructure.

- Kubernetes: Kubernetes (K8s) is the most widely adopted container orchestration platform. It automates the deployment, scaling, and management of containerized applications at scale, and offers features such as self-healing, automated rollouts and rollbacks, service discovery, and load balancing. Kubernetes uses a declarative approach, where users define the desired state of the application, and Kubernetes works to achieve that state.

This makes Kubernetes particularly well-suited to large-scale deployments and to orchestrating complex legacy applications.

- Docker Compose: Docker Compose is a tool for defining and running multi-container Docker applications. It simplifies the development and testing of containerized applications by allowing users to define all application services in a single YAML file. Docker Compose is suitable for development and testing environments and simpler production deployments. It provides a streamlined way to define dependencies between containers and manage their lifecycles.

While Docker Compose is easier to set up than Kubernetes, it lacks the advanced features and scalability of Kubernetes.

- Comparison: Kubernetes offers greater scalability, fault tolerance, and a richer feature set than Docker Compose, making it suitable for production environments. Docker Compose excels in local development and testing scenarios. The choice depends on the specific needs of the legacy application and the scale of deployment. Kubernetes’ ability to manage application state and handle complex deployments makes it a better choice for many production scenarios involving legacy applications.

Deployment Strategies for Legacy Applications

Deployment strategies determine how new versions of a containerized application are rolled out, minimizing downtime and risk. Several strategies can be employed, each with its advantages and disadvantages. The choice of strategy depends on the application’s criticality, the acceptable level of downtime, and the complexity of the application.

- Rolling Updates: Rolling updates gradually replace old versions of the application with new versions, a few containers at a time. This strategy ensures that some instances of the application remain available during the update process. The orchestration tool automatically handles the scaling and replacement of containers, ensuring minimal disruption to users. This is a straightforward approach and suitable for many legacy applications, as long as the old and new versions can serve traffic side by side during the rollout.

The main advantage is that the application stays available throughout the update, with little or no downtime.

- Blue/Green Deployments: Blue/green deployments involve running two identical environments: the “blue” environment (current live version) and the “green” environment (new version). Traffic is initially directed to the blue environment. When the green environment is ready, traffic is switched to the green environment. This approach allows for quick rollbacks if the new version has issues. This strategy is generally preferred for applications with high availability requirements.

This strategy requires careful planning and resource allocation.

- Canary Deployments: Canary deployments involve releasing the new version to a small subset of users (the “canary”) to test it in a production environment before a full rollout. If the canary deployment is successful, the new version is gradually rolled out to the rest of the users. This strategy minimizes the impact of potential issues with the new version. It provides an opportunity to monitor the new version’s performance and identify any problems before a full rollout.

- Considerations for Legacy Applications: Legacy applications may have dependencies or stateful components that require careful consideration during deployment. For example, databases need to be handled with care during updates. Rolling updates may be suitable for stateless legacy applications. Blue/green deployments may be preferable for stateful applications to ensure data consistency and minimize downtime. The specific strategy should be chosen based on the characteristics of the legacy application.
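The canary idea of sending a small, stable subset of users to the new version can be sketched as hash-based bucketing. This is an illustration of the routing decision only; in practice the split is usually configured in a load balancer, ingress controller, or service mesh, and the function names here are hypothetical.

```python
import hashlib


def bucket_for(user_id: str, buckets: int = 100) -> int:
    """Map a user to a stable bucket in [0, buckets) using a hash of the ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def choose_version(user_id: str, canary_percent: int) -> str:
    """Route roughly canary_percent of users to the canary, the rest to stable."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return "canary" if bucket_for(user_id) < canary_percent else "stable"
```

Because the bucket depends only on the user ID, a given user keeps seeing the same version between requests, and raising the percentage only adds users to the canary group without moving anyone back to the stable version.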

Deployment Pipeline Design for Containerized Legacy Applications

A deployment pipeline automates the process of building, testing, and deploying containerized applications. It streamlines the release process, improves efficiency, and reduces the risk of errors. The pipeline typically includes stages for code integration, build, testing, and deployment.

- CI/CD Integration: Continuous Integration (CI) and Continuous Delivery/Deployment (CD) are essential components of a modern deployment pipeline. CI involves automatically integrating code changes from multiple developers into a shared repository. CD automates the build, test, and deployment of code changes to various environments. This approach enables frequent releases and faster feedback loops.

- Pipeline Stages:

- Code Commit: Developers commit code changes to a version control system (e.g., Git).

- Build: The CI/CD system triggers a build process that creates the container image.

- Testing: Automated tests are run to validate the container image. These tests may include unit tests, integration tests, and end-to-end tests.

- Image Repository: The container image is pushed to a container registry (e.g., Docker Hub, Google Container Registry, Amazon Elastic Container Registry).

- Deployment: The orchestration tool (e.g., Kubernetes) deploys the container image to the target environment. This can be a staging environment for testing or a production environment.

- Monitoring: Monitoring tools track the performance and health of the deployed application.

- Example: A simplified deployment pipeline for a containerized legacy application might use Jenkins as the CI/CD tool, Docker for building container images, and Kubernetes for deployment. When a developer commits code changes, Jenkins automatically builds the container image, runs automated tests, and deploys the image to a staging environment. After successful testing in the staging environment, the image is deployed to the production environment.

The pipeline is integrated with monitoring tools to provide real-time insights into the application’s performance.

- Benefits: Automating the deployment process reduces manual errors, accelerates the release cycle, and improves the reliability of deployments. A well-designed deployment pipeline ensures that legacy applications are deployed consistently and efficiently, enabling faster iteration and improved responsiveness to user needs.
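The control flow of such a pipeline, where each stage runs only if the previous one succeeded, can be sketched in a few lines. This is a conceptual model, not how Jenkins or any other CI/CD tool is configured; the stage names and the `run_pipeline` helper are assumptions for illustration.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, str]]:
    """Run stages in order; after the first failure, mark the rest as skipped."""
    results: List[Tuple[str, str]] = []
    failed = False
    for name, action in stages:
        if failed:
            results.append((name, "skipped"))
            continue
        try:
            ok = action()
        except Exception:
            ok = False  # an exception in a stage counts as a failure
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results
```

In a real pipeline each action would wrap a concrete step, such as building the image, running the test suite, pushing to the registry, or triggering the deployment. The important property is that a failing test stage prevents the push and deploy stages from running.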

Networking and Data Management

Containerizing legacy applications necessitates careful consideration of networking and data management. These aspects are crucial for ensuring that the containerized application can communicate with other services, both within and outside the container environment, and that its data persists reliably. Effective networking enables communication, while robust data management ensures data integrity and availability.

Container Networking Configuration

Container networking facilitates communication between containers and the outside world. It also enables communication between containers within the same host or across multiple hosts. Understanding the different networking models and their configurations is vital for successfully containerizing legacy applications.

The primary networking models include:

- Bridge Networking: This is the default network configuration. Each container is connected to a virtual bridge on the host machine. Containers on the same bridge can communicate directly. To expose services to the outside world, port mapping is typically used, where ports on the host machine are mapped to ports within the container. This is a good starting point for many legacy applications.

- Host Networking: In this mode, a container uses the host’s network namespace directly. The container has the host’s IP address and can access the network as if it were running directly on the host. This simplifies networking in some cases but can lead to port conflicts if multiple containers try to use the same ports. It also removes the network isolation between the container and the host, which weakens security.

- Overlay Networking: This type of networking allows containers running on different hosts to communicate with each other as if they were on the same network. It’s typically used in multi-host container deployments managed by orchestration tools like Kubernetes or Docker Swarm. It involves creating a virtual network that spans multiple physical hosts.

- None Networking: This disables all networking for a container. The container has no network access. This is suitable for applications that don’t need to communicate with the outside world.

Configuring network access often involves:

- Port Mapping: Exposing container ports to the host machine. For example, if a legacy application running inside a container listens on port 8080, you can map port 80 on the host to port 8080 inside the container. This allows users to access the application via the host’s IP address on port 80.

- Network Configuration Files: Tools such as Docker Compose use configuration files (e.g., `docker-compose.yml`) to define network settings. These files specify the network mode, port mappings, and other network-related parameters.

- Firewall Rules: Host firewalls and container-specific firewall rules can be configured to control network traffic to and from the containers. This is important for security.

- Service Discovery: In a multi-container environment, service discovery mechanisms (e.g., DNS-based service discovery) can be used to allow containers to find and communicate with each other by name rather than by IP address.

For legacy applications, bridge networking is often the simplest approach. However, overlay networking is often needed for more complex deployments involving multiple hosts and scaling.
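When a port mapping or network setting is in doubt, a plain TCP connection attempt is often the quickest check. The helper below is a small sketch for that purpose; the host names and ports used with it are up to the deployment.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

Run from the host, `can_connect("localhost", 80)` tests a published port. Run from inside another container on the same network, the same call with a container or service name as the host tests name-based service discovery.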

Data Persistence Solutions

Managing data persistence is crucial for legacy applications, ensuring that data survives container restarts and host failures. Several solutions address this need, each with its trade-offs in terms of performance, scalability, and complexity.

Common data persistence solutions include:

- Volumes: Volumes provide a mechanism to store data in a location that is separate from the container’s writable layer. Volumes are managed by Docker and can be created and mounted to one or more containers. Data stored in a volume persists even if the container is stopped or removed. Volumes can be backed by a local directory on the host, a network file system, or a cloud storage service.

- Bind Mounts: Bind mounts allow you to mount a directory or file from the host machine into the container. Changes made inside the container are reflected on the host, and vice versa. This is useful for development and testing but can be less portable than volumes.

- tmpfs Mounts: tmpfs mounts store data in the host’s memory (RAM). Data in a tmpfs mount is not persistent and is lost when the container stops. This is suitable for temporary files or caches.

- Network File Systems (NFS): NFS allows you to share a file system across multiple hosts. Containers can mount an NFS share to access and store data. This is a good solution for shared storage in a multi-host environment, but it can introduce performance bottlenecks.

- Database Systems: For applications that require a database, using a dedicated database container (e.g., PostgreSQL, MySQL) or a managed database service (e.g., AWS RDS, Azure Database) is often the best approach. This ensures data durability, scalability, and availability.

Choosing the appropriate data persistence solution depends on the specific requirements of the legacy application, including data size, access patterns, and availability requirements. For example, if the application requires high performance and data loss is acceptable, tmpfs mounts might be sufficient. If data durability is critical, a volume or a database system should be used.
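Volumes and bind mounts only help if the application writes its durable state under the mounted path. A minimal sketch of that pattern follows; the `APP_DATA_DIR` variable, the default path, and the helper names are hypothetical.

```python
import json
import os
from pathlib import Path
from typing import Mapping, Optional


def data_dir(env: Mapping[str, str] = os.environ) -> Path:
    """Resolve the single directory that holds durable state (mount a volume here)."""
    path = Path(env.get("APP_DATA_DIR", "/var/lib/legacy-app"))
    path.mkdir(parents=True, exist_ok=True)
    return path


def save_state(directory: Path, name: str, state: dict) -> Path:
    """Write state as JSON, replacing the old file in one step."""
    target = directory / f"{name}.json"
    temporary = directory / f"{name}.json.tmp"
    temporary.write_text(json.dumps(state), encoding="utf-8")
    os.replace(temporary, target)  # rename within the same directory
    return target


def load_state(directory: Path, name: str, default: Optional[dict] = None) -> Optional[dict]:
    """Read state written by save_state, or return the default if none exists."""
    target = directory / f"{name}.json"
    if not target.exists():
        return default
    return json.loads(target.read_text(encoding="utf-8"))
```

Keeping all writable state under one configurable directory means a single volume mounted at that path is enough for the data to survive container replacement; anything written elsewhere stays in the container’s writable layer and is lost with it.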

Storage Solutions Comparison

This table compares different storage solutions for containerized applications.

| Storage Solution | Description | Pros | Cons |
|---|---|---|---|
| Volumes | Managed by Docker; data stored in a location separate from the container. | Easy to manage; data persists across container restarts; can be backed by different storage drivers (local, NFS, cloud). | Performance can be slower than bind mounts; limited portability if using local storage. |
| Bind Mounts | Mounts a directory or file from the host machine into the container. | Simple and fast; useful for development and testing; direct access to the host file system. | Less portable; changes made inside the container are reflected on the host, and vice versa. |
| tmpfs Mounts | Stores data in the host’s memory (RAM). | Very fast; no disk I/O. | Data is not persistent; lost when the container stops; limited capacity. |
| Network File Systems (NFS) | Shares a file system across multiple hosts. | Allows shared storage in a multi-host environment; relatively easy to set up. | Can introduce performance bottlenecks; requires network connectivity; single point of failure. |
| Database Systems | Dedicated database containers or managed database services. | Ensures data durability, scalability, and availability; optimized for database operations. | Requires more setup and configuration; can be more expensive. |

Security Considerations

Containerizing legacy applications introduces a new attack surface and necessitates a reevaluation of security practices. Legacy applications, often riddled with vulnerabilities accumulated over years of development, become even more susceptible when deployed in containers. This section details the security risks, best practices, and auditing steps essential for securing containerized legacy applications, aiming to mitigate potential threats and maintain the integrity of the system.

Identifying Security Risks

The process of containerizing a legacy application exposes several security vulnerabilities. Understanding these risks is the first step in developing a robust security strategy.

- Image Vulnerabilities: Container images, built from base images and application dependencies, can inherit vulnerabilities from these components. Legacy applications often rely on outdated libraries and frameworks, increasing the likelihood of exploitable weaknesses. For instance, an application using an outdated version of OpenSSL might be vulnerable to known exploits.

- Runtime Vulnerabilities: The container runtime environment itself can be a target. Compromises of the Docker daemon or Kubernetes control plane can lead to complete system takeover. Incorrectly configured container orchestration platforms can expose sensitive information or allow unauthorized access.

- Network Attacks: Containerized applications are often interconnected, both internally and externally. Misconfigured network policies can allow attackers to move laterally within the container environment or access sensitive services. Denial-of-service (DoS) attacks and man-in-the-middle (MITM) attacks are potential threats.

- Privilege Escalation: If containers run with excessive privileges, a successful compromise of an application within a container can allow an attacker to escalate privileges and gain control of the host system. This is particularly risky in legacy applications where security best practices may not have been consistently applied.

- Data Breaches: Legacy applications often store sensitive data. Insecure storage configurations, such as using weak encryption or failing to protect data at rest and in transit, can lead to data breaches. Incorrectly configured volume mounts can also expose data to unauthorized access.

- Supply Chain Attacks: The dependencies of a legacy application, including third-party libraries and base images, can be compromised. Malicious code injected into these components can then be executed within the containerized application.

Best Practices for Securing Containerized Applications

Implementing a multi-layered security approach is crucial for protecting containerized legacy applications. This approach involves several key practices.

- Image Scanning: Regularly scan container images for vulnerabilities. Tools like Clair, Trivy, and Anchore Engine can identify known vulnerabilities in the image’s components, including base images, libraries, and application code. For example, Trivy can be integrated into CI/CD pipelines to automatically scan images before deployment, preventing vulnerable images from reaching production.

- Vulnerability Management: Establish a process for addressing identified vulnerabilities. This includes prioritizing vulnerabilities based on severity and exploitability, applying patches, and rebuilding images with updated components. A vulnerability management system should track the status of each vulnerability and ensure that remediation efforts are timely and effective.

- Access Control: Implement strict access control policies. Use role-based access control (RBAC) to limit user access to only the resources they need. Employ multi-factor authentication (MFA) to protect administrative accounts. Restrict access to container orchestration platforms like Kubernetes and ensure that only authorized personnel can deploy or manage containers.

- Least Privilege: Run containers with the least privileges necessary. Avoid running containers as root whenever possible. Use user namespaces to isolate container processes from the host system. Limit the capabilities granted to containers and only provide access to the necessary resources.

- Network Segmentation: Segment the container network to isolate applications and limit the impact of a security breach. Use network policies in Kubernetes or similar tools to control traffic flow between containers and external networks. Employ firewalls to restrict access to containerized services.

- Data Encryption: Encrypt sensitive data at rest and in transit. Use TLS/SSL for secure communication between containers and external services. Encrypt persistent volumes to protect data stored within the container environment. Implement key management systems to securely manage encryption keys.

- Regular Auditing: Conduct regular security audits to identify and address vulnerabilities. Audits should include penetration testing, code reviews, and configuration reviews. Document all security-related configurations and processes.

- Security Monitoring and Logging: Implement robust monitoring and logging to detect and respond to security incidents. Collect logs from containers, the container runtime, and the underlying infrastructure. Use security information and event management (SIEM) systems to analyze logs and identify suspicious activity. Set up alerts to notify security teams of potential threats.
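A scan result becomes useful in a pipeline once it can block a deployment. The sketch below shows such a gate over a simplified, hypothetical report shape (a `findings` list with `id` and `severity` fields); it is not the output format of Clair, Trivy, or Anchore, so a real integration would first map the scanner’s report into this structure or use the scanner’s own exit-code options.

```python
from typing import Dict, List

# Severity levels in ascending order (assumed naming).
SEVERITY_ORDER = ["LOW", "MEDIUM", "HIGH", "CRITICAL"]


def blocking_findings(report: Dict, threshold: str = "HIGH") -> List[Dict]:
    """Return the findings whose severity is at or above the threshold."""
    minimum = SEVERITY_ORDER.index(threshold)
    return [
        finding
        for finding in report.get("findings", [])
        if finding.get("severity") in SEVERITY_ORDER
        and SEVERITY_ORDER.index(finding["severity"]) >= minimum
    ]


def gate_exit_code(report: Dict, threshold: str = "HIGH") -> int:
    """Return 1 if the image should be blocked, 0 if it may proceed."""
    return 1 if blocking_findings(report, threshold) else 0
```

A CI job can pass the returned value to `sys.exit` so that the pipeline stops before a vulnerable image is pushed or deployed.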

Implementing a Security Audit

A security audit is a systematic assessment of the security posture of a containerized legacy application. The process involves several stages.

- Planning: Define the scope and objectives of the audit. Identify the assets to be assessed, the threats to be considered, and the compliance requirements. Create an audit plan that outlines the tasks, timelines, and resources required.

- Information Gathering: Collect information about the application, the container environment, and the underlying infrastructure. This includes gathering configuration files, network diagrams, and security policies. Review documentation and interview key personnel to understand the system’s architecture and security practices.

- Vulnerability Scanning: Perform vulnerability scans using tools like Nessus, OpenVAS, or commercial vulnerability scanners. Scan container images, the container runtime, and the underlying infrastructure. Analyze the scan results to identify vulnerabilities and prioritize remediation efforts.

- Penetration Testing: Conduct penetration testing to simulate real-world attacks. This involves attempting to exploit identified vulnerabilities and assess the effectiveness of security controls. Penetration testing should be performed by qualified security professionals.

- Configuration Review: Review the configuration of the container environment and the application to identify misconfigurations and security weaknesses. This includes reviewing network policies, access control settings, and logging configurations. Ensure that all configurations adhere to security best practices.

- Code Review: Review the application code to identify security vulnerabilities. This includes looking for common coding errors, such as SQL injection, cross-site scripting (XSS), and buffer overflows. Use static and dynamic analysis tools to assist in the code review process.

- Log Analysis: Analyze logs to identify security incidents and assess the effectiveness of security monitoring and logging. Review logs from containers, the container runtime, and the underlying infrastructure. Look for suspicious activity, such as unauthorized access attempts, privilege escalation attempts, and data breaches.

- Reporting: Prepare a detailed report that summarizes the audit findings, including identified vulnerabilities, risks, and recommendations. The report should include an executive summary, technical details, and a remediation plan.

- Remediation: Implement the recommendations from the audit report. This includes patching vulnerabilities, correcting misconfigurations, and improving security practices. Track the progress of remediation efforts and ensure that all vulnerabilities are addressed in a timely manner.

- Retesting: After implementing remediation measures, retest the system to verify that the vulnerabilities have been resolved and that the security posture has improved. Conduct regular audits to ensure ongoing security.
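The configuration review step lends itself to partial automation. The following is a minimal sketch, assuming container definitions are available as dictionaries whose `securityContext` keys follow the Kubernetes field names (`privileged`, `runAsNonRoot`, `readOnlyRootFilesystem`, `allowPrivilegeEscalation`). The rule list and the `review_container` helper are hypothetical and illustrative only; a real audit would cover far more settings.

```python
from __future__ import annotations

from typing import Any, Callable

# Hypothetical rule set: (finding message, predicate that returns True when
# the setting is a problem). Field names follow Kubernetes' securityContext;
# the choice of rules is an assumption made for this example.
RULES: list[tuple[str, Callable[[dict[str, Any]], bool]]] = [
    ("runs in privileged mode",
     lambda sc: sc.get("privileged") is True),
    ("may run as root (runAsNonRoot is not true)",
     lambda sc: sc.get("runAsNonRoot") is not True),
    ("root filesystem is writable",
     lambda sc: sc.get("readOnlyRootFilesystem") is not True),
    ("privilege escalation is not disabled",
     lambda sc: sc.get("allowPrivilegeEscalation") is not False),
]


def review_container(container: dict[str, Any]) -> list[str]:
    """Return one finding string per rule that the container violates."""
    security_context = container.get("securityContext") or {}
    name = container.get("name", "<unnamed>")
    return [f"{name}: {message}"
            for message, is_problem in RULES
            if is_problem(security_context)]


if __name__ == "__main__":
    legacy = {"name": "legacy-app", "securityContext": {"privileged": True}}
    hardened = {
        "name": "hardened-app",
        "securityContext": {
            "privileged": False,
            "runAsNonRoot": True,
            "readOnlyRootFilesystem": True,
            "allowPrivilegeEscalation": False,
        },
    }
    for container in (legacy, hardened):
        for finding in review_container(container):
            print(finding)
```

Missing settings are treated as findings here, on the assumption that an audit should flag anything not explicitly hardened. Teams that prefer to flag only explicit misconfigurations can relax the predicates accordingly.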

Monitoring and Logging

Containerizing a legacy application, while offering significant benefits in terms of portability and resource utilization, introduces new complexities in monitoring and logging. Effective monitoring and logging are crucial for maintaining the health, performance, and security of the containerized application. They provide insights into the application’s behavior, facilitate rapid troubleshooting, and enable proactive identification of potential issues. Without robust monitoring and logging, it becomes challenging to understand the application’s state, diagnose problems, and ensure its long-term stability.

Importance of Monitoring and Logging in Containerized Legacy Applications

Monitoring and logging are not just desirable features; they are essential for the successful operation of a containerized legacy application. They provide critical visibility into the application’s internal workings and external interactions, enabling informed decision-making and proactive problem-solving.

- Real-time Insights: Monitoring tools provide real-time metrics on resource utilization (CPU, memory, network I/O), application performance (response times, error rates), and container health. Logging captures detailed events, errors, and warnings, providing a historical record of the application’s activity. Together, the real-time metrics and the historical log record make it possible to understand the application’s behavior and identify anomalies.

- Performance Optimization: Monitoring data allows for the identification of performance bottlenecks. For example, if a container consistently experiences high CPU utilization, it may indicate inefficient code or a need for resource scaling. Logging can reveal the specific operations or code paths that are contributing to the performance issues.

- Troubleshooting and Debugging: When issues arise, monitoring and logging are invaluable for diagnosing the root cause. Monitoring data can pinpoint the time when a problem started, and logging can provide detailed information about the events leading up to the failure. By analyzing these data sources, developers can quickly identify and resolve issues.

- Security and Compliance: Logging plays a crucial role in security monitoring. It captures events such as user logins, access attempts, and security breaches. Analyzing these logs can help identify malicious activity and ensure compliance with security regulations.

- Automated Alerting: Monitoring tools can be configured to generate alerts based on predefined thresholds. For example, an alert can be triggered if CPU utilization exceeds a certain percentage or if the error rate increases dramatically. These alerts allow operators to proactively respond to issues before they impact users.

- Capacity Planning: Monitoring provides data on resource usage trends. This information can be used to predict future resource needs and proactively scale the application to meet demand. For instance, if memory usage is consistently increasing over time, the application can be scaled up to avoid performance degradation.
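To make the automated alerting idea concrete, here is a minimal sketch of a threshold rule that fires only when a metric stays above its limit for several consecutive samples, which avoids alerting on a single spike. The class name `SustainedThresholdAlert`, the 80% threshold, and the three-sample window are assumptions chosen for illustration; production systems would normally express such rules in the monitoring tool itself.

```python
from collections import deque


class SustainedThresholdAlert:
    """Fire when the most recent `window` samples all exceed `threshold`."""

    def __init__(self, threshold: float, window: int) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.threshold = threshold
        # A bounded deque drops the oldest sample automatically.
        self.samples = deque(maxlen=window)

    def observe(self, value: float) -> bool:
        """Record a sample and return True if the alert condition holds."""
        self.samples.append(value)
        window_full = len(self.samples) == self.samples.maxlen
        return window_full and all(v > self.threshold for v in self.samples)


if __name__ == "__main__":
    cpu_alert = SustainedThresholdAlert(threshold=80.0, window=3)
    for cpu_percent in [70, 85, 90, 95, 60]:
        firing = cpu_alert.observe(cpu_percent)
        print(cpu_percent, "ALERT" if firing else "ok")
```

With the sample values above, the alert fires only on the fourth reading, once three consecutive samples (85, 90, 95) have exceeded the threshold.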

Tools and Techniques for Monitoring Application Performance and Health

A variety of tools and techniques are available for monitoring the performance and health of containerized legacy applications. The choice of tools depends on the application’s complexity, the container orchestration platform used, and the specific monitoring requirements.

- Metrics Collection: Metrics collection involves gathering data about the application’s performance and resource utilization. Several tools can be used for this purpose:

- Prometheus: Prometheus is a popular open-source monitoring system that collects metrics from applications using a pull-based model. Applications expose metrics in a specific format, and Prometheus scrapes these metrics at regular intervals. Prometheus is particularly well-suited for containerized environments due to its ability to automatically discover and monitor containers. It supports a powerful query language (PromQL) for analyzing metrics and creating dashboards.

- Grafana: Grafana is a powerful data visualization and dashboarding tool that integrates with Prometheus and other data sources. It allows users to create interactive dashboards that display metrics in a variety of formats, such as graphs, charts, and tables. Grafana is used to visualize the data collected by Prometheus, making it easier to understand the application’s performance and health.

- cAdvisor: cAdvisor (Container Advisor) is a container resource usage and performance analysis tool. It provides real-time information about resource usage (CPU, memory, network I/O) for each container running on a host. cAdvisor can be integrated with Prometheus to collect and visualize these metrics.

- Application Performance Monitoring (APM) tools: APM tools such as Datadog, New Relic, and Dynatrace provide comprehensive monitoring capabilities, including metrics collection, distributed tracing, and application-specific insights. These tools often offer automatic instrumentation and require minimal configuration.

- Logging: Logging is the process of capturing events, errors, and warnings from the application. Logs provide a detailed record of the application’s activity and are essential for troubleshooting and debugging.

- Centralized Logging: Centralized logging is the practice of collecting logs from multiple sources (containers, hosts, network devices) and storing them in a central location. This allows for easier searching, analysis, and correlation of logs.

Popular centralized logging tools include:

- Elasticsearch, Fluentd, and Kibana (EFK stack): This is a popular open-source stack for centralized logging. Elasticsearch is a search and analytics engine, Fluentd is a data collector, and Kibana is a visualization tool. Fluentd collects logs from various sources, processes them, and sends them to Elasticsearch, where they can be searched and analyzed using Kibana.

- Graylog: Graylog is another open-source log management platform that provides a user-friendly interface for searching, analyzing, and visualizing logs.

- Splunk: Splunk is a commercial log management platform that offers advanced search, analysis, and reporting capabilities.

- Log Aggregation and Forwarding: Tools like Fluentd, Fluent Bit, and Logstash are used to collect, process, and forward logs from containers to a centralized logging system. These tools can parse log messages, add metadata, and filter out irrelevant information.

- Health Checks: Health checks are used to determine the health of a containerized application. The container orchestration platform (e.g., Kubernetes) uses health checks to monitor the application’s health and automatically restart unhealthy containers.

- Liveness Probes: Liveness probes determine if the application is running and healthy. If a liveness probe fails, the container is restarted.

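- Readiness Probes: Readiness probes determine if the application is ready to receive traffic. If a readiness probe fails, the container is not restarted; it simply stops receiving traffic (in Kubernetes, the pod is removed from the Service’s endpoints) until the probe succeeds again.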

- Distributed Tracing: Distributed tracing is used to track requests as they flow through a distributed system. It provides insights into the performance of individual components and helps identify performance bottlenecks. Tools like Jaeger and Zipkin are used for distributed tracing.
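Legacy applications rarely ship with probe endpoints, so a small HTTP listener is often added alongside the application to support health checks. The sketch below uses only the Python standard library. The paths `/healthz` and `/ready`, the `AppState` flags, and the `serve` helper with its default port are assumptions made for this example, not requirements of any orchestrator.

```python
from __future__ import annotations

from http.server import BaseHTTPRequestHandler, HTTPServer


class AppState:
    """Flags the application updates as its condition changes."""

    def __init__(self) -> None:
        self.alive = True    # set to False if the app detects it is wedged
        self.ready = False   # set to True once startup work has finished


def probe_response(path: str, state: AppState) -> tuple[int, str]:
    """Map a probe path to an HTTP status code and a short body."""
    if path == "/healthz":   # liveness: failing this triggers a restart
        return (200, "ok") if state.alive else (503, "not alive")
    if path == "/ready":     # readiness: failing this only pauses traffic
        return (200, "ready") if state.ready else (503, "not ready")
    return (404, "not found")


def make_handler(state: AppState) -> type[BaseHTTPRequestHandler]:
    """Build a request handler class bound to the given state object."""

    class ProbeHandler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            status, body = probe_response(self.path, state)
            payload = body.encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "text/plain; charset=utf-8")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

        def log_message(self, format: str, *args) -> None:
            pass  # keep frequent probe requests out of the log stream

    return ProbeHandler


def serve(state: AppState, port: int = 8081) -> None:
    """Block forever, answering probe requests on the given port."""
    HTTPServer(("0.0.0.0", port), make_handler(state)).serve_forever()
```

Calling `serve(state)` from a background thread lets the application flip `state.ready` once its database connections and caches are initialized. Keeping liveness and readiness on separate paths matters because a slow-starting legacy application should be held out of rotation, not restarted in a loop.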

Procedure for Setting Up Logging and Alerting for a Containerized Legacy Application

Setting up effective logging and alerting involves several steps, from configuring the application to emit logs to building the monitoring and alerting infrastructure. The procedure below outlines the key steps for a containerized legacy application.

- Configure the Application for Logging: The first step is to configure the legacy application to emit logs in a consistent and structured format.

- Choose a Logging Library: Select a logging library appropriate for the application’s programming language (e.g., log4j for Java, logging for Python). Ensure the library supports configurable log levels (e.g., DEBUG, INFO, WARN, ERROR, FATAL).

- Structure Log Messages: Structure log messages consistently, including timestamps, log levels, application name, and relevant contextual information (e.g., user ID, request ID). Consider using JSON format for structured logging, which simplifies parsing and analysis.

- Log to Standard Output (stdout) and Standard Error (stderr): Container orchestration platforms typically collect logs from stdout and stderr. Configure the application to write logs to these streams.

- Select a Logging Solution: Choose a centralized logging solution that meets the application’s requirements.

- Consider the EFK stack (Elasticsearch, Fluentd, Kibana): This is a popular and cost-effective open-source solution.

- Evaluate commercial solutions like Splunk or Datadog: These offer advanced features and support.

- Consider the scale of the application and the volume of logs generated: Choose a solution that can handle the expected log volume.

- Deploy and Configure a Log Collector: Deploy and configure a log collector (e.g., Fluentd, Fluent Bit, Logstash) alongside the application, for example as a sidecar container, or as an agent on each host.

- Configure the Log Collector: Configure the log collector to collect logs from stdout and stderr.

- Parse and Enrich Logs: Configure the log collector to parse log messages and add metadata (e.g., container ID, pod name, namespace).

- Forward Logs to the Centralized Logging System: Configure the log collector to forward the processed logs to the centralized logging system (e.g., Elasticsearch).

- Set Up the Centralized Logging System: Set up and configure the centralized logging system.

- Install and Configure the Logging System: Install and configure the chosen logging system (e.g., Elasticsearch, Graylog, Splunk).

- Create Indexes or Data Streams: Create indexes or data streams to store the logs.

- Configure Access Controls: Configure access controls to restrict access to the logs.

- Create Dashboards and Alerts: Create dashboards and alerts to monitor the application’s health and performance.

- Create Dashboards: Create dashboards to visualize key metrics and log data. Use the dashboards to monitor CPU usage, memory usage, error rates, and other important metrics.

- Define Alerting Rules: Define alerting rules to trigger notifications when certain conditions are met. For example, create an alert if the error rate exceeds a threshold or if CPU utilization is consistently high.

- Configure Alerting Channels: Configure alerting channels (e.g., email, Slack, PagerDuty) to receive notifications.

- Test and Refine: Test the logging and alerting setup and refine it based on the application’s behavior and the monitoring requirements.

- Simulate Errors: Simulate errors in the application to test the alerting system.

- Monitor and Analyze Logs: Regularly monitor and analyze the logs to identify potential issues and refine the alerting rules.

- Document the Setup: Document the logging and alerting setup, including the configuration of the logging library, log collectors, and alerting rules.
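The first step of the procedure, structured logging to standard output, can be sketched with Python’s built-in `logging` module. This is a minimal example under stated assumptions: the field names in the JSON object, the `request_id` and `user_id` context keys, and the `build_logger` helper are illustrative choices, not a required schema. For simplicity it writes every level to stdout; routing errors to stderr would need a second handler.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def __init__(self, app_name: str) -> None:
        super().__init__()
        self.app_name = app_name

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "app": self.app_name,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Values passed via `extra=` become attributes on the record.
        for key in ("request_id", "user_id"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def build_logger(app_name: str, stream=sys.stdout) -> logging.Logger:
    """Create a logger that emits JSON lines to the given stream."""
    logger = logging.getLogger(app_name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()      # avoid duplicate output on repeated calls
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(app_name))
    logger.addHandler(handler)
    logger.propagate = False     # do not also log via the root logger
    return logger


if __name__ == "__main__":
    log = build_logger("legacy-billing")
    log.info("invoice generated",
             extra={"request_id": "req-123", "user_id": 42})
```

Because each record is one JSON object per line, a collector such as Fluentd or Fluent Bit can parse the fields without custom regular expressions and attach container metadata before forwarding.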

Summary