The migration to cloud computing represents a pivotal shift in modern IT infrastructure, promising enhanced scalability, cost efficiency, and agility. However, the selection of a cloud provider is a critical decision, demanding a thorough understanding of business needs, technical capabilities, and long-term strategic goals. This guide provides a structured approach to navigating the complexities of cloud provider selection, ensuring a successful migration and maximizing the benefits of cloud adoption.

This analysis will delve into the core considerations for cloud provider selection, encompassing aspects such as service offerings, pricing models, security protocols, data migration strategies, network connectivity, and vendor lock-in mitigation. By examining these facets, organizations can make informed decisions that align with their specific requirements and foster sustainable cloud adoption.

Understanding Your Needs and Goals

Before embarking on a cloud migration, a thorough understanding of your business objectives is paramount. This understanding dictates the strategic direction of the migration, ensuring alignment between IT infrastructure and overarching business goals. Without a clear definition of these goals, the migration can become a costly exercise, potentially failing to deliver the expected benefits and even hindering operational efficiency. A well-defined strategy helps to avoid common pitfalls, such as selecting inappropriate cloud services, overspending on resources, and failing to meet compliance requirements.

Defining Business Objectives for Cloud Migration

Establishing concrete business objectives forms the foundation for a successful cloud migration. These objectives should be specific, measurable, achievable, relevant, and time-bound (SMART). They should clearly articulate the desired outcomes of the migration, guiding decision-making throughout the process. Examples of business objectives include improving agility, reducing operational costs, enhancing scalability, and strengthening disaster recovery capabilities. Each should be stated in SMART form, for instance "reduce infrastructure operating costs by 20% within 18 months of cutover."

Identifying Current IT Infrastructure Strengths and Weaknesses

Assessing the existing IT infrastructure provides a critical baseline for the cloud migration strategy and reveals areas for improvement and potential challenges. It requires a comprehensive evaluation of hardware, software, and network components, considering their performance, security, and cost-effectiveness.

- Hardware Inventory and Performance Analysis: Documenting the current hardware assets, including servers, storage devices, and network equipment. Analyze performance metrics such as CPU utilization, memory usage, disk I/O, and network bandwidth. Identify bottlenecks and underutilized resources.

- Software Inventory and Licensing Compliance: Cataloging all installed software applications and operating systems. Verify software licensing compliance and identify any potential licensing issues that could impact the migration. Consider the compatibility of existing applications with various cloud platforms.

- Network Infrastructure Assessment: Evaluating the existing network infrastructure, including network devices, bandwidth capacity, and security configurations. Analyze network latency, throughput, and security vulnerabilities. Identify potential network bottlenecks and areas for optimization.

- Security Posture Evaluation: Assessing the current security measures, including firewalls, intrusion detection systems, and access controls. Identify security vulnerabilities and assess the organization’s compliance with relevant security standards and regulations. Evaluate the need for enhanced security measures in the cloud environment.

- Cost Analysis: Conducting a detailed cost analysis of the current IT infrastructure, including hardware costs, software licensing fees, maintenance expenses, and operational costs. Compare these costs with the projected costs of cloud services to determine the potential cost savings.

Assessing Resource Requirements for a Cloud Environment

Determining resource requirements is crucial for selecting the appropriate cloud services and ensuring optimal performance and cost-efficiency. This assessment involves analyzing the current workload characteristics and predicting future resource needs based on business growth and anticipated demand. This process helps to avoid over-provisioning (wasting resources) or under-provisioning (compromising performance).

- Compute Resource Assessment: Evaluating the compute resource requirements based on the current CPU utilization, memory usage, and processing power demands of existing applications. Consider the scalability needs and anticipate peak loads.

Example: An e-commerce website experiences a significant increase in traffic during holiday seasons. The cloud migration strategy should account for the need to scale compute resources dynamically to handle these peak loads.

- Storage Resource Assessment: Determining the storage capacity requirements based on the volume of data stored, data growth rate, and data access patterns. Consider the different storage tiers (e.g., hot, cold, archive) based on the frequency of data access and the associated costs.

Example: A healthcare organization needs to store a large volume of medical images. The cloud migration strategy should consider the need for scalable and cost-effective storage solutions.

- Network Resource Assessment: Evaluating the network bandwidth requirements based on the data transfer volume, network latency, and application performance needs. Consider the network connectivity options and the associated costs.

Example: A video streaming service requires high bandwidth and low latency to deliver content to its users. The cloud migration strategy should consider the need for a content delivery network (CDN) to optimize network performance.

- Application Performance Profiling: Analyzing the performance characteristics of existing applications, including response times, transaction rates, and resource consumption. Identify any performance bottlenecks and areas for optimization. This information will help determine the appropriate cloud services and configurations to ensure optimal application performance in the cloud.

Example: A financial institution’s core banking system requires high availability and low latency. The cloud migration strategy should consider the need for a highly available and scalable cloud infrastructure.
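The compute assessment above can be made concrete with utilization data. The following is a minimal Python sketch, assuming you have exported CPU-utilization samples (in percent) for a workload. It sizes vCPUs so that the 95th-percentile load runs at a target utilization (60% here, an assumed headroom policy), and it applies the proportional target-tracking rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric). Real autoscalers add tolerance bands and stabilization windows that this sketch ignores, and all sample numbers are hypothetical.

```python
import math

def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100)."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def required_vcpus(cpu_util_pct: list[float], current_vcpus: int,
                   target_util_pct: float = 60.0, pct: float = 95.0) -> int:
    """vCPUs needed so the pct-th percentile load runs at the target utilization."""
    busy = percentile(cpu_util_pct, pct)  # percent of the *current* vCPUs in use
    return max(1, math.ceil(current_vcpus * busy / target_util_pct))

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float) -> int:
    """Proportional target-tracking rule (as described for the Kubernetes HPA)."""
    return max(1, math.ceil(current_replicas * current_metric / target_metric))

# Hypothetical hourly CPU samples (%) from an 8-vCPU web server.
samples = [20, 25, 30, 35, 40, 45, 50, 55, 60, 90]
print(required_vcpus(samples, current_vcpus=8))                  # 12
# Holiday peak: 4 replicas running at 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

Sizing to a high percentile rather than the mean keeps short peaks from being averaged away; sizing to the absolute maximum usually over-provisions.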

Evaluating Cloud Provider Services

Choosing the right cloud provider involves a deep dive into the services they offer. Understanding the core offerings, their strengths, and weaknesses is crucial for making informed decisions that align with your specific migration needs. This evaluation extends beyond a simple feature comparison, encompassing considerations such as service level agreements (SLAs), storage options, and the overall ecosystem each provider cultivates.

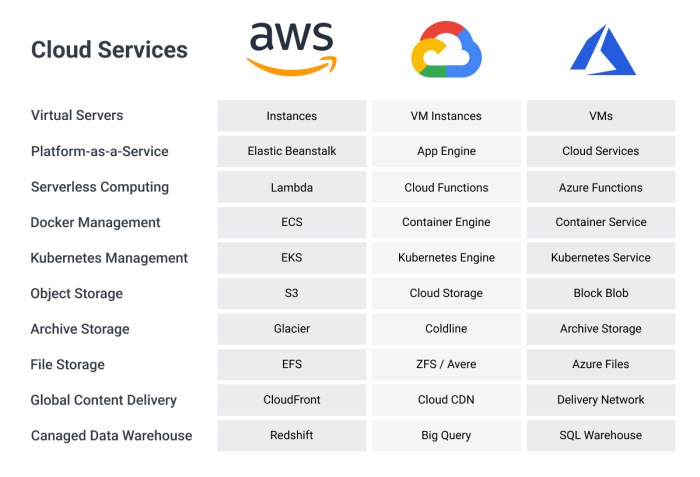

Comparing Core Cloud Services: AWS, Azure, and GCP

A comparative analysis of core services across AWS, Azure, and GCP is essential for understanding their individual strengths and weaknesses. This comparison focuses on compute, storage, database, and networking services, providing a snapshot of their capabilities. The following table provides a high-level overview:

| Service Category | AWS | Azure | GCP |
|---|---|---|---|
| Compute | Amazon EC2 (Virtual Machines), AWS Lambda (Serverless), Amazon ECS/EKS (Container Orchestration) | Azure Virtual Machines, Azure Functions (Serverless), Azure Kubernetes Service (AKS) | Google Compute Engine (Virtual Machines), Cloud Functions (Serverless), Google Kubernetes Engine (GKE) |
| Storage | Amazon S3 (Object Storage), Amazon EBS (Block Storage), Amazon EFS (File Storage), Amazon S3 Glacier (Archival) | Azure Blob Storage (Object Storage), Azure Managed Disks (Block Storage), Azure Files (File Storage), Azure Archive Storage (Archival) | Cloud Storage (Object Storage), Persistent Disk (Block Storage), Filestore (File Storage), Cloud Storage Nearline/Coldline/Archive classes (Archival) |
| Database | Amazon RDS (Relational Databases), Amazon DynamoDB (NoSQL), Amazon Aurora (MySQL & PostgreSQL compatible), Amazon Redshift (Data Warehouse) | Azure SQL Database (Relational Databases), Azure Cosmos DB (NoSQL), Azure Database for PostgreSQL/MySQL, Azure Synapse Analytics (Data Warehouse) | Cloud SQL (Relational Databases), Cloud Datastore/Firestore (NoSQL), Cloud Spanner (Globally Distributed), BigQuery (Data Warehouse) |
| Networking | Amazon VPC, Amazon CloudFront (CDN), Amazon Route 53 (DNS) | Azure Virtual Network, Azure CDN, Azure DNS | Virtual Private Cloud (VPC), Cloud CDN, Cloud DNS |

Each provider offers a comprehensive suite of services, but nuances in pricing, performance, and feature sets differentiate them. AWS, with its mature ecosystem, often offers the widest range of services. Azure, leveraging its integration with Microsoft products, appeals to enterprises heavily invested in the Microsoft stack. GCP, known for its strengths in data analytics and machine learning (for example, BigQuery), is often favored for those workloads.

Service Level Agreements (SLAs) and Uptime Guarantees

Service Level Agreements (SLAs) are legally binding contracts that define the level of service a cloud provider promises to deliver. These agreements are crucial for ensuring business continuity and mitigating risks associated with downtime. Uptime guarantees, a key component of SLAs, specify the minimum percentage of time a service will be available. Key elements to compare across SLAs include:

- Uptime Percentage: Higher uptime percentages translate to stronger availability commitments. For instance, over a 30-day month an SLA guaranteeing 99.99% uptime allows only about 4.3 minutes of downtime, whereas 99% allows about 7.2 hours.

- Credits/Remedies: SLAs typically include remedies for failing to meet the uptime guarantee, such as service credits or refunds. The specifics of these remedies vary by provider and service.

- Service Scope: SLAs vary depending on the service. Some services may have more stringent guarantees than others.

- Exclusions: SLAs often include exclusions for planned maintenance, events outside the provider’s control (e.g., natural disasters), and user errors.
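The uptime arithmetic and the credit mechanism above can be sketched in a few lines of Python. The sketch assumes a 30-day month, treats the supplied downtime as already net of excluded events, and uses a credit schedule that is entirely hypothetical; real schedules differ by provider and by service and must be read from the actual SLA.

```python
MINUTES_PER_MONTH = 30 * 24 * 60  # assumes a 30-day month: 43,200 minutes

def allowed_downtime_minutes(uptime_pct: float,
                             period_minutes: int = MINUTES_PER_MONTH) -> float:
    """Downtime an SLA of uptime_pct tolerates over one period."""
    return period_minutes * (100 - uptime_pct) / 100

# HYPOTHETICAL credit schedule: (minimum monthly uptime %, credit as % of the
# monthly bill). Real schedules differ by provider and by service.
CREDIT_TIERS = [(99.99, 0), (99.0, 10), (95.0, 25), (0.0, 100)]

def service_credit_pct(downtime_minutes: float,
                       period_minutes: int = MINUTES_PER_MONTH) -> int:
    """Map eligible (non-excluded) downtime to a credit tier."""
    uptime = 100 * (period_minutes - downtime_minutes) / period_minutes
    for min_uptime, credit in CREDIT_TIERS:
        if uptime >= min_uptime:
            return credit
    return CREDIT_TIERS[-1][1]  # downtime longer than the whole period

for sla in (99.0, 99.9, 99.99):
    print(f"{sla}% -> {allowed_downtime_minutes(sla):.1f} min/month")
print(service_credit_pct(60))  # one hour of eligible downtime -> 10
```

Note that a credit compensates only for part of the monthly bill; it rarely covers the business cost of the outage itself.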

For example, AWS, Azure, and GCP each publish separate SLAs per service, and the guarantee often depends on the deployment configuration (for instance, whether a workload runs across multiple availability zones). Review each provider's SLAs carefully during the cloud provider selection process. When a provider misses its SLA, the usual remedy is a service credit, so it is essential to understand the limitations and exclusions to avoid surprises during an outage.

Storage Options and Data Type Suitability

Cloud providers offer a variety of storage options, each optimized for different data types and access patterns. Selecting the appropriate storage solution is critical for cost optimization, performance, and data durability. Understanding the characteristics of different storage types is key to making the right choice. Common storage types include:

- Object Storage: Ideal for unstructured data like images, videos, and backups. Offers high scalability and durability at a lower cost. Examples: Amazon S3, Azure Blob Storage, Google Cloud Storage.

- Block Storage: Provides raw storage capacity for virtual machines and databases. Offers high performance and low latency. Examples: Amazon EBS, Azure Disks, Google Persistent Disk.

- File Storage: Enables shared access to files across multiple instances. Suitable for applications requiring shared file systems. Examples: Amazon EFS, Azure Files, Google Cloud Filestore.

- Archival Storage: Designed for infrequently accessed data, offering the lowest cost per GB. Suitable for long-term data retention. Examples: Amazon S3 Glacier, Azure Archive Storage, Google Cloud Storage Nearline, Coldline, and Archive classes.

The choice of storage depends on factors such as:

- Data Type: Unstructured data (images, videos) typically suits object storage. Structured data (databases) often benefits from block storage.

- Access Frequency: Frequently accessed data benefits from higher-performance storage. Infrequently accessed data can be stored in archival storage.

- Performance Requirements: Applications requiring low latency need high-performance storage.

- Cost Considerations: Storage costs vary significantly between storage types. Archival storage has the lowest per-GB storage price but typically adds retrieval charges and, on some tiers, restore delays; high-performance storage is the most expensive per GB.
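The factors listed above can be turned into a simple rule of thumb. The following Python sketch is illustrative only: the `Dataset` fields, the category names, and the access-frequency thresholds (12 reads a year for a hot tier, at least one read a year for a cool/cold tier) are assumptions, not provider guidance.

```python
from dataclasses import dataclass

@dataclass
class Dataset:
    name: str
    kind: str                  # "unstructured", "database", "vm_disk", or "shared_files"
    reads_per_year: int        # how often the data is accessed
    needs_low_latency: bool = False

def suggest_storage(ds: Dataset) -> str:
    """Rule-of-thumb mapping from data type and access pattern to a storage type."""
    if ds.kind in ("database", "vm_disk") or ds.needs_low_latency:
        return "block"
    if ds.kind == "shared_files":
        return "file"
    # Unstructured data goes to object storage; these thresholds are assumptions.
    if ds.reads_per_year >= 12:
        return "object (hot tier)"
    if ds.reads_per_year >= 1:
        return "object (cool/cold tier)"
    return "archival"

catalog = [
    Dataset("new-release-videos", "unstructured", reads_per_year=100_000),
    Dataset("streaming-server-os-disk", "vm_disk", reads_per_year=0),
    Dataset("2015-catalog-videos", "unstructured", reads_per_year=0),
]
for ds in catalog:
    print(ds.name, "->", suggest_storage(ds))
```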

For instance, a media streaming service would likely use object storage (e.g., Amazon S3) for storing video files, block storage (e.g., Amazon EBS) for the operating system disk of its streaming servers, and potentially archival storage (e.g., Amazon S3 Glacier) for storing older, infrequently accessed videos. The selection should be made by considering the access frequency, performance requirements, and budget.

Cost Considerations and Pricing Models

Migrating to the cloud necessitates a thorough understanding of cost implications. Cloud providers offer various pricing models, each with its own advantages and disadvantages. A well-defined cost optimization strategy is crucial for managing cloud expenses effectively. Furthermore, estimating the total cost of ownership (TCO) provides a comprehensive view of the financial commitment associated with cloud adoption.

Pricing Models

Cloud providers employ diverse pricing models to cater to varying workloads and usage patterns. Understanding these models is fundamental to selecting the most cost-effective option.

- Pay-as-you-go (On-Demand): This model charges users only for the resources consumed, such as compute time, storage, and data transfer. It offers flexibility and is suitable for unpredictable workloads or short-term projects.

- Advantages: No upfront commitment, high flexibility, ideal for testing and development.

- Disadvantages: Can be more expensive than other models for sustained usage.

- Reserved Instances (RI): Reserved Instances provide a significant discount compared to on-demand pricing in exchange for a commitment to use a specific instance type for a defined period (typically one or three years).

- Advantages: Substantial cost savings for consistent workloads, predictable costs.

- Disadvantages: Requires a one- or three-year commitment, less flexibility in instance type changes.

- Spot Instances: Spot instances run on a provider's spare compute capacity, often at a substantial discount compared to on-demand pricing. The trade-off is that the provider can reclaim them at short notice when it needs the capacity (AWS, for example, issues a two-minute interruption notice) or, where the customer has set a maximum price, when the spot price rises above it.

- Advantages: Significant cost savings for fault-tolerant and flexible workloads.

- Disadvantages: Risk of interruption, not suitable for all workloads.

- Savings Plans: Some providers offer Savings Plans, which provide discounted rates in exchange for a commitment to a consistent amount of compute spend (measured in dollars per hour) over a one- or three-year term. Depending on the plan type, the discount can apply across instance families and regions (for example, AWS Compute Savings Plans).

- Advantages: Flexible commitment, cost savings across various instance types.

- Disadvantages: Requires commitment to a consistent spend.
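The trade-off between pay-as-you-go and committed pricing (Reserved Instances or Savings Plans) comes down to utilization. A minimal Python sketch, assuming a single instance, a flat hypothetical on-demand rate of $1.00/hour, and a hypothetical effective committed rate of $0.60/hour; spot pricing and interruption risk are not modeled.

```python
HOURS_PER_YEAR = 8760

def on_demand_annual(rate_per_hour: float, utilization: float) -> float:
    """Pay-as-you-go: billed only for the hours the instance actually runs."""
    return rate_per_hour * HOURS_PER_YEAR * utilization

def committed_annual(effective_rate_per_hour: float) -> float:
    """Reservation or commitment: billed for every hour of the term, used or not."""
    return effective_rate_per_hour * HOURS_PER_YEAR

def break_even_utilization(on_demand_rate: float, committed_rate: float) -> float:
    """Fraction of hours above which the commitment becomes cheaper."""
    return committed_rate / on_demand_rate

def cheaper_option(on_demand_rate: float, committed_rate: float,
                   utilization: float) -> str:
    if committed_annual(committed_rate) < on_demand_annual(on_demand_rate, utilization):
        return "commitment"
    return "on-demand"

# Hypothetical rates: $1.00/h on demand vs. an effective $0.60/h committed.
print(break_even_utilization(1.00, 0.60))  # 0.6
print(cheaper_option(1.00, 0.60, 0.40))    # on-demand (e.g. a dev box)
print(cheaper_option(1.00, 0.60, 0.95))    # commitment (e.g. a production DB)
```

This is why commitments suit stable, always-on workloads while intermittent ones are usually cheaper on demand.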

Cost Optimization Strategy for Cloud Resources

Developing a robust cost optimization strategy is essential for maximizing the value of cloud investments. This involves continuous monitoring, analysis, and adjustments.

- Right-Sizing Resources: Regularly analyze resource utilization (CPU, memory, storage, network) and adjust instance sizes accordingly. Over-provisioned resources lead to unnecessary costs. Under-provisioned resources can lead to performance bottlenecks.

- Automated Scaling: Implement automated scaling mechanisms to dynamically adjust resources based on demand. This ensures optimal resource utilization and minimizes costs during periods of low activity.

- Utilizing Reserved Instances and Savings Plans: Commit to Reserved Instances or Savings Plans for stable, predictable workloads to benefit from discounted pricing.

- Data Storage Optimization: Choose appropriate storage tiers based on data access frequency. Utilize lifecycle policies to automatically move data to less expensive storage tiers (e.g., cold storage) when it is infrequently accessed.

- Cost Monitoring and Reporting: Implement cost monitoring tools to track cloud spending and identify cost-saving opportunities. Generate regular reports to analyze spending patterns and identify areas for improvement.

- Tagging and Budgeting: Apply tags to cloud resources for better cost allocation and tracking. Set budgets and alerts to monitor spending and prevent unexpected costs.

- Right-Sizing Databases: Optimize database instances by selecting appropriate instance types and storage configurations based on workload requirements. Consider managed database services for simplified management and cost optimization.

- Leveraging Serverless Technologies: Utilize serverless technologies (e.g., AWS Lambda, Azure Functions) for event-driven workloads to pay only for the actual compute time consumed. This can be highly cost-effective for intermittent tasks.
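Tagging and budgeting work together: tags make spend attributable, and budgets turn the totals into alerts. The following Python sketch assumes a hypothetical, simplified list of billing line items (each with a `cost` and a `tags` dict), not any provider's actual billing export schema, and an assumed alert threshold of 80% of budget.

```python
from collections import defaultdict

def cost_by_tag(line_items: list[dict], tag_key: str) -> dict[str, float]:
    """Sum cost per value of tag_key; resources missing the tag land in 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        totals[item["tags"].get(tag_key, "untagged")] += item["cost"]
    return dict(totals)

def budget_alerts(totals: dict[str, float], budgets: dict[str, float],
                  threshold: float = 0.8) -> list[str]:
    """Flag every group whose spend has reached threshold x its budget."""
    alerts = []
    for group, spent in totals.items():
        budget = budgets.get(group)
        if budget and spent >= threshold * budget:  # groups without a budget are skipped
            alerts.append(f"{group}: {spent:.2f} of {budget:.2f} ({spent / budget:.0%})")
    return alerts

items = [
    {"cost": 120.0, "tags": {"team": "web"}},
    {"cost": 30.0, "tags": {"team": "data"}},
    {"cost": 15.0, "tags": {}},  # untagged spend cannot be allocated to a team
    {"cost": 50.0, "tags": {"team": "web"}},
]
totals = cost_by_tag(items, "team")
print(totals)  # {'web': 170.0, 'data': 30.0, 'untagged': 15.0}
print(budget_alerts(totals, {"web": 200.0, "data": 100.0}))  # ['web: 170.00 of 200.00 (85%)']
```

A growing "untagged" bucket is itself a useful signal that tagging policies are not being enforced.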

Estimating the Total Cost of Ownership (TCO) of a Cloud Migration

Estimating the TCO provides a holistic view of the financial implications of migrating to the cloud. This includes direct costs, indirect costs, and potential savings.

The TCO calculation should consider the following elements:

- Direct Costs: These are the readily quantifiable costs associated with cloud usage.

- Compute Costs: Costs for virtual machines, containers, and serverless functions.

- Storage Costs: Costs for storing data in various storage services (e.g., object storage, block storage).

- Networking Costs: Costs for data transfer, bandwidth, and other network services.

- Database Costs: Costs for managed database services or self-managed databases.

- Licensing Costs: Costs for software licenses, operating systems, and other third-party software.

- Indirect Costs: These costs are often less visible but significantly impact the overall cost.

- Migration Costs: Costs associated with planning, migrating data, and re-architecting applications.

- Training Costs: Costs for training IT staff on cloud technologies and management.

- Management Costs: Costs for managing and maintaining cloud resources, including monitoring, security, and automation.

- Downtime Costs: Potential costs associated with service interruptions.

- Potential Savings: Cloud migration can also generate savings that should be factored into the TCO calculation.

- Reduced Hardware Costs: Elimination of the need for on-premises hardware, servers, and associated maintenance.

- Reduced Energy Costs: Lower energy consumption compared to on-premises data centers.

- Reduced IT Staff Costs: Reduced need for on-premises IT staff for hardware maintenance.

- Increased Productivity: Enhanced agility, scalability, and access to innovative services.

Example: Consider a company migrating its application to AWS. The direct costs include compute costs based on instance usage, storage costs for data in S3, and network costs for data transfer. Indirect costs encompass migration planning, training staff on AWS services, and ongoing management. Savings can be realized from reduced hardware costs, lower energy bills, and potentially increased developer productivity due to the availability of managed services.

TCO = Direct Costs + Indirect Costs – Potential Savings (all measured over the same evaluation period, e.g., three years)
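The formula above translates directly into code. In this Python sketch every figure is hypothetical, chosen only to illustrate the calculation over an assumed three-year horizon; productivity gains are left out because they are hard to express as a dollar amount.

```python
def total(costs: dict[str, int]) -> int:
    return sum(costs.values())

def tco(direct: dict[str, int], indirect: dict[str, int],
        savings: dict[str, int]) -> int:
    """TCO = Direct Costs + Indirect Costs - Potential Savings, for one period."""
    return total(direct) + total(indirect) - total(savings)

# Hypothetical three-year figures in USD, for illustration only.
direct = {"compute": 120_000, "storage": 18_000, "networking": 9_000,
          "database": 30_000, "licensing": 15_000}
indirect = {"migration": 60_000, "training": 12_000,
            "management": 24_000, "downtime": 4_000}
savings = {"hardware": 150_000, "energy": 20_000, "it_staff": 40_000}

print(tco(direct, indirect, savings))  # 82000
```

Keeping each category as a named entry makes it easy to rerun the estimate with pessimistic and optimistic values and see which assumptions drive the result.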

Security and Compliance

Migrating to the cloud necessitates a thorough evaluation of security and compliance considerations. Cloud providers offer a range of security features and adhere to various industry regulations. Understanding the shared responsibility model and how cloud providers manage security is critical for ensuring data protection and maintaining regulatory compliance during and after the migration.

Shared Responsibility Model

The shared responsibility model defines the security obligations of both the cloud provider and the customer. This model ensures that both parties take responsibility for securing different aspects of the cloud environment. The cloud provider is responsible for securing the “cloud,” meaning the underlying infrastructure, including:

- Physical security of data centers (e.g., access control, surveillance).

- Hardware and software infrastructure (e.g., servers, networking equipment, virtualization).

- Security of the cloud services themselves (e.g., patching, vulnerability management of the platform).

The customer is responsible for securing what they put in the cloud, including:

- Data (e.g., encryption, access control, data loss prevention).

- Applications (e.g., secure coding practices, vulnerability scanning).

- Operating systems, middleware, and runtime environments.

- Identity and access management (IAM) for users and resources.

- Network configuration (e.g., firewalls, intrusion detection/prevention).

The division of responsibilities varies depending on the cloud service model (IaaS, PaaS, SaaS). For example, with IaaS, the customer has more control and responsibility over the infrastructure, while with SaaS, the provider manages more aspects of the security.
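The way responsibility shifts between IaaS, PaaS, and SaaS can be expressed as a small lookup. This Python sketch is a deliberate simplification: the layer names and the cut-off points are assumptions for illustration, and the exact split for any real service should be taken from that provider's documentation.

```python
# Simplified stack, ordered from the physical layer upward (assumed layer names).
LAYERS = ["physical_facilities", "host_hardware", "virtualization",
          "operating_system", "runtime_middleware", "application"]

# Highest layer the provider manages in each service model (simplified).
PROVIDER_TOP = {"IaaS": "virtualization",
                "PaaS": "runtime_middleware",
                "SaaS": "application"}

# Responsibilities that stay with the customer in every model.
ALWAYS_CUSTOMER = {"data", "identity_and_access"}

def responsible_party(layer: str, model: str) -> str:
    if layer in ALWAYS_CUSTOMER:
        return "customer"
    top = LAYERS.index(PROVIDER_TOP[model])
    return "provider" if LAYERS.index(layer) <= top else "customer"

for model in ("IaaS", "PaaS", "SaaS"):
    print(model, {layer: responsible_party(layer, model)
                  for layer in ("operating_system", "application", "data")})
```

Whatever the model, data and access management never move entirely to the provider, which matches the customer responsibilities listed above.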

Security Features Offered by Cloud Providers

Cloud providers offer a comprehensive suite of security features to protect customer data and applications. These features are designed to address various security threats and help customers meet their security requirements.

- Encryption: Encryption is a fundamental security feature used to protect data confidentiality. Cloud providers offer various encryption options, including:

- Encryption at rest: Protects data stored in the cloud, such as data in databases, object storage, and disk volumes. Encryption keys are typically managed by the cloud provider or can be customer-managed. For example, Amazon Web Services (AWS) offers Key Management Service (KMS) for managing encryption keys, and Azure offers Azure Key Vault.

- Encryption in transit: Protects data as it travels between the customer’s environment and the cloud, or between different cloud services. This is typically achieved using Transport Layer Security (TLS), the successor to the now-deprecated SSL protocol.

- Identity and Access Management (IAM): IAM systems control access to cloud resources, ensuring that only authorized users and services can access specific data and functionalities.

- Identity providers: Cloud providers support integration with various identity providers, such as Active Directory, LDAP, and SAML-based identity providers.

- Authentication: Cloud providers offer multi-factor authentication (MFA) to enhance account security.

- Authorization: IAM systems allow for fine-grained access control based on roles, permissions, and policies. Customers can define custom roles and policies to match their specific security requirements. For example, a user might be granted read-only access to a specific database.

- Network Security: Cloud providers offer various network security features, including:

- Firewalls: Cloud-based firewalls filter inbound and outbound network traffic according to customer-configured rules; some managed firewall services also provide intrusion detection and prevention.

- Virtual Private Networks (VPNs): VPNs enable secure connections between the customer’s on-premises network and the cloud.

- Web Application Firewalls (WAFs): WAFs protect web applications from common attacks, such as cross-site scripting (XSS) and SQL injection.

- Security Monitoring and Auditing: Cloud providers offer security monitoring and auditing tools to help customers detect and respond to security threats.

- Logging: Cloud services generate logs that record user activities, system events, and security-related events.

- Monitoring: Cloud providers offer monitoring tools to track resource usage, performance metrics, and security events.

- Auditing: Customers can use auditing tools to review logs and identify potential security vulnerabilities or compliance violations.
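The fine-grained authorization described under IAM can be illustrated with a simplified policy evaluator. This Python sketch models the core rules that many IAM systems, including AWS IAM, document: requests are denied by default, a matching Allow grants access, and a matching explicit Deny overrides any Allow. Real engines also evaluate conditions, resource-based policies, permission boundaries, and more; the action and resource names here are hypothetical.

```python
from fnmatch import fnmatchcase

def _matches(patterns: list[str], value: str) -> bool:
    """True if value matches any wildcard pattern (e.g. 'db:Get*')."""
    return any(fnmatchcase(value, pattern) for pattern in patterns)

def is_allowed(statements: list[dict], action: str, resource: str) -> bool:
    allowed = False  # default deny
    for st in statements:
        if _matches(st["actions"], action) and _matches(st["resources"], resource):
            if st["effect"] == "Deny":
                return False  # an explicit deny always wins
            allowed = True
    return allowed

# Hypothetical read-only policy for one database, plus a guard-rail deny.
read_only_orders = [
    {"effect": "Allow", "actions": ["db:Get*", "db:List*"], "resources": ["db/orders"]},
    {"effect": "Deny", "actions": ["db:*"], "resources": ["db/orders-archive"]},
]
print(is_allowed(read_only_orders, "db:GetItem", "db/orders"))  # True
print(is_allowed(read_only_orders, "db:PutItem", "db/orders"))  # False
```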

Importance of Compliance with Industry Regulations

Compliance with industry regulations is crucial for organizations operating in regulated industries or handling sensitive data. Cloud providers assist customers in meeting these requirements by offering compliant services and providing tools and resources to support compliance efforts. Many organizations must adhere to regulations like:

- HIPAA (Health Insurance Portability and Accountability Act): Applies to covered entities, such as healthcare providers, and their business associates in the United States. Major cloud providers sign a Business Associate Agreement (BAA) and designate HIPAA-eligible services that provide features like data encryption, access controls, and audit logging to protect protected health information (PHI).

- GDPR (General Data Protection Regulation): Applies to organizations that process the personal data of individuals in the European Union (EU). Cloud providers offering GDPR-compliant services help customers meet requirements such as data minimization, data subject rights, and data breach notification.

- PCI DSS (Payment Card Industry Data Security Standard): Applies to organizations that handle credit card data. Cloud providers offering PCI DSS-compliant services provide features like secure storage of cardholder data, access controls, and regular security assessments.

- SOC 2 (System and Organization Controls 2): An AICPA attestation framework for reporting on a service organization’s controls relevant to security, availability, processing integrity, confidentiality, and privacy. Cloud providers undergo independent SOC 2 audits and make the resulting reports available to customers.

Cloud providers typically offer services and features that support compliance with these and other regulations. This can include providing documentation, certifications, and audit reports. However, customers are ultimately responsible for ensuring that their cloud deployments comply with all applicable regulations. This involves selecting appropriate cloud services, configuring them securely, and implementing necessary security controls.

Data Migration Strategies

Data migration is a critical phase in cloud adoption, involving the transfer of data from on-premises systems or other cloud environments to the chosen cloud provider. The strategy employed significantly impacts the project’s success, cost, and timeline. Selecting the optimal approach requires careful consideration of the data volume, application dependencies, downtime tolerance, and desired level of modernization.

Different Data Migration Approaches

Several distinct strategies exist for migrating data to the cloud, each with unique characteristics and implications. The selection of a specific strategy is driven by factors such as the complexity of the existing environment, the desired level of application transformation, and the business requirements.

- Lift-and-Shift (Rehosting): This approach moves data and applications to the cloud with minimal changes, replicating the existing infrastructure in the cloud environment. It is often the fastest and initially least expensive migration method, suitable for organizations seeking a quick transition. Because it preserves the existing architecture, it may not fully leverage cloud capabilities such as elastic scaling and cost optimization.

- Re-platforming: Re-platforming involves making some changes to the application to take advantage of cloud services, such as using managed databases or containerization. The core application logic remains largely unchanged. This approach offers a balance between speed and cloud optimization, potentially improving performance and reducing operational overhead.

- Re-factoring (Re-architecting): Re-factoring involves redesigning and rewriting the application to fully leverage cloud-native services. This is the most complex and time-consuming approach, but it offers the greatest potential for optimization, scalability, and cost savings. It often involves breaking down monolithic applications into microservices and adopting cloud-specific features like serverless computing.

Tools and Processes Involved in Data Migration

Data migration requires a combination of tools and processes to ensure data integrity, minimize downtime, and efficiently transfer data. The specific tools and processes vary depending on the chosen migration strategy, the data volume, and the complexity of the source and target environments.

- Assessment and Planning: This initial phase involves assessing the existing data landscape, identifying data sources and targets, defining migration scope, and developing a detailed migration plan. This includes selecting the appropriate migration strategy, defining the migration timeline, and identifying potential risks and mitigation strategies.

- Data Extraction: Data is extracted from the source systems. This process may involve using native tools, custom scripts, or third-party data extraction tools. The extraction method should consider data format, data volume, and performance requirements.

- Data Transformation: This step involves transforming the extracted data to meet the requirements of the target environment. Data transformation may include data cleansing, data mapping, data enrichment, and data type conversions. Tools like ETL (Extract, Transform, Load) platforms are commonly used.

- Data Loading: Transformed data is loaded into the target cloud environment. This process requires selecting the appropriate data loading method based on the data volume, network bandwidth, and target database requirements.

- Data Validation: After data loading, it is essential to validate the migrated data to ensure data integrity and accuracy. Data validation involves comparing the data in the source and target environments, identifying any discrepancies, and correcting them.

- Cutover and Go-Live: This final phase involves switching over from the source systems to the cloud environment. The cutover process requires careful planning and execution to minimize downtime and ensure a smooth transition.

- Monitoring and Optimization: After the migration is complete, monitor the performance of the cloud environment and tune the migrated workloads and data pipelines for efficiency and cost-effectiveness.
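The Data Validation step can be partly automated by comparing a row count and a content fingerprint for each table on both sides. The following Python sketch uses two in-memory SQLite databases as stand-ins for source and target; with different engines on each side you would first need to normalize value types (for example, decimals versus floats) before hashing, and very large tables are usually fingerprinted in key-range chunks. The table and key names are hypothetical and are interpolated into SQL, so they must come from trusted configuration.

```python
import hashlib
import sqlite3

def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> tuple[int, str]:
    """Row count plus a SHA-256 over all rows in key order."""
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key}").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()

def validate(source: sqlite3.Connection, target: sqlite3.Connection,
             tables: dict[str, str]) -> dict[str, dict[str, int]]:
    """Return the tables whose count or contents differ between source and target."""
    mismatches = {}
    for table, key in tables.items():
        src = table_fingerprint(source, table, key)
        dst = table_fingerprint(target, table, key)
        if src != dst:
            mismatches[table] = {"source_rows": src[0], "target_rows": dst[0]}
    return mismatches

src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (src, dst):
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
src.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Lin"), (3, "Sam")])
dst.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Lin")])
print(validate(src, dst, {"customers": "id"}))
```

Comparing a hash as well as the count catches rows that were transferred but altered, for example by an encoding problem during transformation.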

Best Practices for Data Migration

Following best practices can significantly improve the success of data migration projects, minimizing risks and maximizing the benefits of cloud adoption. These practices encompass various aspects of the migration process, from planning to execution and post-migration activities.

- Plan Thoroughly: Develop a comprehensive migration plan that includes clear objectives, timelines, resource allocation, and risk assessment. The plan should address all aspects of the migration, from data extraction to data validation.

- Choose the Right Migration Strategy: Select the appropriate migration strategy based on the specific requirements of the project, considering factors like data volume, application complexity, and business objectives.

- Select the Right Tools: Choose the appropriate data migration tools based on the specific requirements of the project, considering factors like data volume, data format, and target database requirements.

- Prioritize Data Security: Implement robust security measures throughout the migration process to protect sensitive data. This includes data encryption, access controls, and regular security audits.

- Test, Test, and Test Again: Conduct thorough testing at each stage of the migration process to ensure data integrity, application functionality, and performance. This includes data validation, user acceptance testing, and performance testing.

- Automate as Much as Possible: Automate repetitive tasks, such as data extraction, transformation, and loading, to reduce manual effort, minimize errors, and improve efficiency.

- Monitor and Optimize: Continuously monitor the performance of the cloud environment and optimize the data migration process for improved efficiency and cost-effectiveness.

- Back Up Data: Create backups of both the source and target data throughout the migration process to ensure data recoverability in case of any issues.

- Document Everything: Maintain detailed documentation of all aspects of the migration process, including the migration plan, tool configurations, and test results.

- Train Staff: Provide adequate training to staff involved in the migration process to ensure they have the necessary skills and knowledge.
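The testing and backup practices above depend on being able to prove that the target data matches the source. The sketch below is a minimal illustration, not a production tool: it compares row counts and an order-independent checksum of each table's rows. It assumes rows can be fetched from both systems as tuples of comparable values; the function names `row_digest`, `table_fingerprint`, and `validate_migration` are hypothetical.

```python
import hashlib
from typing import Iterable, Sequence


def row_digest(row: Sequence[object]) -> bytes:
    # repr() gives a simple, type-aware serialization for this sketch; a real
    # pipeline would first normalize types (dates, decimals, text encodings).
    return hashlib.sha256(repr(tuple(row)).encode("utf-8")).digest()


def table_fingerprint(rows: Iterable[Sequence[object]]) -> tuple[int, str]:
    """Return (row_count, checksum); sorting the digests makes row order irrelevant."""
    digests = sorted(row_digest(r) for r in rows)
    return len(digests), hashlib.sha256(b"".join(digests)).hexdigest()


def validate_migration(source_rows, target_rows) -> list[str]:
    """Compare source and target copies of a table; return any problems found."""
    src_count, src_sum = table_fingerprint(source_rows)
    tgt_count, tgt_sum = table_fingerprint(target_rows)
    problems = []
    if src_count != tgt_count:
        problems.append(f"row count mismatch: source={src_count}, target={tgt_count}")
    if src_sum != tgt_sum:
        problems.append("checksum mismatch: row contents differ")
    return problems


if __name__ == "__main__":
    source = [(1, "alice", 30), (2, "bob", 25)]
    target_ok = [(2, "bob", 25), (1, "alice", 30)]   # same rows, different order
    target_bad = [(1, "alice", 30), (2, "bob", 26)]  # one value changed in transit
    print(validate_migration(source, target_ok))     # []
    print(validate_migration(source, target_bad))    # ['checksum mismatch: row contents differ']
```

Sorting per-row digests, rather than XOR-ing them, keeps duplicate rows from cancelling each other out, at the cost of holding one digest per row in memory.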

Network and Connectivity

Establishing robust network connectivity is paramount when migrating to the cloud. It underpins all aspects of the migration, from the initial data transfer to the ongoing operation of cloud-based applications. A poorly designed network architecture can lead to performance bottlenecks, security vulnerabilities, and increased costs. Therefore, careful planning and implementation of network connectivity are crucial for a successful cloud migration.

Establishing Secure Network Connectivity

Securing network connectivity between on-premises infrastructure and the cloud requires a multi-layered approach that combines encryption, authentication, and access control. Together these protect data confidentiality, integrity, and availability throughout the migration and operational phases. The core goal is to ensure that traffic between the two environments travels only over encrypted, authenticated channels.

- Encryption: All data transmitted over the public internet must be encrypted to protect it from eavesdropping and tampering. This is typically achieved using protocols such as Transport Layer Security (TLS) for application-level traffic and Internet Protocol Security (IPsec) for network-level traffic.

- Authentication: Securely verifying the identity of both on-premises and cloud-based systems is essential. This can be accomplished through various methods, including:

- Multi-Factor Authentication (MFA): MFA adds an extra layer of security by requiring users to provide multiple forms of verification, such as a password and a one-time code from a mobile device.

- Certificate-Based Authentication: This involves using digital certificates to verify the identity of devices and users.

- Access Control: Strict access controls limit access to cloud resources according to the principle of least privilege: users and systems receive only the minimum permissions they need to perform their tasks. Firewalls, security groups, and identity and access management (IAM) policies are the usual enforcement mechanisms.

- Monitoring and Logging: Continuous monitoring and logging of network traffic and security events are crucial for detecting and responding to potential security threats. Security Information and Event Management (SIEM) systems can be used to collect, analyze, and correlate security logs from various sources.
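Firewall and security-group rules are a common place where least privilege erodes over time. The sketch below flags inbound rules that expose commonly attacked ports to the entire internet. It assumes a simplified, hypothetical rule format (`InboundRule`) and an illustrative list of sensitive ports; it does not use any provider's API, and protocol checks are omitted.

```python
import ipaddress
from dataclasses import dataclass

# Illustrative set of ports that should rarely, if ever, be open to the internet.
SENSITIVE_PORTS = {22: "SSH", 3389: "RDP", 3306: "MySQL", 5432: "PostgreSQL"}


@dataclass(frozen=True)
class InboundRule:
    """Hypothetical, provider-neutral inbound rule."""
    from_port: int
    to_port: int
    cidr: str  # allowed source range, e.g. "10.0.0.0/16"


def is_world_open(cidr: str) -> bool:
    """True if the range covers the whole IPv4 (0.0.0.0/0) or IPv6 (::/0) space."""
    return ipaddress.ip_network(cidr).prefixlen == 0


def audit(rules: list[InboundRule]) -> list[str]:
    """Return one finding per sensitive port reachable from anywhere."""
    findings = []
    for rule in rules:
        if not is_world_open(rule.cidr):
            continue
        for port, name in SENSITIVE_PORTS.items():
            if rule.from_port <= port <= rule.to_port:
                findings.append(f"{name} (port {port}) open to {rule.cidr}")
    return findings


if __name__ == "__main__":
    rules = [
        InboundRule(443, 443, "0.0.0.0/0"),      # public HTTPS: expected
        InboundRule(22, 22, "0.0.0.0/0"),        # SSH from anywhere: flagged
        InboundRule(5432, 5432, "10.0.0.0/16"),  # database from private range: fine
    ]
    print(audit(rules))  # ['SSH (port 22) open to 0.0.0.0/0']
```

A check like this can run in a deployment pipeline or on a schedule, feeding its findings into the same monitoring and SIEM tooling described above.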

Virtual Private Networks (VPNs) and Direct Connect Options

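Choosing the right connectivity method depends on factors such as bandwidth requirements, latency sensitivity, security needs, and budget constraints. The two main options are VPNs and dedicated private connections. The latter are referred to below by the AWS product name Direct Connect; Azure ExpressRoute and Google Cloud Interconnect are the equivalent offerings.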

- Virtual Private Networks (VPNs): VPNs create an encrypted tunnel over the public internet, allowing secure communication between on-premises networks and the cloud.

- Site-to-Site VPNs: Connect entire networks together, allowing resources to be accessed seamlessly between on-premises and cloud environments.

- Client VPNs: Allow individual users to connect to the cloud securely from their devices.

VPNs are generally less expensive and easier to set up than Direct Connect, making them suitable for modest bandwidth requirements and workloads that tolerate some latency. Because they run over the public internet, however, they are exposed to congestion and typically show higher, less predictable latency than Direct Connect.

- Direct Connect: Direct Connect provides a dedicated, private network connection between an on-premises data center and the cloud provider’s network. This eliminates the need to traverse the public internet, resulting in lower latency, higher bandwidth, and improved reliability.

- Dedicated Connections: Offer a dedicated physical connection with guaranteed bandwidth.

- Hosted Connections: Allow customers to connect to the cloud through a partner’s network infrastructure.

Direct Connect is ideal for applications that require high bandwidth, low latency, and consistent performance, such as real-time data processing, high-performance computing, and disaster recovery. Both the initial setup cost and the ongoing costs are typically higher than for VPNs, but the performance benefits often justify the investment for critical workloads.
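Bandwidth requirements are easiest to judge with a quick estimate of how long a bulk transfer would take. The sketch below assumes decimal terabytes and that roughly 80% of the nominal link rate is usable after protocol overhead and contention; both the efficiency figure and the link speeds in the example are illustrative assumptions, not provider specifications.

```python
def transfer_hours(data_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate hours to move data_tb terabytes (10**12 bytes) over a link_gbps link,
    assuming only `efficiency` of the nominal rate is actually achieved."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_tb * 10**12 * 8
    usable_bps = link_gbps * 10**9 * efficiency
    return bits / usable_bps / 3600


if __name__ == "__main__":
    # Hypothetical link speeds for comparison.
    for name, gbps in [("VPN over the internet (1 Gbps)", 1), ("Dedicated link (10 Gbps)", 10)]:
        print(f"{name}: {transfer_hours(50, gbps):.1f} hours for 50 TB")
```

Under these assumptions, 50 TB takes roughly 139 hours (nearly six days) at 1 Gbps and about 14 hours at 10 Gbps, which is the kind of gap that can tip the decision toward a dedicated connection for large initial migrations.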

Designing Network Architecture for High Availability and Low Latency

Designing a network architecture that prioritizes high availability and low latency is crucial for ensuring the performance and resilience of cloud-based applications. This involves a combination of redundancy, geographic distribution, and intelligent traffic management.

- Redundancy: Implementing redundancy at all levels of the network architecture is crucial to prevent single points of failure. This includes:

- Multiple Internet Connections: Using multiple internet service providers (ISPs) provides failover in case one connection goes down.

- Redundant VPN Tunnels or Direct Connect Connections: Establishing multiple VPN tunnels (for example, terminating in different availability zones) or multiple Direct Connect connections (ideally at separate connection locations) ensures connectivity even if one connection fails.

- Load Balancing: Distributing traffic across multiple servers or network devices prevents any single device from becoming overloaded.

- Geographic Distribution: Deploying applications and data across multiple geographic regions reduces latency for users located in different areas. Cloud providers offer multiple regions and availability zones, allowing you to deploy resources closer to your users. For example, a company serving customers in both North America and Europe could deploy its application in regions within both continents. This reduces the distance data must travel, improving response times.

- Load Balancing: Load balancing distributes network traffic across multiple servers or instances, improving performance and availability, and can span availability zones or regions. The load balancer sends each incoming request to a healthy target chosen by its configured algorithm, such as round robin or least connections, so that no single server is overwhelmed and traffic is routed efficiently.

- Content Delivery Networks (CDNs): CDNs cache content closer to users, reducing latency for static content such as images, videos, and JavaScript files. A CDN caches content at multiple points of presence (PoPs) around the world. When a user requests content, the CDN serves it from the PoP closest to the user, reducing the time it takes to receive the content.

- Network Monitoring and Optimization: Continuous monitoring of network performance is essential to identify and address potential bottlenecks and latency issues. Tools like network monitoring software and cloud provider-specific monitoring services can be used to track network traffic, latency, and packet loss. Based on the insights from monitoring, network configurations can be optimized. This could involve adjusting routing tables, scaling network resources, or implementing Quality of Service (QoS) policies.
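To make the load-balancing behaviour described above concrete, the sketch below implements one common algorithm, least connections: each request goes to the healthy backend with the fewest active connections. The `Backend` and `LeastConnectionsBalancer` classes are hypothetical and far simpler than a managed load balancer, which also handles health probing, connection draining, and many other concerns.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True         # would be set by health checks in a real system
    active_connections: int = 0


class LeastConnectionsBalancer:
    """Toy balancer: picks the healthy backend with the fewest active connections.
    Ties go to the backend listed first."""

    def __init__(self, backends: list[Backend]):
        self.backends = backends

    def pick(self) -> Backend:
        candidates = [b for b in self.backends if b.healthy]
        if not candidates:
            raise RuntimeError("no healthy backends")
        chosen = min(candidates, key=lambda b: b.active_connections)
        chosen.active_connections += 1   # request starts
        return chosen

    def release(self, backend: Backend) -> None:
        backend.active_connections -= 1  # request finished


if __name__ == "__main__":
    pool = [Backend("a"), Backend("b"), Backend("c", healthy=False)]
    lb = LeastConnectionsBalancer(pool)
    print([lb.pick().name for _ in range(4)])  # ['a', 'b', 'a', 'b']; 'c' is skipped
```

Least connections adapts better than plain round robin when requests vary widely in duration, because slow requests keep a backend's count high and steer new traffic elsewhere.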

Vendor Lock-in and Portability

Migrating to the cloud presents a significant opportunity to leverage advanced technologies and optimize resource utilization. However, it’s crucial to address the potential challenges associated with vendor lock-in and ensure application portability. Understanding these concepts and implementing appropriate strategies is paramount for long-term flexibility and business agility.

Understanding Vendor Lock-in

Vendor lock-in refers to a situation where a customer becomes dependent on a particular cloud provider’s services, making it difficult and costly to switch to another provider. This dependence can arise from proprietary technologies, unique service implementations, or complex data formats that are not easily transferable. The implications of vendor lock-in can be substantial, including increased costs, limited innovation, and reduced negotiating power.

Mitigating Vendor Lock-in Risks

Several strategies can be employed to mitigate the risks of vendor lock-in and enhance cloud portability. These approaches often involve a combination of architectural choices, technology selection, and operational practices.

- Adopting Open Standards and Technologies: Favoring open-source technologies and industry-standard protocols minimizes dependence on proprietary solutions. This allows for easier migration between providers as the underlying infrastructure and code are more portable. For example, utilizing open-source databases like PostgreSQL or MySQL instead of a proprietary database offered by a specific cloud provider reduces lock-in.

- Containerization: Packaging applications as containers (for example, with Docker) bundles them with all their dependencies, and running them on an orchestrator available across providers, such as Kubernetes, keeps deployment and management consistent across cloud environments. Portability still depends on the application avoiding provider-specific services.

- Microservices Architecture: Designing applications as a collection of loosely coupled microservices enables independent scaling and deployment of individual components. This modularity simplifies the process of migrating or re-platforming specific services without affecting the entire application.

- Abstraction Layers: Implementing abstraction layers that separate the application logic from the underlying cloud infrastructure allows for a more flexible and portable architecture. This involves creating APIs or middleware that provide a consistent interface to cloud services, regardless of the provider.

- Multi-Cloud Strategy: Distributing workloads across multiple cloud providers, also known as a multi-cloud strategy, reduces reliance on a single vendor. This approach offers greater flexibility, improved resilience, and the ability to leverage the strengths of different providers.

- Regular Backups and Data Portability: Regularly backing up data and ensuring it can be exported in a standard format is essential for data portability. This makes it practical to move data to another provider, whether for a planned migration or a disaster recovery scenario.

- Contract Negotiation: Carefully negotiating contracts with cloud providers can help to mitigate lock-in risks. This includes clauses related to data portability, service level agreements (SLAs), and pricing transparency.
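The abstraction-layer and data-portability strategies above can be combined in code. The sketch below defines a hypothetical provider-neutral `ObjectStore` interface, an in-memory stand-in backend, and application logic that depends only on the interface and stores data as plain JSON. In practice, each provider would get its own adapter class wrapping that provider's official SDK; those adapters are not shown, and all names here are illustrative.

```python
import json
from abc import ABC, abstractmethod


class ObjectStore(ABC):
    """Provider-neutral interface that application code depends on (hypothetical)."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...

    @abstractmethod
    def list_keys(self) -> list[str]: ...


class InMemoryStore(ObjectStore):
    """Stand-in backend for tests; real adapters would wrap each provider's SDK."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def list_keys(self) -> list[str]:
        return sorted(self._objects)


def save_invoice(store: ObjectStore, invoice_id: str, invoice: dict) -> None:
    # Application logic sees only ObjectStore and writes plain JSON,
    # a standard format that any provider can ingest.
    store.put(f"invoices/{invoice_id}.json", json.dumps(invoice).encode("utf-8"))


def copy_all(src: ObjectStore, dst: ObjectStore) -> int:
    """Move every object between two backends through the same interface."""
    keys = src.list_keys()
    for key in keys:
        dst.put(key, src.get(key))
    return len(keys)


if __name__ == "__main__":
    old_provider, new_provider = InMemoryStore(), InMemoryStore()
    save_invoice(old_provider, "42", {"total": 99.5})
    print(copy_all(old_provider, new_provider))                 # 1
    print(json.loads(new_provider.get("invoices/42.json")))     # {'total': 99.5}
```

The trade-off is that a lowest-common-denominator interface hides provider-specific features, so teams usually abstract only the services they most expect to move.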

Containerization Technologies: Docker and Kubernetes

Containerization has emerged as a pivotal technology for enhancing application portability and simplifying cloud migrations. Docker and Kubernetes are two leading technologies in this space, each playing a distinct role in containerized application management.

- Docker: Docker is a platform for building, shipping, and running applications in containers. It provides a standardized way to package an application and its dependencies into a single unit, called a container. This container can then be deployed and run consistently across different environments, regardless of the underlying infrastructure.

Docker uses a layered filesystem in which each layer represents a change to the container image. This architecture allows efficient storage and distribution of images, because only the changed layers need to be transferred.

A practical example is packaging a web application with its dependencies (e.g., a Python application, a web server like Nginx, and any required libraries) into a Docker container. This container can then be deployed on any platform that supports Docker, such as a local development machine, a cloud provider’s virtual machine, or a Kubernetes cluster.

- Kubernetes: Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides a robust framework for managing containers across a cluster of machines, ensuring high availability, scalability, and efficient resource utilization.

Kubernetes uses a declarative approach to configuration: users define the desired state of their applications, and Kubernetes continuously manages resources to achieve that state. This approach simplifies the management of complex deployments and enables automated scaling and self-healing.

For example, consider a web application that must handle fluctuating traffic. With a Horizontal Pod Autoscaler configured, Kubernetes can automatically adjust the number of pod replicas based on resource utilization (e.g., CPU or memory). During a surge in traffic, it creates more replicas to handle the load; when traffic subsides, it scales back down to conserve resources.

- Comparison:

- Scope: Docker focuses on containerization (packaging applications), while Kubernetes focuses on orchestration (managing containers at scale).

- Functionality: Docker builds and runs containers. Kubernetes deploys, scales, and manages containers.

- Complexity: Docker is generally easier to learn and use for basic containerization tasks. Kubernetes is more complex but offers more advanced features for managing large-scale deployments.

- Use Cases: Docker is ideal for creating and running individual containers. Kubernetes is best suited for managing containerized applications in production environments.
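The traffic-driven scaling in the Kubernetes example above is typically handled by the Horizontal Pod Autoscaler, whose documentation describes a proportional rule: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies that rule with a 10% tolerance band (the documented default) and minimum/maximum replica bounds. It leaves out parts of the real controller, such as stabilization windows and handling of missing or not-yet-ready metrics, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional HPA-style scaling rule, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas                 # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale out to 6.
    print(desired_replicas(4, 90, 60))
    # Traffic drops to 20% CPU: scale in to 2.
    print(desired_replicas(4, 20, 60))
    # 63% is within 10% of the target: stay at 4.
    print(desired_replicas(4, 63, 60))
```

The tolerance band prevents the replica count from flapping when utilization hovers just above or below the target.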

Performance and Scalability

Cloud migration success hinges not only on choosing the right provider but also on ensuring the migrated applications perform optimally and can handle fluctuating workloads. Performance and scalability are intertwined, as scalability allows applications to adapt to changing demands without compromising performance. Effective management of these aspects is crucial for cost efficiency, user satisfaction, and business agility.

Importance of Scalability and Elasticity in the Cloud

Scalability and elasticity are fundamental advantages of cloud computing, enabling businesses to adjust their resources dynamically based on real-time needs. This dynamic allocation is critical for maintaining performance, controlling costs, and ensuring application availability, especially during peak usage periods.

Elasticity, in the cloud context, refers to the ability of a system to automatically scale resources up or down based on demand.

This capability is often described as:

The ability to rapidly and elastically provision and release compute, storage, and network resources to scale up or down based on demand.

There are two main types of scalability:

- Vertical Scaling (Scale Up/Down): This involves increasing or decreasing the resources allocated to a single instance, such as adding CPU, RAM, or storage to an existing server. While straightforward, vertical scaling is limited by the largest instance size available and can require a restart, which causes downtime during scaling operations.

- Horizontal Scaling (Scale Out/In): This involves adding or removing instances of an application or service. This approach offers greater flexibility and resilience, because capacity can grow well beyond the limits of any single machine. Horizontal scaling is typically achieved through load balancing and automated instance provisioning.

Elasticity leverages both vertical and horizontal scaling, but its defining characteristic is its automation. Cloud providers offer services like auto-scaling groups that monitor resource utilization (CPU, memory, network I/O) and automatically adjust the number of instances based on predefined thresholds. This automated responsiveness is key to cost optimization, as resources are only provisioned when needed. Consider a retail website during a holiday season; auto-scaling ensures the site handles increased traffic without manual intervention, preventing performance degradation or service outages.

This automated scaling can yield significant cost savings compared to on-premises infrastructure, where resources are often over-provisioned to handle peak loads.
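Auto-scaling groups usually combine metric thresholds, size bounds, and a cooldown period so the group does not oscillate. The sketch below models a simple threshold policy with illustrative values; the class and function names are hypothetical, real services offer richer policy types (such as target tracking), and the replay does not model how CPU would fall as instances are added.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ScalingPolicy:
    # Illustrative values only; they are not any provider's defaults.
    scale_out_cpu: float = 70.0  # add an instance when average CPU % is above this
    scale_in_cpu: float = 30.0   # remove an instance when average CPU % is below this
    min_size: int = 2
    max_size: int = 8
    cooldown_s: int = 300        # after a change, ignore signals for this many seconds


def next_capacity(policy: ScalingPolicy, capacity: int, avg_cpu: float,
                  now_s: int, last_change_s: Optional[int]) -> int:
    """One evaluation of a simple threshold policy."""
    if last_change_s is not None and now_s - last_change_s < policy.cooldown_s:
        return capacity                                # still in cooldown
    if avg_cpu > policy.scale_out_cpu:
        return min(policy.max_size, capacity + 1)      # scale out
    if avg_cpu < policy.scale_in_cpu:
        return max(policy.min_size, capacity - 1)      # scale in
    return capacity


def simulate(cpu_readings: List[float], interval_s: int = 60) -> List[int]:
    """Replay one average-CPU reading per interval and record the group size."""
    policy = ScalingPolicy()
    capacity, last_change = policy.min_size, None
    history = []
    for i, cpu in enumerate(cpu_readings):
        now = i * interval_s
        new_capacity = next_capacity(policy, capacity, cpu, now, last_change)
        if new_capacity != capacity:
            capacity, last_change = new_capacity, now
        history.append(capacity)
    return history


if __name__ == "__main__":
    # Sustained high CPU: the cooldown spaces out the scale-out steps.
    print(simulate([50, 85, 90, 90, 90, 90, 90, 20]))  # [2, 3, 3, 3, 3, 3, 4, 4]
```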

Monitoring and Optimizing Application Performance in the Cloud

Effective monitoring and optimization are crucial for ensuring applications perform well in the cloud. Cloud providers offer a range of tools and services for monitoring application performance, identifying bottlenecks, and making data-driven decisions about resource allocation and code optimization.

Comprehensive monitoring involves several key areas:

- Resource Utilization: Monitoring CPU usage, memory consumption, disk I/O, and network traffic provides insights into resource bottlenecks. High CPU utilization may indicate a need for more processing power, while excessive memory consumption could point to inefficient code or memory leaks.

- Application Performance Metrics: Tracking key performance indicators (KPIs) such as response times, error rates, and transaction throughput is essential for understanding application health and user experience. High response times or error rates can indicate performance issues or code errors.

- Database Performance: Monitoring database queries, connection times, and storage utilization is crucial, because the database is often the bottleneck for overall application performance. Slow queries or high storage utilization can reduce application responsiveness.

- Network Performance: Monitoring network latency, packet loss, and bandwidth utilization is important for identifying network-related issues. High latency or packet loss can degrade application performance, especially for applications that are sensitive to network conditions.
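Response times are usually summarized as percentiles rather than averages, because a handful of very slow requests can hide behind a healthy mean. The sketch below computes nearest-rank p50 and p95 latency and an error rate from a batch of request records. The `Request` record format and the choice to count only 5xx responses as errors are assumptions for illustration; monitoring services compute these statistics for you from collected metrics.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    status: int  # HTTP status code


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples <= it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(requests: list[Request]) -> dict[str, float]:
    latencies = [r.latency_ms for r in requests]
    errors = sum(1 for r in requests if r.status >= 500)  # server-side errors only
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "error_rate": errors / len(requests),
    }


if __name__ == "__main__":
    # 20 hypothetical requests taking 10, 20, ..., 200 ms; two of them failed.
    sample = [Request(float(ms), 500 if ms in (150, 200) else 200)
              for ms in range(10, 201, 10)]
    print(summarize(sample))  # {'p50_ms': 100.0, 'p95_ms': 190.0, 'error_rate': 0.1}
```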

Optimization strategies include:

- Right-Sizing Instances: Selecting the appropriate instance sizes based on workload requirements prevents over-provisioning and reduces costs. Analyzing resource utilization data helps identify instances that are underutilized or over-provisioned.

- Code Optimization: Identifying and resolving performance bottlenecks in the application code, such as inefficient database queries or memory leaks, can significantly improve performance. Profiling tools and code analysis can help identify areas for improvement.

- Caching: Implementing caching mechanisms to store frequently accessed data can reduce database load and improve response times. Caching can be implemented at various levels, including web server caching, application caching, and database caching.

- Load Balancing: Distributing traffic across multiple instances using load balancers ensures high availability and improves performance. Load balancers can also perform health checks to detect and remove unhealthy instances.

- Database Optimization: Optimizing database queries, indexing tables, and tuning database parameters can improve database performance. Regularly monitoring database performance and analyzing query execution plans can help identify areas for improvement.
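The application-level caching strategy above is often implemented as cache-aside: read from the cache first, and on a miss or an expired entry load from the database and store the result with a time-to-live (TTL). The sketch below is a minimal in-process version; `TTLCache` and `get_or_load` are hypothetical names, and a shared cache service would normally replace the dictionary in production.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Cache-aside helper: entries expire ttl_s seconds after they are loaded."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock  # injectable so the example below can use a fake clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]                 # fresh entry: skip the database
        self.misses += 1
        value = loader(key)                 # miss or expired: query the source
        self._entries[key] = (now + self.ttl_s, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop an entry, e.g. after the underlying record is updated."""
        self._entries.pop(key, None)


if __name__ == "__main__":
    fake_now = [0.0]
    db_calls = []

    def query_db(product_id):
        db_calls.append(product_id)         # stands in for a slow database round trip
        return {"id": product_id, "price": 10}

    cache = TTLCache(ttl_s=60, clock=lambda: fake_now[0])
    cache.get_or_load("p1", query_db)       # miss: database queried
    cache.get_or_load("p1", query_db)       # hit: served from cache
    fake_now[0] = 61.0
    cache.get_or_load("p1", query_db)       # expired: database queried again
    print(len(db_calls), cache.hits, cache.misses)  # 2 1 2
```

The TTL trades freshness for database load: a longer TTL absorbs more reads but serves stale data for longer, which is why writes typically call `invalidate` as well.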

Each major cloud provider offers its own tools for monitoring and optimization:

- CloudWatch (AWS): Offers comprehensive monitoring services for AWS resources, including metrics, logs, and alarms. CloudWatch can be used to monitor resource utilization, application performance, and custom metrics.

- Azure Monitor (Azure): Provides a unified monitoring platform for Azure resources, including metrics, logs, and alerts. Azure Monitor can be used to monitor application performance, infrastructure health, and security events.

- Cloud Monitoring (Google Cloud): Offers monitoring and logging services for Google Cloud resources, including metrics, logs, and dashboards. Cloud Monitoring can be used to monitor application performance, infrastructure health, and custom metrics.

By leveraging these tools and implementing optimization strategies, organizations can ensure their applications perform optimally in the cloud and provide a superior user experience.

Diagram Illustrating Auto-Scaling Capabilities of a Cloud Provider

Below is a descriptive representation of an auto-scaling setup within a cloud environment.

                 +--------------------+
                 |    User Traffic    |
                 +----------+---------+
                            |
                            | HTTP/HTTPS
                            v
         +-------------------------------------+
         |            Load Balancer            |
         |  (e.g., Application Load Balancer)  |
         +---+-----------------------------+---+
             |        Health Checks        |
             v                             v
  +---------------------+       +---------------------+
  | Auto Scaling Group  |       | Auto Scaling Group  |
  | (Monitoring CPU,    |       | (Monitoring CPU,    |
  |  Memory, etc.)      |       |  Memory, etc.)      |
  +----------+----------+       +----------+----------+
             |                             |
             |  Scale Out                  |  Scale In
             |  (Add Instances)            |  (Remove Instances)
      +------+------+               +------+------+
      v             v               v             v
+----------+  +----------+    +----------+  +----------+
| Instance |  | Instance |    | Instance |  | Instance |
| (App 1)  |  | (App 2)  |    | (App 3)  |  | (App 4)  |
+----------+  +----------+    +----------+  +----------+

Diagram Description:

The diagram illustrates a typical auto-scaling setup. At the top, “User Traffic” represents incoming requests from users. These requests are routed through a “Load Balancer,” which distributes the traffic across multiple instances of an application. The load balancer continuously performs “Health Checks” on the application instances to ensure they are responsive and healthy.

Below the load balancer, two “Auto Scaling Groups” are shown. Each group monitors key metrics like CPU utilization, memory usage, and other configurable parameters. Based on these metrics, the auto-scaling groups automatically adjust the number of instances. When the load increases and the metrics exceed predefined thresholds, the groups “Scale Out” by adding new instances to handle the increased traffic.

Conversely, when the load decreases, the groups “Scale In” by removing underutilized instances, optimizing resource usage and reducing costs. The “Instances” represent the actual application servers, each running the application code. The diagram demonstrates the dynamic and automated nature of cloud auto-scaling, allowing applications to adapt to fluctuating workloads.

Support and Management

Selecting the right cloud provider involves not only assessing its technical capabilities but also understanding the support and management infrastructure offered. Comprehensive support and effective management tools are crucial for the smooth operation and optimization of cloud resources, ensuring business continuity, and minimizing operational overhead. The availability and quality of support can significantly impact the success of a cloud migration and the ongoing management of cloud-based applications and infrastructure.

Support Options Offered by Cloud Providers

Cloud providers offer a variety of support options, ranging from basic, free tiers to premium, paid services. The level of support often correlates with the cost and the complexity of the services being used. Tier names and exact contents vary by provider; the list below describes a typical progression. Understanding the different support tiers and the services they provide is essential for choosing a provider that aligns with an organization's specific needs and budget.

- Basic Support: Typically offered at no cost, this level of support usually includes access to documentation, community forums, and limited technical support for billing and account management. It may not include direct access to technical experts or guaranteed response times. This tier is suitable for users with limited technical needs or those who are experimenting with cloud services.

- Developer Support: This paid tier provides access to technical support via email and potentially phone, with faster response times than basic support. It often includes access to support engineers and may offer guidance on best practices and troubleshooting.

- Business Support: This tier offers more comprehensive support, including faster response times, access to support engineers, and potentially proactive support, such as architectural reviews and guidance on optimizing cloud resources. It is suitable for businesses with critical workloads and a need for rapid issue resolution.

- Enterprise Support: Typically the highest standard tier, this level provides the most comprehensive services, including dedicated technical account managers (TAMs), 24/7 support, and proactive assistance with performance optimization, security, and compliance. It is often chosen by large enterprises with complex cloud environments and stringent service level agreements (SLAs).

- Premium Support: Some providers offer specialized premium support options tailored to specific needs, such as mission-critical support, which provides a higher level of availability and rapid response times. This is often the most expensive support tier.

Importance of Comprehensive Documentation and Support

Selecting a cloud provider with comprehensive documentation and robust support is paramount for several reasons. Well-documented services and readily available support resources can significantly reduce the learning curve, accelerate troubleshooting, and minimize downtime. This directly translates to increased productivity, reduced operational costs, and improved business agility.

- Documentation: High-quality documentation provides detailed information on how to use cloud services, including tutorials, API references, and best practices. It enables users to quickly learn how to implement and manage cloud resources effectively. Comprehensive documentation reduces the reliance on support staff and empowers users to resolve issues independently.

- Knowledge Base: A well-maintained knowledge base contains solutions to common problems, FAQs, and troubleshooting guides. It serves as a valuable resource for self-service support and helps users resolve issues quickly.

- Community Forums: Active community forums allow users to share knowledge, ask questions, and receive assistance from other users and experts. These forums provide a valuable source of information and can accelerate problem-solving.

- Technical Support Channels: Reliable technical support channels, such as email, phone, and chat, provide direct access to support engineers who can assist with complex issues. Fast response times and knowledgeable support staff are critical for minimizing downtime and resolving critical issues.

Tools and Services for Managing and Monitoring Cloud Resources

Cloud providers offer a suite of tools and services designed to manage and monitor cloud resources effectively. These tools provide insights into resource utilization, performance metrics, security posture, and cost optimization opportunities. Leveraging these tools is essential for optimizing cloud environments, ensuring business continuity, and maintaining a high level of service availability.

- Monitoring Services: These services collect and analyze performance metrics, such as CPU utilization, memory usage, and network traffic. They provide real-time insights into the health and performance of cloud resources. Examples include Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring.

- Logging Services: These services collect and store logs from various cloud resources, such as virtual machines, databases, and applications. Logs are essential for troubleshooting issues, auditing security events, and analyzing application behavior. Examples include Amazon CloudWatch Logs, Azure Monitor Logs, and Google Cloud Logging.

- Alerting Services: These services allow users to define thresholds and trigger alerts when specific events occur, such as high CPU utilization or a failed database connection. Alerts enable proactive issue detection and rapid response. Examples include Amazon CloudWatch Alarms, Azure Monitor Alerts, and Google Cloud Monitoring Alerts.

- Cost Management Tools: These tools provide insights into cloud spending, allowing users to track costs, identify areas for optimization, and set budgets. They help control cloud costs and prevent unexpected expenses. Examples include AWS Cost Explorer, Azure Cost Management, and Google Cloud Cost Management.

- Automation Tools: These tools enable the automation of tasks, such as infrastructure provisioning, configuration management, and application deployment. Automation reduces manual effort, improves efficiency, and minimizes the risk of errors. Examples include AWS CloudFormation, Azure Resource Manager, and Google Cloud Deployment Manager.

- Security and Compliance Tools: These tools help users manage security and compliance requirements, such as vulnerability scanning, identity and access management, and data encryption, and help ensure the security and integrity of cloud resources. Examples include AWS Security Hub, Microsoft Defender for Cloud (formerly Azure Security Center), and Google Cloud Security Command Center.
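Declarative automation tools such as those listed above generally work by comparing the infrastructure you declare with what already exists and then applying only the difference. The sketch below shows that comparison step in isolation, using plain dictionaries as hypothetical resource specifications keyed by resource name. It is not how any particular tool is implemented; real tools add dependency ordering, provider API calls, and persistent state tracking.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    create: list[str] = field(default_factory=list)
    update: list[str] = field(default_factory=list)
    delete: list[str] = field(default_factory=list)


def plan_changes(desired: dict[str, dict], actual: dict[str, dict]) -> Plan:
    """Diff declared resources against existing ones (both keyed by resource name)."""
    plan = Plan()
    for name, spec in sorted(desired.items()):
        if name not in actual:
            plan.create.append(name)        # declared but missing
        elif actual[name] != spec:
            plan.update.append(name)        # exists but has drifted from the declaration
    for name in sorted(actual):
        if name not in desired:
            plan.delete.append(name)        # exists but no longer declared
    return plan


if __name__ == "__main__":
    desired = {
        "web-sg": {"ingress": [443]},
        "app-vm": {"size": "medium"},
        "logs-bucket": {"versioning": True},
    }
    actual = {
        "web-sg": {"ingress": [443, 22]},   # someone opened SSH by hand
        "app-vm": {"size": "medium"},
        "old-vm": {"size": "large"},
    }
    print(plan_changes(desired, actual))
    # Plan(create=['logs-bucket'], update=['web-sg'], delete=['old-vm'])
```

Reviewing a plan like this before applying it is also a practical way to detect configuration drift introduced by manual changes.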

Future-Proofing Your Cloud Strategy

The cloud computing landscape is constantly evolving, driven by technological advancements and shifting business requirements. Selecting a cloud provider is not a one-time decision but a strategic commitment that must anticipate future trends. This involves evaluating not only current capabilities but also the provider’s roadmap for innovation, their adaptability, and their ability to support your long-term business objectives. Failing to consider future developments can lead to vendor lock-in, missed opportunities, and ultimately, hinder your organization’s ability to remain competitive.

Trends and Innovations in Cloud Computing

Cloud computing is experiencing rapid innovation, with several key trends shaping its future. Understanding these trends is critical for developing a future-proof cloud strategy.

- Serverless Computing: Serverless computing allows developers to build and run applications without managing servers. This model offers significant advantages in terms of scalability, cost-efficiency, and developer productivity. The increasing adoption of serverless architectures reflects a move towards more agile and responsive application development. For instance, companies like Netflix and Coca-Cola are leveraging serverless functions for event processing and data analytics, resulting in improved scalability and reduced operational overhead.

- Edge Computing: Edge computing brings computation and data storage closer to the data source, reducing latency and improving performance for applications that require real-time processing. This is particularly relevant for applications like IoT devices, autonomous vehicles, and augmented reality. For example, industrial manufacturers are deploying edge computing solutions to analyze data from sensors on the factory floor, enabling real-time monitoring and predictive maintenance.

- Artificial Intelligence and Machine Learning (AI/ML): Cloud providers are increasingly offering AI/ML services, including pre-trained models, development tools, and infrastructure for training custom models. These services are democratizing AI/ML, making it accessible to a wider range of organizations. Healthcare providers are using AI/ML to analyze medical images, improve diagnostics, and personalize patient treatment plans.

- Hybrid and Multi-Cloud Strategies: Organizations are increasingly adopting hybrid and multi-cloud strategies to avoid vendor lock-in, optimize costs, and leverage the best services from different providers. This approach requires robust management and orchestration tools to ensure seamless integration and portability across different cloud environments. For example, a retail company might use one cloud provider for its e-commerce platform, another for its data warehousing, and a third for its customer relationship management (CRM) system.

- Sustainability and Green Computing: With growing awareness of environmental concerns, cloud providers are focusing on sustainability initiatives, such as using renewable energy sources and optimizing data center efficiency. Choosing a cloud provider with a strong commitment to sustainability can help organizations reduce their carbon footprint. For example, Google has committed to running its data centers on 24/7 carbon-free energy by 2030.

Importance of Alignment with Long-Term Business Goals

The cloud provider you select must align with your organization’s long-term business goals. This means weighing scalability, flexibility, cost-effectiveness, innovation, and portability. A provider that supports your future growth and evolving needs is crucial for success.

- Scalability: The provider should be able to scale resources up or down to meet changing demands. Consider whether the provider can handle anticipated growth in data volume, user traffic, and application complexity. For example, a streaming service needs a cloud provider that can rapidly scale its video transcoding and delivery infrastructure to handle peak viewing times.

- Flexibility: The provider should offer a wide range of services and tools to support your business needs. This includes compute, storage, networking, databases, and specialized services like AI/ML and IoT. For example, a research institution needs a cloud provider that offers flexible compute resources for scientific simulations and data analysis.

- Cost-Effectiveness: The provider’s pricing models should be transparent and predictable, allowing you to optimize your cloud spending. Consider factors such as pay-as-you-go pricing, reserved instances, and cost management tools. For instance, a startup company needs a cloud provider that offers cost-effective storage and compute options to manage its limited budget.

- Innovation: The provider should continuously innovate and offer new services and features to help you stay ahead of the competition. This includes staying up-to-date with the latest technologies and trends in cloud computing. For example, a software development company needs a cloud provider that offers the latest development tools and services to improve developer productivity and accelerate time to market.

- Vendor Lock-in Mitigation: While some degree of vendor lock-in is inevitable, choose a provider that facilitates data portability and allows you to migrate workloads to other platforms if needed. Using open standards and technologies can reduce lock-in. For instance, an enterprise should prioritize a provider that offers tools and services for data migration and supports open-source technologies to avoid being trapped by proprietary solutions.
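One common way to apply this portability advice in code is to have application logic depend on a small provider-neutral interface, with one adapter per cloud. The sketch below assumes a hypothetical `ObjectStore` interface and includes only an in-memory backend. Real adapters would wrap each provider's storage SDK, which is not shown here. The hypothetical `migrate` helper shows the benefit: moving data to another provider becomes a copy between two implementations of the same interface.

```python
from abc import ABC, abstractmethod


class ObjectStore(ABC):
    """Hypothetical provider-neutral interface that application code depends on."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class InMemoryStore(ObjectStore):
    """Stand-in backend; a real adapter would wrap a provider's storage SDK."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_report(store: ObjectStore, report_id: str, content: str) -> str:
    """Business logic sees only ObjectStore, so the backend can be swapped."""
    key = f"reports/{report_id}.txt"
    store.put(key, content.encode("utf-8"))
    return key


def migrate(src: ObjectStore, dst: ObjectStore, keys: list[str]) -> None:
    """Copy objects between backends, e.g. when moving to another provider."""
    for key in keys:
        dst.put(key, src.get(key))
```

The trade-off is that a neutral interface exposes only the features every backend supports, so provider-specific capabilities may need separate, clearly isolated code paths.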

Steps for Developing a Cloud Strategy Roadmap

Creating a comprehensive cloud strategy roadmap is essential for future-proofing your cloud investments. This roadmap should outline your goals, requirements, and the steps needed to achieve them.

- Define Business Goals and Objectives: Clearly articulate your business goals and how cloud computing can help you achieve them. This involves identifying your key performance indicators (KPIs) and defining your desired outcomes.

- Assess Current State: Evaluate your existing IT infrastructure, applications, and processes. Identify the strengths and weaknesses of your current environment and determine what needs to be migrated or modernized.

- Identify Requirements: Define your technical and business requirements for cloud computing. This includes considering factors such as performance, security, compliance, and cost.

- Evaluate Cloud Providers: Research and compare different cloud providers based on your requirements. Consider factors such as services offered, pricing, support, and future roadmap.

- Develop a Migration Plan: Create a detailed migration plan that outlines the steps needed to move your workloads to the cloud. This includes selecting the appropriate migration strategy, choosing the right tools, and defining a timeline.

- Implement and Monitor: Implement your migration plan and continuously monitor your cloud environment. This includes tracking performance, security, and cost, and making adjustments as needed.

- Optimize and Innovate: Continuously optimize your cloud environment to improve performance, reduce costs, and leverage new cloud services. Stay up-to-date with the latest cloud trends and innovations to identify new opportunities.
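For the "Evaluate Cloud Providers" step, a weighted scoring matrix is one simple way to turn requirements into a comparable number per provider. In this sketch, the criteria, their weights, the 1 to 5 scale, and the provider scores are all hypothetical placeholders. In practice they would come from the requirements identified earlier in the roadmap.

```python
# Hypothetical criteria and weights; weights should sum to 1.0.
WEIGHTS = {"services": 0.30, "pricing": 0.25, "security": 0.25, "support": 0.20}


def weighted_score(scores: dict[str, float],
                   weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion scores (1 to 5) into one weighted score."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank_providers(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (provider, score) pairs, highest score first."""
    ranked = [(name, round(weighted_score(s), 2)) for name, s in candidates.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    candidates = {  # placeholder scores for illustration only
        "Provider A": {"services": 5, "pricing": 3, "security": 4, "support": 4},
        "Provider B": {"services": 4, "pricing": 5, "security": 4, "support": 2},
    }
    for name, score in rank_providers(candidates):
        print(f"{name}: {score}")
```

Raising an error on a missing criterion, rather than treating it as zero, prevents an incomplete evaluation from silently ranking a provider lower.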

Closing Notes

Selecting the optimal cloud provider for your migration is a multifaceted process that requires a strategic approach. By carefully evaluating business objectives, technical requirements, and long-term goals, organizations can confidently choose a provider that fosters innovation, drives efficiency, and supports a successful transition to the cloud. The considerations outlined here provide a foundation for informed decision-making, enabling organizations to realize the full potential of cloud computing and future-proof their IT strategies.

FAQ

What is the difference between Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS)?

IaaS provides the fundamental building blocks of IT infrastructure—servers, storage, and networking—allowing users to manage the operating systems, middleware, and applications. PaaS offers a platform for developing, running, and managing applications without the complexity of managing the underlying infrastructure. SaaS delivers software applications over the internet, typically on a subscription basis, with the provider managing all aspects of the application and infrastructure.
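The split of responsibilities described above can be expressed as a simple lookup. This sketch uses a simplified, hypothetical layer list ordered from the application down to the network. Real shared-responsibility models vary by provider and service. Data and identity are deliberately left out of the list; in most shared-responsibility models they remain at least partly the customer's concern under every service model.

```python
from enum import Enum

# Simplified stack, ordered from the top (application) to the bottom (network).
LAYERS = ("application", "runtime", "middleware", "operating_system",
          "virtualization", "servers", "storage", "networking")


class ServiceModel(Enum):
    IAAS = "IaaS"
    PAAS = "PaaS"
    SAAS = "SaaS"


# Index in LAYERS at which the provider takes over (simplified assumption).
_PROVIDER_FROM = {
    ServiceModel.IAAS: LAYERS.index("virtualization"),
    ServiceModel.PAAS: LAYERS.index("runtime"),
    ServiceModel.SAAS: 0,
}


def customer_managed(model: ServiceModel) -> tuple[str, ...]:
    """Layers the customer operates under the given service model."""
    return LAYERS[:_PROVIDER_FROM[model]]


def provider_managed(model: ServiceModel) -> tuple[str, ...]:
    """Layers the provider operates under the given service model."""
    return LAYERS[_PROVIDER_FROM[model]:]


if __name__ == "__main__":
    for model in ServiceModel:
        managed = customer_managed(model) or "nothing in this stack"
        print(f"{model.value}: customer manages {managed}")
```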

How do I calculate the total cost of ownership (TCO) for cloud migration?

TCO calculation compares the costs of the on-premises and cloud environments over the same time horizon. On-premises costs include hardware, software, power, cooling, and IT staff. Cloud costs include compute, storage, network, and support. Additional considerations include one-time migration costs, training expenses, and potential savings from reduced IT management overhead.
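The TCO comparison amounts to summing one-time and recurring costs for each environment over the same horizon. The following sketch assumes a single up-front hardware purchase that lasts the whole horizon (no refresh cycle). It models reduced management overhead as a lower cloud staff cost. The field names and example figures are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class OnPremCosts:
    hardware_upfront: float        # assumed to last the whole horizon (no refresh)
    software_per_year: float
    power_cooling_per_year: float
    staff_per_year: float


@dataclass
class CloudCosts:
    compute_per_year: float
    storage_per_year: float
    network_per_year: float
    support_per_year: float
    staff_per_year: float          # reduced management overhead shows up here
    migration_one_time: float
    training_one_time: float


def on_prem_tco(c: OnPremCosts, years: int) -> float:
    recurring = c.software_per_year + c.power_cooling_per_year + c.staff_per_year
    return c.hardware_upfront + years * recurring


def cloud_tco(c: CloudCosts, years: int) -> float:
    recurring = (c.compute_per_year + c.storage_per_year + c.network_per_year
                 + c.support_per_year + c.staff_per_year)
    return c.migration_one_time + c.training_one_time + years * recurring


if __name__ == "__main__":
    years = 3  # compare both environments over the same horizon
    on_prem = OnPremCosts(300_000, 50_000, 40_000, 200_000)   # placeholder figures
    cloud = CloudCosts(120_000, 30_000, 20_000, 15_000, 120_000, 80_000, 25_000)
    print(f"On-premises TCO: {on_prem_tco(on_prem, years):,.0f}")
    print(f"Cloud TCO:       {cloud_tco(cloud, years):,.0f}")
```

A fuller model would add hardware refresh cycles, discounting of future costs, and data egress charges.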

What are the key considerations for data security in the cloud?

Data security in the cloud requires a multi-layered approach, including encryption of data at rest and in transit, robust identity and access management (IAM) policies, regular security audits, and compliance with relevant industry regulations. Choosing a provider with comprehensive security features and a strong track record is essential.
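Robust IAM policies usually rest on two evaluation rules: access is denied unless a statement explicitly allows it, and an explicit deny overrides any allow. AWS IAM, for example, applies this precedence to requests within a single account. The sketch below is a simplified illustration of those two rules only. The `Statement` structure and action names such as `storage:GetObject` are hypothetical and do not follow any provider's policy schema.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Statement:
    effect: str                  # "Allow" or "Deny"
    actions: tuple[str, ...]     # glob patterns, e.g. "storage:Get*" (hypothetical names)
    resources: tuple[str, ...]   # glob patterns, e.g. "bucket/reports/*"


def is_allowed(statements: list[Statement], action: str, resource: str) -> bool:
    """Implicit deny by default; any matching explicit Deny beats any Allow."""
    allowed = False
    for s in statements:
        matches = (any(fnmatchcase(action, p) for p in s.actions)
                   and any(fnmatchcase(resource, p) for p in s.resources))
        if not matches:
            continue
        if s.effect == "Deny":
            return False         # explicit deny ends evaluation immediately
        if s.effect == "Allow":
            allowed = True       # remember the allow, but keep checking for denies
    return allowed


if __name__ == "__main__":
    policy = [
        Statement("Allow", ("storage:Get*",), ("bucket/reports/*",)),
        Statement("Deny", ("storage:*",), ("bucket/reports/secret*",)),
    ]
    print(is_allowed(policy, "storage:GetObject", "bucket/reports/q1.csv"))
    print(is_allowed(policy, "storage:GetObject", "bucket/reports/secret.csv"))
```

Because a matching deny returns immediately and an allow only sets a flag, the result does not depend on the order of the statements.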

How do I choose the right data migration strategy?

The choice of data migration strategy depends on the complexity of the data, the required downtime, and the desired level of modernization. Lift-and-shift is suitable for quick migrations with minimal changes. Re-platforming involves some modifications to optimize for the cloud. Re-factoring or re-architecting requires significant changes to leverage cloud-native services.
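This guidance can be captured as a deliberately simplified heuristic. The sketch assumes two inputs: a desired modernization level (`"minimal"`, `"moderate"`, or `"cloud-native"`) and whether the timeline demands a quick migration. These labels, and the rule that a tight timeline favors lift-and-shift, are illustrative assumptions. A real assessment would also weigh data complexity and acceptable downtime.

```python
from enum import Enum


class Strategy(Enum):
    LIFT_AND_SHIFT = "lift-and-shift"
    REPLATFORM = "re-platform"
    REFACTOR = "re-factor / re-architect"


# Hypothetical mapping from desired modernization level to strategy.
_BY_MODERNIZATION = {
    "minimal": Strategy.LIFT_AND_SHIFT,   # move as-is with minimal changes
    "moderate": Strategy.REPLATFORM,      # some modifications to optimize for the cloud
    "cloud-native": Strategy.REFACTOR,    # significant changes for cloud-native services
}


def choose_strategy(modernization: str, needs_quick_migration: bool) -> Strategy:
    """Suggest a starting strategy; a heuristic, not a substitute for assessment."""
    if modernization not in _BY_MODERNIZATION:
        raise ValueError(f"unknown modernization level: {modernization!r}")
    if needs_quick_migration:
        # Assumption: a tight timeline favors the approach with the fewest changes.
        return Strategy.LIFT_AND_SHIFT
    return _BY_MODERNIZATION[modernization]


if __name__ == "__main__":
    print(choose_strategy("moderate", needs_quick_migration=False).value)
```

In practice, teams often lift-and-shift first to meet a deadline, then re-platform or refactor individual workloads once they are running in the cloud.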