Serverless architecture lets teams build software that scales with demand and is billed per use, without provisioning or maintaining servers. This is particularly useful in chatbot development, where it enables sophisticated conversational interfaces without the complexities of traditional server management. This guide covers how to build a serverless chatbot: the core concepts, the technical components, and the practical implementation steps.

We will navigate the landscape of cloud platforms, dissect the roles of serverless functions and API gateways, and examine the best practices for data storage and security. From designing intuitive conversation flows to integrating with popular messaging platforms, this analysis provides a structured approach to mastering serverless chatbot development. Furthermore, we will investigate advanced features, deployment strategies, and essential security considerations, equipping you with the knowledge to build robust, scalable, and secure chatbots.

Introduction to Serverless Chatbots

Serverless chatbots apply serverless computing to conversational interfaces, producing bots that are scalable, cost-effective, and easy to maintain. Because the cloud provider manages the underlying infrastructure, developers can focus on the chatbot’s logic and functionality. This enables rapid prototyping, deployment, and iteration, making serverless architectures a compelling choice for a variety of chatbot applications.

Core Concept of a Serverless Chatbot

A serverless chatbot operates on a “pay-as-you-go” model, where the underlying infrastructure is managed by a cloud provider. The core concept revolves around the execution of code in response to events, such as user messages, without the need for provisioning or managing servers. This is achieved through Function-as-a-Service (FaaS) platforms, which allow developers to deploy individual functions that are triggered by specific events.

These functions handle the chatbot’s logic, processing user input, retrieving information, and generating responses. The cloud provider automatically scales the resources required to execute these functions, based on the demand. The chatbot’s state, including conversation history and user context, is often managed using serverless databases or key-value stores.

Benefits of Serverless Architecture for Chatbot Development

Serverless architectures offer several advantages for chatbot development. These benefits stem from the inherent characteristics of serverless computing.

- Cost Efficiency: The pay-per-use model significantly reduces operational costs. Developers only pay for the compute time and resources consumed by the chatbot’s functions, eliminating the costs associated with idle servers. For example, a chatbot that handles a low volume of interactions might only incur minimal charges, while a chatbot experiencing peak traffic will automatically scale and pay for the increased usage. This dynamic scaling ensures cost optimization, as resources are allocated only when needed.

- Scalability and High Availability: Serverless platforms automatically scale resources based on demand. This ensures that the chatbot can handle sudden spikes in traffic without performance degradation. The platform also handles the underlying infrastructure, including redundancy and failover mechanisms, to ensure high availability. For example, during a marketing campaign that drives a surge in chatbot interactions, the serverless platform automatically allocates more resources to accommodate the increased traffic.

- Reduced Operational Overhead: Developers do not need to manage servers, operating systems, or infrastructure. This frees up development teams to focus on the core chatbot logic and features, such as natural language processing (NLP), intent recognition, and response generation. The cloud provider handles tasks like server patching, security updates, and infrastructure maintenance.

- Faster Development and Deployment: Serverless architectures enable rapid prototyping and deployment. Developers can quickly deploy and test new chatbot features without the complexities of managing infrastructure. The ease of deployment facilitates faster iteration cycles, allowing for continuous improvement and optimization of the chatbot’s performance.

Common Use Cases for Serverless Chatbots

Serverless chatbots are applicable in various scenarios, offering flexible and scalable solutions. These applications often leverage the benefits of serverless architectures to optimize performance and cost-effectiveness.

- Customer Service and Support: Chatbots can handle common customer inquiries, provide troubleshooting assistance, and escalate complex issues to human agents. For example, a serverless chatbot integrated into a company’s website can answer questions about product features, shipping details, and return policies, reducing the workload on customer service representatives.

- E-commerce: Chatbots can assist customers with product discovery, provide recommendations, and guide them through the purchasing process. For example, a serverless chatbot on an e-commerce platform can help customers find specific products, compare prices, and complete their orders.

- Lead Generation and Qualification: Chatbots can collect leads, qualify potential customers, and schedule appointments. For instance, a serverless chatbot on a real estate website can engage with visitors, ask qualifying questions, and schedule property viewings for interested leads.

- Internal Communication and Automation: Chatbots can automate internal tasks, such as answering employee questions, providing access to company resources, and processing requests. For example, a serverless chatbot can be used to provide employees with information about HR policies, benefits, and company procedures, streamlining internal communication.

- Information Retrieval: Chatbots can retrieve information from databases, APIs, and other data sources to provide users with specific answers. For example, a serverless chatbot can be built to answer questions about weather forecasts, stock prices, or flight schedules.

Choosing a Platform and Tools

The selection of the appropriate platform and tools is crucial for the successful development and deployment of serverless chatbots. This choice significantly impacts development speed, scalability, cost-effectiveness, and the overall performance of the chatbot. Careful consideration of the available options, their capabilities, and associated pricing models is essential for making informed decisions.

Popular Cloud Platforms for Serverless Chatbots

Several cloud platforms offer robust infrastructure and services tailored for building and deploying serverless chatbots. Each platform provides a range of tools and features, catering to different development preferences and business requirements.

- Amazon Web Services (AWS): AWS is a leading cloud provider, offering a comprehensive suite of services suitable for serverless chatbot development. Key services include:

  - Amazon Lex: A fully managed conversational AI service for building chatbots. It provides automatic speech recognition (ASR) and natural language understanding (NLU).

  - AWS Lambda: A serverless compute service that allows you to run code without provisioning or managing servers. This is essential for executing chatbot logic.

  - Amazon API Gateway: A service for creating, publishing, maintaining, monitoring, and securing APIs, which can be used to expose your chatbot to users.

  - Amazon DynamoDB: A fully managed NoSQL database service for storing chatbot data, such as user conversations and state.

  - Amazon S3: A storage service for files like audio, images, or documents that the chatbot might use or generate.

  AWS’s extensive ecosystem and mature services make it a popular choice for enterprise-grade chatbot deployments.

- Microsoft Azure: Azure provides a powerful platform for building serverless chatbots, especially for organizations already invested in the Microsoft ecosystem. Key services include:

  - Azure Bot Service: A managed service for building, testing, deploying, and managing intelligent bots. It supports various channels, including Microsoft Teams, Skype, and Facebook Messenger.

  - Azure Functions: A serverless compute service, similar to AWS Lambda, for running chatbot code.

  - Azure Cognitive Services: A suite of AI services, including Language Understanding (LUIS) for NLU and text-to-speech/speech-to-text capabilities.

  - Azure Cosmos DB: A globally distributed, multi-model database service, suitable for storing chatbot data.

  Azure’s integration with other Microsoft products and its strong focus on enterprise solutions make it a viable alternative.

- Google Cloud Platform (GCP): GCP offers a compelling platform for serverless chatbot development, leveraging Google’s expertise in AI and machine learning. Key services include:

  - Dialogflow: A conversational AI platform for building chatbots, supporting NLU and integration with various channels.

  - Cloud Functions: A serverless compute service, similar to AWS Lambda and Azure Functions, for running chatbot code.

  - Cloud Storage: For storing files and other chatbot assets.

  - Cloud Firestore: A NoSQL database for storing chatbot data.

  GCP’s strong AI capabilities and competitive pricing make it a strong contender, particularly for chatbots leveraging Google’s AI services.

Necessary Tools and Services for Development and Deployment

Beyond the core cloud platform services, several additional tools and services are crucial for the development and deployment process. These tools streamline the development workflow, improve chatbot performance, and enhance the user experience.

- Integrated Development Environment (IDE): An IDE provides a comprehensive environment for writing, testing, and debugging code. Popular choices include Visual Studio Code, IntelliJ IDEA, and Eclipse.

- Version Control System (VCS): A VCS, such as Git, is essential for managing code changes, collaborating with other developers, and tracking the history of the project.

- Testing Frameworks: Testing frameworks, such as Jest (for JavaScript) or unittest (for Python), are used to write automated tests that verify the chatbot functions correctly.

- Continuous Integration/Continuous Deployment (CI/CD) Tools: CI/CD tools, such as Jenkins, GitLab CI, or AWS CodePipeline, automate the build, test, and deployment processes. This enables faster releases and reduces the risk of errors.

- Monitoring and Logging Tools: Monitoring and logging tools, like CloudWatch (AWS), Azure Monitor, or Google Cloud Logging, are crucial for tracking chatbot performance, identifying errors, and gathering usage data. This information is vital for optimizing the chatbot and ensuring its availability.

- Chatbot Frameworks: Frameworks such as the Bot Framework SDK (Microsoft) or Rasa can simplify the development process by providing pre-built components and tools for building conversational interfaces.

Comparison of Pricing Models for Chatbot Services

Cloud providers offer various pricing models for their chatbot services. Understanding these models is essential for accurately estimating and managing the costs associated with building and deploying a serverless chatbot.

Pricing is typically based on resource consumption, and various factors influence the cost.

| Service | Pricing Factors | Example Pricing (Illustrative) | Notes |
|---|---|---|---|
| Serverless Compute (e.g., AWS Lambda, Azure Functions, Cloud Functions) | | | Costs scale with the number of users and the complexity of the chatbot logic. Careful optimization of code execution time and memory usage can minimize costs. |
| Conversational AI (e.g., Amazon Lex, Azure Bot Service, Dialogflow) | | | Pricing can vary significantly depending on the volume of user interactions and the features used. Monitoring usage and optimizing the chatbot’s conversation flow can help manage costs. |
| Database (e.g., DynamoDB, Cosmos DB, Cloud Firestore) | | | Costs depend on the amount of data stored and the frequency of data access. Designing the database schema efficiently and optimizing data access patterns can reduce costs. |
| API Gateway (e.g., Amazon API Gateway, Azure API Management, Cloud Endpoints) | | | The cost scales with the number of user interactions with the chatbot. Caching frequently accessed data and optimizing API calls can reduce costs. |

Important Note: Pricing models are subject to change. Always refer to the latest pricing information provided by the cloud providers. The examples provided are for illustrative purposes only and do not reflect real-time prices.
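To make the pay-per-use arithmetic concrete, the following is a minimal sketch of a monthly estimate for the serverless compute portion only. The rates in `ASSUMED_RATES` and the function name `estimateMonthlyComputeCost` are placeholders invented for illustration; they are not any provider's actual prices, and real bills usually include free tiers and other charges that this sketch ignores.

```javascript
// Hypothetical rates for illustration only; substitute current provider pricing.
const ASSUMED_RATES = {
  pricePerMillionRequests: 0.2, // assumed currency units per one million invocations
  pricePerGbSecond: 0.00002,    // assumed currency units per GB-second of execution
};

// Estimate compute cost from invocation count, average duration, and memory size.
function estimateMonthlyComputeCost(usage, rates = ASSUMED_RATES) {
  const { invocations, avgDurationMs, memoryMb } = usage;
  const requestCost = (invocations / 1e6) * rates.pricePerMillionRequests;
  // GB-seconds = invocations x seconds per invocation x memory in GB
  const gbSeconds = invocations * (avgDurationMs / 1000) * (memoryMb / 1024);
  const computeCost = gbSeconds * rates.pricePerGbSecond;
  return { requestCost, gbSeconds, computeCost, total: requestCost + computeCost };
}

const estimate = estimateMonthlyComputeCost({
  invocations: 500000, // messages handled per month
  avgDurationMs: 200,  // average function run time
  memoryMb: 512,       // configured memory
});
console.log(estimate);
```

Under these assumed rates, half a million 200 ms invocations at 512 MB come to roughly 1.1 currency units, which shows why shortening execution time or lowering memory directly reduces the bill.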

Understanding Serverless Components

The architecture of a serverless chatbot hinges on several key components that work in concert to handle user interactions, process data, and provide responses. These components, leveraging the serverless paradigm, allow for scalable, cost-effective, and highly available chatbot deployments. This section delves into the core serverless elements: functions, API gateways, and databases.

Serverless Functions in Chatbots

Serverless functions, such as AWS Lambda, Azure Functions, and Google Cloud Functions, serve as the computational engines within a serverless chatbot. They execute specific tasks in response to triggers, such as user messages, API requests, or scheduled events. Their automatic scaling, pay-per-use pricing, and event-driven execution make them well suited to building chatbots.

The core responsibilities of serverless functions in a chatbot include:

- Processing User Input: When a user sends a message, an API gateway (as discussed later) typically routes the request to a function. This function can then parse the input, identify the user’s intent, and extract relevant information. For example, a function might use Natural Language Processing (NLP) libraries to determine the user’s intent, such as “book a flight” or “check the weather.”

- Calling External APIs: Serverless functions can interact with external APIs to retrieve information or perform actions. For instance, a function might call a weather API to get current weather conditions, or an e-commerce API to check product availability. This capability is crucial for integrating the chatbot with various services.

- Generating Responses: Based on the processed input and any data retrieved from external APIs or databases, the function generates a response to the user. This response can be formatted text, images, or other interactive elements, depending on the chatbot’s capabilities and the chosen platform.

- Managing State and Context: While serverless functions are stateless by design, they can be used to manage the conversational context by interacting with databases or other state management services. A function can store information about the ongoing conversation, such as the user’s preferences or the current stage of a task.

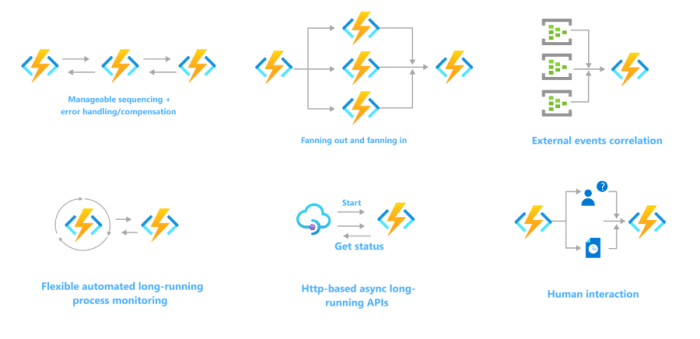

- Orchestrating Complex Workflows: Serverless functions can be chained together to create complex chatbot workflows. One function might handle intent recognition, another might fetch data, and a third might generate the response. This modular approach allows for the development of sophisticated chatbot interactions.

An example of this is the process of booking a flight using a serverless chatbot. The user inputs a request such as, “I want to book a flight from New York to London on December 24th.”

- An API Gateway receives this message.

- The API Gateway triggers a Lambda function (in AWS), or an equivalent function in Azure or Google Cloud.

- The function parses the input using an NLP library. The intent is identified as “book flight”, and entities like “New York”, “London”, and “December 24th” are extracted.

- The function calls an external flight booking API, passing the extracted information.

- The API returns available flights.

- The function formats the flight options and sends them back to the user via the API Gateway.
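The steps above can be sketched as a small pipeline. This is only an illustration: `parseBookingRequest` is a toy regular-expression parser standing in for a real NLP library, `searchFlights` is a hypothetical stub in place of an external flight API, and the flight numbers and times are made up.

```javascript
// Toy intent parser standing in for a real NLP library (assumption).
function parseBookingRequest(text) {
  const match = /book a flight from (.+?) to (.+?) on (.+?)\.?$/i.exec(text);
  if (!match) return { intent: 'unknown', entities: {} };
  const [, origin, destination, date] = match;
  return { intent: 'book flight', entities: { origin, destination, date } };
}

// Hypothetical flight API stub; a real function would make an HTTP call here.
async function searchFlights({ origin, destination, date }) {
  return [
    { flight: 'XX100', origin, destination, date, departs: '09:00' },
    { flight: 'XX200', origin, destination, date, departs: '18:30' },
  ];
}

// Turn the API result into a numbered list the user can read.
function formatFlights(flights) {
  return flights
    .map((f, i) => `${i + 1}. ${f.flight} ${f.origin} to ${f.destination}, ${f.date} at ${f.departs}`)
    .join('\n');
}

// The function body that the API gateway would trigger for each message.
async function handleMessage(text) {
  const { intent, entities } = parseBookingRequest(text);
  if (intent !== 'book flight') {
    return 'Sorry, I can only help with flight bookings.';
  }
  const flights = await searchFlights(entities);
  return formatFlights(flights);
}

handleMessage('I want to book a flight from New York to London on December 24th.')
  .then((reply) => console.log(reply));
```

Keeping parsing, the API call, and formatting in separate functions mirrors the modular workflow described earlier and makes each step testable on its own.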

API Gateways in Chatbots

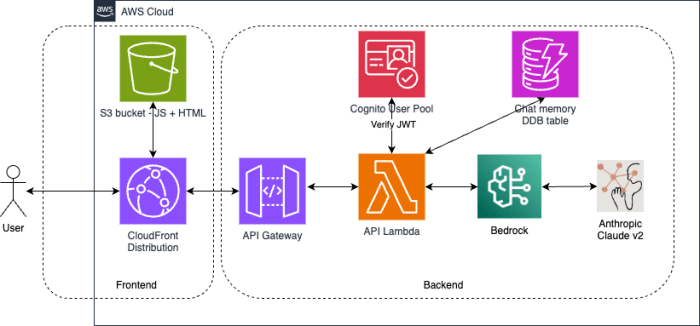

API gateways act as the entry point for all interactions with a serverless chatbot. They manage API requests, handle authentication and authorization, route traffic to the appropriate serverless functions, and provide other crucial functionality. They are indispensable for ensuring the security, scalability, and manageability of a chatbot. Examples include Amazon API Gateway, Azure API Management, and Google Cloud Endpoints.

The functions of API gateways in a serverless chatbot include:

- Request Routing: API gateways direct incoming requests to the correct serverless functions based on the request’s path, method, and other criteria. This allows for organizing the chatbot’s functionality into different functions.

- Authentication and Authorization: API gateways handle authentication (verifying user identity) and authorization (determining user permissions). This ensures that only authorized users can interact with the chatbot and access its features. They often support various authentication methods, such as API keys, OAuth, and JWT (JSON Web Tokens).

- Rate Limiting and Throttling: API gateways can limit the number of requests a user or application can make within a specific timeframe. This prevents abuse and ensures the chatbot remains available even under heavy load.

- Request Transformation and Validation: API gateways can transform incoming requests before they reach the serverless functions and validate request parameters to ensure they meet the required format and constraints. This reduces the workload on the functions and improves data integrity.

- Monitoring and Logging: API gateways provide monitoring and logging capabilities, allowing developers to track API usage, identify errors, and troubleshoot performance issues. They offer insights into API traffic, latency, and error rates.

- API Versioning: API gateways facilitate API versioning, enabling developers to make changes to the chatbot’s API without breaking existing integrations. This allows for backward compatibility and smooth transitions between different versions of the API.
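To illustrate the rate limiting idea from the list above, here is a minimal fixed-window limiter. Managed gateways provide throttling as configuration, so this sketch only shows one simple policy; the name `createRateLimiter` is hypothetical, and the in-memory `Map` would not be shared across function instances in a real deployment.

```javascript
// Fixed-window rate limiter: at most `limit` requests per `windowMs` per client.
function createRateLimiter(limit, windowMs) {
  const windows = new Map(); // clientId -> { start, count }

  // `now` is injectable so the behaviour can be tested without waiting.
  return function allow(clientId, now = Date.now()) {
    const current = windows.get(clientId);
    // Start a fresh window on first contact or once the old window has expired.
    if (!current || now - current.start >= windowMs) {
      windows.set(clientId, { start: now, count: 1 });
      return true;
    }
    if (current.count < limit) {
      current.count += 1;
      return true;
    }
    return false; // over the limit: the gateway would answer with HTTP 429
  };
}

const allow = createRateLimiter(2, 1000);
console.log(allow('user-1', 0), allow('user-1', 10), allow('user-1', 20));
```

A fixed window is the simplest policy to reason about; sliding windows or token buckets smooth out bursts at window boundaries at the cost of a little more state.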

For example, consider a chatbot integrated with a mobile app. The API gateway would handle the following:

- The mobile app sends a request to the API gateway.

- The API gateway authenticates the user based on a token provided by the app.

- The API gateway routes the request to the appropriate serverless function, such as a function that handles user queries about order status.

- The function processes the request and retrieves the order status information from a database.

- The function sends the order status information back to the API gateway.

- The API gateway transforms the response into a format suitable for the mobile app (e.g., JSON) and sends it back.

- The API gateway logs the request and response for monitoring and debugging purposes.
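The request path above can be mimicked in a few lines to show where authentication, routing, and logging sit relative to the function. This is an in-process sketch, not a real gateway: the token table `VALID_TOKENS`, the route key format, and the order-status handler are all assumptions made for illustration.

```javascript
// Hypothetical token table; a real gateway would validate a JWT or API key.
const VALID_TOKENS = new Map([['demo-token', { userId: 'user-1' }]]);

// Route table: "METHOD path" -> handler (stand-ins for serverless functions).
const routes = {
  'GET /orders/status': async (req, user) => ({
    orderId: req.query.orderId,
    status: 'shipped', // a real function would read this from a database
    userId: user.userId,
  }),
};

async function gateway(req) {
  // 1. Authenticate using the token supplied by the mobile app.
  const user = VALID_TOKENS.get(req.headers.authorization);
  if (!user) {
    return { statusCode: 401, body: JSON.stringify({ message: 'Unauthorized' }) };
  }
  // 2. Route to the matching function.
  const handler = routes[`${req.method} ${req.path}`];
  if (!handler) {
    return { statusCode: 404, body: JSON.stringify({ message: 'Not Found' }) };
  }
  // 3. Invoke the function, serialize its result as JSON, and log the call.
  const result = await handler(req, user);
  console.log(`${req.method} ${req.path} -> 200`);
  return { statusCode: 200, body: JSON.stringify(result) };
}

gateway({
  method: 'GET',
  path: '/orders/status',
  headers: { authorization: 'demo-token' },
  query: { orderId: 'A-1' },
}).then((res) => console.log(res));
```

Rejecting unauthenticated or unroutable requests before any function runs is what keeps that work, and its cost, out of the functions themselves.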

Databases for Chatbot Data

Databases are essential for storing the data a chatbot needs to function effectively. This includes user data, conversation history, chatbot configuration, and any other information required to provide a personalized and engaging experience. Serverless databases, like DynamoDB, Cosmos DB, and Cloud Firestore, are particularly well suited to serverless chatbot architectures due to their scalability, pay-per-use pricing, and ease of integration with serverless functions.

The primary uses of databases in a serverless chatbot include:

- Storing User Data: Databases store user profiles, preferences, and other relevant information. This allows the chatbot to personalize interactions and provide a better user experience. This includes storing user IDs, names, location, and any custom settings.

- Saving Conversation History: Databases can store the conversation history between the user and the chatbot. This enables the chatbot to maintain context, remember previous interactions, and provide more relevant responses over time. The data stored typically includes user messages, chatbot responses, and timestamps.

- Managing Chatbot Configuration: Databases can store the chatbot’s configuration, such as intent definitions, entity recognition models, and response templates. This allows for easy updates and modifications to the chatbot’s behavior without requiring code deployments.

- Tracking State and Context: Databases can be used to store the current state of a conversation, such as the user’s progress through a task or the information collected during an interaction. This is crucial for multi-turn conversations.

- Storing Training Data: Some chatbots use databases to store training data used to train and improve their NLP models. This data is used to teach the chatbot to understand user intents and generate appropriate responses.

For instance, a chatbot used for customer support could utilize a database as follows:

- A user starts a conversation and the API gateway triggers a serverless function.

- The function checks the database (e.g., DynamoDB) for the user’s ID to retrieve their profile information.

- If the user is new, the function creates a new entry in the database for the user.

- As the conversation progresses, the function stores the conversation history in the database, including user messages, chatbot responses, and any relevant data.

- If the user asks about an order, the function queries the database to retrieve the order details.

- The function uses the order details to generate a response and send it to the user via the API gateway.
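The profile lookup and history steps above can be sketched with an in-memory `Map` standing in for a key-value table such as DynamoDB. The record shape (`userId`, `createdAt`, `history`) and the helper names are assumptions for illustration; a real function would replace the `Map` operations with database reads and writes.

```javascript
// In-memory stand-in for a key-value table keyed by user ID (assumption).
function createConversationStore() {
  const table = new Map();

  return {
    // Fetch the user's record, creating it on first contact.
    getOrCreateUser(userId) {
      if (!table.has(userId)) {
        table.set(userId, {
          userId,
          createdAt: new Date().toISOString(),
          history: [],
        });
      }
      return table.get(userId);
    },

    // Append one user/bot exchange and return the new history length.
    appendTurn(userId, userMessage, botResponse) {
      const record = this.getOrCreateUser(userId);
      record.history.push({
        userMessage,
        botResponse,
        timestamp: new Date().toISOString(),
      });
      return record.history.length;
    },
  };
}

const store = createConversationStore();
store.appendTurn('user-42', 'Where is my order?', 'Let me check that for you.');
console.log(store.getOrCreateUser('user-42').history);
```

Because the function itself holds no state between invocations, every turn reads and writes through the store, which is the pattern that lets any function instance continue any conversation.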

Designing the Chatbot’s Conversation Flow

Designing the conversation flow is a critical step in building a serverless chatbot, as it dictates how users interact with the bot and how effectively the bot fulfills its intended purpose. A well-designed flow ensures a smooth and intuitive user experience, leading to higher user satisfaction and task completion rates. This section focuses on constructing a conversation flow, illustrating it with a specific use case, and outlining the chatbot’s responses.

Creating a Sample Conversation Flow for Food Ordering

A sample conversation flow for ordering food can be structured to guide the user through the process, from menu browsing to order confirmation. This flow ensures a clear and efficient user journey. The following is a structured representation of the user interactions and bot responses.

- Greeting and Menu Presentation: The chatbot initiates the conversation with a greeting and presents the user with menu options.

  - User Input: “Hi, I’d like to order some food.”

  - Bot Response: “Hello! Welcome to our food ordering service. Here’s our menu:” (Displays a menu with categories like Appetizers, Entrees, Desserts).

- Selection of Items:

  - User Input: “I’d like to order the Chicken Caesar Salad and the Chocolate Lava Cake.”

  - Bot Response: “Great choices! The Chicken Caesar Salad and Chocolate Lava Cake. Anything else?”

- Customization and Quantity:

  - User Input: “Yes, can I have the salad without croutons and two Chocolate Lava Cakes?”

  - Bot Response: “Okay, Chicken Caesar Salad without croutons and two Chocolate Lava Cakes. Anything else?”

- Order Review:

  - User Input: “No, that’s all.”

  - Bot Response: “Alright, here’s your order: Chicken Caesar Salad (no croutons), two Chocolate Lava Cakes. Ready to proceed to checkout?”

- Checkout and Payment:

  - User Input: “Yes, please.”

  - Bot Response: “Please provide your delivery address:” (Prompts for address input).

  - User Input: “123 Main Street, Anytown.”

  - Bot Response: “And your payment method?” (Prompts for payment information).

  - User Input: “Credit Card.”

  - Bot Response: “Please enter your card details:” (Collects card details, securely).

- Order Confirmation:

  - Bot Response: “Thank you! Your order has been placed. Estimated delivery time: 30-45 minutes. Order ID: #12345.”

Organizing the Conversation Flow Using a Flowchart or Diagram

A flowchart visually represents the conversation flow, allowing for easier understanding and management of the chatbot’s logic. This flowchart helps in identifying potential decision points, error handling, and the overall user journey.

The flowchart starts with a “Start” node, followed by a “Greeting and Menu Presentation” process. After this, the flow branches based on user input:

- If the user selects menu items, it proceeds to the “Selection of Items” process.

- If the user requires customization or quantity adjustments, it moves to the “Customization and Quantity” process.

- The flowchart merges the selection and customization paths into an “Order Review” process.

- The flow proceeds to “Checkout and Payment” and then to “Order Confirmation.”

- Error handling paths are included at each stage, such as when the user enters invalid input.

- The flowchart ends with an “End” node, signifying the successful completion of the order or a point where the interaction concludes.

The flowchart structure includes the following shapes:

- Start/End: Represented by rounded rectangles.

- Process: Represented by rectangles, indicating a specific action or step in the conversation.

- Decision: Represented by diamonds, indicating a point where the bot makes a decision based on user input.

- Input/Output: Represented by parallelograms, indicating user input or bot output.

- Arrows: Connect the shapes, indicating the flow of the conversation.
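The flowchart translates naturally into a small state machine. The sketch below encodes the processes as states and user actions as events; the state and event names (`select_item`, `customize`, `done`, `confirm`, `payment_ok`) are illustrative assumptions rather than part of any framework.

```javascript
// States mirror the flowchart's process nodes; events are illustrative names.
const TRANSITIONS = {
  GREETING: { select_item: 'SELECTION' },
  SELECTION: { select_item: 'SELECTION', customize: 'CUSTOMIZATION', done: 'ORDER_REVIEW' },
  CUSTOMIZATION: { select_item: 'SELECTION', customize: 'CUSTOMIZATION', done: 'ORDER_REVIEW' },
  ORDER_REVIEW: { confirm: 'CHECKOUT', select_item: 'SELECTION' },
  CHECKOUT: { payment_ok: 'CONFIRMATION' },
  CONFIRMATION: {}, // terminal state ("End" node)
};

// Return the next state; unknown events keep the current state so the bot
// can re-prompt, which corresponds to the error-handling paths.
function nextState(state, event) {
  const next = TRANSITIONS[state] && TRANSITIONS[state][event];
  return next || state;
}

// Replay a sequence of events from the start of the conversation.
function runFlow(events, start = 'GREETING') {
  return events.reduce(nextState, start);
}

console.log(runFlow(['select_item', 'customize', 'done', 'confirm', 'payment_ok']));
```

Storing only the current state name per user is enough to resume the conversation on the next message, which fits the stateless execution model of serverless functions.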

Designing the Chatbot’s Responses for Different User Inputs

The design of chatbot responses must be comprehensive and adaptable to various user inputs. This involves anticipating different user queries, providing clear and concise responses, and handling errors gracefully.

The following outlines response strategies:

- Greeting: The chatbot uses a friendly greeting to initiate the conversation.

- Example: “Hello! Welcome to our food ordering service. How can I help you today?”

- Menu Presentation: The chatbot presents the menu with clear categories and item descriptions.

- Example: “Here’s our menu:” (Displays menu items with prices and descriptions).

- Item Selection: The chatbot confirms the user’s selections and prompts for further orders.

- Example: “Okay, you’ve selected the Chicken Caesar Salad and the Chocolate Lava Cake. Anything else?”

- Customization: The chatbot confirms customization requests and updates the order accordingly.

- Example: “Understood. The Chicken Caesar Salad will be prepared without croutons.”

- Order Review: The chatbot summarizes the order accurately before proceeding to checkout.

- Example: “Your order includes: Chicken Caesar Salad (no croutons), and two Chocolate Lava Cakes. Ready to proceed to checkout?”

- Checkout and Payment: The chatbot guides the user through the checkout process, collecting necessary information securely.

- Example: “Please provide your delivery address:” (Followed by prompts for address and payment details).

- Error Handling: The chatbot provides helpful messages if the user’s input is unclear or invalid.

- Example: “I’m sorry, I didn’t understand that. Could you please rephrase your request?”

- Confirmation: The chatbot confirms the order, provides an order ID, and estimates the delivery time.

- Example: “Thank you! Your order has been placed. Estimated delivery time: 30-45 minutes. Order ID: #12345.”
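One way to keep these responses consistent is a table of templates with a single fallback for anything unrecognized. This is a minimal sketch; the response type keys and the `respond` helper are hypothetical names, and the wording is taken from the examples above.

```javascript
// Response templates keyed by response type (key names are assumptions).
const RESPONSES = {
  greeting: () => 'Hello! Welcome to our food ordering service. How can I help you today?',
  item_selection: ({ items }) => `Okay, you've selected ${items.join(' and ')}. Anything else?`,
  customization: ({ item, change }) => `Understood. The ${item} will be prepared ${change}.`,
  confirmation: ({ orderId, eta }) =>
    `Thank you! Your order has been placed. Estimated delivery time: ${eta}. Order ID: #${orderId}.`,
};

const FALLBACK = "I'm sorry, I didn't understand that. Could you please rephrase your request?";

// Render a response, falling back to the error message when the type is
// unknown or the template cannot be filled from the supplied data.
function respond(type, data = {}) {
  if (!Object.prototype.hasOwnProperty.call(RESPONSES, type)) return FALLBACK;
  try {
    return RESPONSES[type](data);
  } catch {
    return FALLBACK;
  }
}

console.log(respond('customization', { item: 'Chicken Caesar Salad', change: 'without croutons' }));
console.log(respond('something_else'));
```

Centralizing the wording makes it easy to review tone in one place, and the fallback guarantees the user always receives a reply even when the input cannot be handled.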

Implementing the Chatbot’s Logic

The core of a serverless chatbot lies in its ability to process user input, understand the intent behind the input, and generate appropriate responses. This section details the implementation of this logic, focusing on the serverless functions that handle user interactions and the integration of Natural Language Understanding (NLU) services. Proper implementation ensures the chatbot can effectively understand and respond to user queries, providing a seamless conversational experience.

Writing Code for a Serverless Function to Handle User Input

Serverless functions act as the computational units that process user input. These functions are triggered by events, such as an incoming message from a user. The code within the function then analyzes the input, interacts with NLU services, and formulates a response. The choice of programming language depends on the platform, but common choices include JavaScript (Node.js), Python, and Java.

Here’s an example using Node.js and AWS Lambda, illustrating the basic structure:

```javascript
exports.handler = async (event) => {
  try {
    const userInput = event.body; // Assuming the input is in the request body
    console.log('User Input:', userInput);

    // Placeholder for processing the input and calling NLU service
    const response = await processUserInput(userInput);

    const statusCode = 200;
    const body = JSON.stringify({ message: response });
    const responseHeaders = {
      'Content-Type': 'application/json',
      'Access-Control-Allow-Origin': '*', // Consider restricting for security
    };

    return { statusCode, body, headers: responseHeaders };
  } catch (error) {
    console.error('Error:', error);
    return {
      statusCode: 500,
      body: JSON.stringify({ message: 'Internal Server Error' }),
    };
  }
};

async function processUserInput(input) {
  // Replace with actual NLU integration
  return `You said: ${input}. Processing…`;
}
```

The code first extracts the user input from the event object and logs it for debugging purposes. The `processUserInput` function is a placeholder for the actual logic, which would include calling an NLU service to determine the user’s intent and extract relevant entities. Finally, the handler constructs a JSON response, consisting of a status code, headers, and a message, to be sent back to the user. If anything fails, the `catch` block logs the error and returns a 500 response, so the user receives a clear failure message and the issue is recorded.

Integrating a Natural Language Understanding (NLU) Service

NLU services are crucial for understanding the meaning behind user input. These services use machine learning models to analyze text and identify the user’s intent (what they want to do) and entities (the specific information related to their request). Popular choices include Dialogflow (Google), Amazon Lex, and LUIS (Microsoft). The integration process typically involves sending the user’s input to the NLU service, receiving the analysis, and using the results to determine the appropriate response.

Here’s an example using Dialogflow (Google) and Node.js:

```javascript
const dialogflow = require('@google-cloud/dialogflow');

async function processUserInput(userInput) {
  const projectId = 'YOUR_PROJECT_ID'; // Replace with your Dialogflow project ID
  const sessionId = 'unique-session-id'; // Use a distinct ID for each user session
  const languageCode = 'en-US';

  const sessionClient = new dialogflow.SessionsClient();
  // In @google-cloud/dialogflow the path helper is projectAgentSessionPath
  // (the older `dialogflow` package named it sessionPath).
  const sessionPath = sessionClient.projectAgentSessionPath(projectId, sessionId);

  const request = {
    session: sessionPath,
    queryInput: {
      text: {
        text: userInput,
        languageCode: languageCode,
      },
    },
  };

  const responses = await sessionClient.detectIntent(request);
  const result = responses[0].queryResult;

  // result.intent can be absent when no intent matched
  console.log(`  Intent: ${result.intent ? result.intent.displayName : '(none)'}`);
  console.log(`  Confidence: ${result.intentDetectionConfidence}`);
  console.log(`  Fulfillment Text: ${result.fulfillmentText}`);

  return result.fulfillmentText;
}
```

This code snippet demonstrates how to interact with the Dialogflow API. The `processUserInput` function now initializes a Dialogflow client, sets up the session, and sends the user input to Dialogflow. The response from Dialogflow contains the identified intent, the confidence score, and the fulfillment text (the chatbot’s response). The code extracts the fulfillment text and returns it.

Providing Code Snippets for Handling User Intents and Entities

Once the NLU service identifies the intent and extracts entities, the serverless function needs to use this information to generate the appropriate response. This involves conditional logic based on the identified intent and the values of the entities.

Here’s an example extending the previous Dialogflow example to handle a “book.flight” intent:

```javascript
async function processUserInput(userInput) {
  // … (Dialogflow setup and request as before) …
  const result = responses[0].queryResult;

  if (result.intent && result.intent.displayName === 'book.flight') {
    // Optional chaining avoids a TypeError when a parameter is absent
    const fields = result.parameters?.fields || {};
    const origin = fields.origin?.stringValue;
    const destination = fields.destination?.stringValue;
    const travelDate = fields.travel_date?.stringValue;

    if (origin && destination && travelDate) {
      return `OK. Booking a flight from ${origin} to ${destination} on ${travelDate}. (This is a simulation)`;
    }
    return 'I need more information to book your flight. Please provide origin, destination, and travel date.';
  }

  return result.fulfillmentText; // Use Dialogflow’s default response
}
```

This code checks the intent returned by Dialogflow.

If the intent is “book.flight”, it extracts the “origin”, “destination”, and “travel_date” entities from the parameters. If all required entities are present, it constructs a response. Otherwise, it prompts the user for more information. The logic can be expanded to handle different intents and entities to support a wide range of chatbot functionalities.
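As the number of intents grows, a chain of `if`/`else` branches becomes harder to maintain. One common alternative is a dispatch table that maps intent names to handler functions. The Python sketch below assumes the NLU result has already been reduced to an intent name and a plain dictionary of entity values; `handle_book_flight`, `INTENT_HANDLERS`, and `route_intent` are illustrative names, not part of any Dialogflow SDK.

```python
from typing import Callable, Dict

REQUIRED_FLIGHT_FIELDS = ("origin", "destination", "travel_date")


def handle_book_flight(entities: Dict[str, str], fallback: str) -> str:
    """Confirm the booking if every required entity is present, else ask for more."""
    missing = [name for name in REQUIRED_FLIGHT_FIELDS if not entities.get(name)]
    if missing:
        return "I need more information to book your flight: " + ", ".join(missing) + "."
    return (
        f"OK. Booking a flight from {entities['origin']} to "
        f"{entities['destination']} on {entities['travel_date']}. (This is a simulation)"
    )


# Hypothetical registry: intent name -> handler function
INTENT_HANDLERS: Dict[str, Callable[[Dict[str, str], str], str]] = {
    "book.flight": handle_book_flight,
}


def route_intent(intent: str, entities: Dict[str, str], fallback: str) -> str:
    """Call the handler registered for the intent, or return the NLU fallback text."""
    handler = INTENT_HANDLERS.get(intent)
    if handler is None:
        return fallback
    return handler(entities, fallback)
```

Adding a new intent then means writing one handler and registering it, without touching the routing logic.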

Integrating with Messaging Platforms

Integrating a serverless chatbot with messaging platforms is crucial for user accessibility and engagement. This process involves configuring the chatbot to receive and respond to messages from specific platforms, leveraging platform-specific features while adhering to their limitations. The integration often involves webhooks, APIs, and authentication mechanisms to ensure secure and efficient communication. The choice of platform impacts the chatbot’s functionality and user experience, requiring careful consideration of each platform’s capabilities.

Platform-Specific Integration Process

The integration process varies depending on the messaging platform chosen. Each platform provides its own set of APIs, authentication protocols, and feature sets. The general workflow involves setting up a webhook to receive incoming messages, processing the messages, and sending responses back to the platform.

- Facebook Messenger: Facebook Messenger integration utilizes the Messenger Platform. Developers create a Facebook App, configure a webhook to receive message events, and use the Send API to send messages. The app needs to be approved by Facebook to access certain features.

- Slack: Slack integration leverages Slack’s API and event subscriptions. A Slack app is created and installed in a Slack workspace. The app subscribes to message events and responds using the Web API to send messages and perform other actions.

- Microsoft Teams: Microsoft Teams integration employs the Microsoft Bot Framework. Bots are registered on the Azure Bot Service and can be deployed to Teams. The Bot Framework handles message routing and provides a unified interface for interacting with various channels, including Teams.
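Because a webhook is a public URL, the receiving function should confirm that each request really comes from the platform. As one illustration, Slack signs every request with HMAC-SHA256 over the string `v0:<timestamp>:<raw body>` and sends the result in the `X-Slack-Signature` header, alongside `X-Slack-Request-Timestamp`. The following Python sketch checks that signature; the function name and the five-minute tolerance are assumptions chosen for this example.

```python
import hashlib
import hmac
import time
from typing import Optional


def verify_slack_signature(
    signing_secret: str,
    timestamp: str,
    raw_body: bytes,
    signature: str,
    now: Optional[float] = None,
    max_age_seconds: int = 300,
) -> bool:
    """Return True if the signature matches and the timestamp is recent."""
    current = time.time() if now is None else now
    try:
        request_time = int(timestamp)
    except ValueError:
        return False
    # Reject stale requests to limit replay attacks
    if abs(current - request_time) > max_age_seconds:
        return False
    # Slack signs "v0:<timestamp>:<raw body>" with the app's signing secret
    base = b"v0:" + timestamp.encode("utf-8") + b":" + raw_body
    digest = hmac.new(signing_secret.encode("utf-8"), base, hashlib.sha256).hexdigest()
    expected = "v0=" + digest
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8"))
```

The check must run against the raw request body, before any JSON parsing, since re-serializing the payload can change the bytes that were signed.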

Handling Platform-Specific Features and Limitations

Each messaging platform offers unique features and has its limitations. The chatbot must be designed to handle these differences gracefully.

- Rich Media Support: Platforms support varying levels of rich media, such as images, videos, and interactive elements. The chatbot needs to detect the platform and adapt its responses accordingly. For instance, Facebook Messenger supports quick replies and carousels, while Slack offers blocks for structured messages.

- Message Formatting: Formatting options, such as markdown, vary between platforms. The chatbot should format messages appropriately for each platform to ensure optimal readability.

- Rate Limits and API Usage: Messaging platforms impose rate limits on API calls. The chatbot needs to be designed to handle these limits, potentially by implementing queuing mechanisms or optimizing message delivery. Exceeding these limits can result in message delays or failures.

- User Interface Consistency: The user interface (UI) design should adapt to the platform. The chatbot’s appearance and interaction style must align with the platform’s guidelines to maintain a consistent user experience.
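One way to handle these differences is to keep the conversation logic platform-neutral and convert to each platform's payload format at the last step. The Python sketch below renders the same prompt as Messenger quick replies, as Slack blocks, or as a numbered plain-text list for anything else. The function name, the platform labels, and the `choice_<n>` action IDs are assumptions made for illustration.

```python
from typing import Any, Dict, List


def build_choice_message(platform: str, prompt: str, choices: List[str]) -> Dict[str, Any]:
    """Render a prompt with selectable choices in the target platform's format."""
    if platform == "messenger":
        # Messenger quick replies: tappable options attached to a text message
        return {
            "text": prompt,
            "quick_replies": [
                {"content_type": "text", "title": c, "payload": c.upper().replace(" ", "_")}
                for c in choices
            ],
        }
    if platform == "slack":
        # Slack blocks: a section block for the text, an actions block for buttons
        return {
            "blocks": [
                {"type": "section", "text": {"type": "mrkdwn", "text": prompt}},
                {
                    "type": "actions",
                    "elements": [
                        {
                            "type": "button",
                            "text": {"type": "plain_text", "text": c},
                            "value": c,
                            "action_id": f"choice_{i}",
                        }
                        for i, c in enumerate(choices)
                    ],
                },
            ]
        }
    # Fallback for platforms without rich elements: a numbered plain-text list
    numbered = "\n".join(f"{i}. {c}" for i, c in enumerate(choices, start=1))
    return {"text": f"{prompt}\n{numbered}"}
```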

Example Code for Sending and Receiving Messages on Facebook Messenger (Node.js)

This example illustrates the basic process of sending and receiving messages on Facebook Messenger using Node.js, Express for the webhook routes, and the `node-fetch` library for making HTTP requests.

```javascript
// Express provides the `app` object used for the webhook routes below
const express = require('express');
// node-fetch v2 supports require(); on Node.js 18+ the built-in fetch can be used instead
const fetch = require('node-fetch');

const app = express();
app.use(express.json()); // Parse JSON request bodies

// Page access token (obtained from your Facebook App dashboard)
const PAGE_ACCESS_TOKEN = 'YOUR_PAGE_ACCESS_TOKEN';
// Webhook verification token (configured in your Facebook App)
const VERIFY_TOKEN = 'YOUR_VERIFY_TOKEN';

// Function to handle incoming messages
async function handleMessage(sender_psid, received_message) {
  let response;

  if (received_message.text) {
    response = {
      text: `You sent the message: "${received_message.text}". Now try to send a new one!`,
    };
  } else if (received_message.attachments) {
    response = {
      text: 'Sorry, but the attachment is not supported yet.',
    };
  }

  // Only call the Send API when there is something to send
  if (response) {
    await callSendAPI(sender_psid, response);
  }
}

// Function to send a message to Facebook Messenger
async function callSendAPI(sender_psid, response) {
  const request_body = {
    recipient: { id: sender_psid },
    message: response,
  };

  try {
    const fetchResponse = await fetch(
      `https://graph.facebook.com/v18.0/me/messages?access_token=${PAGE_ACCESS_TOKEN}`,
      {
        method: 'POST',
        headers: { 'Content-Type': 'application/json' },
        body: JSON.stringify(request_body),
      }
    );
    const jsonResponse = await fetchResponse.json();
    if (fetchResponse.ok) {
      console.log('Message sent successfully:', jsonResponse);
    } else {
      console.error('Send API returned an error:', jsonResponse);
    }
  } catch (error) {
    console.error('Failed to send message:', error);
  }
}

// Webhook setup for GET requests (verification)
app.get('/webhook', (req, res) => {
  const mode = req.query['hub.mode'];
  const token = req.query['hub.verify_token'];
  const challenge = req.query['hub.challenge'];

  if (mode === 'subscribe' && token === VERIFY_TOKEN) {
    console.log('WEBHOOK_VERIFIED');
    res.status(200).send(challenge);
  } else {
    // Missing or wrong parameters: always answer so the request does not hang
    res.sendStatus(403);
  }
});

// Webhook setup for POST requests (message handling)
app.post('/webhook', async (req, res) => {
  const body = req.body;

  if (body.object === 'page') {
    // for...of (unlike forEach) waits for each async handler to finish
    for (const entry of body.entry) {
      const webhook_event = entry.messaging[0];
      const sender_psid = webhook_event.sender.id;

      if (webhook_event.message) {
        await handleMessage(sender_psid, webhook_event.message);
      }
    }
    res.status(200).send('EVENT_RECEIVED');
  } else {
    res.sendStatus(404);
  }
});
```

This code demonstrates the basic structure. The `handleMessage` function processes the incoming message, and `callSendAPI` sends the response. The webhook endpoint `/webhook` handles GET requests for verification and POST requests for message handling. The PAGE_ACCESS_TOKEN and VERIFY_TOKEN must be replaced with the appropriate values from your Facebook App configuration.

This code requires `express` and `node-fetch`, installed with `npm install express node-fetch@2` (version 2 is the last release line that supports `require`). The Express `app` also has to be exposed, either with `app.listen` or through a serverless adapter, and a public URL accessible by Facebook is required for the webhook.

The code implements only minimal error handling and no rate limiting or complex conversation logic, all of which are crucial for production-ready chatbots.
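To illustrate the rate-limiting and error-handling gap noted above, the following Python sketch retries a send operation with exponential backoff and a small random jitter. It assumes the platform signals throttling with HTTP 429 or a transient failure with a 5xx status; `send_with_backoff` and its parameters are hypothetical names, and `send` stands in for whatever function performs the actual HTTP call and returns its status code.

```python
import random
import time
from typing import Callable, Tuple


def send_with_backoff(
    send: Callable[[], int],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[bool, int]:
    """Call `send` until it succeeds or attempts run out.

    Returns (succeeded, attempts_made).
    """
    for attempt in range(1, max_attempts + 1):
        status = send()
        if 200 <= status < 300:
            return True, attempt
        retryable = status == 429 or status >= 500
        if not retryable or attempt == max_attempts:
            return False, attempt
        # Exponential backoff: 0.5 s, 1 s, 2 s, ... plus up to 100 ms of jitter
        sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1))
    return False, max_attempts
```

Non-retryable client errors such as 400 fail immediately, since repeating an invalid request would only consume more of the rate limit.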

Data Storage and Management

Effective data storage and management are critical for the functionality, scalability, and security of a serverless chatbot. Properly handling user data, conversation history, and chatbot settings enables personalized experiences, improves performance, and ensures compliance with privacy regulations. This section outlines the key considerations for data storage within a serverless chatbot architecture.

Storing User Data, Conversation History, and Chatbot Settings

The choice of how to store data depends on the specific requirements of the chatbot. Different data types and access patterns necessitate different storage solutions. Several strategies are employed to efficiently manage the diverse data requirements of a serverless chatbot.

- User Data: This encompasses information like user IDs, preferences, and profile details. User data is often stored in a database that supports efficient lookups and updates based on user identifiers. This data is crucial for personalization. For example, a chatbot designed to provide travel recommendations might store a user’s preferred destinations, travel dates, and budget.

- Conversation History: Maintaining a record of past conversations allows the chatbot to provide context-aware responses and improve its understanding of user needs over time. Conversation history is typically stored in a database optimized for time-series data or in a document database. The storage structure often includes timestamps, user input, chatbot responses, and any associated metadata.

- Chatbot Settings: These settings control the chatbot’s behavior, such as the welcome message, the available commands, and the integration details with messaging platforms. Chatbot settings are typically stored in a configuration file or a key-value store, allowing for easy modification and retrieval. This could include API keys, webhook URLs, and language settings.
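The conversation-history structure described above (timestamps, user input, chatbot responses, metadata) can be sketched as a small record type. The Python example below uses an in-memory class as a stand-in for a document or time-series database; `ConversationTurn` and `ConversationHistory` are illustrative names, and a real deployment would persist the records rather than hold them in memory.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class ConversationTurn:
    user_id: str
    user_input: str
    bot_response: str
    # ISO 8601 UTC timestamp, set when the turn is created
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    metadata: Dict[str, str] = field(default_factory=dict)


class ConversationHistory:
    """In-memory stand-in for a document or time-series store, keyed by user ID."""

    def __init__(self) -> None:
        self._turns: Dict[str, List[ConversationTurn]] = {}

    def append(self, turn: ConversationTurn) -> None:
        self._turns.setdefault(turn.user_id, []).append(turn)

    def recent(self, user_id: str, limit: int = 5) -> List[dict]:
        """Return the last `limit` turns, oldest first, as plain dictionaries."""
        if limit <= 0:
            return []
        return [asdict(t) for t in self._turns.get(user_id, [])[-limit:]]
```

Fetching only the most recent turns keeps the context passed to the chatbot logic small, which matters when each invocation is billed by duration.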

Importance of Data Security and Privacy

Data security and privacy are paramount considerations when handling user data. Serverless architectures, while offering inherent security benefits, still require careful attention to data protection. Where regulations such as GDPR and CCPA apply to your users, compliance is mandatory.

- Data Encryption: Implementing encryption both in transit and at rest is essential to protect sensitive data from unauthorized access. Encryption ensures that even if data is intercepted, it remains unreadable without the appropriate decryption keys.

- Access Control: Implementing robust access control mechanisms limits who can access and modify the data. This involves using role-based access control (RBAC) and least privilege principles to restrict access to only the necessary resources.

- Data Minimization: Collecting only the data that is strictly necessary for the chatbot’s functionality and deleting data when it is no longer needed helps to minimize the risk of data breaches and comply with privacy regulations.

- Regular Auditing: Regular security audits and penetration testing are crucial for identifying and addressing vulnerabilities in the data storage and access mechanisms. This involves analyzing logs, reviewing access controls, and simulating attacks to identify weaknesses.

- Compliance: Adhering to relevant data privacy regulations (e.g., GDPR, CCPA) is essential. This includes obtaining user consent, providing data access and deletion options, and implementing data processing agreements with any third-party providers.
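Data minimization can begin before anything is written to storage or logs. The Python sketch below replaces user IDs with a keyed hash and masks e-mail addresses and phone-like digit runs in message text. Both regular expressions are deliberately rough heuristics (the phone pattern will also catch other long digit sequences, such as dates), and the function names are assumptions for this example rather than a complete PII solution.

```python
import hashlib
import hmac
import re

# Rough heuristics for illustration; real PII detection needs broader coverage
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Derive a stable identifier with HMAC-SHA256 so raw IDs are not stored."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)
```

Using a keyed hash rather than a plain hash means the mapping cannot be rebuilt by someone who knows the user IDs but not the key, provided the key itself is kept in a secrets manager.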

Using a Database to Store and Retrieve Chatbot Data

Databases are the cornerstone of data storage for serverless chatbots. The choice of database depends on the data structure and access patterns. Common options include NoSQL databases (like DynamoDB) for flexible schema and high scalability, and relational databases (like PostgreSQL) for structured data and complex queries.

Here’s a simplified example using Amazon DynamoDB (a NoSQL database) to store user preferences. The code snippets are illustrative and would need to be integrated with the appropriate serverless functions (e.g., AWS Lambda) and SDKs.

Data Model (Example):

A user’s preference is represented by the following attributes:

- userId (String, Primary Key): Unique identifier for the user.

- themePreference (String): User’s preferred theme (e.g., “light”, “dark”).

- notificationPreference (Boolean): Whether the user wants notifications.

Creating a Table (Conceptual):

Using the AWS CLI, a DynamoDB table named ‘UserPreferences’ would be created with the following attributes:

```bash
aws dynamodb create-table \
    --table-name UserPreferences \
    --attribute-definitions AttributeName=userId,AttributeType=S \
    --key-schema AttributeName=userId,KeyType=HASH \
    --provisioned-throughput ReadCapacityUnits=5,WriteCapacityUnits=5
```

In this example, the userId is the primary key, ensuring uniqueness and enabling efficient lookups.

Storing User Preferences (Conceptual):

To store a user’s theme preference, a serverless function would be triggered by a user interaction, like changing the theme within the chatbot interface. The following Python code snippet illustrates how to store the data in DynamoDB (using the AWS SDK for Python – Boto3):

```python
import json

import boto3

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('UserPreferences')


def store_preference(user_id, theme):
    """Insert or overwrite the preference item for the given user."""
    try:
        response = table.put_item(
            Item={
                'userId': user_id,
                'themePreference': theme,
                'notificationPreference': True,  # Assuming notifications are enabled by default.
            }
        )
        print("PutItem succeeded:")
        print(json.dumps(response, indent=2))
        return True
    except Exception as e:
        print(f"Error storing preference: {e}")
        return False


# Example usage
if store_preference("user123", "dark"):
    print("Preference stored successfully.")
```

This code snippet demonstrates how to write data to DynamoDB.

The put_item operation inserts a new item (or overwrites an existing one if the primary key matches). Error handling is included to manage potential issues during data insertion.

Retrieving User Preferences (Conceptual):

When the chatbot needs to retrieve a user’s theme preference, another serverless function would be triggered. The following Python code illustrates retrieving data from DynamoDB:

```python
import json

import boto3

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('UserPreferences')


def get_preference(user_id):
    """Return the stored item for the user, or None if it is missing or the call fails."""
    try:
        response = table.get_item(Key={'userId': user_id})
        if 'Item' in response:
            return response['Item']
        else:
            return None
    except Exception as e:
        print(f"Error retrieving preference: {e}")
        return None


# Example usage
user_data = get_preference("user123")
if user_data:
    # default=str guards against values json cannot serialize, such as Decimal
    print(f"User data: {json.dumps(user_data, indent=2, default=str)}")
    theme = user_data.get('themePreference')
    if theme:
        print(f"User's theme preference: {theme}")
else:
    print("User data not found.")
```

The get_item operation retrieves an item from the table based on the primary key (userId). If the item exists, the function returns the item; otherwise, it returns None. This function is crucial for personalizing the chatbot’s behavior based on the user’s stored preferences.

Explanation of Database Operations:

The DynamoDB operations put_item and get_item are fundamental to data storage and retrieval. These operations are designed for high throughput and low latency, making them suitable for serverless chatbot applications. The example demonstrates how to manage user-specific data and tailor the chatbot’s responses based on user preferences.

Important Note: These are simplified examples. In a real-world scenario, additional considerations include:

- Error handling: Implement robust error handling for all database operations.

- Data validation: Validate data before storing it in the database.

- Security: Securely manage database credentials and access permissions.

- Scalability: Design the database schema and access patterns for scalability.
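As a sketch of the data validation point, the function below checks the inputs and builds an item shaped like the `UserPreferences` table before anything is sent to the database. The set of allowed themes and the name `build_preference_item` are assumptions for this example; the returned dictionary could then be passed as the `Item` argument of `put_item`.

```python
from typing import Any, Dict

ALLOWED_THEMES = {"light", "dark"}


def build_preference_item(user_id: Any, theme: Any, notifications: Any = True) -> Dict[str, Any]:
    """Validate the inputs and return an item shaped like the UserPreferences table."""
    if not isinstance(user_id, str) or not user_id.strip():
        raise ValueError("userId must be a non-empty string")
    if not isinstance(theme, str) or theme not in ALLOWED_THEMES:
        raise ValueError(f"themePreference must be one of {sorted(ALLOWED_THEMES)}")
    if not isinstance(notifications, bool):
        raise ValueError("notificationPreference must be a boolean")
    return {
        "userId": user_id.strip(),
        "themePreference": theme,
        "notificationPreference": notifications,
    }
```

Rejecting bad input at this stage keeps malformed items out of the table and produces an error message the chatbot can relay to the user.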

Testing and Debugging

Rigorous testing and effective debugging are crucial for the successful deployment and maintenance of a serverless chatbot. These processes ensure that the chatbot functions as intended, handles user interactions correctly, and can be easily corrected when errors arise. A well-defined testing strategy and a suite of debugging tools are essential for identifying and resolving issues efficiently.

Testing Chatbot Functionality and Performance

Testing the chatbot’s functionality and performance involves evaluating its ability to respond accurately, its efficiency in handling user requests, and its resilience under varying loads. This includes verifying the chatbot’s conversational flow, its integration with external services, and its overall performance characteristics.

- Functional Testing: This verifies that the chatbot behaves as expected for various user inputs. It ensures the chatbot understands user intent, provides accurate responses, and correctly executes actions.

- Unit Testing: Testing individual components, such as serverless functions, to ensure they work in isolation. This involves providing specific inputs and verifying the outputs.

- Integration Testing: Testing the interaction between different components, such as the chatbot logic and external APIs. This ensures that data flows correctly between components.

- End-to-End Testing: Testing the entire chatbot system from user input to response. This simulates real-world user interactions to validate the complete functionality.

- Performance Testing: This assesses the chatbot’s performance under different load conditions, including response times, throughput, and resource utilization.

- Load Testing: Simulating a large number of concurrent users to evaluate the chatbot’s ability to handle high traffic volumes without performance degradation.

- Stress Testing: Pushing the chatbot beyond its expected limits to identify its breaking points and assess its resilience.

- Performance Monitoring: Continuously monitoring key metrics, such as response times and error rates, to identify performance bottlenecks and ensure optimal performance over time. Tools like AWS CloudWatch or Google Cloud Monitoring can be employed for this purpose.

- Usability Testing: Evaluating the chatbot’s ease of use and user experience.

- User Acceptance Testing (UAT): Involving real users to test the chatbot and provide feedback on its usability, clarity, and overall satisfaction.

- A/B Testing: Comparing different versions of the chatbot’s conversation flows or responses to determine which performs better.
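A unit test for a single handler might look like the following Python sketch, which uses the standard `unittest` module and replaces the external service with a mock. The handler `weather_reply` and its injected `fetch_forecast` client are hypothetical, introduced only to have something to test.

```python
import unittest
from unittest.mock import Mock


def weather_reply(city: str, fetch_forecast) -> str:
    """Hypothetical handler: formats a forecast obtained from an injected client."""
    if not city.strip():
        return "Which city would you like the forecast for?"
    try:
        forecast = fetch_forecast(city)
    except LookupError:
        return f"Sorry, I could not find a forecast for {city}."
    return f"The forecast for {city} is {forecast}."


class WeatherReplyTests(unittest.TestCase):
    def test_happy_path_uses_external_service(self):
        fetch = Mock(return_value="sunny")
        self.assertEqual(weather_reply("Oslo", fetch), "The forecast for Oslo is sunny.")
        fetch.assert_called_once_with("Oslo")

    def test_empty_city_prompts_without_calling_service(self):
        fetch = Mock()
        self.assertIn("Which city", weather_reply("  ", fetch))
        fetch.assert_not_called()

    def test_unknown_city_returns_friendly_error(self):
        fetch = Mock(side_effect=LookupError("no such city"))
        self.assertIn("could not find", weather_reply("Atlantis", fetch))
```

Saved in a test module, these cases can be run with `python -m unittest`. Injecting the client as a parameter is what makes the handler testable in isolation, without network access or cloud credentials.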

Debugging Serverless Functions and API Integrations

Debugging serverless functions and API integrations requires a combination of logging, monitoring, and debugging tools. Serverless environments provide specific tools for tracing execution, inspecting logs, and identifying the root causes of errors.

- Logging: Implementing comprehensive logging to capture information about the chatbot’s execution, including input parameters, function execution details, and any errors that occur.

- Structured Logging: Using structured logging formats, such as JSON, to make logs easier to parse and analyze.

- Log Aggregation: Aggregating logs from different sources, such as serverless functions and API integrations, into a central location for easier analysis. Services like AWS CloudWatch Logs or Google Cloud Logging can be used for this purpose.

- Monitoring: Setting up monitoring to track key metrics, such as function invocations, error rates, and latency.

- Metrics Collection: Collecting metrics from serverless functions and API integrations to track performance and identify potential issues.

- Alerting: Configuring alerts to notify developers when specific thresholds are exceeded, such as high error rates or slow response times.

- Debugging Tools: Utilizing debugging tools to inspect function execution and identify the root causes of errors.

- Local Debugging: Debugging serverless functions locally using tools provided by the serverless platform, such as AWS SAM CLI or Google Cloud Functions emulator.

- Remote Debugging: Debugging serverless functions in the cloud using remote debugging tools, which allow developers to step through code execution and inspect variables.

- Tracing: Implementing distributed tracing to track requests across multiple services and identify performance bottlenecks. Services like AWS X-Ray or Google Cloud Trace can be used for this purpose.
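Structured logging can be set up with the Python standard library alone. The sketch below emits one JSON object per log line so that a log aggregation service can index the fields. The `JsonFormatter` class, the `get_logger` helper, and the convention of passing request details under a `context` key are assumptions of this example.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via extra={"context": {...}} are merged into the entry
        entry.update(getattr(record, "context", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry, default=str)


def get_logger(name: str = "chatbot") -> logging.Logger:
    """Return a logger that writes JSON lines, configured only once."""
    logger = logging.getLogger(name)
    if not logger.handlers:  # Avoid duplicate handlers when the runtime is reused
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

A call such as `get_logger().info("intent detected", extra={"context": {"user_id": "u1", "intent": "book.flight"}})` then produces a line whose `user_id` and `intent` fields can be filtered on directly.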

Designing a Test Plan for the Chatbot

A comprehensive test plan outlines the scope of testing, the test cases to be executed, and the expected results. This plan helps to ensure that the chatbot is thoroughly tested and that any issues are identified and resolved.

- Test Plan Components:

- Test Objectives: Defining the goals of the testing process, such as verifying the chatbot’s functionality, performance, and usability.

- Test Scope: Identifying the components of the chatbot that will be tested, including serverless functions, API integrations, and user interfaces.

- Test Cases: Creating detailed test cases that specify the inputs, actions, and expected results for each test scenario.

- Test Data: Defining the data that will be used for testing, including user inputs, API responses, and database records.

- Test Environment: Describing the environment in which the testing will be performed, including the serverless platform, messaging platforms, and any external services.

- Test Schedule: Establishing a timeline for the testing process, including the start and end dates for each test phase.

- Test Reporting: Defining how the test results will be documented and reported, including the use of test management tools.

- User Scenarios:

- Happy Path Scenarios: Testing the chatbot’s ability to handle typical user interactions and provide correct responses. Example: A user asks for the current weather forecast, and the chatbot provides the information.

- Error Scenarios: Testing the chatbot’s ability to handle unexpected user inputs or errors. Example: A user enters an invalid date format, and the chatbot provides an appropriate error message.

- Edge Case Scenarios: Testing the chatbot’s ability to handle extreme or unusual situations. Example: A user asks for a weather forecast for a location that does not exist, and the chatbot provides a suitable response.

- Performance Scenarios: Testing the chatbot’s performance under different load conditions. Example: Simulating a large number of concurrent users to assess response times and resource utilization.

- Integration Scenarios: Testing the chatbot’s integration with external services and APIs. Example: Verifying that the chatbot correctly retrieves and displays data from an external weather API.

- Test Execution and Reporting:

- Test Execution: Running the test cases and recording the results, including any errors or unexpected behavior.

- Bug Tracking: Logging any bugs or issues that are identified during testing, including the steps to reproduce the bug and the expected behavior.

- Test Reporting: Generating reports that summarize the test results, including the number of tests passed, failed, and skipped, as well as any identified bugs.
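The user scenarios and the reporting step can be combined in a small table-driven runner. In the Python sketch below, each scenario names its category, the user input, and a substring expected in the reply; the runner executes all of them and returns a summary. `Scenario`, `run_scenarios`, and the toy `demo_bot` are illustrative names, and the substring check is a simplification of real assertions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Scenario:
    name: str
    category: str  # e.g. "happy_path", "error", "edge_case"
    user_input: str
    expected_substring: str


def run_scenarios(bot: Callable[[str], str], scenarios: List[Scenario]) -> Dict[str, object]:
    """Run every scenario against `bot` and summarize passes and failures."""
    failures = []
    for s in scenarios:
        try:
            reply = bot(s.user_input)
        except Exception as exc:  # A crash counts as a failed scenario
            failures.append({"name": s.name, "category": s.category, "got": repr(exc)})
            continue
        if s.expected_substring not in reply:
            failures.append({"name": s.name, "category": s.category, "got": reply})
    return {
        "total": len(scenarios),
        "passed": len(scenarios) - len(failures),
        "failures": failures,
    }


def demo_bot(text: str) -> str:
    """Toy chatbot used only to exercise the runner."""
    if "weather" in text.lower():
        return "It is sunny in Oslo."
    return "Sorry, I did not understand that."
```

Each entry in `failures` records what the chatbot actually returned, which gives the bug report its reproduction input and observed behavior.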

Deployment and Scalability

Deploying a serverless chatbot involves transitioning the developed code and infrastructure configurations onto a cloud platform. This process requires careful consideration of various factors, including platform-specific configurations, security measures, and automated scaling mechanisms. The core benefit of a serverless architecture lies in its inherent ability to scale automatically, adapting to fluctuating user demand without manual intervention. Effective monitoring is crucial for observing performance, identifying bottlenecks, and optimizing the chatbot’s functionality.

Steps for Deploying a Serverless Chatbot

Deploying a serverless chatbot typically involves several key steps, tailored to the specific cloud platform being used. These steps ensure the application is correctly configured, securely deployed, and ready to handle user interactions.

- Code Packaging and Upload: The chatbot’s code, including the logic, any necessary libraries, and configuration files, must be packaged and uploaded to the cloud platform. This often involves creating a deployment package (e.g., a ZIP file) containing all the required components.

- Resource Configuration: Define and configure the necessary cloud resources, such as functions (e.g., AWS Lambda, Azure Functions, Google Cloud Functions), databases (e.g., DynamoDB, Azure Cosmos DB, Google Cloud Datastore), and API gateways (e.g., Amazon API Gateway, Azure API Management, Google Cloud API Gateway). This includes specifying memory allocation, execution timeouts, and security settings.

- API Gateway Setup: Configure an API gateway to act as the entry point for user interactions. This involves defining API endpoints, request/response mappings, and authentication mechanisms. The API gateway routes incoming requests to the appropriate serverless functions.

- Environment Variables and Secrets Management: Configure environment variables to store sensitive information like API keys and database credentials. Employ a secrets management service to securely store and manage these secrets. This is crucial for protecting sensitive data.

- Deployment and Testing: Deploy the configured resources to the cloud platform. After deployment, thoroughly test the chatbot’s functionality, including its ability to handle different user inputs, its integration with messaging platforms, and its overall performance. This includes unit tests, integration tests, and end-to-end tests.

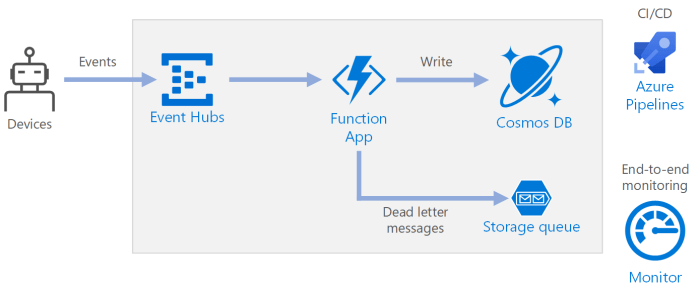

- Monitoring and Logging Configuration: Configure logging and monitoring services to track the chatbot’s performance, identify errors, and gather insights into user behavior. This involves setting up logging for function invocations, API gateway requests, and database interactions.
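To make the packaging, API gateway, and environment variable steps concrete, here is a minimal Python sketch of a function entry point in the style of an AWS Lambda handler behind an API Gateway proxy integration. It assumes the request arrives as a JSON string in `event["body"]` with a `message` field; the `BOT_GREETING` environment variable and the reply format are made up for this example, and base64-encoded bodies are not handled.

```python
import json
import os
from typing import Any, Dict


def _response(status_code: int, body: Dict[str, Any]) -> Dict[str, Any]:
    """Build a proxy-style response: status code, headers, and a JSON string body."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point invoked by the platform for each incoming request."""
    # Configuration comes from environment variables set at deployment time
    greeting = os.environ.get("BOT_GREETING", "Hello")
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "Request body must be valid JSON"})
    message = payload.get("message", "") if isinstance(payload, dict) else ""
    if not isinstance(message, str) or not message.strip():
        return _response(400, {"error": "Field 'message' is required"})
    return _response(200, {"reply": f"{greeting}! You said: {message.strip()}"})
```

Because the handler is a plain function, it can be called directly with a dictionary in local tests before it is deployed.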

Serverless Architecture and Automatic Scalability

Serverless architecture intrinsically supports automatic scalability, eliminating the need for manual capacity provisioning and management. The cloud provider dynamically allocates resources based on demand.

Serverless scalability goes hand in hand with the ‘pay-per-use’ model: the cloud provider scales the underlying infrastructure up and down to meet demand, and you are billed only for the resources actually consumed.

- Function Scaling: When the chatbot receives more requests, the cloud platform automatically provisions additional instances of the serverless functions. This ensures that the chatbot can handle a high volume of concurrent users without performance degradation, up to the platform’s concurrency limits. Depending on the platform, scaling is driven by the number of concurrent requests or events, or by metrics such as the CPU utilization of the function instances.

- Database Scalability: Serverless databases, such as DynamoDB in on-demand capacity mode, are designed to scale automatically. They can handle a large number of read and write operations without requiring manual scaling. This is achieved through features like automatic partitioning and replication.

- API Gateway Scalability: The API gateway also scales automatically to handle increased traffic. It can distribute incoming requests across multiple function instances and handle a high volume of concurrent connections.

- Event-Driven Architecture: Serverless chatbots often use an event-driven architecture, where different components of the system communicate through events. This allows for asynchronous processing and further enhances scalability. For example, when a user sends a message, an event is triggered, which can then trigger a function to process the message.
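The event-driven flow can be illustrated with a tiny in-process event bus. In the Python sketch below, publishing only enqueues an event, and handlers run later when the queue is drained, which mirrors how a producer hands work to a managed queue without waiting for consumers. `EventBus` and the event names are stand-ins chosen for this example, not a cloud service API.

```python
from collections import defaultdict, deque
from typing import Any, Callable, DefaultDict, Deque, Dict, List, Tuple

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Tiny in-process stand-in for a managed queue or event service."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self._queue: Deque[Tuple[str, Dict[str, Any]]] = deque()

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> None:
        # Publishing only enqueues; the producer does not wait for consumers
        self._queue.append((event_type, payload))

    def drain(self) -> int:
        """Deliver queued events in order; handlers may publish follow-up events."""
        delivered = 0
        while self._queue:
            event_type, payload = self._queue.popleft()
            for handler in self._handlers.get(event_type, []):
                handler(payload)
                delivered += 1
        return delivered
```

In a deployed system each handler would typically be its own function, so the platform can scale the message-processing step independently of the reply-sending step.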

Monitoring the Chatbot’s Performance

Monitoring is critical for ensuring the chatbot functions correctly, identifying potential issues, and optimizing its performance. Comprehensive monitoring provides valuable insights into the chatbot’s behavior and helps to identify areas for improvement.

- Key Performance Indicators (KPIs): Define and track key performance indicators (KPIs) to measure the chatbot’s success. These may include:

- Response Time: The time it takes for the chatbot to respond to a user’s input.

- Conversation Completion Rate: The percentage of conversations that reach a successful conclusion.

- Error Rate: The percentage of interactions that result in errors.

- User Satisfaction: Measured through feedback mechanisms, such as ratings or surveys.

- Cost: The operational costs associated with running the chatbot, including function invocations, database usage, and API gateway requests.

- Logging: Implement comprehensive logging to capture events, errors, and other relevant information. Logs should include timestamps, user IDs, input/output data, and error messages. These logs can be analyzed to identify patterns, diagnose issues, and track user behavior.

- Alerting: Set up alerts to notify administrators of critical events, such as high error rates, slow response times, or unusual traffic patterns. These alerts should be configured to trigger notifications via email, SMS, or other communication channels.

- Dashboarding: Create dashboards to visualize the chatbot’s performance metrics. Dashboards provide a real-time overview of the chatbot’s health and performance. They allow for quick identification of issues and trends.

- Tracing: Implement distributed tracing to track requests as they flow through the different components of the chatbot. This can help to identify performance bottlenecks and understand the end-to-end behavior of the system.

- Tools and Services: Utilize cloud-provider-specific monitoring tools and services. Examples include:

- AWS: CloudWatch, X-Ray.

- Azure: Application Insights, Azure Monitor.

- Google Cloud: Cloud Monitoring, Cloud Trace.
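Several of the KPIs listed above can be computed directly from interaction records. The Python sketch below derives the average and 95th-percentile response time, the error rate, and the conversation completion rate; the `Interaction` record and its fields are assumptions about what the logs contain, and the percentile uses the simple nearest-rank method.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Interaction:
    response_ms: float
    error: bool
    completed: bool


def summarize_kpis(interactions: List[Interaction]) -> Dict[str, float]:
    """Aggregate response time, error rate, and completion rate."""
    if not interactions:
        return {
            "count": 0,
            "avg_response_ms": 0.0,
            "p95_response_ms": 0.0,
            "error_rate": 0.0,
            "completion_rate": 0.0,
        }
    times = sorted(i.response_ms for i in interactions)
    n = len(times)
    # Nearest-rank percentile: rank = ceil(0.95 * n), computed with integers
    rank = (95 * n + 99) // 100
    return {
        "count": n,
        "avg_response_ms": sum(times) / n,
        "p95_response_ms": times[rank - 1],
        "error_rate": sum(i.error for i in interactions) / n,
        "completion_rate": sum(i.completed for i in interactions) / n,
    }
```

Tracking a high percentile next to the average is useful in serverless systems, because occasional cold starts affect the slowest requests far more than the mean.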

Advanced Features and Enhancements

Serverless chatbots, while inherently scalable and cost-effective, can be significantly enhanced through the integration of advanced features. These additions move beyond basic conversational capabilities, providing richer user experiences and enabling more sophisticated interactions. This section explores several such enhancements, focusing on sentiment analysis, proactive messaging, API integration, and user authentication.

Sentiment Analysis Implementation

Sentiment analysis allows the chatbot to understand the emotional tone of user input, enabling more context-aware responses. This feature can improve user satisfaction and provide valuable insights into user needs.

To implement sentiment analysis:

- Utilize Natural Language Processing (NLP) services: Several cloud providers offer NLP services specifically for sentiment analysis. For example, Amazon Comprehend, Google Cloud Natural Language, and Azure Cognitive Services provide pre-trained models that can analyze text and return sentiment scores (positive, negative, neutral, or mixed).

- Integrate sentiment analysis into the conversation flow: When a user’s message is received, it is sent to the NLP service. The returned sentiment score can be used to tailor the chatbot’s response. For example, if the sentiment is negative, the chatbot could offer assistance or apologize.

- Store sentiment data for analysis: Logging sentiment scores alongside user interactions provides valuable data for understanding user behavior and improving the chatbot’s performance over time. This data can be visualized to identify trends in user sentiment.

For example, consider a customer service chatbot. If a user types, “I’m extremely frustrated with your service!”, the sentiment analysis would likely identify a negative sentiment. The chatbot could then respond with a pre-programmed message like, “I understand your frustration. I’m here to help. Can you please describe the issue you are experiencing?” This demonstrates the chatbot’s ability to recognize and respond appropriately to user emotions.

The use of sentiment analysis enhances the perceived empathy and effectiveness of the chatbot.
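The customer service example can be sketched in Python as follows. The analyzer is passed in as a function so that it can be swapped for a call to one of the NLP services mentioned above; the `keyword_sentiment` function here is only a keyword-matching placeholder for local testing, not a real sentiment model, and all names are illustrative.

```python
from typing import Callable

NEGATIVE_REPLY = (
    "I understand your frustration. I'm here to help. "
    "Can you please describe the issue you are experiencing?"
)


def keyword_sentiment(text: str) -> str:
    """Placeholder analyzer for local testing only; NOT a real sentiment model."""
    lowered = text.lower()
    if any(word in lowered for word in ("frustrated", "angry", "terrible")):
        return "NEGATIVE"
    if any(word in lowered for word in ("thanks", "great", "love")):
        return "POSITIVE"
    return "NEUTRAL"


def reply_with_sentiment(
    text: str,
    default_reply: str,
    analyze: Callable[[str], str] = keyword_sentiment,
) -> str:
    """Adjust the reply based on the sentiment label returned by `analyze`."""
    label = analyze(text).upper()
    if label == "NEGATIVE":
        return NEGATIVE_REPLY
    if label == "POSITIVE":
        return default_reply + " Glad to hear things are going well!"
    # NEUTRAL, MIXED, or unknown labels fall back to the normal reply
    return default_reply
```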

Proactive Messaging Strategies

Proactive messaging involves the chatbot initiating conversations or providing information without explicit user requests. This can be highly effective for delivering timely updates, offering relevant suggestions, and improving user engagement.

Implementing proactive messaging involves several key considerations:

- Trigger Events: Define events that trigger proactive messages. These could include:

- User inactivity: After a certain period of inactivity, the chatbot can check in with the user.

- Completion of a task: After an order is placed or a form is submitted, the chatbot can provide confirmation and next steps.

- Arrival of new information: The chatbot can alert the user of a new blog post, a new product, or a new discount.

- Message Content: Craft messages that are informative, helpful, and non-intrusive. The goal is to provide value to the user without overwhelming them.

- Delivery Channels: Choose the appropriate messaging platform for proactive messages, considering user preferences and the nature of the information being shared.

- User Consent: Obtain user consent before sending proactive messages, respecting privacy regulations and building trust.

For example, an e-commerce chatbot could proactively send a message to a user who has items in their cart but hasn’t completed the purchase, offering assistance or reminding them about the items. Or, a news chatbot could send a summary of the top stories of the day at a specific time. Proactive messaging, when implemented thoughtfully, can significantly increase user engagement and satisfaction.
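As a rough sketch of the abandoned-cart case, the function below selects which users a scheduled serverless function might message. The `UserState` fields, the `users_to_remind` name, and the 24-hour inactivity threshold are all assumptions made for this example; the point is that consent, the trigger event, and timing are checked together before anything is sent.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class UserState:
    user_id: str
    consented: bool          # user opted in to proactive messages
    cart_items: int          # number of items currently in the cart
    last_activity: datetime  # timestamp of the user's last interaction


def users_to_remind(
    users: List[UserState],
    now: datetime,
    inactivity: timedelta = timedelta(hours=24),  # assumed threshold
) -> List[str]:
    """Return IDs of consenting users with a non-empty cart who have been inactive long enough."""
    return [
        user.user_id
        for user in users
        if user.consented
        and user.cart_items > 0
        and now - user.last_activity >= inactivity
    ]
```

Passing `now` in as an argument, instead of reading the clock inside the function, keeps the selection logic easy to test.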

Integrating with External APIs

Integrating a chatbot with external APIs expands its capabilities by allowing it to access and utilize data and services from various sources. This allows the chatbot to perform tasks such as fetching information, processing payments, and interacting with other applications.

To integrate with external APIs:

- API Selection: Identify relevant APIs based on the chatbot’s purpose. These could include weather APIs, payment gateways, or CRM systems.

- Authentication: Implement appropriate authentication mechanisms (API keys, OAuth) to secure access to the APIs.

- API Calls: Use the chatbot’s logic to make API calls. This involves constructing the correct request parameters and handling the responses.

- Data Handling: Parse the API responses and format the data for presentation to the user.

For instance, a travel chatbot could integrate with a flight booking API. When a user asks to book a flight, the chatbot would:

- Collect the necessary information (departure city, destination, dates).

- Use this information to construct a request to the flight booking API.

- Receive a response from the API containing flight options.

- Present the flight options to the user.

This integration allows the chatbot to provide a complete travel booking experience within the conversation. The ability to integrate with external APIs is a key strength of serverless chatbots.
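The flight booking flow above might look roughly like the following. The endpoint URL, the query parameter names, and the shape of the JSON response are all hypothetical, since they depend entirely on the flight provider; the sketch only shows how a request is constructed from the collected details and how a response is parsed and formatted for the user. The network call itself is left out.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint; substitute the real flight provider's URL.
FLIGHT_API_URL = "https://api.example.com/flights"


def build_search_url(origin: str, destination: str, date: str) -> str:
    """Turn the details collected from the user into a request URL."""
    query = urlencode({"from": origin, "to": destination, "date": date})
    return f"{FLIGHT_API_URL}?{query}"


def format_flight_options(response_body: str) -> str:
    """Parse the (assumed) JSON response and render the options as chat text."""
    flights = json.loads(response_body).get("flights", [])
    if not flights:
        return "Sorry, I could not find any flights for that route."
    lines = [
        f"{index}. {flight['airline']} departing {flight['departure']} for ${flight['price']:.2f}"
        for index, flight in enumerate(flights, start=1)
    ]
    return "\n".join(lines)
```

In a deployed function, the URL from `build_search_url` would be fetched with an HTTP client, with the API key supplied from a secrets store, and the response body passed to `format_flight_options`.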

User Authentication and Authorization

Implementing user authentication and authorization is crucial for protecting sensitive data, personalizing user experiences, and complying with privacy regulations. This ensures that only authorized users can access specific features or information.

To implement user authentication and authorization:

- Authentication Methods: Choose an appropriate authentication method. Common options include:

  - Username/Password: A simple method where users create accounts with usernames and passwords.

  - OAuth/OpenID Connect: Allows users to log in using their existing accounts from services like Google, Facebook, or Microsoft.

  - Multi-Factor Authentication (MFA): Adds an extra layer of security by requiring users to verify their identity through a second factor, such as a code sent to their phone.

- Authorization: Define roles and permissions to control what users can access. For example, an administrator role might have access to all features, while a regular user might only have access to specific data.

- Integration with Authentication Services: Utilize authentication services like Amazon Cognito, Microsoft Entra ID (formerly Azure Active Directory), or Google Identity Platform to handle user registration, login, and password management.

- Session Management: Implement a mechanism to maintain user sessions and track user authentication status. This might involve using cookies or tokens.

For example, consider a financial chatbot. Before allowing a user to access their account information, the chatbot would require the user to authenticate using their credentials. Once authenticated, the chatbot would verify the user’s authorization to access specific account details. This level of security is essential to protect user privacy and financial data. The implementation of robust authentication and authorization mechanisms is paramount for any chatbot that handles sensitive information.
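The two checks in the financial chatbot example, authentication followed by authorization, can be sketched with the Python standard library. The role names, permission names, and helper functions here are illustrative assumptions; in practice a managed authentication service would usually handle credential storage and verification.

```python
import hashlib
import hmac

# Hypothetical roles and permissions for a financial chatbot.
ROLE_PERMISSIONS = {
    "admin": {"view_balance", "view_transactions", "manage_users"},
    "user": {"view_balance", "view_transactions"},
}


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted hash so that plaintext passwords are never stored."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def authenticate(password: str, salt: bytes, stored_hash: bytes) -> bool:
    """Check the supplied password against the stored hash in constant time."""
    return hmac.compare_digest(hash_password(password, salt), stored_hash)


def is_authorized(role: str, action: str) -> bool:
    """Return True only if the role explicitly grants the requested action."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

Unknown roles receive an empty permission set, so access is denied by default.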

Security Considerations

Serverless chatbots, while offering scalability and cost-effectiveness, introduce unique security challenges. Their distributed nature and reliance on third-party services necessitate a proactive approach to security. Implementing robust security measures is crucial to protect sensitive data, prevent malicious attacks, and maintain user trust. Failure to address these concerns can lead to data breaches, service disruptions, and reputational damage.

Securing API Endpoints and Data Storage

Securing API endpoints and data storage is paramount in serverless chatbot security. These are the primary attack surfaces where malicious actors can attempt to gain unauthorized access to sensitive information or disrupt the chatbot’s functionality. Each layer (the API gateway, the data stores, and the functions themselves) needs its own controls.

- API Gateway Security: API gateways, such as AWS API Gateway or Azure API Management, act as the entry point for all requests to the chatbot’s backend. Implementing robust security measures at this layer is crucial.

  - Authentication: Implement strong authentication mechanisms, such as API keys, JSON Web Tokens (JWT), or OAuth 2.0, to verify the identity of users or client applications accessing the API.

  - Authorization: Define and enforce access control policies to restrict access to specific API endpoints and resources based on user roles or permissions.

  - Rate Limiting: Implement rate limiting to prevent abuse and protect the API from denial-of-service (DoS) attacks. This limits the number of requests a client can make within a specific timeframe. For example, a rate limit could be set to allow no more than 100 requests per minute from a single IP address.

  - Input Validation: Validate all incoming requests to ensure they conform to the expected format and data types. This helps prevent injection attacks, such as SQL injection or cross-site scripting (XSS).

  - Encryption: Enable HTTPS (TLS/SSL) to encrypt all traffic between the client and the API gateway, protecting data in transit.

- Data Storage Security: Secure the data storage mechanisms used by the chatbot. This includes databases, object storage, and other storage solutions.

  - Encryption: Encrypt data at rest and in transit. For databases, enable encryption at rest features provided by the database service. Use HTTPS for data transfer.

  - Access Control: Implement strict access control policies to restrict access to the data storage to only authorized users and services. Use the principle of least privilege, granting only the necessary permissions.

  - Regular Backups: Implement regular backups to protect against data loss due to accidental deletion, hardware failures, or malicious attacks. Store backups in a secure and geographically separate location.

  - Data Masking and Anonymization: Implement data masking and anonymization techniques to protect sensitive data. This involves replacing or obfuscating sensitive information with less sensitive alternatives.

- Serverless Function Security: Secure the serverless functions that handle the chatbot’s logic.

  - Code Reviews: Conduct regular code reviews to identify and address potential security vulnerabilities in the function code.

  - Dependency Management: Carefully manage dependencies to ensure that all third-party libraries and packages are up-to-date and free of known vulnerabilities.

  - Input Validation: Validate all inputs received by the functions to prevent injection attacks.

  - Secrets Management: Securely store and manage sensitive information, such as API keys and database credentials, using a secrets management service, such as AWS Secrets Manager or Azure Key Vault.
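To make the rate limiting example concrete (no more than 100 requests per minute from a single IP address), here is a minimal fixed-window limiter. The class name and its in-memory dictionary are assumptions for illustration: serverless function instances do not share memory, so a real deployment would keep the counters in a shared store or, more commonly, use the throttling features built into the API gateway.

```python
from typing import Dict, Tuple


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client within each fixed time window."""

    def __init__(self, limit: int = 100, window_seconds: int = 60) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        # Maps client ID -> (window index, requests counted in that window).
        self._counters: Dict[str, Tuple[int, int]] = {}

    def allow(self, client_id: str, now: float) -> bool:
        """Record one request at time `now` (seconds) and report whether it is allowed."""
        window = int(now // self.window_seconds)
        current_window, count = self._counters.get(client_id, (window, 0))
        if current_window != window:
            count = 0  # a new window has started, so the count resets
        if count >= self.limit:
            self._counters[client_id] = (window, count)
            return False
        self._counters[client_id] = (window, count + 1)
        return True
```

A fixed window is the simplest scheme; it permits short bursts at window boundaries, which sliding-window or token-bucket limiters smooth out.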

Preventing Common Security Vulnerabilities

Serverless chatbots are susceptible to various security vulnerabilities. Proactive measures are essential to mitigate these risks and protect the chatbot from attacks.

- Input Validation and Sanitization: Implement rigorous input validation and sanitization to prevent injection attacks, such as SQL injection, cross-site scripting (XSS), and command injection. This involves validating all user inputs and sanitizing them to remove any malicious code or characters. A SQL injection vulnerability and its mitigation are shown in the example that follows this list.

- Authentication and Authorization: Implement robust authentication and authorization mechanisms to control access to the chatbot’s resources and functionalities.

  - Multi-Factor Authentication (MFA): Enable MFA for all user accounts and administrative access to the chatbot’s infrastructure.

  - Role-Based Access Control (RBAC): Implement RBAC to grant users access to only the resources and functionalities they need.

- Dependency Management: Regularly update all dependencies, including libraries, frameworks, and operating systems, to patch known vulnerabilities.

  - Vulnerability Scanning: Use vulnerability scanning tools to identify and address potential security flaws in dependencies.

  - Dependency Auditing: Regularly audit dependencies to ensure they are up-to-date and free of known vulnerabilities.

- Secure Configuration: Securely configure all components of the chatbot’s infrastructure, including API gateways, serverless functions, and data storage.

  - Least Privilege: Apply the principle of least privilege, granting each component only the necessary permissions.

  - Regular Security Audits: Conduct regular security audits to identify and address potential misconfigurations.

- Logging and Monitoring: Implement comprehensive logging and monitoring to detect and respond to security incidents.

  - Centralized Logging: Centralize logs from all components of the chatbot’s infrastructure for easy analysis and correlation.

  - Security Information and Event Management (SIEM): Integrate logs with a SIEM system to automate threat detection and incident response.

- Regular Security Assessments: Conduct regular security assessments, including penetration testing and vulnerability scanning, to identify and address potential security vulnerabilities. A penetration test simulates a real-world attack to identify weaknesses in the chatbot’s security posture. Vulnerability scanning uses automated tools to identify known vulnerabilities in the chatbot’s infrastructure.

- Compliance with Security Standards: Adhere to relevant security standards and regulations, such as GDPR, HIPAA, and PCI DSS, depending on the chatbot’s use case and data handling practices. For instance, if the chatbot handles personal data of individuals in the EU, it must comply with GDPR, including its data minimization, data security, and user consent requirements.

Vulnerable Code: `"SELECT * FROM users WHERE username = '" + userInput + "' AND password = '" + passwordInput + "';"`

Mitigated Code: `SELECT * FROM users WHERE username = ? AND password = ?;` (using parameterized queries)

This illustrates the importance of using parameterized queries to prevent SQL injection attacks.
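The same idea can be shown as a runnable sketch using Python’s standard-library `sqlite3` module and an in-memory database. The table layout, the `find_user` helper, and the sample values are assumptions for illustration; the example also compares a stored password hash instead of a plaintext password, which is the safer practice.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, username: str, password_hash: str):
    """Look up a user with a parameterized query; inputs are bound, never concatenated."""
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ? AND password_hash = ?",
        (username, password_hash),
    )
    return cursor.fetchone()


def demo() -> tuple:
    """Build a throwaway database and compare a normal lookup with an injection attempt."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, password_hash TEXT)"
    )
    conn.execute(
        "INSERT INTO users (username, password_hash) VALUES (?, ?)",
        ("alice", "hash123"),
    )
    legitimate = find_user(conn, "alice", "hash123")
    # With string concatenation this input would comment out the password check;
    # as a bound parameter it is treated as a literal username that matches nothing.
    injected = find_user(conn, "alice' --", "anything")
    conn.close()
    return legitimate, injected
```

Because the driver sends the query text and the values separately, the injection attempt is compared as plain data and finds no matching row.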

Final Summary

In conclusion, building a serverless chatbot offers a compelling pathway to creating intelligent and scalable conversational experiences. By leveraging the power of serverless functions, cloud platforms, and integrated services, developers can craft efficient, cost-effective chatbots that meet diverse user needs. This guide provides a solid foundation for embarking on this journey, equipping you with the knowledge to design, implement, and deploy a serverless chatbot that enhances user engagement and streamlines interactions.

Continuous learning and adaptation are crucial in this rapidly evolving field, so keep exploring and experimenting to unlock the full potential of serverless chatbot technology.

Quick FAQs

What are the primary advantages of using a serverless architecture for chatbots?

Serverless architecture offers several key benefits, including automatic scaling, reduced operational overhead (no server management), cost-effectiveness (pay-per-use), and increased agility in development and deployment. This allows developers to focus on the chatbot’s logic rather than infrastructure.

How do I choose the right cloud platform for my serverless chatbot?

The choice depends on factors like existing cloud experience, pricing, available services (NLU, databases, etc.), and platform-specific features. Popular options include AWS (with services like Lambda, API Gateway, and DynamoDB), Azure (Functions, API Management, Cosmos DB), and Google Cloud (Cloud Functions, Cloud Endpoints, Cloud Firestore).

What is the role of Natural Language Understanding (NLU) in a serverless chatbot?

NLU services (like Dialogflow, Lex, or LUIS) are essential for enabling chatbots to understand user input. They analyze text to identify user intents (what the user wants to do) and extract entities (relevant information, such as dates, locations, or products). This allows the chatbot to respond appropriately and perform the requested action.

How do I handle user data security and privacy in a serverless chatbot?

Data security is paramount. Implement encryption for data at rest and in transit, use secure authentication and authorization mechanisms, adhere to data privacy regulations (e.g., GDPR, CCPA), and regularly audit your chatbot’s security configurations. Avoid storing sensitive user information unless absolutely necessary.

How can I monitor the performance of my serverless chatbot?

Cloud platforms provide monitoring tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) to track metrics such as invocation counts, execution times, error rates, and resource utilization. Use these tools to identify performance bottlenecks, optimize code, and ensure the chatbot is functioning correctly.