The process of migrating data and applications presents a significant challenge in modern IT environments, demanding meticulous planning and execution. This guide, framed with an analytical lens, dissects the complexities inherent in this process, specifically focusing on the automation of various migration phases. We will explore the critical steps required to streamline and optimize migrations, thereby reducing manual effort, minimizing errors, and accelerating project timelines.

This examination delves into the identification of automatable tasks, the application of scripting languages and tools, and the strategic automation of data migration, infrastructure provisioning, and application deployment. Furthermore, we will examine best practices for testing, validation, monitoring, and security, ensuring a robust and efficient migration process.

Planning and Preparation for Automated Migration

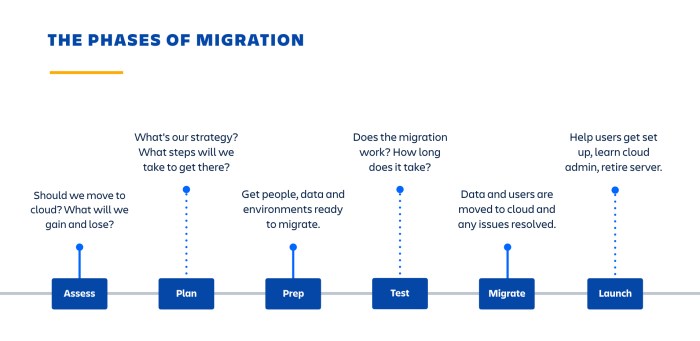

Automated migration, while offering significant efficiency gains, necessitates meticulous planning and preparation. A poorly planned migration can lead to data loss, downtime, and operational disruptions. The following sections outline the critical steps involved in ensuring a smooth and successful automated migration process.

Assessing Current Infrastructure

A thorough assessment of the existing infrastructure is the cornerstone of a successful migration. This assessment provides a baseline understanding of the current environment, enabling informed decision-making throughout the migration process.

- Server Specifications: Determining the hardware and software configurations of all servers is critical. This involves identifying the operating system, CPU, RAM, storage capacity, and network bandwidth of each server. Tools like `lshw` (Linux) and `System Information` (Windows) can be utilized for gathering this information. Example: A web server running on a 64-bit CentOS 7 system with 16GB RAM, a 4-core CPU, and 1TB of storage.

- Application Dependencies: Mapping out the dependencies between applications and their respective components is crucial. This includes identifying all software packages, libraries, and services that each application relies on. The `ldd` command (Linux) can be used to identify shared library dependencies. For instance, a Java application might depend on the Java Runtime Environment (JRE) and specific database drivers.

- Data Volumes and Types: Analyzing the volume and type of data stored within the existing environment is essential for capacity planning and choosing appropriate migration strategies. This involves determining the size of databases, file systems, and other data repositories. Tools like `du` (Linux) and `Get-ChildItem` (PowerShell) can be used to estimate data volumes. Consider the following: A database containing 500GB of customer data, including relational data and associated media files.

- Network Topology: Documenting the network topology, including network devices, IP addresses, subnets, and firewall rules, is necessary to ensure network connectivity during and after the migration. This documentation allows for the correct configuration of network settings in the new environment. Consider: A network with a firewall, a load balancer, and multiple subnets.

- Security Considerations: Assessing the current security posture, including authentication methods, access controls, and encryption protocols, is essential for maintaining security during and after migration. This involves reviewing security policies and identifying any vulnerabilities. Consider: A system utilizing TLS encryption for data transmission and role-based access control.

- Performance Metrics: Gathering performance metrics, such as CPU utilization, memory usage, disk I/O, and network latency, helps establish a performance baseline and identify potential bottlenecks. This data informs the sizing of the new infrastructure. Tools like `top` (Linux) and `Performance Monitor` (Windows) can be used to monitor performance. Consider: An application consistently experiencing high CPU utilization during peak hours.

- Backup and Recovery Procedures: Documenting existing backup and recovery procedures is vital for data protection. This involves understanding the frequency of backups, the storage location of backups, and the procedures for restoring data. Consider: Daily full backups and incremental backups every hour.
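
Part of this assessment can be scripted. The following is a minimal sketch, using only the Python standard library, of collecting a few of the server specifications listed above into a JSON record. The function name `collect_inventory` and the field names are illustrative assumptions; RAM and network bandwidth are not covered because the standard library does not expose them portably.

```python
import json
import os
import platform
import shutil
import socket


def collect_inventory(path=os.path.abspath(os.sep)):
    """Return a small dictionary describing the host this script runs on."""
    usage = shutil.disk_usage(path)  # total/used/free bytes for the given path
    return {
        "hostname": socket.gethostname(),
        "os": platform.system(),
        "os_release": platform.release(),
        "architecture": platform.machine(),
        "cpu_count": os.cpu_count(),
        "disk_total_gb": round(usage.total / 1024**3, 1),
        "disk_used_gb": round(usage.used / 1024**3, 1),
    }


if __name__ == "__main__":
    # Print the record as JSON so it can be collected from many servers.
    print(json.dumps(collect_inventory(), indent=2))
```

Running the same script on every server yields records in one format, which makes it easier to compare hosts and size the target environment.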

Creating a Comprehensive Migration Plan

A well-defined migration plan provides a roadmap for the entire process, ensuring that all aspects of the migration are considered and addressed. This plan should be a living document, updated as the migration progresses.

- Define Migration Scope: Clearly defining the scope of the migration is the first step. This involves specifying which applications, data, and infrastructure components will be migrated. The scope should be documented to avoid scope creep and ensure that the migration remains focused. For instance, migrating only the web application and database to the new environment, excluding other ancillary services.

- Establish Timelines: Creating a detailed timeline is crucial for managing expectations and tracking progress. This timeline should include specific milestones, deadlines, and dependencies. The timeline should incorporate buffer time to accommodate unforeseen issues. Example: The migration should be completed within a 4-week timeframe, with specific deadlines for each phase.

- Resource Allocation: Identifying and allocating the necessary resources, including personnel, hardware, software, and budget, is essential. This includes assigning roles and responsibilities to team members. Consider: Allocating a dedicated team comprising a project manager, system administrators, database administrators, and network engineers.

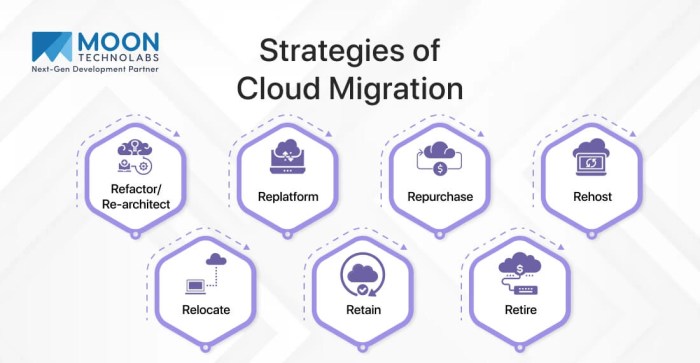

- Select Migration Strategy: Choosing the appropriate migration strategy is critical for minimizing downtime and ensuring data integrity. The strategy should be chosen based on the specific requirements of the environment. (See table below).

- Data Migration Strategy: Planning the specific data migration strategy, including data extraction, transformation, and loading (ETL) processes, is vital. This includes determining how data will be migrated and how potential data inconsistencies will be addressed. Consider: Using a database replication tool to synchronize data between the old and new environments.

- Testing and Validation: Developing a comprehensive testing plan is crucial for verifying the successful migration of applications and data. This includes functional testing, performance testing, and security testing. Consider: Performing thorough testing in a staging environment before migrating to production.

- Risk Assessment and Mitigation: Identifying potential risks and developing mitigation strategies is essential for minimizing the impact of unforeseen issues. This includes documenting potential risks, their likelihood, and the impact they could have on the migration. Consider: Having a rollback plan in place in case of major failures.

- Communication Plan: Establishing a communication plan is vital for keeping stakeholders informed throughout the migration process. This includes defining communication channels, frequency, and the information to be shared. Consider: Sending regular status updates to stakeholders.

Migration Strategies: Pros and Cons

Choosing the right migration strategy is crucial for minimizing downtime and ensuring a successful transition. Each strategy has its own advantages and disadvantages, and the best choice depends on the specific requirements of the environment.

| Migration Strategy | Pros | Cons |
|---|---|---|
| Big Bang | | |
| Phased | | |
| Parallel | | |
| Lift and Shift (Rehosting) | | |
| Replatforming | | |
| Refactoring | | |

Identifying Automatable Tasks in the Migration Process

Automating tasks within a migration process significantly reduces manual effort, minimizes human error, and accelerates the overall timeline. This section focuses on identifying common manual activities ripe for automation, the scripting languages suitable for streamlining these processes, and the tools and technologies that facilitate automated data transfer. Successful automation requires careful planning and a deep understanding of the migration environment to ensure a smooth and efficient transition.

Common Manual Tasks for Automation

Many steps in a migration process are repetitive and predictable, making them ideal candidates for automation. Automating these tasks frees up migration teams to focus on more complex challenges and strategic decision-making.

- Data Validation: Manual data validation is time-consuming and prone to errors. Automation can involve scripting to compare source and destination data, identify inconsistencies, and generate reports. This can include checking data types, format validation, and ensuring data integrity after the migration. For example, a script could compare the number of records in a database table before and after migration, highlighting discrepancies for further investigation.

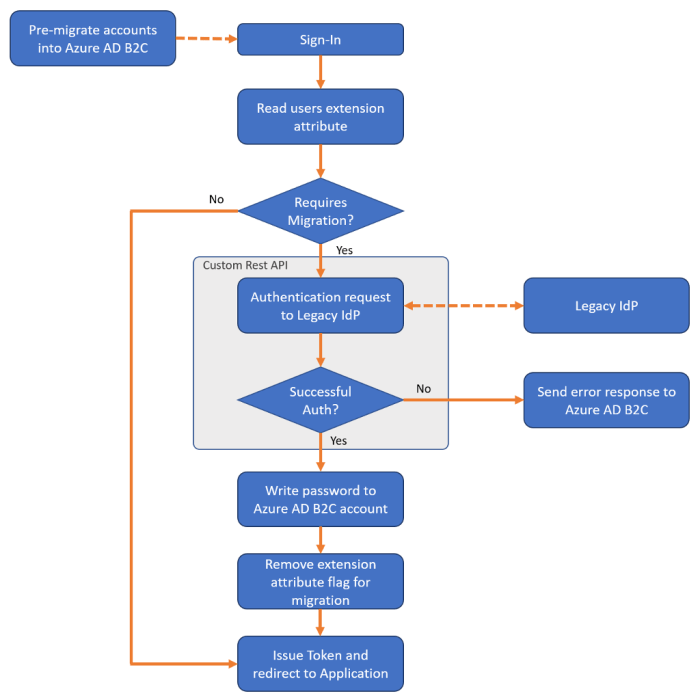

- User Provisioning and De-provisioning: Manually creating user accounts, assigning permissions, and managing access rights across different systems is a bottleneck. Automation allows for the rapid provisioning of users based on pre-defined templates or source system information. This can include creating accounts in Active Directory, assigning licenses in cloud services, and configuring access to shared resources. Automated de-provisioning is equally crucial, ensuring that user access is revoked when it is no longer required, thus enhancing security.

- Configuration Setup: Configuring servers, network devices, and applications manually is error-prone and time-intensive. Automation enables the repeatable and consistent deployment of configurations, reducing the risk of misconfigurations. This includes tasks such as setting up network interfaces, installing software, configuring security settings, and customizing application parameters. Configuration management tools like Ansible or Chef can be used to automate these tasks.

- Data Transformation: Migrating data often requires transforming it to fit the target system’s structure or format. Manual data transformation is extremely labor-intensive. Automation allows the execution of data transformations, such as changing data types, cleaning data, and mapping data fields between the source and destination systems. Scripting languages and ETL (Extract, Transform, Load) tools are commonly used for these purposes.

- Testing and Verification: Manually testing and verifying the migrated data and system functionality can be tedious and inefficient. Automated testing scripts can validate data accuracy, system performance, and application functionality after migration. This helps ensure that the migration has been successful and that the system is operating as expected.
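
The record-count comparison mentioned under data validation can be sketched as follows. This is a minimal illustration that assumes both databases are reachable through Python DB-API connections; `sqlite3` stands in for the real source and target systems, and the function names are hypothetical.

```python
import sqlite3


def table_row_counts(conn, tables):
    """Return {table_name: row_count} for the given tables."""
    counts = {}
    for table in tables:
        # Table names cannot be bound as SQL parameters, so only pass trusted names.
        counts[table] = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
    return counts


def compare_counts(source_conn, target_conn, tables):
    """Return {table: (source_count, target_count)} for tables whose counts differ."""
    source = table_row_counts(source_conn, tables)
    target = table_row_counts(target_conn, tables)
    return {t: (source[t], target[t]) for t in tables if source[t] != target[t]}
```

An empty result means every listed table has the same number of rows on both sides. Matching counts do not prove that the contents match, so a check like this is usually a first pass before column-level comparisons.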

Scripting Languages and Their Uses

Scripting languages are powerful tools for automating various migration tasks. Their flexibility and versatility allow for customized solutions tailored to specific migration needs.

- Python: Python is a versatile and widely used scripting language, well-suited for a broad range of automation tasks. Its extensive libraries, such as `pandas` for data manipulation, `requests` for API interactions, and `paramiko` for SSH connections, make it ideal for data transformation, data validation, and system administration tasks. For instance, a Python script can be used to extract data from a source database, transform it according to specific requirements, and load it into a target database. Another example is using Python to automate the creation of user accounts in a cloud environment by interacting with the cloud provider’s API.

- PowerShell: PowerShell is a scripting language primarily used for Windows system administration. It provides powerful cmdlets for managing Windows servers, Active Directory, and other Microsoft technologies. PowerShell can be used to automate tasks such as user provisioning, server configuration, and file transfer. For example, a PowerShell script can be used to create user accounts in Active Directory, assign permissions, and configure email settings. Another example is automating the deployment of software to multiple Windows servers.

- Bash: Bash is a Unix shell scripting language, suitable for automating tasks on Linux and macOS systems. It can be used for tasks such as file transfer, system configuration, and command execution. For example, a Bash script can be used to automate the backup and restore of files, or to configure network settings on a Linux server.

- JavaScript (Node.js): JavaScript, particularly with Node.js, is increasingly used for automating tasks, especially in cloud environments and for web-based applications. Node.js allows for creating scripts to interact with APIs, manage cloud resources, and perform data transformations. It can be used for tasks such as automating the deployment of web applications, managing cloud infrastructure, and integrating different systems during a migration.

Tools and Technologies for Automated Data Transfer

Selecting the right tools and technologies is crucial for successful automated data transfer. Several options offer various features and functionalities, catering to different migration requirements.

- rsync: rsync is a fast and versatile file transfer tool, suitable for synchronizing files and directories between two systems. Its key features include delta transfer (transferring only the changed parts of files) and compression. rsync does not encrypt data itself; transfers are encrypted when it runs over SSH. It is compatible with Linux, macOS, and Windows (using tools like Cygwin). rsync is suitable for scenarios involving large files or incremental data transfers.

- Robocopy: Robocopy (Robust File Copy) is a command-line utility for Windows, providing robust file copying capabilities. Key features include the ability to copy file permissions, attributes, and timestamps. It is compatible with Windows operating systems and is particularly useful for migrating files from one Windows server to another.

- SCP (Secure Copy): SCP is a secure file transfer protocol that uses SSH for data transfer. It is simple to use and provides secure file transfer between systems. Key features include encryption and authentication. It is compatible with Linux, macOS, and Windows (using tools like PuTTY). SCP is ideal for transferring files securely over a network.

- SFTP (SSH File Transfer Protocol): SFTP is a secure file transfer protocol that uses SSH for data transfer. It provides a more robust and feature-rich approach compared to SCP. Key features include support for resuming interrupted transfers and directory browsing. It is compatible with Linux, macOS, and Windows (using various SFTP clients). SFTP is suitable for secure file transfers with advanced features.

- AWS DataSync: AWS DataSync is a data transfer service provided by Amazon Web Services (AWS). It simplifies, automates, and accelerates moving data between on-premises storage and AWS storage services. Key features include automatic data transfer, bandwidth optimization, and encryption. It is compatible with various on-premises storage systems and AWS storage services like Amazon S3, Amazon EFS, and Amazon FSx.

- Azure Data Box: Azure Data Box is a physical device provided by Microsoft Azure for offline data transfer. It is suitable for transferring large datasets when network bandwidth is limited or unreliable. Key features include high storage capacity and data encryption. It is compatible with various on-premises storage systems and Azure storage services.

- Database Replication Tools (e.g., Oracle GoldenGate, SQL Server Replication): These tools are designed for replicating data between databases. They provide features such as real-time data replication, data transformation, and conflict resolution. They are compatible with specific database systems, such as Oracle and SQL Server, respectively. Database replication tools are suitable for migrating databases with minimal downtime.
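
As an illustration of driving one of these tools from a script, the sketch below builds an rsync command line in Python and leaves execution to a separate function. The host name and paths in the usage example are placeholders, and the function names are hypothetical; the rsync options used (`--archive`, `--partial`, `--compress`, `--dry-run`, `-e ssh`) are standard.

```python
import shlex
import subprocess


def build_rsync_command(source, destination, dry_run=True, compress=True):
    """Build an rsync command that copies `source` to `destination` over SSH."""
    command = ["rsync", "--archive", "--partial"]  # keep metadata and partial files
    if compress:
        command.append("--compress")
    if dry_run:
        command.append("--dry-run")  # report what would change without copying
    command += ["-e", "ssh", source, destination]
    return command


def run_transfer(command):
    """Run the command and raise CalledProcessError on a non-zero exit code."""
    return subprocess.run(command, check=True, capture_output=True, text=True)


if __name__ == "__main__":
    # Placeholder paths and host; review the dry-run command before a real transfer.
    print(shlex.join(build_rsync_command("/srv/data/", "migrate@new-host:/srv/data/")))
```

Defaulting to a dry run is a deliberate choice here: the script shows what would be transferred first, and the real copy requires passing `dry_run=False` explicitly.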

Automating Data Migration and Transformation

Automating data migration and transformation is crucial for minimizing manual effort, reducing errors, and accelerating the overall migration timeline. This section details the automation of data extraction, transformation, and loading (ETL) processes, data validation procedures, and conflict resolution strategies. The objective is to create a robust, automated system that ensures data integrity and minimizes downtime during the migration.

Automating Data Extraction, Transformation, and Loading (ETL)

The ETL process is a cornerstone of data migration, and automating it significantly improves efficiency. Several tools and methodologies facilitate this automation, each offering different strengths.

One common approach involves utilizing specialized ETL tools, which provide a graphical user interface (GUI) for designing and managing ETL workflows.

- Tool Selection: The choice of ETL tool depends on factors such as data volume, data complexity, and the existing IT infrastructure. Popular options include:

  - Informatica PowerCenter: Known for its robust features and scalability, suitable for large and complex data migrations.

  - Talend Data Integration: An open-source alternative, offering a wide range of connectors and transformation capabilities.

  - Microsoft SQL Server Integration Services (SSIS): Integrated with the Microsoft ecosystem, ideal for migrations within a Microsoft environment.

- Workflow Design: The ETL workflow is designed within the chosen tool. This involves defining data sources, specifying transformations, and configuring the loading process. For example, in Informatica, a developer would create mappings that define how data flows from source tables to target tables. Transformations such as data type conversions, cleansing, and aggregations are configured within these mappings.

- Data Extraction: Automated data extraction involves connecting to the source database (e.g., Oracle, MySQL, PostgreSQL) or data files (e.g., CSV, JSON) and retrieving the required data. The tool uses connectors or drivers to read data from various source systems. The efficiency of extraction is influenced by network bandwidth, database performance, and the number of concurrent connections.

- Data Transformation: This stage cleanses, transforms, and prepares the data for loading into the target system. Transformations can include:

  - Data type conversion (e.g., converting strings to numbers).

  - Data cleansing (e.g., removing duplicate records, handling missing values).

  - Data aggregation (e.g., calculating sums, averages).

  - Data enrichment (e.g., adding new columns based on lookup tables).

- Data Loading: The transformed data is loaded into the target database. This involves defining the target schema, specifying the loading method (e.g., bulk load, incremental load), and handling error conditions. Loading strategies often involve partitioning large datasets to improve performance.

- Scheduling and Monitoring: ETL workflows are scheduled to run automatically, often during off-peak hours to minimize impact on production systems. Monitoring tools track the execution of ETL jobs, providing alerts in case of failures or performance issues.

Another methodology involves scripting languages like Python or shell scripting, coupled with database-specific tools and libraries. This approach offers greater flexibility and control, especially for complex transformations.

Example: Using Python with the `pandas` library and a database connector (e.g., `psycopg2` for PostgreSQL):

```python
import pandas as pd
from sqlalchemy import create_engine

# Extraction from CSV
df = pd.read_csv('source_data.csv')

# Transformation (e.g., data cleaning): parse dates, then drop rows with missing values
df['date_column'] = pd.to_datetime(df['date_column'], errors='coerce')
df = df.dropna()

# Loading into PostgreSQL. DataFrame.to_sql expects a SQLAlchemy connectable
# (or a sqlite3 connection), so psycopg2 is used as the driver behind a
# SQLAlchemy engine. The credentials, host, and port below are placeholders.
engine = create_engine("postgresql+psycopg2://user:password@host:5432/target_db")
df.to_sql('target_table', engine, if_exists='replace', index=False)
engine.dispose()
```

This example illustrates a basic ETL process using Python, pandas, and psycopg2. The process extracts data from a CSV file, converts ‘date_column’ to a datetime type (unparseable values become missing), drops rows that contain missing values, and then loads the processed data into a PostgreSQL database. pandas simplifies data manipulation, while psycopg2 serves as the PostgreSQL driver behind a SQLAlchemy engine, which is the kind of connection that `to_sql` accepts for PostgreSQL.

Step-by-Step Procedure for Automating Data Validation

Data validation is a critical step in ensuring data integrity during migration. Automated validation processes proactively identify and correct errors.

- Defining Validation Rules: Before automation, establish comprehensive validation rules. These rules should cover:

  - Data Type Validation: Ensure data conforms to the expected data types (e.g., numeric, text, date).

  - Format Validation: Verify data formats (e.g., email addresses, phone numbers).

  - Range Validation: Check if data falls within acceptable ranges (e.g., age between 0 and 120).

  - Referential Integrity Checks: Verify relationships between tables (e.g., foreign key constraints).

  - Completeness Checks: Ensure required fields are not empty.

- Implementing Validation Scripts or Tools: Employ automated tools or scripts to enforce these rules.

  - ETL Tools: Many ETL tools provide built-in validation capabilities. Validation rules are defined within the ETL workflow and executed during the transformation process.

  - Database Constraints: Utilize database constraints (e.g., CHECK constraints, NOT NULL constraints) to enforce data integrity at the database level.

  - Custom Scripts: Develop custom scripts (e.g., using Python, SQL) to perform more complex validation checks.

- Automated Execution: Schedule validation processes to run automatically. Validation can be integrated into the ETL workflow or executed as a separate process.

- Error Handling and Reporting: Implement robust error handling and reporting mechanisms.

  - Error Logging: Log all validation errors, including the specific error, the data that caused the error, and the source of the data.

  - Error Notification: Configure automated notifications (e.g., email alerts) to notify relevant stakeholders of validation failures.

  - Data Correction: Design mechanisms for data correction, either through automated correction or manual intervention. Automated correction might involve default values or lookups. Manual intervention might involve flagging the data for review and correction by data stewards.

- Continuous Monitoring and Improvement: Regularly monitor the effectiveness of the validation process and refine the validation rules as needed. This involves reviewing error logs, analyzing the frequency and type of errors, and updating the validation rules to address new data quality issues.
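
The rule types and error logging described in this procedure can be sketched as a custom script. The example below is a minimal illustration using only the standard library; the field names (`customer_id`, `email`, `age`, `signup_date`), the date format, and the deliberately loose email pattern are assumptions chosen for the example.

```python
import logging
import re
from datetime import datetime

logger = logging.getLogger("migration.validation")

# Deliberately loose pattern; real email rules vary by system.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
REQUIRED_FIELDS = ("customer_id", "email", "age", "signup_date")


def is_missing(value):
    return value is None or value == ""


def validate_record(record):
    """Return a list of rule violations for one record (empty list if valid)."""
    errors = []
    # Completeness check: every required field must have a value.
    for field in REQUIRED_FIELDS:
        if is_missing(record.get(field)):
            errors.append(f"{field}: required value is missing")
    # Data type and range validation for age.
    age = record.get("age")
    if not is_missing(age):
        try:
            if not 0 <= int(age) <= 120:
                errors.append(f"age: {age} is outside the range 0-120")
        except (TypeError, ValueError):
            errors.append(f"age: {age!r} is not an integer")
    # Format validation for email and date.
    email = record.get("email")
    if not is_missing(email) and not EMAIL_PATTERN.match(str(email)):
        errors.append(f"email: {email!r} is not a valid address")
    signup_date = record.get("signup_date")
    if not is_missing(signup_date):
        try:
            datetime.strptime(str(signup_date), "%Y-%m-%d")
        except ValueError:
            errors.append(f"signup_date: {signup_date!r} is not in YYYY-MM-DD format")
    return errors


def find_orphans(child_keys, parent_keys):
    """Referential integrity check: child keys that have no matching parent key."""
    return sorted(set(child_keys) - set(parent_keys))


def validate_batch(records):
    """Validate all records, log each failure, and return {index: errors}."""
    failures = {}
    for index, record in enumerate(records):
        errors = validate_record(record)
        if errors:
            logger.warning("record %d failed validation: %s", index, errors)
            failures[index] = errors
    return failures
```

The dictionary returned by `validate_batch` can feed a notification step or a review queue, while the log entries provide the error record that the procedure calls for.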

Designing a System for Automatically Handling Data Conflicts and Inconsistencies

Data conflicts and inconsistencies are common during data migration. Designing an automated system to handle these issues is essential.

- Conflict Detection: Implement automated mechanisms to detect data conflicts.

  - Duplicate Record Detection: Identify duplicate records based on predefined criteria (e.g., unique identifiers, matching fields).

  - Data Comparison: Compare data values between the source and target systems to identify discrepancies. This can be done using SQL queries or data comparison tools.

  - Change Data Capture (CDC): Employ CDC techniques to track changes in the source data and identify conflicts that arise from concurrent updates.

- Conflict Resolution Strategies: Develop strategies for resolving detected conflicts.

  - Precedence Rules: Define rules to determine which data source takes precedence in case of conflicts (e.g., prioritize data from the source system for the first month, then switch to the target system).

  - Data Merging: Merge data from different sources, using rules to combine data fields.

  - Data Transformation: Transform data to resolve conflicts. This may involve data cleansing, standardization, or aggregation.

  - Manual Review: Flag conflicting data for manual review and resolution by data stewards.

- Automated Resolution: Automate the application of conflict resolution strategies.

  - ETL Transformations: Integrate conflict resolution logic into the ETL workflows.

  - Data Reconciliation Scripts: Develop scripts to automatically apply precedence rules or merge data.

  - Data Quality Services: Utilize data quality services to cleanse and standardize data.

- Workflow and Process:

  - Conflict Handling Workflow: Define a clear workflow for handling data conflicts, including detection, resolution, and reconciliation.

  - Error Logging and Auditing: Log all conflict resolutions, including the conflict type, the resolution strategy applied, and the data involved. This provides an audit trail for data changes.

  - Data Reconciliation: Reconcile the migrated data with the source data to ensure data consistency.

- Example of Conflict Resolution:

  - Scenario: Two source systems have different addresses for the same customer.

  - Resolution:

    - Precedence: Define a rule that the address from the more recent system (e.g., the system with the most recent update) takes precedence.

    - Transformation: If the address fields are slightly different (e.g., different abbreviations for “Street”), standardize the address using a data quality service.

    - Manual Review: Flag the customer record for manual review if the address differences are significant and require human judgment.
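
The address scenario above can be sketched in code. This is a simplified illustration, not a substitute for a data quality service: the abbreviation table, the record layout (`address`, `updated_at`), and the similarity threshold of 0.6 for routing records to manual review are all assumptions made for the example.

```python
from datetime import datetime
from difflib import SequenceMatcher

# Tiny, hypothetical abbreviation table standing in for a data quality service.
ABBREVIATIONS = {"st": "street", "st.": "street", "ave": "avenue",
                 "ave.": "avenue", "rd": "road", "rd.": "road"}
REVIEW_THRESHOLD = 0.6  # assumed cut-off; below this the addresses differ too much


def standardize_address(address):
    """Lowercase the address, drop commas, and expand known abbreviations."""
    words = address.lower().replace(",", " ").split()
    return " ".join(ABBREVIATIONS.get(word, word) for word in words)


def resolve_address(record_a, record_b):
    """Resolve two records of the form {"address": str, "updated_at": datetime}."""
    address_a = standardize_address(record_a["address"])
    address_b = standardize_address(record_b["address"])

    # Transformation: the conflict disappears once both values are standardized.
    if address_a == address_b:
        return {"address": address_a, "strategy": "standardized", "needs_review": False}

    # Manual review: very different addresses are not resolved automatically.
    similarity = SequenceMatcher(None, address_a, address_b).ratio()
    if similarity < REVIEW_THRESHOLD:
        return {"address": None, "strategy": "manual_review", "needs_review": True}

    # Precedence: otherwise the most recently updated record wins.
    newer_is_a = record_a["updated_at"] >= record_b["updated_at"]
    return {"address": address_a if newer_is_a else address_b,
            "strategy": "precedence", "needs_review": False}
```

Returning the strategy alongside the resolved value keeps the audit trail simple, since each result can be logged as it is produced.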

Automating Infrastructure Provisioning and Configuration

Automating infrastructure provisioning and configuration is crucial for a successful migration. It allows for rapid deployment of the target environment, reduces human error, and ensures consistency across all provisioned resources. This section details the process of automating these critical aspects of the migration strategy.

Automating Provisioning with Infrastructure as Code (IaC)

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through code, rather than manual processes. This approach allows for the automation of infrastructure deployment, version control, and repeatable configurations.

IaC tools follow either a declarative or an imperative approach. Declarative IaC, commonly used by tools like Terraform, focuses on defining the desired state of the infrastructure; the tool then determines the steps needed to reach that state. Imperative (procedural) IaC defines the specific steps to be executed to build the infrastructure. Ansible is often placed in this category because playbook tasks run in order, although most of its modules describe a desired state.

Here’s how IaC tools automate the provisioning of new servers or cloud instances:

- Defining Infrastructure as Code: The process begins by writing code that describes the desired infrastructure. This code defines the resources required, such as virtual machines, networks, storage, and security groups. This code is usually written in a human-readable format like YAML, JSON, or a domain-specific language (DSL).

- Version Control: IaC code is stored in a version control system, such as Git. This allows for tracking changes, collaborating with teams, and reverting to previous configurations if necessary.

- Automated Deployment: IaC tools automate the deployment of infrastructure based on the defined code. The tools interpret the code and interact with the cloud provider’s APIs or the server’s operating system to provision the resources.

- Repeatability and Consistency: IaC ensures that the infrastructure is provisioned consistently across different environments (e.g., development, testing, production). Each time the IaC code is executed, it produces the same infrastructure, which helps prevent configuration drift.

- Example: A Terraform configuration file might define a virtual machine instance on AWS, specifying the instance type, AMI (Amazon Machine Image), network settings, and security groups. When this configuration is applied, Terraform will automatically provision the specified VM instance.
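
The declarative idea can be illustrated without any particular tool. The sketch below is not how Terraform is implemented; it is a toy model, with hypothetical resource names and attributes, showing how a tool can compare a desired state against the current state and derive the changes to make.

```python
def plan_changes(desired, current):
    """Compare desired and current resources (dicts keyed by name) and return a plan."""
    plan = {"create": [], "update": [], "delete": [], "unchanged": []}
    for name, spec in desired.items():
        if name not in current:
            plan["create"].append(name)
        elif current[name] != spec:
            plan["update"].append(name)
        else:
            plan["unchanged"].append(name)
    for name in current:
        if name not in desired:
            plan["delete"].append(name)
    return plan


def apply_plan(plan, desired, current):
    """Return the new state after applying the plan to an in-memory model."""
    new_state = dict(current)
    for name in plan["create"] + plan["update"]:
        new_state[name] = desired[name]
    for name in plan["delete"]:
        del new_state[name]
    return new_state


if __name__ == "__main__":
    # Hypothetical resources; a real tool would call a cloud provider's API instead.
    desired = {"web-1": {"size": "medium"}, "db-1": {"size": "large"}}
    current = {"web-1": {"size": "small"}, "old-1": {"size": "small"}}
    print(plan_changes(desired, current))
```

Planning a second time against the state produced by `apply_plan` yields no further changes, which is the repeatability property described above.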

Automating Operating System, Application, and Network Configuration

Once the infrastructure is provisioned, the next step is to configure the operating systems, applications, and network settings. Automation tools streamline this process, reducing manual effort and ensuring consistency.

Configuration management tools are central to this process. These tools manage the state of the systems by ensuring the configuration is the desired one. They provide features for installing software, managing files, and executing commands on remote servers.

Here’s a guide to automating the configuration of operating systems, applications, and network settings:

- Configuration Management Tools: Utilize tools like Ansible, Chef, Puppet, or SaltStack to automate configuration tasks. These tools use agents or agentless approaches to manage configuration.

- Automated OS Configuration: Automate tasks like installing operating system packages, configuring users and groups, setting up network interfaces, and configuring security settings. This can be achieved using modules or playbooks within the configuration management tool.

- Application Deployment and Configuration: Automate the deployment of applications, including installing dependencies, configuring application settings, and deploying application code. The tools provide modules for managing application-specific tasks, such as database setup or web server configuration.

- Network Configuration: Automate network settings, such as configuring firewalls, setting up DNS, and configuring load balancers. The configuration management tools can interact with network devices and services through APIs or command-line interfaces.

- Idempotency: Configuration management tools are designed to be idempotent, meaning that running the same configuration multiple times will have the same result as running it once. This is important for ensuring consistency and avoiding unintended changes.

- Example: An Ansible playbook can be written to install a web server, configure its settings, and deploy a website. The playbook can be executed on multiple servers, ensuring consistent configuration across all instances.
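
Idempotency is easiest to see in a small example. The sketch below is not taken from any configuration management tool; it is a hypothetical helper, `ensure_line`, that makes sure a setting is present in a file and reports whether it had to change anything. The file name and setting in `demo` are placeholders.

```python
import os
import tempfile
from pathlib import Path


def ensure_line(path, line):
    """Make sure `line` is present in the file; return True if the file changed."""
    file_path = Path(path)
    existing = file_path.read_text().splitlines() if file_path.exists() else []
    if line in existing:
        return False  # already in the desired state, so do nothing
    existing.append(line)
    file_path.write_text("\n".join(existing) + "\n")
    return True


def demo():
    """Apply the same change twice to a temporary file and report the outcome."""
    with tempfile.TemporaryDirectory() as directory:
        target = os.path.join(directory, "app.conf")
        first = ensure_line(target, "max_connections = 100")
        second = ensure_line(target, "max_connections = 100")
        return first, second, Path(target).read_text()
```

The first call changes the file and the second leaves it untouched, so the configuration can be reapplied safely as many times as needed.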

Best Practices for Securing the Target Environment

Securing the target environment is paramount during and after the automated migration. Implementing security best practices from the outset minimizes vulnerabilities and protects sensitive data.

The principle of least privilege should be implemented throughout the migration process. This means granting users and systems only the minimum necessary permissions to perform their tasks. Regularly review and update access controls to maintain security.

- Network Security: Implement network segmentation to isolate different parts of the environment. Configure firewalls to restrict network traffic, and regularly monitor network activity for suspicious behavior.

- Access Control: Implement strong access controls, including multi-factor authentication (MFA), so that only authorized users can reach the environment.

- Data Encryption: Encrypt data at rest and in transit. Use encryption keys to protect sensitive data, and manage key rotation to maintain security.

- Vulnerability Scanning: Regularly scan the target environment for vulnerabilities. Utilize automated vulnerability scanning tools to identify and remediate potential security weaknesses.

- Security Auditing: Implement security auditing to track and monitor security-related events. This includes logging user activity, system events, and security incidents.

- Compliance and Governance: Ensure that the target environment complies with relevant security standards and regulations. Implement security policies and procedures to guide security practices.

- Regular Updates and Patching: Regularly update and patch the operating systems, applications, and security tools to address security vulnerabilities. Automate the patching process to ensure that patches are applied in a timely manner.
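As one way to automate the least-privilege review described in this section, the following Python sketch compares granted permissions against those actually required and reports the excess. The principal names and permission strings are hypothetical; in practice they would come from the target platform's access-control export.

```python
def excess_permissions(
    granted: dict[str, set[str]], required: dict[str, set[str]]
) -> dict[str, set[str]]:
    """Return, per principal, the permissions granted beyond what is required."""
    report = {}
    for principal, perms in granted.items():
        # A principal with no entry in `required` needs nothing, so every grant is excess.
        extra = perms - required.get(principal, set())
        if extra:
            report[principal] = extra
    return report


# Hypothetical inventory for illustration only.
granted = {"deploy-bot": {"read", "write", "admin"}, "report-job": {"read"}}
required = {"deploy-bot": {"read", "write"}, "report-job": {"read"}}
print(excess_permissions(granted, required))  # prints "{'deploy-bot': {'admin'}}"
```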

Automating Application Migration

Automating application migration is crucial for reducing downtime, minimizing errors, and ensuring a smooth transition to a new environment. This involves streamlining the process of moving applications, including their code, databases, and configurations, while maintaining functionality and data integrity. The core objective is to replace manual, error-prone steps with repeatable, automated processes, thereby improving efficiency and reliability.

Automating Application Migration Processes

The automation of application migration encompasses several key areas. These include code deployment, database setup, and application configuration. Automation tools and scripts are employed to execute these tasks consistently and efficiently.

- Automated Code Deployment: This involves using tools such as Jenkins, GitLab CI, or Azure DevOps to automatically build, test, and deploy application code to the target environment. The process typically includes:

- Fetching the code from a version control system (e.g., Git).

- Building the application (compiling, packaging).

- Running automated tests.

- Deploying the built artifact to the target servers or cloud infrastructure.

This approach eliminates manual intervention, reducing the risk of human error and accelerating the deployment cycle. For example, consider a web application built with Java. The deployment pipeline might involve using Maven to build a WAR file, followed by deployment to a Tomcat server using a tool like Ansible.
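The fetch, build, test, deploy sequence above can be sketched as an ordered list of stages that stops at the first failure. This is an illustrative Python sketch, not the configuration format of Jenkins, GitLab CI, or Azure DevOps; the stage callables are placeholders for real commands.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order; raise on the first failure so later stages never run."""
    completed = []
    for name, action in stages:
        if not action():
            raise RuntimeError(f"stage '{name}' failed; later stages were skipped")
        completed.append(name)
    return completed


# Placeholder stages: each would normally invoke git, a build tool, a test runner, etc.
stages = [
    ("fetch", lambda: True),
    ("build", lambda: True),
    ("test", lambda: True),
    ("deploy", lambda: True),
]
print(run_pipeline(stages))  # prints "['fetch', 'build', 'test', 'deploy']"
```

Failing fast matters here: a deployment stage should never run against an artifact whose tests did not pass.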

- Automated Database Setup: Database migrations are often the most complex part of application migration. Automation tools help manage schema changes, data transfer, and database configuration. Common techniques include:

- Using database migration tools like Flyway or Liquibase to manage schema changes. These tools allow developers to define database schema changes in a version-controlled manner and apply them automatically to the target database.

- Employing tools like AWS Database Migration Service (DMS) or Azure Database Migration Service to replicate data from the source database to the target database. These services handle the complexities of data transfer, including schema conversion and data synchronization.

- Scripting database configuration tasks, such as creating users, setting permissions, and configuring connection parameters.

These automated steps ensure that the database is correctly set up in the new environment.
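The version-controlled schema changes described above follow a simple pattern: record which versions have been applied, then apply only the pending ones in order. The sketch below illustrates that pattern with Python's built-in `sqlite3`; it is not Flyway's or Liquibase's actual implementation, and the `schema_version` table name and the sample migrations are assumptions.

```python
import sqlite3

# Hypothetical, ordered schema changes kept under version control.
MIGRATIONS = [
    (1, "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"),
    (2, "ALTER TABLE users ADD COLUMN display_name TEXT"),
]


def apply_migrations(conn: sqlite3.Connection, migrations) -> list[int]:
    """Apply pending migrations in version order; return the versions applied now."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_version")}
    newly_applied = []
    for version, statement in sorted(migrations):
        if version in applied:
            continue  # already applied on an earlier run
        conn.execute(statement)
        conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
        conn.commit()
        newly_applied.append(version)
    return newly_applied


conn = sqlite3.connect(":memory:")
print(apply_migrations(conn, MIGRATIONS))  # prints "[1, 2]"
print(apply_migrations(conn, MIGRATIONS))  # prints "[]"
```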

- Automated Application Configuration: Application configuration involves setting up environment-specific parameters, such as database connection strings, API keys, and other settings. Automation tools simplify this process.

- Using configuration management tools like Ansible, Chef, or Puppet to manage application configuration files. These tools allow administrators to define configuration states and automatically apply them to the target servers.

- Supplying sensitive values, such as credentials and API keys, through environment variables or access-restricted configuration files rather than hard-coding them in the application.

- Leveraging infrastructure-as-code (IaC) tools like Terraform or CloudFormation to provision and configure the infrastructure on which the application runs, including servers, networks, and storage.

Automating application configuration ensures that the application is properly configured in the new environment.
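As a small illustration of environment-specific configuration, the sketch below loads settings from environment variables and fails early if a required one is missing. The variable names (`APP_DATABASE_URL`, `APP_API_KEY`, `APP_DEBUG`) and the `AppConfig` class are hypothetical.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    api_key: str
    debug: bool


def load_config(env=None) -> AppConfig:
    """Build the configuration from environment variables, failing fast on gaps."""
    env = os.environ if env is None else env
    missing = [key for key in ("APP_DATABASE_URL", "APP_API_KEY") if key not in env]
    if missing:
        raise KeyError(f"missing required settings: {', '.join(missing)}")
    return AppConfig(
        database_url=env["APP_DATABASE_URL"],
        api_key=env["APP_API_KEY"],
        debug=env.get("APP_DEBUG", "false").lower() == "true",
    )
```

Passing a plain dictionary as `env` makes the loader easy to test without touching the real process environment.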

Automated Application Testing Approaches

Automated testing is critical for validating application functionality before and after migration. Different approaches are employed to ensure the application behaves as expected.

- Automated Testing Before Migration: Before migrating, thorough testing is crucial to establish a baseline of functionality and identify any existing issues.

- Unit Testing: Testing individual components or modules of the application in isolation. This is typically done using frameworks like JUnit (Java), pytest (Python), or Mocha (JavaScript).

- Integration Testing: Testing the interaction between different components or modules of the application. This ensures that the different parts of the application work together correctly.

- System Testing: Testing the entire application as a whole to ensure that it meets the functional and non-functional requirements. This involves testing the application in an environment that closely resembles the production environment.

- Performance Testing: Assessing the application’s performance under different load conditions. This helps to identify potential performance bottlenecks and ensure that the application can handle the expected traffic.

This pre-migration testing provides a benchmark against which the post-migration application can be compared.

- Automated Testing After Migration: After migration, testing is essential to verify that the application has been successfully migrated and that it functions correctly in the new environment.

- Regression Testing: Re-running the existing test suite to ensure that the migration has not introduced any new bugs or regressions.

- End-to-End Testing: Testing the entire application flow from the user’s perspective. This ensures that the application is working as expected from start to finish.

- User Acceptance Testing (UAT): Involving end-users in the testing process to ensure that the application meets their requirements and expectations. Repeatable UAT scenarios can be scripted with browser automation tools like Selenium or Cypress, while exploratory checks remain with the end-users themselves.

- Monitoring and Observability: Setting up monitoring and observability tools to track the application’s performance and behavior in the new environment. This provides insights into any potential issues that may arise after the migration.

This post-migration testing confirms that the application is functioning as intended in the new environment.

- Comparison of Approaches: The main benefit of testing before migration is to establish a known-good state. Testing after migration verifies the migrated application. Both are essential and should be used in conjunction. Unit tests are useful for testing individual components, while integration and end-to-end tests validate the application as a whole. The choice of testing approach depends on the specific application and migration requirements.
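The pre-migration baseline becomes useful once it is compared mechanically with the post-migration run. A minimal sketch, assuming each run is summarized as a mapping from test name to pass/fail (the test names below are hypothetical):

```python
def find_regressions(
    baseline: dict[str, bool], after: dict[str, bool]
) -> dict[str, list[str]]:
    """Compare post-migration results with the pre-migration baseline."""
    # A regression is a test that passed before the migration and fails after it.
    regressions = sorted(
        name for name, passed in baseline.items() if passed and after.get(name) is False
    )
    # Tests that did not run at all after migration are reported separately.
    missing = sorted(name for name in baseline if name not in after)
    return {"regressions": regressions, "missing": missing}


baseline = {"login": True, "export": True, "legacy_report": False, "search": True}
after = {"login": True, "export": False, "legacy_report": False}
print(find_regressions(baseline, after))
# prints "{'regressions': ['export'], 'missing': ['search']}"
```

Tests that already failed before the migration (`legacy_report` here) are deliberately not counted as regressions, which is the reason for establishing the known-good state first.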

Automated Script Examples for Application Migration

Automated scripts are vital for streamlining the migration process. Here are examples for different types of applications.

- Web Application Migration (Example: Python/Django):

The script below outlines a simplified example using Ansible.

```yaml
---
- hosts: target_servers
  become: yes
  tasks:
    - name: Install Python and pip
      apt:
        name:
          - python3
          - python3-pip
        state: present
        update_cache: yes

    - name: Install Django and dependencies
      pip:
        name:
          - django
          - psycopg2  # Example: PostgreSQL adapter
        state: present

    - name: Deploy the application code
      git:
        repo: "{{ git_repo_url }}"
        dest: /opt/my_django_app
        version: "{{ git_branch }}"

    - name: Install Python requirements
      pip:
        requirements: /opt/my_django_app/requirements.txt
        virtualenv: /opt/my_django_app/venv

    - name: Migrate database schema
      django_manage:
        app_path: /opt/my_django_app
        command: migrate

    - name: Restart the web server (e.g., Gunicorn)
      systemd:
        name: gunicorn
        state: restarted
```

This Ansible playbook installs Python and pip, installs Django and its PostgreSQL adapter, deploys the Django application code from a Git repository, installs the packages listed in `requirements.txt`, migrates the database schema, and restarts the web server. Variables like `git_repo_url` and `git_branch` allow for dynamic configuration.

- Database Application Migration (Example: MySQL):

A bash script example using `mysqldump` and `mysql`:

```bash
#!/bin/bash

# Source Database Details
SOURCE_DB_HOST="source_db_host"
SOURCE_DB_USER="source_db_user"
SOURCE_DB_PASSWORD="source_db_password"
SOURCE_DB_NAME="source_db_name"

# Target Database Details
TARGET_DB_HOST="target_db_host"
TARGET_DB_USER="target_db_user"
TARGET_DB_PASSWORD="target_db_password"
TARGET_DB_NAME="target_db_name"

# Dump the source database
mysqldump -h "$SOURCE_DB_HOST" -u "$SOURCE_DB_USER" -p"$SOURCE_DB_PASSWORD" "$SOURCE_DB_NAME" > db_dump.sql

# Restore the database to the target
mysql -h "$TARGET_DB_HOST" -u "$TARGET_DB_USER" -p"$TARGET_DB_PASSWORD" "$TARGET_DB_NAME" < db_dump.sql

echo "Database migration completed."
```

This script uses `mysqldump` to create a backup of the source database and then uses the `mysql` command to restore the database to the target. The script can be extended to include checks for database availability and error handling.
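One possible shape for the availability check mentioned above is a bounded retry loop around a connectivity probe. The Python sketch below is an assumption about how such a check might look, not part of the original script; `port_is_open` and `wait_until` are hypothetical helper names, and 3306 is simply MySQL's default port.

```python
import socket
import time


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_until(check, attempts: int = 5, delay: float = 1.0) -> bool:
    """Call `check` up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if check():
            return True
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

A migration wrapper could call `wait_until(lambda: port_is_open("target_db_host", 3306))` and abort before running the dump if it returns `False`.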

Automating User and Access Management

Automating user and access management is crucial for a seamless and secure migration process. This involves migrating user accounts, permissions, and access controls to the new environment while ensuring business continuity and minimizing downtime. Automation reduces the risk of human error, accelerates the migration timeline, and provides a consistent approach to access governance across the organization.

Migrating User Accounts, Permissions, and Access Controls

The automated migration of user accounts, permissions, and access controls typically involves several key steps. These steps are essential for preserving user access rights and maintaining the integrity of the security posture during the transition.

- Account Discovery and Mapping: This initial phase involves identifying all existing user accounts and their associated attributes (usernames, email addresses, group memberships, etc.) in the source environment. A mapping strategy is then defined to translate these attributes to the target environment, accounting for any differences in naming conventions or organizational structures. Automated tools often utilize connectors to various directory services (like Active Directory or LDAP) and cloud identity providers (like Okta or Azure Active Directory) to extract this information.

- Permission and Role Definition: Existing permissions and roles assigned to users are analyzed and mapped to their equivalents in the target environment. This process considers the different access control models (e.g., role-based access control, attribute-based access control) that might be employed. Automated scripts or tools often facilitate the translation of these permissions, ensuring users retain appropriate access to resources in the new system.

- Account Provisioning: Once the mapping is complete, the automated system provisions the user accounts in the target environment. This includes creating user accounts, setting initial passwords (often temporary), and assigning the appropriate roles and permissions. The provisioning process typically interacts with the target directory service or identity provider through APIs or dedicated connectors.

- Data Synchronization: In some cases, user profile data (e.g., contact information, department assignments) needs to be synchronized between the source and target environments. Automated solutions can synchronize data, often on a scheduled basis, ensuring data consistency during and after the migration. This synchronization might involve tools like directory synchronization services or custom scripts that utilize APIs.

- Access Verification and Testing: After account provisioning, it's crucial to verify that users can access the resources they need. This typically involves automated testing scripts that simulate user logins and test access to applications and data. This step helps to identify and resolve any access-related issues before the migration is fully completed.
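The mapping step above can be sketched as a pure function from a source account to a target account. Everything concrete here is hypothetical: the group names, the role names, and the choice to lower-case usernames and email addresses are illustrative assumptions rather than requirements of any directory service.

```python
def map_account(source: dict, group_to_role: dict[str, str]) -> dict:
    """Translate a source account into the target environment's conventions."""
    groups = source["groups"]
    roles = sorted({group_to_role[g] for g in groups if g in group_to_role})
    # Groups without a known equivalent are surfaced for manual review, not dropped.
    unmapped = sorted(g for g in groups if g not in group_to_role)
    return {
        "username": source["username"].lower(),
        "email": source["email"].lower(),
        "roles": roles,
        "unmapped_groups": unmapped,
    }


group_to_role = {"Domain Admins": "platform-admin", "Finance": "finance-viewer"}
account = {
    "username": "JDoe",
    "email": "JDoe@Example.com",
    "groups": ["Domain Admins", "Finance", "Legacy-App"],
}
print(map_account(account, group_to_role)["unmapped_groups"])  # prints "['Legacy-App']"
```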

Automating Password Resets and User Notification

Automating password resets and user notifications is a critical component of user experience during a migration. It minimizes disruption and ensures that users can quickly regain access to their accounts in the new environment.

- Password Reset Automation: Automated systems can facilitate password resets during or after the migration. This may involve generating temporary passwords, sending password reset links, or integrating with self-service password reset portals. The process should be secure, compliant with security policies, and user-friendly.

- Notification of Migration: Users should be informed about the migration well in advance, including details about the new system, any changes to their accounts, and instructions on how to access the new environment. Automated email notifications, often triggered by the provisioning system, can be used to communicate this information.

- Password Expiration Policies: If the migration involves a change in password policies (e.g., complexity requirements, expiration frequency), these changes should be communicated to users. The automated system should enforce these new policies, ensuring compliance with security standards.

- Post-Migration Support: Provide clear instructions for users to follow, including troubleshooting steps and contact information for support. The automated notification system can include links to FAQs, help desk resources, and support channels.
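A minimal sketch of the two automated pieces above, generating a temporary password and composing the user notification, might look like this. It uses Python's `secrets` module for cryptographically strong randomness; the message wording, the reset URL, and the function names are assumptions for illustration, and the temporary password is deliberately kept out of the message body.

```python
import secrets
import string


def temporary_password(length: int = 16) -> str:
    """Generate a random password containing lower-case, upper-case, and digit characters."""
    if length < 3:
        raise ValueError("length must be at least 3 to include every character class")
    alphabet = string.ascii_letters + string.digits
    while True:
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
        ):
            return candidate


def build_notification(username: str, reset_url: str) -> str:
    """Compose the migration notice; the link, not the password, goes in the message."""
    return (
        f"Hello {username},\n\n"
        "Your account has been migrated to the new environment.\n"
        f"Please set a new password here: {reset_url}\n"
    )
```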

Comparing Identity and Access Management (IAM) Solutions

The selection of an appropriate Identity and Access Management (IAM) solution is a key decision when automating user and access management during a migration. Different solutions offer varying levels of automation capabilities, features, and integrations.

| IAM Solution | Automation Capabilities | Key Features | Pros | Cons |
|---|---|---|---|---|
| Active Directory (AD) | | | | |
| Azure Active Directory (Azure AD) | | | | |
| Okta | | | | |
| OneLogin | | | | |
| Ping Identity | | | | |

Automating Testing and Validation

Automating testing and validation is a critical component of a successful migration process. It provides assurance that data integrity is maintained and that migrated applications function as expected. This automated approach reduces manual effort, minimizes the risk of human error, and accelerates the validation phase, allowing for faster identification and resolution of issues.

Automating Testing Procedures for Data Integrity and Application Functionality

Automating testing procedures involves creating and executing tests that systematically verify the integrity of migrated data and the functionality of applications post-migration. This process typically involves several key steps, from defining test cases to analyzing results.

The process begins with defining clear and specific test cases. These cases should cover a range of scenarios, including:

- Data Validation Tests: These tests verify the accuracy and completeness of the migrated data. They often involve comparing data sets between the source and target environments, ensuring that all required data fields are present, and that data types and formats are consistent. For instance, a test might compare the number of records in a database table before and after migration, or check that specific data values match.

- Functional Tests: These tests evaluate the application's behavior after migration. They ensure that key functionalities, such as user logins, data processing, and report generation, work as expected. Functional tests often involve simulating user interactions with the application and verifying the outcomes.

- Performance Tests: Performance tests assess the application's responsiveness and efficiency in the new environment. They measure metrics like response times, transaction throughput, and resource utilization under various load conditions.

- Integration Tests: These tests ensure that different components of the application, or the application and its dependencies, work together seamlessly after migration. They verify the correct flow of data and interactions between various modules.

- Security Tests: These tests evaluate the security posture of the migrated application. They ensure that security configurations, access controls, and data encryption are correctly implemented and that the application is protected against potential vulnerabilities.

Once test cases are defined, they are automated using appropriate testing tools and frameworks. The automation process involves writing scripts that execute the test cases and record the results. These scripts can be triggered automatically, typically as part of the migration pipeline.

After the tests are executed, the results are analyzed to identify any failures or discrepancies. The analysis involves reviewing test logs, error messages, and performance metrics. Any identified issues are documented and reported for remediation.

Finally, the testing process is repeated iteratively throughout the migration process. This continuous testing approach ensures that any issues introduced during the migration are quickly identified and resolved, minimizing the risk of disruption.
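The data validation tests described above, comparing record counts and specific values between source and target, can be sketched with a row count plus a content checksum. The example uses in-memory SQLite databases as stand-ins for the real source and target; the function names are hypothetical, and the table and key column names are assumed to come from a trusted list because SQL identifiers cannot be passed as bound parameters.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key_column: str):
    """Return (row_count, sha256_of_rows) for a table, reading rows in key order."""
    rows = conn.execute(f'SELECT * FROM "{table}" ORDER BY "{key_column}"').fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def tables_match(source, target, table: str, key_column: str) -> bool:
    """True when both databases hold the same rows for `table`."""
    return table_fingerprint(source, table, key_column) == table_fingerprint(
        target, table, key_column
    )
```

Ordering by the key column makes the comparison independent of the order in which rows happened to be inserted during the migration. For very large tables, a per-chunk checksum would likely be preferable to fetching every row at once.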

Designing a Process for Automatically Generating Reports on Migration Progress and Identifying Potential Issues

Automated reporting is essential for providing real-time insights into the migration process, enabling proactive issue identification and timely intervention. This process typically involves collecting data from various sources and generating reports in a structured format.

Data collection forms the foundation of automated reporting. It involves gathering data from several sources, including:

- Test Execution Logs: These logs contain detailed information about the execution of automated tests, including test results, error messages, and performance metrics.

- Migration Logs: Migration logs record the progress of the migration process, including the status of data transfers, infrastructure provisioning, and application deployments.

- Monitoring Systems: Monitoring systems provide real-time data on the performance and health of the migrated applications and infrastructure.

- Configuration Management Tools: These tools provide data on the configuration of the migrated systems.

Once the data is collected, it is processed and aggregated to generate reports. This process typically involves:

- Data Aggregation: Data from different sources is combined and consolidated into a unified dataset.

- Data Analysis: The aggregated data is analyzed to identify trends, anomalies, and potential issues.

- Report Generation: Reports are generated in a predefined format, such as dashboards, charts, and tables.

Automated reporting systems typically generate several types of reports:

- Progress Reports: These reports provide an overview of the migration progress, including the percentage of completed tasks, the number of issues identified, and the overall status of the migration.

- Test Results Reports: These reports summarize the results of automated tests, including the number of passed, failed, and skipped tests. They also provide detailed information about any test failures, including error messages and stack traces.

- Performance Reports: These reports track the performance of the migrated applications and infrastructure, including response times, throughput, and resource utilization.

- Issue Tracking Reports: These reports track the status of identified issues, including the severity, the assigned owner, and the resolution progress.

The reporting process is typically automated using scripting languages (like Python) or specialized reporting tools. These tools can automatically generate and distribute reports to relevant stakeholders, such as project managers, developers, and operations teams. Reports are often integrated into dashboards for real-time monitoring of the migration process.
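As a sketch of the aggregation step behind a test results report, the function below condenses raw test records into the counts and pass rate such a report would show. The record layout (`name` and `status` keys) is an assumption about how test execution logs might be parsed.

```python
from collections import Counter


def summarize(results: list[dict]) -> dict:
    """Aggregate raw test records into the figures for a test results report."""
    counts = Counter(record["status"] for record in results)
    total = len(results)
    return {
        "total": total,
        "passed": counts["passed"],
        "failed": counts["failed"],
        "skipped": counts["skipped"],
        "pass_rate": round(100 * counts["passed"] / total, 1) if total else 0.0,
        "failed_tests": [r["name"] for r in results if r["status"] == "failed"],
    }


# Hypothetical records, e.g. parsed from test execution logs.
results = [
    {"name": "row_count_orders", "status": "passed"},
    {"name": "login_flow", "status": "passed"},
    {"name": "report_export", "status": "failed"},
    {"name": "checksum_customers", "status": "passed"},
]
print(summarize(results)["pass_rate"])  # prints "75.0"
```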

Examples of Automated Testing Frameworks and Their Implementation in a Migration Context

Several automated testing frameworks can be used to validate data integrity and application functionality during a migration. The choice of framework depends on the specific requirements of the migration project, the technologies involved, and the existing testing infrastructure.

Here are some examples of automated testing frameworks and their implementation in a migration context:

- Selenium: Selenium is a widely used framework for automating web browser interactions. In a migration context, Selenium can be used to test the functionality of web applications after migration. Test scripts can simulate user interactions, such as clicking buttons, entering data, and navigating between pages. Selenium can verify that web applications are functioning correctly in the new environment, ensuring that the user interface is working as expected and that data is being displayed and processed correctly.

- JUnit/TestNG: JUnit and TestNG are popular Java testing frameworks for unit and integration testing. In a migration context, these frameworks can be used to test the functionality of Java-based applications. Test scripts can be written to test individual components, modules, and classes. JUnit/TestNG can be used to verify that the core business logic of the application is working correctly after migration.

- Appium: Appium is an open-source framework for automating mobile application testing. In a migration context, Appium can be used to test the functionality of mobile applications after migration. Test scripts can be written to simulate user interactions on mobile devices, such as tapping buttons, swiping screens, and entering text. Appium can verify that mobile applications are functioning correctly in the new environment, ensuring that the user interface is working as expected and that data is being displayed and processed correctly.

- Robot Framework: Robot Framework is a generic, open-source automation framework for acceptance testing and acceptance test-driven development. It supports testing of different types of applications and platforms. In a migration context, Robot Framework can be used to test a variety of application types, including web, mobile, and desktop applications. It uses a keyword-driven approach, which makes it easy to write and maintain test scripts.

- Database Testing Tools: Tools like DBUnit or custom SQL scripts can be used to validate data integrity in databases. These tools can compare data between the source and target databases, ensuring that data has been migrated accurately. They can verify data types, constraints, and relationships. For example, a test script might compare the number of records in a table, the values of specific fields, or the results of complex queries.

- Performance Testing Tools (e.g., JMeter, Gatling): These tools are used to assess the performance of migrated applications under load. JMeter and Gatling allow simulating a large number of concurrent users to measure response times, throughput, and resource utilization. They can identify performance bottlenecks and ensure that the migrated application can handle the expected workload.

Implementation of these frameworks involves:

- Framework Selection: Choosing the appropriate framework based on the application type, technology stack, and testing requirements.

- Test Script Development: Writing test scripts that define the test cases and the expected behavior of the application.

- Test Execution: Running the test scripts and collecting the results.

- Result Analysis: Analyzing the test results to identify any issues or failures.

- Issue Resolution: Investigating and resolving any identified issues.

- Continuous Integration/Continuous Deployment (CI/CD) Integration: Integrating automated tests into the CI/CD pipeline to ensure that tests are run automatically after each code change or deployment.

For example, a migration project involving a web application could use Selenium to automate functional tests. These tests would simulate user interactions with the application, such as logging in, navigating to different pages, and submitting forms. The test results would be analyzed to ensure that the application functions correctly in the new environment. Similarly, in a database migration, DBUnit can be used to compare data between the source and target databases to verify data integrity.
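To show what a unit-level business logic check looks like in practice, here is a sketch using Python's built-in `unittest`, standing in for the JUnit/TestNG style described above. The function `invoice_total` and its expected values are hypothetical; in a real migration the expected figures would be captured from the source system before cutover.

```python
import unittest


def invoice_total(line_items, tax_rate):
    """Hypothetical business rule: sum of quantity * unit price, plus tax, rounded to cents."""
    subtotal = sum(quantity * unit_price for quantity, unit_price in line_items)
    return round(subtotal * (1 + tax_rate), 2)


class InvoiceTotalAfterMigration(unittest.TestCase):
    def test_known_invoice_matches_pre_migration_value(self):
        # 2 * 10.00 + 1 * 5.50 = 25.50, plus 20% tax = 30.60
        self.assertAlmostEqual(invoice_total([(2, 10.0), (1, 5.5)], 0.2), 30.6, places=2)

    def test_empty_invoice_is_zero(self):
        self.assertEqual(invoice_total([], 0.2), 0)
```

Saved as a module, the tests can be run with `python -m unittest`, which makes them straightforward to hook into a CI/CD pipeline.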

Automating Monitoring and Alerting

Automating monitoring and alerting is crucial for a successful migration, ensuring the health and performance of migrated systems are continuously tracked and any issues are promptly addressed. This proactive approach minimizes downtime, improves troubleshooting efficiency, and provides valuable insights into the post-migration environment. Automating these processes reduces manual effort, allowing administrators to focus on more strategic tasks.

Setting Up Automated Monitoring Tools

Automating the setup of monitoring tools is a fundamental step in ensuring the operational integrity of migrated systems. This involves configuring the tools to collect relevant metrics, define performance thresholds, and establish data storage mechanisms. The automation process typically leverages scripting and configuration management tools.

- Tool Selection and Configuration: The initial step involves selecting appropriate monitoring tools based on the system requirements and the existing infrastructure. Popular choices include Prometheus, Grafana, Nagios, Zabbix, and Datadog. Configuration management tools like Ansible, Chef, or Puppet can then be used to automate the deployment and configuration of these tools across the migrated infrastructure. This includes defining the monitoring agents' installation, configuration files, and data collection intervals.

- Metric Definition and Collection: Defining the relevant metrics is essential. These metrics should cover key aspects of system performance, including CPU utilization, memory usage, disk I/O, network traffic, and application-specific metrics such as response times and error rates. Automation scripts can be used to automatically discover and configure the collection of these metrics based on the type of system being monitored (e.g., a web server, a database server).

- Data Storage and Retention Policies: Configuring data storage and retention policies is crucial for long-term analysis and troubleshooting. Monitoring tools often store data in time-series databases. Automation scripts can be used to configure the database settings, including the data retention period, the data compression algorithms, and the backup strategies. For example, a script might configure Prometheus to store metrics for 30 days and automatically back up the data every week.

- Integration with Notification Systems: Integrating the monitoring tools with notification systems ensures that administrators are promptly alerted to critical events. Automation can be used to configure integrations with email, SMS, and messaging platforms like Slack or Microsoft Teams. For instance, a script can be written to automatically configure alerts in Prometheus to send notifications to a designated Slack channel when CPU usage exceeds 90% for more than 5 minutes.

Creating Automated Alerts

Creating automated alerts is a critical component of proactive system management. Automated alerts enable timely detection and resolution of issues, minimizing the impact on users and business operations. The process involves defining alert rules, configuring notification channels, and testing the alerts to ensure their effectiveness.

- Alert Rule Definition: Alert rules are the core of automated alerting. These rules define the conditions under which an alert should be triggered. They are based on the metrics collected by the monitoring tools and typically involve threshold-based logic, rate-of-change detection, or anomaly detection. For example, an alert rule might trigger an alert if the response time of a web application exceeds 2 seconds.

- Notification Channel Configuration: Once the alert rules are defined, the notification channels need to be configured. This involves specifying the destinations for the alerts, such as email addresses, SMS numbers, or messaging platform channels. The configuration should also include the alert severity levels (e.g., critical, warning, informational) and the escalation paths for each level.

- Testing and Validation: Thoroughly testing and validating the alerts is crucial to ensure they function correctly and provide accurate information. This involves simulating various failure scenarios to trigger the alerts and verifying that the notifications are sent to the correct destinations. For example, a test might involve artificially increasing the CPU load on a server to trigger a CPU utilization alert.

- Alert Automation Examples:

- High CPU Utilization: If the CPU utilization on a server consistently exceeds 90% for more than 5 minutes, an alert is triggered. The notification would include the server name, the CPU utilization percentage, and a timestamp.

- Disk Space Exhaustion: When the disk space on a server reaches 90% capacity, an alert is triggered. The notification would include the server name, the disk partition, and the percentage of disk space used.

- Application Error Rate Increase: If the error rate of a web application suddenly increases above a predefined threshold, an alert is triggered. The notification would include the application name, the error rate, and a timestamp.
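The "threshold held for a duration" logic in the CPU example can be sketched as follows. This is an illustrative evaluation over timestamped samples, not the rule syntax of Prometheus or any other monitoring tool; it fires once the value has stayed above the threshold for at least the given number of seconds.

```python
def sustained_breach(samples, threshold: float, duration_s: float) -> bool:
    """Return True if values stay above `threshold` for at least `duration_s` seconds.

    `samples` is a list of (timestamp_seconds, value) pairs sorted by time.
    """
    breach_start = None
    for timestamp, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = timestamp  # first sample of a new breach window
            if timestamp - breach_start >= duration_s:
                return True
        else:
            breach_start = None  # any dip below the threshold resets the window
    return False


# CPU at 95% sampled every 60 seconds for 5 minutes: the alert fires.
steady = [(t, 95.0) for t in range(0, 301, 60)]
print(sustained_breach(steady, threshold=90.0, duration_s=300))  # prints "True"
```

Requiring a sustained breach rather than a single sample avoids alerting on short spikes.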

Visualizing System Status with Dashboards

Visualizing the status of migrated systems through dashboards provides a comprehensive overview of their performance and health. Dashboards consolidate key metrics into an easily understandable format, allowing administrators to quickly identify issues and trends.

- Dashboard Components and Descriptions:

- Overview Panel: Displays a summary of key metrics such as CPU utilization, memory usage, disk I/O, and network traffic across all monitored systems. This panel provides a high-level view of the overall system health.

- Application Performance Panel: Shows metrics related to application performance, such as response times, error rates, and transaction volumes. This panel is crucial for monitoring the end-user experience.

- Database Performance Panel: Presents metrics related to database performance, such as query execution times, connection pool usage, and transaction throughput. This panel is essential for identifying database bottlenecks.

- Network Performance Panel: Displays metrics related to network traffic, such as bandwidth usage, latency, and packet loss. This panel helps identify network-related issues.

- Log Analysis Panel: Integrates log data and provides visualizations of error rates, event counts, and log trends. This panel facilitates quick identification of issues and troubleshooting.

- Dashboard Tools and Technologies: Popular tools for creating dashboards include Grafana, Kibana, and Datadog. These tools allow users to create customizable dashboards with various chart types, such as line graphs, bar charts, and heatmaps.

- Example: A Grafana Dashboard for a Web Application. A Grafana dashboard might include the following components:

- A line graph showing the average response time of the web application over time.

- A bar chart displaying the number of HTTP requests per minute.

- A gauge showing the error rate of the application.

- A table listing the top 10 slowest requests.
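The figures behind such panels are simple aggregations over request data. The sketch below computes an average response time, an error rate, and a slowest-requests table from a list of request records; it does not use Grafana's API, and the record fields (`path`, `duration_ms`, `status`) and the choice to count HTTP 5xx responses as errors are assumptions.

```python
from statistics import mean


def dashboard_panels(requests: list[dict], top_n: int = 10) -> dict:
    """Compute the values a response-time, error-rate, and slowest-requests panel would show."""
    if not requests:
        return {"avg_response_ms": None, "error_rate": None, "slowest": []}
    durations = [r["duration_ms"] for r in requests]
    errors = sum(1 for r in requests if r["status"] >= 500)
    slowest = sorted(requests, key=lambda r: r["duration_ms"], reverse=True)[:top_n]
    return {
        "avg_response_ms": mean(durations),
        "error_rate": errors / len(requests),
        "slowest": [(r["path"], r["duration_ms"]) for r in slowest],
    }
```

In a real setup these aggregations would usually be expressed as queries against the metrics store, with the dashboard tool handling the rendering.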

Best Practices and Considerations for Automation

Automation, while offering significant advantages in migration processes, necessitates careful planning and execution to avoid potential setbacks and ensure a smooth transition. This section focuses on best practices, addressing common pitfalls, tool selection strategies, and crucial security and compliance considerations. These elements are essential for realizing the full benefits of automation while mitigating risks.

Identifying Common Pitfalls to Avoid

Successful automation hinges on anticipating and circumventing potential obstacles. Failing to address these issues can lead to project delays, increased costs, and operational disruptions. Understanding these pitfalls is crucial for proactive mitigation.

- Lack of Proper Planning and Scope Definition: Inadequate planning is a primary cause of automation failure. Without a clearly defined scope, objectives, and a detailed migration strategy, automation efforts are likely to be misdirected and inefficient. A well-defined plan should include timelines, resource allocation, and success metrics.

- Underestimating Complexity: Migration projects are inherently complex, involving various systems, data formats, and dependencies. Underestimating this complexity can lead to unrealistic expectations and insufficient resources. It's essential to thoroughly assess the existing environment and anticipate potential challenges.

- Poor Tool Selection: Choosing the wrong automation tools can hinder the entire process. Selecting tools that are incompatible with the existing infrastructure, lack necessary features, or have poor integration capabilities can result in significant delays and rework.

- Ignoring Security and Compliance: Failing to integrate security and compliance considerations into the automation process can expose sensitive data to risks. Ignoring these aspects can lead to data breaches, regulatory violations, and reputational damage.

- Insufficient Testing and Validation: Automation without rigorous testing and validation can result in data loss, system downtime, and operational failures. Thorough testing at each stage of the migration process is critical to ensure data integrity and system functionality.

- Lack of Documentation and Knowledge Transfer: Insufficient documentation and knowledge transfer can create dependencies on specific individuals and hinder long-term maintenance and support. Comprehensive documentation and training are essential for ensuring the sustainability of the automated migration process.

- Over-Automation: Automating every aspect of the migration process may not always be the most efficient or cost-effective approach. Over-automation can lead to increased complexity, unnecessary costs, and potential risks. A balanced approach, focusing on automating the most repetitive and error-prone tasks, is often preferable.

Selecting the Right Automation Tools

Choosing the appropriate automation tools is a critical determinant of migration success. The selection process should be guided by specific migration requirements, existing infrastructure, and organizational capabilities.

- Assess Migration Needs: Begin by thoroughly analyzing the migration requirements. Identify the specific tasks that need to be automated, the data types involved, and the target environment. Consider factors such as the size and complexity of the data, the desired level of automation, and the available resources.

- Evaluate Tool Features: Research and compare available automation tools, focusing on their features and capabilities. Consider factors such as:

- Data Migration Capabilities: The tool's ability to handle different data formats, perform data transformation, and ensure data integrity.

- Infrastructure Provisioning: The tool's support for provisioning and configuring the target infrastructure.

- Application Migration Support: The tool's ability to migrate applications, including their dependencies and configurations.

- Testing and Validation: The tool's features for testing and validating the migrated data and applications.

- Security Features: The tool's security features, such as encryption, access control, and audit logging.

- Integration Capabilities: The tool's ability to integrate with existing systems and tools.

- Consider Vendor Reputation and Support: Evaluate the vendor's reputation, track record, and level of support. Consider factors such as the vendor's experience, the availability of documentation and training, and the responsiveness of their support team.

- Prioritize Scalability and Flexibility: Select tools that are scalable and flexible to accommodate future growth and changes in requirements. The chosen tools should be able to handle increasing data volumes, evolving infrastructure, and changing business needs.

- Assess Cost and Licensing: Evaluate the total cost of ownership, including licensing fees, implementation costs, and ongoing maintenance expenses. Consider the long-term cost implications and ensure that the tool's benefits justify its cost.

- Pilot Projects and Proof of Concept: Before committing to a specific tool, conduct pilot projects or proof-of-concept exercises to evaluate its performance and suitability. This allows for hands-on testing and validation of the tool's capabilities in a real-world environment.
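One way to make the feature comparison above concrete is a weighted scoring matrix. The sketch below is illustrative only: the criteria names mirror the list above, but the weights, the 1-to-5 ratings, and the tool names `tool_a` and `tool_b` are hypothetical values that a team would replace with its own.

```python
# Hypothetical weights in percent; they should sum to 100.
CRITERIA_WEIGHTS = {
    "data_migration": 25,
    "infrastructure_provisioning": 15,
    "application_migration": 15,
    "testing_validation": 15,
    "security": 20,
    "integration": 10,
}


def weighted_score(ratings, weights=CRITERIA_WEIGHTS):
    """Return the weighted average of 1-5 ratings for one tool."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[c] * ratings[c] for c in weights) / sum(weights.values())


def rank_tools(candidates):
    """Return (tool, score) pairs sorted from best to worst."""
    scored = [(name, weighted_score(r)) for name, r in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical ratings gathered during evaluation (1 = poor, 5 = excellent).
candidates = {
    "tool_a": {"data_migration": 5, "infrastructure_provisioning": 3,
               "application_migration": 3, "testing_validation": 4,
               "security": 4, "integration": 2},
    "tool_b": {"data_migration": 3, "infrastructure_provisioning": 5,
               "application_migration": 4, "testing_validation": 3,
               "security": 3, "integration": 5},
}
ranking = rank_tools(candidates)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

A score like this is a starting point for discussion rather than a decision; cost, vendor support, and pilot results still need to be weighed separately.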

Ensuring Security and Compliance of Automated Migration Processes

Maintaining the security and compliance of automated migration processes is paramount. It involves implementing robust security measures and adhering to relevant regulatory requirements throughout the entire migration lifecycle.

- Implement Strong Access Controls: Restrict access to automation tools and data to authorized personnel only. Utilize role-based access control (RBAC) to ensure that users have only the necessary permissions to perform their tasks. Implement multi-factor authentication (MFA) to further enhance security.

- Encrypt Data in Transit and at Rest: Protect sensitive data by encrypting it both during transit and while it is stored. Use industry-standard protocols and algorithms, such as TLS for data in transit and AES-256 for data at rest. Implement key management practices to store, rotate, and restrict access to encryption keys.

- Regularly Audit and Monitor Automation Activities: Implement comprehensive audit logging to track all automation activities. Regularly review audit logs to identify and address any security incidents or compliance violations. Implement real-time monitoring to detect and respond to security threats promptly.

- Comply with Relevant Regulations and Standards: Ensure that the automated migration process complies with all relevant regulations and industry standards, such as GDPR, HIPAA, and PCI DSS. Implement appropriate security controls to meet these requirements.

- Secure the Automation Tools Themselves: Regularly update automation tools with the latest security patches and vulnerability fixes. Secure the infrastructure where the automation tools are deployed. Implement security best practices for the tool's configuration and usage.

- Implement Data Loss Prevention (DLP) Measures: Implement DLP measures to prevent sensitive data from leaving the organization's control. This may include data classification, data masking, and policies that block or flag unauthorized transfers.

- Conduct Regular Security Assessments and Penetration Testing: Conduct regular security assessments and penetration testing to identify vulnerabilities and weaknesses in the automated migration process. Address any identified vulnerabilities promptly.

- Establish a Robust Incident Response Plan: Develop and maintain a comprehensive incident response plan to address any security incidents or data breaches. The plan should include procedures for detection, containment, eradication, recovery, and post-incident analysis.
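Two of the controls above, role-based access control and audit logging, can be combined in a small wrapper around migration actions. This is a minimal sketch under stated assumptions: the roles, the permission names, the `perform` function, and the in-memory `AUDIT_TRAIL` list are all hypothetical, and a real deployment would rely on the identity provider and log storage already in use.

```python
import json
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("migration.audit")

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "migration_operator": {"run_migration", "view_logs"},
    "auditor": {"view_logs"},
}

# In-memory copy of audit entries, kept only for this illustration.
AUDIT_TRAIL = []


def is_allowed(role, action):
    """Return True if the role grants the requested action."""
    return action in ROLE_PERMISSIONS.get(role, set())


def perform(user, role, action):
    """Record every attempt, then reject it if the role lacks permission."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": is_allowed(role, action),
    }
    # Log before enforcing, so denied attempts are also auditable.
    AUDIT_TRAIL.append(entry)
    audit_log.info(json.dumps(entry))
    if not entry["allowed"]:
        raise PermissionError(f"{user} ({role}) may not {action}")
    return entry


perform("alice", "migration_operator", "run_migration")
try:
    perform("bob", "auditor", "run_migration")
except PermissionError as exc:
    print(f"denied: {exc}")
```

Writing the audit entry before the permission check is deliberate: failed attempts are often the most useful entries when reviewing logs for incidents.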

Concluding Thoughts

In summary, automating the migration process helps organizations maintain agility and minimize downtime. This guide has outlined the importance of careful planning, tool selection, and automated testing and monitoring. By applying these principles, IT professionals can reduce the risks associated with migrations, resulting in smoother transitions and improved operational efficiency.

FAQ Overview

What are the primary benefits of automating the migration process?

Automation reduces human error, accelerates migration timelines, improves consistency, and allows for better resource allocation, ultimately lowering costs and increasing efficiency.

What types of migration strategies are best suited for automation?

Automation is applicable across various strategies, including big bang, phased, and parallel migrations. The best approach depends on factors like downtime tolerance, application dependencies, and data volume.

How can I ensure data integrity during automated migration?

Implement robust data validation procedures, including checksum verification, data type checking, and conflict resolution mechanisms. Automated testing is also crucial to ensure the data's integrity.
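As an illustration of checksum verification, the sketch below hashes each row on both sides and compares the results by primary key. It assumes rows are available as tuples with the key in the first position; the function names and the sample data are hypothetical.

```python
import hashlib
import json


def row_checksum(row):
    """Hash a row via a canonical JSON form so None and '' stay distinct."""
    canonical = json.dumps(list(row), default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_tables(source_rows, target_rows, key_index=0):
    """Report keys that are missing, unexpected, or changed in the target."""
    source = {row[key_index]: row_checksum(row) for row in source_rows}
    target = {row[key_index]: row_checksum(row) for row in target_rows}
    shared = source.keys() & target.keys()
    return {
        "missing": sorted(source.keys() - target.keys()),
        "unexpected": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Hypothetical sample data: (id, name, email)
source_rows = [
    (1, "alice", "alice@example.com"),
    (2, "bob", "bob@example.com"),
    (3, "carol", "carol@example.com"),
]
target_rows = [
    (1, "alice", "alice@example.com"),
    (2, "bob", "changed@example.com"),
    (4, "dave", "dave@example.com"),
]
report = compare_tables(source_rows, target_rows)
print(report)
```

For large tables, the same idea is usually applied per batch or per partition rather than loading every row into memory at once.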

What are the key considerations for securing the target environment during migration?

Employ strong access controls, encrypt sensitive data, and adhere to security best practices for Infrastructure as Code (IaC) configurations. Regularly audit security settings and monitor for vulnerabilities.