The serverless computing paradigm, while offering significant benefits in terms of scalability and cost-efficiency, often presents a significant hurdle: vendor lock-in. This challenge arises because each serverless provider (AWS, Azure, Google Cloud, etc.) offers unique services, APIs, and configurations, making it difficult to seamlessly migrate or deploy applications across platforms. This guide delves into the intricacies of achieving portability between serverless providers, offering a structured approach to navigate the complexities of cross-platform deployment.

We will explore the core challenges, examine abstraction layers and frameworks, consider code and runtime best practices, and delve into infrastructure-as-code strategies. The discussion extends to event triggers, data source portability, testing, debugging, monitoring, logging, security considerations, and cost optimization. This comprehensive analysis provides a roadmap for developers seeking to maximize flexibility and avoid the constraints of a single vendor ecosystem, ensuring a more resilient and adaptable serverless architecture.

Understanding Serverless Portability Challenges

The ambition of serverless portability – the ability to seamlessly migrate functions and applications across different cloud providers – is significantly hampered by a complex interplay of vendor-specific implementations and architectural divergences. Achieving true portability requires a deep understanding of these challenges and a strategic approach to mitigating their impact.

Core Challenges of Serverless Portability

The core challenges stem from the inherent differences in how serverless platforms operate, manage resources, and integrate with other services. These differences introduce complexities that necessitate significant code modifications or architectural overhauls when migrating applications.

- Vendor Lock-in: This arises from the reliance on proprietary services, APIs, and tooling offered by individual providers. These lock-in mechanisms can be difficult to abstract, making it challenging to switch providers without rewriting substantial portions of the application. For example, using AWS Lambda-specific IAM roles and policies tightly couples the function with the AWS ecosystem.

- Event Trigger Variations: Serverless functions are typically triggered by events. The specific event types, their configurations, and how they are managed vary across providers. Migrating a function that is triggered by an AWS S3 bucket event to Google Cloud Storage requires understanding and adapting to Google Cloud Storage’s event notification mechanisms.

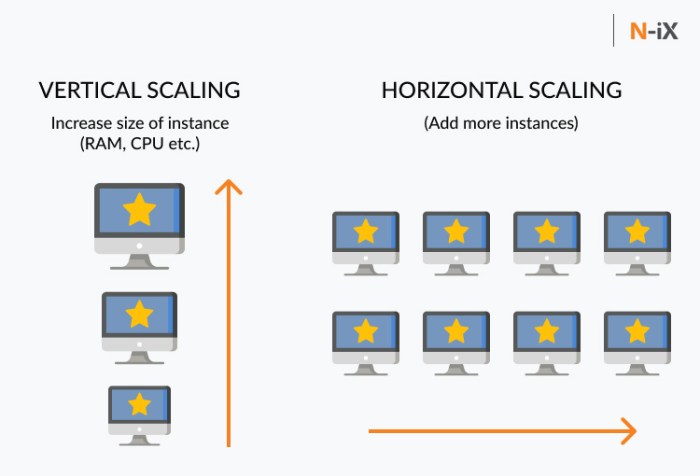

- Resource Management Differences: Each provider has unique ways of managing resources such as memory, compute time, and storage. These differences can lead to performance discrepancies and require careful optimization during migration. For instance, a function optimized for AWS Lambda’s cold start behavior might perform poorly on Azure Functions if not adapted.

- API Gateway Implementations: API Gateway implementations vary considerably. Differences in authentication, authorization, rate limiting, and request/response handling can require significant code adjustments when migrating a serverless API from one provider to another.

- Monitoring and Logging: Monitoring and logging services are provider-specific. Migrating an application involves replacing the existing monitoring and logging infrastructure with the target provider’s equivalents. This often necessitates code changes to accommodate different logging formats and metrics.

Common Vendor Lock-in Scenarios

Vendor lock-in manifests in several common scenarios that hinder serverless portability. These scenarios often involve the use of provider-specific features or services that are not easily replicated on other platforms.

- Proprietary SDKs and Libraries: The use of vendor-specific SDKs and libraries for interacting with other cloud services introduces strong dependencies. For example, an application heavily reliant on AWS SDK for DynamoDB will require code adjustments to interact with Google Cloud’s Cloud Datastore.

- Custom Extensions and Plugins: The use of custom extensions or plugins designed specifically for a particular provider creates dependencies that are difficult to port. These extensions might not have equivalents on other platforms or require significant re-engineering.

- IAM Role and Policy Dependencies: Serverless functions often rely on specific IAM roles and policies to access other cloud resources. The differences in IAM implementations across providers necessitate careful adaptation of these roles and policies during migration.

- Serverless Database Integrations: The seamless integration with serverless databases is often a key selling point of serverless platforms. Using provider-specific database offerings, such as AWS DynamoDB, can create significant lock-in.

- Managed Service Integrations: The integration with other managed services like message queues (e.g., AWS SQS, Google Cloud Pub/Sub, Azure Service Bus) creates vendor lock-in. Functions that depend on these services must be adapted to the equivalent services of the target provider.

Differences in Event Triggers Across Serverless Platforms

Event triggers are the mechanisms that initiate the execution of serverless functions. The specific event types, their configuration options, and their overall behavior vary across the major serverless platforms, leading to significant portability challenges.

- HTTP Triggers: All major platforms support HTTP triggers, but the configuration options and underlying mechanisms differ. For example, AWS API Gateway offers a range of features, including request transformation and authentication, that might require different approaches on Azure Functions or Google Cloud Functions.

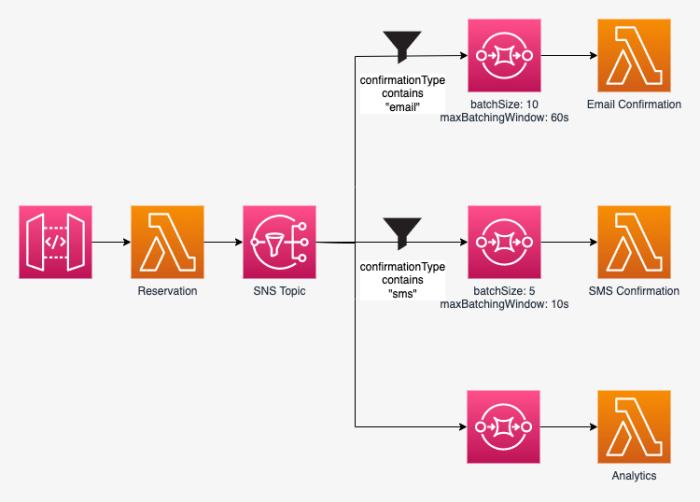

- Queue Triggers: Queue triggers allow functions to be initiated by messages in a queue. The specific queue services (e.g., AWS SQS, Azure Queue Storage, Google Cloud Pub/Sub) and their associated configuration options vary. Adapting a function triggered by AWS SQS to Azure Queue Storage involves modifying the code to interact with Azure’s API and adapting to its message format.

- Database Triggers: Database triggers enable functions to respond to changes in a database. The specific databases supported and the event notification mechanisms differ across providers. For example, triggering a function based on changes in an AWS DynamoDB table requires a different configuration than triggering on Google Cloud Firestore changes.

- Storage Bucket Triggers: These triggers activate functions based on events in object storage services. The supported event types (e.g., object creation, deletion), and the notification mechanisms (e.g., event notifications) differ. Migrating a function triggered by an AWS S3 object creation event to Google Cloud Storage requires understanding Google Cloud Storage’s event notification mechanisms and adapting the code accordingly.

- Scheduled Triggers: Scheduled triggers allow functions to run at a predefined time or interval. The configuration options for scheduling, such as cron expressions and time zones, vary across providers.

Abstraction Layers and Frameworks for Portability

Serverless portability necessitates the ability to deploy and manage functions across different cloud providers with minimal code changes. Abstraction layers and serverless frameworks play a crucial role in achieving this by providing a unified interface that hides the underlying complexities of each provider’s infrastructure. This approach promotes vendor independence and reduces the effort required to switch or deploy applications across multiple platforms.

Role of Abstraction Layers in Serverless Portability

Abstraction layers provide a critical mechanism for serverless portability by isolating the application code from the specific implementation details of each cloud provider. They act as intermediaries, translating provider-agnostic function definitions and configurations into the provider-specific formats required for deployment and execution.

- Standardized Interface: Abstraction layers define a consistent API for developers to interact with serverless functions, regardless of the underlying provider. This allows developers to write code that is portable across different platforms.

- Provider-Specific Implementation: The abstraction layer handles the provider-specific details, such as deployment packages, function configurations, and event triggers. This shields developers from the complexities of each provider’s infrastructure.

- Configuration Management: Abstraction layers provide a unified way to manage function configurations, including environment variables, memory allocation, and timeouts. This simplifies the process of configuring functions across different providers.

- Deployment Automation: Abstraction layers often include tools for automating the deployment process, such as packaging code, uploading artifacts, and configuring function triggers. This reduces the manual effort required to deploy and manage serverless functions.

- Vendor Lock-in Mitigation: By abstracting away provider-specific details, abstraction layers reduce vendor lock-in, making it easier to switch providers or deploy applications across multiple platforms.

Comparison of Open-Source Serverless Frameworks and Their Portability Features

Several open-source serverless frameworks aim to simplify the development and deployment of serverless applications, offering varying degrees of portability. Understanding their strengths and limitations is crucial for selecting the right tool for a specific project.

The following table compares the portability features of several popular open-source serverless frameworks. The comparison considers aspects such as provider support, templating capabilities, and the level of abstraction provided.

| Framework | Provider Support | Templating Language | Portability Features | Limitations |

|---|---|---|---|---|

| Serverless Framework | AWS, Azure, Google Cloud, Cloudflare, etc. (via plugins) | YAML, JSON | Extensive plugin ecosystem for provider support; good support for multi-provider deployments. | Portability relies heavily on plugins, which may vary in quality and maintenance. |

| AWS SAM (Serverless Application Model) | AWS | YAML, JSON | Native support for AWS services; leverages CloudFormation for infrastructure provisioning. | Limited to AWS; less portable than other frameworks. |

| Azure Functions Core Tools | Azure | JSON, YAML (via ARM templates) | Native support for Azure Functions; local development and debugging tools. | Primarily focused on Azure; limited portability features. |

| Google Cloud Functions Framework | Google Cloud | Configuration via code (e.g., Python, Node.js) | Focuses on local development and testing; provides an abstraction layer for Google Cloud Functions. | Limited portability; primarily focused on Google Cloud. |

Design of a Hypothetical Abstraction Layer and Its Key Components for Function Deployment

A well-designed abstraction layer for serverless portability should provide a consistent interface for function definition, deployment, and management, while handling the provider-specific nuances.

The following components constitute a hypothetical abstraction layer for function deployment across providers:

- Function Definition Language (FDL): This component defines a standardized language (e.g., YAML, JSON, or a custom DSL) for describing serverless functions. It specifies function metadata such as the runtime environment (e.g., Python, Node.js), memory allocation, timeout settings, and event triggers (e.g., HTTP requests, scheduled events, message queue events). The FDL should be provider-agnostic, allowing developers to define functions without specifying a particular cloud provider.

- Provider Adapters: Provider adapters are the core of the abstraction layer. Each adapter is responsible for translating the FDL into the specific format required by a particular cloud provider. This includes creating deployment packages, configuring function settings, and setting up event triggers. Adapters encapsulate all the provider-specific details, shielding the application code from these complexities. For example, an AWS adapter would use CloudFormation or SAM templates, while an Azure adapter would utilize Azure Resource Manager templates.

- Deployment Engine: The deployment engine orchestrates the deployment process. It parses the FDL, selects the appropriate provider adapter, and executes the deployment steps. The engine also handles error handling, logging, and rollback mechanisms. It might also include features for managing dependencies and versioning.

- Configuration Management: This component provides a unified interface for managing function configurations, such as environment variables, secrets, and resource allocations. It allows developers to configure functions in a provider-agnostic manner, and the configuration is then translated by the provider adapters into the provider-specific format.

- Monitoring and Logging Interface: The abstraction layer provides a standardized interface for monitoring and logging function execution. This interface should allow developers to view logs and metrics from all providers in a consistent format. Provider adapters would translate the standardized logs and metrics into the format required by each provider’s monitoring and logging services.

Code and Runtime Considerations

Achieving portability across serverless platforms necessitates careful attention to code design and runtime environment management. This involves adopting strategies that minimize dependencies on provider-specific features and ensure consistent execution across different infrastructures. Successful portability hinges on creating functions that are as platform-agnostic as possible, along with robust dependency management practices.

Code-Level Portability Techniques

Code-level portability is paramount for serverless function migration. It centers on writing functions that are independent of specific provider APIs and services. Several techniques facilitate this, including the use of platform-agnostic libraries and the avoidance of vendor lock-in through provider-specific SDKs.

- Utilizing Platform-Agnostic Libraries: Choosing libraries that offer abstractions over underlying platform-specific functionalities is crucial. These libraries provide a consistent interface, shielding the code from the intricacies of each provider’s implementation. For example, libraries that abstract database interactions or queuing services can significantly enhance portability.

- Avoiding Provider-Specific SDKs: Directly integrating provider-specific SDKs can introduce vendor lock-in. While these SDKs often provide convenient access to platform features, they tightly couple the code to a single provider. Instead, consider using wrapper libraries or implementing a layer of abstraction that interacts with the provider’s services through a generic interface.

- Employing Abstraction Layers: Creating abstraction layers is a powerful strategy. This involves defining a set of interfaces or APIs that represent the services required by the function. The actual implementation of these interfaces is then handled by platform-specific adapters. This approach isolates the core business logic from the underlying infrastructure, making it easier to switch providers.

- Adhering to Standardized Protocols: Favoring standard protocols, such as HTTP for API interactions or MQTT for messaging, can enhance portability. These protocols are widely supported across different platforms, minimizing the need for platform-specific code.

Best Practices for Managing Dependencies

Managing dependencies effectively is crucial for maintaining consistent runtime environments across serverless platforms. Inconsistencies in dependencies can lead to unexpected behavior and portability issues. Implementing best practices ensures that the same dependencies are available and configured correctly on each platform.

- Dependency Management Tools: Leverage dependency management tools specific to the programming language being used (e.g., npm for Node.js, pip for Python, Maven or Gradle for Java). These tools allow specifying and managing project dependencies in a declarative manner.

- Lock Files: Utilize lock files (e.g., `package-lock.json` in Node.js, `requirements.txt` with `pip` in Python, `pom.xml` or `build.gradle` in Java) to pin down specific versions of dependencies. This ensures that the exact same versions are used across different environments, preventing version-related conflicts.

- Containerization: Employing containerization technologies like Docker provides a consistent environment that bundles the application code, dependencies, and runtime environment. This approach eliminates the “it works on my machine” problem, ensuring consistent behavior across different platforms.

- Versioning and Testing: Rigorously version dependencies and thoroughly test functions across various platforms. This allows identifying and addressing compatibility issues early in the development lifecycle.

Code Snippets Demonstrating Portable Function Implementation

The following code snippets illustrate the implementation of a portable function across Python, Node.js, and Java. The examples demonstrate how to create a simple function that retrieves a message from an environment variable. These examples emphasize the use of built-in functions and avoid provider-specific SDKs.

Python

The Python example uses the `os` module to access environment variables. This module is part of the Python standard library and does not depend on any external libraries or provider-specific SDKs, making it highly portable.

import os def handler(event, context): message = os.environ.get("MESSAGE", "Hello from a portable function!") return "statusCode": 200, "body": message Node.js

The Node.js example uses the `process.env` object to access environment variables. This object is provided by the Node.js runtime and is a standard way to access environment variables without requiring any external dependencies.

exports.handler = async (event) => const message = process.env.MESSAGE || "Hello from a portable function!"; const response = statusCode: 200, body: message, ; return response; ;

Java

The Java example uses the `System.getenv()` method to retrieve environment variables. This method is part of the Java standard library and does not require any external libraries or provider-specific SDKs, ensuring portability.

import com.amazonaws.services.lambda.runtime.Context; import com.amazonaws.services.lambda.runtime.RequestHandler; public class HelloHandler implements RequestHandler @Override public String handleRequest(Object event, Context context) String message = System.getenv("MESSAGE"); if (message == null || message.isEmpty()) message = "Hello from a portable function!"; return message; Infrastructure as Code (IaC) for Cross-Platform Deployment

Infrastructure as Code (IaC) represents a paradigm shift in cloud infrastructure management, allowing for the automated provisioning and management of resources through code.

This approach significantly enhances portability by enabling the definition of infrastructure in a declarative manner, making it provider-agnostic and repeatable across different serverless platforms. IaC tools facilitate consistent deployments, reduce human error, and streamline the management of complex serverless applications.

IaC Tools and Cross-Provider Deployment

IaC tools are instrumental in achieving cross-platform serverless deployments. They abstract away the provider-specific nuances, allowing developers to define infrastructure in a unified language. This abstraction simplifies the process of deploying applications across different cloud providers.

- Terraform: Terraform, developed by HashiCorp, is a widely adopted IaC tool that supports a vast array of cloud providers. Its declarative configuration language allows users to define infrastructure resources in a human-readable format (HCL – HashiCorp Configuration Language). Terraform then translates this configuration into provider-specific API calls to create and manage the infrastructure. Terraform’s state management capabilities ensure that infrastructure changes are applied correctly and efficiently.

- Pulumi: Pulumi is another IaC tool that supports multiple cloud providers. Unlike Terraform, Pulumi allows users to define infrastructure using general-purpose programming languages like Python, JavaScript, TypeScript, Go, and .NET. This approach enables developers to leverage their existing programming skills and integrate infrastructure management into their application development workflows more seamlessly. Pulumi also provides a rich set of libraries and features for managing infrastructure.

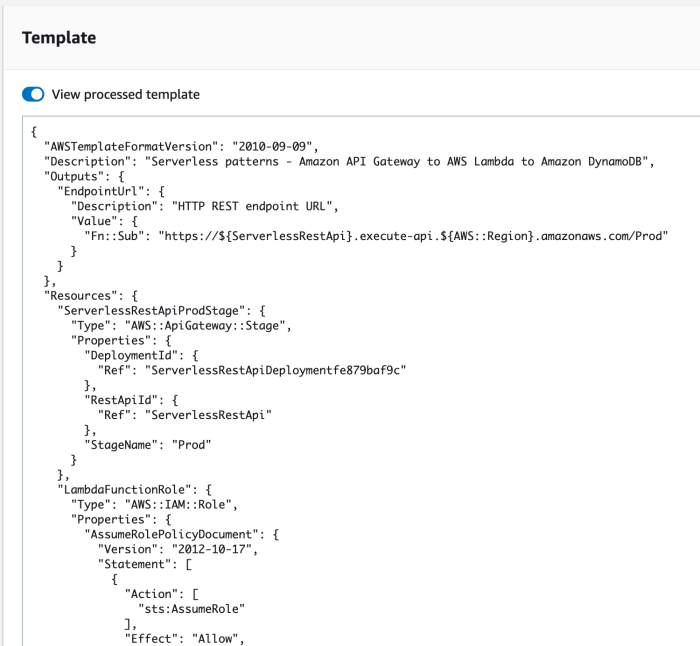

- AWS CloudFormation: AWS CloudFormation is a service provided by Amazon Web Services (AWS) that allows users to model and set up their AWS resources so they can spend less time managing those resources and more time focusing on their applications that run in AWS. It uses a declarative language (YAML or JSON) to define infrastructure templates. While CloudFormation is primarily focused on AWS, it can be used in conjunction with other tools to achieve cross-platform deployments, particularly when coupled with tools like Terraform.

Terraform Configuration for a Simple HTTP Endpoint

The following Terraform configuration demonstrates the deployment of a simple HTTP endpoint (e.g., a “Hello, World!” function) across AWS Lambda, Azure Functions, and Google Cloud Functions. This example highlights the core principles of cross-platform deployment using IaC. This configuration is a simplified illustration, and real-world deployments would involve more complex configurations.

“`terraform

# Configure the AWS provider

provider “aws”

region = “us-east-1” # Replace with your desired AWS region

# Configure the Azure provider

provider “azurerm”

features

# Configure the Google Cloud provider

provider “google”

project = “your-gcp-project-id” # Replace with your GCP project ID

region = “us-central1” # Replace with your desired GCP region

# Common configuration for the function

resource “local_file” “function_code”

content = “exports.handler = async (event) => return statusCode: 200, body: ‘Hello, World!’ ; ;” # Basic function code

filename = “index.js”

# AWS Lambda function

resource “aws_lambda_function” “example”

function_name = “hello-world-aws”

filename = “index.js”

source_code_hash = filebase64sha256(“index.js”)

handler = “index.handler”

runtime = “nodejs18.x”

role = aws_iam_role.lambda_role.arn

resource “aws_iam_role” “lambda_role”

name = “lambda_basic_execution”

assume_role_policy = jsonencode(

“Version”: “2012-10-17”,

“Statement”: [

“Action”: “sts:AssumeRole”,

“Principal”:

“Service”: “lambda.amazonaws.com”

,

“Effect”: “Allow”,

“Sid”: “”

]

)

resource “aws_lambda_permission” “api_gateway”

statement_id = “AllowAPIGatewayInvoke”

action = “lambda:InvokeFunction”

function_name = aws_lambda_function.example.function_name

principal = “apigateway.amazonaws.com”

source_arn = aws_api_gateway_rest_api.example.execution_arn

resource “aws_api_gateway_rest_api” “example”

name = “hello-world-api”

description = “Example API Gateway”

resource “aws_api_gateway_resource” “example”

rest_api_id = aws_api_gateway_rest_api.example.id

parent_id = aws_api_gateway_rest_api.example.root_resource_id

path_part = “hello”

resource “aws_api_gateway_method” “example”

rest_api_id = aws_api_gateway_rest_api.example.id

resource_id = aws_api_gateway_resource.example.id

http_method = “GET”

authorization_type = “NONE”

resource “aws_api_gateway_integration” “example”

rest_api_id = aws_api_gateway_rest_api.example.id

resource_id = aws_api_gateway_resource.example.id

http_method = aws_api_gateway_method.example.http_method

integration_http_method = “POST”

type = “AWS_PROXY”

uri = aws_lambda_function.example.invoke_arn

# Azure Function App (placeholder – requires more detailed configuration)

resource “azurerm_resource_group” “example”

name = “hello-world-azure-rg”

location = “eastus” # Replace with your desired Azure region

resource “azurerm_function_app” “example”

name = “hello-world-azure”

location = azurerm_resource_group.example.location

resource_group_name = azurerm_resource_group.example.name

app_service_plan_id = azurerm_app_service_plan.example.id

storage_account_name = azurerm_storage_account.example.name

storage_account_access_key = azurerm_storage_account.example.primary_access_key

resource “azurerm_app_service_plan” “example”

name = “hello-world-azure-asp”

location = azurerm_resource_group.example.location

resource_group_name = azurerm_resource_group.example.name

sku_name = “Y1” # Consumption plan

resource “azurerm_storage_account” “example”

name = “helloworldazurestorage” # Replace with a unique name

location = azurerm_resource_group.example.location

resource_group_name = azurerm_resource_group.example.name

account_tier = “Standard”

account_replication_type = “LRS”

# Google Cloud Function (placeholder – requires more detailed configuration)

resource “google_project_iam_member” “example”

project = “your-gcp-project-id” # Replace with your GCP project ID

role = “roles/cloudfunctions.invoker”

member = “allUsers” # For public access. Consider restricting in a production environment.

resource “google_cloudfunctions_function” “example”

name = “hello-world-gcp”

runtime = “nodejs18”

region = “us-central1” # Replace with your desired GCP region

project = “your-gcp-project-id” # Replace with your GCP project ID

source_archive_bucket = google_storage_bucket.function_bucket.name

source_archive_object = google_storage_bucket_object.function_zip.name

entry_point = “handler”

trigger_http = true

resource “google_storage_bucket” “function_bucket”

name = “hello-world-gcp-bucket”

location = “US”

force_destroy = true

resource “google_storage_bucket_object” “function_zip”

name = “function.zip”

bucket = google_storage_bucket.function_bucket.name

source = “index.js” # Requires packaging into a zip

“`

This configuration defines the core infrastructure components: a function (using a simple “Hello, World!” implementation), and, for AWS, an API Gateway to expose the function via HTTP. The Azure and Google Cloud Functions configurations are simplified placeholders, and would require further refinements to function completely. The core file `index.js` will be created by the `local_file` resource, containing the function code.

The AWS configuration creates an IAM role with permissions to execute the Lambda function. The `aws_api_gateway_rest_api` and associated resources set up an API Gateway endpoint that triggers the Lambda function.

The Azure configuration includes a resource group, an App Service plan (using a consumption plan for serverless), and a storage account. A complete Azure Function App configuration would require further resources.

The Google Cloud Functions configuration deploys a function and configures public access, with a Google Cloud Storage bucket for the function’s code.

The example illustrates the basic structure of deploying the same function code across different providers. Each provider requires specific configuration for the function, the access method, and any required supporting resources.

Comparison of Results

The following table summarizes the key aspects of deploying this simple HTTP endpoint across the three providers, showing a simplified view of the differences and similarities.

| Feature | AWS Lambda | Azure Functions | Google Cloud Functions |

|---|---|---|---|

| Function Runtime | Node.js 18.x | Node.js (configurable) | Node.js 18 |

| Invocation Method | API Gateway (HTTP) | HTTP Trigger | HTTP Trigger |

| Configuration | IAM Role, API Gateway | Resource Group, App Service Plan, Storage Account (additional configuration) | Service Account, Bucket, and permissions. |

| Deployment Process | Terraform, Lambda function, API Gateway resources | Terraform (with more configuration), Azure Function App | Terraform, GCF function |

This table highlights the differences in the deployment process, the required resources, and the invocation methods. Although the underlying function code is the same, the IaC configuration varies significantly to accommodate the specific requirements of each provider.

Handling Provider-Specific Configurations with IaC Conditional Logic

IaC tools provide mechanisms to handle provider-specific configurations using conditional logic. This is crucial for managing resources that have different requirements across providers. Terraform, for instance, offers the `count` and `for_each` meta-arguments, and `conditional expressions` to conditionally create resources or configure their properties based on the provider.

Consider the example of configuring an API endpoint. AWS API Gateway, Azure API Management, and Google Cloud Endpoints have different feature sets and configurations. Using conditional logic, we can define the appropriate API gateway resources based on the chosen provider.

“`terraform

# Example using conditional expressions to choose API Gateway type

resource “aws_api_gateway_rest_api” “api”

count = var.provider == “aws” ? 1 : 0

name = “my-api-aws”

description = “API for AWS”

resource “azurerm_api_management” “api”

count = var.provider == “azure” ? 1 : 0

name = “my-api-azure”

location = “eastus” # replace

resource_group_name = “my-resource-group” # replace

publisher_name = “My Company”

publisher_email = “[email protected]”

sku_name = “Consumption”

resource “google_api_gateway_api” “api”

count = var.provider == “gcp” ? 1 : 0

api_id = “my-api-gcp”

project = “your-gcp-project-id” # replace

# Example: Provider selection via a variable

variable “provider”

type = string

description = “Cloud provider (aws, azure, gcp)”

default = “aws”

“`

In this example, the `count` meta-argument is used with a conditional expression. If the `var.provider` variable is set to “aws”, the `aws_api_gateway_rest_api` resource is created. If it is “azure”, the `azurerm_api_management` resource is created, and if it is “gcp”, the `google_api_gateway_api` resource is created. This allows for the creation of provider-specific resources based on a single variable, enhancing portability.

The conditional logic ensures that only the resources appropriate for the selected provider are created, avoiding deployment errors and simplifying the configuration. This approach is crucial for creating infrastructure that can be deployed across different cloud providers with minimal code changes.

Event Trigger and Data Source Portability

The ability to seamlessly move serverless functions between providers hinges on addressing the inherent differences in how these providers handle event triggers and data sources. Portability in this context necessitates strategies to abstract away provider-specific implementations, allowing developers to write code that functions consistently regardless of the underlying infrastructure. This section explores these strategies, providing concrete examples and a practical migration procedure.

Event Trigger Differences

Event triggers are the mechanisms that initiate the execution of serverless functions. These mechanisms vary significantly across providers, encompassing API gateways, event buses, and message queues. Successfully navigating these differences is crucial for achieving portability.

To handle the diverse event trigger mechanisms across providers, several strategies can be employed:

- Abstraction Layers: Creating an abstraction layer is a key approach. This layer acts as an intermediary between the function code and the provider-specific event trigger. It translates provider-specific events into a standardized format that the function code understands.

- Frameworks: Leveraging serverless frameworks like Serverless Framework or AWS SAM can simplify event trigger management. These frameworks provide abstractions and deployment tools that facilitate the definition and deployment of event triggers across multiple providers.

- Provider-Specific Adapters: For complex scenarios, implementing provider-specific adapters might be necessary. These adapters would handle the translation of events from the provider’s native format to a common, internal format.

- Conditional Logic: Utilizing conditional logic within the function code to handle different event types is another possibility, though this can lead to code that is less portable and more complex.

For example, consider API Gateway triggers. AWS API Gateway, Azure API Management, and Google Cloud Endpoints all serve similar functions but have different configuration parameters and event formats. An abstraction layer could normalize the request data (headers, body, parameters) from each gateway into a consistent structure that the function code processes.

Data Source Portability

Data source portability focuses on enabling serverless functions to interact with various data stores without being tightly coupled to a specific provider’s database or storage service. This allows for greater flexibility and reduces vendor lock-in.

Data source portability can be achieved through:

- Database-Agnostic ORMs (Object-Relational Mappers): Using ORMs like SQLAlchemy (Python) or TypeORM (TypeScript/JavaScript) allows developers to interact with databases using a unified API. The ORM handles the database-specific details, such as SQL dialect differences.

- Abstracting Data Access: Creating a data access layer (DAL) is another effective approach. This layer encapsulates all database interactions, providing a consistent interface for the function code. The DAL can then be configured to use different database drivers or connection strings based on the deployment environment.

- Cloud Storage Abstraction: For object storage, libraries like `aws-sdk` (for AWS), `@azure/storage-blob` (for Azure), and `@google-cloud/storage` (for Google Cloud) provide provider-specific APIs. However, these can be abstracted using custom wrappers or libraries to provide a consistent interface.

- Message Queues: For message queues, providers like AWS SQS, Azure Service Bus, and Google Cloud Pub/Sub provide different APIs. Abstraction layers can normalize the message format and handle the queuing/dequeueing operations.

For example, if a function needs to read data from a database, instead of directly using the AWS SDK for DynamoDB, the code could use an ORM like SQLAlchemy. The ORM would then translate the code’s database queries into the appropriate SQL dialect for the underlying database (e.g., PostgreSQL, MySQL, or even DynamoDB). This approach makes the function code database-agnostic and easier to port.

Procedure for Migrating an S3 Bucket Event Trigger

Migrating a function triggered by an S3 bucket event on AWS to Azure Blob Storage and Google Cloud Storage involves several steps. The following procedure Artikels this process:

- Analyze the Existing Implementation: Examine the existing function code and infrastructure configuration on AWS. Identify the specific S3 bucket, event types (e.g., object creation, deletion), and any dependencies on AWS-specific services.

- Define the Abstraction Layer: Design an abstraction layer that provides a consistent interface for interacting with object storage services. This layer should handle the differences in API calls, event formats, and authentication mechanisms.

- Adapt the Function Code: Modify the function code to use the abstraction layer instead of the AWS SDK directly. This will involve replacing AWS-specific API calls with the abstraction layer’s methods.

- Configure Azure Blob Storage Trigger:

- Create an Azure Blob Storage account and a container.

- Set up an Azure Function with a Blob trigger, configuring it to listen for events in the container.

- Configure the Azure Function to use the abstraction layer for data access.

- Configure Google Cloud Storage Trigger:

- Create a Google Cloud Storage bucket.

- Set up a Cloud Function with a Cloud Storage trigger, configuring it to listen for events in the bucket.

- Configure the Cloud Function to use the abstraction layer for data access.

- Test and Verify: Thoroughly test the function on both Azure and Google Cloud to ensure that it correctly processes events and interacts with the data storage services. Verify that the function behaves as expected and that there are no provider-specific issues.

- Deploy and Monitor: Deploy the functions to Azure and Google Cloud and monitor their performance and behavior. Adjust the configuration and code as necessary to optimize performance and ensure stability.

This procedure demonstrates the key steps involved in migrating an S3 bucket event trigger to Azure Blob Storage and Google Cloud Storage, emphasizing the use of an abstraction layer to facilitate portability. The success of this migration relies on careful planning, the creation of a well-defined abstraction layer, and thorough testing across all target platforms.

Testing and Debugging Portable Serverless Applications

Testing and debugging portable serverless applications are crucial for ensuring that the code functions correctly across different serverless providers. The inherent complexity of distributed systems, coupled with the potential for variations in provider implementations, necessitates a rigorous approach to testing and debugging. This section details strategies for comprehensive testing and debugging of portable serverless functions, focusing on unit, integration, and end-to-end testing, along with techniques and tools for identifying and resolving issues.

Strategies for Testing Portable Serverless Functions

Testing portable serverless functions requires a layered approach, starting with individual component verification and culminating in system-wide validation. The aim is to verify that the code behaves as expected, regardless of the underlying provider infrastructure.

- Unit Tests: Unit tests are fundamental for verifying the functionality of individual functions or modules in isolation. They focus on testing the smallest testable units of the application, such as individual functions or classes. For portable serverless applications, unit tests should be designed to be provider-agnostic, meaning they should not rely on any specific provider’s SDK or API calls. This can be achieved by:

- Mocking Dependencies: Replace external dependencies, such as database connections, API calls, and event triggers, with mock objects.

Mocking allows for controlling the input and output of these dependencies, facilitating the testing of different scenarios without actually interacting with the external services. For instance, if a function interacts with a database, a mock database object can be used to simulate the database interactions and verify the function’s behavior under various data conditions.

- Using Provider-Agnostic Libraries: Leverage libraries that abstract provider-specific details. Libraries like `serverless-offline` can be helpful, although their capabilities are limited.

- Test Coverage: Aim for high test coverage to ensure that all code paths are tested. High test coverage increases confidence in the application’s reliability and reduces the likelihood of undetected bugs.

- Mocking Dependencies: Replace external dependencies, such as database connections, API calls, and event triggers, with mock objects.

- Integration Tests: Integration tests verify the interaction between different components or modules of the application. They test how the different parts of the application work together, including interactions with external services, such as databases, APIs, and message queues. For portable serverless applications, integration tests should be designed to be as close to the real-world deployment as possible. This can be achieved by:

- Provider-Specific Configurations: Configure integration tests to use provider-specific configurations, such as API endpoints, database credentials, and event trigger configurations.

This ensures that the tests accurately reflect the deployment environment.

- Using Provider-Specific SDKs: While unit tests should avoid provider-specific SDKs, integration tests can leverage them to interact with external services, but only when necessary and with careful consideration.

- Test Environment Setup: Setup a dedicated test environment for integration tests to isolate the tests from production environments. The test environment should mimic the production environment as closely as possible, including provider-specific configurations and infrastructure.

- Provider-Specific Configurations: Configure integration tests to use provider-specific configurations, such as API endpoints, database credentials, and event trigger configurations.

- End-to-End Tests: End-to-end (E2E) tests validate the entire application flow, from user interaction to data storage. They simulate real-world scenarios and ensure that the application functions correctly from start to finish. For portable serverless applications, E2E tests are critical for verifying the compatibility and functionality across different providers. This involves:

- Deploying to Multiple Providers: Deploy the application to multiple serverless providers and run E2E tests against each deployment.

This allows for validating the application’s behavior across different provider environments.

- Testing Event Triggers: Simulate events that trigger the serverless functions, such as HTTP requests, database updates, or message queue messages. This ensures that the application correctly handles different event types.

- Monitoring Performance: Monitor the performance of the application across different providers, including latency, throughput, and error rates. This helps identify performance bottlenecks and potential compatibility issues.

- Deploying to Multiple Providers: Deploy the application to multiple serverless providers and run E2E tests against each deployment.

Debugging Techniques for Cross-Platform Deployments

Debugging portable serverless applications involves identifying and resolving issues related to cross-platform deployments. The debugging process should be systematic and involve the use of various techniques to pinpoint the root cause of the problem.

- Logging: Implement comprehensive logging throughout the application. Logging is essential for tracking the execution flow, capturing errors, and identifying performance issues.

- Structured Logging: Use structured logging to log key information, such as function names, timestamps, request IDs, and error messages. Structured logging makes it easier to analyze logs and identify patterns.

- Provider-Specific Logging: Leverage provider-specific logging tools and services to capture logs from different environments. This ensures that logs are accessible and searchable across different providers.

- Log Aggregation: Aggregate logs from different providers into a central logging system to facilitate analysis. Centralized logging allows for correlating events across different providers and identifying cross-platform issues.

- Tracing: Implement distributed tracing to track the execution flow of requests across different services. Tracing helps identify performance bottlenecks and pinpoint the source of errors in complex serverless applications.

- Use OpenTelemetry: Employ open-source tools like OpenTelemetry to trace requests across different providers. OpenTelemetry provides a standardized way to collect and export tracing data, making it easier to monitor application performance across different environments.

- Correlate Traces: Correlate traces across different providers to identify cross-platform issues. Correlating traces allows for identifying the root cause of errors and performance bottlenecks across different providers.

- Monitoring: Implement monitoring to track the performance and health of the application. Monitoring helps identify performance issues, errors, and other anomalies that may affect the application’s functionality.

- Provider-Specific Monitoring Tools: Use provider-specific monitoring tools to track the performance and health of the application in each environment. Each provider offers its own monitoring tools that can provide valuable insights into the application’s performance.

- Centralized Monitoring Dashboard: Create a centralized monitoring dashboard to aggregate metrics and alerts from different providers. A centralized dashboard allows for a holistic view of the application’s performance and health.

- Error Handling: Implement robust error handling to gracefully handle exceptions and prevent unexpected application behavior. Error handling is essential for ensuring the reliability and stability of the application.

- Catch Exceptions: Catch exceptions and log error messages with detailed information, such as the function name, error message, and stack trace. Detailed error messages make it easier to diagnose and resolve issues.

- Implement Retries: Implement retries for transient errors, such as network issues or temporary service unavailability. Retries can improve the application’s resilience and reduce the impact of transient errors.

- Implement Circuit Breakers: Implement circuit breakers to prevent cascading failures. Circuit breakers can automatically detect and isolate failing services, preventing them from affecting the entire application.

Tools and Techniques for Simulating Different Provider Environments

Simulating different provider environments during testing is crucial for verifying the portability of serverless applications. Several tools and techniques can be used to mimic the behavior of different providers, allowing developers to test their code locally and reduce the need for deployments to multiple environments.

- Local Development Environments: Use local development environments to simulate serverless functions and their interactions. Local development environments allow for testing code without deploying to the cloud, which can save time and cost.

- Serverless Framework Plugins: Utilize plugins like `serverless-offline` to emulate serverless functions locally. These plugins allow developers to run and test their functions in a local environment that mimics the behavior of different providers.

- Containerization: Employ containerization technologies like Docker to create isolated environments that simulate different provider environments. Containerization allows for creating consistent and reproducible testing environments.

- Mocking and Stubbing: Employ mocking and stubbing techniques to simulate interactions with external services and dependencies. Mocking and stubbing allows for controlling the input and output of dependencies, facilitating the testing of different scenarios.

- Mocking Frameworks: Use mocking frameworks to create mock objects that simulate the behavior of external services. Mocking frameworks, such as Mockito (for Java) or Jest (for JavaScript), allow for creating realistic simulations of external services.

- Stubbing HTTP Requests: Use stubbing libraries to simulate HTTP requests and responses. Stubbing allows for testing the application’s behavior without relying on actual HTTP requests.

- Testing Providers: Test your application against multiple serverless providers, which is crucial for verifying its portability.

- Provider-Specific SDKs: Utilize provider-specific SDKs to interact with the different serverless providers. This allows for testing the application’s behavior in each provider’s environment.

- Automated Testing: Automate the testing process using tools like Terraform or CloudFormation. Automated testing reduces the manual effort required to test the application and increases the speed and reliability of testing.

- Configuration Management: Implement a robust configuration management strategy to handle provider-specific configurations. Configuration management is essential for ensuring that the application can be deployed to different providers without requiring code changes.

- Environment Variables: Use environment variables to store provider-specific configurations, such as API keys, database credentials, and region settings. Environment variables allow for changing the application’s behavior without modifying the code.

- Configuration Files: Utilize configuration files to manage provider-specific configurations. Configuration files allow for organizing and managing provider-specific configurations in a structured way.

Monitoring and Logging Across Providers

Maintaining observability is crucial for the operational success of any serverless application, and this becomes even more critical when aiming for portability. Consistent monitoring and logging across different serverless providers allows developers to gain a unified view of application performance, troubleshoot issues effectively, and ensure compliance with service level agreements (SLAs). The challenges associated with portability, such as vendor-specific APIs and different data formats, necessitate careful consideration of monitoring and logging strategies.

Importance of Consistent Monitoring and Logging

The ability to consistently monitor and log across serverless providers provides numerous benefits. These benefits contribute significantly to the overall reliability, performance, and maintainability of portable serverless applications.

- Unified Visibility: Consistent logging and monitoring provide a centralized view of application behavior, regardless of the underlying infrastructure. This enables rapid identification of performance bottlenecks, error patterns, and security vulnerabilities across all deployments.

- Simplified Troubleshooting: With a unified logging and monitoring system, debugging becomes significantly easier. Developers can correlate events and metrics from different providers to quickly pinpoint the root cause of issues. This is especially crucial when dealing with complex distributed serverless architectures.

- Performance Optimization: By analyzing performance metrics and logs, developers can identify areas for optimization, such as inefficient code or resource allocation. This data-driven approach enables continuous improvement of application performance and resource utilization.

- Compliance and Auditing: Consistent logging is essential for meeting regulatory requirements and conducting security audits. A unified logging system simplifies the process of collecting and analyzing logs for compliance purposes.

- Automated Alerting and Incident Response: Centralized monitoring allows for the implementation of automated alerting based on predefined thresholds and patterns. This enables proactive incident response and reduces downtime.

Comparison of Serverless Provider Monitoring and Logging Capabilities

Serverless providers offer a range of built-in monitoring and logging tools, each with its own strengths and weaknesses. Understanding these differences is essential for designing a portable solution.

Let’s consider the monitoring and logging capabilities of three prominent serverless providers: AWS, Azure, and Google Cloud.

| Feature | AWS (e.g., CloudWatch) | Azure (e.g., Azure Monitor) | Google Cloud (e.g., Cloud Monitoring & Cloud Logging) |

|---|---|---|---|

| Logging Service | CloudWatch Logs | Azure Monitor Logs | Cloud Logging |

| Monitoring Service | CloudWatch | Azure Monitor | Cloud Monitoring |

| Metrics Collection | Automatic metrics for services (e.g., Lambda invocations, API Gateway requests), custom metrics via API | Automatic metrics for services (e.g., Azure Functions executions, API Management requests), custom metrics via API | Automatic metrics for services (e.g., Cloud Functions invocations, API Gateway requests), custom metrics via API |

| Log Format | JSON, plain text | JSON, plain text | JSON, plain text |

| Log Retention | Configurable, tiered pricing based on retention duration | Configurable, tiered pricing based on retention duration | Configurable, tiered pricing based on retention duration |

| Alerting | CloudWatch Alarms | Azure Monitor Alerts | Cloud Monitoring Alerts |

| Log Querying | CloudWatch Logs Insights | Kusto Query Language (KQL) | Cloud Logging’s query language |

| Integration with other services | Extensive integration with other AWS services (e.g., S3, Kinesis) | Extensive integration with other Azure services (e.g., Event Hubs, Storage) | Extensive integration with other Google Cloud services (e.g., Pub/Sub, BigQuery) |

Each provider offers robust logging and monitoring capabilities. AWS CloudWatch provides a comprehensive suite of tools for logging, monitoring, and alerting, with tight integration across the AWS ecosystem. Azure Monitor offers similar functionality, with integration across Azure services and the ability to query logs using Kusto Query Language (KQL). Google Cloud’s Cloud Logging and Cloud Monitoring provide powerful features for log analysis, metric collection, and alerting, with seamless integration with other Google Cloud services.

The key challenge is that each provider’s tools use different APIs, query languages, and data formats, making it difficult to achieve portability without additional abstraction.

Integration with Centralized Logging and Monitoring Solutions

To achieve portability, it’s necessary to integrate applications with centralized logging and monitoring solutions that support multiple cloud providers. These solutions act as an abstraction layer, normalizing the data and providing a unified view.

Consider the following approach using a third-party logging and monitoring service such as Datadog, New Relic, or Splunk.

- Choose a Centralized Platform: Select a platform that supports all target serverless providers. Consider factors such as pricing, features, supported integrations, and ease of use.

- Instrument the Code: Modify the application code to send logs and metrics to the centralized platform. This typically involves using the platform’s SDK or API.

- Configure Logging Libraries: Use logging libraries that are compatible with the chosen platform. These libraries provide abstractions for writing logs in a consistent format. Example: Use the Datadog Python library.

- Use Environment Variables: Configure the platform-specific settings, such as API keys and region information, using environment variables. This ensures that the application can be deployed to different providers without code changes.

- Implement Infrastructure as Code (IaC): Use IaC tools, such as Terraform or CloudFormation, to deploy and configure the logging and monitoring agents or collectors. This ensures consistent configuration across all deployments.

- Create Dashboards and Alerts: Define dashboards and alerts within the centralized platform to monitor key metrics and trigger notifications based on predefined thresholds.

Example: Integrating with Datadog

Imagine a Python function deployed on AWS Lambda and Google Cloud Functions. The following steps demonstrate integration with Datadog.

- Install Datadog Library: Install the Datadog Python library within the function’s environment:

pip install datadog - Configure Datadog Agent: Configure the Datadog agent using environment variables.

- Import and Initialize the Datadog Client: In your function code, import the Datadog client and initialize it.

- Log Events and Metrics: Use the Datadog client to log events and metrics.

This approach provides a single pane of glass for monitoring, regardless of the underlying serverless provider. The application code remains portable, and the centralized platform handles the provider-specific details. The environment variables, for instance, would be different on AWS, Azure, and Google Cloud, but the core application logic remains the same.

Consider a scenario where an e-commerce platform uses serverless functions for processing orders. By integrating with a centralized logging and monitoring solution, such as Datadog, the platform can:

- Track the number of orders processed per minute across AWS Lambda, Azure Functions, and Google Cloud Functions.

- Monitor the latency of order processing functions on each provider.

- Set up alerts to notify the operations team if the error rate exceeds a certain threshold on any provider.

This provides a consistent view of the platform’s health, enabling the operations team to quickly identify and resolve issues, regardless of the deployment location.

Security Considerations in a Multi-Provider Environment

The implementation of portable serverless applications across multiple cloud providers introduces a complex landscape for security. Ensuring robust security posture necessitates a meticulous approach to authentication, authorization, data protection, and secret management. This is further complicated by the inherent differences in security models and features offered by each provider.

Security Best Practices for Portable Serverless Applications

Adhering to established security best practices is paramount when designing and deploying portable serverless applications. This involves a multifaceted approach to securing the application’s components and data.

- Authentication: Implement robust authentication mechanisms to verify the identity of users and services accessing the application. This includes:

- Utilizing industry-standard protocols such as OAuth 2.0 or OpenID Connect for user authentication. These protocols allow for delegated authentication, where a user’s identity is verified by a trusted identity provider.

- Employing service accounts with limited permissions for inter-service communication within the application. These accounts should have the minimum necessary privileges to perform their tasks.

- Enforcing multi-factor authentication (MFA) for all user accounts, especially those with privileged access. MFA adds an extra layer of security by requiring users to provide multiple forms of verification.

- Authorization: Implement granular authorization policies to control access to application resources. This entails:

- Defining clear roles and permissions to restrict access based on the principle of least privilege. Users and services should only have the permissions necessary to perform their designated tasks.

- Using access control lists (ACLs) or identity and access management (IAM) policies provided by each cloud provider to manage resource access. This ensures that only authorized entities can interact with the application’s resources.

- Regularly reviewing and auditing authorization policies to ensure they remain effective and aligned with the application’s security requirements.

- Data Encryption: Employ encryption to protect data at rest and in transit. This involves:

- Encrypting sensitive data stored in databases, object storage, and other data stores. This prevents unauthorized access to data even if the underlying infrastructure is compromised.

- Using Transport Layer Security (TLS) to encrypt data transmitted over the network. TLS ensures that data is protected from eavesdropping and tampering during transit.

- Leveraging provider-specific key management services (KMS) or using dedicated KMS solutions to manage encryption keys securely. KMS services provide features such as key rotation, access control, and auditing.

- Input Validation: Validate all user inputs to prevent injection attacks, such as SQL injection and cross-site scripting (XSS). This includes:

- Sanitizing user input to remove or neutralize potentially malicious characters.

- Using parameterized queries or prepared statements to prevent SQL injection vulnerabilities.

- Implementing content security policies (CSPs) to mitigate XSS attacks.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration tests to identify and address vulnerabilities. This involves:

- Performing vulnerability scans to detect known vulnerabilities in the application’s code and dependencies.

- Conducting penetration tests to simulate real-world attacks and assess the application’s resilience.

- Implementing a bug bounty program to incentivize security researchers to report vulnerabilities.

Managing Secrets and Credentials Securely Across Multiple Providers

Secure secret and credential management is crucial for protecting sensitive information such as API keys, database passwords, and other secrets. Due to the varying capabilities of each cloud provider, the approach requires careful consideration.

- Provider-Specific Secret Management Services: Utilize the secret management services offered by each cloud provider. These services provide secure storage, access control, and rotation of secrets.

- AWS Secrets Manager: Offers features such as secret rotation, auditing, and integration with other AWS services.

- Azure Key Vault: Provides secure storage for secrets, keys, and certificates, along with access control and auditing capabilities.

- Google Cloud Secret Manager: Enables secure storage, versioning, and access control for secrets.

- Abstraction Layer with Frameworks: Employ abstraction layers or frameworks that provide a unified interface for accessing secrets across different providers.

- HashiCorp Vault: Acts as a centralized secret management solution that can integrate with multiple cloud providers.

- Skaffold: A developer tool for Kubernetes that simplifies the build, push, and deployment of containerized applications. It can be configured to retrieve secrets from various secret management services.

- Environment Variables: Use environment variables to inject secrets into serverless functions. This allows for decoupling secrets from the code.

- Avoid hardcoding secrets directly into the code.

- Ensure environment variables are properly secured and accessible only to the necessary functions.

- Least Privilege Principle: Grant the minimum necessary permissions to access secrets.

- Use IAM roles or service accounts with limited access to secret management services.

- Implement fine-grained access control policies to restrict access to specific secrets.

- Secret Rotation: Regularly rotate secrets to reduce the risk of compromise.

- Automate the process of rotating secrets, such as API keys and database passwords.

- Implement a schedule for rotating secrets.

Challenges of Implementing Consistent Security Policies Across Different Serverless Platforms

Achieving consistent security policies across multiple serverless platforms poses significant challenges due to the inherent differences in security models and features.

- Provider-Specific Security Features: Each cloud provider offers its own set of security features, such as IAM roles, network security groups, and encryption options.

- The implementation of these features varies across providers, requiring developers to adapt their security configurations to each platform.

- The lack of a standardized approach can lead to inconsistencies and vulnerabilities.

- IAM and Access Control: Implementing consistent IAM and access control policies across different providers can be complex.

- Different providers use different syntax and conventions for defining IAM policies.

- Ensuring that the same level of access control is enforced across all platforms requires careful planning and configuration.

- Network Security: Managing network security across multiple providers can be challenging.

- Each provider has its own networking concepts, such as virtual private clouds (VPCs) and security groups.

- Establishing secure network connections between different serverless functions and resources requires understanding and configuring these networking components.

- Monitoring and Logging: Monitoring and logging security events consistently across multiple providers can be difficult.

- Each provider uses its own logging formats and monitoring tools.

- Collecting and analyzing security logs from different providers requires the use of centralized logging and monitoring solutions.

- Compliance and Regulatory Requirements: Meeting compliance and regulatory requirements, such as GDPR or HIPAA, across multiple providers adds another layer of complexity.

- Ensuring that the application meets the requirements of each regulatory framework across all platforms requires careful planning and implementation.

- Differences in provider offerings can complicate compliance efforts.

Cost Optimization Strategies for Cross-Platform Deployments

Deploying serverless applications across multiple providers necessitates a proactive approach to cost management. The inherent pay-per-use model of serverless computing offers significant potential for cost savings, but the complexity of multiple provider pricing structures can quickly lead to unexpected expenses if not carefully monitored and optimized. This section details strategies for minimizing costs when operating in a multi-provider serverless environment.

Comparing Serverless Provider Pricing Models

Understanding the nuances of pricing models across different serverless providers is fundamental to cost optimization. Providers employ various pricing strategies for compute, storage, and networking resources, making direct comparisons complex.

- Compute Pricing: Compute pricing is often based on the number of function invocations, the duration of execution (in milliseconds or seconds), and the allocated memory.

- AWS Lambda: Charges are based on request counts and duration. The first 1 million requests per month are free. Pricing varies by region. For example, in the US East (N.

Virginia) region, the price is $0.0000004 per GB-s.

- Azure Functions: Pricing is based on the number of executions and resource consumption. The consumption plan charges per execution and resource allocation. Free grant includes a monthly free grant.

- Google Cloud Functions: Pricing depends on the number of invocations, compute time, and memory allocation. The first 2 million invocations per month are free. Compute time is priced per GB-s.

- AWS Lambda: Charges are based on request counts and duration. The first 1 million requests per month are free. Pricing varies by region. For example, in the US East (N.

- Storage Pricing: Storage costs vary based on storage type (e.g., object storage, database storage) and the amount of data stored.

- AWS S3: Charges for storage capacity, requests, and data transfer out. Pricing tiers are based on storage volume.

- Azure Blob Storage: Pricing is based on the storage type (hot, cool, archive), data volume, and transaction costs.

- Google Cloud Storage: Pricing is based on storage class (Standard, Nearline, Coldline, Archive), data volume, and operations.

- Networking Pricing: Networking costs involve data transfer, both within and between regions and to the internet.

- Data Transfer Out: Providers typically charge for data transferred out of their network. The cost varies based on the destination (e.g., within the same region, different regions, or the internet).

- Data Transfer In: Data transfer into the provider’s network is usually free.

- Inter-Region Data Transfer: Data transfer between different regions within the same provider incurs charges.

Analyzing these pricing models requires a detailed assessment of the application’s resource consumption patterns. Tools like cost calculators provided by each cloud provider can help estimate the cost based on projected usage.

Methods for Setting Up Cost Alerts and Monitoring

Proactive cost monitoring is crucial for preventing unexpected expenses. Implementing robust alerting and monitoring systems allows for timely identification and resolution of cost anomalies.

- Provider-Specific Cost Management Tools: Each provider offers built-in cost management tools.

- AWS Cost Explorer: Provides detailed cost analysis, forecasting, and budgeting features. Users can create budgets and set up alerts to be notified when costs exceed a defined threshold.

- Azure Cost Management + Billing: Offers cost analysis, budgeting, and recommendations for cost optimization. Alerts can be configured based on cost, resource consumption, or other metrics.

- Google Cloud Billing: Provides cost dashboards, budget alerts, and recommendations for optimizing resource utilization. Users can define budget thresholds and receive notifications via email or other channels.

- Third-Party Cost Management Tools: Third-party tools offer cross-provider cost monitoring and optimization capabilities.

- Cloudability (now Apptio): Provides a unified view of cloud costs across multiple providers, enabling cost allocation, forecasting, and optimization recommendations.

- CloudHealth by VMware: Offers comprehensive cost management, including cost optimization, resource utilization analysis, and compliance reporting.

- ProsperOps: An automated cost optimization platform for cloud infrastructure, including serverless.

- Setting Up Cost Alerts: Cost alerts are essential for proactively managing spending.

- Budget Thresholds: Define budget thresholds based on expected usage and set alerts to trigger notifications when costs approach or exceed these thresholds.

- Anomaly Detection: Implement anomaly detection to identify unexpected cost spikes or unusual spending patterns. Tools like AWS Anomaly Detection (using Amazon Lookout for Metrics) can automatically detect anomalies in cost data.

- Alerting Channels: Configure alerts to be sent via email, Slack, or other communication channels to ensure timely notification of cost-related issues.

- Cost Monitoring Metrics: Track key metrics to understand cost drivers and identify areas for optimization.

- Function Execution Duration: Monitor the average and maximum execution times of serverless functions. Longer execution times increase compute costs.

- Memory Consumption: Track the memory usage of functions. Over-provisioning memory leads to unnecessary costs.

- Number of Invocations: Monitor the number of function invocations to understand usage patterns and identify potential areas for optimization.

- Data Transfer: Monitor data transfer in and out of the cloud environment. Data transfer costs can significantly impact overall spending.

For example, setting up an alert in AWS Cost Explorer to notify you if your Lambda function costs exceed a predefined budget threshold, say $100 per month, allows for immediate investigation and corrective action if necessary. This can prevent runaway costs due to unexpected function invocations or inefficient code.

Closing Summary

In conclusion, achieving serverless portability is not a simple task but a multifaceted endeavor that demands careful planning and execution. By embracing abstraction layers, leveraging infrastructure-as-code tools, and adhering to best practices in code and runtime environments, developers can significantly mitigate vendor lock-in. The journey towards portability requires a commitment to consistent monitoring, robust security, and diligent cost optimization. Ultimately, the ability to deploy and manage serverless functions across multiple providers empowers developers with greater agility, resilience, and control over their cloud infrastructure, fostering innovation and reducing dependencies.

Question & Answer Hub

What are the primary benefits of serverless portability?

Serverless portability offers several key advantages, including reduced vendor lock-in, increased flexibility in choosing the best provider for specific workloads, improved disaster recovery capabilities, and enhanced negotiation power with cloud providers.

What is the role of abstraction layers in achieving serverless portability?

Abstraction layers act as intermediaries between your application code and the underlying serverless infrastructure. They provide a consistent API and configuration model, shielding developers from provider-specific details and simplifying cross-platform deployments.

How does infrastructure-as-code (IaC) facilitate portability?

IaC allows you to define your serverless infrastructure using code (e.g., Terraform, Pulumi). This code can then be deployed across multiple providers, ensuring consistency and simplifying the management of your serverless resources.

What are the key considerations for testing portable serverless applications?

Testing portable serverless applications requires a multi-faceted approach. Unit tests should verify individual function logic, integration tests should validate interactions with external services, and end-to-end tests should simulate real-world scenarios across different providers. Tools for mocking and simulating provider environments are also crucial.

How can I manage secrets and credentials securely in a multi-provider environment?

Securely managing secrets in a multi-provider environment involves using a secrets management service (e.g., AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager) and implementing a consistent approach to secret retrieval and injection into your serverless functions. Consider using environment variables and encrypting sensitive data.