Edge computing is revolutionizing how data is processed, dramatically impacting real-time applications. By bringing computation closer to the data source, edge computing significantly reduces latency, enabling faster responses and enhanced user experiences. This approach is particularly crucial in industries demanding near-instantaneous feedback, such as autonomous vehicles and industrial automation.

This exploration delves into the mechanisms behind edge computing’s latency reduction, examining the technical aspects, practical applications, and future trends in this rapidly evolving field.

Defining Edge Computing

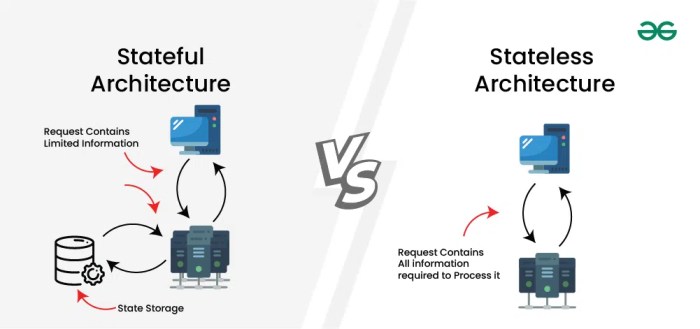

Edge computing represents a paradigm shift in data processing, moving the computational power closer to the source of data generation. This shift, driven by the explosive growth of data-intensive applications and the need for near real-time responses, distinguishes it from traditional cloud computing models. This closer proximity dramatically reduces latency, enabling faster and more responsive applications.

The key differentiator between edge computing and traditional cloud computing lies in the location of data processing. Cloud computing centers data processing in a centralized location, often far from the source of the data. In contrast, edge computing processes data at the network edge, where sensors, devices, and users reside. This decentralized approach optimizes responsiveness and reduces reliance on centralized infrastructure.

Edge Devices and Their Functionalities

Edge computing relies on a diverse array of devices, each designed to collect, process, and potentially act on data locally. These devices range from simple sensors to gateways and microcontrollers. The functionalities vary significantly depending on the device’s role in the system.

- Sensors: These devices collect raw data from the environment. Examples include temperature sensors, pressure sensors, and motion detectors. Their role is primarily data acquisition, providing the raw material for subsequent processing.

- Gateways: These act as intermediaries between sensors and the cloud or other processing units. They aggregate data from multiple sensors, perform basic processing (e.g., filtering, aggregation), and forward relevant data to the appropriate destinations. They are crucial in managing the volume and complexity of data flowing from the edge.

- Microcontrollers: These small, embedded computers handle specific tasks at the edge. They can control actuators, execute simple algorithms, and make real-time decisions based on the data they process. Their capabilities are well-suited for automation and control applications.
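The gateway role described above (filtering, then aggregating) can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `(sensor_id, value)` tuple format, the function name `aggregate_readings`, and the plausible-range bounds are all assumptions made for the example.

```python
from statistics import mean

def aggregate_readings(readings, low, high):
    """Drop out-of-range samples, then summarize what is left per sensor.

    readings: list of (sensor_id, value) tuples (hypothetical format).
    low, high: plausible value range; samples outside it are treated as noise.
    """
    by_sensor = {}
    for sensor_id, value in readings:
        if low <= value <= high:  # basic filtering step
            by_sensor.setdefault(sensor_id, []).append(value)
    # Forward one compact summary per sensor instead of every raw sample.
    return {
        sensor_id: {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
        for sensor_id, values in by_sensor.items()
    }

# Hypothetical readings; 999.0 stands in for a sensor glitch.
raw = [("t1", 21.0), ("t1", 22.0), ("t1", 999.0), ("t2", 18.0)]
summary = aggregate_readings(raw, low=-40.0, high=85.0)
print(summary)
```

The gateway forwards `summary` rather than `raw`, which is where the reduction in upstream data volume comes from.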

Industries Leveraging Edge Computing

Edge computing is revolutionizing various industries by enabling real-time data analysis and decision-making. Its ability to reduce latency and improve responsiveness is critical for applications demanding speed and efficiency.

- Manufacturing: Predictive maintenance, real-time quality control, and automated production processes are examples of where edge computing improves efficiency. By processing data locally, manufacturers can identify potential equipment failures before they occur and optimize production lines in real-time.

- Healthcare: Remote patient monitoring, real-time medical imaging analysis, and robotic surgery all benefit from the reduced latency offered by edge computing. The immediate access to data enables faster diagnoses and treatment decisions.

- Transportation: Self-driving cars, traffic optimization, and logistics management rely on the ability to process data locally to enable rapid responses. The reduced latency ensures safe and efficient operations.

Examples of Edge Devices and Their Applications

The table below illustrates the different types of edge devices, their typical locations, the data processing they handle, and the common use cases.

| Device Type | Location | Data Processing | Typical Use Cases |
|---|---|---|---|
| Temperature Sensor | Industrial Plant, Farm | Measures temperature; transmits readings | Monitoring environmental conditions, predictive maintenance |
| Network Gateway | Retail Store, Warehouse | Aggregates sensor data, filters noise | Real-time inventory tracking, security monitoring |
| Microcontroller | Smart Home, Automated Vehicle | Executes control algorithms, makes real-time decisions | Home automation, autonomous vehicle control |

Understanding Latency

Latency, in the context of network communication, refers to the time it takes for data to travel from a source to a destination. This delay, often measured in milliseconds (ms), impacts the perceived responsiveness and performance of applications, especially those requiring real-time interaction. Understanding latency is crucial for optimizing network performance and ensuring a smooth user experience.

Latency isn’t simply a single, static value. Instead, it’s a complex phenomenon influenced by a multitude of factors, from the physical distance between points to the processing power of intermediary devices. Analyzing these factors is key to identifying bottlenecks and implementing effective solutions.

Factors Contributing to High Latency

Several factors can contribute to increased latency in network communication. Understanding these factors allows for targeted optimization efforts.

- Network congestion: Heavy network traffic can lead to delays as data packets compete for transmission channels. This is a common issue, especially during peak hours or when multiple users access a shared network simultaneously.

- Distance and physical infrastructure: The physical distance between the source and destination plays a significant role in latency. Longer distances necessitate more time for data to travel, potentially impacting response times.

- Network protocols and routing: Different network protocols and routing algorithms can introduce varying degrees of latency. Efficient routing and protocol implementations minimize delays, while less optimized ones can significantly increase them.

- Processing time at intermediate nodes: Each device along the data path, including routers and servers, introduces processing time to handle and forward the data packets. Inefficient processing at these nodes can contribute to overall latency.

- Server load: High server load can result in longer processing times, impacting the time it takes to retrieve or send data. This can be a major source of latency in cloud-based systems.
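The factors above can be combined into a rough additive latency budget. The sketch below is a simplification under stated assumptions: the function name and every input value are hypothetical, and real networks do not decompose this cleanly. The one physical constant used is that light in optical fiber covers roughly 200 km per millisecond.

```python
FIBER_KM_PER_MS = 200.0  # light in optical fiber travels roughly 200 km per millisecond

def one_way_latency_ms(distance_km, hops, per_hop_ms, queue_ms, server_ms):
    """Rough additive model: propagation + forwarding + queuing + server time."""
    propagation = distance_km / FIBER_KM_PER_MS  # distance and physical infrastructure
    forwarding = hops * per_hop_ms               # processing at intermediate nodes
    return propagation + forwarding + queue_ms + server_ms

# Hypothetical inputs: a distant data center versus a nearby edge node.
cloud = one_way_latency_ms(distance_km=2000, hops=12, per_hop_ms=0.5,
                           queue_ms=5.0, server_ms=10.0)
edge = one_way_latency_ms(distance_km=2, hops=2, per_hop_ms=0.5,
                          queue_ms=0.5, server_ms=10.0)
print(f"cloud: {cloud:.2f} ms, edge: {edge:.2f} ms")
```

With these made-up numbers the server time is identical in both cases, so the difference comes from distance, hop count, and queuing.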

Impact of Latency on Real-Time Applications

High latency can significantly degrade the performance of real-time applications, leading to a poor user experience.

- Video conferencing: In video conferencing, high latency can cause noticeable delays in audio and video transmission, leading to frustrating pauses and disruptions. This makes real-time communication difficult and unpleasant.

- Online gaming: In online games, latency can manifest as noticeable lag, affecting the responsiveness of controls and the ability to react to events in real-time. High latency can lead to a frustrating and potentially unfair gameplay experience.

- Stock trading: In high-frequency trading, even small delays in data transmission can lead to missed opportunities and reduced profits. Minimizing latency is critical for maintaining a competitive edge in this domain.

Latency Comparison: Cloud vs. Edge

Cloud-based systems often experience higher latency compared to edge-based systems due to the greater distance data needs to travel. The implications are significant for real-time applications.

| Factor | Cloud-based System | Edge-based System |
|---|---|---|
| Data Transmission Distance | Potentially greater distance, traversing multiple networks and data centers | Shorter distance, reducing the number of hops and network segments |
| Processing Time | Processing occurs at a centralized location, potentially increasing delays due to server load and network congestion | Processing occurs closer to the user, reducing the impact of long-distance transmission and server congestion |
| Response Time | Higher latency due to the combined effects of distance and processing time at remote locations | Lower latency due to reduced distance and localized processing |

Impact of Network Topologies on Latency

Network topology significantly influences latency, impacting the performance of applications that rely on real-time data.

- Star topology: In a star topology, all devices connect to a central hub, creating a potential bottleneck. Data passing through this central point can experience higher latency compared to other topologies, particularly under heavy load.

- Mesh topology: In a mesh topology, each device connects to multiple other devices, creating redundancy. Because traffic can take a direct or alternate path instead of funneling through one central node, latency is lower in many scenarios.

- Ring topology: In a ring topology, data travels in a circular path. Latency can vary depending on the number of devices and the data flow patterns.
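One way to make the topology comparison concrete is to count hops between devices, since each hop adds forwarding delay. The sketch below builds three small hypothetical networks of six devices and computes the average device-to-device hop count with a breadth-first search. Hop count is only a proxy: it ignores congestion at a shared hub and differences in link speed.

```python
from collections import deque
from itertools import combinations

def hops_from(graph, start):
    """Breadth-first search: hop count from start to every reachable node."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist

def average_hops(graph, endpoints):
    """Mean hop count over all pairs of endpoint devices."""
    pairs = list(combinations(endpoints, 2))
    return sum(hops_from(graph, a)[b] for a, b in pairs) / len(pairs)

n = 6
devices = list(range(n))
# Adjacency lists for three illustrative layouts (all hypothetical).
star = {"hub": devices, **{d: ["hub"] for d in devices}}
ring = {d: [(d - 1) % n, (d + 1) % n] for d in devices}  # bidirectional ring
full_mesh = {d: [other for other in devices if other != d] for d in devices}

for name, graph in [("star", star), ("ring", ring), ("full mesh", full_mesh)]:
    print(name, average_hops(graph, devices))
```

In the star every device-to-device path crosses the hub, so the count is fixed at two hops, while the ring's average grows as devices are added.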

Edge Computing and Data Processing

Data processing at the edge is a critical component of edge computing’s effectiveness. It enables real-time analysis and decision-making by handling data close to its source, significantly reducing latency and improving responsiveness. This localized processing also reduces the volume of data that needs to be transmitted to centralized cloud servers, lowering bandwidth requirements and costs.

The immediate proximity of data processing to the data source allows for faster response times, particularly crucial in applications requiring near-instantaneous feedback. Localized processing can also improve security and privacy, because sensitive data remains within a more controlled environment, provided the edge devices themselves are properly secured.

Data Processing at the Edge

Data processing at the edge involves a variety of techniques designed to handle data streams efficiently and effectively. These techniques are tailored to specific application needs and often leverage specialized hardware and software. The key is to find the right balance between computational power, storage capacity, and energy efficiency.

Different Processing Techniques

Various techniques are used for data processing at the edge. These include:

- Real-time Analytics: Edge devices can process data as it arrives, enabling immediate actions and responses. This is vital for applications requiring immediate feedback, such as autonomous vehicles reacting to changing road conditions or industrial sensors monitoring equipment for anomalies.

- Machine Learning Inference: Edge devices can run pre-trained machine learning models to make predictions or classifications on the data. This eliminates the need to send data to the cloud for processing, ensuring faster responses and preserving privacy.

- Data Aggregation and Preprocessing: Edge devices can collect and prepare data for later analysis. This involves filtering, transforming, and compressing data, reducing the amount of data that needs to be transmitted to the cloud.

- Data Transformation and Enrichment: Edge devices can enhance data by incorporating additional information or contextual elements. This enriched data can then be used for more sophisticated analysis, such as identifying patterns or anomalies.
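As an illustration of real-time analytics on a constrained device, the sketch below flags sensor readings that deviate sharply from a rolling window of recent values. The rolling z-score rule is one simple technique chosen for the example, not a method prescribed here; the class name, window size, threshold, and sample stream are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev

class RollingAnomalyDetector:
    """Flag values far from the mean of a short window of recent readings."""

    def __init__(self, window=20, threshold=3.0, min_samples=5):
        self.window = deque(maxlen=window)  # bounded memory suits small devices
        self.threshold = threshold          # allowed deviation, in standard deviations
        self.min_samples = min_samples      # warm-up period before judging anything

    def update(self, value):
        """Process one reading as it arrives; return True if it looks anomalous."""
        is_anomaly = False
        if len(self.window) >= self.min_samples:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            if sigma > 0 and abs(value - mu) > self.threshold * sigma:
                is_anomaly = True
        if not is_anomaly:
            self.window.append(value)  # keep outliers out of the baseline
        return is_anomaly

detector = RollingAnomalyDetector(window=10, threshold=3.0)
stream = [20.0, 20.1, 19.9, 20.2, 20.0, 19.8, 35.0, 20.1]  # hypothetical readings
flags = [detector.update(v) for v in stream]
print(flags)
```

Each reading is judged the moment it arrives and only the flagged events need to leave the device, which combines the real-time analytics and preprocessing techniques listed above.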

Benefits of Processing Data Closer to the Source

Processing data closer to its source yields several advantages:

- Reduced Latency: Data processing closer to the source minimizes the time it takes to process and respond to data, leading to faster reaction times.

- Improved Performance: Real-time analysis and decision-making are facilitated, leading to more efficient operations and enhanced responsiveness.

- Enhanced Security and Privacy: Sensitive data remains within a controlled environment, which can reduce exposure to breaches in transit and help with compliance with data protection regulations.

- Lower Bandwidth Costs: Transmission of data to cloud servers is reduced, decreasing network costs and improving efficiency.

Examples of Data Processing Tasks at the Edge

Numerous applications utilize edge computing for data processing. Examples include:

- Industrial Automation: Sensors in manufacturing plants can detect equipment malfunctions, trigger maintenance, and optimize production processes in real-time.

- Autonomous Vehicles: Real-time processing of sensor data enables autonomous vehicles to navigate safely and make split-second decisions.

- Smart Cities: Traffic management systems can use edge devices to monitor traffic flow and adjust traffic signals dynamically.

- Retail: Edge devices in retail environments can analyze customer behavior, personalize recommendations, and optimize inventory management.

Cloud vs. Edge Computing Data Processing Comparison

| Feature | Cloud Computing | Edge Computing |
|---|---|---|
| Data Processing Speed | Slower, depending on network latency and cloud infrastructure load | Faster, due to processing near the data source |
| Latency | Higher latency due to data transmission | Lower latency, enabling real-time processing and responses |
| Bandwidth Requirements | Higher, as data needs to be transmitted to the cloud | Lower, as processing happens closer to the source |
| Cost | Potentially higher due to data transmission costs | Potentially lower due to reduced bandwidth usage |
| Scalability | High, cloud infrastructure can be scaled easily | Scalability depends on the number of edge devices and their processing capabilities |

Reducing Latency Through Edge Computing

Edge computing significantly reduces latency compared to traditional cloud-based architectures by bringing computing power closer to the data source. This proximity dramatically shortens the time required for data processing and transmission, leading to faster response times and enhanced user experiences. The benefits are particularly pronounced in applications requiring near real-time processing and responsiveness.

The fundamental principle behind edge computing’s latency reduction is its proximity to the data source. Data processing occurs at the edge, minimizing the need to transmit vast quantities of data to a centralized cloud server. This localized processing drastically reduces transmission time and, consequently, the overall latency. This is a critical advantage in scenarios demanding low-latency operations, such as autonomous vehicles, industrial automation, and real-time video streaming.

Latency Reduction Compared to Cloud Computing

Edge computing dramatically reduces latency compared to cloud computing by localizing data processing. Instead of transmitting data across potentially vast distances to a central cloud server, edge devices process data immediately, minimizing the transmission time. This results in considerably faster response times. For example, a sensor monitoring a manufacturing process can send data directly to an edge device for analysis, rather than transmitting it to a distant cloud server. This localized processing allows for immediate responses, crucial for real-time control and decision-making in the manufacturing process.

Benefits for Low-Latency Applications

Edge computing offers substantial advantages for applications demanding low latency. These applications often require immediate responses, and edge computing’s localized processing ensures minimal delays. Real-time video streaming, for example, benefits immensely from edge computing, as the processing of video streams occurs near the viewer, reducing buffering and improving playback quality. Similarly, autonomous vehicles require immediate processing of sensor data to maintain safe operation.

Edge computing’s ability to process this data rapidly enables near real-time decision-making, ensuring responsiveness and safety.

Strategies for Optimizing Latency in Edge Computing

Several strategies can optimize latency in edge computing systems. Efficient data compression reduces the volume of data sent to the cloud, which shortens transmission time. Selecting edge devices with sufficient processing power and bandwidth capacity directly influences latency. Communication protocols designed for low-latency transfer between edge devices and the cloud help as well. Finally, deploying edge devices close to data sources further reduces the latency associated with data transmission.

Real-Time Applications Benefitting from Edge Computing

Edge computing is ideal for various real-time applications requiring immediate data processing and responses. Autonomous vehicles rely heavily on edge computing to process sensor data in real-time, enabling safe navigation and decision-making. Similarly, industrial automation systems leverage edge computing for real-time control and monitoring of equipment, enhancing efficiency and preventing downtime. Furthermore, real-time video streaming platforms benefit from edge computing’s reduced latency, providing a smooth and responsive viewing experience for users.

Finally, remote surgery and control systems rely on the ability to process critical data locally and immediately, which is a key advantage of edge computing.

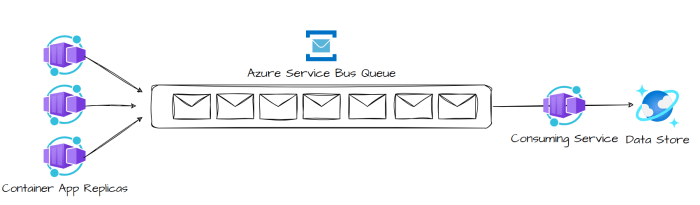

Minimizing Latency When Transferring Data to the Cloud

Efficient techniques can minimize latency when transferring data to the cloud from edge devices. Prioritizing data compression reduces the size of the data packets needing transmission, thereby decreasing transmission time. Implementing low-latency communication protocols specifically designed for transferring data between edge devices and the cloud can significantly reduce transmission time. Using a direct connection to the cloud, bypassing intermediaries, also helps in reducing latency.

Finally, optimizing the routing of data to the cloud server minimizes delays by ensuring efficient data paths.
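The effect of compression on transmission time can be estimated with the standard library alone. The sketch below compresses a batch of hypothetical JSON sensor readings with `zlib` and compares how long each version would occupy a link. The payload shape and the 2 Mbps uplink are assumptions, and the estimate ignores the CPU time spent compressing, which matters on small devices.

```python
import json
import zlib

def transfer_time_ms(num_bytes, bandwidth_mbps):
    """Time to push a payload onto a link of the given bandwidth."""
    return num_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000

# Hypothetical batch of repetitive sensor readings.
readings = [
    {"sensor": "t1", "seq": i, "temp_c": 20.0 + (i % 5) * 0.1}
    for i in range(1000)
]
raw = json.dumps(readings).encode("utf-8")
packed = zlib.compress(raw, level=6)  # lossless DEFLATE compression

# Lossless means the receiver recovers exactly what was sent.
restored = json.loads(zlib.decompress(packed))

uplink_mbps = 2  # assumed uplink bandwidth
print(f"raw:    {len(raw)} bytes, {transfer_time_ms(len(raw), uplink_mbps):.1f} ms")
print(f"packed: {len(packed)} bytes, {transfer_time_ms(len(packed), uplink_mbps):.1f} ms")
```

Repetitive telemetry like this tends to compress well; the actual ratio depends on the data, so it is worth measuring on real payloads before relying on it.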

Network Architecture for Low Latency

Optimizing network architecture is crucial for minimizing latency in edge computing deployments. Effective network design directly impacts the responsiveness and performance of applications running at the edge. Careful consideration of various topologies and protocols is essential to achieve the desired level of low latency.

Network Topologies for Edge Computing

Different network topologies offer varying degrees of efficiency and scalability. Understanding the trade-offs associated with each topology is vital for selecting the most appropriate architecture for a specific edge computing application. Choosing the right topology can significantly impact latency and overall performance.

- Star Topology: This topology centers around a central hub, with edge devices connected to it. A central switch or router manages all communication between devices. This structure is relatively straightforward to manage, making it suitable for smaller deployments. However, a single point of failure exists in the central hub, which can lead to significant disruption if the hub malfunctions. Centralized management can be advantageous for monitoring and troubleshooting. For instance, in a small retail store deployment for real-time inventory updates, a star topology might suffice, but it may not be suitable for large-scale deployments with complex dependencies.

- Mesh Topology: In a mesh topology, each edge device is connected to multiple other devices. This redundant connectivity provides high fault tolerance and robustness. Multiple paths exist for data transmission, making the system less susceptible to disruptions. However, the complexity of managing connections and the potential for increased bandwidth usage are significant drawbacks. This topology is often preferred in applications where reliability and fault tolerance are paramount, such as critical infrastructure monitoring or industrial control systems.

- Ring Topology: In a ring topology, devices are connected in a closed loop. Data travels in a single direction around the ring. This topology is relatively simple to implement, and data transmission is predictable. However, a single failure in the ring can disrupt communication across the entire network. It might be suitable for simple applications where the impact of a single failure is not catastrophic, such as a local control system for a factory floor.

- Tree Topology: A tree topology combines elements of a star and bus topology. A central hub connects to multiple branches, each branch containing more devices. This structure allows for scalability and hierarchical organization, enabling the deployment to expand gradually. It offers a balance between simplicity and scalability, making it suitable for various edge computing scenarios, including sensor networks or distributed data collection systems.
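The fault-tolerance claims in the list above can be checked mechanically on small examples. The sketch below removes one node at a time and tests whether the remaining nodes can still reach each other. The three four-node networks and the function names are hypothetical, and the check models node failures only, not link failures.

```python
def reachable(graph, start, failed):
    """Nodes reachable from start when the `failed` node is down."""
    seen = {start}
    stack = [start]
    while stack:
        node = stack.pop()
        for neighbor in graph[node]:
            if neighbor != failed and neighbor not in seen:
                seen.add(neighbor)
                stack.append(neighbor)
    return seen

def survives_any_single_failure(graph):
    """True if the survivors stay reachable whichever single node fails.

    Reachability is tested from one surviving node. That is exact for
    bidirectional links and a necessary condition for one-way links.
    """
    for failed in graph:
        survivors = [node for node in graph if node != failed]
        if len(reachable(graph, survivors[0], failed)) != len(survivors):
            return False
    return True

star = {"hub": ["a", "b", "c"], "a": ["hub"], "b": ["hub"], "c": ["hub"]}
mesh = {"a": ["b", "c", "d"], "b": ["a", "c", "d"],
        "c": ["a", "b", "d"], "d": ["a", "b", "c"]}
one_way_ring = {"a": ["b"], "b": ["c"], "c": ["d"], "d": ["a"]}

for name, graph in [("star", star), ("mesh", mesh), ("one-way ring", one_way_ring)]:
    print(name, survives_any_single_failure(graph))
```

Only the mesh passes: the star fails when the hub goes down, and the one-way ring fails when any device does, matching the trade-offs described above.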

Network Design Impact on Latency

Several factors in network design directly influence latency. Proximity of devices, network bandwidth, and the efficiency of routing protocols are key considerations. Choosing the right protocol and optimizing network configuration can significantly improve latency. For example, the distance between edge devices and the data center greatly affects latency, and efficient routing protocols are crucial for minimal delay in data transmission.

Comparison of Network Topologies for Edge Communication

Protocol choice depends on factors like data rate, reliability, and security requirements, and it is made within the constraints of the chosen topology. The table below summarizes the trade-offs of the four topologies described above.

| Architecture Type | Advantages | Disadvantages | Use Cases |
|---|---|---|---|
| Star | Simple to manage, centralized monitoring | Single point of failure | Small deployments, real-time inventory updates |
| Mesh | High fault tolerance, multiple paths | Complex to manage, increased bandwidth | Critical infrastructure, industrial control systems |
| Ring | Simple implementation, predictable transmission | Single point of failure | Simple applications, local control systems |
| Tree | Scalability, hierarchical organization | Complexity increases with expansion | Sensor networks, distributed data collection |

Edge Computing and Real-Time Applications

Edge computing’s ability to process data closer to its source significantly impacts real-time applications. This proximity reduces latency, enabling faster responses and improved user experiences. By offloading processing tasks from centralized servers, edge computing enhances the responsiveness and reliability of applications requiring immediate feedback, such as those in industrial automation, autonomous vehicles, and video conferencing.

Real-Time Application Examples

Edge computing dramatically improves the performance of real-time applications by processing data closer to the source. This reduces the latency associated with transmitting data across vast networks to centralized servers, enabling faster responses and more reliable operation. This is crucial for applications demanding immediate feedback.

Impact on Real-Time Application Performance

Edge computing significantly enhances the performance of real-time applications. By processing data locally, it reduces the time required for data transmission, minimizing latency and improving responsiveness. This immediate processing and feedback loop is vital for applications needing near-instantaneous reactions, like autonomous vehicles or industrial control systems. The result is a more stable and reliable application experience.

Video Conferencing

Real-time video conferencing benefits significantly from edge computing. Processing video and audio streams locally at the edge reduces latency, enabling smooth, uninterrupted communication. This is particularly important for geographically dispersed users, where significant network delays can cause buffering or disconnections. By offloading the heavy processing from the central server to the edge, edge computing ensures a seamless user experience.

Autonomous Vehicles

Edge computing plays a critical role in autonomous vehicle operations. Processing sensor data locally at the edge allows for rapid decision-making, enabling vehicles to react swiftly to changing conditions. This is essential for safety and efficient navigation. By reducing latency, edge computing empowers autonomous vehicles to make real-time adjustments and maintain safe operations in diverse environments.

Industrial Automation

In industrial automation, edge computing optimizes real-time control systems. Processing sensor data from machines and equipment at the edge allows for immediate adjustments to processes, enabling faster responses to fluctuations and ensuring optimal performance. The reduction in latency through edge computing enhances efficiency and safety in industrial settings, preventing costly downtime and equipment failures.

Latency Requirements for Real-Time Applications

The table below lists indicative latency targets for several real-time applications. Exact requirements vary by deployment, but the ranges show why these workloads benefit from processing at the edge.

| Application | Latency Requirement (milliseconds) | Impact of High Latency |
|---|---|---|
| Video Conferencing | 20-50 | Lagging audio/video, poor user experience |
| Autonomous Vehicles | 10-20 | Delayed responses, safety risks |
| Industrial Automation | 5-15 | Process inefficiencies, equipment failures |
| Real-time Trading | 1-5 | Missed opportunities, financial losses |
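Targets like these are only useful if measured latency is checked against them. The sketch below takes the upper bound of each range in the table above and tests whether the 95th percentile of a set of measurements stays within it. The choice of the 95th percentile, the dictionary keys, and the sample measurements are assumptions made for the example.

```python
import math

# Upper bounds in milliseconds, taken from the table above.
LATENCY_BUDGET_MS = {
    "video_conferencing": 50,
    "autonomous_vehicles": 20,
    "industrial_automation": 15,
    "real_time_trading": 5,
}

def p95(samples):
    """Nearest-rank 95th percentile of a list of latency samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return ordered[rank - 1]

def meets_budget(application, samples_ms):
    """True if the 95th percentile latency is within the application's bound."""
    return p95(samples_ms) <= LATENCY_BUDGET_MS[application]

# Hypothetical measurements in ms; one slow outlier among mostly fast responses.
measured = [8, 9, 11, 10, 12, 9, 30, 10, 11, 9]
print(meets_budget("video_conferencing", measured))
print(meets_budget("autonomous_vehicles", measured))
```

Checking a high percentile rather than the average is a deliberate choice here: a real-time system is judged by its slow responses, and the single 30 ms outlier is what pushes this sample over the tighter budget.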

Security Considerations in Edge Computing

Edge computing, while offering numerous advantages, presents unique security challenges. The distributed nature of edge devices, often deployed in remote locations, and the processing of sensitive data closer to the source necessitates robust security measures to protect against threats. Ensuring data integrity, confidentiality, and availability throughout the edge computing lifecycle is paramount.

Security Challenges Specific to Edge Computing

Edge devices are frequently resource-constrained, which can impact the performance of security measures. This constraint necessitates a careful selection of security protocols and algorithms to balance security needs with the performance limitations of the devices. Furthermore, the increased attack surface due to the distributed nature of edge computing requires comprehensive security strategies. The diversity of devices, operating systems, and applications deployed at the edge also presents a significant challenge, requiring a flexible and adaptable security framework.

Additionally, the potential for unauthorized access to sensitive data by compromised edge devices poses a considerable threat.

Security Implications of Data Processing at the Edge

Data processing at the edge often involves sensitive information, making the security of this data critical. Unauthorized access or modification of this data can have severe consequences, including financial losses, reputational damage, and potential legal repercussions. Furthermore, the increased volume of data processed at the edge necessitates efficient and scalable security mechanisms. The location of data processing also raises privacy concerns, which need careful consideration to ensure compliance with relevant regulations.

Security breaches at the edge can potentially impact the entire system, requiring comprehensive incident response plans.

Measures to Ensure Data Security in Edge Computing

A multi-layered security approach is essential for safeguarding data in edge computing environments. This includes strong access controls, regular security audits, and robust encryption protocols. Implementing intrusion detection and prevention systems at the edge can proactively identify and mitigate potential threats. Regular software updates and patches are critical to address vulnerabilities and maintain the integrity of edge devices.

Data encryption, both in transit and at rest, is a fundamental security measure. Furthermore, robust authentication mechanisms, such as multi-factor authentication, are vital for verifying the identity of users and devices.

Security Protocols and Encryption Techniques Used in Edge Environments

A variety of security protocols and encryption techniques are employed in edge computing environments. These include Transport Layer Security (TLS) for secure communication channels, and various encryption algorithms like AES (Advanced Encryption Standard) for data protection. Hashing algorithms are used for data integrity checks, ensuring that data hasn’t been tampered with. Furthermore, secure boot processes are used to ensure the integrity of the operating systems on edge devices, preventing malicious code from being loaded.

The specific protocols and techniques used will vary based on the specific application and security requirements.
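As a small illustration of an integrity check, the sketch below tags each reading with an HMAC-SHA256 value so the receiver can detect tampering. A keyed hash is used here rather than a plain hash because a plain hash can be recomputed by whoever alters the data. The key, the payload format, and the function names are hypothetical, and this complements transport encryption such as TLS rather than replacing it.

```python
import hashlib
import hmac
import json

def sign(payload: dict, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).hexdigest()

def verify(payload: dict, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign(payload, key), tag)

# Hypothetical key for demonstration only; real keys come from secure provisioning.
key = b"demo-key-do-not-use-in-production"

reading = {"sensor": "t1", "temp_c": 21.5}
tag = sign(reading, key)

tampered = {"sensor": "t1", "temp_c": 99.9}
print(verify(reading, tag, key))   # unchanged payload
print(verify(tampered, tag, key))  # modified payload
```

HMAC-SHA256 is cheap enough for most microcontroller-class hardware, which matters given the resource constraints discussed earlier in this section.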

Comparison of Security Measures for Cloud and Edge Computing

| Feature | Cloud Computing | Edge Computing |
|---|---|---|
| Data Storage | Centralized, often in geographically dispersed data centers. | Distributed, often on devices at the network edge. |
| Security Infrastructure | Typically managed by cloud providers with centralized security policies. | Often managed by individual organizations or device manufacturers with varying security configurations. |
| Access Control | Based on cloud provider’s policies and access management systems. | Requires granular access control mechanisms on individual devices. |
| Data Encryption | Often managed by the cloud provider. | Requires encryption at rest and in transit on edge devices. |
| Vulnerability Management | Cloud providers address vulnerabilities with updates and patches. | Requires proactive management and patching of vulnerabilities on individual devices. |

The table above highlights the key differences in security measures between cloud and edge computing environments. The distributed nature of edge computing necessitates a different approach to security, requiring greater attention to the individual devices and their security configurations.

Data Transfer and Storage

Efficient data transfer and storage are critical components of edge computing’s success. By processing data closer to the source, edge systems reduce the volume of data that needs to travel to the cloud, thereby minimizing latency and improving responsiveness. This localized processing also enables real-time insights and actions, a significant advantage in applications requiring immediate feedback.

The strategies for optimizing data transfer and the choice of storage options greatly influence the performance and cost-effectiveness of edge deployments. Different industries require different approaches to balance data integrity, security, and performance requirements. This section will explore these nuances, emphasizing the trade-offs between edge and cloud storage and showcasing successful implementation strategies.

Data Transfer from Edge Devices to the Cloud

The process of transferring data from edge devices to the cloud often involves intermediary steps and protocols. Direct connections between edge devices and cloud services are not always feasible or optimal. Network configurations, security policies, and data volumes can significantly impact transfer speeds. Data compression and encryption techniques are crucial for efficient and secure data transmission.

Optimizing Data Transfer for Reduced Latency

Several strategies can optimize data transfer for reduced latency. Compression, whether lossless or lossy, can significantly reduce the size of the data being sent. Using dedicated and optimized network paths for data transfer is another key strategy. Additionally, implementing caching mechanisms at the edge can reduce the amount of data that needs to be exchanged with the cloud. The specific strategies employed depend heavily on the type of data being transferred and the desired level of performance.
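A caching mechanism at the edge can be as simple as remembering recent cloud responses for a fixed time. The sketch below is a minimal time-to-live cache. The class name, the `fetch_from_cloud` stand-in, and the 60-second lifetime are assumptions, and a production cache would also bound its size.

```python
import time

class EdgeCache:
    """Serve repeated lookups locally until an entry's time-to-live expires."""

    def __init__(self, fetch, ttl_seconds, clock=time.monotonic):
        self.fetch = fetch        # callable that performs the slow remote lookup
        self.ttl = ttl_seconds
        self.clock = clock        # injectable so expiry can be tested
        self.entries = {}         # key -> (expiry time, value)
        self.cloud_calls = 0

    def get(self, key):
        now = self.clock()
        hit = self.entries.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]         # fresh entry: no network round trip
        self.cloud_calls += 1
        value = self.fetch(key)
        self.entries[key] = (now + self.ttl, value)
        return value

def fetch_from_cloud(key):
    """Hypothetical stand-in for a slow request to a cloud service."""
    return f"config-for-{key}"

cache = EdgeCache(fetch_from_cloud, ttl_seconds=60)
cache.get("line-3")
cache.get("line-3")  # answered from the local cache
print(cache.cloud_calls)
```

The time-to-live sets the trade-off: a longer value saves more round trips but serves staler data.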

Different Storage Options for Edge Data

Edge devices can employ various storage solutions, each with its own set of advantages and disadvantages. Local storage solutions, such as SSDs or hard drives, offer fast access to data within the edge device. Cloud storage, on the other hand, offers scalability and redundancy, but introduces latency associated with cloud access. Specialized edge storage solutions often combine local storage with cloud backup or synchronization capabilities.
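One way to picture the combination of local storage with cloud synchronization is a store-and-forward buffer: records are kept on the device and forwarded whenever the uplink is available. The sketch below is a simplified in-memory version under that assumption. The `EdgeBuffer` class and the `upload` callback are hypothetical names, and a real deployment would typically persist the buffer to disk.

```python
from collections import deque
from typing import Callable


class EdgeBuffer:
    """Keep recent records locally and forward them to the cloud when possible."""

    def __init__(self, capacity: int) -> None:
        # A bounded deque drops the oldest record once local capacity is reached.
        self._pending = deque(maxlen=capacity)

    def store(self, record: dict) -> None:
        """Save a record locally; this never blocks on the network."""
        self._pending.append(record)

    def sync(self, upload: Callable[[dict], bool]) -> int:
        """Upload pending records in order and stop at the first failure."""
        sent = 0
        while self._pending:
            if not upload(self._pending[0]):
                break  # Keep the record locally and retry on the next sync.
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)


# Usage: capacity 3, so only the three newest of five records are retained.
buffer = EdgeBuffer(capacity=3)
for seq in range(5):
    buffer.store({"seq": seq})

# Hypothetical uplink that starts failing at record 4.
sent_count = buffer.sync(lambda record: record["seq"] < 4)
print(sent_count, len(buffer))
```

The bounded capacity reflects the limited storage of many edge devices: when the uplink is down for too long, the oldest data is discarded first. Whether that is acceptable depends on the application.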

Trade-offs Between Edge Storage and Cloud Storage

Choosing between edge and cloud storage involves a careful evaluation of the trade-offs. Edge storage provides faster access and reduced latency but may be limited in capacity and requires local management. Cloud storage offers greater scalability, redundancy, and potentially lower upfront costs, but introduces latency and reliance on network connectivity. The optimal solution depends on the specific application requirements, such as data volume, access frequency, and security needs.

Examples of Optimized Data Transfer and Storage in Specific Industries

Several industries have successfully implemented optimized data transfer and storage strategies. In the manufacturing sector, real-time monitoring of production lines often benefits from edge storage and local processing to avoid delays in responding to anomalies. Similarly, in the retail industry, edge computing can improve the responsiveness of point-of-sale systems and personalized customer experiences. The financial industry leverages edge computing for high-frequency trading, where the milliseconds saved in data transfer can significantly impact profits.

Scalability and Management

Scaling edge computing infrastructure and managing the diverse array of edge devices require careful planning and execution. Successfully deploying and maintaining edge deployments hinges on selecting appropriate scaling strategies, robust management tools, and effective monitoring procedures. This involves understanding the specific needs of various use cases and choosing solutions that balance performance, cost-effectiveness, and security. Effective edge computing deployments rely on a well-defined strategy for scaling the infrastructure to accommodate increasing data volumes and processing demands.

The choice of management tools and monitoring methodologies is crucial for maintaining optimal performance and security of the edge devices.

Scaling Strategies for Edge Computing

Different scaling strategies cater to various use cases. The optimal strategy depends on factors like data volume, processing requirements, and geographical distribution.

- Horizontal Scaling: This involves adding more edge devices to the network. It is a straightforward approach, particularly suitable for scenarios where processing demand can be distributed across multiple devices. For example, in a smart city application with distributed sensors, adding more sensor nodes allows for greater coverage and increased data collection.

- Vertical Scaling: This strategy involves upgrading the capabilities of existing edge devices. It can be more cost-effective in the short term, but it might not be suitable for rapidly growing data volumes. An example would be upgrading the processing power of a video surveillance camera to handle higher resolution streams.

- Hybrid Scaling: This combines horizontal and vertical scaling to address specific needs. For example, a factory automation system might employ more sensor nodes (horizontal scaling) while upgrading the processing capabilities of the central edge gateway (vertical scaling) to manage the increasing data flow.
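The difference between the horizontal and vertical strategies can be made concrete with some back-of-the-envelope arithmetic. The sketch below assumes load is measured in events per second and can be spread evenly across nodes, which is a simplification. The function names and the `headroom` parameter (the fraction of capacity kept in reserve) are illustrative assumptions.

```python
import math


def nodes_needed(total_events_per_s: float, per_node_events_per_s: float,
                 headroom: float = 0.2) -> int:
    """Horizontal scaling: identical nodes required to cover the load."""
    usable = per_node_events_per_s * (1.0 - headroom)
    return math.ceil(total_events_per_s / usable)


def per_node_capacity_needed(total_events_per_s: float, node_count: int,
                             headroom: float = 0.2) -> float:
    """Vertical scaling: capacity each of a fixed number of nodes must reach."""
    return total_events_per_s / node_count / (1.0 - headroom)


# 10,000 events/s on nodes that each handle 500 events/s, keeping 25% in reserve.
print(nodes_needed(10_000, 500, headroom=0.25))
# 3,000 events/s on a fixed fleet of 4 nodes, keeping 25% in reserve.
print(per_node_capacity_needed(3_000, 4, headroom=0.25))
```

A hybrid plan would use both calculations: fix the node count where adding hardware is impractical, and add nodes where the required per-node capacity exceeds what an upgrade can deliver.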

Managing Edge Devices

Effective management of edge devices is crucial for ensuring smooth operation and security. The diverse range of devices, their varying operating systems, and potential remote locations present unique challenges.

- Device heterogeneity: Managing a variety of devices with differing operating systems, hardware specifications, and communication protocols demands a flexible management system. A centralized management platform with device-specific configurations is essential.

- Remote access and maintenance: Ensuring remote access for configuration, updates, and troubleshooting is essential. Secure remote access protocols are critical to avoid vulnerabilities.

- Security considerations: Protecting edge devices from cyber threats is paramount. Implementing strong security measures, such as secure access protocols and regular security updates, is essential to prevent unauthorized access and data breaches.

Management Tools and Techniques

Several tools and techniques facilitate effective management of edge deployments.

- Centralized management platforms: These platforms offer a unified interface for managing multiple edge devices, facilitating configurations, updates, and monitoring. A well-designed platform provides insights into device health, performance, and security status.

- Software-defined networking (SDN): This allows for dynamic configuration and management of network resources, enabling greater flexibility and scalability in edge deployments. SDN can automate tasks like routing and resource allocation.

- Remote diagnostics and troubleshooting: Tools for remote diagnostics and troubleshooting are critical for quickly identifying and resolving issues with edge devices. Real-time monitoring of device performance is a key component.

Monitoring and Maintaining Edge Infrastructure

Monitoring and maintaining edge infrastructure ensures consistent performance and reliability. A comprehensive monitoring system is necessary for tracking device health, network performance, and security.

- Real-time monitoring: Continuous monitoring of edge device performance, network traffic, and data processing times is crucial. Alerts and dashboards provide immediate notification of potential issues.

- Automated maintenance: Automated procedures for software updates, security patching, and performance optimization help maintain operational efficiency.

- Regular audits: Regular audits of edge devices and infrastructure are necessary to identify vulnerabilities and ensure ongoing compliance with security standards. This ensures a consistent level of security and prevents potential threats.
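The real-time monitoring idea above can be sketched as a simple threshold check over the latest status reported by each device. The `DeviceStatus` fields, the alert labels, and the default thresholds are assumptions chosen for illustration. Production systems usually rely on a dedicated monitoring stack rather than hand-written checks.

```python
from dataclasses import dataclass


@dataclass
class DeviceStatus:
    device_id: str
    last_heartbeat: float  # Unix timestamp of the most recent heartbeat
    cpu_percent: float     # Recent CPU utilization
    processing_ms: float   # Recent average time to process one message


def find_alerts(statuses: list[DeviceStatus], now: float,
                heartbeat_timeout_s: float = 30.0,
                cpu_limit: float = 90.0,
                processing_limit_ms: float = 50.0) -> list[tuple[str, str]]:
    """Return (device_id, reason) pairs for devices that breach a threshold."""
    alerts = []
    for status in statuses:
        if now - status.last_heartbeat > heartbeat_timeout_s:
            # Metrics from a silent device are stale, so report only "offline".
            alerts.append((status.device_id, "offline"))
            continue
        if status.cpu_percent > cpu_limit:
            alerts.append((status.device_id, "high_cpu"))
        if status.processing_ms > processing_limit_ms:
            alerts.append((status.device_id, "slow_processing"))
    return alerts


# Hypothetical fleet snapshot taken at time 1000.0.
fleet = [
    DeviceStatus("gw-1", last_heartbeat=1000.0, cpu_percent=35.0, processing_ms=12.0),
    DeviceStatus("cam-7", last_heartbeat=940.0, cpu_percent=20.0, processing_ms=10.0),
    DeviceStatus("plc-3", last_heartbeat=995.0, cpu_percent=97.0, processing_ms=80.0),
]
alerts = find_alerts(fleet, now=1000.0)
print(alerts)
```

In practice `now` would come from `time.time()` and the resulting alerts would feed a dashboard or notification channel.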

Scaling Strategies Table

| Scaling Strategy | Description | Suitability for Use Cases |
|---|---|---|
| Horizontal Scaling | Adding more devices | Distributed sensor networks, data collection, parallel processing |
| Vertical Scaling | Upgrading existing devices | Specific processing demands, high-resolution data |
| Hybrid Scaling | Combining horizontal and vertical | Complex applications requiring both increased capacity and processing power |

Case Studies of Edge Computing

Edge computing is transforming industries by bringing data processing closer to the source, significantly reducing latency. This proximity enables real-time responses and improved user experiences, leading to increased efficiency and productivity. Analyzing real-world case studies provides valuable insights into the practical applications and benefits of edge computing. Real-world implementations of edge computing showcase the effectiveness of this distributed computing architecture.

These case studies demonstrate how edge computing’s proximity to data sources reduces latency, leading to improved performance and enabling new business opportunities. The specific challenges faced and how they were overcome are crucial learning points for organizations considering implementing edge computing solutions.

Autonomous Vehicle Navigation

Autonomous vehicles rely heavily on real-time data processing for safe and efficient navigation. Edge computing plays a vital role in processing sensor data from cameras, radar, and lidar, enabling rapid decision-making. By bringing the processing closer to the vehicles, latency is significantly reduced, allowing the vehicle to react swiftly to changing road conditions and obstacles. This localized processing reduces the time required for data transmission to a central processing unit, which is essential for maintaining safety and responsiveness.

The improved responsiveness leads to enhanced safety features and improved performance. Challenges in implementing edge computing in autonomous vehicles include ensuring consistent performance across various environments and managing the sheer volume of data generated by numerous sensors. These challenges were overcome by using robust hardware, efficient algorithms, and cloud-based backup systems. The impact of edge computing on business outcomes is evident in the enhanced safety and reliability of autonomous vehicle systems, driving increased adoption and investor confidence.

Autonomous vehicle companies can achieve significant business outcomes, including cost savings from reduced accident rates and improved customer experience.

Smart Manufacturing

In the manufacturing sector, real-time monitoring and control of machinery are essential for optimizing production processes and preventing equipment failures. Edge computing facilitates the rapid analysis of sensor data from manufacturing equipment, enabling prompt responses to issues and predictive maintenance. Edge devices deployed throughout the factory collect and process data, reducing latency in detecting and responding to anomalies. This real-time data analysis allows for quicker adjustments to manufacturing processes, minimizing downtime and maximizing efficiency.

Challenges faced in this implementation include integrating diverse hardware platforms and ensuring data security. These challenges were addressed through standardized communication protocols and robust security measures. The impact on business outcomes is evident in reduced downtime, increased productivity, and lower maintenance costs. The efficiency gains translate directly into cost savings and increased revenue.

Smart Cities

Edge computing enables the development of intelligent city infrastructure by bringing data processing closer to the source, facilitating real-time responses to city events and needs. This includes traffic management, emergency response systems, and public safety applications. The processing of sensor data from traffic cameras, streetlights, and other infrastructure elements occurs locally, reducing latency. This allows for quicker responses to traffic congestion, accidents, and other incidents, improving public safety and the overall efficiency of city operations.

The challenge was ensuring the seamless integration of various sensors and data sources. Solutions involved developing standardized data formats and open communication platforms. The impact on business outcomes includes enhanced public safety, reduced congestion, and improved resource management. By implementing edge computing, cities can improve their response times to emergencies and enhance the quality of life for their citizens.

“The implementation of edge computing has significantly reduced latency in our autonomous vehicle navigation system, allowing for a more responsive and safer driving experience. This has been instrumental in building customer trust and confidence in our technology.”

[Representative Name], [Company Name]

Future Trends in Edge Computing

The landscape of edge computing is constantly evolving, driven by the increasing demand for real-time data processing and the need to reduce latency in diverse applications. This evolution is fueled by advancements in hardware, software, and network technologies. This section explores the emerging trends in edge computing, focusing on their impact on latency reduction, and highlighting the technologies poised to shape its future.

Emerging Technologies Enhancing Edge Computing Capabilities

Edge computing’s potential is amplified by a confluence of emerging technologies. These technologies enable more sophisticated data processing, improved security, and enhanced network performance at the edge. The advancements in these areas are key to the future of edge computing and its ability to meet evolving needs.

- Artificial Intelligence (AI) and Machine Learning (ML): AI and ML algorithms are increasingly integrated into edge devices. This enables real-time data analysis and decision-making, further minimizing latency. For example, in autonomous vehicles, AI models running on edge devices can process sensor data instantly, allowing for quicker responses to changing road conditions.

- 5G and Beyond: Next-generation wireless networks, such as 5G and beyond, provide the necessary bandwidth and low latency to support the burgeoning demands of edge computing applications. 5G’s low latency and high throughput enable real-time data transmission, critical for applications such as augmented reality (AR) and virtual reality (VR). This faster, more reliable connectivity significantly reduces the time it takes to process and transmit data.

- Edge Cloud Platforms: Dedicated cloud platforms optimized for edge computing provide a robust infrastructure for deploying, managing, and scaling edge applications. These platforms streamline the development process and improve the overall performance of edge deployments, including features like automated updates and simplified monitoring.

Future Developments and Improvements in Edge Computing Infrastructure

The infrastructure supporting edge computing is evolving to meet the demands of a wide range of applications. This includes improvements in hardware, software, and security.

- Hardware advancements: More powerful and energy-efficient edge devices are becoming available, enabling more complex computations to be performed closer to the data source. The reduction in power consumption is crucial for deployment in remote or resource-constrained locations. Examples include specialized hardware designed for AI and ML tasks.

- Software advancements: Software frameworks and tools are being developed to streamline the development and deployment of edge applications. This reduces development time and enhances the flexibility of deployment. These frameworks are also being optimized for security and reliability.

- Enhanced security protocols: Edge devices are increasingly vulnerable to cyberattacks, necessitating robust security protocols and measures. Security features such as encryption, authentication, and intrusion detection systems are becoming integral parts of edge infrastructure to protect sensitive data and ensure reliable operation.
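To make the authentication point more tangible, the sketch below shows one common building block: signing each device message with an HMAC so the receiver can detect tampering. It uses only Python's standard `hmac` and `hashlib` modules. The message layout, the helper names, and the hard-coded demo secret are assumptions for illustration. This provides integrity and authenticity only, not encryption, and real deployments need proper key provisioning.

```python
import hashlib
import hmac
import json


def sign_message(secret: bytes, payload: dict) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(secret, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(secret: bytes, message: dict) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(secret, message["body"].encode("utf-8"),
                        hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


# Demo-only secret; never hard-code real keys.
secret = b"demo-only-secret"
message = sign_message(secret, {"device_id": "gw-1", "celsius": 21.5})
print(verify_message(secret, message))

# A tampered body no longer matches the tag.
tampered = {"body": message["body"].replace("21.5", "99.9"), "tag": message["tag"]}
print(verify_message(secret, tampered))
```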

Impact of 5G and Other Next-Generation Networks

5G and subsequent generations of wireless networks are revolutionizing edge computing by enabling near real-time data transmission and processing. This translates to reduced latency in applications requiring immediate responses, which significantly improves user experience.

- Reduced Latency: 5G’s lower latency compared to previous generations allows for faster data transmission to edge devices. This reduction is critical for applications such as remote surgery and autonomous vehicles, where millisecond delays can have significant consequences.

- Increased Bandwidth: The increased bandwidth provided by 5G enables the transfer of larger datasets more efficiently, supporting more complex applications at the edge. This is crucial for high-definition video streaming and high-resolution sensor data processing.

- Improved Reliability: 5G networks offer improved reliability and stability, minimizing disruptions in data transmission. This is essential for mission-critical applications, ensuring continuous operation and minimizing downtime.

Description of New Technologies and Their Applications

Several new technologies are shaping the future of edge computing. These technologies address specific needs and improve the overall performance and security of edge deployments.

- Edge AI: Edge AI platforms enable real-time data analysis and decision-making close to the source. This is particularly useful in applications requiring rapid responses, such as autonomous vehicles and industrial automation. This localized processing is critical to minimizing latency and improving responsiveness in these applications.

- Internet of Things (IoT) security: With the increasing number of IoT devices, robust security measures are essential. This includes encryption, authentication, and intrusion detection systems to secure data at the edge. Robust security measures protect sensitive data, critical for maintaining operational reliability.

Concluding Remarks

In conclusion, edge computing’s ability to reduce latency stems from its proximity to data sources. This localized processing, combined with optimized network architectures and security measures, allows for swift data handling and improved real-time application performance. The future of edge computing promises further enhancements in latency reduction, fueling innovation across various sectors.

Clarifying Questions

What are the key differences between edge and cloud computing in terms of latency?

Edge computing significantly reduces latency compared to cloud computing by processing data closer to the source. This localized processing eliminates the need to transmit data across vast distances, resulting in faster response times. Cloud computing, while offering scalability and cost-effectiveness, incurs higher latency due to the distance data must travel to and from the central server.

How does network topology influence latency in edge computing?

Network topology directly affects latency. A well-designed network architecture with optimized connections minimizes data transfer time. Factors like the number of hops data needs to travel and the bandwidth of the communication channels play a critical role in latency. Choosing the right topology, such as a mesh or star network, is crucial for low-latency edge deployments.
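A simplified model shows how hop count and bandwidth combine: total one-way latency is roughly the sum of the per-hop delays plus the time needed to push the payload through the slowest link. The sketch below implements that approximation. It ignores queuing, retransmissions, and protocol overhead, and the hop delays in the example are made-up figures rather than measurements.

```python
def transfer_latency_ms(hop_latencies_ms: list[float], payload_bytes: int,
                        bandwidth_mbps: float) -> float:
    """Approximate one-way latency: per-hop delays plus transmission time."""
    # Bits divided by bits-per-second gives seconds; scale to milliseconds.
    transmission_ms = payload_bytes * 8 * 1000 / (bandwidth_mbps * 1_000_000)
    return sum(hop_latencies_ms) + transmission_ms


payload = 125_000  # 1 megabit of sensor data

# Hypothetical path to a nearby edge node: two short hops.
edge_ms = transfer_latency_ms([1, 2], payload, bandwidth_mbps=100)

# Hypothetical path to a distant cloud region: the same two hops plus three more.
cloud_ms = transfer_latency_ms([1, 2, 15, 20, 5], payload, bandwidth_mbps=100)

print(f"edge={edge_ms:.1f} ms, cloud={cloud_ms:.1f} ms")
```

With the same payload and bandwidth, the difference between the two paths comes entirely from the extra hops, which is why placing processing fewer hops from the data source reduces latency.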

What security considerations are unique to edge computing?

Edge computing introduces security challenges due to the distributed nature of data processing. Protecting data at the edge requires robust security measures for each device, including encryption and access controls. Data breaches at the edge can have significant consequences, making security protocols and regular vulnerability assessments essential.

What are some examples of real-time applications that benefit from edge computing?

Autonomous vehicles, real-time video streaming, industrial automation systems, and stock trading platforms are prime examples. These applications demand rapid response times, making edge computing an ideal solution for reducing latency and ensuring responsiveness.